Abstract

Although models to predict climate impact on crop production have been used since the 1980s, the spatial and temporal diffusion of plant diseases is poorly known. This lack of knowledge is due to the scarcity of plant epidemic models, high biophysical complexity, and the difficulty of coupling disease models to crop simulators. The first step is the evaluation of potential disease growth in response to climate drivers only. Here, we estimated the evolution of potential infection events of fungal pathogens of wheat, rice, and grape in Europe. A generic process-based infection model driven by air temperature and leaf wetness data was parameterized with the thermal and moisture requirements of the pathogens. The model was run on current climate as baseline, and on two time frames centered on 2030 and 2050. Our results show an overall increase in the number of infection events, with differences among the pathogens and complex geographical patterns. For wheat, Puccinia recondita, or brown rust, is forecast to increase its pressure on the crop by 20–100 %. Puccinia striiformis, or yellow rust, will increase by 5–20 % in the cold areas. Rice pathogens Pyricularia oryzae, or blast disease, and Bipolaris oryzae, or brown spot, will be favored in all European rice districts, with the most critical situation in Northern Italy (+100 %). For grape, Plasmopara viticola, or downy mildew, will increase by 5–20 % throughout Europe, whereas Botrytis cinerea, or bunch rot, will have heterogeneous impacts ranging from −20 to +100 % infection events. Our findings represent the first attempt to provide extensive estimates of disease pressure on crops under climate change, providing information on possible future challenges European farmers will face in the coming years.

1 Introduction

There is a clear lack of balance in the literature between the modeling studies aimed at investigating the effects of climate change on crop growth and yields (e.g., Rosenzweig and Parry 1994; Tubiello et al. 2000; Challinor et al. 2010) and the available studies on expected changes in the future distribution of plant diseases (e.g., Anderson et al. 2004; Ghini et al. 2008). A possible explanation is the scarce availability of process-based models for plant disease simulation, whereas crop growth models are widely used in research and operational contexts. As underlined by many authors (e.g., Butterworth et al. 2010; Chakraborty and Newton 2011), this knowledge gap is an issue modelers should urgently deal with, because even today diseases and insect pests are responsible for approximately 50 % of the losses of the eight most important food and cash crops worldwide (Oerke 2006), which underlines the need to assess their future impacts. There is evidence that climate change could deeply influence the effects of plant diseases and pests on crop production (e.g., Goudriaan and Zadocks 1995; Garrett et al. 2006), by means of altered spread of some species and introduction of new pathogens and vectors, leading to uncertain dynamics of plant epidemics. An overall increase in the infection patterns of fungal plant pathogens in response to CO2 enrichment, N deposition, and changes in temperature and rainfall regimes is documented in the review by Tylianakis et al. (2008), whereas their expansion into new geographic areas, together with heterogeneous responses to environmental conditions, is predicted by Parmesan (2006). Mitchell et al. (2003) demonstrated, in a field experiment where 16 different plant species were grown under varying levels of CO2, N treatment, and species richness, that decreased plant diversity markedly contributed to the increase in the leaf area infected, whereas higher CO2 and N increased pathogen load in C4 and C3 grasses, respectively. An increased impact of fungal pathogens due to the lengthening of the growing season was shown by the warming experiment of Roy et al. (2004), in which six plant species were grown in plots where soil was heated and dried over the growing period. Almost all the plants grown under warmer conditions experienced an increase in the amount of damage and in the number of pathogenic species along a phenological gradient. Furthermore, climate change could influence the physiology and the degree of resistance of host plants, besides modifying the growing patterns and the development rates of the biological cycles of plant pathogens (Chakraborty and Datta 2003). This could potentially lead to an increase in the number of infection events, which in turn could lead to more applications of chemicals, thus reducing the environmental sustainability of many cropping systems (e.g., Hannukkala et al. 2007). Moreover, since plant disease epidemics often increase following a compound interest model, a slight increase in the length of the growing season in currently cold areas may have a large impact on inoculum load. These projections are in line with the available studies on climate change impacts on pathosystems. Travers et al. (2010) observed lower expression of the genes involved in disease resistance in big bluestem in response to simulated precipitation change; Chakraborty et al. (2000) noticed a higher fecundity of Colletotrichum gloeosporioides under increased atmospheric CO2 concentration; Bergot et al. (2004) predicted an expansion of Phytophthora cinnamomi in Europe as a result of higher winter survival by using a physiologically based approach.

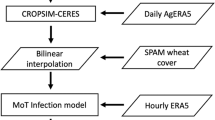

Studies aimed at providing estimates of the future spread of plant pathogens must clearly identify their goals and explicitly communicate their limits. In pest risk assessment studies, the main objective is the evaluation of the environmental suitability for a specific pathogen. The key question in these studies is whether climate conditions are conducive to the completion of the infection process, which represents the first phase of the establishment of an epidemic (Magarey and Sutton 2007). At the same time, the estimate of the number of potential infection events can give a quantitative measure of plant disease pressure on crops. This can support a timely understanding of the challenges farmers will face in the coming years and, in the most critical cases, even an evaluation of whether a crop can still be cultivated in a specific area. The aim of this paper is to assess the changes in the number of potential infection events of six pathogens of wheat, rice (Fig. 1), and grapes in Europe due to projections of climate change, via the spatially distributed application of a generic infection model driven by weather data and parameters with a clear biological meaning.

Although the susceptibility of the host plant to fungal diseases varies with phenological development, we did not couple the infection model with a phenological one. The aim was to evaluate the ecological suitability for the fungal pathogens over the whole year, rather than to analyze specific pathosystems, which would also require accounting for alternate hosts. The role of such plants is recognized as crucial for the establishment of an epidemic, yet it is unknown under future weather conditions.

This paper represents the first attempt to provide estimates of future plant disease pressure on large areas. It provides information on the critical issues that European farmers and political stakeholders may have to deal with in the future, including comparisons across regions and changes in known spatial patterns.

2 Materials and methods

2.1 Meteorological data

The database of daily weather data used in this study was specifically built within the AVEMAC project (Donatelli et al. 2012a, b) for the use of biophysical simulation models in climate change studies. This database was derived from the bias-corrected ENSEMBLES dataset of Dosio and Paruolo (2012) with the future projections of the A1B emission scenario given by the HadCM3 General Circulation Model (GCM) nested with the HadRM3 Regional Climate Model (RCM; the realization is denoted as METO-HC-HadRM3Q0-HadCM3Q0 in the ENSEMBLES project; van der Linden and Mitchell 2009). This represents a “warm” realization of the A1B emission scenario. The A1 scenario family is based on future human activities involving rapid economic growth, with a fast diffusion of new and efficient technologies and with broad sociocultural interactions worldwide. Specifically, the A1B scenario implies a balanced emphasis on all energy sources (IPCC 2007). Three target horizons were considered in this work: 1993–2007 (baseline), 2025–2034 (2030), and 2045–2054 (2050). Climate studies typically consider a sample of 30 years around the target horizon to characterize a given variable or to derive other data (e.g., fungal pathogen infections), so that short-term random fluctuations, such as daily weather variations, do not influence the outputs derived from the GCM simulations. In this study, time windows of 30 years around the two considered horizons (i.e., 2030 and 2050) would have overlapped, making the separation of the two horizons meaningless. With only 20 years (thereby avoiding overlap), the sample could become too small to assume that short-term weather fluctuations do not dominate over the trend. Indeed, 3 or 4 years that are much warmer than the trend will have stronger consequences on the average values of fungal infections if they occur within a period of 20 years than within a period of 30 years.
To solve this problem, the ClimGen stochastic weather generator (Stockle et al. 1999) was used to increase the sample size corresponding to each horizon. A set of 15 years from the GCM-RCM runs was used around each reference year (e.g., 2030 ± 7 years, so from 2023 to 2037), which makes the characterization of each time period more robust. The weather generator uses these data to derive monthly parameters summarizing the distribution of each weather variable for each grid cell. These parameters were then used to generate a set of 15 synthetic years for every grid cell, which have the characteristics of the 15-year period.
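The fit-then-generate idea behind this step can be illustrated with a deliberately minimal Python sketch. It is not ClimGen: it assumes a single variable, independent daily values, and a normal distribution per month, whereas a real weather generator also handles precipitation occurrence and correlations among variables. The function names and the data layout are hypothetical.

```python
import random
import statistics

def fit_monthly_params(daily_by_month):
    """Summarize pooled daily values of the source years as {month: (mean, stdev)}."""
    return {m: (statistics.mean(v), statistics.stdev(v))
            for m, v in daily_by_month.items()}

def generate_synthetic_years(params, days_per_month, n_years=15, seed=0):
    """Draw n_years synthetic years from the fitted monthly parameters.

    Assumption: each day is an independent normal draw, which ignores the
    day-to-day persistence that a real weather generator reproduces.
    """
    rng = random.Random(seed)
    years = []
    for _ in range(n_years):
        year = {m: [rng.gauss(mu, sd) for _ in range(days_per_month[m])]
                for m, (mu, sd) in params.items()}
        years.append(year)
    return years
```

The synthetic years share the monthly statistics of the source period, so averaging over them dampens the influence of a few anomalous years.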

The hourly agro-meteorological variables needed as input for the potential infection model were generated starting from daily data. The model developed by Kim et al. (2002) was adopted to estimate leaf wetness duration, since it proved to be robust when applied under a wide range of climate conditions (Bregaglio et al. 2011). To run this model, hourly data of air temperature, air relative humidity, wind speed, and dew point temperature are needed. Hourly air temperature data were derived from daily maximum and minimum air temperature using the approach proposed by Campbell (1985). Dew point temperature was kept constant within each day (Running et al. 1987; Glassy and Running 1994) and computed according to Linacre (1992). Relative humidity data were estimated according to the best performing approach tested in heterogeneous climatic conditions by Bregaglio et al. (2010), as the ratio of actual vapor pressure (Allen et al. 1998) to saturation vapor pressure (ASAE 1988 method). Hourly wind speed was generated from daily wind speed data by using the stochastic approach developed by Mitchell et al. (2000).
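A minimal Python sketch of the daily-to-hourly step is given below under simplifying assumptions. The single cosine wave is a stand-in for the Campbell (1985) approach, not a reproduction of it; the hour of maximum temperature (15:00) is an assumed value; and the FAO-56 expression of Allen et al. (1998) is used for both vapor pressures instead of the ASAE (1988) method. All function names are hypothetical.

```python
import math

def hourly_temperature(t_min, t_max, hour_of_max=15):
    """Return 24 hourly temperatures (°C) from a single cosine wave.

    Assumption: symmetric daily cycle peaking at hour_of_max.
    """
    mean = (t_max + t_min) / 2.0
    amplitude = (t_max - t_min) / 2.0
    return [mean + amplitude * math.cos(2.0 * math.pi * (h - hour_of_max) / 24.0)
            for h in range(24)]

def saturation_vapor_pressure(t_celsius):
    """Saturation vapor pressure (kPa), FAO-56 expression (Allen et al. 1998)."""
    return 0.6108 * math.exp(17.27 * t_celsius / (t_celsius + 237.3))

def relative_humidity(t_air, t_dew):
    """Relative humidity (%) as actual over saturation vapor pressure.

    The actual vapor pressure is the saturation value at the dew point,
    which is held constant within the day as described in the text.
    """
    rh = 100.0 * saturation_vapor_pressure(t_dew) / saturation_vapor_pressure(t_air)
    return min(rh, 100.0)
```

With a constant dew point, relative humidity in this sketch peaks at the coldest hour and drops in the afternoon, which is the pattern a leaf wetness model relies on.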

For each time frame and for each species, simulations were carried out on the 25 × 25-km spatial units of the MARS database according to the actual crop distributions (European Communities 2008).

2.2 Model and parameterizations

The generic potential infection model developed by Magarey et al. (2005) was used in this study. It proved to be sensitive to diverse parameterizations and to effectively respond to input data variability, thus being suitable for climate change impact assessments (Bregaglio et al. 2012). It simulates the response of a generic fungal pathogen to both air temperature and leaf wetness duration with an hourly time step, by means of parameters with a clear biological meaning.

The air temperature response is modeled according to Yan and Hunt (1999) (Eq. 1):
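In the form used by Magarey et al. (2005), the Yan and Hunt (1999) response is usually written as below. This is a reconstruction from the symbol definitions that follow; the explicit zero outside the cardinal temperatures is an assumption consistent with the stated 0–1 range.

$$
f(t) =
\begin{cases}
\left(\dfrac{T_{max}-T}{T_{max}-T_{opt}}\right)\left(\dfrac{T-T_{min}}{T_{opt}-T_{min}}\right)^{\frac{T_{opt}-T_{min}}{T_{max}-T_{opt}}} & \text{if } T_{min} \le T \le T_{max} \\
0 & \text{otherwise}
\end{cases}
$$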

where f(t) (0–1; unitless) is the temperature response function; T (°C) is the hourly air temperature; Tmin, Tmax, and Topt (°C) are the minimum, maximum, and optimum temperatures for infection, respectively. The air temperature response is then scaled to the wetness duration requirement according to Eq. 2:
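Following the original formulation of Magarey et al. (2005), this scaling can be sketched as below, where the result is the wetness duration required at the current temperature, capped at the maximum requirement. The exact form adopted in this study may differ.

$$
W(t) = \min\left(\frac{WD_{min}}{f(t)},\; WD_{max}\right), \qquad f(t) > 0
$$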

where W(t) (0–1; unitless) is the wetness response function, WDmin (hours) is the minimum leaf wetness duration for infection, f(t) (0–1; dimensionless) is the temperature response function (Eq. 1) and WDmax (hours) is the maximum leaf wetness duration requirement. The model then takes into account the impact of a dry period via a critical interruption value (D50), as reported in Eq. 3:
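A form consistent with the description that follows, and with Magarey et al. (2005), is given below. Assigning the boundary case D = D50 to the first branch is an assumption.

$$
W_{sum} =
\begin{cases}
W_1 + W_2 & \text{if } D \le D_{50} \\
W_1,\; W_2 & \text{if } D > D_{50}
\end{cases}
$$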

where Wsum is the sum of the surface wetting periods and W1 and W2 are two wet periods separated by a dry period (D, hours). According to this equation, if D > D50 then the model considers the two wet periods as separate. At each hour, the model adds a cohort of spores if the leaf is wet and f(t) > 0, and it considers that an infection event has occurred if the value of Wsum for a cohort ranges between WDmin and WDmax. A detailed model description is provided by Bregaglio et al. (2012).
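The hourly logic described above can be sketched in Python as follows. This is a simplified illustration, not the authors' implementation. It assumes the Yan and Hunt (1999) beta form for the temperature response and the WDmin/f(t) scaling of Magarey et al. (2005). It tracks one running wet period instead of hourly spore cohorts, counts at most one infection event per merged wet period, and treats a dry gap of exactly D50 hours as not interrupting. All class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence

@dataclass
class PathogenParams:
    t_min: float   # minimum temperature for infection (°C)
    t_opt: float   # optimum temperature for infection (°C)
    t_max: float   # maximum temperature for infection (°C)
    wd_min: float  # minimum leaf wetness duration (h)
    wd_max: float  # maximum leaf wetness duration requirement (h)
    d50: float     # critical dry interruption (h)

def temperature_response(temp_c: float, p: PathogenParams) -> float:
    """Beta-type response (0-1); assumes t_min < t_opt < t_max."""
    if temp_c <= p.t_min or temp_c >= p.t_max:
        return 0.0
    shape = (p.t_opt - p.t_min) / (p.t_max - p.t_opt)
    return ((p.t_max - temp_c) / (p.t_max - p.t_opt)) * \
           ((temp_c - p.t_min) / (p.t_opt - p.t_min)) ** shape

def required_wet_hours(temp_c: float, p: PathogenParams) -> Optional[float]:
    """Wet hours needed at this temperature, capped at wd_max; None if f = 0."""
    f = temperature_response(temp_c, p)
    if f <= 0.0:
        return None
    return min(p.wd_min / f, p.wd_max)

def count_infection_events(temps: Sequence[float], wet: Sequence[bool],
                           p: PathogenParams) -> int:
    """Count merged wet periods whose accumulated progress reaches 1."""
    events = 0
    progress = 0.0      # fraction of the wetness requirement met so far
    dry_run = 0         # consecutive dry hours
    infected = False    # one event at most per merged wet period
    for temp_c, is_wet in zip(temps, wet):
        if is_wet:
            dry_run = 0
            need = required_wet_hours(temp_c, p)
            if need is not None:
                progress += 1.0 / need
            if progress >= 1.0 and not infected:
                events += 1
                infected = True
        else:
            dry_run += 1
            if dry_run > p.d50:  # dry gap longer than D50: periods are separate
                progress = 0.0
                infected = False
    return events
```

Accumulating 1/W(t) per wet hour is one common way to apply a temperature-dependent wetness requirement to a series in which temperature changes from hour to hour; a cohort-based implementation would instead start a new accumulation at every suitable wet hour.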

Six air-borne pathogens were simulated (Table 1), whose thermal and moisture requirements were derived from the literature. Since the aim was to apply the model on large areas, no specific calibration was performed in this study, and the average of the parameter values found in the literature was used as input to the model. Although this implies that neither the genetic diversity nor the evolutionary potential of the pathogen populations is considered in this study, this choice made it possible to use the model to provide quantitative estimates of fungal infections based on reliable published data.

The choice of the pathogens was aimed at identifying relevant biotic stressors on key cultivated herbaceous (i.e., wheat and rice) and tree (i.e., grape) species in Europe, characterized by heterogeneous geographical distribution and by different thermal requirements. Two pathogens for each crop were selected in order to analyze the dynamics of the pressure of multiple organisms on the same crop. Simulations were carried out by considering the current geographic distribution of the three species (European Commission-Joint Research Centre MARS database) and over the entire year, to focus on the relationships between the pathogen and the environment. This choice is supported by the evidence that all the simulated pathogens can develop even on wild plants and weeds (Table 1), which allows them to survive from one growing season to the next and to contribute to the establishment of an epidemic on the cultivated crop. The role of these bridging hosts is recognized as crucial both for producing additional inoculum for the following season and for harboring the dormant stages of the pathogens (Dinoor 1974), and it is likely to be significant under future climate conditions (Dobson 2004).

3 Results and discussion

Results are presented as percentage difference of the total number of potential infection events simulated in the 2030 and 2050 time frames with respect to the baseline results.
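As a minimal illustration of this metric, the Python sketch below computes the percentage difference for one spatial unit. The function name and the handling of units with no baseline infections (returning None) are assumptions, since the text does not state how such units were treated.

```python
def percent_change(future_events, baseline_events):
    """Percentage difference of future infection events relative to the baseline.

    Returns None when the baseline is zero, where the ratio is undefined
    (an assumption; the source does not describe this case).
    """
    if baseline_events == 0:
        return None
    return 100.0 * (future_events - baseline_events) / baseline_events
```

For example, a unit with 20 baseline events and 24 events in a future time frame yields +20 %, while a doubling of events corresponds to +100 %.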

3.1 Wheat

Projections for the 2030 time frame for Puccinia recondita (brown rust, Fig. 2a) showed a general increase in the number of infection events throughout Europe, more pronounced in the key wheat-producing areas of Northern Germany, Great Britain, and Benelux, and in some areas of Spain, France, and coastal Croatia (+100 %). The remaining areas of Europe will be affected by a lower increase in disease pressure, with small areas in the Mediterranean part of Morocco in which the pathogen will not change its infection pattern. Moving to the 2050 time frame (Fig. 2b), simulations indicate a marked worsening of the disease pressure on wheat, with the number of infections increased by more than 100 % in most of the wheat-producing countries, except in Italy, Southern Spain, and the countries of Eastern Europe. As already discussed for the 2030 time frame, Moroccan regions will be less affected by the increase in infection events.

Fig. 2 Differences in the number of potential infection events simulated in the A1B climate scenario compared to the 1993–2007 reference scenario (%); P. recondita in the 2030 (a) and 2050 (b) time frames shows a general increase in the number of infections; P. striiformis in the 2030 (c) and 2050 (d) time frames maintains the current infection levels

Simulations performed for Puccinia striiformis (yellow rust) highlighted a completely different scenario, showing for the 2030 time frame (Fig. 2c) a stationary number of potential infections for most of the regions in Central Europe (−5 to +5 %), with the exception of some scattered areas in France. A slight increase in the number of infection events (+5 to +20 %) was observed in Italy, in Southern Spain, and in South-Eastern countries, especially in Hungary, Croatia, and Serbia. According to the simulations, Poland, the European part of Russia, and some areas in Morocco will experience a lower number of infections (−5 to −20 %). For the 2050 scenario (Fig. 2d), the disease pressure is forecast to increase markedly in the Central and Eastern parts of Europe, whereas in France and Italy it is expected to decrease.

The large differences between the responses simulated for the two pathogens may be due to their very different thermal requirements, especially concerning the optimum conditions for infection development. P. recondita, which has an optimum temperature of 25 °C, will be markedly favored by warmer conditions, especially in the European countries characterized by a continental climate, whereas P. striiformis, which is more adapted to a temperate environment, will not clearly benefit from the temperature increase, except in the European countries with a colder climate. The reduced suitability of warmer conditions for yellow rust is more pronounced in the Mediterranean countries for the 2050 time frame, whereas the decrease in the number of potential infections simulated for 2030 in Russia and Germany may be due to the high suitability of the current climate for yellow rust development (Schröder and Gabriele 2001; Tian et al. 2004). In these areas, even a limited increase in air temperature can strongly affect the completion of the infection events. This confirms the results achieved by the ENDURE EU-FP6 project, in which expert judgments and experimental trials were carried out in eight European countries to investigate the impact of biotic constraints on wheat, indicating the highest value of average yield losses in Germany (−2.5 % in the period from 2003 to 2007).

The projections for P. recondita, currently causing significant yield losses in Europe (i.e., ranging from 3 % in Germany to 15 % in France; ENDURE EU-FP6 project; Jørgensen et al. 2010), indicate that this pathogen could be even more dangerous in the coming years, whereas P. striiformis pressure is expected to remain unchanged or even decrease.

3.2 Rice

Results of the simulations carried out for Bipolaris oryzae (brown spot) in the 2030 time frame (Fig. 3a) indicate a marked increase in the number of potential infection events compared to the baseline in almost all the European rice districts, and especially in the Italian one (+100 %). The only exceptions are the Hungarian district, for which an unchanged disease pressure was simulated, and some scattered areas of Portugal, which presented a decreased number of infections. For the 2050 time frame (Fig. 3b), simulations depicted conditions more favorable for the pathogen. The only rice districts in which the number of infection events remained essentially unchanged are located in Spain.

Fig. 3 Differences in the number of potential infection events simulated in the A1B climate scenario compared to the 1993–2007 reference scenario (%); B. oryzae in the 2030 (a) and 2050 (b) time frames; P. oryzae in the 2030 (c) and 2050 (d) time frames. The number of infection events increases for the two pathogens, especially for the former

According to the simulations performed for Pyricularia oryzae (blast disease) for the 2030 time frame (Fig. 3c), the increase in disease pressure will be lower than the one depicted for the other rice pathogen, although a homogeneous increase in the number of infection events is predicted throughout Europe (+5 to +20 %), in some cases even above 20 % (Italy and Hungary). As for B. oryzae, projections for 2050 (Fig. 3d) present a rising disease pressure across Europe, even if the increase in the number of infections remains below 100 %.

Both pathogens are expected to intensify the pressure on the crop, although with different severity. The stronger intensification of the number of potential infections simulated for B. oryzae is explained by the different requirements of the two tropical pathogens in terms of air temperature and leaf wetness duration. According to the data available in the literature, B. oryzae presents a higher optimum (27.5 °C) and maximum (35 °C) temperature for infection development than P. oryzae (25 and 32 °C, respectively). This implies that the former would experience more favorable conditions, especially in the 2050 time frame. Regarding leaf wetness duration requirements, B. oryzae needs a longer time to fulfill infection requirements (10 versus 4 h), whereas it is less sensitive than P. oryzae to a dry interruption event (13 versus 4 h).
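The effect of the different cardinal temperatures can be illustrated with a short Python sketch that evaluates a beta-type temperature response (the Yan and Hunt 1999 form is assumed) for the two rice pathogens. The optimum and maximum temperatures are those quoted above; the minimum temperature is not given in this section, so the shared value of 10 °C is a hypothetical placeholder, as are the function and variable names.

```python
def temperature_response(temp_c, t_min, t_opt, t_max):
    """Beta-type temperature response (0-1); assumes t_min < t_opt < t_max."""
    if temp_c <= t_min or temp_c >= t_max:
        return 0.0
    shape = (t_opt - t_min) / (t_max - t_opt)
    return ((t_max - temp_c) / (t_max - t_opt)) * \
           ((temp_c - t_min) / (t_opt - t_min)) ** shape

ASSUMED_T_MIN = 10.0  # hypothetical: minimum temperature is not stated in the text

def compare_rice_pathogens(temp_c):
    """Return (B. oryzae response, P. oryzae response) at a given temperature."""
    brown_spot = temperature_response(temp_c, ASSUMED_T_MIN, 27.5, 35.0)
    blast = temperature_response(temp_c, ASSUMED_T_MIN, 25.0, 32.0)
    return brown_spot, blast

for temp in (20.0, 25.0, 30.0):
    brown_spot, blast = compare_rice_pathogens(temp)
    print(f"{temp:.0f} °C  B. oryzae: {brown_spot:.2f}  P. oryzae: {blast:.2f}")
```

Under these assumptions, the blast response is the higher of the two at 20 °C, while the brown spot response is clearly higher at 30 °C, which is consistent with warming favoring B. oryzae more strongly.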

These results suggest that B. oryzae could replace P. oryzae in the role of most dangerous rice pathogen in Europe. In Italy, which is the largest rice-producing country in the European Union, P. oryzae is currently the pathogen with the greatest impact, requiring chemical control on 75 % of the Italian rice acreage (Food Chain Evaluation Consortium 2011). The simulated intensification of the pressure of brown spot disease is in agreement with Moletti et al. (2011), who underlined that it has recently been increasing in Italy, also because its development is favored by the Akiochi nutritional disorder, which is in turn promoted by rising temperatures.

3.3 Grape

In general, the simulations performed with Plasmopara viticola (downy mildew) in the 2030 time frame (Fig. 4a) indicate a slight increase in disease pressure all over Europe (+5 to +20 %), with the only exceptions being some areas in Spain, Germany, France, and Italy, where the number of infection events remains at the same level as in the reference scenario. According to the simulations carried out for 2050 (Fig. 4b), the number of events is expected to remain unchanged with respect to the one simulated for 2030 in most of the European grape-producing areas, with a small increase only in the Northern part of Spain. In other zones in which grape is intensively cultivated (France, Southern Italy, and Portugal), the simulated disease pressure is expected to remain constant at the current levels.

Results for Botrytis cinerea (bunch rot) showed a very heterogeneous geographical pattern, with a high increase in the number of infections (+70 %) in the 2030 time frame (Fig. 4c) along the coastal parts of the Mediterranean countries and in the French Aquitaine region. Other parts of Europe (e.g., Tuscany, most of the Spanish regions, and the Balkans) are forecast to maintain a level of pressure similar to the current one, whereas scattered areas in Hungary, Romania, France, and Portugal will likely experience a decrease in the number of potential infection events of even more than 100 %. For the 2050 time frame (Fig. 4d), a similar scenario is depicted, with a general slight intensification in the number of events in the Balkans and in Portugal. As already discussed for the 2030 time frame, large areas in Europe will experience an unchanged disease pressure (e.g., Spain, Central Italy, and Greece), whereas in other regions the number of infections is expected to decrease compared to the baseline (e.g., some areas in Germany and France).

Fig. 4 Differences in the number of potential infection events simulated in the A1B climate scenario compared to the 1993–2007 reference scenario (%); P. viticola in the 2030 (a) and 2050 (b) time frames shows a general slight increase; B. cinerea in the 2030 (c) and 2050 (d) time frames presents heterogeneous geographical patterns

The responses of the two grape pathogens to climate change are very different. Simulation results showed an overall stationary disease pressure of downy mildew for the 2030 time frame, probably due to the broad range of temperatures in which the pathogen can develop (i.e., Tmin = 1 °C; Tmax = 30 °C) and to its low requirements in terms of leaf wetness duration (two wet hours are enough for the fulfillment of an infection event). The different situation depicted for B. cinerea is probably due to the smaller range of temperatures suitable for its development (i.e., Tmin = 10 °C; Tmax = 35 °C), with an optimum temperature of 20 °C combined with a minimum wetness duration requirement fixed at 4 h. In the coastal areas of the European Mediterranean countries, characterized by a warmer climate, the generated scenario produced more humid conditions, which in turn led to more suitable environmental conditions for pathogen development. In the areas of Europe in which the number of infections remained unchanged or decreased, the lower number of leaf wetness hours in the climate scenario considered and the increase in air temperatures above the optimum for infection of B. cinerea played a major role. Simulations suggest that this pathogen, which often imposes price penalties even if only 3–5 % of the grapes are affected (Hill et al. 2010) because of the associated decline in both grape yield and wine quality (Nair and Hill 1992), will continue to be a relevant problem for European farmers, although with different dynamics. This could also require modifying chemical control management in some areas by using new active ingredients, given the great ability of this pathogen to quickly adapt to new chemicals by developing resistant strains (Rosslenbroich and Stuebler 2000).

4 Conclusions

Assessing the dynamics of disease pressure under climate change scenarios is crucial to understand the challenges farmers will face in the coming years, which may even call into question whether a crop can still be cultivated in a specific area. The results of this study indicate a general increase in infection events for most of the pathogens considered, in particular for P. recondita and B. oryzae. For the other pathogens, projections are more heterogeneous in terms of both space and time. On the whole, moving from the 2030 to the 2050 time frame, an increase in the number of potential infection events is expected. These results are in line with the available studies indicating an increase in plant fungal infections under warmer conditions (e.g., Dale et al. 2001; Harvell et al. 2002) and with the heterogeneity of pathogen responses observed in experimental trials (Mitchell et al. 2003; Roy et al. 2004). This study therefore represents a first attempt to provide quantitative estimates of such dynamics, via the application of a simple model based on parameters with a clear physiological meaning. Policy makers can use the outcomes of this study to anticipate possible future challenges when planning regional or local policies in terms of disease pressure and, consequently, of chemical control. Researchers could also be interested in refining the model parameterization by testing different ecotypes of the simulated pathogens, or even different pathogens.

The limits of this study can be summarized as follows: the lack of consideration (1) of the evolutionary potential of the pathogen populations, which can lead to more adapted pathotypes, and (2) of the tight interactions between the pathogens and the host plants during epidemic development, since this study focuses on the infection phase. This leads to the lack of consideration of other key aspects which can determine the establishment of an epidemic, such as the time at which pathogens move into and away from the infected areas and the amount of locally overwintering inoculum, which should be analyzed to give an integrated assessment of plant disease impacts under climate change scenarios. However, the simulation of these aspects would require specific models and detailed information about the epidemiology of the pathogens; including these processes in this study would therefore add a layer of complexity that could obscure the main message. Other limits are represented by the inherent uncertainty related (3) to the model parameterization and (4) to the reliability of air humidity estimates, which have an associated uncertainty due to the weather scenario: the realizations of the same emission scenario may differ substantially in their spatial patterns of precipitation, while showing a similar air temperature increase.

This exploratory study showed that the response of plant pathogens to climate scenarios can differ across Europe and requires a case-by-case evaluation. A future development of this work will be the implementation of the potential infection model in a modeling solution capable of evaluating the whole crop-disease interaction. This will make available an operational tool to be coupled with cropping system models, to improve the evaluation of the impact of climate change scenarios on crop production levels. Such a modeling solution would be a clear step forward from traditional studies, which do not dynamically consider biotic constraints to production, and would also enable more articulated adaptation studies.

References

Allen RG, Pereira LS, Raes D, Smith M (1998) Crop evapotranspiration: Guidelines for computing crop water requirements. Irrig Drain 56, 300 pp. UN-FAO, Rome, Italy

Anderson PK, Cunningham AA, Patel NG, Morales FJ, Epstein PR, Daszak P (2004) Emerging infectious diseases of plants: pathogen pollution, climate change and agrotechnology drivers. Trends Ecol Evol 19:535–544. doi:10.1016/j.tree.2004.07.021

Bergot M, Cloppet E, Pérarnaud V, Déqué M, Marcais B, Desprez-Loustau ML (2004) Simulation of potential range expansion of oak disease caused by Phytophthora cinnamomi under climate change. Glob Change Biol 10:1539–1552. doi:10.1111/j.1365-2486.2004.00824.x

Bregaglio S, Donatelli M, Confalonieri R, Acutis M, Orlandini S (2010) An integrated evaluation of thirteen modelling solutions for the generation of hourly values of air relative humidity. Theor Appl Climatol 102:429–438. doi:10.1007/s00704-010-0274-y

Bregaglio S, Donatelli M, Confalonieri R, Acutis M, Orlandini S (2011) Multi metric evaluation of leaf wetness models for large-area application of plant disease models. Agr Forest Meteorol 151:1163–1172. doi:10.1016/j.agrformet.2011.04.003

Bregaglio S, Cappelli G, Donatelli M (2012) Evaluating the suitability of a generic fungal infection model for pest risk assessment studies. Ecol Modell 247:58–63. doi:10.1016/j.ecolmodel.2012.08.004

Butterworth MH, Semenov MA, Barnes A, Moran D, West JS, Fitt BDL (2010) North–south divide: contrasting impacts of climate change on crop yields in Scotland and England. J R Soc Interface 7:123–130. doi:10.1098/rsif.2009.0111

Campbell GS (1985) Soil physics with BASIC: transport models for soil-plant systems. Elsevier, Amsterdam

Cardoso CAA, Reis EM, Moreira EN (2008) Development of a warning system for wheat blast caused by Pyricularia grisea. Summa Phytopathol 34:216–221. doi:10.1590/S0100-54052008000300002

Chakraborty S, Datta S (2003) How will plant pathogens adapt to host plant resistance at elevated CO2 under a changing climate? New Phytol 159:733–742. doi:10.1046/j.1469-8137.2003.00842.x

Chakraborty S, Newton AC (2011) Climate change, plant diseases and food security: an overview. Plant Pathol 60:2–14. doi:10.1111/j.1365-3059.2010.02411.x

Chakraborty S, Pangga IB, Lupton J, Hart L, Room PM, Yates D (2000) Production and dispersal of Colletotrichum gloeosporioides spores on Stylosanthes scabra under elevated CO2. Environ Pollut 108:381–387. doi:10.1016/S0269-7491(99)00217-1

Challinor AJ, Simelton ES, Fraser EDG, Hemming D, Collins M (2010) Increased crop failure due to climate change: assessing adaptation options using models and socio-economic data for wheat in China. Environ Res Lett 5:034012. doi:10.1088/1748-9326/5/3/034012

Dale VH, Joyce LA, McNulty S, Neilson RP, Ayres MP, Flannigan MD, Hanson PJ, Irland LC, Lugo AE, Peterson CJ, Simberloff D, Swanson FJ, Stocks BJ, Wotton BM (2001) Climate change and forest disturbances. Bioscience 51:723–734. doi:10.1641/0006-3568(2001)051[0723:CCAFD]2.0.CO;2

Dennis JL (1987) Temperature and wet-period conditions for infection by Puccinia striiformis f. sp. tritici race 104e137a+. Trans Br Mycol Soc 88:119–121. doi:10.1016/S0007-1536(87)80194-8

Dinoor A (1974) Role of wild and cultivated plants in the epidemiology of plant diseases in Israel. Annu Rev Phytopathol 12:413–436. doi:10.1146/annurev.py.12.090174.002213

Dobson A (2004) Population dynamics of pathogens with multiple host species. Am Nat 164:S64–S78. doi:10.1086/424681

Donatelli M, Fumagalli D, Zucchini A, Duveiller G, Nelson RL, Baruth B (2012a) A EU27 database of daily weather data derived from climate change scenarios for use with crop simulation models. In: Seppelt R, Voinov AA, Lange S, Bankamp D (eds) International Environmental Modelling and Software Society (iEMSs), 2012 International Congress on Environmental Modelling and Software, Managing resources of a limited planet, Leipzig, Germany

Donatelli M, Duveiller G, Fumagalli D, Srivastava A, Zucchini A, Angileri V, Fasbender D, Loudjani P, Kay S, Juskevicius P, Toth T, Haastrup P, M’barek R, Espinosa M, Ciaian P, Niemeyer S (2012b) Assessing agriculture vulnerabilities for the design of effective measures for adaptation to climate change (AVEMAC project). Luxembourg: Publications Office of the European Union. doi:10.2788/16181

Dosio A, Paruolo P (2012) Bias correction of the ENSEMBLES high-resolution climate change projections for use by impact models: evaluation on the present climate. J Geophys Res 116, D16106. doi:10.1029/2011JD015934

European Communities (2008) CGMS version 9.2: User manual and technical documentation. JRC Scientific and Technical Reports. Joint Research Centre, European Commission. Office for Official Publications of the European Communities, Luxembourg

Food Chain Evaluation Consortium (2011) Economic damage caused by lack of plant protection products against rice blast (Pyricularia grisea) in rice in Italy. Study on the establishment of a European fund for minor uses in the field of plant protection products: Final report, pp. 159–164. http://ec.europa.eu/food/plant/protection/evaluation/study_establishment_eu_fund.pdf. Accessed 03 October 2012

Garrett KA, Dendy SP, Frank EE, Rouse MN, Travers SE (2006) Climate change effects on plant disease: genomes to ecosystems. Annu Rev Phytopathol 44:489–509. doi:10.1146/annurev.phyto.44.070505.143420

Ghini R, Hamada E, Bettiol W (2008) Climate change and plant diseases. Sci Agr 65:98–107. doi:10.1590/S0103-90162008000700015

Glassy JM, Running SW (1994) Validating diurnal climatology logic of the MT-CLIM model across a climatic gradient in Oregon. Ecol Appl 4:248–257

Goudriaan J, Zadocks JC (1995) Global climate change: modelling the potential responses of agro-ecosystems with special reference to crop protection. Environ Pollut 87:215–224. doi:10.1016/0269-7491(94)P2609-D

Hannukkala AO, Kaukoranta T, Lehtinen A, Rahkonen A (2007) Late-blight epidemics on potato in Finland 1933–2002; increased and earlier occurrence of epidemics associated with climate change and lack of rotation. Plant Pathol 56:167–176

Harvell CD, Mitchell CE, Ward JR, Altizer S, Dobson AP, Ostfeld RS, Samuel MD (2002) Climate warming and disease risks for terrestrial and marine biota. Science 296:2158–2162

Hill GN, Beresford RM, Evans KJ (2010) Tools for accurate assessment of botrytis bunch rot (Botrytis cinerea) on wine grapes. N Z Plant Protect-Se 63:174–181

IPCC (2007) Climate Change 2007: The Physical Science Basis, Contribution of working group 1 to the fourth assessment report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge

Jørgensen LN, Hovmøller MS, Hansen JG et al (2010) EuroWheat.org: a support to integrated disease management in wheat. Outlooks on Pest Management 21:173–176. doi:10.1564/21aug06

Kim CH, Mackenzie DR, Rush MC (1988) Field testing a computerized forecasting system for rice blast disease. Phytopathology 78:931–934. doi:10.1094/phyto-78-931

Kim KS, Taylor SE, Gleason ML, Koehler KJ (2002) Model to enhance site-specific estimation of leaf wetness duration. Plant Dis 86:179–185. doi:10.1094/PDIS.2002.86.2.179

Linacre E (1992) Climate data and resources: a reference and guide. Routledge, London

Magarey RD, Sutton TB (2007) How to create and deploy infection models for plant pathogens. In: Ciancio A, Mukerji KG (eds) General concepts in integrated pest and disease management. Springer, The Netherlands, pp 3–25

Magarey RD, Sutton TB, Thayer CL (2005) A simple generic infection model for foliar fungal plant pathogens. Phytopathology 95:92–100. doi:10.1094/phyto-95-0092

Mitchell G, Ray H, Griggs VB, Williams J (2000) EPIC documentation. Texas A&M Blackland Research and Extension Center, Temple

Mitchell CE, Reich PB, Tilman D, Groth JV (2003) Effects of elevated CO2, nitrogen deposition, and decreased species diversity on foliar fungal plant disease. Glob Change Biol 9:438–451. doi:10.1046/j.1365-2486.2003.00602.x

Moletti M, Giudici ML, Villa B (2011) Rice Akiochi-brown spot disease in Italy: agronomic and chemical control. CIHEAM—Options Mediterraneennes 15:79–85

Nair NG, Allen RN (1993) Infection of grape flowers and berries by Botrytis cinerea as a function of time and temperature. Mycol Res 97:1012–1014. doi:10.1016/S0953-7562(09)80871-X

Nair NG, Hill GK (1992) Bunch rot of grapes caused by Botrytis cinerea. In: Kumar J, Chaube HS, Singh US, Mukhopadhyay AN (eds) Plant diseases of international importance. v. III: diseases of fruit crops. Prentice-Hall, New Jersey, pp 147–169

Oerke EC (2006) Crop losses to pests. J Agr Sci 144:31–43. doi:10.1017/S0021859605005708

Parmesan C (2006) Ecological and evolutionary responses to recent climate change. Ann Rev Ecol Evol Syst 37:637–669. doi:10.1146/annurev.ecolsys.37.091305.110100

Percich JA, Nyvall RF, Malvick DK, Kohls CL (1997) Interaction of temperature and moisture on infection of wild rice by Bipolaris oryzae in the growth chamber. Plant Dis 81:1193–1195. doi:10.1094/PDIS.1997.81.10.1193

Rosenzweig C, Parry ML (1994) Potential impact of climate change on world food supply. Nature 367:133–138. doi:10.1038/367133a0

Rosslenbroich HJ, Stuebler D (2000) Botrytis cinerea-history of chemical control and novel fungicides for its management. Crop Prot 19:557–561. doi:10.1016/S0261-2194(00)00072-7

Roy BA, Gusewell S, Harte J (2004) Response of plant pathogens and herbivores to a warming experiment. Ecology 85:2570–2581. doi:10.1890/03-0182

Running SW, Nemani RR, Hungerford RD (1987) Extrapolation of synoptic meteorological data in mountainous terrain, and its use for simulating forest evapotranspiration. Can J Forest Res 17:472–483. doi:10.1139/x87-081

Schröder G, Gabriele E (2001) Observation on the appearance of fungal diseases on winter triticales (Triticosecale Wittmack) in field population of Brandenburg County. Gesunde Pflanzen 53:185–190

Sharma BR, Kapoor AS (2003) Some epidemiological aspects of rice blast. Plant Disease Research Ludhiana 18:106–109

Stockle CO, Campbell GS, Nelson R (1999) ClimGen manual. Biological Systems Engineering Department, Washington State University, Pullman

Suzuki H (1975) Meteorological factors in the epidemiology of rice blast. Annu Rev Phytopathol 13:239–256. doi:10.1146/annurev.py.13.090175.001323

Tian S, Weinert G, Wolf GA (2004) Infection of triticale cultivars by Puccinia striiformis: first report on disease severity and yield loss. J Plant Dis Protect 111:461–464

Travers SE, Tang Z, Caragea D et al (2010) Spatial and temporal variation of big bluestem (Andropogon gerardii) transcription profiles with climate change. J Ecol 98:374–383. doi:10.1111/j.1365-2745.2009.01618.x

Tubiello NF, Donatelli M, Rosenzweig C, Stöckle CO (2000) Effects of climate change and elevated CO2 on cropping systems: model predictions at two Italian locations. Eur J Agron 13:179–189. doi:10.1016/S1161-0301(00)00073-3

Tylianakis JM, Didham RK, Bascompte J, Wardle DA (2008) Global change and species interactions in terrestrial ecosystems. Ecol Lett 11:1351–1363. doi:10.1111/j.1461-0248.2008.01250.x

Vallavieille Pope C, Huber L, Leconte M, Goyeau H (1995) Comparative effects of temperature and interrupted wet periods on germination, penetration and infection of Puccinia recondita f. sp. tritici and P. striiformis on wheat seedlings. Phytopathology 85:409–415

van der Linden P, Mitchell JFB (2009) ENSEMBLES: climate change and its impacts: summary of research and results from the ENSEMBLES project. Met Office Hadley Centre, Fitzroy Road, UK

Yan W, Hunt LA (1999) An equation for modelling the temperature response of plants using only the cardinal temperatures. Ann Bot-London 84:607–614. doi:10.1006/anbo.1999.0955

Acknowledgments

This work was partially funded by the Italian Ministry of Agricultural, Food and Forestry Policies under the AgroScenari project.

About this article

Cite this article

Bregaglio, S., Donatelli, M. & Confalonieri, R. Fungal infections of rice, wheat, and grape in Europe in 2030–2050. Agron. Sustain. Dev. 33, 767–776 (2013). https://doi.org/10.1007/s13593-013-0149-6
