Abstract

Glaucoma is a chronic and irreversible neuro-degenerative disease in which the neuro-retinal nerve that connects the eye to the brain (optic nerve) is progressively damaged and patients suffer from vision loss and blindness. The timely detection and treatment of glaucoma is very crucial to save patient’s vision. Computer aided diagnostic systems are used for automated detection of glaucoma that calculate cup to disc ratio from colored retinal images. In this article, we present a novel method for early and accurate detection of glaucoma. The proposed system consists of preprocessing, optic disc segmentation, extraction of features from optic disc region of interest and classification for detection of glaucoma. The main novelty of the proposed method lies in the formation of a feature vector which consists of spatial and spectral features along with cup to disc ratio, rim to disc ratio and modeling of a novel mediods based classier for accurate detection of glaucoma. The performance of the proposed system is tested using publicly available fundus image databases along with one locally gathered database. Experimental results using a variety of publicly available and local databases demonstrate the superiority of the proposed approach as compared to the competitors.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

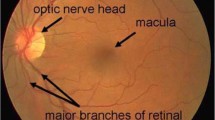

World Health Organization has declared glaucoma to be the second largest cause of blindness all over the world and it encompasses 15 % of the blindness cases in the world. This makes 5.2 million of the world’s population [1] and the number is expected to increase upto 80 million by 2020 [2]. The disease is associated with the malfunctioning of the eye’s drainage system which creates a high intra-ocular pressure which affects the components of the optic nerve. Glaucoma starts with loss of peripheral vision and therefore remains unnoticed in its early stages. However, it can result in permanent blindness if left untreated [2]. Since the disease is irreversible, a screening system is therefore critically required to detect the disease in its early phase and ensure no further loss of vision by curing the drainage structure of eye. One of the indications of glaucoma is the thinning of neuro retinal rim (NRR) and growing of the optic cup hence increasing the cup to disc ratio (CDR). Clinical analysis of glaucoma depends on the CDR value and a CDR of 0.65 or above is declared as glaucomatous [3]. Figure 1 shows fundus images for normal and glaucomatous eye with normal and abnormal optic nerves respectively.

A number of methods have been presented for automated detection of different retinal diseases and optic disc [4]–[6]. Wong et al. [7] used an intelligent fusion based approach for CDR calculation. After localizing the region of interest (ROI), a variational set approach is applied for detection of optic disc (OD) and histogram based intensity analysis is done for segmentation of optic cup. They employed support vector machine (SVM) and neural networks (NN) for classification where SVM performed a little better than NN. A system for glaucoma detection based on High Order Spectra (HOS) features and texture based predictors was proposed in [8]. SVM , Naïve Bayes and Random forest classifier have been used for supervised classification. The techniques were applied on 60 images obtained from local database and achieved accuracy of 91 %. A variation set methodology was applied on the red component of the color image for the segregation of optic disc and vertical cup to disc ratio was computed by Zhang et al. [9]. This system was applied on 1564 images collected from Singapore Eye Research Institute(SERI) and achieved accuracy of 96 %. Sagar et al. [10] presented an automated technique for the segregation of optic disc and cup. Local image information for each point of interest in ROI is generated which was added as a feature to cater for the challenges like image variations near or in ROI. The cup boundary was also segregated using the vessel bending indicator (r bends strategy) at the boundary of cup. Spline fitting technique is applied to crop the boundary and system detected glaucoma with an accuracy of 90 % on 138 images. Recently, a review on glaucoma detection technique is done by Haleem et al. [11]. It was highlighted that the inclusion of some more signs like vascular and shape based changes in OD can be considered along with CDR while detection glaucoma from fundus images. They have also concluded that although there are a number of signs which can be seen in digital fundus images, the use of OCT images is still important for reliable and timely detection of glaucoma.

There are still open issues in automated glaucoma detection which include correct localization of OD even in the presence of other pathologies and a detailed feature set for true representation of glaucoma other than just CDR or RDR. The proposed system addresses these issues and presents a novel robust system for OD localization which works even in the presence of noise and other retinal pathologies. The second contribution of this research is the construction of a detailed hybrid feature set which combines traditional RDR and CDR with spatial and spectral features to make a novel yet comprehensive feature set. The proposed system also contains a novel classifier with the capabilities of handling anomalies and multivariate distributions. Another major contribution is in generation of an annotated database purely for flaucoma detection which can be used by other researchers for evaluation of their methods. We have made this database available on request on our group web page [12].

This article comprises of four sections. "Proposed system" section contains proposed methodology followed by material and experimental results "Experimental results" section. The conclusion and discussion are given "Discussion" section.

Proposed system

Glaucoma is a pathological condition of optic nerve damage and is the second leading cause of vision loss. It is known as silent thief of sight. In this article, we present a computer aided diagnostic system for early and automated detection of glaucoma using a novel feature set. The proposed system consists of four main modules including preprocessing, region of interest detection based on automatically localized OD, feature extraction and finally classification. Figure 2 shows the flow diagram of the proposed system which summarizes the steps of the screening system developed for glaucoma detection.

Optic disc localization

Optic disc (OD) localization is first step of computer aided diagnostic system for glaucoma detection. A number of methods for OD localization and detection have been presented [13]–[20] but the main limitation is OD localization in the presence of other bright lesions and noise. In the proposed system, we employ our previously presented method for robust OD localization [21]. It consists of a novel robust OD localization method with the capabilities of true localization even in presence of large bright lesions and noise. This method is based on the assumption that OD normally has bright intensities and secondly blood vessels originates from OD resulting in highest vessel density in OD. In the proposed system, vascular pattern is enhanced by using 2-D Gabor wavelet for their better visibility followed by thresholding based vessel segmentation. In order to increase the accuracy of vessel segmentation, the proposed system uses multilayered thresholding approach to ensure the extraction of small vessel along with the large ones [22]. The next step is to extract candidate bright regions using red channel of colored image or a weighted gray scaled version of colored image. The decision for this selection is based on the saturation level of red channel. We use red channel if mean intensity value of red channel is less than an empirical values of 220. Due to circular shape of OD, an inverted Laplacian of Gaussian (LoG) filter is used to enhance the high intensity regions in the fundus image [21]. The size of LoG filter is set equal to the size of image. This is estimated by specifying σ = 53 which is calculated empirically using all datasets. The bright candidate regions are extracted by selecting pixels having top 60 % response from the LoG filtered image. A window of 100 × 50 is placed on center of every candidate region and vessel density is computed by counting the ON pixels within window from already segmented vascular map. OD position is localized finally by selecting the region which has the highest vessel density [21]. Figure 3 shows the step wise output for OD localization method.

From left to right and top to bottom: original fundus image; gabor wavelet based enhanced image; vascular mask using multilayered thresholding [22]; red channel of original image; spatial domain LoG filter with sigma = 53; filtered image after applying LoG filter on red channel; candidate regions after application of threshold; region with highest blood vessel density is marked as OD; region of interest extracted from localized OD

Feature extraction

Once OD is localized, the region of interest (ROI) containing OD and its neighboring pixels is then extracted. Average diameter (D) of OD for whole database is measured and a region of 2D × 2D is extracted from original input image. After extracting the ROI, a number of features are extracted to generate comprehensive feature space representation and accurate classification. The proposed system represents each ROI with a hybrid feature set consisting of following features.

Cup to disc ratio CDR (f1)

CDR, which is the ratio of diameter of optic cup to disc, is the most commonly used indicator of glaucoma. A CDR value of less than or equal to 0.5 is considered as normal whereas the value above 0.5 is an indicator of a glaucoma suspect. Any value above 0.64 shows a high risk of glaucoma [23]. In the proposed system, vertical cup to disc ratio is computed as shown in Fig. 4.

The disc is extracted from colored image by applying morphological closing [24] on the ROI image to suppress the blood vessel. This gives a smooth fundus region ϕ f having bright regions only. The result of morphological closing is converted to gray scale and adaptive thresholding is applied to convert it into binary image. Canny edge detection is applied on the binary image to get the boundary of optic disc.

For the extraction of cup, green plane of colored ROI is used. As the cup is much brighter in contrast as compare to disc, it is extracted by applying a relatively higher threshold than the one which is used for extraction of disc. The threshold for the cup is specified in such a way that it selects pixels having top 20 % intensity values in ROI. Canny edge filter is applied in order to get the boundary of cup. The areas of both cup and disc are measured and used in the calculation of CDR.

Rim to disc ratio RDR (f 2 )

The Neuroretinal rim between disc and cup boundary is of significant importance. The increased IntraOcular Pressure (IOP) results in thinning of the Neuroretinal rim between optic cup and optic disc. In the proposed system, vertical RDR has also been added in feature set as it is an indicator for glaucoma [23]. In computing RDR, the ratio of thickness of NRR and the disc diameter is computed. Figure 5 shows the NNR thickness against disc diameter.

Unlike CDR value, a decrease in the value of RDR indicates an effected eye. The maximum value of CDR can go up to 1 if the cup becomes exactly of the size of disc but RDR value cannot exceed 0.5 [23]. A healthy eye can have RDR up to 0.45 whereas an RDR value of 0.1–0.19 is considered as glaucoma suspect. Any value of RDR which is less than 0.1 is an indicator of glaucoma [3], [25]. In the proposed system, vertical ratios of Rim to Disc diameters have been employed to classify the images as normal or glaucomatous.

Spatial features (f 3 − f 7)

The use of spatial features are motivated by the fact that area of cup changes from normal to glaucomatous eye and intensity of cup is normally higher than the intensity of disc. The proposed spatial features are based on the intensity values of red channel of ROI. Before extraction of spatial features, contrast enhancement is applied on red channel of ROI image to improve the contrast of optic disc and optic cup for easy detection of glaucoma using a w × w sliding window. Here an assumption is made that w is large enough to contain a statistically representative distribution of cup as given in Eq. 1

where \(\Phi _\omega\) is the sigmoidal function for a window defined as

\(\Phi _{fmax}\), \(\Phi _{fmin}\) are the maximum and minimum intensity values of red channel image respectively. \(m_\omega\) and \(\sigma _\omega\) are the mean and variance of intensity values within the window. The spatial features are:

Mean intensity \((f_3)\) It is the mean intensity value of enhanced red channel pixels. A glaucomatous image contains a larger cup than that of a normal image therefore the number of pixels with higher intensity values is also greater in abnormal case.

Standard Deviation \((f_4)\) It is the standard deviation value of enhanced red channel pixels which shows the spread of intensity values.

Energy \((f_5)\) Energy of an image is the sum of squares of all pixel intensities. Glaucomatous images have a higher energy because they contain more brighter parts as compared to normal images.

Mean and standard deviation of gradient magnitude \((f_6, f_7)\) Gradient magnitude for enhanced red channel is computed using sobel operator [24]. The mean and standard deviation of this gradient magnitude image are computed.

Spectral features \((f_8, f_9, f_{10})\)

The spectral features are based on higher order spectra (HOS) to have more reliable information related to image [8]. The bispectrum \(X_{BS}\) of a signal x(nT) for frequencies \(\omega _1\) and \(\omega _2\) is given by Eq. 3

where \(X(\omega )\) is the Fourier transform, \(^*\) denotes the conjugate of Fourier transform and \(E[\cdot ]\) is the expectation operation. Spectral features consist of mean magnitude of bispectrum \((Mag_{BS})\), normalized bispectral entropy \((NE_{BS})\) and normalized bispectral squared entropy \((NE_{BS^2})\). Their mathematical expressions are given in Eqs. 4–6 [8].

where \(p_n = (|X_{BS}(\omega _1,\omega _2)|)/\sum _\Omega |X_{BS}(\omega _1,\omega _2)|)\) and \(q_n = (|X_{BS}(\omega _1,\omega _2)|^2)/(\sum _\Omega |X_{BS}(\omega _1,\omega _2)|^2)\).

The novelty of feature extraction phase lies in generation of a feature vector consisting of hybrid features representing different aspects of OD to discriminate glaucomatous OD from normal. Traditional features such as CDR and RDR contribute mostly in achieving high accuracy but combining them with the other descriptors results in even better accuracies. We have performed Wilcoxon and Ansari-Bradlay rank tests to give a more insight about the effectiveness of individual features. Table 1 shows the performance evaluation of features using these rank tests. The features are arranged in descending order of their absolute scores. Although last few features can be excluded due to low scores in one test but as they give acceptable scores in other test, so we have not performed feature selection here.

Multivariate m-mediods based modeling and classification of glaucoma

The feature extraction phase extracts different features from ROI of each fundus image and represents it in form of a feature vector F

Given the feature vector representation of optic nerve head, we now present our proposed classification approach for classification of retinal image as normal or suffering from glaucoma. The proposed approach transforms feature vector representation by employing supervised transformation to increase inter-class distance whilst decreasing intra-class distance. We employ Local Fisher discriminant analysis (LFDA) to perform supervised enhancement of features. LFDA identifies principal components in the original feature space that results in maximized discrimination between different classes. More formally, let \(DB=\{F_{1},F_{2},..,{F_{n}}\}\) be a set of n training samples belonging to the two classes of retinal images {normal retinal image, glaucoma}. The between class and within class scatter matrices are computed as:

where \(\Vert .,.\Vert\) is a Euclidean distance function and

Here, \(n_{k}\) is the membership count of class \(\mathbf {C_{k}}\) and \(\varsigma _{i}\) is the average distance of sample \(F_{i}\) with its k nearest neighbors. We set \(k=5\) based on empirical evaluation. This local scaling of distance between two samples affinity matrix is critical in handling variation of distribution of samples within a given pattern. Eigenvalue decomposition of \(\aleph _{b}E=\lambda \aleph _{w} E\) is then performed where \(\lambda\) is a generalized eigenvalue and E is the corresponding eigenvector. LFDA-transformed enhanced feature space representation of optic nerve head in retinal image is then computed as:

where \(\{E_{1},E_{2},..,E_{m}\}\) are eigenvectors arranged in descending order w.r.t. their corresponding eigenvalues \(\{\lambda _{1}, \lambda _{2},.., \lambda _{m}\}\).

The enhanced feature space representation of optic nerve head is now used to generate models of normal retinal images and retinal imaged affected by glaucoma. As we expect to have variation in the number and distribution of samples within these two classes, we employ multivariate m-Mediods based modeling and classification approach to handle multimodal distribution of samples within a modeled pattern [26]. Modeling of patterns using the proposed approach is comprised of three steps. In the first step, the quantized representation of training samples, referred to as mediods, are generated. Our approach tends to identify mediods in a fashion that the number of identified mediods in different parts of the distribution is proportional to the density of samples. We present an extension of self organizing maps (SOM) based learning approach to identify mediods. Let \(DB^{(i)}\) be the enhanced feature space representation of training data belonging to class i and W the weight vector associated to each output neuron. The SOM network is initialized with a greater number of output neurons than the desired number of mediods m. The value of \(\#_{output}\) is determined empirically as:

where \(\xi =size(DB^{(i)})/2\). The weight vector representation of output neurons \(W_i\) (where \(1\le i\le \#_{output}\)) are then initialized from the probability density function (PDF) \(N(\mu ,\Sigma )\) estimated from training samples in \(DB^{(i)}\). The enhanced feature space representation of glaucoma are sequentially input to train the network. Identify k Nearest Weights (k-NW) to current training sample F using:

where \(\mathbf {W}\) is the set of all weight vectors, C is the set of k closest weight vectors, \(\Vert ,.,\Vert\) is the Euclidean distance function and \(k=\delta (t)\) where \(\delta (t)\) is a neighborhood size function whose value decreases gradually over time as specified in Eq. (16). Network is trained by updating a subset of the weights (C) using

where \(W_c\) is the weight vector representation of output neuron c, j is the order of closeness of \(W_c\) to F \((1\le j\le k)\), \(\zeta (j,k)=exp(-(j-1)^2/2k^2)\) is a membership function that has value 1 when \(j=1\) and falls off with the increase in the value of j, \(\alpha (t)\) is the learning rate of SOM and t is the training cycle index. The learning rate \(\alpha (t)\) and neighborhood size \(\delta (t)\) are decreased exponentially over time using:

where \(t_{max}\) is the maximum number of training iterations and \(\delta _{init}\) is the initial neighborhood size whose value is empirically set to 5. This learning process is repeated for certain number of training iterations. Training samples are then assigned to their closest output neurons. Output neurons with no memberships are filtered as they are not representing and part of the normality distribution of a given class. As we have updated the network with greater number of output neurons as compared to the desired number of groupings, we merge the most similar output neurons (i, j) (indexed by (a, b)) as:

where |.| is the membership count function and the index of most similar output neurons is obtained as:

The output neurons are merged iteratively till the number of weight vectors gets equivalent to m. The weight vector \(\mathbf {W}\) is appended to the list of mediods \(\mathbf {M}^{(i)}\) modeling the pattern i. These set of mediods generated for different classes is now used for determining the customized normality ranges as specified in later section.

After the computation of m mediods to represent a give pattern c, we determine the set of possible normality ranges separately for each mediod in a given model \(\mathbf {M}^{(c)}\) representing class c. Customized selection of the set of possible normality ranges for each class will enable the mediods to have its own normality range dependant on the local distribution of samples around a particular mediod. It will resultantly enable our proposed modeling approach to cater for multivariate distribution of samples within a given class. More formally, let \(\mathbf {D}^{(c)}\) be the set of possible normality ranges for pattern c. Values of possible normality ranges for a given pattern c is determined by computing the k nearest mediods of a mediod \(M_p \in \mathbf {M}^{(c)}\) as:

where C is the set of k closest mediods w.r.t. \(M_p\). Set of possible normality ranges \(\mathbf {D}^{(c)}\) is then updated as:

The set of normality ranges is updated for \(\forall M_p \in \mathbf {M}^{(c)}\). The whole process is repeated to compute the set of mediods and their corresponding set of possible normality ranges for all the classes.

Once the mediods and set of possible normality ranges has been identified for all the patterns, we select customized normality range \(\wp\) for each mediod depending on the distribution of samples from the same and different patterns around a given mediod. Instead of using all the training data to learn the customized normality range for each mediod, we employ only those training samples that lie in the neighborhood of a given mediod. The mediod memberships of training data is determined by sequentially inputting labeled training instances belonging to all classes and identifying the closest mediod, indexed by p, using:

where Q is the training sample. Let \(\mathbf {\Gamma ^{(c)}}_j\) represents the subset of training samples that have been identified as members of mediod \(M_j\) belonging to class c. We maintain a false positive (\(FP_j\)) and false negative (\(FN_j\)) statistics, corresponding to each mediod \(M_j\), which are initialized to 0. We sequentially input members of mediod \(M_j\) and update the \(FP_{j}^{k}\) and \(FN_{j}^{k}\) statistics, corresponding to possible values of normality ranges \(D_{k} \in \mathbf {D}^{(c)}\) as:

where L(.) is a function that returns the label of a given mediod or sample. Customized range validity index \((\chi )\), to check the effectiveness of different possible normality ranges for a particular mediod, is then computed as:

where \(\beta\) is a scaling parameter to adjust the sensitivity of proposed classifier to false positives and false negatives according to specific requirements. The index of normality range from \(\mathbf {D}^{(c)}\) giving the optimal value of \((\chi )\) for a given mediod \(M_j\) is identified as:

The customized normality range \(\wp _j\) corresponding to mediod \(M_j\) is then identified as:

Once the mediods and their customized normality ranges are identified, the learned m-Mediods model of normality is used to classify unseen retinal images as normal or affected with glaucoma. The classification of feature vector representation of optic nerve head of unseen retinal images, using learned multivariate m-Mediods model, is performed by identifying k nearest mediods, from the entire set of mediods (\(\mathbf {M}\)), w.r.t. query Q as:

The test sample is now tested w.r.t. all the mediods from the set of k nearest mediods starting from closest to farthest mediod. Let \(\imath\) be the index of nearest mediod in the k-NM result (initialized to 1) and r and c be the index \(\imath {th}\) nearest mediod and its corresponding class respectively. Test sample Q is classified to class c if:

If the condition specified in Eq. (28) is not satisfied, we increment the index \(\imath\) by 1 to test sample Q w.r.t. the next nearest neighbor. This process is repeated till \(\imath =k\). If the test sample Q has not been identified as a valid member of any class, it is assigned to the class with highest number of members in the k-NM result as obtained in Eq. (27).

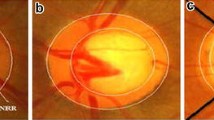

Visualization of proposed multivariate m-Mediods based modeling and classification is presented in Fig. 6. Each point in Fig. 6a depicts a feature vector representation of glaucoma disease. Same color and marker are used to represent samples belonging to same class. The extracted mediods to model glaucoma classes are represented by superimposing squares on each group of instances. Fig. 6b presents a set of possible normality ranges for each modeled pattern. Customized normality ranges identified for various mediods and the resultant normality regions for classes are depicted in Fig. 6c. Test sample is classified to the class if it lies within the normality region represented by one of its constituent mediod.

It is important to note that the proposed classifier doesn’t give a hard classification decision by assigning the feature vector representation of glaucoma disease to the class of majority of nearest mediods. The distance of test sample may be closer to one class but it may not fall in the normality range of that class due to denser distribution of samples around a particular mediod. However, the given feature space representation of glaucoma disease may still fall within the normality threshold of some other mediod with sparse distribution. Our proposed classifier handles this situation by checking the membership of test sample w.r.t mediods belonging to different classes and assigning the sample to the class for which the sample is falling within the normality range. This soft classification enables our proposed approach to handle overlapping complex-shaped class distributions with variable densities which is expected in problem at hand.

Experimental results

Material

The quantitative analysis of the proposed system is performed by using different publicly available databases and one local database for OD localization and Glaucoma detection methods. DRIVE database has 40 retinal fundus images of size 768 \(\times\) 584 [27]. The images were captured using Canon CR5 Non-Mydriatic retinal camera with a 45 degree Field of View (FOV). STARE database has 400 retinal images which are acquired using TopCon TRV-50 retinal camera with 35 degree FOV having size of 700 \(\times\) 605 [28]. DIARETDB (DIAbetic RETinopathy DataBase) is a database which is designed to evaluate automated lesion detection algorithms [29]. It contains 89 retinal images with different retinal abnormalities. The images are captured with a 50 degree FOV and a resolution of 1500 \(\times\) 1152. Digital Retinal Images for Optic Nerve Segmentation Database (DRIONS-DB) contains of 110 images belonging to the Ophthalmology Service at Miguel Servet Hospital, Saragossa Spain [30]. This database contains the ground truths for optic disc segmentation. Hamilton Eye Institute Macular Edema Dataset (HEI-MED) contains 169 fundus images [31]. This database is primarily designed for detection of exudates and macular edema. MESSIDOR is one of the large retinal image database having 1200 images of different diseases, varying dimensions, patients with different ages and ethnicity and at different stage of the disease [32]. HRF (High Resolution Fundus) is another fundus image database which contains 45 images in total [33]. This database contains annotations for vessels, optic disc and glaucoma etc.

A local database of 462 images has been gathered from local hospital. The images are captured using TopCon TRC 50EX camera with a resolution of 1504 \(\times\) 1000. A subset of this database containing 120 images is annotated from two ophthalmologists for glaucoma and named as glaucoma database (GlaucomaDB) [12]. A MATLAB based annotation tool has been used by the ophthalmologists for calculation of CDR and labeling of images as glaucoma or non glaucoma. The sole purpose of this database is to facilitate the researchers in automated detection of glaucoma. The database along with CDR values and glaucoma labeling is available online [12].

HRF is the only database which includes annotations for glaucoma whereas all other databases except STARE have been annotated with the help of two ophthalmologists. In case of MESSIDOR and HEI MED, only 100 and 50 images are annotated for glaucoma out of 1200 and 169 respectively. STARE database is used only to detect the accuracy of OD localization not for glaucoma. Table 2 shows the image level specifications of all databases.

Results

The analysis of the proposed system is done for OD localization and glaucoma detection. The performance of proposed OD localization method is compared with already the proposed systems and the accuracies are reported in Table 3. The pictorial results for OD localization are given in Fig. 7.

The detailed evaluation and analysis of the proposed glaucoma detection method is done by computing sensitivity, specificity, positive predictive value (PPV) and accuracy as shown by Eqs. 29, 30, 31, and 32 respectively.

-

\(T_P\) are true positives, meaning glaucomatous image is correctly classified.

-

\(T_N\) are true negatives, meaning non glaucoma images are correctly classified.

-

\(F_P\) are false positives, meaning non glaucoma images are wrongly classified as glaucomatous.

-

\(F_N\) are false negatives, meaning glaucomatous images are wrongly classified as non glaucoma.

In order to train and test the performance of the system, 70 % of the data is used for training and 30 % is used for testing by getting images of each type and from different databases so that the robustness and versatility of the proposed system can be checked. All databases are first normalized to same size and intensity ranges. The experiments are repeated 10 times in order to avoid bias. Table 4 shows the averaged performance results obtained by the proposed system for each database.

A quantitative comparison of proposed mediods based classifier is performed with other supervised classifier including k nearest neighbors (KNN), multilayered perceptron (MLP) with five hidden layers, Gaussian mixture model (GMM) with 9 mixtures and support vector machine (SVM) with radial basis function as kernel. The experiment has been conducted on newly proposed glaucoma database and the results are presented in Table 5.

Figure 8 shows receiver operating characteristics (ROC) curves for four databases having a good number of glaucoma images. These curves have been generated for the proposed system with mediods based classifier.

Discussion

The proposed method has been tested on seven databases with different image sizes and intensity variation. These variations in the databases have been catered by performing normalization before all processing. Images from all the databases are normalized to a fixed resolution of 1000 \(\times\) 1600. In order to calculate the accuracy of OD localization method, a MATLAB based annotation tool is designed and OD centers are marked for all images with the help of an ophthalmologist. These OD centers are considered as ground truths and the distance of automatically detected OD centers are calculated from these ground truths. OD is considered as correctly detected if the difference between automated and ground truths centers is less than 10 pixels. Table 3 showed the comparison of the proposed system with existing OD localization techniques.

It is important to highlight here that all used databases except DRIVE and DRIONS contain images with bright lesions and acquisition noise. The results support the proposed method for OD localization. The Fig. 7 contains images with large number of lesions especially the bright lesions which complicates the localization of OD. The figure also contains an image with distorted vessels. In all these cases, simple intensity or vessel tracking based methods will not give desired results. However, the proposed method has correctly localized the OD in the presence of unwanted artifacts.

Glaucoma detection results are also presented in Table 5. As is obvious from the table, the proposed glaucoma detection using multivariate m-Mediods based approach outperforms competitors. The superior performance of the proposed approach is due to the fact that it can handle complex shape classes with multivariate distribution of samples as highlighted in Fig. 6. On the other hand, classifiers such as GMM can effectively handle only those patterns whose distribution is majorally Gaussian [34]. It is not effective in handling complex shape classes with tight and complex decision surfaces. SVM, on the other hand, handles patterns with complex decision boundaries. However, SVM has the tendency to tilt more towards classes with more number of training samples in its attempt to maximize the overall accuracy. This is not desirable in medical image analysis where we have only limited training data related to sensitive or extreme cases [34]. It is further noted that incorporating supervised feature enhancement using LFDA improves class separation thus helping different classifiers to improve their original classification capabilities. This fact is clear from the results given for LFDA-GMM and LFDA SVM in Table 5.

The proposed system has addressed four main limitations which are currently present in automated glaucoma detection (i) Robust algorithm for localization of OD in the presence of other pathologies so that region of interest can be extracted accurately for glaucoma detection (ii) A detailed feature set for true representation of glaucoma instead of just cup to disc ratio or rim to disc ratio (iii) An accurate classifier with capability of handling anomalies and multivariate distribution of samples within a class (iv) An annotated database solely designed to facilitate the research related to glaucoma detection. The proposed system has taken care of all these issues as main contributions of this article.

Conclusion

In this article, we have presented a new method for accurate detection of glaucoma from colored retinal images. The proposed system extracted optic disc using a novel method which analyzed vessel based features for accurate detection of optic disc. Once optic disc is detected, a region of interest is extracted for evaluation of optic disc properties. The proposed system extracted a number of features consisting of cup to disc ratio, rim to disc ratio, spatial and spectral features. A multivariate mediods based classifier is used for detection of glaucoma.

The testing of the proposed system has been conducted using publicly available databases and one locally gathered dataset with the help of Armed forces institute of ophthalmology (AFIO). A total of 554 images from different sources are used for proper testing and evaluation of the proposed system. The results have shown the validity of the proposed system. It is also supported by the results that proposed OD detection method and classifier outperformed the existing state of the art methods and classifiers.

References

Thylefors B, Negrel AD (1994) The global impact of glaucoma. Bull World Health Organ 72(3):323–326

Quigley HA, Broman AT (2006) The number of people with glaucoma worldwide in 2010 and 2020. Br J Ophthalmol 90:262–267

Spaeth GL (1981) Appearances of the optic disc in glaucoma: a pathogenetic classification. In: Trans Acad Ophthalmol Symposium on Glaucoma. CV Mosby, St. Louis

Akram MU, Khalid S, Khan SA (2013) Identification and classification of microaneurysms for early detection of diabetic retinopathy. Pattern Recognit (Elsevier) 46(1):107–116

Esmaeilia M, Rabbania H, Dehnavia AM (2012) Automatic optic disk boundary extraction by the use of curvelet transform and deformable variational level set model. Pattern Recognit 45(7):2832–2842

Xua J, Chutatape O, Sung E, Zheng C, Kuan P (2007) Optic disk feature extraction via modified deformable model technique for glaucoma analysis. Pattern Recognit 40(7):2063–2076

Wong DWK, Liu J, Lim JH, Tan NM, Zhang Z, Lu S, Li H, Teo MH, Chan KL, Wong TY (2009) Intelligent fusion of cup-to-disc ratio determination methods for glaucoma detection in ARGALI. In: 31st Annual international conference of the IEEE EMBS Minneapolis, Sept 2–6

Rajendra Acharya U, Dua S, Du X, Vinitha Sree S, Chua CK (2011) Automated diagnosis of glaucoma using texture and higher order spectra features. IEEE Trans Inf Technol Biomed 15(3):449–455

Zhang Z, Lee BH, Liu J, Wong DWK, Tan NM, Lim JH, Yin F, Huang W, Li H (2010) Optic disc region of interest localization in fundus image for glaucoma detection in ARGALI. In: 5th IEEE conference on industrial electronics and applicationsis

Sagar V, Balasubramanian S, Chandrasekaran V (2007) Automatic detection of anatomical structures in digital fundus retinal images. In: MVA2007 IAPR conference on machine vision applications, pp 483–486

Haleem MS, Han L, van Hemert J, Li B (2013) Automatic extraction of retinal features from colour retinal images for glaucoma diagnosis: a review. Comput Med Imaging Graph 37(7):581–596

Biometrics and medical image/signal anaysis research group(BIOMISA), www.biomisa.org/glaucomadb

Ying H, Zhang M, Liu JC (2007) Fractal-based automatic localization and segmentation of optic disc in retinal images. In: 29th Annual international conference of the IEEE EMBS, pp 4139–4141

Qureshi RJ, Kovacs L, Harangi B, Nagy B, Peto T, Hajdu A (2012) Combining algorithms for automatic detection of optic disc and macula in fundus images. Comput Vis Image Underst 116(1):138–145

Zhang D, Yi Y, Shang X, Peng Y (2012) Optic disc localization by projection with vessel distribution and appearance characteristics. In: 21st international conference on pattern recognition (ICPR 2012), pp 3176–3179

Lupascu CA, Tegolo D, Rosa LD (2008) Automated detection of optic disc location in retinal images. In: 21st IEEE international symposium computer based medical systems (CBMS), pp 17–22

Akram MU, Khan A, Iqbal K, Butt WH (2010) Retinal image: optic disk localization and detection. Image Anal Recognit Lect Notes Comput Sci LNCS 6112:40–49

Youssif AR, Ghalwash AZ, Ghoneim AR (2008) Optic disc detection from normalized digital fundus images by means of a vessels’ direction matched filter. IEEE Trans Med Imaging 27(1):11–18

Sekhar S, Nuaimy WA, Nandi AK (2008) Automated localisation of optic disc and fovea in retinal fundus images. In: 16th European signal processing conference (EUSIPCO 2008), Lausanne, Aug 25–29

Aquino A, Arias ME, Marin D (2010) Detecting the Optic Disc Boundary in Digital Fundus Images using morphological, edge detection and feature extraction techniques. IEEE Trans Med Imaging 29:1860–1869

Usman A, Abbas S, Akram MU, Nadeem Y (2014) A robust algorithm for optic disc segmentation from colored fundus images. Image Analysis and Recognition, Lecture Notes in Computer Science, LNCS. Springer, Berlin, pp 303–310

Akram MU, Khan SA (2013) Multilayered thresholding-based blood vessel segmentation for screening of diabetic retinopathy. Eng Comput (EWCO) 29(2):165–173

Spaeth GL, Lopes F, Junk AK (2006) Systems for staging the amount of optic nerve damage in glaucoma: a critical review and new material. Surv Ophthalmol 51:293–315

Gonzalez RC, Woods RE (2008) Digital image processing, 3rd edn. Prentice Hall, Upper Saddle River

Spaeth GL (2003) Primary open angle glaucoma. In: Rhee DJ (ed) Glaucoma color atlas and synopsis of clinical ophthalmology, vol 5. McGraw-Hill Professional, New York, pp 204–229

Khalid S (2010) Activity classification and anomaly detection using m-Mediods based modeling of motion patterns. Pattern Recognit 43(10):3636–3647

Lalonde M, Gagnon L, Boucher MC (2000) Non-recursive paired tracking for vessel extraction from retinal images. Vis Interface 2000:61–68

Hoover A, Goldbaum M (2003) Locating the optic nerve in a retinal image using the fuzzy convergence of the blood vessels. IEEE Trans Med Imaging 22(8):951–958

Kauppi T, Kalesnykiene V, Kamarainen JK, Lensu L, Sorri I, Raninen A, Voutilainen R, Uusitalo H, Klviinen H, Pietil J (2006) DIARETDB1 diabetic retinopathy database and evaluation protocol. Technical Report

Carmona EJ, Rincón M, García-Feijoo J, Martínez-de-la-Casa JM (2008) Identification of the optic nerve head with genetic algorithms. Artif Intell Med 43(3):243–259

Giancardo L, Meriaudeau F, Karnowski T, Li Y, Garg S, Tobin KW Jr, Chaum E (2012) Exudate-based diabetic macular edema detection in fundus images using publicly available datasets. Med Image Anal 16(1):216–226

MESSIDOR, http://messidor.crihan.fr/index-en.php

Khler T, Budai A, Kraus M, Odstrcilik J, Michelson G, Hornegger J (2013) Automatic no-reference quality assessment for retinal fundus images using vessel segmentation. In: 26th IEEE internatioal symposium on computer-based medical systems, Porto

Khalid S, Arshad S (2013) A robust ensemble based approach to combine heterogeneous classifiers in the presence of class label noise. In: IEEE 5th international conference on computational intelligence, communication system and networks, Seoul, pp 24–26. September, 2013

Acknowledgments

This research is funded by National ICT R&D fund, Pakistan. We are also thankful to armed forces institute of ophthalmology (AFIO) for their clinical support and help.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Akram, M.U., Tariq, A., Khalid, S. et al. Glaucoma detection using novel optic disc localization, hybrid feature set and classification techniques. Australas Phys Eng Sci Med 38, 643–655 (2015). https://doi.org/10.1007/s13246-015-0377-y

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s13246-015-0377-y