Abstract

There has been a recent surge of eHealth programs in cancer and other content areas, but few reviews have focused on the methodologies and designs employed in these studies. We conducted a systematic review of studies of eHealth interventions for cancer prevention and control published between 2001 and 2010, applying the Pragmatic Explanatory Continuum Indicator Summary (PRECIS) criteria and external validity components from the Reach Effectiveness Adoption Implementation Maintenance (RE-AIM) framework. We identified 113 studies of eHealth interventions focused on cancer prevention and control. Most studies fell midway along the explanatory/pragmatic trial continuum, but few reported on the practical feasibility criteria needed for translation. Despite vast interest in cancer eHealth and the applied nature of this field, few studies considered key external validity issues. There is a need for use of alternative pragmatic study designs and transparent reporting of external validity components to produce more rapid and generalizable results.

BACKGROUND

Reductions in cancer morbidity and mortality are partly attributable to interventions addressing modifiable risk factors and screening behaviors [1]. However, such advancements in cancer outcomes are not observed among all populations, specifically those with limited access to cancer care and efficacious behavior interventions [2, 3]. The recent surge of eHealth interventions (EHIs) presents unique opportunities to enhance cancer prevention and control by increasing intervention reach, adapting to various contextual conditions, being readily available where users live, work, and play, and tailoring information to patients' needs [4–6].

eHealth research is a relatively young field that is rapidly growing. EHIs have been found to be effective in promoting change in behaviors, knowledge, self-efficacy, and clinical outcomes [4–8]. While substantial progress has been made, few efficacious EHIs are adopted or sustained in real-world settings beyond the scope of the research project [5]. This lack of translation may be due, in part, to the use of predominantly explanatory (efficacy) research methods, which do not usually evaluate external validity, and to limited reporting of the intervention details (e.g., intervention cost and contextual factors of the implementation setting) that would allow for replication [9, 10]. Moreover, the extent to which eHealth studies have addressed both effectiveness and generalizability is unknown.

To address these issues, two Consolidated Standards of Reporting Trials (CONSORT) statements have been developed that describe reporting criteria for EHIs [10] and pragmatic trials [11]. These statements provide guidance on study design, evaluation methods, and reporting to inform decisions about both the effectiveness and the practical implementation of EHIs [12]. The Pragmatic Explanatory Continuum Indicator Summary (PRECIS), developed by the CONSORT Work Group on pragmatic trials, is designed to help researchers design a study and assess it along the pragmatic–explanatory continuum, a multidimensional continuum displaying varying levels of pragmatism across the dimensions of a particular trial [11, 12]. PRECIS has been used to develop intervention trial designs [13, 14], to assess interventions with common goals and measures [15], and to draw contextual information from systematic reviews [16]. Additional ratings of practical feasibility and other implementation factors related to CONSORT criteria for EHIs [10] may be useful for study design and have been applied in a similar assessment of intervention trials [15].

The purposes of this paper are as follows: (1) review and summarize the literature to assess the extent to which EHI studies in cancer prevention and control have utilized pragmatic trial design features and reported on issues related to translation, generalizability, and feasibility measures; (2) apply practical feasibility and generalizability reporting criteria to EHI trials; and (3) describe our experiences, including review procedures and inter-rater reliability in applying both rating criteria.

METHODS

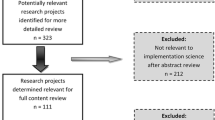

Identification of studies

For the purpose of this review, the description of eHealth interventions put forth by Eng et al. was used [17]. eHealth interventions are defined as “the use of emerging information and communication technology, especially the Internet, to improve or enable health and health care,” which includes internet, email, mobile phone text or applications, interactive voice response, automated and electronic programs, CD-ROMs, and computer-tailored print but excludes telemedicine targeted solely at clinicians that does not have a patient- or consumer-facing interface [17]. This review was based on a larger systematic review of the EHI literature focused on health promotion and disease management published in English between 1980 and mid-2010 [18]. Methods of the original systematic review are described first to provide a context for the current review.

The original systematic review by Rabin et al. [18] included 467 papers. To be included, a study had to evaluate an EHI as the main intervention component, directed primarily toward patients and/or their caregivers rather than healthcare providers. Studies of telemedicine interventions were excluded, as were studies expressly described as feasibility, preliminary, or pilot studies, since it was not considered appropriate to hold these latter studies to the methodological standards used for intervention trials. Studies were classified, using the translational research stages [19], as T1 (efficacy studies) or T2 and later stage (T2+), operationalized as effectiveness, dissemination and implementation, and scale-up studies. Because T1 studies focus mostly on efficacy, from basic biological discovery to candidate health application, they had to use an experimental design with randomized or nonrandomized comparison condition(s) and had to include at least one broadly defined behaviorally oriented outcome (e.g., change in health behavior, knowledge, and self-efficacy) or biological outcome (e.g., BMI and HbA1c). Because T2+ studies span a broad range focused on effectiveness, dissemination, and outcomes research moving existing health applications to practice and population impact, they were included regardless of their study design or measured outcomes. A more detailed description of methods is provided elsewhere [18].
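The inclusion rules described above can be read as a small decision procedure. The following is a minimal sketch of that logic, not the screening tool used in the original review; the `Study` record and all of its field names are hypothetical and introduced only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Study:
    """Hypothetical screening record; field names are illustrative assumptions."""
    phase: str                                  # "T1" or "T2+"
    is_telemedicine: bool
    is_pilot_or_feasibility: bool
    ehi_is_main_component: bool
    patient_or_caregiver_directed: bool
    has_comparison_condition: bool              # randomized or nonrandomized
    has_behavioral_or_biological_outcome: bool


def is_eligible(study: Study) -> bool:
    """Apply the inclusion and exclusion rules as paraphrased from the text."""
    # Exclusions apply regardless of translational phase.
    if study.is_telemedicine or study.is_pilot_or_feasibility:
        return False
    # The EHI must be the main component and aimed at patients or caregivers.
    if not (study.ehi_is_main_component and study.patient_or_caregiver_directed):
        return False
    if study.phase == "T1":
        # T1 studies need an experimental design and a qualifying outcome.
        return (study.has_comparison_condition
                and study.has_behavioral_or_biological_outcome)
    # T2+ studies are included regardless of design or measured outcomes.
    return study.phase == "T2+"


t1_without_comparison = Study("T1", False, False, True, True, False, True)
t2_observational = Study("T2+", False, False, True, True, False, False)
print(is_eligible(t1_without_comparison), is_eligible(t2_observational))
```

The asymmetry between the two branches is the point of the sketch: design and outcome requirements constrain only T1 studies.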

For the current review, we selected a subsample of 149 papers related to cancer prevention and control from the original review described above. Studies from the original review were included if they had relevance to any stage of the cancer control continuum, specifically EHIs that applied behavioral, social, and population sciences to study new approaches that address cancer-related issues including primary prevention, screening, treatment/disease management, survivorship, and end-of-life care [20]. Studies focusing on management of diabetes and cardiovascular disease were included if the main focus was management of cancer-related risk behaviors (e.g., diet and physical activity). Interventions had to have an interactive component (e.g., we excluded tailored print and phone interventions unless the provider used them to counsel patients). We limited our review to studies published between 2001 and 2010. These years reflect a period in which there was a large increase in peer-reviewed publications of both T1 and T2+ EHI study results. We only included studies that focused on adults, since eHealth modalities for adults and adolescents are quite different within the context of cancer prevention and control.

After articles were identified, we grouped them into studies. Since study results could be published in multiple papers, we assigned reviewers by study group rather than by individual paper so that reviewers would have the full range of information provided about the study across the various papers. After assignments were made, each reviewer was instructed to conduct a brief search of publications within PubMed (using the combination of the intervention name and first author) to identify any additional papers relevant to the main study paper and/or group of papers and to include those in the rating process.

Rating of studies for pragmatism and practical feasibility

Reviewers abstracted general information (i.e., citation, topic area, eHealth modality, setting, study design, and target audience) from each study and rated the studies for pragmatism and practical feasibility using two rating scales. The pragmatic rating scale was adapted from the PRECIS review tool, using the PRECIS criteria as a means to identify the extent to which a trial was widely applicable (pragmatic) or more mechanistic (explanatory) [12, 16]. The PRECIS criteria include 10 domains related to participant eligibility criteria, flexibility of the experimental and comparison conditions, follow-up intensity, primary trial outcomes, participant compliance, practitioner adherence to study protocol, and primary analysis. A more detailed description illustrating the extremes of explanatory and pragmatic approaches to each PRECIS domain and the PRECIS review tool is provided elsewhere [12, 16].

The practical feasibility rating scale was previously developed by members of the study team (BR, RG, and BG) based on the Reach Effectiveness Adoption Implementation Maintenance (RE-AIM) framework [15, 21]. We adapted this rating scale to include translational aspects of CONSORT reporting criteria of eHealth intervention trials [10, 21]. Similar to PRECIS, the practical feasibility rating criteria were adapted to assess the extent to which a trial was pragmatic or explanatory in addressing issues important to potential eHealth adopters that were not included within the PRECIS criteria. Practical feasibility domains focused on setting and participant representativeness (i.e., how typical are recruited settings/participants of target population), participant engagement with all parts of the intervention, intervention adaptation during study, program sustainability, unintended effects (i.e., harmful or beneficial consequences), monetary costs of intervention, and intervention resources (i.e., extent to which minimal intervention resources were reported). Both rating scales used a five-point Likert scale for responses, where “5” indicated a completely pragmatic and “1” a completely explanatory approach. The rating form is available upon request from the lead author.

Seven reviewers participated in the rating process. All reviewers were trained on both the PRECIS and practical feasibility criteria. Training sessions also served to develop consensus on all rating domains. The rating form was pilot-tested and refined based on the ratings of a subsample of four papers by all reviewers. For studies in which the EHI replaced practitioners with no personal or phone contact, ‘not applicable’ ratings were applied to the relevant PRECIS domains on practitioner expertise and practitioner compliance with study protocol. After refinement and clarification of the rating process, the entire group reviewed two additional papers to pilot the revised criteria. For the remainder of the papers, two reviewers were assigned to each study.

Analyses

A total of 113 studies covered by 149 individual publications were independently rated by two reviewers (Table 1). Average scores were calculated for each study on PRECIS and practical feasibility domain for areas in which ratings were appropriate. Overall composite mean scores across all studies were calculated for PRECIS and practical feasibility domains (Table 2), and individual scores are available upon request. These scores were aggregated by study characteristics including intervention settings, intervention topic, eHealth modalities, target audience, cancer control continuum, year published, and translational phase. Analyses of variance (ANOVA) with Tukey's post hoc corrections were conducted to compare average scores across study characteristics (Table 3). A trend analysis of composite mean scores by domain across all publication years was conducted using a nonparametric test for trends based on the Wilcoxon–Mann–Whitney test.
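The scoring steps described above can be sketched in a few lines. This is an illustration under stated assumptions rather than the analysis code used in the review: ratings marked ‘not applicable’ are represented as `None` and dropped before averaging, and a domain rated by only one of the two reviewers is assumed to take that single rating. The source does not specify either convention, and the function names are hypothetical.

```python
from statistics import mean
from typing import Optional, Sequence

Rating = Optional[int]  # 1 (explanatory) to 5 (pragmatic); None = not applicable


def domain_score(rating_a: Rating, rating_b: Rating) -> Optional[float]:
    """Average the two reviewers' ratings for one domain, skipping N/A."""
    rated = [r for r in (rating_a, rating_b) if r is not None]
    return mean(rated) if rated else None


def composite_score(reviewer_a: Sequence[Rating],
                    reviewer_b: Sequence[Rating]) -> Optional[float]:
    """Mean of the per-domain scores over the domains that could be rated."""
    per_domain = [domain_score(a, b) for a, b in zip(reviewer_a, reviewer_b)]
    scored = [s for s in per_domain if s is not None]
    return mean(scored) if scored else None


# Hypothetical ratings for one study on four domains; the third domain
# (e.g., practitioner expertise) is not applicable for both reviewers.
reviewer_a = [3, 2, None, 4]
reviewer_b = [4, 2, None, 3]
study_composite = composite_score(reviewer_a, reviewer_b)
print(study_composite)
```

Dropping unrated domains means composite scores for different studies can rest on different numbers of domains, which matters when interpreting comparisons across studies in which the EHI replaced the practitioner.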

RESULTS

Of the 113 studies, 23 (20 %) were physical activity interventions, 78 (69 %) focused on primary prevention, 69 (61 %) were delivered through web-based or computer-tailored (CT) web-based modalities, and 68 (60 %) were not tailored. The majority of the studies were implemented in community settings (51, 45 %), with at-risk populations (58, 51 %), conducted as randomized-controlled trials (99, 88 %) and as T1 studies (97, 86 %). More details about study characteristics are summarized in Table 3.
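As a quick arithmetic check, the percentages above can be recomputed from the reported counts and the denominator of 113 studies. The snippet below is only a consistency check on the figures quoted in this paragraph; the dictionary labels are shorthand introduced here.

```python
N_STUDIES = 113  # total number of studies, as reported in the text

# label -> (count, percent), both copied from the paragraph above
reported = {
    "physical activity": (23, 20),
    "primary prevention": (78, 69),
    "web-based or CT web-based": (69, 61),
    "not tailored": (68, 60),
    "community settings": (51, 45),
    "at-risk populations": (58, 51),
    "randomized-controlled trials": (99, 88),
    "T1 studies": (97, 86),
}


def percent(count: int, total: int = N_STUDIES) -> int:
    """Percentage of the total, rounded to the nearest whole number."""
    return round(100 * count / total)


# Collect any label whose recomputed percentage differs from the reported one.
mismatches = {
    label: (count, pct, percent(count))
    for label, (count, pct) in reported.items()
    if percent(count) != pct
}
print(mismatches or "all reported percentages match")
```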

Each study was rated by two reviewers with reasonable reliability on almost all ratings for individual PRECIS and practical feasibility domains. Weighted percent agreement scores for PRECIS domains ranged from 63.9 to 78.5 %, with a median of 73.9 %, and for practical feasibility domains, these ranged from 63.7 to 84.7 %, with median of 78.9 %.
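The text does not state which weighting scheme was used for the weighted percent agreement. As a minimal sketch, the example below assumes linear weights on the five-point scale, so that identical ratings earn full credit, ratings one point apart earn 75 % credit, and ratings at opposite ends of the scale earn none. The function name and the handling of ‘not applicable’ ratings (pairs containing `None` are skipped) are assumptions made for illustration.

```python
from statistics import mean
from typing import Optional, Sequence


def weighted_percent_agreement(rater_a: Sequence[Optional[int]],
                               rater_b: Sequence[Optional[int]],
                               n_points: int = 5) -> float:
    """Linearly weighted percent agreement between two raters (assumed scheme)."""
    if len(rater_a) != len(rater_b):
        raise ValueError("both raters must rate the same number of items")
    # Keep only the items that both raters actually scored.
    pairs = [(a, b) for a, b in zip(rater_a, rater_b)
             if a is not None and b is not None]
    if not pairs:
        raise ValueError("no items were rated by both raters")
    max_gap = n_points - 1  # largest possible disagreement on the scale
    weights = [1 - abs(a - b) / max_gap for a, b in pairs]
    return 100 * mean(weights)


# Hypothetical ratings of four studies on one domain.
agreement = weighted_percent_agreement([3, 4, 2, 5], [3, 3, 2, 1])
print(agreement)
```

Unlike exact percent agreement, this measure gives partial credit for near misses, which suits ordinal ratings; it does not correct for chance agreement the way a weighted kappa would.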

PRECIS scores

Average ratings by PRECIS domain were fairly uniform and ranged between 2.7 and 3.6 across all 10 PRECIS domains (Table 2). Lower domain average ratings (indicating a less pragmatic approach) were observed on experimental intervention flexibility (2.8, SD = 0.9), practitioner expertise (experimental) (2.7, SD = 0.9), and practitioner expertise (comparison) (2.8, SD = 1), while participant adherence to protocol (3.6, SD = 1) and primary analysis (3.5, SD = 0.8) had higher mean scores. Composite mean scores varied by some study characteristics (Table 3). Noticeable differences were seen within study characteristics for intervention topic including multicomponent (3.2, SD = 0.5), cancer screening (3.2, SD = 0.6), and other (3.2, SD = 0.5) compared to smoking studies (2.9, SD = 0.6); eHealth modality including CT print (3.3, SD = 0.4) compared to web-based/email (3.0, SD = 0.5); and study design including quasiexperimental (3.4, SD = 0.6) and group-randomized trial (3.1, SD = 0.4). However, these differences were not statistically significant. Interestingly, a trend analysis showed a significant increase in composite mean scores within the experimental intervention flexibility domain across all publication years (p = 0.02). However, no differences were seen on PRECIS scores across publication years (2001–2005 vs. 2006–2010) or translation phase (T1 vs. T2+).

Practical feasibility scores

Average ratings for practical feasibility domains ranged from 1.5 to 2.8 using the same five-point scale as for the PRECIS ratings (Table 2). Lower domain average ratings (indicating lower levels of reporting or a less pragmatic approach on practical characteristics) were observed on domains related to adaptation/change (1.5, SD = 0.6), program sustainability (1.6, SD = 0.7), and monetary cost of existing treatment (1.6, SD = 0.7), while setting representativeness (2.8, SD = 1) and participant engagement (2.7, SD = 0.7) received higher average ratings. Composite mean scores varied by study characteristics (Table 3). Noticeable differences by study characteristics were seen across intervention setting including schools (1.6, SD = 0.2) compared to both healthcare (2.1, SD = 0.5) and community (2, SD = 0.4), target population including interventions targeted to healthy (1.8, SD = 0.3) vs. diseased individuals (2.2, SD = 0.5), year of publication when comparing 2001–2005 (2.1, SD = 0.5) to 2006–2010 (1.9, SD = 0.4), and translational phase when comparing T1 (1.9, SD = 0.4) to T2+ (2.2, SD = 0.5). These differences were all statistically significant (p < 0.05). Additionally, a trend analysis revealed a significant decrease in composite mean scores within the intervention resource domain across all publication years (p = 0.05).

Overall, PRECIS composite mean scores across all studies were significantly higher than practical feasibility mean scores. In addition, the range of these scores by domain was consistently larger for PRECIS than for practical feasibility across all studies. Figure 1 uses two “spoke and wheel diagrams” to illustrate where PRECIS and practical feasibility domain ratings fell within the pragmatic–explanatory continuum for the highest and lowest scored studies on each scale [12].

Fig. 1 Pragmatic Explanatory Continuum Indicator Summary (PRECIS) and practical feasibility “spoke and wheel” diagrams: (a) PRECIS lowest versus highest scored studies*; (b) practical feasibility lowest versus highest scored studies*. *Illustrates lowest and highest scores of studies for which all domains were scored

DISCUSSION

To our knowledge, there have been no published systematic reviews using PRECIS review criteria or practical feasibility criteria to evaluate studies of EHIs. The purpose of this systematic review was to assess the extent to which studies of EHIs in cancer prevention and control published between 2001 and 2010 utilized pragmatic trial design features and reported on issues related to translation, generalizability, and feasibility measures. Our analyses revealed two noteworthy findings. First, there was little variability in PRECIS scores across all studies. The overall PRECIS composite mean score was 3.12 (domain range, 2.7–3.6), consistent with the composite mean score from the original application of the PRECIS review criteria [16]. However, firm conclusions about what magnitude of score is meaningful have not been feasible given the limited application of PRECIS within the systematic review literature. There were small, statistically nonsignificant differences in overall PRECIS composite mean scores by study characteristics including intervention topic, eHealth modality, and study design. No differences were observed for year of publication and translation phase. However, a trend analysis did show a significant increase in composite mean scores within the experimental intervention flexibility domain, which may reflect the adaptation capabilities of eHealth technology. Given prior reports of PRECIS scores in obesity interventions [15], we were surprised to see so little variability in PRECIS scores both across study characteristics and over time within a larger sample of intervention trials. This may be partly attributable to the fact that PRECIS domains specific to practitioner delivery were often not scored (i.e., missing data), since in many cases, the EHI replaced the role of the practitioner. Additionally, the majority of studies reviewed (88 %) were conducted as randomized-controlled trials. One would expect such homogeneity of study design to shift average PRECIS scores toward the explanatory end. However, randomized-controlled trials may not be appropriate for testing all aspects of EHI components, especially attributes such as implementation strategies, intervention reach, and multiple intervention settings, for which pragmatic trial features would be more appropriate [22, 23].

Second, studies consistently rated lower on practical feasibility scores than on PRECIS scores. This finding held true when comparing scores across study characteristics. Average ratings for practical feasibility domains (range, 1.5 to 2.8) were much closer to the explanatory or efficacy end of the rating scale than PRECIS domain ratings. This finding was due to the low frequency at which studies reported on factors related to costs, setting representativeness, adaptation flexibility, and program sustainability. This lack of reporting on practical feasibility measures, and especially the relative absence of cost and setting representativeness, has been reported in other content areas and is a considerable challenge to translation [24]. EHI trials seem to be the ideal context in which to address several of these measures. Furthermore, adaptation/change scored highly explanatory (average of 1.5), meaning that EHI studies rarely reported adapting an intervention during a trial. This either implies that EHIs must be complete and static throughout the implementation period or at least indicates that no such adaptations were reported in the published articles. A static EHI contradicts common practice in the eHealth industry and rapid learning approaches, for which iterative testing of intervention components is integral to eHealth research and development [22, 25, 26]. Similarly, experimental methods such as the Sequential Multiple Assignment Randomized Trial (SMART) and the Multiphase Optimization Strategy (MOST) systematically test components of EHIs, including implementation components, while addressing both the rigor and relevance of EHI trials [27], yet these methods were seldom applied in the eHealth studies reviewed.

Our review has several limitations. We reviewed only the literature published between 2001 and 2010 and realize that more recent publications exist on EHIs in cancer control and prevention. However, we feel our current review spans an extensive EHI literature, and therefore, we do not expect that these additional publications would change the conclusions of our current review. We also realize that, because of reporting constraints imposed by peer-reviewed journals, published reports may not reflect all aspects of EHIs, especially practical feasibility characteristics. Additionally, PRECIS and eHealth CONSORT criteria were developed after 2005, meaning that criteria applied retrospectively cannot capture study design decisions made in response to them, and it may not be, in some sense, “fair” to evaluate earlier investigations by these standards. However, our paper reviews a relatively large number of studies over a 10-year span and applies two innovative scoring frameworks to identify gaps in the reporting of key translational issues. We demonstrated that such frameworks could be productively and reliably applied to eHealth studies, and we described the review procedures and inter-rater reliability involved.

CONCLUSION

Cancer eHealth interventions have made great, provocative use of cutting-edge technology and are uniquely positioned to study broader-level intervention impact and to test new behavior theories based on interactive technologies [28]. Despite vast interest in cancer eHealth and the applied nature of this field, our findings suggest that few studies used innovative designs to address key translation issues or reported transparently on issues central to dissemination. Given the surge of EHIs and health technology and the lack of evidence-based interventions readily available to consumers [29, 30], there is a need for use of alternative pragmatic study designs, transparent reporting of external validity components to produce more rapid and generalizable results, and comparison of intervention effects assessed along the pragmatic–explanatory continuum by both PRECIS domains and practical feasibility criteria. We encourage investigators to utilize the PRECIS and practical feasibility criteria used in this review to design, test, and evaluate EHIs in the future. Such research can lead to interventions that both work and can be translated more rapidly into practice.

References

1. Eheman C et al. Annual report to the nation on the status of cancer, 1975–2008, featuring cancers associated with excess weight and lack of sufficient physical activity. Cancer. 2012; 118(9): 2338-66.

2. Chu KC, Miller BA, Springfield SA. Measures of racial/ethnic health disparities in cancer mortality rates and the influence of socioeconomic status. J Natl Med Assoc. 2007; 99(10): 1092-100, 1102-4.

3. Saldana-Ruiz N et al. Fundamental causes of colorectal cancer mortality in the United States: understanding the importance of socioeconomic status in creating inequality in mortality. Am J Public Health. 2012.

4. Noar SM, H.N. eHealth Applications: Promising Strategies for Behavior Change. New York: Routledge; 2012.

5. Bennett GG, Glasgow RE. The delivery of public health interventions via the internet: actualizing their potential. Annu Rev Public Health. 2009; 30: 273-92.

6. Strecher V. Internet methods for delivering behavioral and health-related interventions (eHealth). Annu Rev Clin Psychol. 2007; 3: 53-76.

7. Wantland DJ et al. The effectiveness of web-based vs. non-web-based interventions: a meta-analysis of behavioral change outcomes. J Med Internet Res. 2004; 6(4): e40.

8. Murray E et al. Interactive health communication applications for people with chronic disease. Cochrane Database Syst Rev. 2005; 4: CD004274.

9. Glasgow RE. eHealth evaluation and dissemination research. Am J Prev Med. 2007; 32(5 Suppl): S119-26.

10. Eysenbach G. CONSORT-EHEALTH: improving and standardizing evaluation reports of web-based and mobile health interventions. J Med Internet Res. 2011; 13(4): e126.

11. Zwarenstein M et al. Improving the reporting of pragmatic trials: an extension of the CONSORT statement. BMJ. 2008; 337: a2390.

12. Thorpe KE et al. A pragmatic–explanatory continuum indicator summary (PRECIS): a tool to help trial designers. J Clin Epidemiol. 2009; 62(5): 464-75.

13. Riddle DL et al. The pragmatic–explanatory continuum indicator summary (PRECIS) instrument was useful for refining a randomized trial design: experiences from an investigative team. J Clin Epidemiol. 2010; 63(11): 1271-5.

14. Selby P et al. How pragmatic or explanatory is the randomized, controlled trial? The application and enhancement of the PRECIS tool to the evaluation of a smoking cessation trial. BMC Med Res Methodol. 2012; 12: 101.

15. Glasgow RE et al. Applying the PRECIS criteria to describe three effectiveness trials of weight loss in obese patients with comorbid conditions. Health Serv Res. 2012; 47(3 Pt 1): 1051-67.

16. Koppenaal T et al. Pragmatic vs. explanatory: an adaptation of the PRECIS tool helps to judge the applicability of systematic reviews for daily practice. J Clin Epidemiol. 2011; 64(10): 1095-101.

17. Eng TR. The eHealth Landscape: A Terrain Map of Emerging Information and Communication Technologies in Health and Health Care. Princeton: Robert Wood Johnson Foundation; 2001.

18. Rabin BA, G.R. Dissemination of interactive health communication programs. In: Interactive Health Communication Technologies: Promising Strategies for Health Behavior Change. New York: Routledge; 2012.

19. Khoury MJ, Gwinn M, Ioannidis JP. The emergence of translational epidemiology: from scientific discovery to population health impact. Am J Epidemiol. 2010; 172(5): 517-24.

20. Hiatt RA, Rimer BK. A new strategy for cancer control research. Cancer Epidemiol Biomarkers Prev. 1999; 8(11): 957-64.

21. Glasgow RE, Vogt TM, Boles SM. Evaluating the public health impact of health promotion interventions: the RE-AIM framework. Am J Public Health. 1999; 89(9): 1322-7.

22. Pagliari C. Design and evaluation in eHealth: challenges and implications for an interdisciplinary field. J Med Internet Res. 2007; 9(2): e15.

23. Glasgow RE, Chambers D. Developing robust, sustainable, implementation systems using rigorous, rapid and relevant science. Clin Transl Sci. 2012; 5(1): 48-55.

24. Glasgow RE et al. Disseminating effective cancer screening interventions. Cancer. 2004; 101(5 Suppl): 1239-50.

25. Bennett G. eHealth and D&I research: what next? In: Training Institute for Dissemination and Implementation Research in Health. July 9–13, 2012.

26. Greene SM, Reid RJ, Larson EB. Implementing the learning health system: from concept to action. Ann Intern Med. 2012; 157(3): 207-10.

27. Collins LM, Murphy SA, Strecher V. The multiphase optimization strategy (MOST) and the sequential multiple assignment randomized trial (SMART): new methods for more potent eHealth interventions. Am J Prev Med. 2007; 32(5 Suppl): S112-8.

28. Riley WT et al. Health behavior models in the age of mobile interventions: are our theories up to the task? Transl Behav Med. 2011; 1(1): 53-71.

29. West JH et al. There's an app for that: content analysis of paid health and fitness apps. J Med Internet Res. 2012; 14(3): e72.

30. Abroms LC et al. iPhone apps for smoking cessation: a content analysis. Am J Prev Med. 2011; 40(3): 279-85.

Acknowledgments

The preparation of this manuscript was partially funded through the National Cancer Institute Centers of Excellence in Cancer Communication Research (award number P20CA137219). This project has been funded, in whole or in part, with federal funds from the National Cancer Institute, the National Institutes of Health, under contract no. HHSN261200800001E. The content of this publication does not necessarily reflect the views or policies of the Department of Health and Human Services, nor does mention of trade names, commercial products, or organizations imply endorsement by the US government. The opinions expressed are those of the authors and do not necessarily reflect those of the National Cancer Institute.

Additional information

Implications

Practice: Practitioners should look for and expect research reports to provide transparent information to make it possible to determine whether an eHealth program is possible to implement in their setting.

Policy: eHealth journals and grant funding organizations should encourage more transparent reporting on issues related to translation and external validity.

Research: Researchers should more consistently report on PRECIS criteria and other factors related to translation.

About this article

Cite this article

Sanchez, M.A., Rabin, B.A., Gaglio, B. et al. A systematic review of eHealth cancer prevention and control interventions: new technology, same methods and designs?. Behav. Med. Pract. Policy Res. 3, 392–401 (2013). https://doi.org/10.1007/s13142-013-0224-1

DOI: https://doi.org/10.1007/s13142-013-0224-1