Abstract

We present the results of a pre-registered randomised controlled trial (RCT) that tested whether two smartphone-based mindfulness meditation applications (apps) lead to improvements in mental health. University students (n = 208, aged 18 to 49) were randomly assigned to use one of the three apps: Headspace, Smiling Mind, or Evernote (control group). Participants were instructed to use their assigned app for 10 min each day for 10 days, after which they received an extended 30 days’ access to continue practicing at their discretion. Participants completed measures of depressive symptoms, anxiety, stress, college adjustment, flourishing, resilience, and mindfulness at baseline, after the 10-day intervention, and after the 30-day continued access period. App usage was measured by self-report. Mindfulness app usage was high during the 10-day period (used on 8 of 10 days), but low during the 30-day extended use period (less than 20% used the app 2+ times per week). Mindfulness app users showed significant improvements in depressive symptoms, college adjustment, resilience (Smiling Mind only), and mindfulness (Headspace only) from baseline to the end of 10 days relative to control participants. Participants who continued to use the app frequently were more likely to maintain improvements in mental health, e.g. in depressive symptoms and resilience (Headspace only), until the end of the 30-day period. Thus, brief mobile mindfulness meditation practice can improve some aspects of negative mental health in the short term and may strengthen positive mental health when used regularly. Further research is required to examine the long-term effects of these apps.

Mindfulness meditation is an increasingly popular practice aimed at improving mental health in clinical and non-clinical populations (Creswell 2017; Hofmann et al. 2010). Practicing mindfulness meditation and mindfulness-based therapies like mindfulness-based stress reduction (MBSR) (Kabat-Zinn 1982) and mindfulness-based cognitive therapy (MBCT) (Teasdale et al. 2000) have been associated with a number of beneficial effects including reductions in depressive symptoms, negative affect, stress, and anxiety, as well as increases in positive affect, life satisfaction, and vitality (Creswell 2017; Hofmann et al. 2010). Despite these benefits, many mindfulness meditation programmes remain inaccessible because they require the presence of highly qualified instructors (Kabat-Zinn 2003), involve multiple in-person and group training sessions (e.g. MBCT and MBSR 8–12 weeks of 2–2.5 h sessions; Carmody and Baer 2009), and can incur significant financial costs to the meditator (Cavanagh et al. 2014).

The recent development of mobile applications (apps) for smartphones presents a promising opportunity to overcome a number of the barriers associated with typical mindfulness meditation training (Cavanagh et al. 2014; Mani et al. 2015; Plaza et al. 2013). For example, mindfulness meditation delivered via a mobile app allows an experienced instructor to deliver high quality guided meditation training to far more people than face-to-face training can practically allow (Cavanagh et al. 2014). Further, the portable nature of the mobile phone can reduce geographical, social and financial barriers to access (Cavanagh et al. 2014). Smartphone ownership is also increasing rapidly. In 2016, 77% of Americans owned a smartphone, up from just 35% in 2011 (Smith 2017). For some populations, namely younger adults, non-white, and lower-income Americans, smartphones are the only means of access to the Internet at home (Anderson and Horrigan 2016) and they are the preferred method of contact for young people (Oliver et al. 2005). These socio-demographic factors also coincide with populations that are less likely to seek or receive treatment for mental health issues (Prins et al. 2008). Thus, mobile apps can overcome barriers to introducing mindfulness meditation practice to a wide range of people.

Although there are hundreds of mindfulness meditation apps available with collectively millions of downloads, there is a relatively high turnover rate for mindfulness meditation apps in app stores (Larsen et al. 2016; also see Bakker et al. 2016 on Donker et al. 2013). The relative newness of mindfulness meditation apps, combined with the high turnover rate of app technology, means that few studies have rigorously examined the effectiveness of mobile mindfulness apps for improving mental health (Mani et al. 2015; Plaza et al. 2013). Given that mindfulness meditation practice is increasingly used in mental health care settings (Brody et al. 2017) and these apps are promoted as delivering health benefits (e.g. “creating healthier, happier and more compassionate people around Australia and across the globe” Smiling Mind 2017), the evidence gap is particularly concerning because people may turn to mindfulness meditation apps in the absence of contact with a health professional.

While researchers are making inroads in testing web-based mindfulness meditation interventions (see: Spijkerman et al. 2016 for review and meta-analysis), to our knowledge, there are now eight published studies that have tested whether mindfulness meditation apps improve mental health (Ahtinen et al. 2013; Bostock et al. 2018; Carissoli et al. 2015; Economides et al. 2018; Howells et al. 2016; Lim et al. 2015; Wen et al. 2017; Yang et al. 2018). In a randomised, controlled trial, Howells et al. (2016) tested the mindfulness meditation app Headspace for 10 days against an attention control app in a non-clinical adult sample of 121 “self-selected …happiness-seekers” who were recruited to a study that “aimed to enhance well-being” (Howells et al. 2016, p. 169). Participants randomly assigned to use Headspace showed significant improvements in depressive symptoms and positive affect measured after the 10 days of app use when compared to the attention-matched control group. No changes were found for satisfaction with life, flourishing, or negative affect. These findings suggest that using Headspace for 10 days reduces depressive symptoms and improves happiness, albeit over a short period of time and in those motivated to become happier.

In another study, researchers supplied 45 sessions of Headspace to 123 employed adults from the UK (Bostock et al. 2018). Headspace users reported significant improvements in depressive symptoms, anxiety, global well-being and several measures of job strain at 8 weeks post-baseline compared to a waitlist control group (n = 55). Further, those who used Headspace more frequently during the 45 days showed greater improvements in the psychological outcomes than those who used the app less frequently (Bostock et al. 2018). Improvements in well-being and job strain were also sustained at a 16-week follow-up. Other studies with smaller sample sizes have also demonstrated some benefit. For example, in one study, the 27 adults who received 2 weeks of Headspace-based mindfulness training showed improvements in compassionate prosocial responding to an injured confederate compared to 29 adults who received 2 weeks of Lumosity-based cognitive training (Lim et al. 2015). In a one-arm pilot study, 30 medical residents who were given 4 weeks of access to Headspace reported higher mindfulness and positive affect when accounting for frequency of app use (Wen et al. 2017). Carissoli et al. (2015) compared the use of a ‘mindfulness-inspired’ meditation app (“it’s time to relax”) to listening to music or a waitlist control in 56 employed Italian adults over a 3-week period. Both the meditation app users and music listeners showed some improvements in coping with psychological stress.

In a more recent study conducted by Headspace, use of Headspace by 41 adults was associated with a significant improvement in irritability, affect, and stress resulting from external pressure when compared to 28 adults assigned to an audiobook educational control (Economides et al. 2018). Similarly, 45 medical students who were randomised to use Headspace for 30 days reported significant improvements in perceived stress over time and improvements in general well-being when compared to a waitlist control group (Yang et al. 2018). Finally, in a feasibility study, 15 adult users of the Finnish app Oiva (grounded in Acceptance and Commitment Therapy [ACT] incorporating mindfulness training) showed significant improvements in stress and satisfaction with life following 1 month of use (Ahtinen et al. 2013).

On the basis of the previous app-based research, we believe that there may be benefits of app-based mindfulness meditation training, but the conclusions are by no means definitive and consensus on the effectiveness of mindfulness training remains elusive in the broader mindfulness literature (Van Dam et al. 2017). Of the eight mobile mindfulness meditation studies, only one of the published studies used an app-based attention control as the comparison condition (Howells et al. 2016). The rest have used waitlist, alternate attention control, or no controlled comparison conditions, which can only provide insight into whether mobile mindfulness interventions have an impact over and above no treatment. Such designs also do not control for digital placebo effects, that is, the potential for placebo-like non-specific treatment effects in mobile interventions resulting from the high levels of mental and physical attachment, trust, affinity, and reliance on our mobile phones (Clayton et al. 2015; Torous and Firth 2016). Further, with the exception of Bostock et al. (2018), Wen et al. (2017), and Yang et al. (2018), most of these studies have been relatively short—i.e. they deliver less than 2 weeks’ mindfulness meditation practice. Given that the end goal of these tools is to provide a training platform to establish long-term mindfulness practice, it would be useful to know whether these apps are effective over longer periods of time. Also, it is important to address whether use of these tools improves mental health under more naturalistic conditions (e.g. when people use the app at their own discretion) and not just under optimal conditions (e.g. when people are requested to use the app for a specific period of time).

One challenge in testing mindfulness meditation apps is choosing the apps to test. Although a small number of mHealth apps are responsible for over 90% of consumer downloads (Aitken and Lyle 2015), the majority of research-validated apps have already disappeared from the app stores (see Bakker et al. 2016 on Donker et al. 2013). For these reasons, we agree with Meinlschmidt et al.’s (2016) assertion that there is little value in testing a single new app. Unlike Meinlschmidt et al. (2016), we instead believe there is value in identifying and testing popular and currently available tools that have a committed user-base and are likely to remain in use in the near future.

In the present study, we conducted a pre-registered randomised, controlled trial of the effectiveness of two popular and currently available mindfulness meditation apps—Headspace and Smiling Mind—on changes in mental health compared to an attention placebo control app. We selected Headspace and Smiling Mind based on several factors: both apps have a high download rate (over 100,000 downloads at the time of writing, suggesting a higher user-base), similar introductory mindfulness meditation practices that have been well-received by users (e.g. Headspace: 4.7 stars v. Smiling Mind 3.7 stars in Google Play Store at the time of writing), and high-quality ratings as assessed by the Mobile Application Rating Scale (MARS; Stoyanov et al. 2015; 4.0 and 3.7 out of 5.0, respectively; median MARS score of 23 apps was 3.2 out of 5.0; Mani et al. 2015). A key differential is cost; Headspace incurs a fee after a brief trial, whereas Smiling Mind is free. While neither app is necessarily comparable with more rigorous and evidence-based programmes like MBSR (Kabat-Zinn 1982), these apps do provide a number of meditations consistent with common meditation practices (Kabat-Zinn 2013). We also incorporated an app-based attention placebo control condition (Evernote) to help account for digital placebo and treatment expectancies. We examined changes in mental health outcomes over a short-term adherence-requested period (10 days) and over a medium-term discretionary use period (30 days) to mimic both optimal and naturalistic use and uptake of the app. We predicted that individuals using the mindfulness apps would report improved mental health outcomes from baseline to the 10-day follow-up, compared to those using the attention control app. We also predicted that app usage would be higher during the initial 10 days of access, and much lower during the subsequent 30 days of access. 
This prediction is based on evidence that health and fitness app usage typically drops off to below 50% user retention after 1 month (Farago 2012). Finally, in line with Bostock et al. (2018; pilot data first available in Bostock and Steptoe 2013), we predicted that sustained improvement in mental health outcomes for mindfulness app users (but not attention control app users) following the 30 days of discretionary use would only occur for those who continued to use the app.

Method

Participants

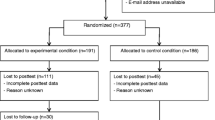

Participants were a convenience sample of 208 undergraduate students between 18 and 49 years old (M = 20.08 years, SD = 2.8 years) from the University of Otago, Dunedin, New Zealand. An additional two participants did not complete the required study measures and thus were excluded from analysis (see Fig. 1. CONSORT diagram for further details). At an alpha level of .05, power of .80, and expected effect sizes of between d = .36 (informed by the depressive symptoms effect size we calculated for Howells et al. 2016—an app-based mindfulness intervention) and d = .62 (informed by the .41 and .62 effect sizes for depression/anxiety and stress, respectively, in Cavanagh et al. 2013—an online mindfulness-based intervention), we registered a recruitment target of 80 participants per condition (i.e. between 42 [d = .62] and 125 [d = .356] participants per condition). We fell short of this recruitment number by approximately 10 people per condition.
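As a rough check on the per-condition targets quoted above, the sketch below applies the standard normal-approximation formula for a two-sided comparison of two independent group means, n = 2(z_{1-α/2} + z_{power})² / d². It is an illustrative approximation only, not the procedure used for the registered calculation, and the function name `n_per_group` is hypothetical. Because it ignores the t-distribution correction, it returns values about one participant lower than the figures of 42 and 125 reported above.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(d: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate per-group n for a two-sided, two-sample comparison of means.

    Uses the normal approximation n = 2 * (z_alpha + z_power)^2 / d^2,
    which slightly underestimates the exact t-based requirement.
    """
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)  # critical value for two-sided alpha
    z_power = std_normal.inv_cdf(power)          # quantile matching the target power
    return ceil(2 * (z_alpha + z_power) ** 2 / d ** 2)


# Effect sizes taken from the text: d = .62 and d = .356
for effect_size in (0.62, 0.356):
    print(effect_size, n_per_group(effect_size))
```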

Participants were recruited from April to August 2015 through the Department of Psychology’s online psychology research participation pool, where research participation could be applied to a small component of their undergraduate Psychology course grade. Participants were largely of New Zealand European descent (73.6%; 12.0% Asian; 5.8% Māori or Pacific Islander; 8.6% other), which reflected the demographics of the wider university community. Informed consent was obtained from all individual participants included in the study. It was recommended that participants access the University’s primary healthcare provider should they have any concerns about their feelings before, during, or after the study. This study was approved by the University of Otago Human Ethics Committee (D15/063).

Procedure

Design

The study consisted of a pre-registered three-arm randomised controlled trial (RCT) that tested the effect of two mobile mindfulness apps (Headspace and Smiling Mind) on changes in mental health, relative to a control app (Evernote). The trial and outcome measures were registered prior to recruitment with the Australian New Zealand Clinical Trials Registry (#368325). The pre-registered mental health outcome measures were depressive symptoms, anxiety, and stress as primary outcome measures, and mindfulness, resilience, flourishing, and college adjustment as secondary outcome measures. The mental health measures were completed at three time points: at baseline before the intervention (time 0), following a 10-day trial of their randomly assigned app (time 1), and following a further 30-day access to their assigned app to use at their own discretion (time 2).

Materials

Headspace is a smartphone application that offers hundreds of hours of guided and unguided mindfulness meditations delivered by Andy Puddicombe (a novice monk in the Theravada Tradition and fully ordained Tibetan Buddhist monk in the Karma Kagyu Lineage; Puddicombe 2017). At the time of this research, the app was structured so that participants must complete the Foundation Level 1 training (previously known as ‘Take 10’; available free of charge) before accessing other content in the app (available following purchase). Foundation Level 1 is a 10-day introduction to mindfulness with formal meditation practices such as mindful breathing (i.e. using the breath as an attentional object of intense focus), body scan (systematically focusing on certain parts of the body), sitting meditation, practice of non-judgement of thoughts and emotions, and other guided meditations that vary the orientation (inward vs. outward vs. no orientation), spatial focus (fixed vs. moving), and aperture of attention (narrow vs. diffuse). Headspace is currently available on iOS and Android platforms and is also accessible via the Headspace website. Participants randomised to the Headspace condition were instructed to download the app and complete the Foundation Level 1 training across 10 days. Following the introductory period, participants were invited to access the other meditation tracks for the next 30 days using a pre-paid Headspace voucher to access the paid content.

Smiling Mind is a smartphone application developed by psychologists and educators that offers hundreds of hours of guided and unguided mindfulness meditation practices across several different mindfulness programmes targeting different age groups (e.g. 16–18 years, adults) and themes (e.g. mindfulness in the classroom, workplace, or to complement sports training). The meditations vary in duration from 1 to 45 min. Smiling Mind is available free of charge. It is currently available on iOS and Android platforms and through the Smiling Mind website. Participants randomised to the Smiling Mind condition were instructed to download the app and use the ‘For adults’ programme for 10 min each day for 10 days. The ‘For adults’ programme features practices such as mindful breathing, body scan, mindful eating (i.e. focusing intently on the thoughts, feelings, and experiences of eating a piece of food), sitting meditation, practice of non-judgement of thoughts and emotions, and other guided meditations that vary the orientation (inward vs. outward vs. no orientation), spatial focus (fixed vs. moving), and aperture of attention (narrow vs. diffuse). If participants ran out of content on that programme during the 10 days, they were instructed to revisit the content they enjoyed or to try out a different programme. Following the 10 days, participants were invited to continue using Smiling Mind for the next 30 days.

Evernote (the attention placebo control application) is an organisation application with a variety of free and pay-for features. Participants randomised to the control condition were instructed to download the Evernote app and use the note-taking function to “jot down all the things you can remember doing on this day last week” for 10 min every day, following a similar control procedure as used in Howells et al. (2016) and Lyubomirsky et al. (2011). Unlike previous research, we provided a more elaborate cover story by describing this activity as a means to practice ‘organisational reminiscing’ (See Supplemental Materials: A for precise scripts). In doing so, we aimed to account for placebo and digital placebo effects (Torous and Firth 2016). As with the other conditions, following the initial 10-day period, participants were invited to continue using Evernote for the next 30 days.

Testing

Participants met with a researcher in groups of one to five people in a psychology laboratory to complete study enrolment. There were up to three study enrolment sessions per day. Using a random number generator, each enrolment session was randomly assigned to an app condition, meaning that all participants in a shared session were assigned to the same condition. Following online informed consent, participants completed a survey of basic demographic information (age, gender, and ethnicity), the time 0 mental health measures (depressive symptoms, anxiety, stress, resilience, flourishing, college adjustment, and mindfulness), and perceptions of their assigned practice (mindfulness meditation or control task) on computers in private cubicles. Next, they received instructions for their randomly assigned condition and were asked to download the app prior to leaving the lab. Starting that day, participants completed a 10-day trial of their randomly assigned app (Headspace n = 72, Smiling Mind n = 63, or attention control app n = 73). Participants were encouraged to complete one 10-min session on their app each day during the first 10 days.
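The session-level allocation described above can be sketched as follows. This is a minimal illustration that assumes simple (unrestricted) randomisation of sessions; the generator, seed, and any blocking actually used in the trial are not reported here, and every name in the code is hypothetical.

```python
import random
from typing import Dict, Iterable, Optional

CONDITIONS = ("headspace", "smiling_mind", "control")


def assign_sessions(session_ids: Iterable[str],
                    seed: Optional[int] = None) -> Dict[str, str]:
    """Randomly assign each enrolment session (not each person) to a condition."""
    rng = random.Random(seed)  # a seed is used here only to make the sketch reproducible
    return {session: rng.choice(CONDITIONS) for session in session_ids}


def assign_participants(roster: Dict[str, str],
                        session_condition: Dict[str, str]) -> Dict[str, str]:
    """Give every participant the condition of the session they attended."""
    return {person: session_condition[session] for person, session in roster.items()}


# Hypothetical roster: participant ID -> enrolment session ID
roster = {"p01": "s1", "p02": "s1", "p03": "s2", "p04": "s3", "p05": "s3"}
session_condition = assign_sessions(sorted(set(roster.values())), seed=2015)
allocation = assign_participants(roster, session_condition)
```

Because allocation happens at the session level, participants who enrolled together always share a condition, which is why the three arms can end up with unequal sizes.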

Starting the first night of the 10-day trial, participants completed a short daily online survey (sent via SMS) of activity adherence. Following the 10-day trial, participants completed a follow-up survey that was sent to their email address (time 1). Excluding demographic questions, this survey was identical to the one they completed at time 0. Finally, all participants were given an additional 30-day access to their assigned app to use at their own discretion. For Headspace users, this involved emailing them a ‘user code’ voucher which they could apply online to gain an additional 30 days of access to the content; for Smiling Mind and attention control app users, this involved emailing them to inform them that their access had rolled over. All participants were told to use the apps at their own discretion and that their app use would not be actively tracked. Participants completed a final survey (time 2; identical to the time 1 survey) at the end of their 30-day access. All participants were debriefed about the nature of the study via email approximately 2 weeks after the time 2 survey. Participants could receive a small amount of course credit upon completion of a worksheet about the study, but no remuneration was tied to app adherence.

Measures

Depressive Symptoms

Symptoms of depression were assessed using the 20-item Center for Epidemiological Studies Depression Scale (CES-D; Radloff 1977). Participants rated how much they had experienced a range of depressive symptoms over the past week (e.g. I was bothered by things that do not usually bother me) on a 4-point scale ranging from 0 [Rarely or none of the time (< 1 day)] to 3 [Most or all of the time (5–7 days)]. Items were summed to create a score ranging from 0 to 60, with higher scores indicating more symptoms of depression. Although we analysed the CES-D as a continuous measure, a score of 16 or higher is frequently used to identify individuals experiencing significant symptoms of depression (Lewinsohn et al. 1997). This scale has high internal consistency (αs .89, .91, and .93 at time 0, time 1, and time 2 survey points in this study, respectively, similar to α .85 in Radloff 1977) and acceptable concurrent and discriminant validity (Radloff 1977).

Anxiety

Anxiety was assessed using the Hospital Anxiety and Depression Scale–Anxiety Subscale (HADS-A; Zigmond and Snaith 1983). Participants rated how much they agreed with 7 statements (e.g. I feel tense or ‘wound up’) over the past week using a Likert scale from 0 [e.g. Not at all] to 3 [e.g. Most of the time]. Responses were summed over the 7 items to obtain a score for each participant ranging from 0 to 21, with higher scores indicating greater anxiety. This scale has high internal consistency (αs .81, .81, .83 at time 0, time 1, time 2, respectively) and good to very good concurrent validity (Bjelland et al. 2002).

Stress

Perceived stress was assessed using the 10-item Perceived Stress Scale (PSS; Cohen and Williamson 1988). Participants rated how often they experienced 10 statements over the past month (e.g. In the last month, how often have you felt confident about your ability to handle your personal problems?) using a Likert scale from 0 [Never] to 4 [Very often]. Responses were summed over the 10 items to obtain a score for each participant ranging from 0 to 40, with higher scores indicating greater perceived stress. This scale has high internal consistency (αs .87, .89, .90 at time 0, time 1, time 2, respectively) and acceptable convergent and divergent validity (Roberti et al. 2006).

Resilience

Resilience was assessed using the 6-item Brief Resilience Scale (Smith et al. 2008), a measure of the ability to bounce back or recover from stress. Participants rated how much they generally agreed with 6 statements (e.g. I tend to bounce back quickly after hard times) using a Likert scale from 1 [Strongly disagree] to 5 [Strongly agree]. Responses were averaged across the 6 items to obtain a score for each participant ranging from 1 to 5, with higher scores indicating greater resilience. This scale has high internal consistency (αs .88, .87, .89 at time 0, time 1, time 2, respectively, similar to αs .80 to .91 in Smith et al. 2008), good test-retest reliability, and acceptable convergent and divergent validity (Smith et al. 2008).

Flourishing

Flourishing was assessed using the 8-item Flourishing Scale (Diener et al. 2010), a measure of perceived achievement in areas such as relationships, self-esteem, purpose, and optimism that is commonly used as a proxy for psychological well-being. Participants rated how much they agreed with 8 statements in general (e.g. I lead a purposeful and meaningful life) using a Likert scale from 1 [Strongly disagree] to 7 [Strongly agree]. Responses were summed over the 8 items to obtain a score for each participant ranging from 8 to 56, with higher scores indicating greater well-being. This scale has high internal consistency (αs .88, .94, .95 at time 0, time 1, time 2, respectively, similar to α .87 in Diener et al. 2010, and .91 and .81 in Hone et al. 2014), good test-retest reliability (Diener et al. 2010) and adequate convergent and divergent validity (Hone et al. 2014).

College Adjustment

College adjustment was assessed using the 19-item College Adjustment Test (Pennebaker et al. 1990), which captures experiences related to adjusting to college life in terms of positive affect, negative affect, and homesickness. Participants rated to what extent they had experienced 19 statements in the past week (e.g. worried about how you will perform academically at college) on a scale of 1 [Not at all] to 7 [A great deal]. Items were reverse scored where appropriate and summed to create a score from 19 to 133, with higher values indicating greater adjustment to college. This scale has high internal consistency (αs .83, .85, .86 at time 0, time 1, time 2, respectively, similar to α .79 in Pennebaker et al. 1990) and good test-retest reliability (Pennebaker et al. 1990).

Mindfulness

Mindfulness was assessed using the 12-item Cognitive Affective Mindfulness Scale–Revised (Feldman et al. 2007), a scale consisting of several facets of mindfulness including attention, present focus, awareness, and acceptance. Participants rated how much each statement applied to them in general (e.g. it is easy for me to concentrate on what I am doing) using a Likert scale from 1 [Rarely/Not at all] to 4 [Almost always]. Responses were summed over the 12 items to obtain a score for each participant ranging from 12 to 48, with higher scores indicating a greater level of the mindfulness facets: attention, present focus, awareness, and acceptance. This scale has acceptable internal consistency (αs .78, .84, .86 at time 0, time 1, time 2, respectively, similar to αs .74 and .77 in Feldman et al. 2007) and acceptable convergent and discriminant validity (Feldman et al. 2007).
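The summed and averaged scale scores described in the preceding subsections can be illustrated with a short scoring sketch. The item counts and response ranges come from the text; the positions of reverse-keyed items are not given here, so the `reverse_items` values in the example are placeholders, and all function names are hypothetical.

```python
from typing import Iterable, List, Sequence


def recode(responses: Sequence[int], low: int, high: int,
           reverse_items: Iterable[int] = ()) -> List[int]:
    """Validate responses and flip reverse-keyed items (0-based positions)."""
    flip = set(reverse_items)
    recoded = []
    for pos, value in enumerate(responses):
        if not low <= value <= high:
            raise ValueError(f"response {value} at position {pos} is outside {low}-{high}")
        # A reversed item maps low -> high, high -> low, and so on.
        recoded.append(low + high - value if pos in flip else value)
    return recoded


def sum_score(responses: Sequence[int], low: int, high: int,
              reverse_items: Iterable[int] = ()) -> int:
    """Summed scale score, e.g. the 20-item CES-D (0-3 per item, total 0-60)."""
    return sum(recode(responses, low, high, reverse_items))


def mean_score(responses: Sequence[int], low: int, high: int,
               reverse_items: Iterable[int] = ()) -> float:
    """Averaged scale score, e.g. the 6-item Brief Resilience Scale (1-5)."""
    values = recode(responses, low, high, reverse_items)
    return sum(values) / len(values)


# College Adjustment Test: 19 items rated 1-7, total 19-133.
# Positions 0 and 1 are placeholder reverse-keyed items, not the real key.
example_total = sum_score([7] * 19, low=1, high=7, reverse_items=[0, 1])
```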

Expectation and Perceptions of App Use

Participants were asked to rate their randomly assigned practice of either mindfulness meditation (Headspace, Smiling Mind) or organisational reminiscing (Control; Evernote) through two questions (‘How useful do you think mindfulness meditation / organisational reminiscing would be for yourself [Time 0]/has been for yourself [Time 1, Time 2]?’ and ‘How effective do you think mindfulness / organisational reminiscing would be for yourself [Time 0]/ has been for yourself [Time 1, Time 2]?’) on a scale from 1 [Not at all] to 4 [Very useful]. Scores on these two items were highly correlated (rs > .76) and averaged at each time point. Higher scores indicated a higher overall positive expectation (at time 0) or perception (at time 1 and time 2) of the utility and effectiveness of their assigned activity.

App Adherence

App adherence during the first 10 days was assessed via an online daily diary sent directly as a hyperlink to participants’ mobile phones at 7 pm every day for 10 days. Participants were asked if they had completed a session using their app today (yes/no), and yesterday (yes/no) to capture app use after the previous day’s survey and/or account for missing surveys. These questions were embedded in a number of daily measures outside of the scope of this article. Daily diaries were lagged to account for missing diaries to create an overall measure of activity adherence as a sum from 0 (app used on zero days) to 10 (app used on all 10 days). App adherence for the 30-day open access to the app was assessed using a single question in the final survey asking “How often did you use the app in the last 30 days?” with answer options including 0 [Never], 1 [Once], 2 [2–4 times a month], 3 [2–3 times a week], 4 [4 or more times a week], and 5 [Daily or almost daily].
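One way to read the lagging step is that a day counts as an app-use day if that day's diary reported use "today" or the next day's diary reported use "yesterday". The sketch below implements that reading; it is an interpretation of the description above rather than the study's exact rule, and the data layout and names are hypothetical.

```python
from typing import List, Optional, Tuple

# One entry per trial day: (used_today, used_yesterday), or None for a missing diary.
Diary = Optional[Tuple[bool, bool]]


def adherence_days(diaries: List[Diary]) -> int:
    """Count trial days with reported app use, filling gaps from the next day's report."""
    used = [False] * len(diaries)
    for day, entry in enumerate(diaries):
        if entry is None:
            continue  # nothing was reported on this day
        used_today, used_yesterday = entry
        if used_today:
            used[day] = True
        # The "yesterday" answer can recover use on the previous trial day;
        # on day 1 it refers to a pre-trial day and is ignored.
        if used_yesterday and day > 0:
            used[day - 1] = True
    return sum(used)


# Hypothetical participant: day 2's diary is missing but recovered from day 3.
example = [(True, False), None, (False, True), (True, False)] + [None] * 6
```

In this example the participant is credited with use on days 1, 2, and 4, for an adherence score of 3 out of 10.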

Data Analyses

Prior to our main analyses, we conducted between-group comparisons of baseline characteristics (demographic and time 0 mental health measures) to test for equivalency of conditions at trial outset. We also computed changes in mental health within each condition separately in order to show the percentage change, effect sizes (within Cohen’s d; between Hedges’ g with correction factor) and achieved power within each condition (using Cohen’s dz) prior to conducting our main analyses. This was done using paired t tests to measure changes in mental health from time 0 to time 1 (baseline to the end of the 10-day period) and from time 0 to time 2 (baseline to the end of the 30-day discretionary period, approximately 40 days after baseline) within each condition separately.
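For readers unfamiliar with these effect sizes, the sketch below shows two common definitions: Cohen's dz (mean of the paired differences divided by their standard deviation) and Hedges' g (the pooled-SD standardised mean difference multiplied by the small-sample correction J = 1 - 3/(4df - 1)). The exact within-condition formula for Cohen's d is not specified above, so these are assumed textbook versions with hypothetical function names.

```python
from math import sqrt
from statistics import mean, stdev, variance
from typing import Sequence


def cohens_dz(time0: Sequence[float], time1: Sequence[float]) -> float:
    """Paired-samples effect size: mean difference / SD of the differences."""
    diffs = [after - before for before, after in zip(time0, time1)]
    return mean(diffs) / stdev(diffs)


def hedges_g(group_a: Sequence[float], group_b: Sequence[float]) -> float:
    """Between-group effect size with the small-sample correction factor."""
    n_a, n_b = len(group_a), len(group_b)
    df = n_a + n_b - 2
    pooled_sd = sqrt(((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / df)
    d = (mean(group_a) - mean(group_b)) / pooled_sd
    correction = 1 - 3 / (4 * df - 1)  # common approximation to the exact correction
    return d * correction
```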

For our main analyses, we used a multiple regression approach to compare the changes in the mental health outcomes over time between the three conditions using dummy codes. A regression approach was used instead of ANOVA because it provided more flexibility, precision, and consistency in analysing group differences and patterns of moderation by app use. The first set of regressions compared changes in mental health from time 0 to time 1 (baseline to the end of the 10-day period) between the three conditions: The outcome measure was mental health after the 10 days of app use (e.g. depressive symptoms at time 1), which was predicted from mental health at baseline (e.g. depressive symptoms at time 0), plus two condition dummy codes with the control condition as the reference group [Dummy 1 = Control (0), Headspace (1), Smiling Mind (0); Dummy 2 = Control (0), Headspace (0), Smiling Mind (1)].
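The dummy-coded model described above can be sketched in a few lines. This is a minimal illustration that solves the ordinary least-squares normal equations directly in plain Python; it omits the expectation covariate, standard errors, and significance tests, it is not the software used for the reported analyses, and all names and the toy data are hypothetical.

```python
from typing import List, Sequence

CONDITIONS = ("control", "headspace", "smiling_mind")


def design_row(baseline: float, condition: str, reference: str = "control") -> List[float]:
    """Intercept, baseline score, then one 0/1 dummy per non-reference condition."""
    levels = [c for c in CONDITIONS if c != reference]
    return [1.0, baseline] + [1.0 if condition == lvl else 0.0 for lvl in levels]


def ols(X: Sequence[Sequence[float]], y: Sequence[float]) -> List[float]:
    """Least-squares coefficients from the normal equations (X'X) b = X'y."""
    k = len(X[0])
    # Augmented matrix [X'X | X'y]
    A = [[sum(row[i] * row[j] for row in X) for j in range(k)]
         + [sum(row[i] * yi for row, yi in zip(X, y))]
         for i in range(k)]
    for col in range(k):
        # Partial pivoting, then Gauss-Jordan elimination of every other row.
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(k):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[i][k] / A[i][i] for i in range(k)]


# Toy data: (time 0 score, condition), with time 1 scores built from known coefficients.
toy = [(10, "control"), (20, "control"), (12, "headspace"),
       (25, "headspace"), (8, "smiling_mind"), (30, "smiling_mind")]
X = [design_row(b, c) for b, c in toy]
y = [2 + 0.8 * row[1] - 3 * row[2] - 1.5 * row[3] for row in X]
coefficients = ols(X, y)  # intercept, baseline slope, Headspace dummy, Smiling Mind dummy
```

Each dummy coefficient estimates the difference in the time 1 outcome between that app and the reference group at a given baseline score. Passing `reference="headspace"` to `design_row` gives the second parameterisation, in which the dummies compare the control and Smiling Mind conditions against Headspace.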

We conducted these analyses a second time using Headspace as the reference group in order to establish whether there were any differences between the two mindfulness apps [Dummy Code 2 = 0 (Control), 0 (Headspace), 1 (Smiling Mind); Dummy Code 3 = 1 (Control), 0 (Headspace), 0 (Smiling Mind)]. The second set of regressions compared changes in mental health from time 0 to time 2 (baseline to the 30-day follow-up) between the three conditions following a similar process as with testing from time 0 to time 1. For analyses of the time 2 mental health measures, the sample size was reduced from 208 to 192 because 16 participants did not complete the time 2 survey (7.7% attrition; see Fig. 1. CONSORT diagram for details).

The third set of regressions consisted of moderation analyses to determine whether frequency of app use during the 10-day and 30-day discretionary period moderated the effect of condition on changes in mental health from time 0 to time 1, and from time 0 to time 2, respectively. This was done by adding self-reported app use (10-day or 30-day) (centred), plus the cross-product interaction terms between app use (centred) and the group dummy codes (e.g. app use centred × Dummy Code 1 and app use centred × Dummy Code 2; then, separately, app use centred × Dummy Code 2 and app use centred × Dummy Code 3) to the regression models. Lastly, in all models, we had originally controlled for participants’ age, gender, ethnicity, and previous experience with mindfulness/organisational reminiscing, but removed them from the final models because they did not affect the results. However, the final models controlled for app expectation scores (average of expected usefulness and effectiveness at time 0, mean centred, in time 0 to time 1 analyses) or app perception scores (average of perceived usefulness and effectiveness at time 1, mean centred, in time 0 to time 2 analyses).
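To make the moderation terms concrete, the sketch below builds the predictors for one participant: mean-centred app use plus its cross-products with the two condition dummy codes. The function name, the column order, and the usage values are hypothetical; covariates such as app expectation scores are omitted for brevity.

```python
from statistics import mean


def moderation_row(baseline, d1, d2, use_centred):
    """Predictors for one participant: main effects plus use-by-condition products."""
    return [1.0, baseline, d1, d2, use_centred, use_centred * d1, use_centred * d2]


# Hypothetical self-reported days of app use for five participants.
app_use = [2, 8, 5, 9, 6]
centre = mean(app_use)
use_centred = [u - centre for u in app_use]

# A Headspace user (Dummy 1 = 1, Dummy 2 = 0) with a baseline score of 15
# who reported 8 days of use.
row = moderation_row(15, 1, 0, use_centred[1])
```

Centring app use before forming the products means the dummy-code coefficients describe group differences at average app use rather than at zero use.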

Results

Descriptive Statistics and Baseline Characteristics

Chi-square tests and one-way ANOVAs showed no significant differences between conditions in any of the baseline measures (all ps > .25). Where cut-offs exist, participants’ average scores fell into the commonly accepted normative ranges (depressive symptoms, Radloff 1977; anxiety, Snaith 2003; resilience, Smith et al. 2013). Supplementary Table 1 presents the demographic characteristics and baseline mental health measures for the sample overall and separately for the three conditions.

At baseline, a subset of participants from each condition (control: 31.5%, Headspace: 25.0%, Smiling Mind: 27.0%) reported that they had previous experience with their assigned activity (mindfulness meditation or the attention placebo control activity, organisational reminiscing). There were no significant differences between conditions in reports of previous experience with mindfulness or organisational reminiscing nor perceived usefulness or effectiveness of the tool (all ps > .20), suggesting that the control task was a theoretically feasible attention placebo. However, there were differences at time 1 and time 2 for perceived usefulness and effectiveness whereby participants in the mindfulness app conditions reported that the tool was more useful and effective than participants in the control condition (all ps < .05) suggesting that in practice, the mindfulness tasks were more convincing than the control task. (See Supplementary Table 2 for descriptive statistics).

Participants reported high app adherence between time 0 and time 1: Headspace users used their app on 8.24 days (SD = 2.02, range 2–10), Smiling Mind users on 8.00 days (SD = 2.03, range 3–10), and attention control app users on 8.74 days (SD = 1.76, range 2–10), which did not differ between conditions (F(2,205) = 2.63, p = .075). App use during the 30-day open access period was much lower. About half of all participants reported ‘never’ using their app again during that 30-day open access period (41.8% Headspace; 50.0% Smiling Mind; 53.7% Control app), with no differences between the conditions (F(2,188) = .026, p = .975). In fact, only 16.4% of Headspace, 15.4% of Smiling Mind, and 17.9% of the control app users reported using their app two or more times per week during that open access period; again, there were no differences between the conditions (F(2,188) = .053, p = .948).
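The F statistics above have the form of a one-way ANOVA across the three conditions. As a minimal sketch (the function name and toy scores are hypothetical), the statistic and its degrees of freedom can be computed as follows; with 208 participants in three groups, the degrees of freedom are 2 and 205, matching the first test reported above.

```python
from statistics import mean


def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of groups of scores."""
    all_scores = [x for g in groups for x in g]
    grand_mean = mean(all_scores)
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between = len(groups) - 1
    df_within = len(all_scores) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical days-of-use scores for three small groups.
f_stat, df_b, df_w = one_way_anova([[8, 9, 10], [7, 8, 9], [9, 10, 11]])
```

The p value would then come from the F distribution with those degrees of freedom, which is left to a statistics package in this sketch.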

Changes in Mental Health within Conditions

Table 1 presents the paired t test results and descriptive statistics for the mental health measures at all three time points for the separate conditions. Headspace users reported significant reductions in depressive symptoms, anxiety, and stress, and significant improvements in college adjustment and mindfulness, but not flourishing or resilience, from baseline to the end of the 10-day period. These changes were mostly maintained until the final time point 40 days later, with the exception of depressive symptoms. Resilience showed a different pattern, exhibiting a significant increase only at the final time point, but not immediately after the 10 days for Headspace users, suggesting a ‘sleeper effect’. Smiling Mind users reported significant reductions in depressive symptoms and anxiety, but not stress, and significant improvements in resilience and college adjustment, but not flourishing or mindfulness, from baseline to the end of the 10-day period. These changes were only maintained for anxiety and college adjustment at the final time point 40 days later. By contrast, control app users reported small but significant increases in depressive symptoms and stress, and significant decreases in flourishing from baseline to the end of the 10-day period, which were mostly maintained 40 days later.

Changes in Mental Health Between Conditions

Table 2 presents the multiple regression results comparing changes in mental health outcomes over time between the three conditions. Users of Headspace and Smiling Mind reported significantly reduced depressive symptoms over time compared to the control group at both time points. The size of the coefficients suggested that both mindfulness apps reduced depressive symptoms by approximately 3–3.5 points (equivalent to approximately a .4 standard deviation change in depressive symptoms), bringing mindfulness app users below the cut-off for significant symptoms of depression (cut-off 16; Lewinsohn et al. 1997). There were no significant differences in changes in depressive symptoms between users of the two mindfulness apps. Headspace and Smiling Mind users showed improvements in college adjustment at time 1 (equivalent to approximately a .3 standard deviation change in college adjustment), but these changes did not last through to time 2. Headspace users had significant improvements in trait mindfulness at time 1 compared to both Smiling Mind and control app users (equivalent to approximately a .25 standard deviation change in trait mindfulness), although the trait mindfulness changes did not last through to time 2. Finally, Headspace users had significant improvements in resilience that emerged at time 2 (equivalent to approximately a .3 standard deviation change in resilience), but not at time 1, compared to the control group. By contrast, Smiling Mind users reported significant improvements in resilience at time 1 relative to the control group (equivalent to approximately a .2 standard deviation change in resilience), but this change did not last through to time 2.

Moderation by App Use

Frequency of app use during the 10 days did not show clear patterns of moderation (see Supplementary Table 3 and Supplementary Figure 1). This is likely because usage was quite high and consistent during the 10 days (overall M(SD) = 8.34(1.95) days). However, app use during the 30-day discretionary period significantly moderated the effect of condition on changes in depressive symptoms, anxiety, college adjustment, and mindfulness from time 0 to time 2 (see Supplementary Table 4). Figure 2 shows this pattern of moderation by 30-day app use for depressive symptoms (panel A), anxiety (panel B), college adjustment (panel C), and mindfulness (panel D) with significant simple slopes indicated (Aiken and West 1991). Participants who used the mindfulness meditation apps more frequently during the discretionary period showed statistically greater improvements in college adjustment and mindfulness [Smiling Mind only] compared to participants who did not use the mindfulness apps as frequently or who used the control app. Similar but weaker patterns were found for depressive symptoms and anxiety. By contrast, participants who used the control app most frequently reported poorer mental health than those who used the control app less frequently.

Fig. 2 The relationship between frequency of app use during the 30-day discretionary period and mental health scores (a depressive symptoms, b anxiety, c college adjustment, d mindfulness) for control, Headspace, and Smiling Mind app users at time 2 (after 30 days), controlling for time 0 mental health scores. Frequency of app use was modelled at −1 SD, M, and +1 SD for low, medium, and high app frequency. Depressive symptom scores can range between 0 and 60 with higher scores indicating higher symptoms of depression; anxiety scores can range between 0 and 21 with higher scores indicating greater symptoms of anxiety; college adjustment scores can range between 19 and 133 with higher scores indicating greater college adjustment; mindfulness scores can range between 12 and 48 with higher scores indicating greater mindfulness. †p < .10, *p < .05, **p < .01, ***p < .001
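The simple-slopes logic behind this kind of plot can be sketched as below: within each condition, the slope of the outcome on app use is the app-use coefficient plus the relevant interaction coefficient, and predicted scores are evaluated at −1 SD, the mean, and +1 SD of centred app use. All coefficients, the SD of app use, and the baseline score are hypothetical values chosen for illustration, not estimates from the trial.

```python
def simple_slope(coefs, d1, d2):
    """Slope of the outcome on centred app use within one condition."""
    _, _, _, _, b_use, b_use_d1, b_use_d2 = coefs
    return b_use + b_use_d1 * d1 + b_use_d2 * d2


def predicted_outcome(coefs, baseline, d1, d2, use_centred):
    """Model-implied time 2 score for given baseline, condition, and app use."""
    b0, b_base, b_d1, b_d2 = coefs[:4]
    return (b0 + b_base * baseline + b_d1 * d1 + b_d2 * d2
            + simple_slope(coefs, d1, d2) * use_centred)


# Hypothetical coefficients: intercept, baseline, Dummy 1, Dummy 2,
# app use, app use x Dummy 1, app use x Dummy 2.
coefs = [5.0, 0.5, -3.0, -3.5, 0.4, -1.0, -0.9]
use_sd = 2.0     # hypothetical SD of centred app use
baseline = 16.0  # hypothetical time 0 score

# Predicted Headspace scores at low, medium, and high app use.
headspace_points = [predicted_outcome(coefs, baseline, 1, 0, k * use_sd)
                    for k in (-1, 0, 1)]
```

With these made-up numbers the control slope is positive and both app slopes are negative, the same qualitative shape as a pattern in which more frequent use goes with lower symptom scores only in the mindfulness conditions.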

Discussion

In line with our hypotheses, mindfulness meditation app users reported greater improvements in several mental health outcomes than did attention placebo control app users. The improvements varied by the mindfulness app, timeframe, frequency of use, and reference point (i.e. comparing to self-baseline vs. comparing to control app users) but the results provide preliminary evidence of the mental health utility of mobile mindfulness meditation apps, which warrants further investigation. The most consistent improvements were observed for depressive symptoms and college adjustment, where both Headspace and Smiling Mind users reported small but significant improvements after 10 days of requested app use relative to the control condition. These improvements may represent a clinically meaningful change given that a 3–3.5 point change in depressive symptoms brings mindfulness app users under the cut-off for significant symptoms of depression, unlike the control app users. This change in depressive symptoms relative to control was further maintained after 30 days of discretionary use. Thus, our patterns for depressive symptoms replicated the work of Bostock et al. (2018) and Howells et al. (2016) who also found reductions in depressive symptoms after Headspace mindfulness meditation app use.

Changes in mental health outcomes were also largely dependent upon frequency of app use. People who used their mindfulness apps more frequently during the 30 days of discretionary use showed the greatest benefits in terms of their college adjustment and mindfulness, and to a lesser extent, depressive symptoms and anxiety. Importantly, this benefit for frequent app users only occurred for those randomly assigned to use a mindfulness app, suggesting that the mental health benefits of mobile mindfulness apps were not explained by digital placebo effects. By contrast, users of the control app Evernote reported mostly poorer mental health outcomes with more frequent use. We are not sure what explains this finding. Although it is possible that excessive ‘organisational reminiscing’ across an extended period of time could have triggered rumination or an awareness of unmet goals, which could be distressing, it could also simply be that the more people use an ineffective tool, the worse they feel—because the tool is not designed to improve mental health. Either way, the patterns suggest that the Evernote app might not have been a completely neutral control condition. Although this does not take away from our findings, it suggests that future research should carefully consider the nature of the control condition.

When looking at our secondary pre-registered outcomes, we found that mindfulness practice was beneficial for increasing adjustment to college life after 10 days of requested app use, with additional improvements in resilience whose timing differed by app. Smiling Mind users reported immediate but not sustained improvements in resilience, whereas Headspace users reported a lagged improvement in resilience after the 30-day discretionary use period. Previous research has linked mindfulness with increases in adaptive stress responses and coping resources (Weinstein et al. 2009). Given that the transition to college life can be tumultuous (Fisher and Hood 1987) and young adults are heavily reliant on their mobile phones (Oliver et al. 2005; Smith 2017), mobile mindfulness may present a promising tool to improve adjustment to college life, build resilience, and enhance the ability to cope with stressors in incoming college students. Thus, these findings may have valuable applications for colleges that are implementing mindfulness programmes (Swain 2016).

Mobile mindfulness users did not report significant changes in flourishing. Improvements in trait well-being can be extremely difficult to achieve (Weiss et al. 2016). Therefore, the low intensity of this intervention (i.e. 10 min meditations, once a day) may not have been sufficient to produce changes in flourishing. In addition, although we attempted to investigate the notion of a digital placebo effect (Torous and Firth 2016) by implementing an attention placebo control condition, control participants did not report that their app was as useful or effective as the mindfulness apps, suggesting that this was not an adequate comparison condition to tease apart the relationship between app use in general and the therapeutic benefits of mindfulness specifically. Nonetheless, when controlling for participants’ expectations (time 0 to time 1) and their subsequent perceptions following use of their apps (time 0 to time 2), we still found some improvements in mental health (namely, depressive symptoms at both time points and college adjustment at time 1 only).

Changes in mindfulness were much less consistent than the other mental health measures. Interestingly, those who used Headspace showed sustained small increases in mindfulness over the course of the intervention (at least as we measured it, using the Cognitive and Affective Mindfulness Scale–Revised; Feldman et al. 2007), whereas Smiling Mind users did not (although more frequent Smiling Mind users were equivalent in mindfulness to more frequent Headspace users at the end of the 30-day discretionary period). Given that both apps provide similar introductory mindfulness training content (e.g. body scan, breathing exercises), we believe that differences in the app interface may be responsible for any observed differences here. Previous research has established that interactive, beautiful, and well-designed apps are more appealing and encourage more loyalty from their users (Cyr et al. 2006). In ongoing research, we are qualitatively addressing questions about differences in user experience with Headspace and Smiling Mind app users where initial themes suggest interface differences influenced participant willingness and ability to use the apps. Further, Smiling Mind has subsequently been redesigned with substantial additional content added; therefore, additional research is required to establish whether Smiling Mind in its current design is equivalent to Headspace.

Limitations and Future Research

Although our study had high ecological validity, this came at the cost of strict control over app adherence. While the drop-off in self-reported app use during the 30-day discretionary period was particularly high across conditions, this drop-off is typical of naturalistic use of these sorts of tools (Aitken and Lyle 2015; Farago 2012) and was in line with our hypotheses. Given the high drop-off, the fact that some changes in mental health outcomes held is reassuring in light of the short follow-up times in previous research (Ahtinen et al. 2013; Carissoli et al. 2015; Howells et al. 2016; Lim et al. 2015). Even so, continued practice of mindfulness may be necessary to fully reap the benefits (Bergomi et al. 2015). In this respect, face-to-face delivery of mindfulness instruction likely provides a superior social environment for new mindfulness practitioners (Segal et al. 2013) but it should not be forgotten that apps provide wide reach, immediate access, superior scalability, and generally are available at lower cost than many alternatives (Price et al. 2014). Further, when a health app is prescribed by a health provider (e.g. doctor, counsellor), 30-day retention rates typically increase by 10–30% (Aitken and Lyle 2015). Our sample was a convenience sample of healthy undergraduate students, meaning we cannot extrapolate the current findings to a clinical sample. Nevertheless, mobile mindfulness apps could have potential as an adjunct-to-treatment or may serve as a suitable homework component in therapy to facilitate the treatment of patients (Kladnitski et al. 2018; Price et al. 2014) with anxiety and depressive symptoms, although this remains to be tested.

Further, while our sample size was larger than in most previous mobile mindfulness research and we were adequately powered to detect within-group change over time, we were still underpowered to detect between-group differences. Future researchers should consider using more conservative effect size estimates when conducting their power analyses. Our strongest effect sizes in depressive symptoms (g = .23) were, in general, smaller than those found in previous research on mobile, web-based, and face-to-face mindfulness meditation programmes (Howells et al. 2016: single study of mobile mindfulness, g = .35; Spijkerman et al. 2016: meta-analysis of web-based mindfulness, g = .29; Goyal et al. 2014: meta-analysis of face-to-face mindfulness, d = .30). Nevertheless, given the brevity and ease of implementation, the reported mental health improvements may still represent meaningful change for those experiencing them (a topic we are exploring in ongoing qualitative research) and clinically meaningful change where app users move further from established cut-off points (e.g. those used to indicate clinically significant symptoms of depression; Lewinsohn et al. 1997). Because app use was self-reported, participants may have over- or under-estimated their app use during the discretionary period, and their responses on the self-reported outcome measures may be subject to a number of response biases (e.g. social-desirability bias). Future researchers should design their protocol to collect objective measures of app usage. Attempts to measure trait mindfulness via self-report are being challenged by researchers (e.g. Van Dam et al. 2017); as such, future researchers should consider collecting more objective behavioural measures to support their self-reported measures (e.g. breath counting; Levinson et al. 2014).

Finally, to establish compelling evidence for the effectiveness of mobile mindfulness meditation, in the future, researchers should investigate mindfulness-based apps as stand-alone vs. adjunct-to-treatment as usual and should compare mindfulness-based apps to not only established mindfulness meditation programmes (e.g. MBSR, Kabat-Zinn 1982) but also to active app-based controls. For instance, given our modest findings of small improvements in depressive symptoms, there would be merit in comparing mindfulness meditation apps to an evidence-supported cognitive-behavioural therapy (CBT) app. Doing so would allow us to establish whether there is non-inferiority or superiority to an established treatment modality such as CBT, when delivered by mobile phone.

References

Ahtinen, A., Mattila, E., Välkkynen, P., Kaipainen, K., Vanhala, T., Ermes, M., et al. (2013). Mobile mental wellness training for stress management: Feasibility and design implications based on a one-month field study. JMIR mHealth and uHealth, 1(2), e11. https://doi.org/10.2196/mhealth.2596.

Aiken, L., & West, S. (1991). Multiple regression: Testing and interpreting interactions. Newbury Park, CA: Sage.

Aitken, M., & Lyle, J. (2015). Patient adoption of mHealth: Use, evidence and remaining barriers to mainstream acceptance. Parsippany: IMS Institute for Healthcare Informatics.

Anderson, M., & Horrigan, J. B. (2016, October 3). Smartphones help those without broadband get online, but don’t necessarily bridge the digital divide. PEW Research Report. Retrieved from pewresearch.org/fact-tank/2016/10/03/smartphones-help-those-without-broadband-get-online-but-dont-necessarily-bridge-the-digital-divide/

Bakker, D., Kazantzis, N., Rickwood, D., & Rickard, N. (2016). Mental health smartphone apps: Review and evidence-based recommendations for future developments. JMIR Mental Health, 3(1), e7. https://doi.org/10.2196/mental.4984.

Bjelland, I., Dahl, A. A., Haug, T. T., & Neckelmann, D. (2002). The validity of the hospital anxiety and depression scale: An updated literature review. Journal of Psychosomatic Research, 52(2), 69–77. https://doi.org/10.1016/s0022-3999(01)00296-3.

Bergomi, C., Tschacher, W., & Kupper, Z. (2015). Meditation practice and self-reported mindfulness: A cross-sectional investigation of meditators and non-meditators using the comprehensive inventory of mindfulness experiences (CHIME). Mindfulness, 6(6), 1411–1421. https://doi.org/10.1007/s12671-015-0415-6.

Bostock, S., Crosswell, A. D., Prather, A. A., & Steptoe, A. (2018). Mindfulness on-the-go: Effects of a mindfulness meditation app on work stress and well-being. Journal of Occupational Health Psychology. Advance online publication. https://doi.org/10.1037/ocp0000118.

Bostock, S. K., & Steptoe, A. (2013, April). Can finding headspace reduce work stress? A randomised controlled workplace trial of a mindfulness meditation app. Psychosomatic Medicine, 75(3), A36–A37.

Brody, J. L., Scherer, D. G., Turner, C. W., Annett, R. D., & Dalen, J. (2017). A conceptual model and clinical framework for integrating mindfulness into family therapy with adolescents. Family Process. Advance online publication. https://doi.org/10.1111/famp.12298.

Carissoli, C., Villani, D., & Riva, G. (2015). Does a meditation protocol supported by a mobile application help people reduce stress? Suggestions from a controlled pragmatic trial. Cyberpsychology, Behavior, and Social Networking, 18(1), 46–53. https://doi.org/10.1089/cyber.2014.0062.

Carmody, J., & Baer, R. A. (2009). How long does a mindfulness-based stress reduction program need to be? A review of class contact hours and effect sizes for psychological distress. Journal of Clinical Psychology, 65(6), 627–638. https://doi.org/10.1002/jclp.20555.

Cavanagh, K., Strauss, C., Cicconi, F., Griffiths, N., Wyper, A., & Jones, F. (2013). A randomised controlled trial of a brief online mindfulness-based intervention. Behaviour Research and Therapy, 51(9), 573–578. https://doi.org/10.1016/j.brat.2013.06.003.

Cavanagh, K., Strauss, C., Forder, L., & Jones, F. (2014). Can mindfulness and acceptance be learnt by self-help?: A systematic review and meta-analysis of mindfulness and acceptance-based self-help interventions. Clinical Psychology Review, 34(2), 118–129. https://doi.org/10.1016/j.cpr.2014.01.001.

Clayton, R. B., Leshner, G., & Almond, A. (2015). The extended iSelf: The impact of iPhone separation on cognition, emotion, and physiology. Journal of Computer-Mediated Communication, 20(2), 119–135. https://doi.org/10.1111/jcc4.12109.

Cohen, S., & Williamson, G. (1988). Perceived stress in a probability sample of the United States. In S. Spacapan & S. Oskamp (Eds.), The social psychology of health: Claremont symposium on applied social psychology. Newbury Park, CA: Sage.

Creswell, J. D. (2017). Mindfulness interventions. Annual Review of Psychology, 68, 491–516. https://doi.org/10.1146/annurev-psych-042716-051139.

Cyr, D., Head, M., & Ivanov, A. (2006). Design aesthetics leading to m-loyalty in mobile commerce. Information & Management, 43(8), 950–963. https://doi.org/10.1016/j.im.2006.08.009.

Diener, E., Wirtz, D., Tov, W., Kim-Prieto, C., Choi, D. W., Oishi, S., & Biswas-Diener, R. (2010). New well-being measures: Short scales to assess flourishing and positive and negative feelings. Social Indicators Research, 97(2), 143–156. https://doi.org/10.1007/s11205-009-9493-y.

Donker, T., Petrie, K., Proudfoot, J., Clarke, J., Birch, M. R., & Christensen, H. (2013). Smartphones for smarter delivery of mental health programs: A systematic review. Journal of Medical Internet Research, 15(11), e247. https://doi.org/10.2196/jmir.2791.

Economides, M., Martman, J., Bell, M. J., & Sanderson, B. (2018). Improvements in stress, affect, and irritability following brief use of a mindfulness-based smartphone app: A randomized controlled trial. Mindfulness. https://doi.org/10.1007/s12671-018-0905-4.

Farago, P. (2012, October 22). App engagement: The matrix reloaded. Flurry Analytics Blog. Retrieved from http://flurrymobile.tumblr.com/post/113379517625/app-engagement-the-matrix-reloaded

Feldman, G., Hayes, A., Kumar, S., Greeson, J., & Laurenceau, J. P. (2007). Mindfulness and emotion regulation: The development and initial validation of the cognitive and affective mindfulness scale revised (CAMS-R). Journal of Psychopathology and Behavioral Assessment, 29(3), 177–190. https://doi.org/10.1007/s10862-006-9035-8.

Fisher, S., & Hood, B. (1987). The stress of the transition to university: A longitudinal study of psychological disturbance, absent-mindedness and vulnerability to homesickness. British Journal of Psychology, 78, 425–441. https://doi.org/10.1111/j.2044-8295.1987.tb02260.x.

Goyal, M., Singh, S., Sibinga, E. M., Gould, N. F., Rowland-Seymour, A., Sharma, R., et al. (2014). Meditation programs for psychological stress and well-being: A systematic review and meta-analysis. JAMA Internal Medicine, 174(3), 357–368. https://doi.org/10.1001/jamainternmed.2013.13018.

Hofmann, S. G., Sawyer, A. T., Witt, A. A., & Oh, D. (2010). The effect of mindfulness-based therapy on anxiety and depression: A meta-analytic review. Journal of Consulting and Clinical Psychology, 78(2), 169–183. https://doi.org/10.1037/a0018555.

Hone, L., Jarden, A., & Schofield, G. (2014). Psychometric properties of the flourishing scale in a New Zealand sample. Social Indicators Research, 119(2), 1031–1045. https://doi.org/10.1007/s11205-013-0501-x.

Howells, A., Ivtzan, I., & Eiroa-Orosa, F. J. (2016). Putting the ‘app’ in happiness: A randomised controlled trial of a smartphone-based mindfulness intervention to enhance wellbeing. Journal of Happiness Studies, 17(1), 163–185. https://doi.org/10.1007/s10902-014-9589-1.

Kabat-Zinn, J. (1982). An outpatient program in behavioral medicine for chronic pain patients based on the practice of mindfulness meditation: Theoretical considerations and preliminary results. General Hospital Psychiatry, 4(1), 33–47. https://doi.org/10.1016/0163-8343(82)90026-3.

Kabat-Zinn, J. (2003). Mindfulness-based interventions in context: Past, present, and future. Clinical Psychology: Science and Practice, 10(2), 144–156. https://doi.org/10.1093/clipsy/bpg016.

Kabat-Zinn, J. (2013). Full catastrophe living, revised edition: How to cope with stress, pain and illness using mindfulness meditation. Hachette UK.

Kladnitski, N., Smith, J., Allen, A., Andrews, G., & Newby, J. M. (2018). Online mindfulness-enhanced cognitive behavioural therapy for anxiety and depression: Outcomes of a pilot trial. Internet Interventions. Advance online publication. https://doi.org/10.1016/j.invent.2018.06.003.

Larsen, M. E., Nicholas, J., & Christensen, H. (2016). Quantifying app store dynamics: Longitudinal tracking of mental health apps. JMIR mHealth and uHealth, 4(3), e96. https://doi.org/10.2196/mhealth.6020.

Levinson, D. B., Stoll, E. L., Kindy, S. D., Merry, H. L., & Davidson, R. J. (2014). A mind you can count on: Validating breath counting as a behavioral measure of mindfulness. Frontiers in Psychology, 5, e1202. https://doi.org/10.3389/fpsyg.2014.01202.

Lewinsohn, P. M., Seeley, J. R., Roberts, R. E., & Allen, N. B. (1997). Center for Epidemiological Studies-Depression Scale (CES-D) as a screening instrument for depression among community-residing older adults. Psychology and Aging, 12, 277–287. https://doi.org/10.1037//0882-7974.12.2.277.

Lim, D., Condon, P., & DeSteno, D. (2015). Mindfulness and compassion: An examination of mechanism and scalability. PLoS One, 10(2), e0118221. https://doi.org/10.1371/journal.pone.0118221.

Lyubomirsky, S., Dickerhoof, R., Boehm, J. K., & Sheldon, K. M. (2011). Becoming happier takes both a will and a proper way: An experimental longitudinal intervention to boost well-being. Emotion, 11, 391–402. https://doi.org/10.1037/a0022575.

Mani, M., Kavanagh, D. J., Hides, L., & Stoyanov, S. R. (2015). Review and evaluation of mindfulness-based iPhone apps. JMIR mHealth and uHealth, 3(3), e82. https://doi.org/10.2196/mhealth.4328.

Meinlschmidt, G., Lee, J. H., Stalujanis, E., Belardi, A., Oh, M., Jung, E. K., et al. (2016). Smartphone-based psychotherapeutic micro-interventions to improve mood in a real-world setting. Frontiers in Psychology, 7, e1112. https://doi.org/10.3389/fpsyg.2016.01112.

Oliver, M. I., Pearson, N., Coe, N., & Gunnell, D. (2005). Help-seeking behaviour in men and women with common mental health problems: Cross-sectional study. British Journal of Psychiatry, 186, 297–301. https://doi.org/10.1192/bjp.186.4.297.

Pennebaker, J. W., Colder, M., & Sharp, L. K. (1990). Accelerating the coping process. Journal of Personality and Social Psychology, 58, 528–537. https://doi.org/10.1037//0022-3514.58.3.528.

Plaza, I., Demarzo, M. M. P., Herrera-Mercadal, P., & García-Campayo, J. (2013). Mindfulness-based mobile applications: Literature review and analysis of current features. JMIR mHealth and uHealth, 1(2), e24. https://doi.org/10.2196/mhealth.2733.

Price, M., Yuen, E. K., Goetter, E. M., Herbert, J. D., Forman, E. M., Acierno, R., & Ruggiero, K. J. (2014). mHealth: A mechanism to deliver more accessible, more effective mental health care. Clinical Psychology & Psychotherapy, 21(5), 427–436. https://doi.org/10.1002/cpp.1855.

Prins, M. A., Verhaak, P. F., Bensing, J. M., & van der Meer, K. (2008). Health beliefs and perceived need for mental health care of anxiety and depression—The patients’ perspective explored. Clinical Psychology Review, 28(6), 1038–1058. https://doi.org/10.1016/j.cpr.2008.02.009.

Puddicombe, A. (2017). User profile, LinkedIn. 31 October 2017, linkedin.com/in/andypuddicombe/.

Radloff, L. S. (1977). The CES-D scale: A self-report depression scale for research in the general population. Applied Psychological Measurement, 1, 385–401. https://doi.org/10.1177/014662167700100306.

Roberti, J. W., Harrington, L. N., & Storch, E. A. (2006). Further psychometric support for the 10-item version of the perceived stress scale. Journal of College Counseling, 9(2), 135–147. https://doi.org/10.1002/j.2161-1882.2006.tb00100.x.

Segal, Z. V., Williams, J. M. G., & Teasdale, J. D. (2013). Mindfulness-based cognitive therapy for depression: A new approach to preventing relapse. (2nd edition). New York: Guilford Press.

Smiling Mind (2017). Smiling mind. Retrieved from smilingmind.com.au/

Smith, A. (2017). Record shares of Americans now own smartphones, have home broadband. PEW Research Report. Retrieved from pewresearch.org/fact-tank/2017/01/12/evolution-of-technology/

Smith, B. W., Dalen, J., Wiggins, K., Tooley, E., Christopher, P., & Bernard, J. (2008). The brief resilience scale: Assessing the ability to bounce back. International Journal of Behavioral Medicine, 15(3), 194–200. https://doi.org/10.1080/10705500802222972.

Smith, B. W., Epstein, E. E., Oritz, J. A., Christopher, P. K., & Tooley, E. M. (2013). The foundations of resilience: What are the critical resources for bouncing back from stress? In S. Prince-Embury & D. H. Saklofske (Eds.), Resilience in children, adolescents, and adults: Translating research into practice, The Springer series on human exceptionality (pp. 167–187). New York: Springer.

Snaith, R. P. (2003). The hospital anxiety and depression scale. Health and Quality of Life Outcomes, 1, 29. https://doi.org/10.1186/1477-7525-1-29.

Spijkerman, M. P. J., Pots, W. T. M., & Bohlmeijer, E. T. (2016). Effectiveness of online mindfulness-based interventions in improving mental health: A review and meta-analysis of randomised controlled trials. Clinical Psychology Review, 45, 102–114. https://doi.org/10.1016/j.cpr.2016.03.009.

Stoyanov, S. R., Hides, L., Kavanagh, D. J., Zelenko, O., Tjondronegoro, D., & Mani, M. (2015). Mobile app rating scale: A new tool for assessing the quality of health-related mobile apps. JMIR mHealth and uHealth, 3(1), e27. https://doi.org/10.2196/mhealth.3422.

Swain, H. (2016). Mindfulness: The craze sweeping through schools is now at a university near you. The Guardian. Retrieved from http://www.theguardian.com/education/2016/jan/26/mindfulness-craze-schools-university-near-you-cambridge

Teasdale, J. D., Segal, Z. V., Williams, J. M. G., Ridgeway, V. A., Soulsby, J. M., & Lau, M. A. (2000). Prevention of relapse/recurrence in major depression by mindfulness-based cognitive therapy. Journal of Consulting and Clinical Psychology, 68(4), 615–623. https://doi.org/10.1037//0022-006X.68.4.615.

Torous, J., & Firth, J. (2016). The digital placebo effect: Mobile mental health meets clinical psychiatry. The Lancet Psychiatry, 3(2), 100–102. https://doi.org/10.1016/s2215-0366(15)00565-9.

Van Dam, N. T., van Vugt, M. K., Vago, D. R., Schmalzl, L., Saron, C. D., Olendzki, A., et al. (2017). Mind the hype: A critical evaluation and prescriptive agenda for research on mindfulness and meditation. Perspectives on Psychological Science. https://doi.org/10.1177/1745691617709589.

Wen, L., Sweeney, T. E., Welton, L., Trockel, M., & Katznelson, L. (2017). Encouraging mindfulness in medical house staff via smartphone app: A pilot study. Academic Psychiatry, 41(5), 646–650. https://doi.org/10.1007/s40596-017-0768-3.

Weinstein, N., Brown, K. W., & Ryan, R. M. (2009). A multi-method examination of the effects of mindfulness on stress attribution, coping, and emotional well-being. Journal of Research in Personality, 43(3), 374–385. https://doi.org/10.1016/j.jrp.2008.12.008.

Weiss, L. A., Westerhof, G. J., & Bohlmeijer, E. T. (2016). Can we increase psychological well-being? The effects of interventions on psychological well-being: A meta-analysis of randomized controlled trials. PLoS One, 11(6), e0158092. https://doi.org/10.1371/journal.pone.0158092.

Yang, E., Schamber, E., Meyer, R. M., & Gold, J. I. (2018). Happier healers: Randomized controlled trial of mobile mindfulness for stress management. The Journal of Alternative and Complementary Medicine, 24(5), 505–513. https://doi.org/10.1089/acm.2015.0301.

Zigmond, A. S., & Snaith, R. P. (1983). The hospital anxiety and depression scale. Acta Psychiatrica Scandinavica, 67, 361–370. https://doi.org/10.1111/j.1600-0447.1983.tb09716.x.

Acknowledgements

This research was funded by the Office of the Vice-Chancellor, University of Otago. The authors thank research assistants Tayla Boock, Todd Johnston, and Samantha McDiarmid for their assistance in collecting data.

Contributions

JF: co-conceived the study idea and research design, co-conducted the statistical analyses, co-wrote the manuscript, and managed the data collection and research team. HH: co-conceived the study idea and research design and co-wrote the manuscript. BR: contributed to the data collection and co-wrote the manuscript. LT: contributed to the data collection. TC: co-conceived the study idea and research design, co-conducted the statistical analyses, and co-wrote the manuscript.

Ethics declarations

Ethical Approval

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards. This article does not contain any studies with animals performed by any of the authors.

Conflict of Interest

The authors declare that they have no conflict of interest.

Cite this article

Flett, J.A.M., Hayne, H., Riordan, B.C. et al. Mobile Mindfulness Meditation: a Randomised Controlled Trial of the Effect of Two Popular Apps on Mental Health. Mindfulness 10, 863–876 (2019). https://doi.org/10.1007/s12671-018-1050-9
