Abstract

In this electronic era, everyone is in fast communication and sharing of data through social Medias. Within a fraction of second we received millions of text in whatsapp, facebook, twitter, mails and etc. It is really hard to categorize relevant data and information from massive volume of text documents. Instead of reading all documents fully, there is a need to determine Topic and subtopic of a corpus. Existing technique takes more time to detect topic and subtopic of a corpus, so we proposed dynamic multiple spider hunting algorithm. Due to the usage of multiple spiders, this technique could effectively recognize the desired artifacts with minimum amount of time and have superior performance compared to other techniques.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Web based text are further and growing tremendously day by day. Text is the most common technique for the recognized discussion of information. The computer facilitated communication via documented messaging has become widespread (Le and Mikolov 2014). This kind of automated discourse is detected in point-to-point or multicast, text-based online messaging facilities such as chat servers, discussion forums, email and messaging services, online searching, distance learning, newsgroups and IRC.

There is an enormous capacity of data and information collective through social network in every instant. Interaction and communication in social broadcasting repeatedly reflect real world occasions and dynamics as the user base of social networks (Dan et al. 2011) grows broader and more energetic in manufacturing content around real world actions almost in real time.

Social networks and micro blogging facilities like whatsapp, facebook and twitter are identified for the huge volume of data published every second by the users (Lee and Fu 2008). News articles like Reuters and Bloomberg broadcast thousands of articles everyday covering extensive variety of topics. The information blast demands for new tools and tactics to process this amount of data as a sole user cannot read all the information available (Yoon et al. 2016). This text leads to replicated, redundant and unreliable data. To raise the eminence and exactness of data there is a requirement to achieve high superiority information from the text. Text mining is the technique of organizing input and developing pattern. Text analysis tries to extract important information from natural language text as described by Yao et al. (2016).

Text mining is the finding of new, formerly unknown information through computer, by automatically mining information from dissimilar written resources. Text mining procedures are the ultimate and enabling tools for efficient association, navigation, retrieval and summarization of huge document corpus as discussed in Mörchen et al. (2008). With more and more text information are scattering everywhere on Internet, text mining is accumulative in importance. Text clustering and text classification are two essential responsibilities in text mining. For viable usage text mining will be the follow-up of data mining. With the rising amount of digitized documents and having huge text databases, text mining will become progressively significant. Text mining can be an enormous advantage for finding related and preferred text data from unstructured data sources.

Text mining takes unstructured documents as input data. In other words, documents that is tough to recognize in terms of meaning. There are rare companies working on gainful claims for text mining. Because of the challenges involved in working with text and the differences among languages it is a challenge to generate general solution or application. Proposed work is to essence on multiple topics and areas. The Multiple Spider Hunting Algorithm effectively detects the topics and subtopics as explained in Turan et al. (2012) based on the frequency measure with limited time complexity.

2 Related work

Earlier research on topic development has often leveraged refinements to Latent Dirichlet Allocation (LDA) to recognize emerging topics. However, such techniques do not answer the query of which studies contributed to the development of a topic. Blei et al. (2003) Latent Dirichlet Allocation (LDA) model, using a bag of words approach to find out topics in a text corpus of documents (frequently the title and the abstract).

Early approaches to topic extraction, such as cluster models (Liu and Croft 2004), depict documents by a single topic, but succeeding research has focused on recognizing several topics, based on LDA or its extensions. An example of a system planned to classify rising topics as discussed in Mörchen et al. (2008). As extensions to the LDA model such as the inheritance topic model and dynamic topic model as described by Blei and Lafferty (2006).

The side information is difficult to estimate when it contains noisy data (Bhanuse et al. 2016). Its not only text data which mined but also text stream mining is a new research area where new techniques are proposed for text stream classification and evolution analysis of the same as discussed in Aggarwal and Zhai (2012a, b). It worked on classifying blog text according to the mood reported by its author during the writing. Mishne considered different textual features like frequency counts of words, emotional polarity of posts, length of posts, PMI, emphasized words and special symbols like emoticons and punctuation marks (Mishne 2005) Ongoing rapid progress and extensive application of the internet, there is a massive amount of information distributed on the web.

The conventional string based search often failed to hit the relevant pages and feedbacks a lot of irrelevant pages from user request. A common problem for a user is that “Everything is on the web, but we just cannot find what we need” is partially true as most of the data over the web is scattered, unstructured, often inconsistent and insufficient (Hepp 2006).

Data sets are not interlinked with each other which makes mining even more difficult to manage. Web Usage Mining is to find out extract the useful information from web data or web log files. The other goals are to enhance the usability of the web information and to apply the technology on the web applications, for instance, pre-fetching and catching, personalization etc. For decision management, the result of web usage mining can be used for target advertisement, improving web design, improving satisfaction of customer, guiding the strategy decision of the enterprise and market analysis as discussed in Lee and Fu (2008).

Karampatsis et al. (2014) described the twitter sentiment analysis for specifying the polarity of messages. They used the two stage pipeline approach for analysis. Authors used the sum classifier at each stage and several features like morphological, POS tagging, lexicon etc. are identified. A general algorithm for web log cleaning is presented below. The algorithm takes as input the web log file (Web Log File) and produces as output, a cleaned log file (New-Log File), which is free from irrelevant and redundant entries as described in Shivaprasad et al. (2015).

Pre-processing involves cleaning the data of inconsistencies and/or noise, and combining or removing redundant entries. Pre-processing also involves converting the attributes of the dataset into numeric data and saving in a readable format. Social networking websites, blogs, SMS, chat applications are attractive source of data for data mining as they offer large quantity of real time data (Duque and Bin Omar 2015).

Latent semantic indexing (LSI) provides a determining technique for search latent semantics in free-text as discussed in Seo (2004). It involves generating a weighted term-document matrix and relating singular value decomposition (SVD) to produce a lower-rank factorization, used for evaluating terms or documents. The keyword-based filtering is not so efficient for tracking tweets that articulate client opinions about profitable brands (e.g., Delta Airlines). Therefore, they leveraged crowd-sourcing property to tag tweets that satisfy predefined queries to instruct a supervised binary classifier (Chen et al. 2013).

A bootstrapping approach for tracking tweets that are associated to precise TV shows as discussed in Banea et al. (2008). They used domain knowledge and a semi-supervised training of a classifier, where they tagged tweets physically for relevance, then used them to classify candidate tweets (Dan et al. 2011).

The first line of work on sentiment analysis functional several pre developed sentiment lexicons, for example, Subjectivity Wordlist (Dan et al. 2011), Word Net Affect (Strapparava and Valitutti 2004) and Senti-Word Net (Baccianella et al. 2010) to categorize documents by emotions.

For topic detection and tracking working topic segmentation by using a dictionary that mapped deviations of wording to parameters as discussed in Liu et al. (2014). To notice the latent topics inherent in a set of documents, we first cluster the learned paragraph vectors using the k-means clustering algorithm to obtain K cluster centre of the paragraph vectors (Hashimoto et al. 2016). As a distance metric for k-means clustering, we use the cosine similarity between paragraph vector as discussed in Dhillon et al. (2001).

Whilst optional distance metrics could be used in k-means clustering (e.g., Euclidean distance), previous work has demonstrated that the cosine of the angle between word or paragraph vectors provides robust results as discussed in Collobert et al. (2011). Producing a word requires the selection of a topic based on its proportion in the document and then drawing a word from that latent class’s word allocation. Model parameters may be optimized using the Expectation Maximization (EM) algorithm as discussed by Chien and Chueh (2011). Adaptive micro blog filtering tasks that focuses on tracking topics of wide and dynamic nature and suggest an entirely (Hu et al. 2013) unsupervised approach that adjusts to new aspects of the topic to recover relevant micro blogs as discussed in Magdy and Elsayed (2016).

Hierarchical clustering (Chien and Chueh 2011) is functional to cluster burst topics and reveal burst patterns from the macro viewpoint (Dong et al. 2017). Frequent sub graph mining is used to determine the information flow prototype of burst topic from the micro perspective as described by Dong et al. (2017). Topic detection sense topics in news articles or blog posts (Chen et al. 2007; Harabagiu and Lacatusu 2005) removed hot terms from the text by combining TF n PDF and the Age Theory.

A simple and effective topic detection model called the sequential discriminative probabilistic model (DPM) to suffice for both offline and online topic recognition tasks (He et al. 2010). Commercial system like Data miner, Sysomos, Brand watch, Media Miser and Topsy. Non-commercial system like CMU system, UMass system. Meme Detection system or Blogscope. CMU system attempts to cluster continuously arriving news stream into groups that distribute the same event.

Twitter monitor primary focus on bursty keywords (Dong et al. 2017) that have a higher absolute frequency than usual. It uses bursty keywords as a seed to discover them future. EDCOW applies a wavelet analysis on words to model their frequencies. Trivial words are identified with their cross correlation value. The focus lies on building taxonomy from tendency for a precise area and to classify them rather than finding co-occurrence trends. Text data in real world is not consistent Eg. E-news it enclose news articles but the amount of articles is unlimited. Chat log data is repeatedly adding terms and sentences called text streams. With text stream it is very hard to distinguish boundaries between stories. Each data may have metadata i.e. group of contents. Extraction of topic characteristic vector singular value decomposition () SVDis a traditional method for extracting feature vectors in data. Depending on the application it is principal component analysis (PCA), latent semantic indexing (LSI) or Karhunen–Loeve Transformation for extracting feature vectors.

Probing several illustrative cases discovered that most of these inconsistencies were caused by improper data preprocessing, including huge data, incomplete data normalization, subjective data linearization or non-linearization, biased weight adjustment, and information-loss discretization as discussed in Chen and Honda (2018).

A well-organized semantic reference technique that helps users filter the Twitter stream for exciting content has been explained in Karidi et al. (2018). The groundwork of this method is a knowledge graph (KG) that can denote all user topics of interest as a diversity of concepts, objects, events, persons, entities, locations and the relations between them. Our method uses the KG and graph theory algorithms not yet applied in social network analysis in order to build user interest profiles by recovering semantic information from tweets.

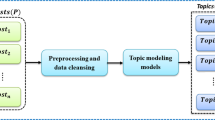

3 Preparing data

The frequently used preprocessing steps in text mining are gathered together and to form a framework called ERT. There are three major phases in ERT framework i.e. expansion, removal and tokenization.

Documents (or) corpus are feed as an input to the ERT framework. The first phase expansion searches the document which includes any acronyms, short forms, polysemes, mis-spelling, icons or abbreviations. This phase expands the short text content and forward to removal phase (Rao et al. 2016).The second phase removes the prefix and suffix of the terms and the non-keyword terms (Zhang et al. 2016). The output of this phase is only keywords and root terms. The last phase converts continuous word collection into the list of words called tokens and storedin a database (Fig. 1).

4 Spider construction

4.1 Topic design

Topic model (Fig. 2) is built in the format of web. The focal center is called Spider; every one of the topics are associated to spider. This system comprises of five distinctive emerging topics like Sports, Defense, Education, Tourism and Media. If there is a need to change the topics with some new emergent topics this model can easily support as described in Elakiya and Rajkumar (2018).

4.2 Sub topic design

The topics are further sub divided into sub-topics. Sub-topics are the major sub division relevant to that particular topic. Topic and subtopic are represented as nodes and are associated together through links (Fig. 3). Every sub topic node contain group of words connected to the topic node and the links hold the weightage as discussed in Langlet and Clavel (2016). Some topic contains less number of sub topics and some other contains more sub topics and the number of sub topics should be automatically enhanced when the corpus is belonging to the particular sub topic. The frequently accessed sub topics are only linked in the graphical representation (Elakiya and Rajkumar 2018).

4.3 Spider design

The entire topic and sub-topic model of all the five topics and their relevant subtopics are committed and the central spider is connected with different topics like sports, defense, education, tourism and media (Fig. 4). Then, the topics in turn linked with their frequently accessed sub-topics as described in Elakiya and Rajkumar (2018).

4.4 Multiple spider design

If a document contains more number of pages then the number of paragraphs and sentences also increased rapidly. Developing cluster for each sentence and processing the clusters to detect topic will take more time.

To reduce the time complexity instead of using single spider move with multiple spiders (Fig. 6). This spider system can run multiple processes in parallel to each other efficiently. In multiple spider hunting approach, construction of spider is dynamic and the number of spider is based on token length of corpus. Spiders are created automatically and connected to the central core.

In layered view topic model, nucleus is the core and the spiders are directly connected to the core. Topic lists are the next layer connected to the spiders and outer most layer is the list of subtopics connected to the topics (Fig. 5).

In this figure, the core is connected to the four spiders and each spider in turn connected to the four different topics like sports, health, education and tourism (Fig. 6).

4.5 Cluster of words

A corpus is divided into pages and pages are divided into paragraphs in further paragraphs are divided into sentence. Then, the sentence is preprocessed and exacts the keywords and form one new cluster. Each cluster contain group of keywords of every sentence and the clusters are named as S. The sentence clusters are grouped into one paragraph cluster P and in turn paragraph clusters are grouped into one page cluster Pg. Finally, the corpus cluster C contains the collection of page clusters. This cluster of keywords is called bag of related words (Fig. 7)

Given text corpus is preprocessed using ERT framework and generate the list of tokens, then token list is feed as an input to multiple spider hunting algorithm. The input corpus is broken into equal number of token groups based on the number of spiders. Each spider takes the allotted inputs and start running simultaneously. If the spiders are completed their given token lists, then the results are transferred to the central hub (core) and the core waits to get topic list from the processed spider.

All the spiders are running in equal speed and all having the same token inputs so the core gets the spider return their results immediately without delay. For Example, the topic detection model consist of four spiders S1, S2, S3 and S4 and each spider contain four topics T1, T2, T3 and T4. The central hub spider is represented as CH

where CH (T1) represents the Core of Topic 1 is the aggregate Topic 1 of all four spiders.

The percentage of topic detection is calculated using

4.6 Multiple spider hunting algorithms

Spider hunting is unimodal optimization algorithm inspired on the collective behavior of spider. The mechanisms of constructing web and catching prey were used as inspiration to create the search operators. The core idea is to make the spiders “spin” around the positive angle in order to “eat” and “gain weight”.

Collectively, the heavier spiders are more influent in the search process as a whole; it makes the barycenter of the spider moves toward better places in the search space over the iterations. Spider hunting is a population based search algorithm inspired in the behavior of spinning spiders that expand and contract while searching for nourishment. Each spider dimensional location represents a conceivable answer for the advancement issue.

4.7 Notations

Notations used in spider hunting algorithm (Table 1)

5 Experimental analysis

To check the precision of a framework when it recognizes topics for a number of documents on the basis of user’s input. Let the arrangement of correct topic detection of a document be signified as {Relevant} and the arrangement of topic detection of a document as {Detected} (Table 2). The set of documents that are Relevant and Detected can be meant as {Relevant} ∩ {Detected} (Fig. 8)

Precision is the level of topic detected document is in reality correct to the given corpus. Precision can be characterized as

Recall is the level of correct topic detection of a corpus and was in actuality topic detected. Recall is characterized as

F-score is the generally utilized trade-off. The information retrieval framework regularly needs to trade-off for precision or vice versa. F-score is characterized as harmonic mean of recall or precision as follows

5.1 Time complexity

The processing time of multiple spider hunting algorithm is very less compared to spider hunting algorithm due to the usage of optimal number of spiders based on the volume of a corpus. Example, the topic detection of 3000 words takes the time factor of SHA and MSHA in the ratio of 3:1 (Fig. 9).

6 Conclusion

The dynamic multiple spider hunting algorithm has been proposed to reduce the time complexity by creating more number of spiders based on corpus length. Given corpus is preprocessed using ERT Framework and the tokens are directed to the multiple spider topic model. The tokens are processed to detect topics and subtopics. This topic model can be easily enhanced for several new topics and subtopics. The proposed Spider algorithms are assessed against widely used standard algorithms and our proposed algorithms have greater performance when compared with other state-of-the-art meta-heuristics. In future, the topic and subtopic detection can be based on Semantics of the content and in some circumstance it can also consider opinion. This paper concentrates only English Language and in future this work can be enhanced in various languages.

Change history

30 May 2022

This article has been retracted. Please see the Retraction Notice for more detail: https://doi.org/10.1007/s12652-022-04002-9

References

Aggarwal CC, Zhai C (2012a) Mining text data. Springer, Tokyo, pp 140–521

Aggarwal CC, Zhai C (2012b) A survey of text clustering algorithms. In: Mining text data, pp 77–128

Baccianella S, Esuli A, Sebastiani F (2010) Sentiwordnet 3.0: an enhanced lexical resource for sentiment analysis and opinion mining. LREC 10:2200–2204

Banea C, Mihalcea R, Wiebe J (2008) A bootstrapping method for building subjectivity lexicons for languages with scarce resources. LREC 8:2764–2767

Bhanuse SS, Kamble SD, Kakde SM (2016) Text mining using metadata for generation of side information. Proc Comput Sci 78:807–814

Blei DM, Lafferty JD (2006) Dynamic topic models. In: ACM proceedings of the international conference on machine learning, pp 113–120

Blei DM, Ng AY, Jordan MI (2003) Latent dirichlet allocation. J Mach Learn Res 3:993–1022

Chen T, Honda K (2018) Solving data preprocessing problems in existing location-aware systems. J Ambient Intell Human Comput 9(2):253–259

Chen KY, Luesukprasert L, Seng-cho TC (2007) Hot topic extraction based on timeline analysis and multidimensional sentence modeling. IEEE Trans Knowl Data Eng 19(8):1016–1025

Chen J, Cypher A, Drews C, Nichols J (2013) CrowdE: filtering tweets for direct customer engagements. In: Proceedings of the seventh international AAAI conference on weblogs and social media, pp 51–60

Chien JT, Chueh CH (2011) Topic-based hierarchical segmentation. IEEE Trans Audio Speech Lang Process 20(1):55–66

Collobert R, Weston J, Bottou L, Karlen M, Kavukcuoglu K, Kuksa P (2011) Natural language processing (almost) from scratch. J Mach Learn Res 12:2493–2537

Dan O, Feng J, Davison BD (2011) A boot strapping approach to identifying relevant tweets for social TV. In: Proceedings of the fifth international AAAI conference on weblogs and social media, pp 462–465

Dhillon IS, Fan J, Guan Y (2001) Efficient clustering of very large document collections. In: Data mining for scientific and engineering applications, Springer, pp 357–381

Dong G, Yang W, Zhu F, Wang W (2017) Discovering burst patterns of burst topic in twitter. Comput Electr Eng 58:551–559

Duque S, Bin Omar MN (2015) Using data mining algorithms for developing a model for intrusion detection system (IDS). Proc Comput Sci 61:46–51

Elakiya E, Rajkumar N (2018) Topic detection using spider hunting algorithm. J Comput Theor Nano Sci 15(4):1402–1408

Harabagiu S, Lacatusu F (2005). Topic themes for multi-document summarization. In: Proceedings of the annual international ACM SIGIR conference on research and development in information retrieval, pp 202–209

Hashimoto K, Kontonatsios G, Miwa M, Ananiadou S (2016) Topic detection using paragraph vectors to support active learning in systematic reviews. J Biomed Inform 62:59–65

He Q, Chang K, Lim EP, Banerjee A (2010) Keep it simple with time: a reexamination of probabilistic topic detection models. IEEE Trans Pattern Anal Mach Intell 32(10):1795–1808

Hepp M (2006) Semantic web and semantic Web services: father and son or indivisible twins? IEEE Internet Comput 10(2):85–88

Hu X, Tang J, Gao H, Liu H (2013) Unsupervised sentiment analysis with emotional signals. In: ACM proceedings of the international conference on world wide web, pp 607–618

Karampatsis RM, Pavlopoulos J, Malakasiotis P (2014) AUEB: two stage sentiment analysis of social network messages. In: Proceedings of the international workshop on semantic evaluation, pp 114–118

Karidi DP, Stavrakas Y, Vassiliou Y (2018) Tweet and followee personalized recommendations based on knowledge graphs. J Ambient Intell Human Comput 9(6):2035–2049

Langlet C, Clavel C (2016) Grounding the detection of the user’s likes and dislikes on the topic structure of human–agent interactions. Knowl Based Syst 106:116–124

Le Q, Mikolov T (2014) Distributed representations of sentences and documents. In: International conference on machine learning, pp 1188–1196

Lee CH, Fu YH (2008) Web usage mining based on clustering of browsing features. IEEE Eighth Int Conf Intell Syst Design Appl 1:281–286

Liu X, Croft BW (2004) Cluster-based retrieval using language models. In: ACM proceedings of the annual international ACM SIGIR conference on research and development in information retrieval, pp 186–193

Liu X, Tao D, Song M, Zhang L, Bu J, Chen C (2014) Learning to track multiple targets. IEEE Trans Neural Netw Learn Syst 26(5):1060–1073

Magdy W, Elsayed T (2016) Unsupervised adaptive microblog filtering for broad dynamic topics. Inf Process Manage 52(4):513–528

Mishne G (2005) Experiments with mood classification in blog posts. Proc ACM SIGIR Workshop Stylist Anal Text Inf Access 19:321–327

Mörchen F, Dejori M, Fradkin D, Etienne J, Wachmann B, Bundschus M (2008) Anticipating annotations and emerging trends in biomedical literature. IN: Proceedings of the ACM SIGKDD international conference on knowledge discovery and data mining, pp 954–962

Rao Y, Xie H, Li J, Jin F, Wang FL, Li Q (2016) Social emotion classification of short text via topic-level maximum entropy model. Inf Manag 53(8):978–986

Seo YW (2004) Text clustering for topic detection. Carnegie Mellon University, Pittsburgh, pp 1–12

Shivaprasad G, Reddy NS, Acharya UD, Aithal PK (2015) Neuro-fuzzy based hybrid model for web usage mining. Proc Comput Sci 54:327–334

Strapparava C, Valitutti A (2004) Word net affect: an affective extension of wordnet. LREC 4:1083–1086

Turan M, Kececi O, Kesim AE (2012) Article (document) topic and subtopic detection. (Undergraduate thesis). İstanbul KültürUniversity, İstanbul

Yao L, Zhang Y, Wei B, Li L, Wu F, Zhang P, Bian Y (2016) Concept over time: the combination of probabilistic topic model with wikipedia knowledge. Expert Syst Appl 60:27–38

Yoon HG, Kim H, Kim CO, Song M (2016) Opinion polarity detection in twitter data combining shrinkage regression and topic modeling. J Inf 10(2):634–644

Zhang C, Wang H, Cao L, Wang W, Xu F (2016) A hybrid term–term relations analysis approach for topic detection. Knowl Based Syst 93:109–120

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This article has been retracted. Please see the retraction notice for more detail: https://doi.org/10.1007/s12652-022-04002-9

About this article

Cite this article

Elakiya, E., Rajkumar, N. RETRACTED ARTICLE: In text mining: detection of topic and sub-topic using multiple spider hunting model. J Ambient Intell Human Comput 12, 3571–3580 (2021). https://doi.org/10.1007/s12652-019-01588-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s12652-019-01588-5