Abstract

Moving object detection is crucial for cognitive vision-based robot tasks. However, due to noise, dynamic background, variations in illumination, and high frame rate, it is a challenging task to robustly and efficiently detect moving objects in video using the clue of motion. State-of-the-art batch-based methods view a sequence of images as a whole and then model the background and foreground together with the constraints of foreground sparsity and connectivity (smoothness) in a unified framework. But the efficiency of the batch-based methods is very low. State-of-the-art incremental methods model the background by a subspace whose bases are updated frame by frame. However, such incremental methods do not make full use of the foreground sparsity and connectivity. In this paper, we develop an incremental method for detecting moving objects in video. Compared to existing methods, the proposed method not only incrementally models the subspace for background reconstruction but also takes into account the sparsity and connectivity of the foreground. The optimization of the model is very efficient. Experimental results on nine public videos demonstrate that the proposed method is much efficient than the state-of-the-art batch methods and has higher F1-score than the state-of-the-art incremental methods.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

Object detection is the basis of intelligent video analysis [1, 2]. Generally, object recognition, action and behavior recognition, and tracking rely on the success of object detection [3–6]. In a sequence of images, there are both moving and static objects. In this paper, the focus is on detecting the moving objects. Using the technique of blur estimation, moving objects can be detected if only a single image is given [7]. But when a sequence of images is available, the performance of the blur estimation-based methods is not as good as that of the video-based ones. So this paper concentrates on detecting moving objects in a video (i.e., a sequence of images).

Usually, a video is recorded in about 10–30 frames per second. So there is a strict demand on the efficiency of the algorithm of moving object detection. Though classical methods such as simple background subtraction and frame difference are efficient in detecting moving objects, their performance in terms of precision and recall is not satisfactory [8, 9]. Online subspace updating is also an efficient way for moving object detection. But its performance in precision and recall is also unsatisfactory [8, 9]. Generally speaking, the subspace-based methods are superior to the methods of simple background subtraction and frame difference. Though batch-style algorithms for background and foreground separation such as DECOLOR [8, 10] are able to get good detection performance, it is time-consuming. To deal with the problem, in this paper, we propose an incremental algorithm which is not only computationally inexpensive and also superior to the state-of-the-art incremental algorithms in terms of precision and recall. The proposed method is called ISC where I, S, and C stand for incremental, sparsity, and connectivity, respectively.

In summary, the contributions and characteristics of the paper are as follows.

-

1.

We propose an incremental algorithm for detecting moving objects in video. The algorithm can be categorized into subspace-based method. The basis of the subspace is updated frame by frame. Therefore, it is more efficient than the state-of-the-art batch-style methods developed in [8] and [11].

-

2.

With a unified framework, the proposed incremental algorithm simultaneously takes into account the sparsity and connectivity (a.k.a. smoothness) of the foreground. Existing subspace-based algorithms make use of either sparsity or connectivity but do not leverage both sparsity and connectivity for forming their objective functions. Therefore, the proposed method achieves larger F-score (i.e., the harmonic mean of precision and recall) than the state-of-the-art incremental algorithms developed in [12, 13].

The rest of the paper is organized as follows. We first discuss related work in “Related Work” section. Then, we introduce the proposed ISC method in “Proposed Method: ISC” section. Experimental results are presented in “Experimental Results” section. Finally, “Conclusion and Future Work” section draws conclusions based on these experimental results.

Related Work

Moving object detection can be implemented by different manners: detecting followed by tracking, subtracting frames, modeling background by density function, modeling background by subspace, modeling background by low-rank matrix.

Detecting Followed by Tracking

This kind of methods detects objects by applying trained classifier on a frame and then tracking the detected objects in subsequent frames. The main steps of object detection are feature extraction and classifier design. Classical features include Haar-like (rectangle) features [14], Histograms of Oriented Gradients (HOG) [15, 16], Shift Invariant Feature Transform (SIFT) [17], Integral channel features [18, 19]. Representative tracking methods are Kalman filter and particle filter (sequential Monte Carlo) [20]. Biologically inspired approach is also promising for moving object detection [21].

Subtracting Frames

This kind of methods detects moving objects based on the differences between adjacent frames [22, 23]. But these methods were proved not robust against illumination variations, changing background, camera motion, and noise.

Modeling Background by Density Function

This strategy assumes that the background is stationary and can be modeled by Gaussian, Mixture of Gaussians, or Dirichlet Process Mixture Models [13, 24, 25]. The foreground (moving objects) can then be obtained by subtracting the current frame with the background model.

Modeling Background by Subspace

Instead of using a density function, subspace-based method models the background as a linear combination of the bases of a subspace [9, 12, 26–29]. Because the subspace can be updated in an incremental (online) manner, its efficiency is very high. This kind of subspace-based algorithms needs to impose constraints on the foreground in order to obtain valid solutions. Foreground sparsity is one of the widely used constraints which implies that the area of moving objects is small relative to the background. Principal Component Pursuit (PCP) [11, 30] is an important pioneer work which adopts \(l_1 \) norm for measuring the foreground sparsity. It is the constraint of foreground sparsity that makes PCP suitable for foreground–background separation. Without this constraint, traditional robust subspace methods can only deal with noise and outliers [31–34].

But PCP [11] is a batch algorithm. Its detection speed cannot arrive at real-time level. Therefore, incremental (online) subspace methods are crucial for real-time detection [35]. He et al. [12] proposed an online subspace tracking algorithm called GRASTA (Grassmannian Robust Adaptive Subspace Tracking Algorithm). Similar to PCP, GRASTA also explores \(L_1 \) norm for imposing sparsity on foreground. But the GRASTA algorithm does not utilize any connectivity (a.k.a. smoothness) property of foreground. The GOSUS (Grassmannian Online Subspace Updates with Structured-sparsity) algorithm [26] imposes a connectivity constraint on the objective function by grouping the pixels with a superpixel method and encouraging sparsity of the groups. Because of the large computational cost of the superpixel algorithm [36], GOSUS is not as efficient as GRASTA.

Modeling Background by Low-Rank Matrix

Low-rank modeling is effective in video representation [5, 10]. A sequence of vectorized images is represented as a matrix, and the matrix is approximated by the sum of matrices of vectorized foreground, background, and noise [8]. It is reasonable to assume that the background matrix is low-rank. DECOLOR (DEtecting Contiguous Outliers in the LOw-rank Representation) [8] is considered as one of the most successful low-rank-based algorithms. In DECOLOR, both foreground sparsity and contiguity (connectivity) are taken into account. It can be interpreted as \(\ell _0 \)-penalty regularized RPCA (Robust Principal Component Analysis) [8]. But the matrix computation can be started only if all of a predefined number of successive images is available. Obviously, such a batch method is not suitable for real-time video analysis due to its low efficiency.

The proposed ISC method incrementally updates the subspace bases and incorporates both foreground sparsity and foreground connectivity.

Proposed Method: ISC

In this section, we propose a subspace-based method for detecting moving objects in a video. The objective function is a function of the subspace matrix \({\mathbf{U}}\) and the locations of the foreground. The algorithm is an incremental one because the subspace matrix is updated frame by frame. Besides, the foreground sparsity and connectivity are included in the objective function. As mentioned in “Introduction” section, the proposed method is called ISC where I, S, and C stand for Incremental, Sparsity, and Connectivity, respectively.

Objective Function

The objective function L is composed of terms of background fidelity \(L_{\text{ fidelity }}\), foreground sparsity \(L_{\text{ sparse }} \), and foreground connectivity \(L_{\text{ connect }} \):

where the weights \(\beta \) and \(\gamma \) balance \(L_{\text{ sparse }} \) and \(L_{\text {connect}}\), respectively.

We begin by stating the notations. Denote the width and height of an image as w and h. Each image is vectorized into a column vector \({\mathbf{x}}_t \in {{\mathrm{R}}}^n\) where t indexes the time. The intensity at pixel i is denoted by \({\mathbf{x}}_t (i)\). The sequence of the images is \({\mathbf{X}} = [{\mathbf{x}}_{t - T} ,{{\mathbf{x}}_{t - T + 1} ,\ldots , {\mathbf{x}}_t ] \in {{\mathrm{R}}}^{n\times T}}\). The task is to segment the image \({\mathbf{x}}_t \) into background and foreground regions. The foreground is indicated by a binary vector \({\mathbf{s}} \in \{0,1\}^n\):

The foreground can be extracted from \({\mathbf{x}}_t \) by the foreground indicator vector \({\mathbf{s}}\):

where \(P_{\mathbf{s}} \) is called foreground extraction operator. Similarly, the background can be extracted from \({\mathbf{x}}_t \) by a background extraction operator \(P_{{\mathbf{s}}_ \bot } \):

In addition to \({\mathbf{x}}_t \), \(P_{\mathbf{s}} \) and \(P_{{\mathbf{s}}_ \bot } \) can also be applied on other vectors.

The term of background fidelity \(L_{\text {fidelity}} \) is a function of a subspace matrix \({\mathbf{U}}\) and a foreground indicator vector \({\mathbf{s}}\). The subspace matrix \({\mathbf{U}} = [{\mathbf{u}}_1 ,{\mathbf{u}}_2,\ldots ,{\mathbf{u}}_d ] \in {{\mathrm{R}}}^{n\times d}\) consists of d bases. Each basis \({\mathbf{u}}_i \) is an n-dimensional column vector. The weighted sum of the bases is used for estimating the background. Specifically, the background is estimated as \(P_{{\mathbf{s}}_ \bot } ({\mathbf{Uy}})\) where \({\mathbf{y}} \in {{\mathrm{R}}}^d\) is called coefficient vector. The error between \(P_{{\mathbf{s}}_ \bot }({\mathbf{Uy}})\) and the observation \(P_{{\mathbf{s}}_ \bot } ({\mathbf{x}}_t )\) forms \(L_{\text{ fidelity }} \):

The term of foreground sparsity \(L_{\text{ sparse }} \) is used to regularize \(L_{\text {fidelity}} \) so that meaningful solution can be obtained. The foreground sparsity states that the area of foreground is small relative to the whole image. The area of foreground is equal to the sum of the foreground indicator vector \({\mathbf{s}}\):

Because the element of \({\mathbf{s}}\) is either zero or one, \(L_{\text {sparse}} \) can be equivalently expressed by \(\ell _0 \) norm or \(\ell _1 \) norm:

The foreground sparsity \(L_{\text {sparse}} \) is also used in GRASTA [12], an online subspace method. But the sparsity itself is not enough for describing the properties of a foreground. \(L_{\text {sparse}} \) only makes use of the fact that the area of foreground is small. But it does not explicitly utilize the connectivity of foreground. The connectivity property states that the pixels of a foreground are not scattered anywhere. Instead, the foreground pixels should connect to each other. The term of foreground connectivity is expressed as

where N is the set of interacting (i.e., neighboring) pairs of pixels. To the best of our knowledge, we are the first to introduce \(L_{\text {connect}} \) into the incremental subspace-based method for moving object detection.

Substituting (5), (7), and (8) into (1) yields

In (9), we write the objective function as \(L({\mathbf{U}},{\mathbf{y}},{\mathbf{s}})\) in order to show that \({\mathbf{U}}\), \({\mathbf{y}}\), and \({\mathbf{s}}\) are unknown.

Optimization

The goal is to find the optimal solutions:

But this is a difficult problem because the function is highly nonconvex and nonlinear. To solve this problem, we propose to minimize \(L({\mathbf{U}},{\mathbf{y}},{\mathbf{s}})\) by alternately optimizing over \({\mathbf{U}}\), \({\mathbf{y}}\), and \({\mathbf{s}}\).

Find \({\mathbf{U}}\) When Keep \({\mathbf{y}}\) and \({\mathbf{s}}\) Fixed

Computing \({\mathbf{U}}\) is a key for subspace-based method. When \({\mathbf{y}}\) and \({\mathbf{s}}\) are considered as known, the objective function \(L({\mathbf{U}},{\mathbf{y}},{\mathbf{s}})\) reduces to \(L_{\text{fidelity}} \) because \(L_{\text {sparse}} \) and \(L_{{\text {connect}}} \) are independent to \({\mathbf{U}}\). A reasonable constraint on \({\mathbf{U}}\) is that \({\mathbf{U}}\) is an orthogonal matrix:

where \({\mathbf{I}} \in {{\mathrm{R}}}^{d\times d}\) is the identity matrix and \({\mathbf{{U}'}}\) is the transpose of \({\mathbf{U}}\). It is known that orthogonal matrices representing linear subspaces of the Euclidean space can be represented as points on the Grassmann manifolds [37]. So subspace estimation can be equivalently formulated into an optimization problem on Grassmann manifolds [37]. The optimization can be performed by using the gradient \(\partial L_{\text {fidelity}} / \partial \mathbf U\) on the Euclidean space and the gradient \(\nabla L_{{\text {fidelity}}} \) on the Grassmannian [38]. The gradients are given by

and

where the residual vector \({\mathbf{r}}\) is

According to [10], the subspace matrix \({\mathbf{U}}\) is updated by

where \(\eta \) is the gradient step length and \(\sigma \) is given by

Find \({\mathbf{y}}\) When Keep \({\mathbf{U}}\) and \({\mathbf{s}}\) Fixed

\({\mathbf{y}}\) is the coefficient vector for approximating \({\mathbf{x}}_t \) using \({\mathbf{U}}\). Because the update rule of (15) guarantees the orthogonality of \({\mathbf{U}}\), \({\mathbf{y}}\) can be directly computed by projecting \({\mathbf{x}}_t \) onto \({\mathbf{U}}\):

Find \({\mathbf{s}}\) When Keep \({\mathbf{U}}\) and \({\mathbf{y}}\) fixed

The foreground indicator rector \({\mathbf{s}}\) is the final output of the proposed algorithm. Investigating (9) and noting that \({\mathbf{s}}\) is a binary vector, one can find that minimizing (9) is a standard graph cuts problem [39] when keep \({\mathbf{U}}\) and \({\mathbf{y}}\) unchanged. Therefore, \({\mathbf{s}}\) can be obtained by applying the graph cuts algorithm [39] which has been frequently used in the task of image segmentation.

The above three steps iterate until convergence or a predefined number of iterations is conducted. The procedure runs for each frame, resulting in updated \({\mathbf{U}}\), \({\mathbf{y}}\), and \({\mathbf{s}}\). The output \({\mathbf{U}}\), \({\mathbf{y}}\), and \({\mathbf{s}}\) for the last frame is used as the initialization for the current frame. This incremental algorithm is able to detect moving objects frame by frame. This contrasts with the batch algorithm DECOLOR [8]. Though DECOLOR also adopts the foreground sparsity and connectivity, it runs group by group with each group containing a number (e.g., 50) of frames. It is noted that the proposed ISC (Incremental Sparsity Connectivity) algorithm utilizes the first m (e.g., \(m = 30\)) frames for obtaining stable solutions of \({\mathbf{U}}\), \({\mathbf{y}}\), and \({\mathbf{s}}\). The detection performance for the first m frames is not satisfactory because the information containing in the small number of frames is not rich enough. So the first m frames are used for initialization. The training algorithm of the proposed ISC method is given in Algorithm 1. The training algorithm has an inner loop and an outer loop. To obtain mature \({\mathbf{U}}\), \({\mathbf{y}}\), and \({\mathbf{s}}\), the outer loop iterates until convergence or a predefined number K of loops is achieved. Experimental results show that \(K=10\) yields good performance. Convergence is obtained if the improvement in decreasing the value of the object function is less than a threshold \(\delta \). Specifically, \(\delta =10^{-5}\) in our experiments.

The frames after time m can be robustly detected by our ISC algorithm. Algorithm 2 is the testing stage of the ISC method. The frames from \(m + 1\) to T are detected frame by frame. Once a new frame comes, the subspace matrix, coefficient vector, and foreground indicator vector are updated. The output \({\mathbf{U}}\), \({\mathbf{y}}\), and \({\mathbf{s}}\) of the training algorithm is used as input of the testing algorithm. The testing algorithm contains one layer of loops, and the solutions of \({\mathbf{U}}\), \({\mathbf{y}}\), and \({\mathbf{s}}\) are updated frame by frame.

Experimental Results

Data Sets and Setup

The Perception Test Images Sequences [24] are used for evaluation. The dataset consists of 9 videos captured in a variety of indoor and outdoor environments, including offices, campuses, sidewalks, and other private and public sites [24]. The weather conditions when collecting the data cover sunny, cloudy, and rainy weather. The videos with static background are named Bootstrap, Shopping Mall, and Hall. The videos with dynamic background are called Fountain, Escalator, Water Surface, Curtain, and Campus. The Lobby video was captured when there were drastic variations in illumination. The sizes (widths and heights) of the frames includes [160, 130], [160, 128], [176, 144], [160, 120], [160, 128], and [320, 256]. The details of the dataset are given in Table 1.

We compare the proposed ISC algorithm with PCP (Principal Component Pursuit) [11], DP-GMM (Dirichlet Process–Gaussian Mixture Models) [13], GRASTA (Grassmannian Robust Adaptive Subspace Tracking Algorithm) [12], and DECOLOR (DEtecting Contiguous Outliers in the LOw-rank Representation) [8]. PCP and GRASTA are the state-of-the-art subspace-based algorithms. DP-GMM is the state-of-the-art density-based algorithm and DECOLOR is the state-of-the-art low-rank-based algorithm. DP-GMM and GRASTA belong to incremental algorithms, whereas PCP and DECOLOR are batch algorithms. We run the source codes provided by the authors of four methods on the above-mentioned dataset to get the experimental results. Note that GRASTA randomly samples a fraction of pixels in an image for subspace modeling and object detecting. Its detection accuracy decreases with the fraction. To reduce randomness and get its best accuracy, 100 % pixels are used in our experiments.

The parameters of our ISC algorithm are given in Table 2. The value of \(\beta \) is set as the same manner as in DECOLOR [8]. In Table 2, \(\hat{\sigma }^2\) is defined as

where the elements of vector \({\mathbf{a}} \in {{\mathrm{R}}}^n\) have the same value a

In (19), \({\mathbf{e}} \in {{\mathrm{R}}}^n\) is vector of all ones.

The \(F_1 \)-score, the harmonic mean of precision and recall, is used for objective evaluation:

Note that both ISC and DECOLOR cannot generate the ROC curves because of their binary values of the foreground indicator vector. The ISC and DECOLOR algorithms can only produce one operating point from which the \(F_1\)-score is calculated.

Moreover, the detection speed, frames per second (FPS), is employed for comparing the efficiency of different algorithms.

Results

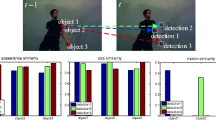

We first show in Fig. 1 two instances of detection results of our method and the incremental algorithm GRASTA. The first images of rows 1 and 2 are frames of the videos Escalator and Fountain, respectively. It is challenging to detect moving objects from the two videos because of their dynamic backgrounds: moving escalators and fountain. It is seen from Fig. 1c and d that our ISC method greatly reduces the influence of the dynamic backgrounds whereas GRASTA mistakenly classifies some of the dynamic grounds as moving objects. The reason that ISC is superior to GRASTA is that the former adopts the constraints of both foreground sparsity and connectivity whereas the later only employs the constraint of foreground sparsity. The connectivity of the fountain is very weak and so the influence of moving fountain can be suppressed and even eliminated by exploring foreground connectivity.

Figure 2 shows the detection results on the videos of Bootstrap (top) and Shopping mall (bottom) where the backgrounds are relatively static. ISC also achieves better detection results than GRASTA. Comparing the first row of Fig. 2c and d, we find that GRASTA gives rise to many holes in the moving object regions whereas our ISC method smoothly connects the component of the objects. The bottom of Fig. 2c and d shows that GRASTA misses some persons.

In addition to dynamic background, varying illumination is also an important factor for degrading the detection performance. The Lobby video was captured in a lobby environment in an office building with switching on/off lights [24]. So this video can be used for evaluating the robustness to variations in illumination. The top of Fig. 3 shows the frames at time 392, 428, 512, 542, and 628, respectively. The bottom of Fig. 3 shows the reconstructed background of the ISC algorithm. It is observed that the background can be reconstructed perfectly no matter how the illumination changes.

Table 3 shows the F1-scores on the 9 videos of DP-GMM, GRASTA, PCP, DECOLOR, and our ISC. Among the 9 videos, there are 6 videos (i.e., Escalator, Lobby, Hall, Bootstrap, Fountain, and Shopping Mall) on which ISC gets higher F1-scores than GRASTA. There are 5 videos (i.e., Escalator, Hall, Bootstrap, Fountain, and Shopping Mall) on which ISC obtains higher F1-scores than DP-GMM. DP-GMM, GRASTA, ISC are incremental algorithms and ISC averagely has better F1-score than DP-GMM and GRASTA.

Now compare ISC with the batch algorithms PCP and DECOLOR. Among the 9 videos, there are 6 videos (i.e., Escalator, Lobby, Hall, Bootstrap, Fountain, and Shopping Mall) on which ISC gets higher F1-scores than PCP, showing the superiority of the incremental algorithm ISC over the batch algorithm PCP. From Table 3, one can also see that there are 3 videos on which ISC has higher F1-scores than the batch algorithm DECOLOR. DECOLOR is better than ISC in terms of F1-score. But as shown in Table 4, the detection speed of DECOLOR is too slow. The detection speed of ISC is about 15 fps, whereas it is roughly 1.5 fps for DECOLOR.

Table 4 also tells that ISC is efficient than the batch algorithm PCP. The detection speed of ISC is about half of that of GRASTA. Note that the results of ISC, GRASTA, and DECOLOR are obtained by running the algorithms on a 3.2 GHz CPU and 4G RAM computer. The speed of PCP origins from [11] where the results are obtained by a computer with 2.33 GHz Core2 Duo processor and 2 GB RAM. According to [13], DP-GMM achieves 23.5 fps for frame size \(320\times 240\) when using a 4.4 GHz CPU together with GeForce GTX 580 GPU. It is unknown what its speed is if GPU is not employed for speeding up.

Generally speaking, the proposed ISC method has higher F1-score than the incremental algorithm GRASTA and DP-GMM. The efficiency of ISC is lower than GRASTA but much higher than the batch algorithms PCP and DECOLOR.

It is noted that the performance (F1-score) of ISC on the videos Campus, Curtain, and Water Surface is not satisfactory. The three videos have large-area dynamic background. In this situation, the foreground connectivity gives arise to negative effect: background are wrongly connected and considered as moving objects. In the future, we will focus on solving this problem.

Conclusion and Future Work

In this paper, we have presented an incremental algorithm (called ISC) for detecting moving objects in video. In ISC algorithm, the background is modeled by a subspace and the foreground is represented by a foreground indicator vector. The main contribution is that the constraints of foreground sparsity and connectivity are integrated into the subspace-representation-based objective function. The detection accuracy (F1-score) is not only better than state-of-the-art incremental algorithms GRASTA and DP-GMM but also better than the classical batch algorithm PCP. Though the F1-score of ISC is not as high as the state-of-the-art batch algorithm DECOLOR, ISC is much efficient than DECOLOR. Our future work will extend the proposed model by incorporating saliency map to suppress the negative effect caused by the foreground connectivity. Moreover, other useful constraints are to be added to the proposed model.

References

Yuen P, Gao Y, Griffiths A, Coates A, Muller J, Smith A, Walton D, Leff C, Hancock B, Shin D. Exomars rover pancam: autonomous & computational intelligence. IEEE Comput Intell Mag. 2013;8(4):52–61.

Dios PF, Chung PWH, Meng Q. Landmark-based methods for temporal alignment of human motions. IEEE Comput Intell Mag. 2014;9(2):29–37.

Gao F, Zhang Y, Wang J, Sun J, Yang E, Hussain A. Visual attention model based vehicle target detection in synthetic aperture radar images: a novel approach. Cogn Comput. 2015;7(4):434–44.

Zeng W, Wang C, Li Y. Model-based human gait recognition via deterministic learning. Cogn Comput. 2014;6(2):218–29.

Shah M, Deng J, Woodford B. Video background modeling: recent approaches, issues and our proposed techniques. Mach Vis Appl. 2014;25(5):1105–19.

Zhao J, Du C, Sun H, Liu X, Sun J. Biologically motivated model for outdoor scene classification. Cogn Comput. 2015;7(1):20–3.

Pang Y, Zhu H, Li X, Li X. Classifying discriminative features for blur detection. IEEE Trans Cybern. 2015. doi:10.1109/TCYB.2015.2472478.

Zhou X, Yang C, Zhao H, Yu W. Moving object detection by detecting contiguous outliers in the low-rank representation. IEEE Trans Pattern Anal Mach Intell. 2013;35(3):597–610.

Guo X, Wang X, Yang L, Cao X, Ma Y. Robust foreground detection using smoothness and arbitrariness constraints. In: Proceedings of European conference on computer vision, 2014.

Zhou X, Yang C, Zhao H, Yu W. Low-rank modeling and its applications in image analysis. CoRR. abs/1401.3409 (2014).

Candes E, Li X, Ma Y, Wright J. Robust principal component analysis? J ACM. 2011;58(3):1–37.

He J, Balzano L, Szlam A. Incremental gradient on the Grassmannian for online foreground and background separation in subsampled video. In: Proceedings of IEEE international conference on computer vision and pattern recognition, 2012.

Haines T, Xiang T. Background subtraction with Dirichlet process mixture models. IEEE Trans Pattern Anal Mach Intell. 2014;36(4):670–83.

Viola P, Jones M. Robust real-time face detection. Int J Comput Vis. 2004;57(2):137–54.

Dalal N, Triggs B. Histograms of oriented gradients for human detection. In: Proceedings of IEEE international conference on computer vision and pattern recognition, 2005.

Pang Y, Zhang K, Yuan Y, Wang K. Distributed object detection with linear SVMs. IEEE Trans Cybern. 2014;44(11):2122–33.

Lowe DG. Distinctive image features from scale-invariant keypoints. Int J Comput Vis. 2004;60(2):91–110.

Dollar P, Appel R, Belongie S, Perona P. Fast feature pyramids for object detection. IEEE Trans Pattern Anal Mach Intell. 2014;36(8):1532–45.

Dollar P, Tu Z, Perona P, Belongie S. Integral channel features. In: Proceedings of british machine vision conference, 2009.

Isard M, Blake A. CONDENSATION—conditional density propagation for visual tracking. Int J Comput Vis. 1998;29(1):5–28.

Tu Z, Zheng A, Yang E, Luo B, Hussain A. A biologically inspired vision-based approach for detecting multiple moving objects in complex outdoor scenes. Cogn Comput. 2015;7(5):539–51.

Wang K, Xu L, Fang Y, Li J. One-against-all frame differences based hand detection for human and mobile interaction. Neurocomputing. 2013;120:185–91.

Neri A, Colonnese S, Russo G, Talone P. Automatic moving object and background separation. Signal Process. 1998;66:219–32.

Li L, Huang W, Gu I, Tian Q. Statistical modeling of complex backgrounds for foreground object detection. IEEE Trans Image Process. 2004;13(11):1459–72.

Haritaoglu I, Harwood D, Davis L. W4: real-time surveillance of people and their activities. IEEE Trans Pattern Anal Mach Intell. 2000;22(8):809–30.

Xu J, Ithapu VK, Mukherjee L, Rehg JM, Singh V. GOSUS: Grassmannian online subspace updates with structured-sparsity. In: Proceedings of IEEE international conference on computer vision, 2013.

Yuan Y, Pang Y, Pan J, Li X. Scene segmentation based on IPCA for visual surveillance. Neurocomputing. 2009;72(10–12):2450–4.

Pang Y, Wang S, Yuan Y. Learning regularized LDA by clustering. IEEE Trans Neural Netw Learn Syst. 2014;25(12):2191–201.

Pang Y, Ji Z, Jing P, Li X. Ranking graph embedding for learning to rerank. IEEE Trans Neural Netw Learn Syst. 2013;24(8):1292–303.

Bouwmans T, Zahzah E. Robust PCA via principal component pursuit: a review for a comparative evaluation in video surveillance. Comput Vis Image Underst. 2014;122(4):22–3.

Qiu CN, Vaswani N. Missing link between recursive robust pca and recursive sparse recovery in large but correlated noise. arXiv:1106.3286, 2011.

Torre F, Black M. A framework for robust subspace learning. Int J Comput Vis. 2003;54(1):117–42.

Favaro P, Vidal R, Ravichandran A. A closed form solution to robust subspace estimation and clustering. In: Proceedings of IEEE international conference on computer vision and pattern recognition, 2011.

Vidal R, Ma Y, Sastry. Generalized principal component analysis (GPCA). IEEE Trans Patt Anal Mach Intell. 2005;27(12):1945–59.

Balzano L, Nowak R, Recht B. Online identification and tracking of subspaces from highly incomplete information. In: Proceedings of Allerton conference on communication, 2010.

Achanta R, Shaji A, Smith K, Lucchi A, Fua P, Susstrunk S. SLIC: superpixels compared to state-of-the-art superpixel methods. IEEE Trans Pattern Anal Mach Intell. 2012;34(11):2274–82.

Mittal S, Meer P. Conjugate gradient on Grassmann manifolds for robust subspace estimation. Image Vis Comput. 2012;30(6–7):417–27.

Arias T, Edelman A, Smith S. The geometry of algorithms with orthogonality constraints. SIAM J Matrix Anal Appl. 1998;20(2):303–53.

Boykov Y, Veksler O, Zabih R. Fast approximate energy minimization via graph cuts. IEEE Trans Pattern Anal Mach Intell. 2001;23(11):1222–39.

Acknowledgments

This work was supported in part by the National Basic Research Program of China (973 Program) (Grant No. 2014CB340400), the National Natural Science Foundation of China (Grant Nos. 61172121, 61271412, 61472274, and 61222109, 61503274), the Key Research Program of the Chinese Academy of Sciences (Grant No. KGZD-EW-T03), and Excellent Young Scholar of the Tianjin University of Technology and Education (Grant No. RC14-46).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of Interest

Jing Pan, Xiaoli Li, Xuelong Li, and Yanwei Pang declare that they have no conflict of interest.

Informed Consent

All procedures followed were in accordance with the ethical standards of the responsible committee on human experimentation (institutional and national) and with the Helsinki Declaration of 1975, as revised in 2008 (5). Additional informed consent was obtained from all patients for which identifying information is included in this article.

Human and Animal Rights

This article does not contain any studies with human or animal subjects performed by the any of the authors.

Rights and permissions

About this article

Cite this article

Pan, J., Li, X., Li, X. et al. Incrementally Detecting Moving Objects in Video with Sparsity and Connectivity. Cogn Comput 8, 420–428 (2016). https://doi.org/10.1007/s12559-015-9373-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s12559-015-9373-5