Abstract

Higher education institutions typically express the quality of their degree programs by describing the qualities, skills, and understanding their students possess upon graduation. One promising instructional design approach to facilitate institutions’ efforts to deliver graduates with the appropriate knowledge and competencies is curriculum mapping. To support the complex activity of curriculum mapping and to address existing problems associated with current practices around unit guides, many Australian higher education institutions are developing unit guide information systems (UGISs). This study examines factors influencing the acceptance and use of UGIS by unit conveners and academics. The study proposed a model for the acceptance of UGIS that integrates key constructs from the technology acceptance model (TAM), social cognitive theory, and the model of PC utilization. The model includes seven main factors: perceived usefulness, perceived ease of use, attitude towards using the UGIS, intention to use the UGIS, social influence, unit guide specific self-efficacy, and unit guide specific anxiety. The model was tested on a sample of 134 unit guide users from 39 Australian universities and analyzed using structural equation modeling and partial least squares methods. Analysis showed that attitude, perceived usefulness, and perceived ease of use from the basic TAM model contributed significantly to explaining the intention of academics and unit conveners to use UGIS. In addition, the integration of self-efficacy, anxiety, and social influence as constructs was found to improve the fit of the model. Implications of the results are discussed within the context of unit guides and curriculum mapping.

Introduction

Higher education institutions often express the quality of their degree programs by describing the attributes of their graduates. Moreover, there is increasing pressure from accrediting bodies, professional organizations and prospective employers for graduates to provide evidence of their qualities, skills, and understandings relevant to their domain of study and their future careers—such as communication skills, critical thinking, team work, creativity, ethics, and social responsibility. These qualities and skills are most commonly referred to as competencies in the US and graduate attributes or generic graduate attributes in Europe. Rigby et al. (2010) highlighted the various, interchangeable terms related to graduate attributes such as transferrable skills, generic skills, key skills, employment skills, soft skills, graduate outcomes, learning outcomes, and graduate capabilities.

One promising instructional design approach to facilitate institutions’ efforts to deliver graduates with the appropriate knowledge and competencies is curriculum mapping. Curriculum mapping ensures correspondence among desired learning outcomes, learning activities, and assessment tasks that validate an institution’s graduates have attained the attributes appropriate to their discipline and qualification levels. This information is often contained in what is called a unit guide (UG). A unit guide outlines the unit content such as its learning objectives, assessments, and rules governing the learning and teaching in that unit. Additionally, unit guides often include other components such as teaching staff details, teaching activities, and learning resources. Unit guides may be known under many different names such as course/unit outlines, study/course/learning guides, course/unit plans, course finders, syllabi, or course/unit catalogues.

However, according to Jones (2009), problems associated with current practice around unit guides include: loss of knowledge, duplication of effort, little or no knowledge of how one course relates to another, curriculum gaps, lack of ability for people teaching within a program to understand the overall picture, and no ability to provide the overall view to students. To address the issues with the current practices in curriculum mapping, universities in Australia are investing a substantial amount of financial and human capital into developing and implementing technology solutions to manage this complex activity. Many Australian institutions are introducing a new class of technology-based information systems to support the development of unit guides. These systems are usually tailored software applications with institution-specific names such as Units at Macquarie University, GAMP (Graduate Attribute Mapping Program) at Murdoch University, ReView at University of Technology Sydney, and CCMap (Curtin Curriculum Mapping) at Curtin University. More generally they may be called unit guide tools or curriculum mapping tools (Oliver 2010). For this study, we are calling such systems unit guide information systems (UGIS).

A key motivation for adopting UGIS is to ensure that all students—regardless of campus, mode, or lecturer—can have access to the course and unit catalog and have an equivalent understanding of the requirements of the unit. On the other hand, UGIS must allow staff to create, accurately maintain, and report on curriculum information such as graduate attributes and learning outcomes. The main purpose of a UGIS is to determine how much unit/course level information could be stored within an institution (not across institutions) and to develop a consistent process for all schools/faculties/departments to produce, deliver, and store unit guides. Most institutions have a range of web-based tools and Microsoft Word templates/Microsoft Excel workbooks in use to handle unit guides. While research on curriculum mapping exists, it does not consider UGIS or technology acceptance of such systems. However, use of an institution-wide computer-based system such as UGIS is just emerging at many institutions, including our own. Other institutions do not have a UGIS in place yet. Given the newness of these systems, it is not surprising that the acceptance of UGIS has not been previously studied.

The important, complex, and unique combination of functions and features related to UGIS necessitates the proposal and validation of a UGIS-specific technology acceptance model. For example, a unit guide forms a contract and communication channel between the student and the academic staff. The complexity is demonstrated by the fact that a UGIS supports centralized administration by support staff, strategic decision-making at the department level and above, and program-wide curriculum mapping by academic staff, while also supporting the day-to-day operational management of a unit by dispersed and individual teaching staff. Therefore, this study was undertaken to propose and validate a technology acceptance model for unit guide information systems (TAMUGIS). To achieve this purpose, the study reported here asked the following research question:

- Which factors can predict the acceptance of a unit guide information system (UGIS) by academics?

The following sections present a review of relevant literature on technology acceptance, followed by our proposed framework (TAMUGIS), hypotheses, and methodology. The analysis of the data and the survey results are then presented, followed by a discussion, limitations, and conclusion.

A review of technology acceptance

Acceptance of technology is defined by Dillon and Morris (1996) as “the demonstrable willingness within a user group to employ information technology for the tasks it is designed to support” (p. 6). The term technology acceptance is also used in longitudinal studies where new technology has recently been implemented (Venkatesh and Davis 2000), or where no technology has yet been implemented but the intention to use new technology in the future is to be measured (Clausen 2007). In our literature review, we identified approximately 20 articles concerning the acceptance of technology in higher education; none concerned UGISs. Technology acceptance in higher education is still an emerging area of research, with only a few scientific studies on the validation and acceptance of computer and web-based educational tools.

In educational settings, technology acceptance has been investigated in the following contexts:

- Student teachers’ and experienced teachers’ perceptions of computer usage intentions (Anderson et al. 2007; Clausen 2007; Smarkola 2007).
- Digital technology acceptance by higher education students (Bennet et al. 2011).
- Perceptions of computer technology in pre-service teachers (Kiraz and Ozdemir 2006; Teo 2008; Teo and Lee 2010).
- Influences of gender and learning style on technology-mediated learning (Hu and Hui 2011).
- Students’ perceptions of web-enhanced instruction tool/web-based course management systems (Kumar 2007; Ring et al. 2012; Kuna 2012).
- Students’ acceptance of online learning (e-learning) or mobile learning (m-learning) (Davis and Wong 2007; Park et al. 2012).
- Gender differences in students’ acceptance of an internet-based learning medium (Cheung and Lee 2011).
- Usefulness of deploying the theory as an outcomes assessment instrument to assist in the accreditation process (Wolk 2009).

However, in all of these studies, end users’ lack of acceptance of the information system limited the benefits of the system. The study reported here, therefore, attempts to identify the key determinants for the acceptance of the UGIS in the Australian higher education sector.

The theoretical framework

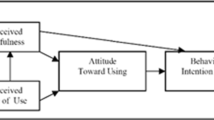

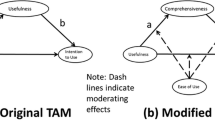

To understand the factors affecting acceptance of UGIS, we chose to build on existing theory and extend Davis’ (1989) technology acceptance model (TAM). TAM measures the impact of four internal variables upon the actual usage of the technology: perceived ease of use, perceived usefulness, attitude toward use, and behavioural intention to use. We chose to use TAM to further examine the relationships between users (academics) and technology (UGIS) for three main reasons identified in the literature. First, TAM has been considered both robust and parsimonious for predicting user behaviour across a broad range of end-user computing technologies and user populations, for example under different usage conditions (Venkatesh and Davis 2000), across genders (Venkatesh et al. 2003), across cultures (Teo 2008), and for a variety of new technologies (Schepers and Wetzels 2007). Second, TAM is a well-researched model with a strong theoretical base and has been validated by a set of psychometric measurement scales (such as the Likert scales used in our questionnaire to measure attitudes and intentions about the acceptance of UGIS) (Venkatesh and Bala 2008). Third, TAM offers strong empirical support for its core constructs, such as perceived usefulness and perceived ease of use, in predicting users’ acceptance of technology (Zhang et al. 2010).

Many researchers have criticized TAM for its limited explanatory and predictive power, triviality, and lack of any practical value (Bagozzi 2007; Benbasat and Barki 2007; Chuttur 2009). Therefore, the research framework applied in this study extends TAM with three additional constructs drawn from other models that have been validated in research on technology acceptance: social cognitive theory (SCT) and the model of PC utilization (MPCU). Social cognitive theory, published by Bandura (1986), is one of the most powerful theories of human behaviour. It is a learning theory based on the idea that people learn by watching what others do (observation) within the context of social interactions and experiences. Compeau and Higgins (1995) applied and extended SCT to the context of computer utilization. The model of PC utilization is derived largely from Triandis’ (1977) theory of human behaviour. Thompson et al. (1991) adapted and refined Triandis’ model for IS contexts and used the model to predict PC utilization.

Kaplan and Maxwell (2005) suggested that a combined model that presents an integration of constructs from these models may present a considerable improvement over any one of these models taken alone. Figure 1 shows the core constructs of the three models in the top row and our selected constructs in the bottom row. Each of these models has significant illustrative power. The elements of these models are selected as theoretical concepts for the best description of the attitude of academics towards using UGIS. We selected these constructs based on characteristics, requirements, issues, and other features identified in the literature discussed above as being relevant to the domain of unit guides and curriculum mapping.

Because a UGIS is an educational information system, driven by quality goals in learning and teaching, that seeks to automate a mandatory activity well established in most Australian universities, we chose to include constructs focused on the acceptance of the system for educational purposes rather than more general workplace issues. Thus, we did not include outcome expectations (personal and performance related) and affect from social cognitive theory, or job-fit, complexity, facilitating conditions, and long-term consequences from the model of PC utilization. This is in line with other studies involving the use of TAM in the educational context (Li 2010). In keeping with the theory’s roots, the current research incorporates intention to use, attitude, perceived usefulness, and perceived ease of use from TAM, together with three external constructs: social influence from the model of PC utilization, and anxiety and self-efficacy from social cognitive theory, which capture internal control and the emotional aspect of technology acceptance and usage.

The research model and hypotheses

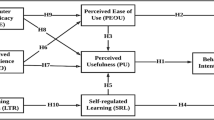

Figure 2 depicts the research model with seven theoretical concepts (constructs/latent variables/unobservable variables). The dependent (endogenous) variable is intention to use UGIS whereas the independent (exogenous) variables are UGIS-specific anxiety, UGIS-specific self-efficacy, perceived usefulness, perceived ease of use, attitude towards using UGIS, and social influence. Our proposed research model also contains 41 empirical concepts (indicators/measurement items/manifest variables/observable variables) (see “Appendix”).

To simplify the model, empirical concepts are excluded from Fig. 2. The arrows (solid lines) linking constructs specify hypothesized relationships in the direction of the arrows.

Table 1 shows the summary of constructs with our code names and definitions within the context of UGIS. Table 2 summarizes the set of hypotheses showing the hypothesized codes from H1 to H9 in column 1 and the description of the hypotheses in column 2.

Method

Participants and data collection procedure

The population of the study comprised academics in all 39 Australian universities (the number at the time of our survey; by 2015 there were 40). The target population of the survey was academics such as professors, associate professors, senior lecturers, lecturers, and associate lecturers. The population cannot be precisely defined, as the number of academics in higher education institutions changes every year. Prior to recruitment, there were no data available to determine how many academics or which universities were using different (web-based) UGIS, unit guide templates, or tools such as Microsoft Word templates/Microsoft Excel workbooks to map their graduate attributes. Therefore, an indirect recruitment method was used: for each university, learning and teaching associate deans and heads of schools/departments were identified from public web pages as the contact persons for this study. Four hundred personalized emails were sent explaining the purpose of the study and requesting that recipients distribute the invitation and link to the survey and consent forms to the appropriate faculties/departments/schools. We sent three follow-up emails. Data were collected from December 2011 to June 2012.

Table 3 shows the breakdown of the 400 individuals who were sent invitations by state and how many universities in each state were included.

Total responses (column 5) indicates how many responses we received from each state. The completion rate (column 6) is the number of responses for the state as a percentage of the number of invitations for that state. However, that does not clarify how many responses were the result of one individual passing on the invitation. Faculty/school responses (column 7) are the number of faculty members within a university and state that had one or more responses. This is an indicator of how many of the original 400 recipients actually passed on our survey.

A total of 184 responses were collected for this study. After being screened for usability and reliability, 134 responses were found to be complete and usable (50 were dropped because they were blank). There were 55 (41 %) male and 79 (59 %) female respondents. In total, there were 12 professors, 24 associate professors, 34 senior lecturers, 43 lecturers, 2 assistant/associate lecturers, 12 adjuncts and 4 tutors. Of these, 93 were unit conveners (people responsible for unit management, including writing the unit guide) convening both undergraduate and postgraduate units.

In order to assess the possibility of non-response bias, a linear extrapolation method was undertaken to compare the responses of early (n = 85) and late respondents (n = 99) (Armstrong and Overton 1977; Lahaut et al. 2003). No significant differences could be determined between the groups (t test, p > 0.05), suggesting that non-response bias was not a major concern in this study.
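The early-versus-late comparison can be illustrated with a small sketch. The paper does not state which variant of the t test was used, so Welch's unequal-variance form is an assumption here, and the function name and example scores are hypothetical rather than survey data.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(early, late):
    """Welch's t statistic for two independent groups (assumed variant)."""
    # Standard error combines the two sample variances without pooling them.
    se = sqrt(variance(early) / len(early) + variance(late) / len(late))
    return (mean(early) - mean(late)) / se


# Hypothetical 5-point Likert scores for one item, NOT data from the survey.
early_scores = [4, 3, 5, 4, 4, 3, 5, 4]
late_scores = [4, 4, 3, 5, 4, 3, 4, 5]
t_stat = welch_t(early_scores, late_scores)
```

A t statistic close to zero, as in this made-up example, is what one would expect when early and late respondents answer similarly.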

Instrument and measures

The survey instrument consisted of an online questionnaire in English to ease participation and data collection. The questionnaire was created and administered in Qualtrics (Qualtrics Labs 2009). The survey comprised questions relating to: (I) demographic information; (II) unit guide tool information; (III) unit guide tool evaluation; and (IV) other considerations about the unit guide tool/template. The survey items from sections I, II, and IV were open ended and multiple choice. However, in the unit guide tool evaluation (section III) 41 items measuring the seven constructs in the research model (Fig. 2) were presented: perceived usefulness (8 items); attitude towards using unit guide tool (4 items); perceived ease of use (9 items); intention towards using unit guide tool (4 items); unit guide specific anxiety (4 items); unit guide specific self-efficacy (7 items); and social influence (5 items). The scalar items were measured using a 5-point Likert scale ranging from 1 = strongly disagree to 5 = strongly agree with a middle neutral point. These items were based on technology acceptance constructs validated in prior research (Venkatesh et al. 2003). Minor modifications to wording were made to fit the study context. The constructs and items are listed in the “Appendix”.

Results

Data analysis

Data collected from the online survey were analyzed using two different software tools: Microsoft Excel for descriptive statistics and Smart PLS 2.0 (Ringle et al. 2005) for conducting structural equation modeling (SEM) analyses. Partial least squares (PLS), a component-based approach, is more prediction oriented and seeks to maximize the variance explained in constructs. Given the prediction-oriented nature of this research and the relatively small sample size compared to the number of constructs (Gefen et al. 2000; Tenenhaus 2008), we determined that PLS path modelling was more suitable for testing the hypotheses in this study.

Descriptive statistics

For this research, data normality for individual measured items was checked by determining the skewness statistics. Skewness describes the symmetry of a distribution. Hair et al. (2010) and Kline (2010) suggested that skewness scores outside the −3 to +3 range indicate a substantially skewed distribution. According to these and other authors, violation of normality might affect the prediction process or the interpretation of results in SEM analysis. The skewness statistics for the seven constructs under consideration in this study were between −3 and +3, which indicated no substantial deviation from normality.
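As a rough illustration of this check, the sketch below computes the moment-based (Fisher-Pearson) skewness coefficient and compares it with the −3 to +3 range. The paper does not say which skewness estimator its software used, so the estimator, the function names, and the example responses are all assumptions.

```python
from statistics import mean


def skewness(values):
    """Moment-based skewness g1 = m3 / m2**1.5 (assumed estimator)."""
    m = mean(values)
    n = len(values)
    m2 = sum((v - m) ** 2 for v in values) / n  # second central moment
    m3 = sum((v - m) ** 3 for v in values) / n  # third central moment
    return m3 / m2 ** 1.5


def within_range(values, limit=3.0):
    """True when skewness lies inside the -limit to +limit range."""
    return abs(skewness(values)) <= limit


# Hypothetical Likert responses for one item, NOT data from the survey.
item_responses = [5, 4, 4, 5, 3, 4, 2, 5, 4, 4]
acceptable = within_range(item_responses)
```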

Evaluation of the unit guide tool information

To determine whether the institution had a unit guide information system (UGIS) or not and what unit guide activities they were involved with, we purposely included the question “what is the title of the tool/template for creating unit guides in your university?” in section II of the survey. A total of 92 participants responded to this question. Sixteen respondents reported that their institution either did not have a unit guide tool/template/UGIS in place or they were not sure. The question required entry of a free text response. We performed some pre-processing on the data and then considered the frequency of term usage. After the screening, “Template” was the most frequent word in the data. Other frequent terms were unit/course guide, unit/course outline, unit guide editor, subject learning guide, course information booklets, subject handout, and builder. We also found mention of some unit guide tools such as avatar, proforma, unit of study (UoS), curriculum tracking system (CTS), course information booklet (CIB), Monatar, Sydney scientist, interact, and units; a few institutions use Microsoft Word templates.

Table 4 shows the results for the technique or tool used by the respondents’ institutions for creating unit guides. It is important to note that 63 (62 %) of the respondents’ institutions used a Microsoft Word template for creating unit guides.

However, 70 % of the respondents said that the UG tool used at their institution was linked to another student management system (such as handbook, student e-portfolio, and the like) and 56 % of the respondents said that the unit guide tool at their institution was being used or could be used for curriculum mapping. The majority (84 %) said that the UG tool at their institution was mandatory.

Model validation

After the data quality was evaluated, a PLS algorithm was used to calculate the model parameters’ estimates. Model validation denotes the process of systematically evaluating whether the hypotheses expressed by the structural model are supported by the data. Because PLS does not provide an established global goodness-of-fit (GoF) criterion, the criteria adopted for assessing partial model structures is a two-step process, encompassing (1) the assessment of the measurement model, and (2) the assessment of the structural model (Urbach and Ahlemann 2010).

Evaluation of the measurement model (outer model): the reflective way

Reliability analysis

Reliability analysis is the measure taken to ensure that the scale is consistently measuring the constructs used in the questionnaire. It is used to measure the internal consistency of measurement items. Internal consistency is evaluated using (1) composite reliability (CR) or Cronbach’s alpha, and (2) indicator reliability.

The reliability of the constructs was measured using the composite reliability (CR). Cronbach’s alpha was not used in this study because it is likely to violate key assumptions when used with a multi-item scale (Teo and Fan 2013). A more conventional indicator of validity is the average variance extracted (AVE), which measures the amount of variance captured by the construct in relation to the amount of variance attributable to measurement error. Both the CR and AVE are deemed adequate when they equal or exceed 0.50 (Hair et al. 2010).
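To make the two measures concrete, the following sketch computes CR and AVE from standardized item loadings using the commonly cited formulas CR = (Σλ)² / ((Σλ)² + Σ(1 − λ²)) and AVE = Σλ² / k. It assumes standardized loadings with error variance 1 − λ²; the function names and the loadings are hypothetical and are not taken from Table 5.

```python
def composite_reliability(loadings):
    """CR from standardized loadings, assuming error variance 1 - loading**2."""
    sum_loadings_sq = sum(loadings) ** 2
    error_variance = sum(1 - l ** 2 for l in loadings)
    return sum_loadings_sq / (sum_loadings_sq + error_variance)


def average_variance_extracted(loadings):
    """AVE is the mean of the squared standardized loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)


# Hypothetical loadings for a four-item construct, NOT values from the study.
example_loadings = [0.82, 0.78, 0.85, 0.74]
cr = composite_reliability(example_loadings)
ave = average_variance_extracted(example_loadings)
```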

We assessed the significance level of indicators and path coefficients using the bootstrapping procedure with 200 sub-samples (where n refers to sub-samples in the bootstrapping procedure, not actual sample size). Five items from perceived ease of use (PEOU4-PEOU7, PEOU9), four items from UGIS-specific self-efficacy (UGIS-S-SE2–UGIS-S-SE5), and one item from social influence (SI3) were dropped, since very small and insignificant item loadings were present (Li and Ku 2011). Table 5 shows that all of the internal consistency reliabilities are above 0.70, loadings are in the acceptable range, and the t values (Table 7) show that they are significant at the 0.05 level.

Validity analysis

Validity relates to the accuracy of measures. In this research the measurement model is assessed by estimating the construct validity. Construct validity comprises convergent validity and discriminant validity. Convergent validity is the degree to which similar constructs are related, while discriminant validity is the degree to which different constructs are different from each other.

Table 5 shows the constructs’ strong convergent validity, since they each had an AVE of more than 0.5 and the items loaded highly (loading >0.70) on their associated constructs. Table 6 shows the correlation matrix, with the inter-construct correlations off the diagonal and the square root of AVE on the diagonal. The results suggested an adequate discriminant validity of the measurements.
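The comparison of the diagonal and off-diagonal entries described here is often called the Fornell-Larcker criterion: the square root of each construct's AVE should exceed its correlations with every other construct. The sketch below shows that check under this assumption; the function name and all AVE and correlation values are hypothetical, not those of Tables 5 and 6.

```python
from math import sqrt


def discriminant_validity_ok(ave, correlations):
    """Check sqrt(AVE) of both constructs exceeds each pairwise correlation."""
    for (a, b), r in correlations.items():
        if abs(r) >= min(sqrt(ave[a]), sqrt(ave[b])):
            return False
    return True


# Hypothetical values for three constructs, NOT results from the study.
example_ave = {"PU": 0.70, "PEOU": 0.65, "SI": 0.60}
example_corr = {("PU", "PEOU"): 0.55, ("PU", "SI"): 0.40, ("PEOU", "SI"): 0.30}
adequate = discriminant_validity_ok(example_ave, example_corr)
```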

Evaluation of the structural model (inner model) and hypotheses testing

The nine hypotheses for this study were used to test the relationships between the constructs. The structural model is assessed by estimating: (1) path coefficients, and (2) the coefficient of determination (R2 value). Path coefficients are interpreted with the t statistic calculated using bootstrapping (200 sub-samples). The study includes both directional and non-directional hypotheses, thus both one-tailed and two-tailed tests were conducted. The tests indicated the positive or negative relationships between the dependent and independent constructs and the strength of these relationships.
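The idea behind a bootstrapped t statistic can be sketched as the original estimate divided by the standard deviation of the estimates across resamples. The example below uses a simple regression slope as a stand-in for a PLS path coefficient, which is a deliberate simplification and not the SmartPLS procedure; the function names, seed, and data are hypothetical.

```python
import random
from statistics import mean, stdev


def slope(xs, ys):
    """Ordinary least squares slope of ys on xs (stand-in for a path coefficient)."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx


def bootstrap_t(xs, ys, n_boot=200, seed=0):
    """Estimate divided by the bootstrap standard error over n_boot resamples."""
    rng = random.Random(seed)
    n = len(xs)
    estimates = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # resample cases with replacement
        bx = [xs[i] for i in idx]
        by = [ys[i] for i in idx]
        if len(set(bx)) < 2:  # skip degenerate resamples with no variation in x
            continue
        estimates.append(slope(bx, by))
    return slope(xs, ys) / stdev(estimates)


# Hypothetical data: a linear trend plus a small deterministic disturbance.
demo_x = list(range(30))
demo_y = [2 * x + ((x * 7) % 5 - 2) for x in demo_x]
demo_t = bootstrap_t(demo_x, demo_y)
```

Fixing the seed makes the resampling reproducible; with a different seed the t value changes slightly, which is one reason results based on 200 sub-samples are best read as approximate.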

Coefficient of determination (R2 value) represents the amount of variance of the endogenous variables explained by the exogenous variables. The R2 values for each dependent variable indicate the predictive power of the model and the path coefficients should be significant and directionally consistent with the hypothesis. Chin (1998) described R2 values of 0.67, 0.33 and 0.19 in PLS path models as substantial, moderate, and weak respectively. Our research model in Fig. 3 is able to explain 70 % of the variance in attitudes towards using UGIS (ATT-UGIS), 49 % of the variance in perceived usefulness (PU), 36 % of the variance in intention to use UGIS (INT-UGIS), and around 36 % of the variance in perceived ease of use (PEOU).
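The reported R2 values can be set against Chin's (1998) reference points with a few lines of code. Treating the three reference values as lower bounds of bands is an assumption made for illustration, as are the function name and the "below weak" label; the R2 values themselves are the ones reported above.

```python
# Reference points from Chin (1998); using them as band cut-offs is an assumption.
CHIN_THRESHOLDS = [(0.67, "substantial"), (0.33, "moderate"), (0.19, "weak")]


def classify_r2(r2):
    """Return the label of the highest reference point that r2 reaches."""
    for cutoff, label in CHIN_THRESHOLDS:
        if r2 >= cutoff:
            return label
    return "below weak"


# R2 values as reported for the research model.
reported_r2 = {"ATT-UGIS": 0.70, "PU": 0.49, "INT-UGIS": 0.36, "PEOU": 0.36}
labels = {name: classify_r2(value) for name, value in reported_r2.items()}
```

Under this reading, attitude towards using UGIS falls in the substantial band and the other three endogenous variables fall in the moderate band.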

Judged against the reference values from Chin (1998), the model is capable of explaining the endogenous latent variables (ATT-UGIS, INT-UGIS, PU, and PEOU). The path coefficients and their t statistics, obtained from bootstrapping with n = 200 sub-samples and evaluated at the p < 0.01, p < 0.05, and p < 0.1 levels as shown in Fig. 3, were used to test each of the hypotheses posed in the earlier sections.

- Consistent with hypothesis 1 (H1), UGIS-specific self-efficacy (UGIS-S-SE) was found to have a positive influence on perceived ease of use (PEOU), with path coefficient = 0.37 and t = 3.13 (p < 0.01, 1-tail).
- UGIS-specific anxiety (UGIS-S-ANX) was found to have a small positive influence on perceived usefulness (PU), which is not consistent with hypothesis 2 (H2), with path coefficient = 0.09 and t = 1.29 (not significant at p < 0.05, 1-tail).
- Hypothesis 3 (H3) was supported: UGIS-specific anxiety (UGIS-S-ANX) had a negative influence on perceived ease of use (PEOU), with path coefficient = −0.35 and t = 3.33 (p < 0.01, 1-tail). The negative value of the path coefficient indicates that higher UGIS-S-ANX was associated with lower PEOU.
- Consistent with hypothesis 4 (H4), social influence (SI) had a significant influence on intention to use UGIS (INT-UGIS), with path coefficient = 0.32 and t = 3.40 (p < 0.01, 2-tail).
- Consistent with hypothesis 5 (H5), social influence (SI) had a positive influence on perceived usefulness (PU) of UGIS, with path coefficient = 0.27 and t = 2.86 (p < 0.01, 1-tail).
- Hypothesis 6 (H6) was also supported: perceived usefulness (PU) had a positive influence on attitude towards using UGIS (ATT-UGIS), with path coefficient = 0.81 and t = 13.31 (p < 0.01, 1-tail).
- Hypothesis 7 (H7), that perceived ease of use (PEOU) would have a positive influence on attitude towards using UGIS (ATT-UGIS), was not supported, with path coefficient = 0.02 and t = 0.33 (not significant at p < 0.1, 1-tail).
- Consistent with hypothesis 8 (H8), perceived ease of use (PEOU) had a positive influence on perceived usefulness (PU), with path coefficient = 0.56 and t = 6.50 (p < 0.01, 1-tail).
- Consistent with hypothesis 9 (H9), attitude towards using UGIS (ATT-UGIS) had a positive influence on intention to use UGIS (INT-UGIS), with path coefficient = 0.39 and t = 3.57 (p < 0.01, 1-tail).

Table 7 shows a summary of the hypothesis testing results. Assessment of the structural model estimates results suggested that seven out of nine hypothesised paths were significant. As discussed above, the t values for both H2 and H7 did not exceed the cut-off point required for statistical significance. Thus, these paths were not statistically significant.
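The significance decisions can be reproduced approximately from the reported t values. The sketch below compares each t value with a critical value from the standard normal distribution, which is a large-sample approximation and an assumption on our part; it checks only the magnitude of t and not the direction of the effect. The variable and function names are hypothetical, while the t values, significance levels, and tails are those reported above.

```python
from statistics import NormalDist


def critical_value(alpha, tails):
    """Normal-approximation critical value for a one- or two-tailed test."""
    return NormalDist().inv_cdf(1 - alpha / tails)


def is_significant(t, alpha, tails):
    """Compare the magnitude of t with the critical value (direction not checked)."""
    return abs(t) > critical_value(alpha, tails)


# Hypothesis: (reported t value, significance level, number of tails).
REPORTED = {
    "H1": (3.13, 0.01, 1), "H2": (1.29, 0.05, 1), "H3": (3.33, 0.01, 1),
    "H4": (3.40, 0.01, 2), "H5": (2.86, 0.01, 1), "H6": (13.31, 0.01, 1),
    "H7": (0.33, 0.10, 1), "H8": (6.50, 0.01, 1), "H9": (3.57, 0.01, 1),
}
significant = [h for h, (t, alpha, tails) in REPORTED.items()
               if is_significant(t, alpha, tails)]
```

With these inputs the list contains seven hypotheses and omits H2 and H7, in line with the summary above.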

Modifying the structural model by removing non-significant paths

The results suggested that the most parsimonious model is obtained by removing the two non-significant paths corresponding to hypotheses H2 and H7.

The model was therefore revised to obtain a parsimonious model that fits the data well and is consistent with the observed data. The revised structural model is shown in Fig. 4.

Discussion

This research used an integrated technology acceptance framework developed to identify the user characteristics of UGIS acceptance in a higher education context. Based on data collected from 39 Australian universities, the support and evidence for the hypothesized model were evaluated. The results showed that the proposed model is significant and helps to explain the relationships between different aspects of the acceptance of UGIS. More specifically, our study shows that academics use UG tools/templates mainly because they perceive them as useful tools that improve their performance and productivity and enhance their image among their colleagues and university administrators.

The results of this research provide empirical support for the extension of a TAM. The beliefs about ease of use and usefulness were observed to have a significant impact on attitude and intentions towards acceptance of UGIS, revealing more variance in the acceptance intention. However, beliefs about usefulness had a stronger effect on the intended use than the perceived ease of use suggesting that beliefs about usefulness (path coefficient = 0.81 and R2 = 0.49) contribute more in UGIS acceptance than the beliefs concerning ease of use (path coefficient = 0.02 and R2 = 0.36). These findings provide additional external validity to a technology acceptance model, especially in a new contextual setting such as UGIS.

The findings of the present extended model show a significant impact of social influence on the behavioural intent to use a UGIS (path coefficient = 0.32 and t = 3.40). Thus, this study has revealed that, apart from the beliefs about usefulness and ease of use, social influence also predicted intended behaviour about UGIS, which suggests the importance of social influence on an individual’s behavioural intention towards acceptance of a UGIS. Moreover, social influence also demonstrated a significant influence on the major TAM belief construct, usefulness (path coefficient = 0.27 and t = 2.86). This implies that UGISs need to have adequate support, be integrated with existing systems, support curriculum mapping, and be easier and more pleasant to use.

This study has revealed that application-specific self-efficacy has a significant effect on users’ ease of use perception about a UGIS (path coefficient = 0.37 and t = 1.29). This finding suggests that individuals’ confidence in their abilities to use the technology in general would favourably influence their perceptions of ease of use, which would subsequently influence their intention towards acceptance of a UGIS. Some factors, such as application-specific anxiety (path coefficient = 0.09 and t = 1.29) and perceived ease of use (path coefficient = 0.02 and t = 0.33), do not have a significant influence on perceived usefulness and intention to use the UGIS, respectively. These results call for a re-evaluation of the efficacy of these factors in the context of unit guide information systems. Since UGIS-specific anxiety is a kind of individual confidence/emotion towards using a new IS, it is intuitively understandable that anxiety would have a direct and strong impact on ease of use (path coefficient = −0.35 and t = 3.33). In order to reduce UGIS-specific anxiety, upper administrators together with the learning and teaching team should provide adequate support to help potential users use the UGIS effectively. These results agree with what the TAMUGIS postulated.
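
The significance judgements in the discussion above can be illustrated with a short check. The sketch below is a minimal illustration, not the authors' analysis procedure: it assumes a two-tailed test at the 5% level with the large-sample critical value of 1.96, a convention often applied to bootstrapped PLS t-statistics but not stated in this section. The path labels (for example `SI -> INT`) and the helper `is_significant` are hypothetical names introduced here, and only a subset of the reported paths is included.

```python
# Assumption: two-tailed test at the 5% level, large-sample critical value.
CRITICAL_T = 1.96

# (path label, path coefficient, t-value) copied from the discussion text.
# The labels are hypothetical shorthand: SI = social influence,
# ANX = UGIS-specific anxiety, PU = perceived usefulness,
# PEOU = perceived ease of use, INT = intention to use.
paths = [
    ("SI -> INT", 0.32, 3.40),
    ("SI -> PU", 0.27, 2.86),
    ("ANX -> PEOU", -0.35, 3.33),
    ("ANX -> PU", 0.09, 1.29),
    ("PEOU -> INT", 0.02, 0.33),
]


def is_significant(t_value, critical=CRITICAL_T):
    """Return True when |t| reaches the assumed critical value."""
    return abs(t_value) >= critical


# Map each path label to its significance verdict under the assumed threshold.
results = {name: is_significant(t) for name, _, t in paths}

for name, coefficient, t in paths:
    verdict = "significant" if results[name] else "not significant"
    print(f"{name}: coefficient = {coefficient:+.2f}, t = {t:.2f} ({verdict})")
```

Under this assumed threshold, the two social influence paths and the anxiety to ease of use path are flagged as significant, while the anxiety to usefulness and ease of use to intention paths are not, which matches the reading given in the text.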

Limitations and conclusion

This empirical analysis of the proposed TAMUGIS has some limitations. First, numerous factors affect the acceptance of new information systems, but our study focused on just three (self-efficacy, anxiety, and social influence), as seen in our model. We intend to study the effects of other potential determinants, such as system familiarity or experience, top management support, user involvement, and complexity, in future research. Second, the survey was conducted across Australian universities only. We hope to extend this study by conducting an international survey of universities in other countries. Third, the data for this study were collected using a cross-sectional survey. Prior literature indicates that individuals’ perceptions are formed with the passage of time, experience, and continuous feedback from surroundings (Davis 1989; Venkatesh and Davis 2000). Thus, future research should collect longitudinal data to re-examine the findings of this research and to investigate which factors influence individuals’ perceptions in continuing to use the UGIS.

This study is a first attempt to investigate technology acceptance of UGIS and provides a better understanding of individual users’ acceptance of a UGIS. The findings of this study strongly suggest that technology acceptance is affected by the attitude of academics towards the UGIS. Intention to use the UGIS was found to be mainly affected by individuals’ perceptions about both usefulness and social influence. We suggest the use of training programs, newsletters, active operational support and appropriate organizational support to improve the self-efficacy of potential users, ease their anxiety, and reduce possible attitudinal barriers to the use of UGIS. Thus, one can conclude that the proposed technology acceptance framework shows promise for predicting UGIS acceptance by unit conveners and academics.

References

Anderson, R., Anderson, R., Davis, P., Linnell, N., Prince, C., Razmov, V., & Videon, F. (2007). Classroom presenter: Enhancing interactive education with digital ink. IEEE Computer, 40(9), 56–61.

Armstrong, J. S., & Overton, T. S. (1977). Estimating non-response bias in mail surveys. Journal of Marketing Research, 14(3), 396–402.

Bagozzi, R. P. (2007). The legacy of the technology acceptance model and a proposal for a paradigm shift. Journal of the Association for Information Systems, 8(4), 244–254.

Bandura, A. (1986). Social foundations of thought and action: A social cognitive theory. Upper Saddle River: Prentice-Hall.

Benbasat, I., & Barki, H. (2007). Quo vadis, TAM? Journal of the Association for Information Systems, 8(4), 211–218.

Bennet, S., Maton, K., & Carrington, L. (2011). Understanding the complexity of technology acceptance by higher education students. In T. Teo (Ed.), Technology acceptance in education: Research and issues (pp. 63–77). Rotterdam: Sense Publisher.

Cheung, C. M., & Lee, M. K. (2011). Exploring the gender differences in student acceptance of an internet-based learning medium. In T. Teo (Ed.), Technology acceptance in education: Research and issues (pp. 183–199). Rotterdam: Sense Publisher.

Chin, W. W. (1998). Issues and opinions on structural equation modeling. MIS Quarterly, 22(1), vii–xvi.

Chuttur, M. Y. (2009). Overview of the technology acceptance model: Origins, developments and future directions. Sprouts: Working papers on information systems, Indiana University, USA.

Clausen, J. M. (2007). Beginning teachers’ technology use: First-year teacher development and the institutional context’s affect on new teachers’ instructional technology use with students. Journal of Research on Technology in Education, 39(3), 245–261.

Compeau, D. R., & Higgins, C. A. (1995). Application of social cognitive theory to training for computer skills. Information Systems Research, 6(2), 118–143.

Davis, F. D. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly, 13(3), 319–340.

Davis, R., & Wong, D. (2007). Conceptualising and measuring the optimal experience of the elearning environment. Decision Sciences Journal of Innovative Education, 5(1), 97–126. doi:10.1111/j.1540-4609.2007.00129.x.

Dillon, A., & Morris, M. G. (1996). User acceptance of new information technology: Theories and models. In M. Williams (Ed.), Annual review of information science and technology (pp. 3–32). Medford, NJ: Information Today.

Gefen, D., Straub, D. W., & Boudreau, M. (2000). Structural equation modeling techniques and regression: Guidelines for research practice. Communications of AIS, 4(7), 1–79.

Hair, J. F., Black, W. C., Babin, B. J., & Anderson, R. E. (2010). Multivariate data analysis (7th ed.). Upper Saddle River, NJ: Prentice Hall.

Hu, P. J.-H., & Hui, W. (2011). Is technology-mediated learning made equal for all? Examining the influences of gender and learning style. In T. Teo (Ed.), Technology acceptance in education: Research and issues (pp. 101–124). Rotterdam: Sense Publisher.

Jones, D. (2009). Supporting curriculum mapping? (Web log Post). Retrieved from http://davidtjones.wordpress.com/2009/12/21/supporting-curriculum-mapping/

Kaplan, B., & Maxwell, J. A. (2005). Qualitative research methods for evaluating computer information systems. In J. Anderson, C. Aydin (Eds.), Evaluating the organizational impact of health care information systems: Methods and applications (pp. 30–55). doi:10.1007/0-387-30329-4_2

Kiraz, E., & Ozdemir, D. (2006). The relationship between educational ideologies and technology acceptance in pre-service teachers. Educational Technology & Society, 9(2), 152–165.

Kline, R. B. (2010). Principles and practice of structural equation modelling (3rd ed.). New York: Guilford Press.

Kumar, S. (2007). Student and professor perceptions of course web-site use in web-enhanced instruction. In C. Montgomerie & J. Seale (Eds.), Proceedings of world conference on educational multimedia, hypermedia and telecommunications (pp. 4321–4326). Chesapeake, VA: AACE.

Kuna, A. S. (2012). Learner interaction patterns and student perceptions toward using selected tools in an online course management system. Graduate Theses and Dissertations.

Lahaut, V., Jansen, H., Mheen, D., Garretsen, H., Verdurmen, J., & Dijk, A. (2003). Estimating non-response bias in a survey on alcohol consumption: Comparison of response waves. Alcohol and Alcoholism, 38(2), 128–134.

Li, L. (2010). A critical review of technology acceptance literature. Southwest Region of the Decision Sciences Institute. Retrieved from http://www.swdsi.org/swdsi2010/SW2010_Preceedings/papers/PA104.pdf

Li, C., & Ku, Y. (2011). The effects of persuasive messages on system acceptance. In Proceedings of Pacific Asia conference on information systems (PACIS). Retrieved from http://aisel.aisnet.org/pacis2011/110

Oliver, B. (2010). Teaching fellowship: Benchmarking partnerships for graduate employability (Final Report). Sydney, NSW: Australian Learning and Teaching Council. Retrieved from http://tls.vu.edu.au/portal/site/design/resources/Benchmarking%20Partnerships%20for%20Graduate%20Employability.pdf

Park, S. Y., Nam, M. W., & Cha, S. B. (2012). University students’ behavioural intention to use mobile learning: Evaluating the technology acceptance model. British Journal of Educational Technology, 43, 592–605. doi:10.1111/j.1467-8535.2011.01229.x.

Qualtrics Labs, I. (2009). Qualtrics. Provo, Utah: Qualtrics Labs Inc.

Rigby, B., Wood, L., Clark-Murphy, M., Daly, A., Dixon, P., Kavanagh, M. et al. (2010). Review of graduate skills: Critical thinking, team work, ethical practice and sustainability. Australian Learning & Teaching Council Project: Embedding the Development and Grading of Generic Skills Across the Business Curriculum.

Ring, J. K., Kellermanns, F. W., Pearson, A. W., & Pearson, R. A. (2012). The use of a web-based course management system causes and performance effects. Journal of Management Education, 37(6), 854–882. doi:10.1177/1052562912459853.

Ringle, C. M., Wende, S., & Will, A. (2005). SmartPLS, 2.0 (Beta). Hamburg, Germany: SmartPLS. Retrieved from http://www.smartpls.de

Schepers, J., & Wetzels, M. (2007). A meta-analysis of the technology acceptance model: Investigating subjective norm and moderation effects. Information & Management, 44(1), 90–103.

Smarkola, C. (2007). Technology acceptance predictors among student teachers and experienced classroom teachers. Journal of Educational Computing Research, 31(1), 65–82.

Tenenhaus, M. (2008). Structural equation modelling for small samples. Working Paper Number 885, HEC Paris, Jouy-en-Josas.

Teo, T. (2008). Pre-service teachers’ attitude towards computer use: A Singapore survey. Australasian Journal of Educational Technology, 24(4), 413–424.

Teo, T., & Fan, X. (2013). Coefficient alpha and beyond: Issues and alternatives for educational research. The Asia-Pacific Education Researcher, 22(2), 209–213.

Teo, T., & Lee, B. C. (2010). Explaining the intention to use technology among pre-service teachers. Campus-Wide Information Systems, 27(2), 60–67.

Thompson, R. L., Higgins, C. A., & Howell, J. M. (1991). Personal computing: Toward a conceptual model of utilization. MIS Quarterly, 15(1), 124–143.

Triandis, H. C. (1977). Interpersonal behaviour. Monterey, CA: Brooks/Cole.

Urbach, N., & Ahlemann, F. (2010). Structural equation modeling in information systems research using partial least squares. Journal of Information Technology Theory and Application, 11(2), 5–40.

Venkatesh, V., & Bala, H. (2008). Technology acceptance model 3 and a research agenda on interventions. Decision Sciences, 39(2), 273–315.

Venkatesh, V., & Davis, F. D. (2000). A theoretical extension of the technology acceptance model: Four longitudinal field studies. Management Science, 46(2), 186–204.

Venkatesh, V., Morris, M. G., Davis, G. B., & Davis, F. D. (2003). User acceptance of information technology: Toward a unified view. MIS Quarterly, 27(3), 425–478.

Wolk, R. M. (2009). Using the technology acceptance model for outcomes assessment in higher education. Information Systems Education Journal, 7(43), 1–18.

Zhang, C., Huang, J., Chen, J., Li, M., Lee, H. J., Choi, J. et al. (2010). Research on adoption of mobile virtual community in China and Korea. International Conference on Mobile Business, pp. 220–229. doi:10.1109/ICMB-GMR.2010.26

Appendix

The following scale items were measured on a 5-point Likert scale (1 = strongly disagree, 2 = disagree, 3 = neutral, 4 = agree, 5 = strongly agree).

Perceived usefulness (PU)

- PU1: Using the unit guide tool improves the quality of the work I do.
- PU2: Using the unit guide tool enables me to accomplish tasks more quickly.
- PU3: Using the unit guide tool enables me to accomplish curriculum mapping more quickly.
- PU4: Using the unit guide tool enhances my effectiveness on the job.
- PU5: I would find the unit guide tool useful in my job.
- PU6: If I use the unit guide tool, I will spend less time in curriculum mapping.
- PU7: Using the unit guide tool increases my productivity.
- PU8: Using the unit guide tool makes it easier to do my job.

Attitude towards using unit guide tool (ATT-UGIS)

- ATT1: Using the unit guide tool is a good idea.
- ATT2: Using the unit guide tool for curriculum mapping is a good idea.
- ATT3: The unit guide tool makes work more interesting.
- ATT4: I like working with the unit guide tool.

Perceived ease of use (PEOU)

- PEOU1: It would be easy for me to become skillful at using the unit guide tool.
- PEOU2: My interaction with the unit guide tool is clear and understandable.
- PEOU3: It is easy for me to remember how to perform tasks using the unit guide tool.
- PEOU4: Using the unit guide tool takes too much time from my normal duties.
- PEOU5: Working with the unit guide tool is so complicated.
- PEOU6: Using the unit guide tool involves too much time undertaking mechanical operations.
- PEOU7: It takes too long to learn how to use the unit guide tool to make it worth the effort.
- PEOU8: I (would) find the unit guide tool easy to use.
- PEOU9: I (would) find the unit guide tool easy for curriculum mapping.

Intention towards using unit guide tool (INT-UGIS)

- INT1: I intend to use the unit guide tool frequently next semester.
- INT2: I intend to use the unit guide tool regularly next semester.
- INT3: I intend to use the unit guide tool next semester to assist me.
- INT4: I intend to use the unit guide tool for curriculum mapping next semester.

Unit guide specific anxiety (UGIS-S-ANX)

- ANX1: I feel apprehensive about using unit guide tool.
- ANX2: I feel apprehensive about using unit guide tool for curriculum mapping.
- ANX3: I hesitate to use the unit guide tool for fear of making mistakes I cannot correct.
- ANX4: The unit guide tool is somewhat intimidating to me.

Unit guide specific self efficacy (UGIS-S-SE)

- SE1: I could complete the job using the unit guide tool on my own/without support.
- SE2: I could perform curriculum mapping using a unit guide tool on my own/without support.
- SE3: I could complete the job using the unit guide tool if I could call someone for help.
- SE4: I could complete the job using the unit guide tool, if I had a lot of time to complete my job.
- SE5: I could complete the job using the unit guide tool, if I had just the built-in help facility.
- SE6: I could complete the job using the unit guide tool, if I had never used a system like it before.
- SE7: I could complete… if I had used similar information systems like this one before to do the job.

Social influence (SI)

- SI1: I anticipate I will use the tool because of the proportion of co-workers who use this tool.
- SI2: I anticipate the organization will support the use of the unit guide tool.
- SI3: I anticipate the organization will support the use of the unit guide tool for curriculum mapping.
- SI4: I anticipate people who influence my behaviour will think that I should use the unit guide tool.
- SI5: If I use the unit guide tool, my co-workers will perceive me as competent.
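
As a rough illustration of how responses to the items above might be turned into construct scores, the sketch below averages 5-point Likert responses per construct. It rests on several assumptions that the appendix does not state: that the negatively worded items PEOU4 to PEOU7 are reverse-coded before aggregation, and that a simple unweighted mean is an acceptable summary. The study itself estimated the constructs with PLS, so this is not the authors' measurement model, and the names `REVERSE_CODED`, `score_item`, and `construct_score` are hypothetical.

```python
from statistics import mean

SCALE_MIN, SCALE_MAX = 1, 5  # 1 = strongly disagree ... 5 = strongly agree

# Assumption: these negatively worded items are reverse-coded so that a
# higher score always means greater perceived ease of use.
REVERSE_CODED = {"PEOU4", "PEOU5", "PEOU6", "PEOU7"}


def score_item(item, response):
    """Validate one Likert response and reverse-code it if required."""
    if not SCALE_MIN <= response <= SCALE_MAX:
        raise ValueError(f"{item}: response {response} is outside the scale")
    if item in REVERSE_CODED:
        return SCALE_MIN + SCALE_MAX - response
    return response


def construct_score(responses, prefix):
    """Unweighted mean of all answered items whose code starts with prefix."""
    values = [
        score_item(item, response)
        for item, response in responses.items()
        if item.rstrip("0123456789") == prefix
    ]
    if not values:
        raise ValueError(f"no responses found for construct {prefix}")
    return mean(values)


# Made-up responses from one hypothetical participant.
example = {"PEOU1": 4, "PEOU2": 4, "PEOU4": 2, "PEOU5": 1, "PU1": 5, "PU2": 3}

peou = construct_score(example, "PEOU")
pu = construct_score(example, "PU")
print(f"PEOU = {peou}, PU = {pu}")
```

Reverse-coding maps a response r to 6 − r on this scale, so disagreeing with "takes too much time" counts towards ease of use rather than against it.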

Cite this article

Atif, A., Richards, D., Busch, P. et al. Assuring graduate competency: a technology acceptance model for course guide tools. J Comput High Educ 27, 94–113 (2015). https://doi.org/10.1007/s12528-015-9095-4
