Abstract

This research explored the fusion of multispectral optical SPOT data with microwave L-band ALOS PALSAR and C-band RADARSAT-1 data for detailed land use/cover (LULC) mapping, in order to determine the individual contributions of the different wavelengths. Many fusion approaches have been implemented and analyzed for various applications using different remote sensing images. However, reported results conflict on which fusion method is best suited to LULC mapping when optical and synthetic aperture radar (SAR) images are used together. In this research two SAR images, ALOS PALSAR and RADARSAT-1, were fused with SPOT data. Although both SAR data sets were acquired in the same polarization and have the same ground resolution, they differ in wavelength. Five data fusion methods were performed and compared: intensity hue saturation (IHS), principal component analysis (PCA), discrete wavelet transformation (DWT), high pass filter (HPF), and Ehlers. For the quality analysis, visual interpretation was applied as a qualitative analysis, and spectral quality metrics of the fused images, namely the correlation coefficient (CC) and the universal image quality index (UIQI), were applied as a quantitative analysis. Furthermore, the multispectral SPOT image and the SAR fused images were classified with the Maximum Likelihood Classification (MLC) method to evaluate their efficiency. Ehlers gave the best scores both in the quality analysis and in LULC mapping accuracy for the PALSAR and RADARSAT images. The HPF method came second, with an increased thematic mapping accuracy. IHS had the worst results in all analyses. Overall, the results indicate that the Ehlers method is a powerful technique for improving LULC classification.

Introduction

Agricultural and environmental management relies on rapid information extraction via monitoring. Detailed thematic map production requires satellite images that carry both high spectral and high spatial resolution information (Jalan and Sokhi 2012). However, this information may not be available from a single satellite. For this reason, image fusion techniques are used to combine information acquired from different sensors. A variety of spectral and spatial resolutions provides a better basis for land use and land cover analysis. Image fusion is used to obtain an image quality that cannot be achieved by an individual sensor (Pohl and van Genderen 1998). Different fusion algorithms have been proposed, and several comparisons of fusion techniques have been carried out. Pohl and van Genderen (2015) presented a comprehensive analysis of current studies on image fusion applications, algorithms and sensors.

Conventionally, a high spatial resolution panchromatic (PAN) image is merged with a multispectral (MS) image of high spectral resolution acquired from the same satellite to produce an image with both high spatial and high spectral resolution (Nikolakopoulos 2008; Rodriguez-Galiano et al. 2012; Yıldırım and Güngör 2012). Furthermore, the integration of multi-sensor PAN and MS images (Jalan and Sokhi 2012; Sunar and Musaoglu 1998) and of PAN and hyperspectral images (Cetin and Musaoglu 2009) is also widely used. Another approach uses optical and synthetic aperture radar (SAR) images, which are complementary because they capture different characteristics of the target (Abdikan and Sanli 2012; Amarsaikhan et al. 2012; Ehlers et al. 2010; Zeng et al. 2010). Lastly, the fusion of SAR with SAR images is a rarely used way of image enhancement (Abdikan et al. 2014; Amarsaikhan et al. 2012).

Rodriguez-Galiano et al. (2012) used Landsat 7 ETM+ and its corresponding PAN image for a comparative assessment of seven fusion algorithms: PCA, IHS, Wavelet à trous, Additive Wavelet Luminance Proportional (AWLP), Downscaling CoKriging (DCK), and Bayesian methods. They compared the results qualitatively and quantitatively over both urban and agricultural areas. Additionally, the ISODATA unsupervised clustering method was applied to the original and fused images. Among all methods, DCK proved the most coherent and suitable. Yuhendra et al. (2012) tested five fusion methods [Gram-Schmidt (GS), Ehlers, Modified Intensity-Hue-Saturation (M-IHS), High Pass Filter (HPF), and Wavelet-Principal Component Analysis (W-PCA)] with multispectral Quickbird and WorldView2 images and their corresponding PAN images. Using the Support Vector Machines (SVM) supervised learning algorithm, they found that the Ehlers method achieved the best accuracy of all.

Amarsaikhan et al. (2012) applied Brovey, PCA, GS, Wavelet-based and Ehlers fusion methods to both optical-SAR and SAR–SAR combinations. The Wavelet-based fusion of the optical-SAR combination gave the best result. For SAR and optical fusion, Ehlers et al. (2010) concluded that only the Ehlers method preserved the spectral information of the MS image for classification purposes. They suggested that the other methods [PC, Color Normalization spectral sharpening (CN), Gram-Schmidt (GS) and University of New Brunswick (UNB)] be used for single-sensor, single-date images. They also applied the AWLP method, but it preserved the spectral characteristics less well than the Ehlers method and introduced spatial artifacts rather than improvements.

Another study evaluated twelve different algorithms, namely Brovey, CN, Ehlers, GS, HPF, M-IHS, PCA, W-PCA, UNB, Local Mean Matching (LMM), Local Mean Variance Matching (LMVM) and Subtractive Resolution Merge (SRM), over urbanized areas using very high-resolution GeoEye-1 images (Witharana et al. 2013). Among all methods, Ehlers, Wavelet and HPF gave the best results in terms of spectral quality, whereas UNB and GS scored best in the spatial metrics. Overall, HPF was rated the best algorithm.

TerraSAR-X and Landsat ETM+ data were fused with the HPF, PCA and PCA with Wavelet (WPCA) methods, and the results were classified with a C4.5 decision tree classifier to map a protected area (Otukei et al. 2015). The classification of the fused images indicated that WPCA had the highest and HPF the lowest overall classification accuracy.

Lu et al. (2011b) fused dual-polarized (HH-HV) RADARSAT-2 and PALSAR images with a Landsat TM image to compare their efficiency for land cover classification. The analysis was performed with the PCA, Discrete Wavelet Transformation (DWT), HPF and Normalized Multiplication (NMM) methods, and showed that the Wavelet method improved the overall classification accuracy (3.3–5.7 %). HPF showed similar results, and these two methods in particular improved the classification of vegetation. The same dataset was also used for mapping the distribution of impervious surfaces in a complex urban and rural landscape (Lu et al. 2011a). In that study only the DWT method was applied, and the authors concluded that although the higher resolution SAR data improved impervious surface mapping, the different wavelengths did not have any significant impact. Similarly, Roberts et al. (2008) did not obtain any additional benefit in classification results from different fusion techniques (Brovey, IHS, DWT, Principal Component Substitution and IHS modified wavelet) using ASTER and RADARSAT-1 images.

For the qualitative comparison of the original multispectral and fused images, spatial improvement and color preservation are the most widely used and effective criteria for evaluating fusion methods. Another approach is to compare the fusion results quantitatively with statistical parameters (Abdikan and Sanli 2012; Cetin and Musaoglu 2009; Rodriguez-Galiano et al. 2012; Witharana et al. 2013). Additionally, different enhancement and mapping techniques, such as vegetation indices (Munoz-Robles et al. 2012), ISODATA classification (Rodriguez-Galiano et al. 2012), edge detection (Ehlers et al. 2010; Witharana et al. 2013), SVM (Yuhendra et al. 2012) and the Maximum Likelihood Classification (MLC) method (Lu et al. 2011a; Roberts et al. 2008), have been used to evaluate the performance of fusion algorithms.

Even though several studies have examined fusion methods, assessing the performance of SAR and optical data fusion remains challenging. It is also uncertain whether C-band or L-band is preferable for land use and cover analysis. The present study investigates and evaluates the effect of image fusion methods on classification results. It aims to extend the use of SAR and optical fused images for land use/cover monitoring. In this context, it elaborates on previous work (Abdikan et al. 2008; Abdikan and Sanli 2012). In that work, an optical SPOT-2 image was fused with both HH polarized C-band RADARSAT-1 and L-band PALSAR images, and a comparative analysis was performed over urban and agricultural sites separately using five fusion methods in the Menemen Plain of Izmir Province (Abdikan and Sanli 2012). In the previous studies, urban and agricultural areas were analyzed visually and statistically. In this study, besides urban and agricultural areas, the wetland, pasture and meadow classes were also considered. In addition to the visual and statistical analyses, the study examines the contribution of image fusion methods to land use/cover mapping. For this purpose the fused images were evaluated by applying Maximum Likelihood Classification (MLC) as a supervised method. In total 11 land use/cover maps were produced, one from the original MS SPOT-2 image and ten from the optical and SAR fused images, and the accuracies were compared for each class.

Study area and data set used

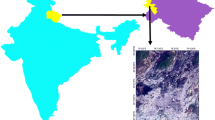

The study area is located in the Menemen Plain of Izmir Province, in the west of Turkey on the eastern coast of the Aegean Sea. The Gediz River lies in the northern part of the study area and the Gulf of İzmir to the south. The western part is adjacent to the coastline (Fig. 1). The area is about 13 km × 14 km and covers agricultural fields and residential areas. The agricultural fields were mostly covered with crops such as wheat and vineyards. Compared with the previous studies, the area investigated here is more complex and includes pasture, meadow and wetlands. There were also unplanted fields. The study area has a homogeneous surface roughness and a micro-relief of approximately 1 %.

The southwestern and western part of the area, which covers some wetland and agricultural land, is protected under the Ramsar agreement (Çağırankaya and Meriç 2013). The Ramsar site, known as the Gediz Delta, contains wetlands that are pivotal for endangered bird species such as the Dalmatian pelican (Pelecanus crispus) and the Lesser kestrel (Falco naumanni), which are on the Red List of the International Union for Conservation of Nature (IUCN) (Çağırankaya and Meriç 2013). Furthermore, it is a very important breeding area for many sea birds and supports endemic species such as Carex divisia, Puccinellia kociana anatolica, Salsola kali, Stachys cretica smyrnaea and Suaeda prostrata prostrata (Çağırankaya and Meriç 2013). Additionally, it is a crucial wintering site for many birds, especially the flamingo (Phoenicopterus roseus), which uses this site regularly.

Methodology

The study consists of five main processing steps: (1) image preprocessing (rectification, filtering and co-registration), (2) application of fusion techniques, (3) quality analysis of fused images (visual and spectral analysis), (4) land use/cover mapping (MLC application) and (5) accuracy assessment of the classification results.

In the analysis, one SPOT image was merged with two SAR images. Both SAR images (L-band PALSAR and C-band RADARSAT-1) have the same pixel spacing (6.25 m) and the same polarization, but they have different frequencies (Table 1). In the preprocessing step, the two SAR images were filtered to reduce speckle. A Gamma filter with a 3 × 3 kernel was applied before the fusion processes. The SAR images were then registered to the SPOT image with an RMS error of less than ±1 pixel. The SAR images were resampled to 8 m ground resolution, and the SPOT image was rectified with a first-degree polynomial function and nearest neighbor resampling, using 1/5000 scale cadastral maps and 1/25,000 scale topographic maps. A detailed flowchart of the methodology is given in Fig. 2.
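The registration step can be illustrated with a small sketch. It fits a first-degree polynomial (affine) transformation to control points by least squares and reports the RMS error in pixels. The control point coordinates, the function names and the coefficient values are hypothetical and are not taken from the study; the sketch only shows how such a fit and its RMS error could be computed.

```python
import numpy as np

def fit_first_degree_polynomial(src_xy, dst_xy):
    """Least-squares fit of x' = a0 + a1*x + a2*y and y' = b0 + b1*x + b2*y."""
    src = np.asarray(src_xy, dtype=float)
    dst = np.asarray(dst_xy, dtype=float)
    # Design matrix with a constant column: [1, x, y]
    design = np.column_stack([np.ones(len(src)), src])
    coeffs, *_ = np.linalg.lstsq(design, dst, rcond=None)
    return coeffs  # shape (3, 2): one column per output coordinate

def rms_error(src_xy, dst_xy, coeffs):
    """Root mean square of the control point residuals, in pixels."""
    src = np.asarray(src_xy, dtype=float)
    dst = np.asarray(dst_xy, dtype=float)
    design = np.column_stack([np.ones(len(src)), src])
    residuals = design @ coeffs - dst
    return float(np.sqrt(np.mean(np.sum(residuals ** 2, axis=1))))

# Hypothetical control points measured in the SAR image (column, row).
sar_points = np.array([[10.0, 12.0], [200.0, 15.0], [190.0, 180.0],
                       [20.0, 170.0], [100.0, 95.0]])
# Hypothetical "true" transformation, used only to generate matching SPOT points.
true_coeffs = np.array([[5.0, -3.0], [0.78, 0.01], [-0.02, 0.78]])
spot_points = np.column_stack([np.ones(len(sar_points)), sar_points]) @ true_coeffs

coeffs = fit_first_degree_polynomial(sar_points, spot_points)
rmse = rms_error(sar_points, spot_points, coeffs)
print(coeffs)
print(rmse)
```

With real control points the residuals are not zero, and the RMS value is what would be checked against the ±1 pixel threshold mentioned above.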

Image fusion

In the present study two sets of SAR images were fused with the multispectral SPOT image (NIR-Red-Green as an RGB composite) using five different image fusion methods: Intensity Hue and Saturation (IHS), Principal Component Analysis (PCA), Discrete Wavelet Transformation (DWT), High Pass Filter (HPF) and Ehlers. As output, a set of 10 fused images with the same resolution as the SAR images was generated.

A brief introduction to the methods used is given below:

- The Intensity Hue and Saturation (IHS) method transforms the red (R), green (G), blue (B) domain into IHS color space. The intensity component is replaced by a high spatial resolution image to improve the spatial resolution. An inverse transformation is then applied to the IHS components to produce a high resolution RGB image (Pohl and van Genderen 1998). The drawback of this method is that it has to be applied more than once if the image has more than three bands (Ehlers et al. 2010).

- As a statistical method, Principal Component Analysis (PCA) creates uncorrelated variables from a correlated MS dataset. After PCA is applied to all MS bands, the first principal component is replaced by the high spatial resolution data. A reverse transformation is then applied to obtain spatially enhanced MS data (Ehlers et al. 2010; Amarsaikhan et al. 2012). Unlike the IHS method, the PCA method has no limitation on the number of input bands.

- In the Discrete Wavelet Transformation (DWT), the high spatial resolution image is decomposed into its high and low frequency components, which represent the finer and coarser spatial information, respectively. The low pass component is replaced by the low resolution MS data. Finally, an inverse transformation is applied to obtain spectral information at high spatial resolution (Abdikan et al. 2008; Amarsaikhan et al. 2012). The method can be applied to more than three bands.

- The High Pass Filter (HPF) based fusion method convolves the high spatial resolution image with a high-pass filter and extracts the high frequency component. This spatial information, carried by the high frequency component of the high resolution image, is added to the low spatial resolution MS data (Lu et al. 2011b). The advantage of the HPF method is that it allows more than three bands to be used.

- The Ehlers method was developed to preserve the spectral characteristics during image fusion. It combines an IHS transformation with filtering in the Fourier domain. The IHS transformation is used to separate the color information optimally. The method removes the three-band limitation of the conventional IHS method, so the number of bands is not restricted (Ehlers et al. 2010).
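Two of the methods described above, HPF and PCA fusion, can be sketched in a few lines of NumPy. This is a minimal illustration and not the implementation used in the study: the box filter, the kernel size, the `weight` parameter, the gain scaling and the statistics matching of the SAR image to the first principal component are all assumptions made here for the example. The MS bands are assumed to be already co-registered and resampled to the SAR grid.

```python
import numpy as np

def box_lowpass(image, size=5):
    """Mean filter with edge padding (NumPy only); size is an assumed kernel width."""
    pad = size // 2
    padded = np.pad(image, pad, mode="edge")
    out = np.zeros_like(image, dtype=float)
    for dy in range(size):
        for dx in range(size):
            out += padded[dy:dy + image.shape[0], dx:dx + image.shape[1]]
    return out / (size * size)

def hpf_fuse(ms, sar, size=5, weight=0.5):
    """Add the high frequency part of `sar` to every band of `ms` (bands, rows, cols)."""
    sar = sar.astype(float)
    high = sar - box_lowpass(sar, size)  # high-pass = image minus its low-pass version
    fused = np.empty(ms.shape, dtype=float)
    for b in range(ms.shape[0]):
        # Assumed gain: scale the detail to a fraction of the band's spread.
        gain = weight * ms[b].std() / (high.std() + 1e-12)
        fused[b] = ms[b] + gain * high
    return fused

def pca_fuse(ms, sar):
    """Replace the first principal component of `ms` with the stretched `sar` image."""
    bands, rows, cols = ms.shape
    flat = ms.reshape(bands, -1).astype(float)
    mean = flat.mean(axis=1, keepdims=True)
    centered = flat - mean
    eigvals, eigvecs = np.linalg.eigh(np.cov(centered))
    eigvecs = eigvecs[:, np.argsort(eigvals)[::-1]]  # largest variance first
    if eigvecs[:, 0].sum() < 0:  # fix the sign so PC1 follows overall brightness
        eigvecs[:, 0] *= -1
    pcs = eigvecs.T @ centered
    s = sar.reshape(-1).astype(float)
    # Match the mean and standard deviation of the SAR image to PC1 before substitution.
    pcs[0] = (s - s.mean()) / (s.std() + 1e-12) * pcs[0].std() + pcs[0].mean()
    return (eigvecs @ pcs + mean).reshape(bands, rows, cols)

# Synthetic data, for illustration only.
rng = np.random.default_rng(0)
base = rng.random((8, 8))
ms_demo = np.stack([base + 0.1, base + 0.2, base + 0.3])
hpf_demo = hpf_fuse(ms_demo, rng.random((8, 8)))
pca_demo = pca_fuse(ms_demo, 2.0 * base + 7.0)
print(hpf_demo.shape, pca_demo.shape)
```

In the synthetic case all three bands share the same pattern, so the first principal component is that pattern, and substituting a linearly rescaled copy of it returns the original bands. With a real SAR image the substituted component differs from PC1, which is where both the spatial detail and the spectral distortion of this method come from.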

Quality analysis

For the quality analysis, both qualitative and quantitative methods were used. The color preservation of the fused images was compared with that of the original MS image. For visual evaluation, a sub-image covering different LULC classes was selected (Fig. 3). The improvement in spatial resolution and its effects on the results were analyzed qualitatively.

For the quantitative analysis, the fused results were compared statistically using two metrics, namely the Correlation Coefficient (CC) and the Universal Image Quality Index (UIQI). CC measures the correlation between the fused and the original images. The closer the CC is to 1, the better the spectral values of the original image are preserved in the fused image. UIQI was proposed to model image distortion as a combination of loss of correlation, luminance distortion and contrast distortion (Wang and Bovik 2002). There are different approaches to statistical analysis (Rodriguez-Galiano et al. 2012; Witharana et al. 2013), and a detailed comparison was presented in the previous study (Abdikan and Sanli 2012).
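As a rough sketch of the two metrics, the functions below compute CC and UIQI for a single band. The UIQI here is evaluated once over the whole band, which is a simplification assumed for brevity; Wang and Bovik (2002) compute the index in sliding windows and average the results. The function names and the synthetic test band are illustrative only.

```python
import numpy as np

def correlation_coefficient(reference, fused):
    """Pearson correlation between a reference band and a fused band."""
    x = np.asarray(reference, dtype=float).ravel()
    y = np.asarray(fused, dtype=float).ravel()
    xc, yc = x - x.mean(), y - y.mean()
    return float((xc @ yc) / np.sqrt((xc @ xc) * (yc @ yc)))

def uiqi_global(reference, fused):
    """UIQI over the whole band: Q = 4*cov*mx*my / ((vx + vy) * (mx^2 + my^2))."""
    x = np.asarray(reference, dtype=float).ravel()
    y = np.asarray(fused, dtype=float).ravel()
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = np.mean((x - mx) * (y - my))
    return float(4.0 * cov * mx * my / ((vx + vy) * (mx ** 2 + my ** 2)))

# Synthetic band, for illustration only.
rng = np.random.default_rng(1)
band = rng.random((16, 16)) * 100.0 + 20.0
shifted = band + 10.0  # same pattern, brighter: a pure luminance distortion

print(correlation_coefficient(band, shifted))
print(uiqi_global(band, band), uiqi_global(band, shifted))
```

The shifted band illustrates why the two metrics are reported together: a constant brightness offset leaves CC at 1 but lowers UIQI, because UIQI also penalizes luminance and contrast changes.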

In the qualitative analysis, a subset image covering complex LULC classes such as agriculture, settlements and salt crust was selected (Fig. 3). For the interpretation, all ten fused images were compared visually with the original SPOT image. In this comparison, linear features such as roads and field borders, and patterns such as residential and agricultural patterns, were taken into consideration. In general, all fusion results were spatially enhanced to varying degrees. However, some methods introduced spectral distortions. Among the five methods, the IHS output images produced with both RADARSAT and PALSAR data were the worst at preserving colors (Figs. 4b, 5b).

Visual comparison

In the fused images, it is clear that some characteristic features such as roads, field borders (i.e. linear features) and residential features were inherited from the SAR images. These are more obvious in all images except the results of the DWT and Ehlers methods (Fig. 4). Among them, IHS shows the distinction between different features, especially in the PALSAR image, together with its inherent noisy texture (Fig. 4g). Similarly, the PCA method also preserved SAR characteristics (Fig. 4c, h). The lowest preservation of linear details is observed in Ehlers (Fig. 4f–k) and DWT (Fig. 4d, i). HPF shows linear features, especially in the salt crust (Fig. 4e, j), and features in residential areas in both results. However, in the agricultural area and around the airport the PALSAR results retained the linear features (Fig. 4e–j). The Ehlers results kept the spectral consistency much better than the others (Fig. 4f, k). Likewise, the DWT method had high spectral similarity with the original SPOT image (Fig. 4d, i). Nevertheless, some color distortions were observed in the salt crust and agricultural areas. HPF also preserves spectral information, with results close to those of DWT. The PALSAR fused result was found to be much better than the RADARSAT result, especially in agricultural areas. For all methods the PALSAR fused results have slightly higher quality in terms of image brightness and sharpness (Fig. 4g–k).

Quantitative analysis

The statistical results indicated that the PALSAR fused Ehlers result has the best CC and UIQI values (Fig. 5). In contrast, IHS gave the worst result of all methods, with low CC and UIQI values in both the RADARSAT and PALSAR fused images. Furthermore, the DWT result for RADARSAT is slightly better than the HPF result, while the DWT and HPF results for PALSAR are very close to each other. For both RADARSAT and PALSAR, the PCA method ranked fourth among all methods.

Classification results and comparison

The contribution of the fusion methods to the classification was analyzed by comparing the original SPOT image with the fused images. For this application the MLC method was employed and nine classes were selected: wheat1 (first crop wheat), wheat2 (second crop wheat), moist soil, dry soil, residential area, pasture (located on naturally irrigated land covered with very dense vegetation throughout the year), meadow (located on gentle or steep slopes covered with medium or sparse vegetation), vineyard and salt crust.
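The decision rule behind MLC can be sketched as a Gaussian classifier: each class is described by the mean vector and covariance matrix of its training pixels, and a pixel is assigned to the class with the highest log-likelihood. The sketch below assumes equal prior probabilities for all classes, and the training samples are synthetic; neither the priors nor the training data of the study are given in the text.

```python
import numpy as np

def train_mlc(samples, labels):
    """Estimate mean, inverse covariance and log-determinant for each class."""
    model = {}
    for c in np.unique(labels):
        x = samples[labels == c]
        cov = np.cov(x, rowvar=False)
        _, logdet = np.linalg.slogdet(cov)
        model[int(c)] = (x.mean(axis=0), np.linalg.inv(cov), logdet)
    return model

def classify_mlc(model, pixels):
    """Assign each pixel (row of band values) to the most likely class."""
    classes = sorted(model)
    scores = np.empty((len(pixels), len(classes)))
    for j, c in enumerate(classes):
        mean, inv_cov, logdet = model[c]
        d = pixels - mean
        mahalanobis = np.einsum("ij,jk,ik->i", d, inv_cov, d)
        # Gaussian log-likelihood up to a constant, with equal priors assumed.
        scores[:, j] = -0.5 * (logdet + mahalanobis)
    return np.array(classes)[np.argmax(scores, axis=1)]

# Synthetic three-band training pixels for two hypothetical classes.
rng = np.random.default_rng(0)
class_a = rng.normal([50.0, 60.0, 70.0], 2.0, size=(40, 3))
class_b = rng.normal([120.0, 90.0, 30.0], 2.0, size=(40, 3))
samples = np.vstack([class_a, class_b])
labels = np.array([1] * 40 + [2] * 40)

model = train_mlc(samples, labels)
predicted = classify_mlc(model, np.array([[50.0, 60.0, 70.0], [120.0, 90.0, 30.0]]))
print(predicted)
```

In practice the pixels of a whole image would be reshaped to a two-dimensional array of band values, classified, and reshaped back to the image grid.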

To assess the accuracy of each of the eleven classified images, 50 pixels per class (450 pixels in total for the nine classes) were selected randomly. For both the original and the fused images, Kappa coefficients (KC) for all classes (Fig. 6), the overall Kappa accuracy (OKA) and the overall classification accuracy (OCA) were calculated (Congalton 1991) (Table 2).
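For reference, overall accuracy and the overall Kappa coefficient can be computed from a confusion matrix as sketched below. The three-class matrix is hypothetical and serves only to show the arithmetic; the confusion matrices of the study are not reproduced here.

```python
import numpy as np

def overall_accuracy(confusion):
    """Share of correctly classified samples (diagonal sum over total)."""
    cm = np.asarray(confusion, dtype=float)
    return float(np.trace(cm) / cm.sum())

def kappa_coefficient(confusion):
    """Cohen's kappa: agreement corrected for the agreement expected by chance."""
    cm = np.asarray(confusion, dtype=float)
    n = cm.sum()
    observed = np.trace(cm) / n
    expected = np.sum(cm.sum(axis=0) * cm.sum(axis=1)) / n ** 2
    return float((observed - expected) / (1.0 - expected))

# Hypothetical confusion matrix: rows are reference classes (50 samples each),
# columns are classes assigned by the classifier.
confusion = np.array([[45, 3, 2],
                      [4, 44, 2],
                      [1, 5, 44]])

accuracy = overall_accuracy(confusion)
kappa = kappa_coefficient(confusion)
print(round(accuracy, 4), round(kappa, 4))  # 0.8867 0.83
```

When every class has the same number of reference samples, the chance agreement equals one over the number of classes. Under that assumption, nine classes and 370 of 450 correct samples (82.22 %) give a kappa of (370/450 − 1/9) / (1 − 1/9) = 0.80.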

The multispectral SPOT image has an OKA of 80 % and an OCA of 82.22 % using the MLC method (Table 2). The Ehlers method improved the OKA for both the PALSAR and RADARSAT images, by 5.24 and 2.92 %, respectively (Fig. 7f, k). HPF contributed only in the PALSAR result, with 2.21 %, while the RADARSAT result of HPF was slightly lower than that of SPOT (−0.43 %) (Fig. 7e, j). The other three methods did not improve the OKA in either the PALSAR or the RADARSAT fused images (Table 2; Fig. 7b–d and g–i). In the class-by-class comparison, the wheat1 class improved with all fusion methods except IHS with PALSAR data. The HPF method showed the highest impact, 9.12 % with PALSAR and 8.44 % with RADARSAT. The Ehlers result with RADARSAT followed HPF in second place with an 8.24 % increase.

The contribution of each fusion method to the mapping of each class is illustrated in Fig. 7. The largest improvements for the wheat2 class were 9.73 % using the Ehlers method with PALSAR and 7.45 % using HPF with RADARSAT. L-band is superior to C-band for differentiating the wheat2 class. The comparison of the L-band and C-band images shows that dry soil and moist soil areas differ prominently because of differences in backscatter values. The moist soil class was dramatically improved in the PALSAR results using the Ehlers (17.17 %) and HPF (13.87 %) methods. Although PCA and IHS improved the KC by 9.53 % and 1.69 %, respectively, DWT was unsuccessful (Fig. 7).

In the RADARSAT results, all methods except IHS improved the classification accuracy of moist soil (Ehlers: 4.44 %, HPF: 6.79 %, DWT: 4.48 % and PCA: 4.15 %). It is clear that L-band, which may penetrate deeper into the soil, improved the accuracy of moist soil more than C-band. The dry soil class was considerably improved using PALSAR, by 11.21 % with the Ehlers and 6.69 % with the HPF method. The PCA and DWT methods also slightly improved the dry soil class using PALSAR, by 3.11 and 1.14 %, respectively. For RADARSAT, on the other hand, the KC using DWT improved significantly, by 6.46 %, and Ehlers followed with a small increase (1.65 %). For dry soil, L-band again enhanced the results more than C-band.

The classification accuracy of the residential area increased considerably when using the IHS and PCA methods with PALSAR, by 9.02 % and 5.79 %, respectively. In addition, HPF gave a small improvement of 1.99 %, whereas the impact of the Ehlers method was insignificant, with a 0.17 % increase. The RADARSAT fused images also showed an increase compared with the original data, especially with the IHS and PCA methods, by 6.64 % and 6.45 %, respectively. The Ehlers method using RADARSAT data gives a slightly better result than with PALSAR data, with a 2.88 % improvement. However, the contribution of L-band is higher than that of C-band for the classification of the residential class.

The KC of pasture was improved only by Ehlers and HPF, in both the PALSAR (6.96 and 7.32 %, respectively) and RADARSAT images (9.97 and 1.24 %, respectively). Meadow is the only class that was not improved by any fusion method with either RADARSAT or PALSAR data. The vineyard class was improved only by the Ehlers method, using both RADARSAT (2.26 %) and PALSAR (4.24 %) data.

For the vineyard class, the HPF results were not significant (0.74 % with RADARSAT and 0.01 % with PALSAR). Lastly, salt crust was not improved by any fusion method using PALSAR data. With RADARSAT data, in contrast, the HPF and IHS methods enhanced the results slightly, by the same amount (2.25 %). Overall, the OCA results showed that Ehlers delivered the highest accuracy among the five fusion methods in both the PALSAR and RADARSAT results, with accuracy increases of 5.34 % and 2.67 %, respectively (Fig. 7f, k). Furthermore, HPF contributed slightly to the accuracy, with 2.21 % and 1.11 % using PALSAR and RADARSAT, respectively (Fig. 7e, j). The results of DWT, PCA and IHS were not improved using RADARSAT and were insignificant using PALSAR data (Table 2; Fig. 7).

Conclusion

The main aim of this research was to compare the contribution of image fusion methods that use multi-sensor data to the improvement of LULC analyses. For the image fusion, one optical image and two SAR images were considered. Five fusion approaches, namely IHS, PCA, DWT, HPF and Ehlers, were applied. As a result, five SPOT-RADARSAT-1 and five SPOT-PALSAR fused images were generated. In total, ten fused images were compared both qualitatively and quantitatively with the original MS SPOT image; that is, the fused images were visually interpreted and spectrally compared.

The fused images showed improvements to varying degrees. Among all fusion methods, Ehlers showed the best and IHS the worst results for both the PALSAR and RADARSAT-1 fused images in the qualitative and quantitative analyses.

The contribution of the image fusion techniques was also investigated by comparing the classification results of the original SPOT image with those of the SPOT-RADARSAT-1 and SPOT-PALSAR fused images.

KC was computed for each selected class, and OKA and OCA for each image. The comparison clearly shows that the Ehlers method improved the classification results for the fused images using both PALSAR and RADARSAT data; however, the improvement is larger with PALSAR data. HPF can be considered the second-best alternative; it improved the classification when the PALSAR image was used.

L-band data were more successful than C-band data in LULC classification. The contribution of PALSAR is almost double that of RADARSAT. Although the IHS, PCA and DWT methods improved the accuracy of some classes, they had limitations and did not improve the overall accuracy. Among the five methods, the IHS results were the worst for both the PALSAR and RADARSAT outputs, which is consistent with the visual comparisons. In general the results demonstrated that SPOT-PALSAR fusion was superior to SPOT-RADARSAT-1 fusion (i.e. the accuracy of the LULC classification results is improved for all fusion methods using PALSAR images, while it decreases for two out of five fusion methods using RADARSAT images).

Overall, it is concluded that information gathered from multi-sensor data considerably enhances the accuracy of LULC classification and supports better and more reliable map production. Except for the IHS method, all the methods used in this paper are capable of fusing multispectral images with more than three bands; among them, the Ehlers method showed the most promising results. As further research, an analysis of the data fusion performance of high resolution radar images and hyperspectral images is planned.

References

Abdikan, S., Balik Sanli, F., Bektas Balcik, F., & Goksel, C. (2008). Fusion of SAR images (PALSAR and RADARSAT-1) with multispectral SPOT image: a comparative analysis of resulting images. The International Archives of the Photogrammetry Remote Sensing and Spatial Information Sciences, 37, 1197–1202.

Abdikan, S., & Sanli, F. B. (2012). Comparison of different fusion algorithms in urban and agricultural areas using SAR (PALSAR and RADARSAT) and optical (SPOT) images. Boletim De Ciencias Geodesicas, 18(4), 509–531.

Abdikan, S., Sanli, F. B., Sunar, F., & Ehlers, M. (2014). A comparative data-fusion analysis of multi-sensor satellite images. International Journal of Digital Earth, 7(8), 671–687. doi:10.1080/17538947.2012.748846.

Amarsaikhan, D., Saandar, M., Ganzorig, M., Blotevogel, H. H., Egshiglen, E., Gantuyal, R., et al. (2012). Comparison of multisource image fusion methods and land cover classification. International Journal of Remote Sensing, 33(8), 2532–2550. doi:10.1080/01431161.2011.616552.

Çağırankaya, S. S., & Meriç, B. T. (2013). Turkey’s important wetlands: RAMSAR Sites. Ankara, Turkey: Ministry of Forestry And Water Affairs, General Directorate of Nature Conservation and National Parks.

Cetin, M., & Musaoglu, N. (2009). Merging hyperspectral and panchromatic image data: qualitative and quantitative analysis. International Journal of Remote Sensing, 30(7), 1779–1804.

Congalton, R. G. (1991). A review of assessing the accuracy of classifications of remotely sensed data. Remote Sensing of Environment, 37(1), 35–46. doi:10.1016/0034-4257(91)90048-B.

Ehlers, M., Klonus, S., Johan Åstrand, P., & Rosso, P. (2010). Multi-sensor image fusion for pansharpening in remote sensing. International Journal of Image and Data Fusion, 1(1), 25–45.

Jalan, S., & Sokhi, B. S. (2012). Comparison of different pan-sharpening methods for spectral characteristic preservation: multi-temporal CARTOSAT-1 and IRS-P6 LISS-IV imagery. International Journal of Remote Sensing, 33(18), 5629–5643. doi:10.1080/01431161.2012.666811.

Lu, D., Li, G., Moran, E., Batistella, M., & Freitas, C. C. (2011a). Mapping impervious surfaces with the integrated use of Landsat Thematic Mapper and radar data: A case study in an urban–rural landscape in the Brazilian Amazon. ISPRS Journal of Photogrammetry and Remote Sensing, 66(6), 798–808.

Lu, D., Li, G., Moran, E., Dutra, L., & Batistella, M. (2011b). A comparison of multisensor integration methods for land cover classification in the Brazilian Amazon. GIScience & Remote Sensing, 48(3), 345–370.

Munoz-Robles, C., Frazier, P., Tighe, M., Reid, N., Briggs, S. V., & Wilson, B. (2012). Assessing ground cover at patch and hillslope scale in semi-arid woody vegetation and pasture using fused Quickbird data. International Journal of Applied Earth Observation and Geoinformation, 14(1), 94–102. doi:10.1016/j.jag.2011.08.010.

Nikolakopoulos, K. G. (2008). Comparison of nine fusion techniques for very high resolution data. Photogrammetric Engineering and Remote Sensing, 74(5), 647–659.

Otukei, J. R., Blaschke, T., & Collins, M. (2015). Fusion of TerraSAR-x and Landsat ETM plus data for protected area mapping in Uganda. International Journal of Applied Earth Observation and Geoinformation, 38, 99–104. doi:10.1016/j.jag.2014.12.012.

Pohl, C., & van Genderen, J. L. (1998). Multisensor image fusion in remote sensing: concepts, methods and applications. International Journal of Remote Sensing, 19(5), 823–854. doi:10.1080/014311698215748.

Pohl, C., & van Genderen, J. (2015). Structuring contemporary remote sensing image fusion. International Journal of Image and Data Fusion, 6(1), 3–21.

Roberts, J. W., van Aardt, J., & Ahmed, F. (2008). Assessment of image fusion procedures using entropy, image quality, and multispectral classification. Journal of Applied Remote Sensing, 2, 023522. doi:10.1117/1.2945910.

Rodriguez-Galiano, V. F., Pardo-Iguzquiza, E., Chica-Olmo, M., Mateos, J., Rigol-Sanchez, J. P., & Vega, M. (2012). A comparative assessment of different methods for Landsat 7/ETM + pansharpening. International Journal of Remote Sensing, 33(20), 6574–6599. doi:10.1080/01431161.2012.691612.

Sunar, F., & Musaoglu, N. (1998). Merging multiresolution SPOT P and landsat TM data: the effects and advantages. International Journal of Remote Sensing, 19(2), 219–224. doi:10.1080/014311698216206.

Wang, Z., & Bovik, A. C. (2002). A universal image quality index. Signal Processing Letters, IEEE, 9(3), 81–84.

Witharana, C., Civco, D. L., & Meyer, T. H. (2013). Evaluation of pansharpening algorithms in support of earth observation based rapid-mapping workflows. Applied Geography, 37, 63–87. doi:10.1016/j.apgeog.2012.10.008.

Yıldırım, D., & Güngör, O. (2012). A novel image fusion method using IKONOS satellite images. Journal of Geodesy and Geoinformation, 1(1), 75–83.

Yuhendra, Y., Alimuddin, I., Sumantyo, J. T. S., & Kuze, H. (2012). Assessment of pan-sharpening methods applied to image fusion of remotely sensed multi-band data. International Journal of Applied Earth Observation and Geoinformation, 18, 165–175. doi:10.1016/j.jag.2012.01.013.

Zeng, Y., Zhang, J., Van Genderen, J., & Zhang, Y. (2010). Image fusion for land cover change detection. International Journal of Image and Data Fusion, 1(2), 193–215.

Cite this article

Sanli, F.B., Abdikan, S., Esetlili, M.T. et al. Evaluation of image fusion methods using PALSAR, RADARSAT-1 and SPOT images for land use/ land cover classification. J Indian Soc Remote Sens 45, 591–601 (2017). https://doi.org/10.1007/s12524-016-0625-y

DOI: https://doi.org/10.1007/s12524-016-0625-y