Abstract

Emotional expressiveness in robots has attracted increased research interest, and development of this technology has progressed in recent years. A wide variety of methods employing facial expressions, speech, body movements, and colors to indicate emotional expression has been proposed. Although previous studies explored how emotional expressions are recognized and affect human behavior, cooperative and competitive human-robot relationships have not been well studied. In some cases, researchers have examined how cooperative relationships can be influenced by agents that exhibit detailed facial expressions on a screen. It remains unclear whether and how expressive whole-body movements of real humanoid robots influence cooperative decision-making. To explore this, we conducted an experiment in which participants played a finite iterated prisoner’s dilemma game with a small humanoid robot that exhibits multimodal emotional expressions through limb motions, LED lights, and speech. Results showed that participants were more cooperative when the humanoid robot showed emotional expression. This implies that real humanoid robots that lack the ability to show sophisticated facial expressions can form cooperative relationships with humans by using whole-body motion, colors, and speech.

1 Introduction

Recently, interest in robotic emotional expressions has increased, and significant advancements have been reported. Researchers have proposed a wide variety of methods for conveying emotional expressions [9], including facial expressions [4, 12, 27, 29, 37], speech [17, 31, 32, 44], body movement [8, 21, 26, 35, 51, 68,69,70], colors [26, 31, 32, 55, 57], as well as combinations of these modalities [66]. Implementing a display of emotion in robots could help increase their perceived friendliness and help them influence people without actually speaking to them [11, 12].

Research has shown that emotional expressions from robots can be intuitively recognized and categorized. Emotions have been successfully conveyed through the upper body movements of a human-like robot with 13 DOFs and a realistic human face [38], head movements and chest-mounted color LEDs on a robot with 10 DOFs in its upper body [21], acceleration and curvature in the movement of a mobile robot (Roomba) and an animal-like upper body robot (iCat) [47], the head positions of a full body humanoid robot (NAO) [5, 6], color changes displayed by an abstract shape robot [57], and a combination of vocal prosody and whole-body expression in a humanoid robot (Pepper) [59]. In addition to categorical recognition of emotions, studies have shown that robotic emotions affect human behavior in areas such as exercise advice [10], storytelling [54, 66], lecturing [70], teaching [34], emotional awareness and self-disclosure [66], and learning effectiveness [28].

Although non-humanoid robots are occasionally used to study robotic emotional expressions [47, 57], a majority of such studies are conducted using humanoid robots equipped with the multimodality to show emotional expressions. Salem et al. [48] investigated how humans evaluate a humanoid robot (ASIMO) exhibiting various gestures along with speech. They found that non-verbal behaviors led humans to evaluate the robot more positively. Costa et al. [16] used a humanoid robot (ZECA) to investigate how robotic facial expressions and gestures are conveyed to human participants. Aly and Tapus [2] developed a communication system for a humanoid robot (ALICE) by using gestures, speech, and facial expressions modified with emotions, and investigated the extent to which these multimodal emotional expressions were recognized by humans.

As mentioned above, although numerous studies have reported that emotional expressions are recognized as emotional categories and affect daily human behavior, few researchers have focused on the fundamental nature of robotic emotional expressions [18, 19, 56]. There are a few underlying theories of how emotional expressions shape behavior. Van Kleef’s emotions as social information (EASI) theory postulates that emotional expressions shape behavior and regulate social life by triggering a series of affective reactions and/or inferential processes in observers [33], especially in order to maintain a cooperative relationship [61, 63]. According to appraisal theories [15, 41, 53], emotional states arise as a result of evaluating the current situation to determine whether the actor’s goals or concerns are satisfied or obstructed. Then, the reaction in the observer occurs through a process of reverse appraisal [19, 67]. Joy, for example, signals that the current situation is evaluated as favorable and informs the observer that they should continue their current behavior, usually leading to a cooperative relationship [1, 13, 14, 25, 39, 42, 45, 49, 58]. On the other hand, expressions of anger signal that the current situation is evaluated as unfavorable and inform the observer that they should change their behavior [61], likely inducing concessions from a competitor [22, 46, 50, 52, 60, 62, 64, 65]. Based on the above background, de Melo et al. showed that the emotional expressions of an agent can increase cooperation in a prisoner’s dilemma [18, 19, 20]. Consistent with de Melo’s study, Terada et al. showed that emotional expressions in a simple line drawing of a robot’s face increased altruism among people playing the ultimatum game [56].

Although understanding the fundamental nature of human-robot relationships is important for establishing long-term cooperative relationships with robots, little is known about how cooperative decision-making by humans can be affected by emotional expressions of physically present robots. de Melo et al. [18, 19] used virtual agents’ faces that are able to show emotional expressions and confirmed that social decisions are affected by emotional expressions. However, it is still unclear whether movements of real humanoid robots that lack sophisticated facial expression mechanisms affect social decision-making. If the reverse appraisal theory is applicable to various types of robots and various behaviors, it is hypothesized that the non-facial emotional expressions shown by real humanoid robots also influence human social decision-making. Under the same motivation, Kayukawa et al. [30] conducted a study on how human cooperation is affected by emotional expressions of humanoid robots; their results indicated that emotional expressions of humanoid robots did not affect the cooperation rate. However, owing to the small number of participants in their study and the high variation in their instructions, their results remain inconclusive.

In the present study, similar to the study conducted by Kayukawa et al. [30], we examined how robotic emotional behavior conveyed through motion, eye color, and speech affects cooperative decision-making in the prisoner’s dilemma game. In particular, we invited a higher number of participants than those in the study by Kayukawa et al. [30] and, as a result, were able to more thoroughly analyze the influence on human decision-making; further, we reduced the variations in the instructions as much as possible by designing the robot to convey instructions by itself.

2 Method

2.1 Participants

The sample size was calculated using G*Power [23] with a medium effect size (\(\eta _p^2=0.05\)) based on previous studies [18, 61], an \(\alpha = .05\), and a statistical power of .95. The recommended total sample size was 52 participants. Eventually, 57 participants were recruited at the University of Fukui. All were students between 18 and 23 years old. Their average age was 19.2, and the standard deviation of their ages was approximately 1.08. Gender distribution was as follows: males, 57.2%; females, 42.8%. They volunteered to participate in the experiment as part of a class. They were not familiar with the prisoner’s dilemma game.
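
G*Power takes the effect size for ANOVA designs as Cohen's \(f\) rather than as \(\eta _p^2\). As a rough illustration only, assuming the standard conversion between the two (the text reports only \(\eta _p^2=0.05\) and does not state the \(f\) value that was entered), the corresponding effect size would be:

```latex
f = \sqrt{\frac{\eta_p^2}{1-\eta_p^2}} = \sqrt{\frac{0.05}{0.95}} \approx 0.23
```

This is close to the conventional "medium" value of \(f = 0.25\).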

2.2 Prisoner’s Dilemma Game Setting

We adopted the experimental design developed by de Melo et al. [20], with one important difference: they used a virtual agent, which showed emotional states via facial expressions displayed on a computer screen, while we employed a real full-body humanoid robot that uses body motion, speech, and colorfully illuminated eyes to show emotional states. We developed a system in which a human plays an iterated prisoner’s dilemma game with a small humanoid robot, in order to investigate how the emotional expression of the real humanoid robot influences the decision-making of the human participant [30].

Figure 1c shows the experimental setting. NAO, a humanoid robot, stands behind a cardboard partition such that the human participants cannot see the robot’s “cooperate” and “defect” buttons. Participants also have buttons allowing them to select “cooperate” or “defect.” The status of the game is displayed on the laptop screen.

Table 1a shows the payoff matrix for the prisoner’s dilemma game. If both players select cooperate, they each receive 5 points. If the robot selects cooperate and the participant selects defect, the robot receives only 3 points and the participant receives 7 points, and vice versa. If both select defect, they each receive 4 points. The point matrix is the same as that in the reference paper [20]. One game is composed of 25 rounds. Immediately after each round of the game, the humanoid robot shows an emotional expression indicating its reaction to the result of the round.
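
The payoff rules above can be written as a small lookup table. The following is a minimal sketch, not the software used in the experiment; the names `PAYOFFS` and `payoff` are hypothetical and chosen only for illustration.

```python
# Payoffs taken from the text.
# Key: (robot choice, participant choice) -> value: (robot points, participant points)
COOPERATE, DEFECT = "cooperate", "defect"

PAYOFFS = {
    (COOPERATE, COOPERATE): (5, 5),
    (COOPERATE, DEFECT): (3, 7),
    (DEFECT, COOPERATE): (7, 3),
    (DEFECT, DEFECT): (4, 4),
}


def payoff(robot_choice, participant_choice):
    """Return (robot points, participant points) for one round."""
    return PAYOFFS[(robot_choice, participant_choice)]


# Ordering of the four outcomes from one player's point of view:
# temptation (7) > mutual cooperation (5) > mutual defection (4) > being exploited (3)
temptation = payoff(COOPERATE, DEFECT)[1]
reward = payoff(COOPERATE, COOPERATE)[1]
punishment = payoff(DEFECT, DEFECT)[1]
sucker = payoff(DEFECT, COOPERATE)[1]
assert temptation > reward > punishment > sucker
```

Defecting always earns the individual more than cooperating in a single round, while mutual cooperation earns each player more than mutual defection, which is what makes the game a dilemma.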

Figure 1d shows the game monitor. The point matrix of the prisoner’s dilemma game is displayed on the top right, to remind the human participant of the points they can gain in each round. The game monitor shows the outcome of the previous round on the matrix; the total points earned by the humanoid robot and human participant are displayed at the bottom. The monitor also shows the number of the round and the countdown for the selection.

2.3 Robot’s Emotional Expressions

The multimodal emotional expression of the humanoid robot was developed specifically for the iterated prisoner’s dilemma game. The humanoid robot can express Joy, Anger, Shame, and Sadness using limb motions, colorful eye illumination, and speech.

The limb motions for each emotion were designed by hand. A designer moved the limbs of the humanoid robot by hand and the motion of the robot was recorded. The recorded motion is reproduced on the humanoid robot to express the emotion. Figure 1f shows the emotional expressions exhibited by the motion of the limbs during the game.

Terada et al. [57] showed that dynamic changes in color luminosity on the robot can be used for expression of emotions. We introduced their idea into our study and designed a technique for illuminating the eyes on the humanoid robot. Table 1g shows the luminosity patterns used in the humanoid robot eyes to express emotion. For example, when the robot shows Joy, the lights in the eyes of the robot flash yellow three times in 1.5 s.

The voice of a six-year-old girl was recorded for use as the voice of the humanoid robot. The humanoid robot utters speech according to the expressed emotion. For example, it says “Yattah!” (“Yes!” in Japanese) to express Joy, “Ah Mou” (“Come on!” in Japanese) to express Anger, “Gomen-nasai” (“I’m sorry” in Japanese) to express Shame, produces a deep sigh to express Sadness, and so on.

We conducted a pilot test to check how people recognize the emotional expressions of the humanoid robot. Ten participants, who were involved only in the pilot test, were recruited. We prepared a total of 12 emotional expressions: 3 different patterns for each of the 4 emotions. The experimenter showed the emotional expressions of the humanoid robot one by one in random order to the participants. After every expression of the robot, the experimenter asked the participants to identify the expression the robot showed by choosing from a list of options. The number of times they identified the emotion correctly was recorded. Most participants answered correctly, and all emotional expressions were recognized with almost equal accuracy. The average percentage of correct answers was 83.3%. It can be said that the robot was able to successfully convey its intended emotions to the participants through emotional expressions. We chose one of the emotional expressions for each emotion, namely Joy, Anger, Shame, Sadness (small), and Sadness (large), which are shown in Fig. 1f.

We conducted an experiment to validate that emotional expressions were being perceived as expected. See Supplemental Information (SI) for details. The results indicated that the emotions Joy, Anger, Sadness-small, and Sadness-large were perceived by the participants as we expected although the emotion Shame might have been perceived as Shame and Sadness. Since the emotion of shame often includes the emotion of sadness, our designed emotional expression could be effective for expressing shame.

We also measured the gender perceived from the robot’s voice in the validation experiment. See Supplemental Information (SI) for details. The result indicated that the robot’s voice did not convey clear gender information.

2.4 Emotional Expression Conditions

We prepared two emotional expression conditions for the robot. One condition causes the robot to behave cooperatively, while the other causes it to be competitive. When reacting to the selections made during the game, the cooperative robot shows different emotions than the competitive robot, while the robot follows exactly the same selection policy under both conditions. The emotional expression conditions of the cooperative and competitive robots are shown in Table 1b. These conditions were designed with reference to the paper [20].

When the robot behaves cooperatively, it expresses positive emotion when both the robot and the human participant select cooperate. When the human participant betrays the robot by selecting defect while the robot selects cooperate, it exhibits Anger. Conversely, the robot shows Shame when it betrays the human participant by selecting defect when the human participant selects cooperate. The robot shows Sadness if both select defect.

On the other hand, the competitive robot expresses Joy if it successfully betrays the human participant and earns a higher point total. If both select defect, the robot shows a small amount of Sadness (Sadness (small)), in which the robot just nods and sighs. When the robot selects cooperate and the human chooses defect, the robot shows a high degree of Sadness (Sadness (large)), in which the robot shakes its head from side to side and says “Ahhh”. If both select cooperate, the robot does not exhibit any emotional expression.
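
The two expression conditions amount to a mapping from round outcome to expression. The sketch below is one way to write that mapping down and is not the authors' implementation; the names `EXPRESSIONS` and `expression_for` are hypothetical. Two assumptions are made: the "positive emotion" shown by the cooperative robot after mutual cooperation is taken to be Joy, and a neutral pattern with no expressions (used in the procedure) is included for completeness.

```python
from typing import Optional

# Outcome keys are (robot choice, participant choice).
C, D = "cooperate", "defect"

EXPRESSIONS = {
    "cooperative": {
        (C, C): "Joy",      # assumption: the text says only "positive emotion"
        (C, D): "Anger",    # robot cooperated, participant defected
        (D, C): "Shame",    # robot defected, participant cooperated
        (D, D): "Sadness",  # the text does not say which Sadness variant
    },
    "competitive": {
        (D, C): "Joy",              # robot exploited the participant
        (D, D): "Sadness (small)",  # nod and sigh
        (C, D): "Sadness (large)",  # head shake and "Ahhh"
        # (C, C) is absent: no expression after mutual cooperation
    },
    "neutral": {},  # the neutral robot never shows an expression
}


def expression_for(condition: str, robot: str, participant: str) -> Optional[str]:
    """Return the expression label for a round outcome, or None if nothing is shown."""
    return EXPRESSIONS[condition].get((robot, participant))
```

Because the choice policy is identical across conditions, this table is the only thing that differs between the cooperative, competitive, and neutral robots.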

2.5 Procedure

The following instructions were provided to the human participants prior to the experiment. First, the experimenter guided the human participants into a room containing the humanoid robot and a computer display. The experimenter then introduced the humanoid robot, and instructed the human participants to listen to the robot. The robot started to explain the prisoner’s dilemma game; additional instructions were also shown on the computer display. The spoken instructions were recorded prior to the experiment and automatically replayed on the robot. The content on the computer display was controlled by the experimenter, who was positioned behind the participants. The robot emphasized the idea of the prisoner’s dilemma so that the human participants clearly understood the game. It also informed them that the objective of the game is obtaining as many total points as possible, but that winning or losing the game is not the objective of this experiment.

After the participants had listened to the instructions from the robot, the experimenter clarified that the experiment had been reviewed and approved by the ethics committee of our organization. The experimenter then asked each human participant to sign a consent form agreeing to participate in the experiment.

The experimenter then took each human participant to another room where another robot was on standby, as described in Sect. 2.2. The experimenter explained the purpose of the selection buttons used by the human participant and humanoid robot, and explained that the partition prevents the human participant from watching the selections of the robot. The experimenter also explained how the game monitor works during the game. Before the experiment started, the participants reconfirmed their agreement to participate in the experiment and the experimenter left the room.

The human participant started to play the game with the robot after the reconfirmation. The first round began with a 10-second countdown. If the human participant pushed one of the buttons before the countdown reached zero, the outcome of the round was displayed on the monitor, and the humanoid robot exhibited an emotional expression according to the outcome. Then, the countdown for the next round began at 5 s. If the human participant failed to push a button before the countdown reached zero, the round restarted from the beginning while the total outcomes of the rounds remained the same.

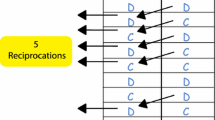

Figure 1e shows the selection strategy of the robot, which was adopted from [20]. During the first five rounds, the robot followed a fixed selection sequence: cooperate, cooperate, defect, defect, and cooperate. After this five-round fixed sequence, from the 6th to the 25th (last) round, the robot followed a tit-for-tat strategy, i.e., the robot repeated the selection of the human participant from the previous round. The fixed selection sequence in the first five rounds was intended to show the emotional expressions of the robot to the human participant. The selection strategy of the robot was not disclosed to the human participant.
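
The selection strategy described above is simple enough to state as a function. This is a minimal sketch under the assumption that rounds are numbered from 1 and that the participant's earlier choices are available as a list; `FIXED_OPENING` and `robot_choice` are hypothetical names, not identifiers from the experimental system.

```python
# Fixed opening sequence for rounds 1 to 5, as listed in the text.
FIXED_OPENING = ["cooperate", "cooperate", "defect", "defect", "cooperate"]


def robot_choice(round_number, participant_history):
    """Return the robot's choice for a round.

    round_number is 1-indexed; participant_history holds the participant's
    choices in rounds 1 .. round_number - 1, in order.
    """
    if round_number <= len(FIXED_OPENING):
        return FIXED_OPENING[round_number - 1]
    # Tit-for-tat: copy what the participant chose in the previous round.
    return participant_history[round_number - 2]


# Example: the participant defected in round 5, so the robot defects in round 6.
history = ["cooperate", "cooperate", "cooperate", "cooperate", "defect"]
print(robot_choice(6, history))
```

Note that the robot's choice in round 6 already depends on the participant's choice in round 5, even though round 5 itself belongs to the fixed opening.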

The human participant played three games in total; in each game, the humanoid robot exhibited a different emotional expression pattern, i.e., cooperative robot, competitive robot, or neutral robot. The neutral robot did not show any emotional expression while playing the game. The order of the emotional expression patterns was randomized between participants to avoid order effects. To reduce the possibility that participants considered the robots to be identical, the experimenter prepared two rooms: one for the interaction experiment and the other for answering the questionnaire. The participants left the room and completed a questionnaire survey in the other room immediately after each game. While the participants were completing the questionnaire survey, the experimenter changed the clothes of the robot. The clothing was blue, red, or none (the robot wore no clothes) and was changed randomly to counterbalance any effect of clothing color.

2.6 Measure

Participant impressions of the humanoid robot were recorded. Nine seven-point semantic differential scales were used:

- Grown-up(1)–childish(7)
- Cooperative(1)–competitive(7)
- Emotional(1)–mechanical(7)
- Interesting(1)–boring(7)
- Friendly(1)–estranged(7)
- Natural(1)–awkward(7)
- Clever(1)–foolish(7)
- Complex(1)–simple(7)
- Cheerful(1)–gloomy(7)

2.7 Analysis

The cooperation rates during 20 rounds of game play were calculated for each participant and subjected to analysis. To calculate the cooperation rates, we excluded the first five rounds because those rounds were intended to help human participants familiarize themselves with the emotional expressions of the robot. The normality and sphericity of the cooperation rates were checked using a Shapiro-Wilk test and a Mauchly test, respectively, prior to undertaking parametric tests. In cases in which the sphericity assumption was violated, the degrees of freedom were corrected using Greenhouse-Geisser estimates of sphericity. If the Greenhouse-Geisser \(\epsilon \) was not close to zero, a one-way analysis of variance (ANOVA) was used. Gender and the nine items in the questionnaire were used in a stepwise multiple regression analysis to predict the cooperation rate. Gender was scored as \(0 =\) female and \(1 =\) male. All statements of significance were based on the probability of \(P \le 0.05\).
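
As an illustration of how the dependent measure could be computed from one game, the sketch below drops the first five rounds and counts cooperative choices in the remaining 20. It is an assumption-laden example rather than the analysis script: `cooperation_score` is a hypothetical name, and both the count and the proportion are returned because the means reported in the Results (roughly 9 to 11) look like counts out of 20 rather than proportions.

```python
def cooperation_score(participant_choices, skipped_rounds=5):
    """Return (count, rate) of cooperative choices after the skipped rounds.

    participant_choices is the participant's full 25-round sequence of
    "cooperate" / "defect" strings for one game.
    """
    analysed = participant_choices[skipped_rounds:]
    count = sum(choice == "cooperate" for choice in analysed)
    return count, count / len(analysed)


# Example game: 5 opening rounds, then 10 cooperative and 10 defecting choices.
game = ["defect"] * 5 + ["cooperate"] * 10 + ["defect"] * 10
count, rate = cooperation_score(game)
print(count, rate)
```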

3 Results

According to the normality test (Shapiro–Wilk), the assumption of normality was adopted for cooperative (\(p=0.163\)), competitive (\(p=0.214\)), and neutral (\(p=0.389\)) conditions. Mauchly’s tests indicated that the assumption of sphericity was violated (\(\chi ^2(2) = 7.132\), \(p=0.028\)). Therefore, degrees of freedom were corrected using Greenhouse-Geisser estimates of sphericity (\(\epsilon =0.892\)). Figure 2a shows the average cooperation rate of the participants. A one-way ANOVA revealed that the cooperation rate was affected by emotional expressions, \((F(1.78,99.86) = 8.28, p < 0.01, \eta _p^2 = 0.13)\), and Bonferroni post-hoc tests showed that participants cooperated more when the robot behaved cooperatively (\(M=11.49, SD=4.66\)) than when it behaved competitively (\(M=9.26, SD=4.18\), \(p<0.01\)) or neutrally (\(M=9.25, SD=4.52\), \(p<0.01\)).
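
The fractional degrees of freedom in the ANOVA follow from the Greenhouse-Geisser correction, which multiplies both the effect and the error degrees of freedom by \(\epsilon\). The sketch below reproduces that arithmetic for 57 participants and 3 conditions; `gg_corrected_df` is a hypothetical helper name, and the design is assumed to be a one-way within-subjects ANOVA.

```python
def gg_corrected_df(n_participants, n_conditions, epsilon):
    """Return Greenhouse-Geisser corrected (effect df, error df)."""
    df_effect = n_conditions - 1
    df_error = (n_participants - 1) * (n_conditions - 1)
    return epsilon * df_effect, epsilon * df_error


df_effect, df_error = gg_corrected_df(57, 3, 0.892)
print(round(df_effect, 2), round(df_error, 2))
```

With \(\epsilon = 0.892\) this gives approximately 1.78 and 99.90. The small gap from the reported error degrees of freedom (99.86) is presumably because \(\epsilon\) is printed to only three decimals.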

Table 2b shows means and standard deviations for ratings for semantic differential items. Table 2c shows results of a stepwise multiple regression analysis. The final prediction model contained four predictors (“cooperative-competitive,” gender, “friendly-estranged,” and “grown-up-childish”) and was reached in four steps with six variables removed. The model was statistically significant (\(F (4, 167) = 12.912\), \(p<0.001\)), and accounted for 23.7% of the variance in cooperation rate (\(R^2 = 0.237\)). The results indicate that participants were most cooperative when they perceived the robot as cooperative, friendly, and grown-up, and female participants were more cooperative than male participants.

As a manipulation check, we compared the degree to which participants perceived the robot as cooperative, measured by the SD scale, between conditions. A one-way ANOVA revealed that the impression of cooperative-competitive was affected by emotional expressions, \((F(2, 112) = 15.36, p < 0.001, \eta _p^2 =0.215)\), and Bonferroni post-hoc tests showed that participants perceived the robot as less competitive when it behaved cooperatively than when it behaved competitively (\(p<0.001\)) or neutrally (\(p<0.001\)), indicating that cooperative expression was perceived as more cooperative than competitive and neutral expression, while competitive expression might have been perceived as neutral. Furthermore, one-sample t tests (two-tailed) revealed that whereas cooperative emotion was perceived as more cooperative in comparison with the rating of 4 for ‘neither’ (\(t(56) = -5.20, p<0.001\)), competitive emotion was not perceived as competitive (\(t(56) = 1.104, p=0.30\)). Neutral emotion was perceived as neither cooperative nor competitive (\(t(56) = -0.29, p=0.77\)).

4 Discussion

Results showed that cooperation rate in the cooperative condition was higher than those of competitive and neutral conditions, suggesting that cooperative emotional expression by the robot can elicit more cooperation from participants, as compared to competitive emotional expression or no emotional expression. This indicates that robots expressing cooperative emotions by non-facial modalities might make people choose cooperate more than defect.

The significant difference between cooperative and neutral conditions indicates that communication between humans and robots can be controlled by designing a robot that expresses more cooperative emotions. This result is consistent with an experiment by Salem et al. [48] in which the gestures of a humanoid robot led humans to evaluate the robot more positively. It is also consistent with the experimental results of Terada and Takeuchi [56] in which the emotional expression in simple line drawings of a robot’s face elicited altruistic behavior from humans. No difference in cooperation rate between competitive and neutral conditions was observed. This may be because the competitive expression was perceived as neutral rather than competitive, as indicated by the results of the manipulation check.

The results reported by de Melo et al. [20] indicated a significant difference between cooperative and competitive behavioral conditions, and our experiment showed similar results. An agent on a computer screen is able to express a wide range of facial emotions; in contrast, facial expressions on a general humanoid robot are usually limited. Nevertheless, our experiment with NAO, a commercially available humanoid robot, showed the effect of emotional behavior generated by motion, eye color, and voice. The relatively high participant cooperation rate achieved in our study is consistent with findings of conventional studies.

When the robot behaved cooperatively but was betrayed by the human participant, the resulting anger exhibited by the robot elicited cooperation from the human; this result agrees with findings of conventional studies [22, 46, 50, 52, 60, 62, 64, 65] showing that an angry person obtains concessions from a competitor in a conflict situation. The positive emotion expressed by the robot when it was cooperative, and both the human participant and robot chose to cooperate, also elicited cooperation from the human; numerous studies [1, 13, 14, 25, 39, 42, 45, 49, 58] suggest that positive emotions have evolved to maintain cooperative relationships.

Bell et al. [7] conducted experiments on sequential prisoner’s dilemma games and found that participants cooperate more with smiling opponents than with angry-looking opponents. They suggested that a smiling facial expression helps to construe the situation as cooperative rather than competitive. The regression result is consistent with previous studies in the sense that the coefficient of the “cooperative–competitive” factor was negative, which indicated that the participants cooperated more if the robot appeared to be cooperative.

Our results indicate that female participants were more likely to cooperate than male ones, which is partially consistent with previous studies. Ortmann and Tichy [43] found no difference between the cooperation rates of males and females when the games were repeated, even though females cooperated more than males in the first round. Balliet et al. [3] reviewed studies on the iterated prisoner’s dilemma and found differences relating to the types of dilemmas. They concluded that there were no meaningful gender differences in cooperation in general; however, they also found that females were marginally more cooperative than males in the prisoner’s dilemma. On the other hand, our regression results indicate that the gender of the participant had an influence on the cooperation rate in our experiment. This discrepancy might be due to differences in experimental conditions. Whereas previous studies designated male and female participants as opponents, our study used a humanoid robot that does not convey its gender clearly.

In experiments, Majolo et al. [36] showed that humans cooperate more with friends than with strangers. Mienaltowski and Wichman [40] also concluded that participants cooperated more with friends than with strangers. Our analysis results are partially consistent with their studies because the coefficient of the “friendly-estranged” term is negative, indicating that friendliness is a factor that increases the cooperation rate. Of course, it is fair to point out that being a friend or a stranger is not the same as being friendly or estranged; however, it is not difficult to imagine that friends are friendly to each other and that strangers are more or less estranged in their experiments.

Our results suggest that the perceived maturity of the robot (grown-up or childish) affected the cooperative tendency of the participants. In particular, participants who rated the robot as more grown-up cooperated more than those who rated the robot as childish. Regarding the relationship between age and cooperation, Fehr et al. [24] showed that young children at age 3–4 behave selfishly whereas children at age 7–8 prefer egalitarian choices. Mienaltowski and Wichman [40] reported that older adults cooperated more than younger adults during an iterated prisoner’s dilemma game. These studies imply that as people become more mature, they become more cooperative. Therefore, participants who attributed the grown-up property to the robot might have expected cooperative decisions from the robot, and as a result, they might have tended to cooperate with the robot. Further study to confirm this prediction is needed.

The conclusion of this study is that multimodal emotional expression by humanoid robots, as expressed through limb motions, illuminated eyes, and speech, may aid the formation of cooperative relationships during a game. Although the conclusions of this study could be predicted from previous studies, our study suggests how decision-making by humans during the repeated prisoner’s dilemma game can be affected by emotional expressions of physically present robots. In particular, our study suggests design principles for robots that elicit human cooperation: it may be useful for robots not only to express cooperative emotions but also to give the impression that they are friendly and grown-up.

Our findings have some limitations that suggest directions for future work. First, there is a possibility that participants considered the robots to be identical. To reduce this possibility, the experimenter prepared two rooms, one for the interaction experiment and the other for answering the questionnaire, and randomly changed the clothes of the robot for each condition. However, this was not enough to eliminate the possibility that participants considered the robots to be identical. To avoid it, a between-subjects design would be preferable to a within-subjects design for the interaction experiment.

Our study utilized a single type of small humanoid robot to investigate how emotional expressions affect the strategy of humans. It is possible that the appearance of the robot has an impact on people’s emotional responses. Subsequent research will be conducted with other types of robots.

Personality traits were not considered in our study. There is a possibility that the game strategy of the participants is related to their personality. Investigating the personality of the participants and its relationship to their game strategy is left for future work. Moreover, there is a possibility that the participants’ knowledge about robots or robotics affects their strategy in the game. Investigating the relationship between this knowledge and the strategy of the participants is also left for future work. Another limitation of our study is that the experiment was conducted in a controlled environment. Extending the experiment to a non-laboratory environment is a further direction for future work.

Acknowledgements

We would like to thank Dr. Junko Yoshida and Dr. Norie Takaku from University of Fukui for their efforts in recruiting participants.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Human Rights

This experiment was reviewed and approved by the ethics committee for human participants, Department of Human and Artificial Intelligent Systems, Graduate School of Engineering, University of Fukui, No. H2016002 and H2018001.

Cite this article

Takahashi, Y., Kayukawa, Y., Terada, K. et al. Emotional Expressions of Real Humanoid Robots and Their Influence on Human Decision-Making in a Finite Iterated Prisoner’s Dilemma Game. Int J of Soc Robotics 13, 1777–1786 (2021). https://doi.org/10.1007/s12369-021-00758-w

DOI: https://doi.org/10.1007/s12369-021-00758-w