Abstract

The four-dimensional model of empathy presented in this paper addresses human–human, human–avatar and human–robot interaction, and aims at a better understanding of the specificities of the empathy that humans might develop towards robots. Its first dimension is auto-empathy, which refers to an empathetic relationship with oneself: how can a human directing a robot extend to this robot the various components of the empathy he feels for himself? The second is direct empathy: what does a human attribute to a robot in terms of thoughts, emotions, action potentials or even altruism, on the model of what he imagines and attributes to himself? The third dimension is reciprocal empathy, which consists of thinking that a robot is able to identify with me, feel or guess my emotions and thoughts, anticipate my actions and bring me assistance if necessary. Finally, the fourth dimension, intersubjective empathy, is about thinking and imagining that a robot can inform me of things (emotions or thoughts that I am likely to experience) that I do not know about myself. Each of these four dimensions includes four components: (1) Action (empathy of action), (2) Emotion (emotional empathy), (3) Cognition (cognitive empathy) and (4) Assistance (empathy of assistance). This theoretical model of empathy in four dimensions and four components defines sixteen items whose relevance will be tested in the near future through comparative experimental research involving human–human and human–robot interaction.


1 Preliminary

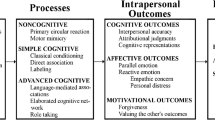

In future research, we propose to confirm or refute, through a series of experiments, a four-dimensional model of empathy applied to human-robot interaction. As a first step, we are working to validate a Questionnaire on Empathy and Auto-empathy, the QEAE (Tisseron & Tordo). This questionnaire is based on our first model of empathy [1–4], empathy being understood here in a broad sense. The model has four dimensions, each of which includes four components. The first dimension, auto-empathy, is an empathetic relationship with oneself. The second dimension, direct empathy, allows us to know the other while remaining aware of how we differ from him/her. The third dimension, reciprocal empathy, adds the principle of a possible reciprocity to the representation of the other's inner world. Finally, the fourth dimension of empathy corresponds to what is commonly called intersubjectivity, and consists in recognizing that the other can enlighten us on aspects of ourselves that we do not know. Each of these four dimensions of empathy consists of four components: action (empathy of action); emotion (emotional empathy); cognition (cognitive empathy); assistance (empathy of assistance).

This questionnaire was designed to be adaptable to measuring empathy during human interaction with robots or avatars. The validation process is scheduled to be completed by July 2014. In a second step, the questionnaire will be used in two experimental contexts. The first is a study of empathic relationships with robots and avatars, from a psychological point of view, in a non-clinical population, coordinated by Tisseron and Tordo. The second is part of a broader partnership with various academic, public and industrial partners from the field of robotics in France and in Japan. This interdisciplinary study will investigate the interaction between elderly people and a humanoid robot, and will assess the possible impact of this interaction on the maintenance or rehabilitation of mental and motor functions, as well as of emotional and social skills. In this context, we will use the QEAE to study the empathy of humans interacting with a robot, in order to determine whether the robot can be considered an operational and efficient assistant in the mental and motor training and treatment of the elderly.

2 Empathy: A Model in Four Dimensions

This paper presents our four-dimensional model of empathy in relation to human-human and human-avatar interactions. We then extend this sixteen-item model to robots, addressing some of the related questions and hypotheses that arise from its possible applications to human-robot interaction.

2.1 Auto-empathy

Auto-empathy is the first dimension of our four-dimensional model of empathy. It is an empathetic relationship with oneself in which one forms representations of one's own subjective states (actions, emotions and thoughts) [4]. It is not about how one perceives or acknowledges the subjectivity of another individual, as is the case in empathy with others, but about how one appropriates one's own subjectivity and coincides with it. When we started working on empathy in human-intelligent machine interaction, we assumed that auto-empathy could be mediated by animated objects, and we investigated auto-empathy mediated by an avatar [3, 5]. This avatar was considered as a pixel figure representing the human in the digital world: in that sense, auto-empathy is here an empathic relationship to a part of ourselves that has been projected onto our avatar. It thus allows us to put ourselves in the place of a figure (the avatar) that represents us, so that our attention and our empathy for this figure are indirectly directed towards ourselves.

Two characteristics seem essential to achieve this. The first is the projection of one's own subjective states onto this object. The second is the mediation of the human's potential for motion through the avatar [6], so that one truly becomes a spectator of one's own actions when directing it [7]. This object can therefore become a possible technological version of the human image reflected in a mirror [8].

In relation to the robot, we hypothesize that auto-empathy is not mediated, or only incompletely. As with the avatar, the human will be able to project a part of himself onto the robot. However, unlike with the avatar, we are not spectators of our own actions in the relationship with the robot. We do not direct or manipulate its behavior, since it follows its own program and has a certain degree of autonomy. The robot favors and supports the distinction between what we imagine of our own actions and what we imagine of its actions. In fact, the relationship with a robot would be more comparable to the one we have with an avatar controlled by another person (user or player) whom we have never met in real life: we imagine that it is a bit like ourselves, at least through the emotions it is supposed to express and feel and through the behaviors it shows. But since we are not spectators of our own actions with it (the robot's actions are distinct from ours), we would rather describe what we experience as auto-empathic empathy [2] or direct empathy [1]. As we will see, we find in the robot what we expect, which is modeled on what we know and imagine of ourselves, without losing the distinction between ourselves and the robot.

2.2 Direct Empathy

In the case of direct empathy, the relationship with an avatar prefigures the form of empathetic relationship that we have with a robot. In fact, the avatar, responsible for representing us in virtual spaces, can have a double polarity. On the one hand, it embodies us, that is to say that we direct it and act through it, thus binding to it our own subjectivity and our own actions. This is the dimension of mediated auto-empathy.

But on the other hand, the avatar is in a position to be “another person than ourselves” and not “another of ourselves”. A young woman who was a regular Second Life user reported feeling the tingling of the bubbles on her own skin when she plunged her avatar into a virtual Jacuzzi [4]. We may presume that the tingling she felt was genuinely in the range of a physical sensation, but this sensation was not as direct as the one experienced when plunging into a real Jacuzzi. It was indirect, and comparable to what a mother feels when she bathes her baby and is moved to see him enjoying his bath or, conversely, when she sees him fall or bump himself and aches for him. In other words, this feeling belongs to a primary form of empathy in which I imagine experiencing the same thing that I see the other feeling [1].

The same kind of relationship would be observed with a robot: we project onto a robot a part of our psychological and subjective life, letting ourselves be guided by the robot's reactions and by the memory traces of similar relationships that we have experienced before. One imagines feeling the same as what the robot feels. In certain contexts, a person could also imagine the robot as being a bit like his/her own child [9]. For Ishiguro [9], an elderly person who is cared for by a robot designed to help and assist her welcomes it as her own child. The aim is to avoid at all costs the robot appearing as a threatening force. One might think that this is simply a way of designing robots that responds to our own way of being in direct empathy with them, as we would indeed be with a child. To create empathy, H. Ishiguro thus imagines that the robot is to be controlled and educated by the smile of its owner, an owner who becomes or behaves as the “mother” of the robot s/he hosts.

2.3 Reciprocal Empathy and Intersubjective Empathy

In [1], we proposed to expand the meaning of the word “empathy” in order to bring in other dimensions. The ability to identify with someone from the perspective of what s/he feels would be the first stage of a three-stage process that could be represented as a pyramid. This pyramid consists of three superimposed stages corresponding to increasingly rich relationships, shared with an increasingly small number of people. Some authors [10] have proposed designating these dimensions by the word “compassion”, understood no longer in the sense of the Christian tradition but in the meaning it has in the Buddhist tradition, in which human beings are considered to be in permanent suffering and in perpetual search for a happiness that is impossible to reach. The word “compassion” here refers not to mercy or pity but to humanity and sensitivity, and covers most of the phenomena that we have gathered here under the word “empathy”.

Reciprocal empathy consists in recognizing that the other can identify with me, whereas intersubjective empathy means accepting that the other can give me information about things that I may not know about myself. Although technology is still far from making this possible, these abilities might nonetheless be present in the relationships that humans have with robots. Indeed, some studies [11–13] have shown that humans tend to draw inferences about a robot's abilities and personality that go beyond its observable actions. A robot cannot feel emotions, but it can simulate expressions of emotion through its facial expressions and its attitudes. These abilities probably support the human experience of mutual empathy during human-robot interaction. As shown in various studies, including [14], humans tend to attribute emotions to the robot, to have feelings for it, or to think that it has emotions towards them. Some recent studies [15, 16] focus on supporting this emotional dimension of the interaction by endowing the robot with empathic capabilities, enabling it to recognize the affective state and the personal preferences (in relation to a particular task) of its human partner and to adapt its response accordingly.

3 The Four Components of the Four-dimensional Model of Empathy with Robots

In the following, we present the four components that are at work in each of the four dimensions of empathy, and that we aim to explore experimentally in future research: action, emotion, cognition and assistance.
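As a purely illustrative sketch, the sixteen items of the model can be read as the cells of a 4 × 4 grid obtained by crossing the four dimensions with the four components. The Python snippet below only enumerates these cells; the identifiers (`DIMENSIONS`, `COMPONENTS`, `model_items`) are hypothetical labels introduced here for illustration, and they do not reproduce the wording or the scoring of the QEAE items, which are not given in this paper.

```python
from itertools import product

# Hypothetical labels for the four dimensions and four components of the model,
# listed in the order used in the text. They are not the QEAE item wording.
DIMENSIONS = (
    "auto-empathy",
    "direct empathy",
    "reciprocal empathy",
    "intersubjective empathy",
)
COMPONENTS = ("action", "emotion", "cognition", "assistance")


def model_items():
    """Return every (dimension, component) cell of the 4 x 4 model, dimension-major."""
    return list(product(DIMENSIONS, COMPONENTS))


items = model_items()
for number, (dimension, component) in enumerate(items, start=1):
    print(f"{number:2d}. {dimension}: {component}")
```

Listing the cells dimension by dimension simply mirrors the order in which the dimensions are introduced above; an actual questionnaire may order or group its items differently.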

3.1 Empathy of Action

The first component of the four-dimensional model of empathy is the empathy of action. It consists of putting oneself in the place of another person’s actions, in order to understand them or to reproduce them, in the context of representations of intentions of shared actions [17]. This mimo-gestural “echoïsation” [18] is often associated with imitation, imitation being considered here not only as a reproduction of the movements of the other, but also as an interpersonal function going beyond the acquisition of motor skills. In other words, imitation has a function of social communication [19].

The action component of the relationship with a robot has been extensively studied in robotics. For instance, studies on developing the imitative abilities of robots can make an important contribution to clinical psychology and psychiatry. In the field of autism, for example, imitation can be a good way to partially restore social communication [20], not only to re-establish the connection to others in autistic children, but also to increase their repertoire of actions [21]. Indeed, with regard to aspects such as movement patterns and the predictability of gestures and facial expressions, the robot might seem less worrying to an autistic person than a human.

3.2 Emotional Empathy

The second component is emotional empathy, which allows humans to be affected by the emotions of other humans. Emotional empathy helps to identify one's own emotions and those felt by an interlocutor, and thus to respond appropriately. It would be a kind of emotional reproduction, in the sense that an emotion triggered in oneself would be similar or identical to the one perceived in the other [22]. Studies such as [23] focus on this component of empathy in human-robot interaction. In this study, the brain activation of fourteen participants was assessed using functional magnetic resonance imaging (fMRI). The participants were shown a film in which they saw either a human being pampered or abused by another human, or a dinosaur-shaped robot being pampered or abused by a human. The first results indicate that in both cases, the same neural activation patterns are elicited in emotion-related brain regions.

Nevertheless, emotional responses are more strongly activated when a human is mistreated. These results, like many other results of studies conducted in the field of human-robot interaction, suggest that humans tend to experience emotional empathy with robots. The question that remains, however, concerns the nature of such empathy. Furthermore, is emotional empathy activated in all four dimensions of empathy? For example, are we able to accept that the robot feels what we feel (reciprocal emotional empathy)? Are we likely to accept that it leads us towards emotions that we do not know (intersubjective emotional empathy)? The model we are currently developing will soon attempt to answer these questions.

3.3 Cognitive Empathy

The cognitive component of empathy allows us to form a representation of the mental contents of our interlocutors. It is part of a theory of mind: an active operation in which the subject tries to access the awareness of others, and where processes of cognitive perspective taking are used to imagine or mentally transpose oneself into the place of another [24], that is to say, into the place of his/her cognitions. In the field of human-robot interaction, research such as [25] has shown that the brain regions (temporoparietal junction, medial frontal cortex) involved in the ability to attribute thoughts to others (theory of mind) are also activated when we interact with a technological object or a robot. These regions would be all the more activated as these objects have physical characteristics similar to human ones [25]. Thus, higher cognitive functions are indeed involved in human-robot interaction. But if we tend to attribute thoughts to robots, will robots reciprocally be able to attribute thoughts to us? And if so, will we be able to accept that robots anticipate our actions and get a more or less precise perspective of what we think?

Some roboticists are seeking to develop robots that can read our minds based on the analysis and recognition of emotion [16] or motion [26]. Koppula and Saxena [26] have enabled a domestic robot, the PR2, to analyze human motion, draw on its own database of domestic activities and then decide which human action will probably follow.

3.4 Empathy of Assistance

Finally, in order for humans to be assisted by a robot, they first need to accept this assistance. This is why it was particularly important to introduce the empathy of assistance into our model of empathy. This component of empathy concerns the will to assist, help and possibly protect the other. We noted above that many studies tend to show expressions of human empathy, mostly in its emotional dimension, in relationships with robots. It is known that in war zones and combat situations, some soldiers tend to become emotionally attached to their robotic aid and to show a kind of empathy towards it [27–29]. Some are even ready to risk their own lives or to endanger their comrades' lives to help a robot [27–29]. But this situation is very specific: a soldier who thinks that a robot has saved him will be inclined to try to save a threatened robot, even at the peril of his own life, since mutual assistance is part of the logic of military action.

It is crucial, however, to differentiate between civil and military robots, civil robots being our main focus here. In a collaborative situation or a joint task, it is one thing to recognize that the robot needs our help, another to accept that it helps us, and yet another to actually help it if required. In [30], the authors have shown that humans will probably not react to robots exactly as they would to other humans. Nevertheless, recent studies such as [15] suggest that adapting the emotional state of a robot to the mood of the human user has a significant effect on inducing human empathy towards the robot and on increasing helpfulness towards it. In interactions involving children, the authors of [31] observed that adult instructions about a robot are highly likely to affect children's perceptions of it and their helping behaviors towards it: children tend to help a robot after experiencing a positive introduction to it. When this positive introduction was combined with permission to help it, the majority of the children (70 %) tended to help the robot complete the task. Comparing the levels of each of the four dimensions of empathy in specific experimental designs of human-robot interaction, especially with regard to the empathy of assistance, would probably help to clarify results such as those presented in [15, 30, 31], as well as to distinguish the specificities of the empathy experienced during human-human interaction from those of the empathy experienced during human-robot interaction.

Empathy of assistance seems to be a major factor to consider in future research on empathy with robots, insofar as one of the most important objectives in robotics today is to develop robot companions that can establish long-term relationships with humans and assist them on a daily basis in situations of loss of autonomy or disability. Given that some people with disabilities find it difficult to accept help from another human, it is legitimate and interesting to consider how they would respond to a robot designed to aid them. No robot-assisted therapy can come into being if we do not accept help from an assistant or a companion that is also a machine. Empathy of assistance is thus a very important component to consider in research on human-robot interaction, since it could largely determine the success or failure of robot-assisted treatment programs.

4 Conclusion

We have tried to show the importance of a model of empathy extended to four dimensions (auto-empathy, direct empathy, reciprocal empathy and intersubjective empathy) and to four components (action, emotion, cognition and assistance). According to our hypotheses, this extended model of empathy could cover all aspects of the empathic relationship with robots. The QEAE, Questionnaire of Empathy and Auto-empathy (Tisseron and Tordo), will enable us to measure these factors during human-human, human-agent and human-robot interactions, thus improving our understanding of the dimensions of the empathy that humans display towards their robot partners. Using the QEAE will also enable us to progressively identify which aspects of the interactional context, of the robot's features and of the human partner support the experience of one or more items of the sixteen-item model of empathy.

An interesting direction to explore would be to investigate possible dependencies between the manifestations of two or three different dimensions of empathy. For example, in [32], the authors have shown that understanding the robot's actions and making sense of the interaction with it, on the one hand, and perceiving this interaction as familiar, on the other hand, are both strongly associated with the adequacy and efficiency of the human response to the robot. The research presented in [32] also revealed that the more the participants made sense of the robot's actions and found it easy to react to, the more intense their motion was when responding to the robot's goodbye greeting. In [35], the intensity of the humans' motion in response to the robot's goodbye gestures was shown to be positively correlated with their appreciation of its sociability. Evaluating possible correlations between emotional empathy, empathy of action and cognitive empathy would probably give a more comprehensive understanding and interpretation of such results.

Most studies, such as those described in [32–34], agree that further research is needed to better determine which dimensions and degrees of likeability and similarity between humans and robots are required to foster more empathic and intuitive human-robot interactions. Additionally, some recent studies [15, 16, 36] have shown that the robot's ability to display empathic behavior and induce empathy had an impact on how its human partners perceived it in terms of behavior, social presence, engagement and ease of interaction. Thus, the development of this sixteen-item model of empathy, and its future experimental investigation, can make a significant contribution to better understanding and sustaining human acceptance of assistive companion robots on a daily basis.

References

Tisseron S (2010) L’empathie au cœur du jeu social. Albin Michel, Paris

Tordo F, Tisseron S (2013) Les diverses formes de l’empathie dans le jeu vidéo en ligne : propositions et expérimentation. In: Tisseron S (ed) Empathie et subjectivation dans les mondes numériques. Dunod, Paris, pp 83–110

Tordo F (2013) Le jeu vidéo, un espace de subjectivation par l’action. L’auto-empathie médiatisée par l’action virtuelle. Revue québécoise de psychol 34(2):245–263

Tordo F, Binkley C (2016) L’Auto-empathie, ou le devenir de l’autrui-en-soi : définition et clinique du virtuel. Evol Psychiatr 81(1):1–16

Tordo F (2012) Psychanalyse de l’action dans le jeu vidéo. Adolescence 79(1):119–132

Virole B (2003) Du bon usage des jeux vidéo, et autres aventures virtuelles. Hachette littératures, Paris

Tisseron S (2008) Le corps et les écrans. Toute image est portée par le désir d’une hallucination qui devienne réelle. Champ Psychosom 52(04):47–57

Tisseron S (ed) (2006) L’enfant au risque du virtuel. Dunod, Paris

Ishiguro H (2006) Interactive humanoids and androids as ideal interfaces for humans. In: Proc 11th Int Conf on Intelligent user interfaces, IUI ’06. New York, NY, USA, pp 2–9

Varela FJ, Thompson E, Rosch E (1991) The embodied mind: cognitive science and human experience. MIT Press, Cambridge

Powers A, Kiesler S (2006) The advisor robot: Tracing people’s mental model from a robot’s physical attributes. In: Proc Conf. Human-Robot Interaction, pp 218–225

Powers A, Kramer A, Lim S, Kuo J, Lee S, Kiesler S (2005) Eliciting information from people with a gendered humanoid robot. In: Proc IEEE Int. Workshop Robot and Human Interactive Communication, pp 158–163

Eyssel F, Hegel F, Horstmann G, Wagner C (2010) Anthropomorphic inferences from emotional nonverbal cues: a case study. In: Proc IEEE Int. Symp. Robot and Human Interactive Communication, pp 681–686

Turkle S (2006) A nascent robotics culture: new complicities for companionship. AAAI Technical Report Series

Kühnlenz B, Sosnowski S, Buß M, Wollherr D, Kühnlenz K, Buss M (2013) Increasing helpfulness towards a robot by emotional adaptation to the user. Int J Soc Robot 5:457–476

Leite I, Castellano G, Pereira A, Martinho C, Paiva A (2014) Empathic robots for long-term interaction. Int J Soc Robot 6:329–341

Decety J (2004) L’empathie est-elle une simulation mentale de la subjectivité d’autrui? In: Berthoz A, Jorland G (eds) L’empathie. Odile Jacob, Paris, pp 53–88

Cosnier J, Rey F, Robert F (1996) Le corps, les affects et la relation à l’autre. Thér Fam 17(2):195–200

Meltzoff A (2002) La théorie du “like me”, précurseur de la compréhension sociale chez le bébé : imitation, intention et intersubjectivité. In: Nadel J, Decety J (eds) Imiter pour découvrir l’humain. Psychologie, neurobiologie, robotique et philosophie de l’esprit. PUF, Paris

Nadel J, Decety J (2002) Imiter pour découvrir l’humain. Psychologie, neurobiologie, robotique et philosophie de l’esprit. PUF, Paris

Nadel J, Revel A, Andry P, Gaussier P (2004) Toward communication : first developmental steps of imitation in infants, children with autism and robots. Stud Interact 5(1):45–75

Favre D, Joly J, Reynaud C, Salvador LL (2005) Empathie, contagion émotionnelle et coupure par rapport aux émotions. Enfance 57(4):363–382

Rosenthal-Von der Pütten A, Schulte F, Sobieraj S et al. (2013) Investigation on Empathy Towards Humans and Robots Using Psychophysiological Measures and fMRI. In: The annual meeting of the International Communication Association, Hilton Metropole Hotel, London, England

Boulanger C, Lançon C (2006) L’empathie : réflexions sur un concept. Ann Méd Psychol 164:497–505

Krach S, Hegel F, Wrede B, Sagerer G, Binkofski F et al (2008) Can machines think? interaction and perspective taking with robots investigated via fMRI. PLoS ONE 3(7):e2597

Koppula H, Saxena A (2013) Learning spatio-temporal structure from RGB-D videos for human activity detection and anticipation. In: Proc Int Conf on machine learning. Atlanta, USA, pp 792–800

Tisseron S (2012) An assessment of combatant empathy for robots with a view to avoiding inappropriate conduct in combat. In: Doaré R, Danet D, Hanon J-P, de Boisboissel G (eds) Robots on the battlefield, contemporary issues and implications for the future. Combat Studies Institute press, US Army Combined Arms Center, Fort Leavenworth, Kansas & Ecoles de Saint-Cyr Coëtquidan, pp 165–180

Singer PW (2009) Wired for war : the robotics revolution and conflict in the 21st century. Penguin, New York

Carpenter J (2013) The quiet professional: an investigation of US military explosive ordnance disposal personnel interactions with everyday field robots

Cowley S, Kanda T (2005) Friendly machines: interaction-oriented robots today and tomorrow, Alternation

Beran T, Ramirez-Serrano A, Kuzyk R, Nugent S, Fior M (2010) Would children help a robot in need? Int J Soc Robot 3:83–93

Baddoura R, Zhang T, Venture G (2013) Experiencing the familiar, understanding the interaction and responding to a robot proactive partner. In: Proc ACM/IEEE Int Conf on Human-Robot Interaction. Tokyo, Japan, pp 247–248

Bartneck C, Kanda T, Ishiguro H, Hagita N (2009) My robotic doppelganger - a critical look at the uncanny valley theory. In: Proc 18th IEEE Int Symposium on Robot and Human Interactive Communication, pp 269–276

Canamero L (2002) Playing the emotion game with feelix: what can a lego robot tell us about emotion? In: Dautenhahn K, Bond A, Canamero L et al (eds) Socially intelligent agents: creating relationships with computers and robots. Kluwer Academic Publishers, Norwell, pp 69–76

Baddoura R, Venture G (2013) Social versus useful HRI: experiencing the familiar, perceiving the robot as a sociable partner and responding to its actions. Int J Soc Robot 5:529–547

Niculescu A, Van Dijk B, Nijholt A, Li H, See SL (2013) Making social robots more attractive: the effects of voice pitch, humor and empathy. Int J Soc Robot 5:171–191

Acknowledgments

The authors thank Carol Baddoura and Grégoire Pointeau for their efficient and valuable help in revising some technical aspects of this manuscript.


Cite this article

Tisseron, S., Tordo, F. & Baddoura, R. Testing Empathy with Robots: A Model in Four Dimensions and Sixteen Items. Int J of Soc Robotics 7, 97–102 (2015). https://doi.org/10.1007/s12369-014-0268-5
