Abstract

This paper presents a study conducted to evaluate and optimize the interaction experience between a human and a 9 DOF arm-exoskeleton by the integration of predictions based on electroencephalographic signals (EEG). Due to an ergonomic kinematic architecture and the presence of three contact points, which enable the reflection of complex force patterns, the developed exoskeleton takes full advantage of the human arm mobility, allowing the operator to tele-control complex robotic systems in an intuitive way via an immersive simulation environment. Taking into account the operator’s percept and a set of constraints on the exoskeleton control system, it is illustrated how to quantitatively enhance the comfort and the performance of this sophisticated human–machine interface. Our approach of integrating EEG signals into the control of the exoskeleton guarantees the safety of the operator in any working modality, while reducing effort and ensuring functionality and comfort even in case of possible misclassification of the EEG instances. Tests on different subjects with simulated movement prediction values were performed in order to prove that the integration of EEG signals into the control architecture can significantly smooth the transition between the control states of the exoskeleton, as revealed by a significant decrease in the interaction force.

1 Introduction

Teleoperated robotic systems are without a doubt powerful tools to conduct exploration and perform manipulation tasks in a wide range of hazardous environments. However, such systems, their action spaces, and their missions have nowadays reached a level of complexity that makes it increasingly difficult for human operators to control them. Therefore, it is imperative to develop more intuitive human–machine interfaces that make this job easier and more efficient. To this end, we developed a teleoperation system that encompasses an arm exoskeleton, a brain-reading interface, and a visual immersion environment to support man–machine interaction.

When developing the system we focused on improving the exoskeleton control by biosignals, i.e. electroencephalographic signals, that allow insights into the user’s intentions to improve the overall interaction. Such an improvement would not be achieved without the knowledge gained from analyzing the electroencephalogram (EEG). Demonstrating this benefit is important because it is well known that the analysis and classification of EEG, as done in many brain–computer interfaces (BCIs), requires a high effort, e.g. setting up an electrode cap, recording training data, and training the classifier. The positive effect of integrating EEG signals has to outweigh the effort required to introduce the EEG-based modification.

Since during motion the control system of the exoskeleton can be optimized using sensor information and accurate models to compensate for dynamic and nonlinear friction effects [10], the integration of biosignals in this specific case would probably not significantly improve the situation. However, predicting the onset of a movement out of a rest position is not possible just by analyzing the behavior of the operator or the interaction with the system, and such a prediction can therefore not be derived from these sources to improve the behavior of the exoskeleton. We therefore introduce EEG-based predictions of movement onset to prepare the exoskeleton for lock out, i.e. to smooth the transfer from rest (full user support mode) to free movements (transient mode) [19] (see Fig. 1).

Fig. 1 Adaptation of the control of the exoskeleton by Brain Reading (BR): The exoskeleton supports the user while he is moving. If the user stops moving, the exoskeleton locks in to support the arm in mid air. For lock out, the user has to press against sensors. To support lock out, movement predictions made by BR are used to modulate the exoskeleton control. High prediction scores result in low effort for the user to lock out. Pressure against the sensors is always required for lock out, minimizing the risk of a false lock out in case of a false movement prediction made by BR.

With the work presented here, we show that by integrating biosignals, the responsiveness of the interface and the comfort of interaction can be enhanced without compromising the overall system safety. Hence, this adaptation of the exoskeleton’s control results in an effortless and seamless integration of human and exoskeleton. We focus on measuring this improvement of the interaction between man and exoskeleton by the integration of EEG signals and present a novel framework to overcome current issues in the literature (see below) by following two basic principles. First, the biosignal (EEG) is integrated in a way that does not directly control the exoskeleton, but instead influences parameters of the exoskeleton’s control. More specifically, with the help of movement prediction the exoskeleton is able to prepare for a movement, thereby reducing the effort of the user for its initiation. Further, we made sure that the exoskeleton behaves optimally with respect to the control based purely on force sensor data (i.e. without EEG-based movement prediction). Second, we adjust the effect of this integration according to the user and evaluate the benefit. Since the integration of the biosignal is indirect, i.e. not controlling the exoskeleton, it is not immediately clear that there is indeed a benefit for the user due to some biosignal-based modification. This investigation and adjustment of the effect is therefore essential for the proposed procedure, because the overall goal is to achieve a perceptual improvement for the human. This improvement is technically realized by reducing the time threshold the exoskeleton needs for switching between full user support and transient mode (see Fig. 1 and more details in Sect. 2.2) whenever a movement is predicted. One major advantage here is that the user maintains the executive power in every situation.
If the system correctly predicts a behaviour, the exoskeleton can prepare for it resulting in improved transition from one exoskeleton modality to another. Moreover, if no or a wrong prediction is made, most likely nothing noticeable for the user will happen.

Here, we use simulated classifier output with three levels of prediction certainty in order to evaluate the benefit for the user independently of the applied classifier. EEG data contain high noise levels and our prediction is made in real time (i.e. based on single trials), which would result in fluctuating prediction scores and make a systematic evaluation more difficult.

In the following we will deal more specifically with existing exoskeleton systems and approaches to make use of biosignals.

Requirements for Exoskeletons

Among the possible solutions, exoskeletons represent a category of devices that can effectively increase the immersion of the operator into the work scenario [5, 41, 46, 49]. According to [42], the performance of a teleoperation session is directly correlated with the quality of the telepresence, thus with the feeling of being present at the remote site. Here, it is of particular importance to have an interface which is able to transfer as realistically as possible all the relevant information regarding the remote scenario [29]. An exoskeleton, like any other human–machine interface, has to fulfill specific requirements regarding safety, comfort and usability. Among these three, safety is undoubtedly the most important one [21]. In any working modality the system must not harm the user. This can be assured implementing different levels of safety mechanisms both in the hardware and in the software. Backdrivability and compliance in the actuation system is also highly desirable [51, 52], meaning that the user, at any event and disregarding any possible failure, has the option to move the interface according to his will. The comfort in wearing and using the interface depends on different factors and has a strong influence on the execution of the task at hand [48]. Considering that in a tele-operation session the exoskeleton is worn for a prolonged period of time, it has to be as light as possible. Furthermore, its kinematics should be configured in a way to avoid restriction of the user’s mobility, while at the same time maximizing the reachable workspace [16, 37]. In addition to that, the interface should be transparent for the user. This means that the effort to operate it has to be minimized, namely, its mass and inertia should be compensated by the control system. The interaction forces, when no feedback is required, have to be kept as low as possible. In this way the fatigue of the operator will also be minimized. 
Finally, usability in this specific case means easiness to wear and intuitiveness in the usage. The exoskeleton has to be designed in a way that the wearing and calibration procedures do not take too long. Furthermore, its operation should be intuitive, not requiring long training sessions.

Benefit of Biosignals Integration

In order to further improve interaction we make use of the close coupling between exoskeleton and human by utilizing biosignals to influence the exoskeleton’s control. Although appealing, the integration of biosignals always comes with some pitfalls regarding the user’s safety and comfort. Both aspects are largely affected by the fact that biosignals inherently include a lot of noise making misclassification likely. Therefore, when developing applications based on automatic classification of biosignals one has to deal with the problem of imperfect classification rates caused by the high noise and high dimensionality of the signal, see e.g. [3] for monitoring attention and [53] for classifying moods or emotions. A classical approach to use biosignals with machines is the implementation of brain–computer interfaces (BCIs) [6, 55], which usually classify data from the electroencephalogram (EEG) in order to control a device, e.g. a wheelchair [34]. For active and reactive BCIs [56], where the user (which is often a patient) is really controlling a device, misclassification directly causes bad functioning of the system and might also have fatal consequences for the user. The introduction of certain safety mechanisms is of critical importance here. When voluntary control is not intended, the BCI is of a passive type [56] which is the approach we follow with the Brain Reading component in our system.

BCIs are often used in the context of rehabilitation (for an overview see [38]), a field where exoskeletons are also applied (see [22]). For exoskeletons that are used for rehabilitation, muscular activity is often used to control the exoskeleton in order to restore the full mobility of the patient. Therefore, when the aim is to control the exoskeleton, the typical biosignal used is the activity in the electromyogram (EMG) [14, 20, 44, 45]. Similar EMG based predictions can be performed for the interface with a prosthetic wrist [40]. In the present study, we are aiming at movement prediction just before the onset of muscular activity, so we use the preceding brain activity, i.e., EEG. The idea of EEG based movement classification or prediction is again widely used: A lot of different approaches exist comprising different tasks like voluntary wrist extension [4, 24], cued hand, tongue or foot movements [35] or left versus right finger movement [9]. Further, different signals have been used for classification, i.e. event-related potentials [9, 54] or frequency components [4, 24, 35, 50].

To summarize, a high number of different systems and approaches exist to integrate biosignals to enhance man-machine interaction, but the literature is extremely diverse: there is no clear unified methodology of how to integrate a biological signal and, as for movement classification or prediction, it is also debated which signal serves best for the purpose at hand. Since there is a common goal, namely improving the interaction for the user it is essential to evaluate the benefit of this integration. The latter issue, in particular, remains often disregarded, although it is most important to show a measure of the user’s and the system’s benefit. When a suitable user is not present, some studies use simulations [40] to evaluate the modifications in their system, but in principle one should use experimental data from the user and the robotic system, as was recently done with a one-arm exoskeleton [44] and a commercial haptic interface [36]. The calibration procedure proposed in the present study can be performed before each teleoperation session, is independent of the classifier (see Fig. 7) and guarantees a baseline behavior of the whole system, maximally adapted to the current user. Further, we eliminate differences between sessions and users (e.g. how the exoskeleton fits, how well the user is trained) and finally evaluate improvements by the integration of EEG into the calibrated and therefore already optimized system.

The paper is organized as follows: the next section briefly introduces the overall human–machine interface and, in more detail, the components involved in this study. Sect. 3 explains the methods and the experimental setup, whose results are presented in Sect. 4. Finally, Sect. 5 draws the conclusions and indicates possible future developments.

2 VI-Bot Multimodal Teleoperation Interface

The human–machine interface considered in this study [19] encompasses an arm exoskeleton, a brain-reading interface, and an immersion environment composed of a head-mounted display (HMD), a data glove and a motion-tracking system (see Fig. 2). In this section we describe the control mechanism of the developed exoskeleton and how biosignals, i.e., EEG signals, are integrated to increase comfort by reducing the interaction force (see Sect. 2.1.2) while ensuring that safety mechanisms are never affected.

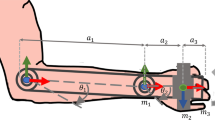

The developed exoskeleton has 9 DOF, seven of which are actuated and two purely passive. In order to deliver proper force feedback and to distribute the weight of the system over the body, different contact points have to be defined between the limb and the exoskeleton. The interfacing surfaces have to be rigid and well fixed to transfer the forces properly, but at the same time they have to be soft to avoid excessive stress on the body [43]. Therefore, a good balance between stability and ergonomics has to be found. In the developed exoskeleton three contact points with the human arm were chosen: shoulder, upper arm, and forearm (wrist proximity). The kinematics is configured according to the human anatomy in order to maximize the usable workspace [15, 16] while, at the same time, minimizing the restrictions on the user’s movements. The system is actuated via a relatively low-pressure hydraulic circuit operating at 25 bar [17, 18]. In comparison to standard industrial systems, which normally work in a range of 120–400 bar, a low operating pressure is chosen in order to increase the safety of the interface and to allow the usage of lighter hydraulic components. Additionally, passive and active safety features are integrated within the mechanical, electric, and software components of the system; Table 1 summarizes the most important ones.

Each joint is equipped with position and torque sensors. In addition, at each contact point with the limb, namely the acromion (shoulder), the distal part of the humerus (upper arm), and the ulna and radius (forearm), the interaction forces can be measured via dedicated force/torque sensors. On the one hand, these sensors make it possible to control the force feedback and thus to display complex force patterns. On the other hand, their influence on the control system can be modulated, which allows the integration of biosignals. In the control of the exoskeleton we make use of EEG signals for online modulation by means of machine learning approaches.

It is worth mentioning at this point that, in this study, only the wrist contact point was considered. There are mainly two reasons for this. First, the sensor that equips this part of the interface (see Sect. 2.1) is, compared to the sensors at the other two contact points, more precise, more reliable, and capable of detecting the interaction force in all directions. This is important to evaluate correctly the effort made by the user during the interaction with the interface. Second, in the first instance we preferred to keep the experimental setup and methods as simple as possible. To this end we avoided, for example, the usage of the kinematic/dynamic model to map the forces from the Cartesian workspace to the joint workspace. Nevertheless, we are aware that with such a mapping a more precise analysis can be conducted, and that the topic therefore deserves further consideration.

To support the control of the exoskeleton by the integration of EEG signals it must be ensured that classification errors will not disturb, while correct classification will clearly support the system. To allow this, several requirements for the successful usage of EEG signals have to be fulfilled. First, the integration of the predictions regarding the cognitive state of the operator should not disturb the action of the operator. To achieve this, EEG signals should be used passively such that the operator’s active behavior is not required and his natural behavior is not disturbed, e.g., by forcing him to produce certain brain activity. This requirement is fulfilled by brain reading, a method that passively analyses the operator’s EEG to draw conclusions about his cognitive state which can be used in general to improve robot-machine interaction [25, 26]. Second, the outcome of the prediction based on the EEG analysis is integrated into the exoskeleton control in a way that the effort (see definition in Sect. 3) of the user to switch between the operational states is reduced while the control of the exoskeleton still assures that possible misclassifications very likely have no impact and leave the exoskeleton and tele manipulated robot under full control of the user. In Sect. 2.2 we will describe our EEG based approach.

2.1 Exoskeleton Control Modalities

The control system of VI-Bot exoskeleton is organized in a hierarchical way. At the lowest level, the torque of each joint is regulated via a dedicated μController. At the middle level, a set of modules implementing position control, impedance regulation, and gravity compensation are operating in parallel. Finally, at the top level, a bi-directional mapping enables the connection between the target robot and the exoskeleton. It is at this level that the LOCK GUARD module is also integrated. This is one of the core control elements relevant for this study, taking care of the transition from one control modality to the other.

More specifically, there are three possible states the exoskeleton can change between: “teleoperation mode”, “transient mode” and “full user support mode”. These three modes are chosen to allow the operator to control a robot (teleoperation mode), to interact with the virtual environment without influencing the robot’s state (transient mode) and to rest the arm in a comfortable position (full user support mode). This last modality is particularly advantageous when the operator wants to keep the position of his arm fixed while doing some other task (e.g. monitoring the state of the exoskeleton on a screen).

The switch from “full user support mode” to “transient mode” is triggered by the interaction force measured at the forearm contact point. This is a natural choice, bearing in mind that movement patterns for grasping or manipulating objects are executed by humans in an end-effector-based way [1, 13]. In particular, the focus of this study is to prove that the usage of EEG signals can improve this transition (see Fig. 3) by reducing the interaction force and consequently decreasing the effort required from the user to change from one control mode to the other.

To measure the interaction forces at the forearm contact point, a six-axis force/torque sensor of the ATI Nano25 series is integrated into the mechanical structure of the exoskeleton. The Nano25 is capable of measuring forces up to ±250 N in x- and y-direction and forces up to ±1000 N in z-direction with a resolution of \(\frac{1}{24} N (x,y)\) and \(\frac{1}{8} N(z)\) respectively. The two additional contact points, at the upper arm and shoulder respectively, are equipped with single-axis force sensors of the SMD S420 series. Although these sensors are generally important to detect the interaction forces between exoskeleton and arm, they are not used in this study.

Figure 3 shows a schema of the exoskeleton’s operational modes, which are handled by the LOCK GUARD module. Starting from the last item, the “teleoperation mode” describes the modality where the exoskeleton follows the movements of the user, while at the same time it actively compensates for gravitational effects on the mechanical structure. Only in this mode is the operator able to control the robot that is intended to be telemanipulated. This mode is initiated by the operator clenching the fist, which the system recognizes using a gesture recognition algorithm. If the user teleoperates a robot, the exoskeleton additionally provides a directional force feedback in case the user drives the target system into a contact situation with the environment. This enhances the immersion of the user into the telemanipulation scenario. As soon as the fist is re-opened, the teleoperated system is released from operator control and force feedback is switched off. In case the user does not want to control another robot, it is possible to change the operational state of the exoskeleton to “transient mode”. This is done by pressing a button on a remote control. As soon as the system enters the “transient mode”, the user is no longer able to pilot the teleoperated robot even if he clenches his fist. Re-entering the “teleoperation mode” is only possible by pressing the button on the remote control again. Generally, in “transient mode” the haptic device only compensates for gravitational effects on the mechanical structure without delivering any force feedback, which means that the user can move the limb freely without being restricted by the exoskeleton, have a closer look at warnings, and interact with real and virtual interfaces.

During this state, the LOCK GUARD is permanently checking the activity level \(A_{level}\) of the user (Eq. 1). If it falls under a certain value, which can be arbitrarily chosen by the user himself, the exoskeleton changes its state to “full user support mode”. In this case, the control system of the exoskeleton activates the joint position controllers which keep the system in the last known pose before the user support mode was activated, enabling the operator to completely rest his arm in a desired pose. The transfer from “transient mode” to “full user support mode” is done if the “Lock-in” condition is fulfilled. The change from “full user support mode” back to “transient mode” is induced if the “Lock-out” condition is fulfilled. This causes the control system to switch off the position controllers, which releases the user’s arm. Both cases are described more precisely in the following two sections.

2.1.1 Lock-in Condition

In “transient mode”, the user has the chance to change the working modality of the exoskeleton to “full user support mode”, in which the haptic device fully supports the weight of the operator’s arm. The transfer to this support mode is done automatically by the supervisor (LOCK GUARD) integrated into the control environment. This supervisor is permanently checking the activity level of the user according to the amount of the accumulated Cartesian velocity in a floating window of fixed size which is defined as:

with \(T\) representing the sampling time of the control loop, \(n\) representing the window size to be monitored, and \(v_x\), \(v_y\) and \(v_z\) the Cartesian velocities, respectively. In our case, velocity checking is done in a floating window of 2 s, i.e., the activity level represents the overall movement of the operator during the last \(n=200\) samples of the control system, which is running at \(T=10\) ms. If \(A_{level}\) falls under a freely definable threshold \(A_{th}\), the LOCK GUARD activates the “full user support mode” (see Fig. 4). This causes the system to switch ON the joint position controllers, which afterwards hold the exoskeleton in the pose where the user left the device. In general, the smaller the threshold, the longer the user has to stay in one place to activate the transition from “transient mode” to “full user support mode”.
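The activity check described above can be sketched as follows. This is a minimal illustration, not the LOCK GUARD implementation: the class name `ActivityMonitor` and its methods are hypothetical, and accumulating the Euclidean norm of the Cartesian velocity multiplied by the sampling time is an assumption about how the three velocity components are combined. Only the window size (n=200) and the sampling time (T=10 ms) are taken from the text.

```python
from collections import deque
import math


class ActivityMonitor:
    """Activity level over a floating window of the last n control samples."""

    def __init__(self, n=200, T=0.01):
        self.T = T                     # sampling time of the control loop [s]
        self.steps = deque(maxlen=n)   # one contribution per sample; oldest is dropped

    def update(self, vx, vy, vz):
        # Assumed contribution of one sample: |v| * T (path length in that sample).
        self.steps.append(math.sqrt(vx * vx + vy * vy + vz * vz) * self.T)
        return self.level()

    def level(self):
        # Accumulated activity inside the current window.
        return sum(self.steps)

    def lock_in(self, a_th):
        # Require a full window so that a freshly started monitor cannot lock in.
        return len(self.steps) == self.steps.maxlen and self.level() < a_th
```

Calling `update` once per control cycle and `lock_in(a_th)` afterwards reproduces the behavior described in the text: the condition can only become true after the user has stayed nearly still for the whole 2 s window.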

For the presented scheme, a sharp threshold \(A_{th}\) for the “Lock-in” condition can be used. The only requirement for the value of \(A_{th}\) is that it has to be chosen larger than the noise level of the position sensors. On the other hand, by choosing the threshold small enough, false detection of an intended “Lock-in” can be avoided, because the user has to keep his movements quite calm to hold the activity level under the threshold.

2.1.2 Lock-out Condition

Changing the operational state from “full user support mode” back to “transient mode” is possible if the user interacts with the position-controlled haptic device. In case a physical interaction takes place between the operator and the wrist contact point of the exoskeleton system, the LOCK GUARD checks whether the so-called “Lock-out” condition (see Fig. 5), which is described in the following, is fulfilled. Generally, all forces exerted by the user to the haptic interface points are permanently measured and evaluated. This facilitates a continuous monitoring of the energy dissipated by the system.

The lock-out mechanism is based on the measurement of interaction forces and time, with the aim of detecting a movement intended by the user. Figure 5 shows the general working principle. If the contact force between the user and the exoskeleton exceeds a defined threshold \(F_{th}\), a counter (CNT) starts measuring the time. As soon as the counter reaches the value \(T_{th}\) that defines the lock-out time frame, the LOCK GUARD transfers the exoskeleton control system from “full user support mode” back to “transient mode” by switching off the joint controllers which kept the haptic device in the rest pose. Afterwards the user is free to move.

The presented lock-out scheme requires adjustments due to some system limitations. The main factors influencing the behavior of the presented mechanism are force sensor noise, disturbance forces generated by movements of the user’s body, and changes in the rest pose. The first point is solved by using a counter in up/down-counting mode, i.e. the counter counts up as long as the interaction force is larger than the threshold and counts back towards zero if the contact force falls below the threshold level before the final counter value is reached. The following equations show the counting modes:

This avoids counter saturation in case the force value oscillates around the force threshold due to noise. Furthermore, unwanted peak forces are rejected due to the filter characteristics of the counter.
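The up/down counting scheme can be illustrated with the following sketch. It is one possible reading of the description rather than the authors’ implementation: the class name `LockOutCounter` is hypothetical, the force is reduced to a scalar magnitude, the time threshold is expressed in control-loop samples, and decrementing by one per sample (rather than resetting) is an assumption.

```python
class LockOutCounter:
    """Up/down counter for the lock-out condition (illustrative sketch)."""

    def __init__(self, f_th, t_th):
        self.f_th = f_th   # force threshold F_th [N]
        self.t_th = t_th   # time threshold T_th, in control-loop samples
        self.cnt = 0       # counter CNT

    def step(self, force):
        """Process one force sample; return True when lock-out is triggered."""
        if abs(force) > self.f_th:
            self.cnt += 1          # count up while the force exceeds F_th
        elif self.cnt > 0:
            self.cnt -= 1          # count back towards zero otherwise (assumed step of 1)
        return self.cnt >= self.t_th
```

With `f_th=5.0` and `t_th=3`, a single force spike raises the counter to one and is then counted back down, whereas a sustained push reaches the threshold and triggers lock-out.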

The second problem is solved via a proper choice of the force and time thresholds, in order to avoid transitions in the operational state of the exoskeleton due to movements of the body (see Sect. 3.1). The last point refers to the fact that for different resting poses of the operator, the force sensors at the contact points are pre-loaded with different values. To have a unified force threshold for all rest positions in the overall workspace, the LOCK GUARD offsets the force sensor measurements 3 seconds after the user was transferred from “transient mode” to “full user support mode” (Lock-in). This nulls the force sensors, assuming that the user really rests his arm during this initial delay time of 3 seconds, and improves the robustness and response time of the described mechanism.
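The offset step can be sketched as below. The class name `ForceOffset` and its methods are hypothetical, and two details are assumptions: the offset is taken from the single sample at the end of the 3 s delay, and no corrected force is reported (so no lock-out is evaluated) during that delay.

```python
class ForceOffset:
    """Nulls the force reading a fixed delay after lock-in (illustrative sketch)."""

    def __init__(self, delay_s=3.0, T=0.01):
        self.delay_samples = int(round(delay_s / T))  # 300 samples at T = 10 ms
        self.elapsed = 0
        self.offset = None

    def on_lock_in(self):
        # Restart the delay each time "full user support mode" is entered.
        self.elapsed = 0
        self.offset = None

    def step(self, raw_force):
        """Return the offset-corrected force, or None while the delay is running."""
        if self.offset is None:
            self.elapsed += 1
            if self.elapsed >= self.delay_samples:
                self.offset = raw_force   # pre-load of the current rest pose
            return None
        return raw_force - self.offset
```

The corrected value can then be compared against one force threshold regardless of the rest pose.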

Finally, it is worth stressing that the time threshold \(T_{th}\) is not static, but modulated by the brain reading interface. It is in the scope of this paper to understand the benefits of integrating biosignals within the control scheme of the exoskeleton. The next section explains this last point in more detail.
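A sketch of such a modulation is given below. The function name, the mapping from three prediction levels to reduction factors, and the factors themselves are purely hypothetical placeholders, not values from this study. The sketch only illustrates the principle that a higher prediction certainty shortens the time threshold while a non-zero threshold always remains, so that force against the sensor is still required.

```python
def modulated_time_threshold(t_base, level, reduction=(0.0, 0.25, 0.5)):
    """Return the time threshold T_th (in samples) for a given prediction level.

    t_base:    threshold without EEG-based prediction, in control-loop samples
    level:     prediction certainty level, 0 (lowest) to len(reduction) - 1
    reduction: hypothetical fractional reductions per level (assumption)
    """
    if not 0 <= level < len(reduction):
        raise ValueError("unknown prediction level")
    # Never drop below one sample: the user must always push against the sensor.
    return max(1, int(round(t_base * (1.0 - reduction[level]))))
```

Because the prediction only changes a parameter of the force-based lock-out, a false prediction alone cannot release the exoskeleton.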

2.2 Integration of a Brain Reading Interface for the Prediction of Movements

In the following we will describe our EEG based approach of improving the control of the developed exoskeleton by modulating the lock out mechanism.

2.2.1 Lateralized Readiness Potential and other Movement Related Activity

Moving one’s arm to a desired position, as it is the case while controlling an exoskeleton, is a directed movement and planned by the brain, involving the primary motor cortex contralateral to the side of movement. A differentiation between body parts to be moved is possible, since the primary motor cortex is somatotopically organized such that certain areas are directly mapped to certain body parts, e.g., hand or arm [39]. Therefore, by detecting EEG activity produced by these parts of the primary motor cortex during movement planning, it is generally possible not only to predict the execution of movements but also which side (right or left body side) and part of the body will be moved.

EEG activity of brain areas involved in motor planning of the right hand and arm can be recorded with electrodes C3 and FC3 (extended international 10-20 system electrode placement [23], see Fig. 6) placed over the hand and arm area of the primary motor cortex. The negative activity recorded at these electrodes is an event-related potential (ERP) called lateralized readiness potential (LRP) [27, 47] (see Fig. 6). Since in our application a movement is planned out of a complete rest situation, it is also possible to use the Bereitschaftspotential (BP) [27, 47] for movement prediction. The non-lateralized BP can be detected before the LRP, with onsets of one to two seconds before the start of a movement [47]. According to the way the classifier is trained [19], the prediction of movement preparation in the situation where the user is in a full rest position, i.e. fully supported by the exoskeleton, is therefore based on real-time single-trial detection of both the BP and the LRP.

Fig. 6 Left: Electrode positions of the extended international 10-20 system (128 electrodes). Right: average EEG activity of one subject before arm and hand movement (movement marker). Lateralized readiness potential: defined as the difference in EEG activity between signals recorded at electrode positions over the primary motor cortex contra- and ipsilateral to the side of movement (LRP difference between C3 and C4, see above). Bereitschaftspotential: defined as central non-lateralized negative activity recorded at the electrode over the supplementary motor area (SMA) (BP at Cz, see below).

Since the data processing used for ERP detection (see Sect. 2.2.2) needs only information in the very low frequency range (below 4 Hz), rigorous filtering can be applied that eliminates or at least strongly reduces the artifacts that are pronounced in our application, i.e., muscle artifacts and noise induced by the exoskeleton itself. However, eye artifacts contribute to the recorded signal in the very low frequency range and can therefore not be completely removed by the filtering methods explained below. To avoid eye artifacts in general, subjects have to fixate a target location in the training sessions while being in the rest condition. With this approach we try to reduce the occurrence of eye artifacts during training as far as possible, so that the classifier cannot reliably base its prediction on them. These experimental conditions were also applied in earlier experiments [19].

2.2.2 Signal Processing and Classification Procedure

The challenge of our approach is the detection of a rather weak signal of short duration at an unknown time point. Both BP and LRP are about 10 to 100 times smaller in amplitude than the superimposed brain activity and can normally only be detected by averaging over trials (which enhances time-triggered ERP activity and reduces so-called activity-unrelated noise). Our approach requires single-trial EEG analysis in real time, which has to be fast enough to allow repeated testing for the occurrence of ERPs before the movement. To discriminate movement preparation from the rest state, signal processing techniques and machine learning are used.

As outlined above, the LRP prediction was simulated in the present study (see also Fig. 7). In the final application, data are acquired as follows (compare [19]): EEG is recorded with a 128-channel actiCap system using an extended 10-20 system referenced at FCz, with electrode impedances below 5 kΩ. Data are digitized at 5000 Hz by four 32-channel BrainAmp DC amplifiers (Brain Products GmbH, Munich, Germany). The continuous EEG stream is segmented into windows of 1000 ms length. To train the classifier and evaluate experimental results, a movement marker is written into the EEG whenever the subject moves his arm 5 cm out of the position held during full user support, i.e. when the exoskeleton is locked out. Differences between training and testing sessions are described in [19]. While in the full user support mode, windows are cut and classified every 50 ms.

Before classification, the data are preprocessed to increase the signal-to-noise ratio. Data from each electrode channel are standardized (the mean is subtracted and the result is divided by the standard deviation). After decimating the data to 20 Hz using a finite impulse response (FIR) filter, an FFT band-pass filter with a passband of 0.1 to 4 Hz is applied. This rigorous filtering focuses the analysis on the relevant frequency components (BP and LRP are slow shift potentials) and has also been applied elsewhere [7, 19, 30]. Then, data from each channel are scaled with a cosine function of the form \(s(n):=1-\cos(\frac{n\cdot\pi}{20})\) for n=0,…,20 as proposed by Blankertz et al. [8].

Classification is based on all electrode channels, i.e. we use the last 4 values of each channel (total number of features: 128×4=512). Each feature dimension is linearly mapped to the range between 0 and 1, treating 10 % of the training examples as outliers. We use a linear Support Vector Machine (SVM) implemented in the LIBSVM software [11]. To feed the SVM prediction back into the exoskeleton control, the unbounded SVM prediction score is mapped linearly to a value between 0 and 1 (in the current study, these values were simulated within this range).
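The last steps of this processing chain can be illustrated with a minimal sketch. It assumes that a window has already been standardized, decimated and band-pass filtered, and that it is stored as one list of samples per channel. The function names and the bounds `lo` and `hi` used to normalize the classifier score are hypothetical; the text does not specify how the linear mapping is anchored.

```python
import math

def cosine_weights(length=21):
    """Weights s(n) = 1 - cos(n*pi/(length-1)); for length 21 this is
    1 - cos(n*pi/20) with n = 0..20, rising from 0 to 2."""
    return [1.0 - math.cos(n * math.pi / (length - 1)) for n in range(length)]

def window_features(window, n_last=4):
    """Weight each channel with the cosine function and keep the last
    n_last values per channel, concatenated into one feature vector.
    window: list of channels, each a list of samples (oldest first)."""
    weights = cosine_weights(len(window[0]))
    features = []
    for channel in window:
        weighted = [w * x for w, x in zip(weights, channel)]
        features.extend(weighted[-n_last:])
    return features

def normalize_score(score, lo, hi):
    """Map an unbounded classifier score linearly to [0, 1].
    lo and hi are assumed calibration bounds (hypothetical); scores
    outside [lo, hi] are clipped."""
    if hi <= lo:
        raise ValueError("hi must be larger than lo")
    return min(1.0, max(0.0, (score - lo) / (hi - lo)))
```

With 128 channels and 4 retained values per channel, `window_features` returns the 512 features mentioned above. The weighting emphasizes the most recent samples of each window, which are the ones closest to a possible movement onset.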

Fig. 7 Preparation required for using the exoskeleton with adaptation by movement prediction, and the experimental procedure performed in this work. Here, EEG was not recorded and analyzed (marked as grey box). To measure the effect of modulating the exoskeleton control by integrating biosignals, we used fixed values for movement prediction (simulated classifier output) representing the certainty of the prediction (see text)

The Brain Reading Interface has already been tested on real data [19] and achieved a correct LRP-prediction rate of 0.76±0.08 at −200 ms relative to the actual movement onset (movement marker) (see Note 1). The overall accuracy was 0.70±0.07 for the distinction between no movement preparation and movement preparation (see Note 2).

2.2.3 Integration of Movement Prediction into the Exoskeleton Control

In the VI-Bot system, the normalized movement preparation score is used to reduce the time that is needed to change from “full user support mode” to “transient mode” (see Figs. 3 and 5). By reducing the time the operator has to press against the exoskeleton, we enhance the comfort of the user by reducing the effort required to leave a rest position (i.e., to exit “full user support mode”, see Fig. 3). To ensure overall safety and to safely integrate a biosignal that is inherently uncertain, two mechanisms were implemented, which are explained here in more detail. (i) A positive prediction of movement preparation, obtained by detecting the BP and LRP, only has an impact on the control system if the user presses against the exoskeleton, meaning that he is trying to leave the “full user support mode”. (ii) During rest situations the operator is not connected to the robot (see Sect. 2 and Fig. 3), so a false lock-out cannot harm the robot or the environment.

Because the EEG signal has a low signal-to-noise ratio (see Sect. 2.2.2) and is overlaid by activity from cognitive processes that take place in parallel, single-trial EEG classification cannot be 100 percent correct and is prone to classification errors. Two kinds of classification errors are possible: false negatives (the classifier did not predict a movement although there was movement planning) and false positives (the classifier predicted a movement although there was no movement planning). The two types of errors influence the overall system differently. In case of a false negative prediction, the user can still lock out but needs to apply the same force as if no biosignal were improving the control. This means that the control of the exoskeleton is not improved, but the system still works under the conditions optimized for the classical approach, without degrading it. In case of a false positive classification, the time for which the user has to press against the sensors of the exoskeleton to lock out is reduced, so a lock-out becomes easier (see Sect. 2.1.2). However, in most cases a lock-out of the exoskeleton, i.e., leaving the rest state, is unlikely, since the operator is not moving and will not press against the sensors to release the exoskeleton. One could imagine that coincident sensor noise might nevertheless cause the exoskeleton to lock out. Choosing an appropriate threshold (see Sects. 2 and 3.2) covers this case of coincidence.
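The effect of both error types can be illustrated with a small sketch of a lock-out rule. It assumes, as a simplification of the mechanism referred to above, that a lock-out is triggered once the measured force has stayed above the force threshold for a given number of consecutive control cycles. The function name and the numeric values are hypothetical.

```python
def lock_out_index(forces, f_th, t_th_samples):
    """Return the index of the control cycle at which a lock-out is
    triggered, or None if it never happens.
    Assumed rule: the force must exceed f_th for t_th_samples
    consecutive cycles; any sample at or below f_th resets the count."""
    run = 0
    for i, force in enumerate(forces):
        run = run + 1 if force > f_th else 0
        if run >= t_th_samples:
            return i
    return None

# Hypothetical force traces, one value per control cycle.
resting = [0.5] * 100               # operator does not press
pushing = [0.5] * 10 + [6.0] * 60   # operator presses from cycle 10 on

false_positive = lock_out_index(resting, f_th=5.0, t_th_samples=1)
correct_prediction = lock_out_index(pushing, f_th=5.0, t_th_samples=1)
false_negative = lock_out_index(pushing, f_th=5.0, t_th_samples=30)
```

In this sketch a false positive on a resting operator never triggers a lock-out, because the force condition is not met even with the shortest time threshold. A false negative only delays the lock-out to the point where it would occur without any biosignal.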

Two further issues have to be addressed when integrating EEG activity related to movement preparation. (i) A subject operating the exoskeleton could, for various reasons, imagine moving his arm without executing the movement. In this case, brain activity very similar to that of movement planning that actually leads to a movement can be recorded [47]. The classifier will therefore detect movement planning, and the exoskeleton control will be adjusted according to the normalized prediction value of the class movement preparation. However, since the operator is only imagining the movement, he will not move his arm in the end and will therefore not press against the sensors integrated into the exoskeleton, which is required to unlock it. (ii) A subject might, for various reasons, move his whole body massively while in the rest position. In such a case strong motor planning is involved, which will very likely be detected and lead to a mode change. However, in such situations the exoskeleton would in most cases also lock out without any modulation by the biosignal, due to the movement-induced forces against the sensors, although a lock-out is more likely when the biosignal is integrated. Hence, to cover any case of unwanted lock-out and to ensure safety, the exoskeleton is never attached to the robot right after leaving the rest position (“full user support mode”, see Fig. 3).

2.2.4 Measuring Reduction of Effort by Movement Prediction

For the analysis presented in this paper, we simulated the detection of movement-related ERPs and translated three representative prediction values into three values for the time threshold \(T_{\mathit{th}}\). This was done to allow a systematic and comparable analysis and to mimic uncertainties in the movement prediction and their impact on the control of the exoskeleton. The time thresholds correspond directly to different classifier outputs: the minimal time threshold corresponds to perfect BP/LRP detection, while the maximal threshold represents the situation in which no BP/LRP has been detected. Therefore, we represent maximum movement prediction impact with a time threshold (\(T_{\mathit{th}}^{\mathit{Min}}\)) corresponding to one cycle of the exoskeleton’s control loop, expressed in samples (i.e., 10 ms at the control frequency of 100 Hz), and no movement prediction impact with a time threshold (\(T_{\mathit{th}}^{\mathit{Max}}\)) determined experimentally as the minimal time needed to avoid an unwanted lock-out. Further, we represent a realistic movement prediction impact with a middle time threshold (\(T_{\mathit{th}}^{\mathit{Mid}}\)) corresponding to a situation in which the classifier predicts an upcoming movement with a normalized score of 0.75 (see Sect. 3).
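One way to turn a normalized prediction score into a time threshold is sketched below. The linear interpolation between the two extreme thresholds is an assumption made for illustration; the text only fixes the end points (score 1 gives the minimal threshold, score 0 the maximal one). The function name and the example value of 41 samples for the maximal threshold are hypothetical.

```python
def time_threshold(score, t_min, t_max):
    """Interpolate linearly (assumption) between t_max (score 0, no
    prediction impact) and t_min (score 1, maximum prediction impact).
    Thresholds are given in control-loop samples; the result is rounded
    to a whole number of samples and never falls below t_min."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must lie in [0, 1]")
    if t_min > t_max:
        raise ValueError("t_min must not exceed t_max")
    return max(t_min, round(t_max - score * (t_max - t_min)))

# Hypothetical example: t_min = 1 sample (10 ms at 100 Hz), t_max = 41 samples.
t_mid = time_threshold(0.75, t_min=1, t_max=41)
```

Under these assumed values a score of 0.75 yields a threshold of 11 samples, i.e. a quarter of the way from the minimal towards the maximal threshold.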

3 Experimental Setup and Methods

In order to decrease the user’s effort required to switch from the “full user support mode” to the “transient mode”, and thereby to improve the responsiveness of the system and the user experience in interacting with the interface, we conducted a series of tests on different subjects. The general flow of the experiments, including preparation, is shown in Fig. 7. In the context of this paper we define the effort as the integral over time of the interaction force (see Eq. 6) at the wrist contact point during the switch phase. In general, the larger this integral, the larger the momentum that the user needs to transfer to the interface in order to initiate the movement. This is clearly related to the energy that the user has to spend to change the control modality, and therefore also has a significant influence on fatigue and on the perceived comfort in using the interface.

More specifically, our main goals were:

- to find the minimal \(F_{\mathit{th}}\) and \(T_{\mathit{th}}\) thresholds that avoid a lock-out caused by possible interaction forces generated at the wrist by a rotation of the upper body,
- to find the optimal \(F_{\mathit{th}}\) and \(T_{\mathit{th}}\) for different users,
- to demonstrate that using the LRP allows us to decrease these thresholds (e.g. \(T_{\mathit{th}}\)) in order to minimize the effort of the user during the lock-out phase.

Experiments were performed on five male subjects with different anthropometric characteristics. Table 2 reports for each subject: height, weight, upper-arm length, forearm length, and distance between the shoulders. To avoid additional stress on the subject due to the weight of the interface, a special support (tutor) was developed that carries the exoskeleton without sacrificing the mobility of the body (see Fig. 8(a)).

3.1 Exoskeleton Calibration Procedure

The first experiment is intended to find a proper set of parameters that avoids an unwanted transition from the “full user support mode” to the “transient mode” due to a possible movement of the upper body. More specifically, the minima for both the force threshold (\(F_{\mathit{th}}\)) and the time threshold (\(T_{\mathit{th}}\)) are identified for each subject with an iterative method.

The experimental protocol was designed to provide a unified calibration procedure that applies to different users while avoiding complicated or overly restrictive operating conditions, bearing in mind that in a real application the calibration procedure has to be performed in a reasonable amount of time. It is worth noting that the selected procedure was guided by empirical considerations, so there is still room for improvement. Nevertheless, thanks to its iterative nature, it ensures that the minimal thresholds are identified.

In the experiment the user is asked to perform a predefined rotational movement of the upper body while keeping the lower extremities fixed. To ensure the same experimental conditions in different sessions, a prerecorded movie showing the exact movement sequence and timing was displayed to the user. We chose a movie as the conditioning method, rather than other possible approaches, because human beings tend to learn new motor skills faster by imitation [32]. In the starting position, the forearm is completely extended (see Fig. 8(a)) and the shoulder is flexed forward so as to bring the third joint of the exoskeleton into an angular range of 15–20°. The user is then asked to repeat an oscillatory movement of about 90° at a regular speed (see Fig. 8(b)). This simulates the case where the user, during a resting phase, is involved in monitoring activities that require a reorientation of the upper body. At the beginning of each session, the force threshold is set to a maximum value \(F_{\mathit{th}}^{\mathit{Max}}\) and the time threshold to a minimum value \(T_{\mathit{th}}^{\mathit{Min}}\), in order to ensure that no movement can cause the system to lock out. The user is asked to perform the rotational movement 10 times. If no lock-out occurs, \(F_{\mathit{th}}\) is decreased by about 10 % and the experiment is repeated. This goes on until the movement causes a lock-out. At this point, the force threshold is kept constant while the time threshold is increased until the perturbation movement can no longer cause a lock-out. The values found in this way are referred to in the rest of the paper as \(F_{\mathit{th}}^{\mathit{Min}}\) and \(T_{\mathit{th}}^{\mathit{Max}}\), respectively.
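The two phases of this iterative procedure can be sketched as follows. The callback `locks_out` is hypothetical: it stands for one block of 10 rotational movements performed with the given thresholds and reports whether a lock-out occurred. The step sizes and the safety limits are assumptions added so that the sketch always terminates.

```python
def calibrate(locks_out, f_max, t_min, f_step=0.10, t_step=1,
              f_floor=1e-6, t_limit=1000):
    """Return (f_th_min, t_th_max) found by the two-phase search.
    locks_out(f_th, t_th) -> bool is a hypothetical callback that runs
    one block of perturbation movements and reports a lock-out."""
    f_th, t_th = f_max, t_min
    # Phase 1: lower the force threshold by about 10 % per block until
    # the perturbation movement causes a lock-out.
    while not locks_out(f_th, t_th):
        f_th *= (1.0 - f_step)
        if f_th < f_floor:
            raise RuntimeError("no lock-out observed for any force threshold")
    # Phase 2: keep the force threshold and raise the time threshold
    # until the perturbation movement no longer causes a lock-out.
    while locks_out(f_th, t_th):
        t_th += t_step
        if t_th > t_limit:
            raise RuntimeError("lock-out persists up to the time limit")
    return f_th, t_th

def simulated_user(f_th, t_th):
    """Stand-in for a real subject: the body rotation is assumed to
    produce a force of 4.0 (arbitrary units) for 12 control cycles."""
    return f_th < 4.0 and t_th <= 12

f_th_min, t_th_max = calibrate(simulated_user, f_max=10.0, t_min=1)
```

With the simulated user, the search stops at the first force threshold below 4.0 and at a time threshold of 13 samples, one cycle longer than the assumed perturbation.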

3.2 Lock-out Experiment

In the second experiment, the user is first asked to bring the arm into a starting configuration (arm parallel to the body) and to hold it until the exoskeleton enters the “full user support mode” due to a decrease in the activity level of the device. Subsequently, a signal is presented to the subject, prompting him to initiate an extension movement of about 90°. During the movement, the interaction force between the exoskeleton and the user’s wrist is measured via a 6-axis force/torque sensor (ATI Nano25). The subject is asked to perform 30 lock-out trials. For each trial, the time threshold is changed randomly in the background between three different possible values:

with

In this way, the user is not aware of the policy adopted to regulate the lock-out mechanism. This is particularly important to prevent a priori movement preparation from affecting the amount of force delivered by the user to the exoskeleton. Since we are interested in the effort required to pass from the lock-in to the lock-out state, we integrated the force according to Eq. 6.
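A randomized trial order of this kind can be generated as in the following sketch. It assumes a balanced design with 10 trials per threshold class, in line with the averages over sets of 10 trials reported in the results; the function name and the class labels are hypothetical.

```python
import random

def trial_schedule(conditions=("Min", "Mid", "Max"), repeats=10, seed=None):
    """Return a shuffled list in which every threshold class appears
    exactly `repeats` times (balanced design, an assumption)."""
    schedule = [c for c in conditions for _ in range(repeats)]
    random.Random(seed).shuffle(schedule)
    return schedule

schedule = trial_schedule(seed=0)  # 30 trials in random order
```

Fixing the seed makes a schedule reproducible for later analysis, while the subject still cannot anticipate which threshold applies to the next trial.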

In Eq. 6, \(T_0\) is the time at which the force starts to rise due to the limb movement, and \(T_{\mathit{Lout}}\) is the time at which the transient that brings the system back to the lock-out state ends. To demonstrate the advantages of integrating the movement prediction into the control of the device, it is necessary to verify inequality 7, where \(I_{\mathit{Min}}\), \(I_{\mathit{Mid}}\), and \(I_{\mathit{Max}}\) correspond to \(T_{\mathit{th}}^{\mathit{Min}}\), \(T_{\mathit{th}}^{\mathit{Mid}}\), and \(T_{\mathit{th}}^{\mathit{Max}}\), respectively.
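Written out from the definitions given in this section, the effort measure and the ordering to be verified plausibly take the following form. This is a sketch under assumptions: the symbol \(F(t)\) is introduced here for the interaction force measured at the wrist, and taking its magnitude is an assumption.

\[
I = \int_{T_0}^{T_{\mathit{Lout}}} \lVert F(t) \rVert \, dt,
\qquad
I_{\mathit{Min}} < I_{\mathit{Mid}} < I_{\mathit{Max}}
\]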

Having verified this, we can state that a decrease in the threshold \(T_{\mathit{th}}\), due to a correct movement prediction, also brings a decrease in the corresponding \(I\) and therefore a reduction of the user’s effort needed to change the control modality.
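From sampled force data, the force integral can be approximated numerically. The sketch below assumes uniformly sampled force magnitudes and uses the trapezoidal rule; the function name, the sampling interval and the sample values are hypothetical and serve only as illustration.

```python
def effort_integral(forces, dt, i_start, i_end):
    """Approximate the integral of the force over the samples
    i_start..i_end (inclusive) with the trapezoidal rule.
    forces: force magnitudes sampled every dt seconds."""
    segment = forces[i_start:i_end + 1]
    return dt * sum((a + b) / 2.0 for a, b in zip(segment, segment[1:]))

# Hypothetical trace sampled at 100 Hz: the same push, held for a
# short, a medium and a long time before the lock-out is released.
dt = 0.01
i_min = effort_integral([6.0] * 2, dt, 0, 1)
i_mid = effort_integral([6.0] * 12, dt, 0, 11)
i_max = effort_integral([6.0] * 42, dt, 0, 41)
ordering_holds = i_min < i_mid < i_max
```

For a constant push, the integral grows in proportion to the time the force has to be maintained, which is why shorter time thresholds are expected to yield smaller values.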

4 Experimental Results

As introduced in Sect. 3.1, the first set of experiments serves to calibrate the parameters of the lock-in/lock-out mechanism with respect to robustness against occurring noise forces. The resulting sets of optimal thresholds are summarized in Table 3, where each column represents one of the five subjects. Figure 9(a) reports an exemplary trajectory of the wrist movement recorded during the experiment, together with the corresponding velocity (Fig. 9(b)), projected onto the transversal plane. Note that the trend is quite regular, indicating good repeatability in performing the experiment.

In total the calibration procedure required an average of 63 movements for each subject. Each movement lasted on average 2.6 seconds.

Results of the lock-out experiment for Subject-5 are presented in Fig. 10. The upper graph reports the normalized values (0 to 1) of the chosen \(T_{\mathit{th}}\) threshold; the lower one shows the integral of the force calculated over the interval \([T_0, T_{\mathit{Lout}}]\). Each line represents one instance of the lock-out experiment; in total, 30 values are reported. The three additional horizontal lines indicate the averages over each set of 10 trials that share the same time threshold. Note that there is a clear distinction between the three cases. This confirms that the time threshold has a strong impact on the interaction force and therefore on the effort the user needs to apply to pass from one control modality to the other.

As a further comparison, Fig. 11 reports the experimental data for Subject-3. The three lines representing the force integral averages are again distinct; however, this time the distances between them appear reduced. Comparing the two subjects, it can also be observed that the averages for the \(T_{\mathit{th}}^{\mathit{Mid}}\) class are not centered between those for \(T_{\mathit{th}}^{\mathit{Min}}\) and \(T_{\mathit{th}}^{\mathit{Max}}\), but are shifted toward the bottom. This is generally confirmed by the data of the other three subjects, indicating a non-linear dependence between the threshold value and the force integral (see Fig. 16). Figure 12 shows the wrist trajectory generated by the user during the lock-out experiment.

Tables 4 and 5 report in detail the results of this experiment for all subjects. The average of the force integral is given for each threshold class together with other descriptive measures. On the one hand, the descriptive measures in Table 4 are summarized in Fig. 13, which depicts the average of the force integral calculated by averaging the means of 5 subjects across 10 measured movements for each time threshold, to show the subject-specific differences (e.g. the high standard deviation). On the other hand, the average of the force integral is calculated by averaging the means of 10 measured movements across 5 subjects, to show the difference in values between threshold classes (Table 5 and Fig. 14).

Since the three different classes of time threshold were repeatedly measured within each subject, the data were analyzed by repeated measures ANOVA with time threshold (3 levels: \(T_{\mathit{th}}^{\mathit{Min}}\), \(T_{\mathit{th}}^{\mathit{Mid}}\), \(T_{\mathit{th}}^{\mathit{Max}}\)) and subject (5 levels: 5 subjects) as within-subjects factors. For pairwise comparisons, the Bonferroni correction was applied.
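The Bonferroni correction for the three pairwise comparisons between threshold classes can be sketched as follows. The p-values in the example are purely illustrative and are not taken from the study; the function name is hypothetical.

```python
def bonferroni(p_values):
    """Bonferroni-adjusted p-values: each raw p-value is multiplied by
    the number of comparisons and capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]

# Illustrative raw p-values for Max vs. Min, Max vs. Mid, Mid vs. Min.
adjusted = bonferroni([0.0001, 0.0002, 0.4])
```

A comparison is then called significant only if its adjusted p-value stays below the chosen significance level, which keeps the family-wise error rate of the three tests at or below that level.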

As expected (see Fig. 14), the force integral was significantly affected by the time threshold [main effect of time threshold: F(2,18)=280.13, p<0.001]. As shown in Fig. 15, the force integrals associated with both the middle and the lowest time threshold differed significantly from the force integral associated with the highest time threshold [\(T_{\mathit{th}}^{\mathit{Max}}\) vs. \(T_{\mathit{th}}^{\mathit{Min}}\): mean difference = 12.57, standard error = 0.63, p<0.001; \(T_{\mathit{th}}^{\mathit{Max}}\) vs. \(T_{\mathit{th}}^{\mathit{Mid}}\): mean difference = 7.39, standard error = 0.49, p<0.001]. There was also a difference between the measured force integrals associated with the middle and the lowest time threshold [\(T_{\mathit{th}}^{\mathit{Mid}}\) vs. \(T_{\mathit{th}}^{\mathit{Min}}\): mean difference = 5.17, standard error = 0.45, p<0.001].

Further, we found that the threshold values have a different impact on the different subjects [main effect of subject: F(4,36)=34.37, p<0.001]. The measured differences in force integral among the subjects for each threshold value have several causes. First, for a fixed limb trajectory, the interaction force depends strongly on the dynamics of the combined arm-exoskeleton system. This in turn depends on the physical properties of the limb (e.g. mass, geometry) as well as on the way the exoskeleton is worn by the user. Indeed, the contact points between the limb and the interface may change from user to user, affecting the force distribution. Furthermore, the force applied by the subject, together with the level of muscle coactivation, may also influence the amount of energy exchanged during the movement.

Although the threshold values were different among the subjects, all subjects showed the same pattern regarding the effect of time threshold on the force integral [interaction between time threshold and subject: F(8,72)=27.88, p<0.02]. This is shown in Fig. 16, which illustrates the effect of the time threshold for each subject.

Our results indicate the advantages of using the movement prediction to modulate the transition from “full user support mode” to “transient mode”.

These advantages were shown for all subjects. As mentioned earlier, the threshold value itself is subject-specific (i.e. different threshold values are measured depending on the subject). However, all subjects showed the same pattern (i.e. the effect of the time threshold on the force integral was observed for all subjects). These facts suggest that the exoskeleton and its modulation by movement prediction work stably for individual subjects. Further, as can be seen in Fig. 15, the reduction of the time threshold does not seem to modulate the force integral linearly, meaning that a reduction of the time threshold might have a disproportionately large effect on the force integral. However, further investigations with more fine-grained levels of the time threshold have to be conducted to determine which range of the time threshold modulation is affected most. This would be of particular interest, since such fine-grained modulation of the time threshold would occur when using real LRP detections.

5 Conclusions and Future Work

The presented work aims at evaluating and improving the interaction experience between a user and a multi-modal interface intended for teleoperation. In more detail, the studied system is composed of a 9 DOF arm exoskeleton integrated with a brain-reading interface. A series of experiments conducted on five subjects was meant to find the best set of control parameters that guarantees minimal effort for the user to pass from a resting to an operative modality. The results demonstrate that using the movement prediction has the potential to improve the comfort of the user and to increase the responsiveness and safety of the overall interface.

Our results show that the users of our system used similar forces to achieve the transition from lock-in to lock-out mode in each experimental condition. This implies that a common force and time threshold may be sufficient for all subjects. However, the fit of the exoskeleton as well as its handling can differ between sessions, even when the same user is teleoperating. Such differences may then influence the perceived effect of the movement prediction, because the same force and time thresholds lead to a different percept, e.g. due to training effects in the handling of the exoskeleton. If such effects are a concern, a short calibration session using the setup presented in this study can maintain the finely calibrated level of interaction.

The integrated biosignal comes from the user’s brain activity, which is measured via surface EEG and analyzed by the brain-reading interface, allowing certain cognitive states to be predicted. This prediction is based on a continuous classification of single-trial EEG windows using time-optimized signal processing and machine learning techniques. However, the predictions are not used to control the exoskeleton directly, as would be the case in an active brain-computer interface (BCI); instead, the knowledge gained about the cognitive state of the operator is used to improve its control. With this architecture, we improve the whole system while avoiding malfunction in case of misclassifications. Such integration of the biosignal is meant to enhance the comfort of the subject and thereby the man-machine interaction.

The approach presented here requires at most one short calibration per session, i.e., each time an operator puts on the exoskeleton anew. Afterwards, changes can only be introduced during the session, which is highly unlikely since the exoskeleton keeps its fit and the EEG electrodes stay in position. We assume that training effects regarding the use of the lock-out mechanism are minimal within one session. However, further experiments will have to verify this assumption. We will have to investigate how easily the determined optimal sets of parameters can be transferred between sessions and whether it would be possible to decide on some standard parameter sets depending on physiological measures of the subject, e.g. arm length, to partly avoid calibration. Alternatively, further developments can focus on automating the optimization phase described in Sect. 3.1. In a possible scenario, the user may be asked to perform a certain task in which his/her arm is fully sustained by the exoskeleton while the system performs the parameter adaptation. A more precise and faster calibration can also be achieved by using kinematic measurements (e.g. body velocity and acceleration) to directly modulate the step size of the parameter adaptation. This would have the advantage of better tailoring the control system to the user’s biophysical characteristics.

However, thanks to the integration of movement prediction, even a suboptimal parameter set could be used, since the movement prediction reduces the effort significantly, as shown in this study. Experiments that integrate movement prediction online and measure its effect on the exoskeleton control, as done here in simulation with three fixed values for a normalized movement preparation score, are planned in order to confirm our results for online modulation of the exoskeleton control. Besides the optimization of the control parameters of the exoskeleton, the movement prediction requires training for each session as well. As in any BCI application, classifiers are not easily transferable between sessions, but training time and required training data can be reduced by different methods [2, 12, 28, 31]. First results on reducing the training time and the amount of training data required for classifying ERP signals are promising [33], but have to be improved further to allow easy application of complex support systems for rehabilitation or in daily life.

Notes

1. Recomputed from pseudo-online results of 2 subjects presented in [19].

2. Trials from −1000 to −850 ms are considered as no movement preparation and trials from −300 to −150 ms are considered as movement preparation.

References

Abend W, Bizzi E, Morasso P (1982) Human arm trajectory formation. Brain 105(PT.2):331–348

Alamgir M, Grosse-Wentrup M, Altun Y (2010) Multi-task learning for Brain-Computer Interfaces. In: Proceedings of the 13th international conference on artificial intelligence and statistics. JMLR: workshop and conference proceedings, vol 9

Apolloni B, Malchiodi D, Mesiano C (2004) An attention monitoring system for high demanding operational tasks. In: Computational intelligence for homeland security and personal safety. CIHSPS 2004. Proceedings of the 2004 IEEE international conference, pp 23–29

Bai O, Rathi V, Lin P, Huang D, Battapady H, Fei DY, Schneider L, Houdayer E, Chen X, Hallett M (2011) Prediction of human voluntary movement before it occurs. Clin Neurophysiol 122(2):364–372. doi:10.1016/j.clinph.2010.07.010

Bergamasco M, Allotta B, Bosio L, Ferretti L, Parrini G, Prisco G, Salsedo F, Sartini G (1994) An arm exoskeleton system for teleoperation and virtual environments applications. In: Robotics and automation, proceedings, 1994 IEEE international conference, vol 2, pp 1449–1454. doi:10.1109/ROBOT.1994.351286

Birbaumer N (2006) Breaking the silence: brain–computer interfaces (BCI) for communication and motor control. Psychophysiology 43(6):517–532

Blankertz B, Curio G, Müller K (2002) Classifying single trial EEG: towards brain computer interfacing. In: Advances in neural information processing systems 14: proceedings of the 2001 conference, pp 157–164. MIT Press, Cambridge

Blankertz B, Dornhege G, Schäfer C, Krepki R, Kohlmorgen J, Müller K, Kunzmann V, Losch F, Curio G (2003) Boosting bit rates and error detection for the classification of fast-paced motor commands based on single-trial EEG analysis. IEEE Trans Neural Syst Rehabil Eng 11(2):127

Blankertz B, Dornhege G, Lemm S, Krauledat M, Curio G, Müller KR (2006) The Berlin brain–computer interface: machine learning based detection of user specific brain states. J Univers Comput Sci 12(6):581–607

Borghesan G, Melchiorri C (2008) Model and modeless friction compensation: application to a defective haptic interface. In: Lecture notes in computer science, vol 5024. Springer, Berlin, pp 94–103

Chang CC, Lin CJ (2011) LIBSVM: A library for support vector machines. ACM Trans Intell Syst Technol 2:27:1–27:27. Software available at http://www.csie.ntu.edu.tw/~cjlin/libsvm

Fazli S, Popescu F, Danóczy M, Blankertz B, Müller K, Grozea C (2009) Subject-independent mental state classification in single trials. Neural Netw 22(9):1305–1312. doi:10.1016/j.neunet.2009.06.003

Flash T, Hogan N (1985) The coordination of arm movements: an experimentally confirmed mathematical model. J Neurosci 5(7):1688–1703

Fleischer C, Wege A, Kondak K, Hommel G (2006) Application of EMG signals for controlling exoskeleton robots. Biomed Tech (Berl, Z) 51(5–6):314–319

Folgheraiter M, Bongardt B, Albiez J, Kirchner F (2008) A bio-inspired haptic interface for tele-robotics applications. In: IEEE international conference on robotics and biomimetics (ROBIO-2008), Bangkok, Thailand

Folgheraiter M, Bongardt B, Schmidt S, de Gea Fernández J, Albiez J, Kirchner F (2009) Design of an arm exoskeleton using an hybrid motion-capture and model-based technique. In: IEEE international conference on robotics and automation (ICRA-2009), May 12–17, 2009, Kobe, Japan

Folgheraiter M, de Gea Fernández J, Bongardt B, Albiez J, Kirchner F (2009) Bio-inspired control of an arm exoskeleton joint with active-compliant actuation system. Appl Bionics Biomech 6(2):193–204

Folgheraiter M, Jordan M, Benitez LMV, Grimminger F, Schmidt S, Albiez J, Kirchner F (2011) Development of a low-pressure fluidic servo-valve for wearable haptic interfaces and lightweight robotic systems, 1st edn. In: Lecture notes in electrical engineering, vol 89. Springer, Berlin. ISBN 978-3-642-19538-9

Folgheraiter M, Kirchner EA, Seeland A, Kim SK, Jordan M, Wöhrle H, Bongardt B, Schmidt S, Albiez J, Kirchner F (2011) A multimodal brain-arm interface for operation of complex robotic systems and upper limb motor recovery. In: Proc 4th int conf biomedical electronics and devices, Rome

Hayashi T, Kawamoto H, Sankai Y (2005) Control method of robot suit HAL working as operator’s muscle using biological and dynamical information. In: Intelligent robots and systems (IROS 2005). 2005 IEEE/RSJ international conference, pp 3063–3068. doi:10.1109/IROS.2005.1545505

Herrmann G, Melhuish C (2010) Towards safety in human robot interaction. Int J Soc Robot 2(3):217–219

Hesse S, Schmidt H, Werner C, Bardeleben A (2003) Upper and lower extremity robotic devices for rehabilitation and for studying motor control. Curr Opin Neurol 16(6):705–710

Homan RW, Herman J, Purdy P (1987) Cerebral location of international 10-20 system electrode placement. Electroencephalogr Clin Neurophysiol 66(4):376–382

Ibáñez J, Serrano J, del Castillo M, Barrios L, Gallego J, Rocon E (2011) An EEG-based design for the online detection of movement intention. Adv Comput Intell 370–377

Kirchner EA, Metzen JH, Duchrow T, Kim SK, Kirchner F (2009) Assisting telemanipulation operators via real-time Brain Reading. In: Lemgoer Schriftenreihe zur industriellen Informationstechnik, Paderborn

Kirchner EA, Wöhrle H, Bergatt C, Kim SK, Metzen JH, Kirchner F (2010) Towards operator monitoring via brain reading—an EEG-based approach for space applications. In: Proceedings of the 10th international symposium on artificial intelligence, robotics and automation in space

Kornhuber H, Deecke L (1965) Hirnpotentialänderungen bei Willkürbewegungen und passiven Bewegungen des Menschen: Bereitschaftspotential und reafferente Potentiale. Pflügers Archiv gesamte Physiol Menschen Tiere 284:1–17

Krauledat M, Tangermann M, Blankertz B, Müller K (2008) Towards zero training for Brain-Computer interfacing. PLoS ONE 3(8):e2967. doi:10.1371/journal.pone.0002967

Labonte D, Boissy P, Michaud F (2010) Comparative analysis of 3-d robot teleoperation interfaces with novice users. IEEE Trans Syst Man Cybern, Part B, Cybern 40(5):1331–1342. doi:10.1109/TSMCB.2009.2038357

Li Y, Gao X, Liu H, Gao S (2004) Classification of single-trial electroencephalogram during finger movement. IEEE Trans Biomed Eng 51(6):1019–1025

Li Y, Guan C, Li H, Chin Z (2008) A self-training semi-supervised SVM algorithm and its application in an EEG-based brain computer interface speller system. Pattern Recognit Lett 29(9):1285–1294. doi:10.1016/j.patrec.2008.01.030

Mataric MJ (1994) Learning motor skills by imitation. In: AAAI spring symposium toward physical interaction and manipulation. Stanford University

Metzen JH, Kim SK, Kirchner EA (2011) Minimizing calibration time for brain reading. In: Mester R, Felsberg M (eds) Pattern recognition. Lecture notes in computer science, vol 6835. Springer, Berlin/Heidelberg, pp 366–375

Millán JR, Galán F, Vanhooydonck D, Lew E, Philips J, Nuttin M (2009) Asynchronous non-invasive brain-actuated control of an intelligent wheelchair. In: Proc 31st annu conf IEEE eng med biol soc, Minneapolis, MN, pp 3361–3364

Morash V, Bai O, Furlani S, Lin P, Hallett M (2008) Classifying EEG signals preceding right hand, left hand, tongue, and right foot movements and motor imageries. Clin Neurophysiol 119(11):2570–2578. doi:10.1016/j.clinph.2008.08.013

Ott R, Gutiérrez M, Thalmann D, Vexo F (2005) Improving user comfort in haptic virtual environments trough gravity compensation. In: Proceedings of the first joint eurohaptics conference and symposium on haptic interfaces for virtual environment and teleoperator systems. IEEE Computer Society Press, Los Alamitos, pp 401–409

Perry J, Rosen J, Burns S (2007) Upper-limb powered exoskeleton design. IEEE/ASME Trans Mechatron 12(4):408–417. doi:10.1109/TMECH.2007.901934

Pfurtscheller G, Müller-Putz G, Scherer R, Neuper C (2008) Rehabilitation with brain–computer interface systems. Computer 41(10):58–65

Rao SM, Binder JR, Hammeke TA, Bandettini PA, Bobholz JA, Frost JA, Myklebust BM, Jacobson RD, Hyde JS (1995) Somatotopic mapping of the human primary motor cortex with functional magnetic resonance imaging. Neurology 45(5):919–924

Rao S, Carloni R, Stramigioli S (2010) Stiffness and position control of a prosthetic wrist by means of an EMG interface. In: Engineering in medicine and biology society (EMBC), 2010 annual international conference of the IEEE, pp 495–498

Repperger D, Remis S, Merrill G (1990) Performance measures of teleoperation using an exoskeleton device. In: Robotics and automation, proceedings, 1990 IEEE international conference on, vol 1, pp 552–557. doi:10.1109/ROBOT.1990.126038

Riley JM, Kaber DB, Draper JV (2004) Situation awareness and attention allocation measures for quantifying telepresence experiences in teleoperation. Hum Factors Ergon Manuf Service Ind 14(1):51–67. doi:10.1002/hfm.10050

Rocon E, Ruiz A, Pons J, Belda-Lois J, Sanchez-Lacuesta J (2005) Rehabilitation robotics: a wearable exo-skeleton for tremor assessment and suppression. In: Robotics and automation, ICRA 2005. Proceedings of the 2005 IEEE international conference on, pp 2271–2276. doi:10.1109/ROBOT.2005.1570451

Ronsse R, Vitiello N, Lenzi T, van den Kieboom J, Carrozza M, Ijspeert A (2011) Human–robot synchrony: flexible assistance using adaptive oscillators. IEEE Trans Biomed Eng 58(4):1001–1012

Rosen J, Brand M, Fuchs M, Arcan M (2001) A myosignal-based powered exoskeleton system. IEEE Trans Syst Man Cybern, Part A, Syst Hum 31(3):210–222. doi:10.1109/3468.925661

Rosen J, Perry J, Manning N, Burns S, Hannaford B (2005) The human arm kinematics and dynamics during daily activities—toward a 7 DOF upper limb powered exoskeleton. In: Advanced robotics. ICAR’05, proceedings, 12th international conference on, pp 532–539. doi:10.1109/ICAR.2005.1507460

Santucci E, Balconi E (2009) The multicomponential nature of movement-related cortical potentials: functional generators and psychological factors. Neuropsychol Trends 5:59–84

Schiele A (2009) Ergonomics of exoskeletons: Subjective performance metrics. In: Intelligent robots and systems, IROS 2009. IEEE/RSJ international conference on, pp 480–485. doi:10.1109/IROS.2009.5354029

Schiele A, van der Helm F (2006) Kinematic design to improve ergonomics in human machine interaction. IEEE Trans Neural Syst Rehabil Eng 14(4):456–469

Šťastný J, Sovka P (2007) High-resolution movement EEG classification. Comput Intell Neurosci 54925

Tsagarakis N, Jafari A, Caldwell D (2010) A novel variable stiffness actuator: minimizing the energy requirements for the stiffness regulation. In: Engineering in medicine and biology society (EMBC). 2010 annual international conference of the IEEE, pp 1275–1278. doi:10.1109/IEMBS.2010.5626413

Vallery H, Veneman J, van Asseldonk E, Ekkelenkamp R, Buss M, van der Kooij H (2008) Compliant actuation of rehabilitation robots. IEEE Robot Autom Mag 15(3):60–69. doi:10.1109/MRA.2008.927689

Van Den Broek E, Lisý V, Westerink J, Schut M, Tuinenbreijer K (2009) Biosignals as an advanced man-machine interface. In: Proceedings of the second international conference on health informatics, HEALTHINF 2009, Porto, Portugal, pp 15–24

Wang B, Wan F (2009) Classification of single-trial EEG based on support vector clustering during finger movement. Adv Neural Netw, ISNN 2009, pp 354–363

Wolpaw JR, Birbaumer N, McFarland DJ, Pfurtscheller G, Vaughan TM (2002) Brain-computer interfaces for communication and control. Clin Neurophysiol 113(6):767–791

Zander TO, Kothe C (2011) Towards passive brain–computer interfaces: applying brain–computer interface technology to human–machine systems in general. J Neural Eng 8(2):025005

Acknowledgements

This work was supported by the German Bundesministerium für Bildung und Forschung (BMBF, grant FKZ 01IW10001) and the German Bundesministerium für Wirtschaft und Technologie (BMWi, grants FKZ 50 RA 1012 and FKZ 50 RA 1011). We would also like to thank everyone who took part in the experimental phase.

Cite this article

Folgheraiter, M., Jordan, M., Straube, S. et al. Measuring the Improvement of the Interaction Comfort of a Wearable Exoskeleton. Int J of Soc Robotics 4, 285–302 (2012). https://doi.org/10.1007/s12369-012-0147-x

DOI: https://doi.org/10.1007/s12369-012-0147-x