Abstract

Background

Reporting standards promote clarity and consistency of stress myocardial perfusion imaging (MPI) reports, but do not require an assessment of post-test risk. Natural Language Processing (NLP) tools could potentially help estimate this risk, yet it is unknown whether reports contain adequate descriptive data to use NLP.

Methods

Among VA patients who underwent stress MPI and coronary angiography between January 1, 2009 and December 31, 2011, 99 stress test reports were randomly selected for analysis. Two reviewers independently categorized each report for the presence of critical data elements essential to describing post-test ischemic risk.

Results

Few stress MPI reports provided a formal assessment of post-test risk within the impression section (3%) or the entire document (4%). In most cases, risk was determinable by combining critical data elements (74% impression, 98% whole). If ischemic risk was not determinable (25% impression, 2% whole), inadequate description of systolic function (9% impression, 1% whole) and inadequate description of ischemia (5% impression, 1% whole) were most commonly implicated.

Conclusions

Post-test ischemic risk was determinable but rarely reported in this sample of stress MPI reports. This supports the potential use of NLP to help clarify risk. Further study of NLP in this context is needed.

Introduction

Approximately 4 million stress tests are performed annually in the United States, nearly 90% of which are done with cardiac imaging, such as single photon emission computed tomography (SPECT) or other nuclear myocardial perfusion imaging (MPI) methods.1 Based on stress test results, clinicians make an assessment of post-test ischemic risk that is critical for directing downstream care. For example, whether stress MPI findings are low-, moderate-, or high-risk determines the appropriateness of coronary angiography and revascularization.2,3

Clinicians’ assessment of risk starts with the nuclear cardiology report. Many of the required reporting elements for stress MPI are designed to help clinicians determine post-test risk.4,5 Unfortunately, recent evidence from a large accrediting body found that between 20% and 50% of reports in accredited facilities do not adhere to reporting standards.6 Moreover, one study showed that referring providers frequently underestimate the extent of ischemia even when given a structured description of ischemic lesions.7 Therefore, while efforts to accelerate adoption of stress MPI reporting standards are likely to improve the overall clarity and consistency of reports, they may not ensure that the clinical significance of results is understood by referring providers.

Data analytic tools such as natural language processing (NLP) could be used to augment providers’ understanding of stress MPI results. NLP has previously been used in radiology and other fields to process free-text medical reports in order to facilitate billing, assess the quality of reporting, and, as it relates to the current study, underscore pertinent or high-risk findings.8 Using this approach, an NLP tool could conceivably be used to interpret stress MPI reports, extracting unstructured information to provide structured estimates of post-test ischemic risk.

An NLP approach to estimate risk is only viable, however, if the underlying reports include all of the critical data elements required for accurate risk estimation. Accordingly, in our study, we used a national sample of stress MPI reports from the Veterans Health Administration (VA) to assess whether these reports contained all the data elements necessary for determining the post-test risk used to guide appropriateness of coronary revascularization.2 For comparison, we also sought to quantify the percentage of reports that explicitly commented on the clinical significance of the results.

Methods

Setting, Patient Population, and Data Source

Figure 1 describes how we identified and acquired our sample of stress MPI reports. Given our focus on ischemic risk estimation, we sought to enrich our sample corpus with reports from patients likely to have abnormal findings. We therefore limited our initial document search to VA patients who underwent cardiac catheterization as captured by the VA clinical assessment reporting and tracking program (CART).9 In these patients, we searched the VA corporate data warehouse (CDW) for any stress test report between January 1, 2009 and December 31, 2011 based on current procedural terminology (CPT) and international classification of diseases ninth revision (ICD-9) codes for myocardial nuclear imaging (78451–78454, 78460, 78461, 78464, 78465, 78472, 78473, 78481, 78483, 78491, 78492, 89.44). Reports that did not contain VA station number (facility identifier), clinical history, report text, or impression text were excluded.

Figure 1. Acquisition of a sample of stress myocardial perfusion imaging reports within the VA corporate data warehouse. This figure describes how the corpus of stress MPI reports was acquired. Given the focus on post-test risk estimation, the authors limited the initial search to only VA patients who underwent cardiac catheterization as captured by the VA CART Program (120,580 patients). The records of these patients were then searched using the VA Corporate Data Warehouse (CDW) for any stress test report between January 1, 2009 and December 31, 2011 based on CPT and ICD-9 codes for myocardial nuclear imaging (45,321 reports). Reports that did not contain station number, clinical history, report text, impression text, or relevant text strings (e.g., “Spect,” “Myoview,” etc.) were excluded, leaving 22,981 reports. From this larger corpus, 4000 reports were randomly selected using proportional stratification based on VA station. To eventually allow iterative improvement of an NLP tool, these 4000 documents were partitioned into 20 groups of 200 documents. One of those groups was randomly selected for a pilot-scale analysis. From this group, 113 nuclear imaging reports were randomly selected, of which 99 were stress nuclear MPI studies.

To confine the corpus to stress MPI reports only, we included only documents containing at least one of the following text strings: “spect,” “pet,” “technetium,” “myoview,” “sestamibi,” “tc-99,” “tetrofosmin,” “thallium,” “radionuclide,” “isotope,” “radiopharm,” or “radioisotop,” or the space-separated strings “mci ” or “ tc ”, anywhere in the name of test, report text, or impression text fields.
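The text-string inclusion rule above can be illustrated with a minimal sketch. The function name `looks_like_stress_mpi` and the field arguments are hypothetical, and case-insensitive matching is an assumption; the study's actual query against the CDW is not described at this level of detail.

```python
# Substrings listed in the Methods; matching is assumed to be case-insensitive.
SUBSTRINGS = [
    "spect", "pet", "technetium", "myoview", "sestamibi", "tc-99",
    "tetrofosmin", "thallium", "radionuclide", "isotope",
    "radiopharm", "radioisotop",
]
# Space-separated strings from the Methods: "mci " (trailing space) and " tc ".
SPACED_STRINGS = ["mci ", " tc "]


def looks_like_stress_mpi(test_name, report_text, impression_text):
    """Return True if any listed string occurs in any of the three fields."""
    for field in (test_name, report_text, impression_text):
        lowered = (field or "").lower()
        if any(s in lowered for s in SUBSTRINGS + SPACED_STRINGS):
            return True
    return False
```

Plain substring matching of this kind is deliberately inclusive: a short string such as “pet” also occurs inside unrelated words (for example, “competent”), so a filter like this is expected to admit some documents that are not stress MPI reports and that must be removed on manual review.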

The CART database used to define our study population contained a total of 112,784 catheterization procedures between 1/1/2009 and 12/31/2011, carried out in 112,580 distinct patients. Within this date range, 45,321 of these patients underwent 45,363 cardiac stress tests. Using the preceding inclusions and exclusions to search for stress MPI reports in the VA CDW, our initial document corpus contained 22,981 reports collected from 22,981 distinct patients across 68 separate VA stations. From this larger corpus, we randomly selected 4000 reports using proportional stratification based on VA station. To eventually allow iterative improvement of an NLP tool, these 4000 documents were partitioned into 20 groups of 200 documents.
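As an illustration of the sampling step, the following sketch draws a sample with proportional stratification by station and then partitions it into equal groups. It is not the authors' code: the function names, the `(report_id, station)` input format, the largest-remainder rounding, and the fixed seeds are all assumptions made for the example.

```python
import random
from collections import defaultdict


def proportional_stratified_sample(reports, n_total, seed=0):
    """Sample n_total report ids, allocating to each station in proportion
    to its share of the corpus. `reports` is a list of (report_id, station)."""
    rng = random.Random(seed)
    by_station = defaultdict(list)
    for report_id, station in reports:
        by_station[station].append(report_id)
    total = len(reports)
    # Fractional quota per station, rounded down; the leftover slots go to
    # the stations with the largest fractional remainders.
    quotas = {s: n_total * len(ids) / total for s, ids in by_station.items()}
    alloc = {s: int(q) for s, q in quotas.items()}
    shortfall = n_total - sum(alloc.values())
    by_remainder = sorted(quotas, key=lambda s: quotas[s] - alloc[s], reverse=True)
    for s in by_remainder[:shortfall]:
        alloc[s] += 1
    sample = []
    for s, ids in by_station.items():
        sample.extend(rng.sample(ids, alloc[s]))
    return sample


def partition(items, n_groups, seed=0):
    """Shuffle and split into n_groups equal groups (any remainder is dropped)."""
    rng = random.Random(seed)
    shuffled = list(items)
    rng.shuffle(shuffled)
    size = len(shuffled) // n_groups
    return [shuffled[i * size:(i + 1) * size] for i in range(n_groups)]
```

With a corpus of 22,981 reports, `proportional_stratified_sample(reports, 4000)` followed by `partition(sample, 20)` would yield 20 groups of 200 documents, matching the structure described above.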

Before we could embark on developing an NLP tool, we needed to understand the composition and quality of the stress MPI reports captured by our search. Namely, we sought to determine whether reports consistently contained enough unstructured data for an NLP tool to estimate post-test risk. Therefore, we randomly selected one group of 200 documents for a pilot-scale analysis. From this group, 113 reports were randomly selected; 14 of these were not nuclear myocardial perfusion studies. The remaining 99 reports, from 44 different VA stations, were included in our analysis.

Assessment of Stress MPI Documentation Quality

We focused our efforts on documentation of stress MPI findings and our ability to determine post-test ischemic risk based on the description of relevant imaging findings. Critical data elements for risk estimation, listed in Table 1, were defined by collating required and recommended inputs from the 2009 guidelines for standardized reporting of nuclear MPI with clinically important elements from the 2009 appropriateness criteria for coronary revascularization.2,4 This list is consistent with recommendations from the Intersocietal Accreditation Commission for nuclear cardiology, nuclear medicine, and PET laboratories (IAC Nuclear/PET, formerly ICANL).5 In addition, these data elements represent the key ingredients for a natural language processing system to determine patients’ post-test risk from a nuclear MPI report.

Two reviewers (AL, SB) independently analyzed the 99 stress MPI reports in our sample. Reviewers examined each test report for the presence or absence of critical data elements, both within the impression alone and within the report in its entirety. The presence or absence of critical data elements was adjudicated according to prespecified rules.

Regarding the presence or absence of a perfusion abnormality, if the words “ischemia” or “infarct” were not explicitly used, the reviewers attempted to identify terms that might otherwise be used to describe these findings (e.g., “scar” to describe infarct). We called these ‘implicit’ descriptions. In terms of the characteristics of a perfusion abnormality, we looked for descriptors of size, location, and severity of the lesion. We also assessed each report for the presence or absence of summed rest (SRS), stress (SSS), and difference (SDS) scores as well as the presence or absence of transient ischemic dilation (TID). With regard to left ventricular systolic function, we accepted either a quantitative ejection fraction or qualitative descriptors such as “moderately depressed” or “normal.” Finally, we assessed the reports for the presence or absence of a formal risk assessment by the interpreting physician.

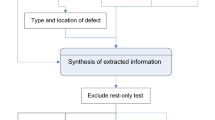

Within this framework, the reviewers then independently graded each report for its ability to confer post-test ischemic risk based on the 2009 appropriateness criteria for coronary revascularization.2 If the appropriateness of revascularization could be determined based on available report elements, we considered post-test ischemic risk to be “determinable.” Specifically, risk was considered determinable if the report described the following basic elements: the presence or absence of ischemia; description of ischemic lesion if present; description of left ventricular systolic function (Figure 2).

Figure 2. The ability to determine post-test ischemic risk based on critical data elements in a stress nuclear MPI report. This figure describes how critical data elements were used to assess whether ischemic risk was “determinable” or “not determinable.” If a formal ischemic risk assessment was not provided in the stress test report, the authors assessed the extent to which risk was “determinable.” Risk was considered “determinable” if the report described the following basic elements: the presence or absence of ischemia; description of ischemic lesion if present; description of left ventricular systolic function. The inset table provides reasons why risk was not determinable based on review of the impression section alone (n = 25) and considering the entire report (n = 2).
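The determinability rule can be written as a small decision function. This is a sketch of the rule as stated, not the reviewers' adjudication procedure: the `ReportElements` fields are hypothetical names, and collapsing "description of ischemic lesion" into a single boolean is an assumption, since the text does not specify how many of size, location, and severity were required.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ReportElements:
    ischemia_status_described: bool   # presence OR absence of ischemia is stated
    ischemia_present: Optional[bool]  # None when the status is not described
    lesion_described: bool            # ischemic lesion is characterized (assumed boolean)
    lv_function_described: bool       # quantitative EF or a qualitative descriptor


def risk_determinable(e: ReportElements) -> bool:
    """Apply the three basic elements: ischemia status, lesion description
    (only required when ischemia is present), and LV systolic function."""
    if not e.ischemia_status_described:
        return False
    if e.ischemia_present and not e.lesion_described:
        return False
    return e.lv_function_described
```

For example, a report stating "no ischemia" with a reported ejection fraction is determinable, whereas a report describing ischemia without characterizing the lesion is not.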

After initial ratings were made by each reviewer, final grades were assigned based on consensus between the reviewers. Interrater agreement was assessed by Cohen’s kappa calculations.

Results

Impression-Only Review

Results with respect to both the impression section alone and the full report are summarized in Table 2. In the review limited to the ‘impression’ section of 99 stress MPI reports, the presence or absence of ischemia was directly reported in 70 (71%) reports. In an additional 21 (21%) reports, ischemia was described in other terms, most commonly as a “reversible” defect (10 of 21). Meanwhile, the presence or absence of infarct was directly reported in 22 (22%) reports and implicitly reported in another 35 (35%) reports using terms such as “scar” (9 of 35) and “fixed” defect (10 of 35). Of the 67 cases (68%) where ischemia or infarct was reported, 65 (94%) commented on the location, 37 (57%) the severity, and 36 (55%) the size of the abnormality. An ejection fraction was reported in 79 (80%) reports. Summed stress, rest, or difference scores were provided in 6 (6%) reports, and an assessment of transient ischemic dilation was provided in 13 (13%) cases. A formal post-test ischemic risk assessment was provided in 3 (3%) reports. Among the remaining 96 reports, in which a formal statement of risk was not explicitly provided, ischemic risk was determinable in 71 (74%) cases. Of the 25 (26%) cases where risk was not determinable, inadequate description of LV systolic function (9 of 25), inadequate description of ischemia (5 of 25), or both (11 of 25) were the most common deficiencies in reporting (Figure 2).

Full Report Review

Analyzing the 99 reports in their entirety, the presence or absence of ischemia was directly reported in 72 (73%) reports, while an additional 23 cases (23%) used other terms such as a “reversible” defect (15 of 23). Meanwhile, the presence or absence of infarct was directly reported in 25 (25%) reports and implicitly reported in another 40 cases (40%), using the terms “scar” (11 of 40) and “fixed” defect (11 of 40). Of 67 cases (68%) where an ischemic or infarct lesion was described, 44 (66%) commented on the size, 49 (73%) the severity, and 67 (100%) the location of the abnormality. An ejection fraction was given in 99 (100%) studies. Summed stress/difference scores and TID were reported in 13 (13%) and 36 (36%) cases, respectively, an improvement over the impression alone. Ischemic risk was judged to be determinable in 97 (98%) cases based on critical elements in the report. Of the two cases where risk could not be determined from report elements, one case did not adequately describe LV systolic function, and the other case contained no description of an ischemic lesion. A formal post-test risk assessment was provided in 4 (4%) reports.

Interrater Agreement

There was strong agreement between the reviewers in the individual assessment of the measured elements of stress test reporting (2519 of 2574 measures; 97.9%; Cohen’s kappa 0.89). There was also strong agreement in the individual assessment of determinable risk from stress reports (189 of 198 measures; 95.5%; Cohen’s kappa 0.81).
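For readers less familiar with the statistic, Cohen's kappa corrects observed agreement for the agreement expected by chance: kappa = (p_o - p_e) / (1 - p_e), where p_o is the proportion of items on which the two raters agree and p_e is the chance agreement computed from each rater's marginal label frequencies. The sketch below is a generic implementation; the per-item ratings from this study are not available here, so only the reported raw agreement percentages are recomputed.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two equal-length sequences of categorical ratings."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: share of items given the same label by both raters.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal frequencies per label.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    labels = set(freq_a) | set(freq_b)
    p_expected = sum(freq_a[c] * freq_b[c] for c in labels) / (n * n)
    if p_expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (p_observed - p_expected) / (1 - p_expected)


# Raw agreement recomputed from the counts reported above.
print(round(100 * 2519 / 2574, 1))  # 97.9
print(round(100 * 189 / 198, 1))    # 95.5
```

Because kappa depends on the marginal frequencies as well as on raw agreement, the reported kappa values (0.89 and 0.81) cannot be reproduced from the agreement counts alone.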

Discussion

Our study is a critical first step in describing the content of stress MPI reports as it relates to the determination of post-test risk. We found that 98% of reports in a sample from the Veterans Health Administration included all of the critical data elements required for estimation of risk, but only 4% included a direct statement about this risk. These findings highlight an opportunity for NLP solutions to assist with clinical interpretation of stress test reports.

Stress myocardial perfusion imaging techniques are among the most commonly used non-invasive methods to further define risk of coronary disease in patients with an intermediate or borderline high pretest probability.10 Concise but comprehensive reporting of test findings is essential for promoting appropriate downstream treatment decisions. In particular, it is critical that referring providers be able to estimate post-test ischemic risk since this affects the appropriateness of more invasive diagnostic testing and treatment.2,3,11

Recent data suggest that ordering providers underestimate ischemia when reading stress MPI reports.7 This is troubling in that it suggests that referring providers may not be able to accurately estimate their patients’ post-test risk based on their review of stress test results. Who then can make an assessment of risk for the patient? While it is not required by reporting standards, accrediting agencies for stress MPI recommend that interpreting physicians comment on the clinical significance of perfusion results, and specifically the risk, in the “overall impression” section of the report.4 However, many “readers” are reluctant to supersede the role of the referring clinician, who knows the patient and the pretest probability, in making such a calculation. Bearing this in mind, it is not surprising that < 5% of reports in our sample contained a comment on risk.

Our results are heartening given that the basic data elements necessary for estimating post-test ischemic risk are present in nearly 100% of reports. Data mining tools, such as natural language processing, could be trained to automatically extract the relevant text from imaging reports and synthesize these data in a coherent way. Similar efforts have led to the extraction of ejection fraction data from unstructured echocardiography reports.12 Natural language processing has also been used to identify high-risk findings within radiology reports, such as those pertaining to appendicitis, thromboembolic disease, and premalignant lesions.8 In the case of stress imaging reports, NLP could be used to identify not only basic information about systolic function (e.g., ejection fraction), but also direct and indirect descriptors of ischemia and relevant modifiers such as size, location, and severity.
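To make the idea concrete, the sketch below uses regular expressions to pull an ejection fraction and direct or implicit perfusion descriptors from report text. It is a toy illustration under strong assumptions, not a validated tool and not the method of the cited studies: the patterns, the function name `extract_elements`, and the output format are hypothetical, the implicit terms are limited to those named in our Results (“reversible,” “scar,” “fixed”), and negation is deliberately not handled, so a hit indicates only that the finding is mentioned, whether as present or absent.

```python
import re

# Ejection fraction as a single value or a range, e.g. "LVEF 45%" or "EF is 55-60%".
EF_PATTERN = re.compile(
    r"\b(?:lvef|ef|ejection\s+fraction)\b\D{0,30}?(\d{1,3})"
    r"(?:\s*(?:-|to)\s*(\d{1,3}))?\s*%",
    re.IGNORECASE,
)
# Direct terms are checked before implicit ones (dicts keep insertion order).
ISCHEMIA_TERMS = {"direct": r"\bischemi[ac]\b", "implicit": r"\breversible\b"}
INFARCT_TERMS = {"direct": r"\binfarct(?:ion)?\b", "implicit": r"\b(?:scar|fixed)\b"}


def extract_elements(text):
    """Return a dict of the elements mentioned in `text` (no negation handling)."""
    found = {}
    match = EF_PATTERN.search(text)
    if match:
        low = int(match.group(1))
        high = int(match.group(2)) if match.group(2) else low
        found["ef_percent"] = (low, high)
    for name, terms in (("ischemia", ISCHEMIA_TERMS), ("infarct", INFARCT_TERMS)):
        for kind, pattern in terms.items():
            if re.search(pattern, text, re.IGNORECASE):
                found[name] = kind
                break
    return found
```

Applied to an impression such as "Moderate reversible defect in the anterior wall. Fixed inferior defect. LVEF 45%.", this returns an ejection fraction of 45% and flags both ischemia and infarct as implicitly described. A usable system would additionally need negation detection, section awareness, and extraction of size, location, and severity modifiers.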

If an NLP tool could be designed to extract these elements from stress test reports, one could even imagine automating a basic assessment of post-test ischemic risk. We imagine this tool would not only assist both referring and interpreting physicians in guiding appropriate clinical care for patients after stress MPI testing, but also inform research and quality efforts. Referrals for cardiac catheterization after stress testing could be routinely assessed for appropriateness. Disparities between expected and measured risk could be studied. One could even study how the calculation of post-test risk itself affects outcomes: do patients with high-risk stress MPI findings have better outcomes because of their greater likelihood to receive PCI?

Unfortunately, our data also suggest that designing an NLP tool for unstructured stress imaging reports may be challenging, since roughly 1 in 4 reports did not disclose critical report elements in the impression section. Analyzing an entire report with NLP requires complicated rules to relate separate text clauses spread throughout the document, whereas focusing on a single section can simplify these functions. NLP tools for imaging reports are therefore somewhat limited by the complexity of syntax and how the language within the report is structured.

This is why structured reporting is so essential to overall improvement of report quality. A structured report is written using standardized definitions and content in a predictable and clinically relevant format, as opposed to more free-form reporting. While structured reporting constrains information entry, which can reduce efficiency and overall satisfaction among users of these documents, recipients of imaging reports have been shown to prefer structured reports to free text, presumably because such standardization promotes consistency and clarity.13-15 Cardiac imaging societies, as well as other imaging subspecialty societies, have endorsed structured reporting as a means of improving the quality of reports.16 With appropriate input and buy-in from local stakeholders, it has been shown that standardized reporting can be implemented successfully and to the satisfaction of imaging specialists.17

Still, uptake of structured reporting related to nuclear stress imaging reports has been slow. A recent study by Maddux et al. showed that only about 60% of accredited institutions are compliant with IAC Nuclear/PET reporting standards.6 This percentage for non-accredited institutions is likely even lower. Improving compliance with reporting of required data elements, many of which are designed to augment clinicians’ assessment of post-test risk, will be essential to the success of NLP tools for processing risk. Special attention should be paid to the impression section, not only because this would simplify NLP systems as described above, but also because this is an essential section of the report and the first place ordering providers look for information.18,19

This study should be considered in light of the following limitations. First, the study population was limited to Veterans seeking care within the VA, and our results are perhaps not generalizable to other care provision settings. Second, we lacked data on institutional, patient, and provider factors that may have contributed to a report’s adequacy, such as the experience and specialty of the report author. Future studies may seek to identify reporting provider- and system-level characteristics associated with higher-quality reporting. Third, we restricted our analysis to those reporting elements most directly applicable to the determination of risk and subsequent clinical care. Future study may identify gaps in other technical aspects of reporting that relate to procedural safety. Fourth, our analysis does not consider that increased attention to appropriateness of invasive coronary procedures may, over time, result in a greater proportion of reports incorporating a risk assessment. A logical next step in this work would be to examine temporal trends in the reporting of post-test risk. Finally, our work does not directly inform the impact of poor documentation on subsequent clinical care. Future work should seek to understand the impact of poor documentation quality on the quality of downstream care.

In conclusion, this study demonstrates that most stress MPI reports do not provide an estimate of ischemic risk even though these reports contain the basic data required for such a summary interpretation. Broader application of structured data reporting will improve the quality and consistency of stress test documentation. In doing so, these efforts may also facilitate using natural language processing systems to compute and reliably communicate post-test ischemic risk.

New Knowledge Gained

In a sample of stress MPI reports in the VA, two independent reviewers agreed that reports often contained the basic elements necessary to make a summary interpretation of post-test risk, but these summary results were rarely reported by interpreting providers. This suggests an opportunity for Natural Language Processing (NLP) tools to assist with providing a summary interpretation. Greater standardization of report content and syntax will assist these efforts.

Abbreviations

- ASNC: American Society of Nuclear Cardiology
- CART: VA clinical assessment reporting and tracking program
- CDW: VA corporate data warehouse
- CPT: Current procedural terminology
- IAC: Intersocietal accreditation commission
- ICD-9: International classification of diseases, ninth revision codes
- MPI: Myocardial perfusion imaging
- NLP: Natural language processing
- PCI: Percutaneous coronary intervention
- SPECT: Single photon emission computed tomography
- SDS: Summed difference score
- SRS: Summed rest score
- SSS: Summed stress score
- TID: Transient ischemic dilation
- VA: Veterans Health Administration

References

Ladapo JA, Blecker S, Douglas PS. Physician decision making and trends in the use of cardiac stress testing in the United States. Ann Intern Med 2014;161:482-90.

Patel MR, Dehmer GJ, Hirshfeld JW, Smith PK, Spertus JA. ACCF/SCAI/STS/AATS/AHA/ASNC 2009 appropriateness criteria for coronary revascularization. Circulation 2009;119:1330-52.

Patel MR, Bailey SR, Bonow RO, Chambers CE, Chan PS, Dehmer GJ, et al. ACCF/SCAI/AATS/AHA/ASE/ASNC/HFSA/HRS/SCCM/SCCT/SCMR/STS 2012 appropriate use criteria for diagnostic catheterization. JACC 2012;59:1995-2027.

Tilkemeier PL, Cooke CD, Grossman GB, McCallister BD, Ward RP. ASNC imaging guidelines for nuclear cardiology procedures. J Nucl Cardiol 2009;16:650.

The IAC standards and guidelines for Nuclear/PET accreditation. Final report. Ellicott City, MD: Intersocietal Accreditation Commission, Nuclear/PET; 2015. http://www.intersocietal.org/nuclear.

Maddux PT, Farrell MB, Ewing JA, Tilkemeier PL. Improved compliance with reporting standards: A retrospective analysis of intersocietal accreditation commission nuclear cardiology laboratories. J Nucl Cardiol 2016;9:350.

Trägårdh E, Höglund P, Ohlsson M, Wieloch M, Edenbrandt L. Referring physicians underestimate the extent of abnormalities in final reports from myocardial perfusion imaging. EJNMMI Res 2012;2:27.

Pons E, Braun LMM, Hunink MGM, Kors JA. Natural language processing in radiology: A systematic review. Radiology 2016;279:329-43.

Maddox TM, Plomondon ME, Petrich M, Tsai TT, Gethoffer H, Noonan G, et al. A national clinical quality program for veterans affairs catheterization laboratories (from the veterans affairs clinical assessment, reporting, and tracking program). Am J Cardiol 2014;114:1750-7.

Fihn SD, Gardin JM, Abrams J, Berra K, Blankenship JC, Dallas AP, et al. 2012 ACCF/AHA/ACP/AATS/PCNA/SCAI/STS guideline for the diagnosis and management of patients with stable ischemic heart disease. JACC 2012;60:e44-164.

Wolk MJ, Bailey SR, Doherty JU, Douglas PS, Hendel RC, Kramer CM, et al. ACCF/AHA/ASE/ASNC/HFSA/HRS/SCAI/SCCT/SCMR/STS 2013 multimodality appropriate use criteria for the detection and risk assessment of stable ischemic heart disease. JACC 2014;63:380-406.

Garvin JH, DuVall SL, South BR, Bray BE, Bolton D, Heavirland J, et al. Automated extraction of ejection fraction for quality measurement using regular expressions in unstructured information management architecture (UIMA) for heart failure. J Am Med Inform Assoc 2012;19:859-66.

Rosenbloom ST, Denny JC, Xu H, Lorenzi N, Stead WW, Johnson KB. Data from clinical notes: a perspective on the tension between structure and flexible documentation. J Am Med Inform Assoc 2011;18:181-6.

Schwartz LH, Panicek DM, Berk AR, Li Y, Hricak H. Improving communication of diagnostic radiology findings through structured reporting. Radiology 2011;260:174-81.

Naik SS, Hanbidge A, Wilson SR. Radiology reports. Am J Roentgenol 2001;176:591-8.

Douglas PS, Hendel RC, Cummings JE, Dent JM, Hodgson JM, Hoffmann U, et al. ACCF/ACR/AHA/ASE/ASNC/HRS/NASCI/RSNA/SAIP/SCAI/SCCT/SCMR 2008 health policy statement on structured reporting in cardiovascular imaging. JACC 2009;53:76-90.

Larson DB, Towbin AJ, Pryor RM, Donnelly LF. Improving consistency in radiology reporting through the use of department-wide standardized structured reporting. Radiology 2013;267:240-50.

Bosmans JML, Weyler JJ, De Schepper AM, Parizel PM. The radiology report as seen by radiologists and referring clinicians: Results of the COVER and ROVER surveys. Radiology 2011;259:184-95.

Wackers FJT. The art of communicating: The nuclear cardiology report. J Nucl Cardiol 2011;18:833-5.

Disclosures

AEL, NRS, MEM, RMR, GTG and SMB have no relevant disclosures.

Additional information

The authors of this article have provided a PowerPoint file, available for download at SpringerLink, which summarizes the contents of the paper and is free for re-use at meetings and presentations. Search for the article DOI on SpringerLink.com.

Funding

This project was supported by funding from VA Health Services Research & Development (HSR&D-QUERI RRP 12-448). Dr. Levy receives funding from the National Institutes of Health (NIH) T32 Training Grant 5T32-HL-007822-19. Dr. Shah is supported by an Agency for Healthcare Research and Quality award (5K12HS022998).

Cite this article

Levy, A.E., Shah, N.R., Matheny, M.E. et al. Determining post-test risk in a national sample of stress nuclear myocardial perfusion imaging reports: Implications for natural language processing tools. J. Nucl. Cardiol. 26, 1878–1885 (2019). https://doi.org/10.1007/s12350-018-1275-y

DOI: https://doi.org/10.1007/s12350-018-1275-y