Abstract

Learning collaboratives (LCs) have often been used to improve somatic health care quality in hospitals and other medical settings, and to some extent to improve social services and behavioral health care. This initiative is the first demonstration of a national, systematic LC to advance comprehensive school mental health system quality among school district teams. Twenty-four districts representing urban, rural, and suburban communities in 14 states participated in one of two 15-month LCs. Call attendance (M = 73%) and monthly data submission (M = 98% for PDSA cycles and M = 65% for progress measures) indicated active engagement in and feasibility of this approach. Participants reported that LC methods, particularly data submission, helped them identify, monitor and improve school mental health quality in their district. Qualitative feedback expands quantitative findings by detailing specific benefits and challenges reported by participants and informs recommendations for future research on school mental health LCs. Rapid-cycle tests of improvement allowed teams to pursue challenging and meaningful school mental health quality efforts, including mental health screening in schools, tracking the number of students receiving early intervention (Tier 2) and treatment (Tier 3) services, and monitoring psychosocial and academic improvement for students served.

Introduction

Schools and districts are well-positioned to support student mental health and wellness. Given the compelling evidence in favor of early intervention and prevention of children’s mental health risks (Kieling et al., 2011; Kessler et al., 2007) and the well-documented gap between children’s need for and access to mental health services (Merikangas et al., 2010), schools are an ideal setting for delivering a full continuum of student mental health services and supports (Rones & Hoagwood, 2000; Weist & Evans, 2005). Accordingly, a comprehensive model of school mental health (SMH) has grown in the past several decades and federal investments have been made to support the proliferation of more comprehensive, high-quality SMH systems (School-Based Health Alliance, 2020; National Center for School Mental Health, 2020; National Center for Healthy Safe Children, 2020; Mental Health Technology Transfer Center, 2020; U.S. Department of Education, Office of Planning, Evaluation and Policy Development, Policy and Program Studies Service, 2018). National school mental health performance standards in the USA define comprehensive SMH systems as collaborative partnerships between school systems and community mental health programs to provide a full array of prevention, promotion and treatment services, including evidence-based practices and quality improvement processes (Connors et al., 2016; Hoover et al., 2019). The current study provides initial support for a learning collaborative model to drive SMH quality improvement for school districts striving to deliver comprehensive SMH.

Learning collaboratives (LCs) are a strategy to improve health care practices by speeding up the translation of evidence-based science into usual care practice (Nadeem, Olin, Hill, Hoagwood, & Horwitz, 2014). LC targets, settings, and participating teams vary widely, but most LCs include common methods such as in-person learning sessions, phone meetings, data reporting, leadership involvement, and training in quality improvement (Nadeem et al., 2014). Although LCs have been extensively used in health care to address care quality issues such as infant mortality (Ghandour et al., 2017; Hirai et al., 2018), asthma (Mold et al., 2014) and sickle cell disease (Oyeku et al., 2012), LCs are a relatively new strategy for improving behavioral health care quality. For instance, LCs have been conducted to improve depression identification and care on college campuses (Chung et al., 2011), implementation of evidence-based treatments for trauma (Hanson, Self-Brown, Rostad, & Jackson, 2016; Hoover et al., 2018), foster care placement stability (Conradi et al., 2011) and engagement in early childhood intervention programs (Haine-Schlagel, Brookman-Frazee, Janis, & Gordon, 2013). Similar to LCs, professional learning communities (PLCs) have a long history in education for school improvement, educational reform, and teaching practice improvement (Stoll, Bolam, McMahon, Wallace, & Thomas, 2006). PLCs share many elements of LCs (i.e., shared vision or purpose, collaboration and shared learning among a community of professionals) but often do not reflect the same level of structure as LCs with learning sessions, quality improvement training, rapid-cycle tests of change and data reporting.

LCs are best suited to advance the use of evidence-based approaches in practice by speeding the processes of innovation, diffusion and implementation (Nix et al., 2018). Despite ample evidence for the effectiveness of SMH on student outcomes (Bruns, Walwrath, Glass Siegal, & Weist, 2004; Durlak, Weissberg, Dymnicki, Taylor, & Schellinger, 2011; Flannery, Fenning, Kato, & McIntosh, 2014; Greenberg et al., 2003; Kase et al., 2017; Sanchez, Cornacchio, Poznanski, Golik, & Comer, 2018; Taylor, Oberle, Durlak, & Weissberg, 2017) and the factors that contribute to successful SMH systems (Langley, Nadeem, Kataoka, Stein, & Jaycox, 2010; Hoover et al., 2019), districts and schools have yet to achieve widescale adoption of comprehensive school mental health systems (Hoover et al., 2019). Implementation of evidence-based mental health practices in schools is inconsistent at best (Dix, Slee, Lawson, & Keeves, 2012; Hoagwood et al., 2001; Lendrum, Humphrey, & Wigelsworth, 2013). There are also numerous practical and fiscal barriers to delivering a full array of prevention, early intervention, and treatment services within schools across a district (McIntosh, Kelm, & Canizal Delabra, 2016; Pinkelman, McIntosh, Rasplica, Berg, & Strickland-Cohen, 2016). Finally, school-community partnership models have been established, but the quality of these partnerships is often limited and the degree to which they occur nationally is unknown (Weist et al., 2012).

Accordingly, there have been significant efforts to advance high-quality comprehensive SMH for schools and districts nationwide (Rones & Hoagwood, 2000; Stephan, Paternite, Grimm, & Hurwitz, 2014; Stephan, Hurwitz, Paternite, & Weist, 2010; Weist et al., 2019; Weist et al., 2009a; Weist, Paternite, Wheatley-Rowe, & Gall, 2009b). Numerous district and school teams engage in self-assessment and quality improvement to ensure they have a multi-tiered system of support (MTSS) in place for meeting students’ individual education and behavioral needs (cf. SWPBIS Tiered Fidelity Inventory, Algozzine et al., 2017; Interconnected Systems Framework Implementation Inventory, Splett et al., 2016; SMH Quality Assessment, Connors et al., 2016). In recent years, federal funding supported the development and dissemination of national performance standards for SMH system quality and sustainability in order to drive a consistent standard among schools and districts nationwide (Connors et al., 2016). However, school and district teams likely need structured, ongoing technical assistance and support to identify and improve their system quality.

Given the promising literature supporting LCs as a viable approach to quality improvement (QI) for other system improvements in health and behavioral health, this method has the potential to advance innovation and improvement in comprehensive SMH as well. Despite some targeted quality improvement efforts in SMH, there is virtually no literature describing the use of LCs to improve SMH system quality. Only one study documents the use of an LC to improve SMH, and it was limited to SMH in school-based health centers (which exist in fewer than 3000 schools nationally) and focused primarily on tertiary services (Stephan, Connors, Arora, & Brey, 2013; Stephan, Mulloy, & Brey, 2011). This 15-month LC with 19 school-based health centers in 6 states was successfully implemented and shown to result in an increase in providers’ use of evidence-based practices and improvement in service quality indicators (e.g., screening, risk assessment, diagnostic and procedural coding) and collaborative care quality indicators (e.g., communication between providers and effectiveness of referral processes).

The current study piloted an adapted version of the Institute for Healthcare Improvement Breakthrough Series (IHI BTS) Model and the Model for Improvement (Langley et al., 2010) to drive quality improvements in SMH among 25 school district teams in 14 states. Based on the description of feasibility as an implementation outcome described by Proctor et al. (2009), we examine the likelihood that the LC method can be successfully used to improve SMH quality based on participating teams’ engagement and experience. Engagement was measured by LC call attendance and data submission, and experience of the LC was assessed using a mixed methods survey.

Methods

Participants

Twenty-five school district teams of approximately six team members each committed to participate in a 15-month learning collaborative focused on improving SMH system quality and sustainability. Twelve teams participated in the first cohort and 13 teams participated in the second cohort for a total of 25 teams across the two cohorts. Of note, one district participated in both cohorts, so the 25 teams were from a total of 24 districts. A request for applications (RFA) was developed by faculty and staff at the National Center for School Mental Health (NCSMH) with feedback from external project advisors and disseminated via a national listserv of approximately 2000 subscribed individuals as well as by project advisors and the NCSMH advisory board. District teams identified and obtained commitments from a core team, including at least 1 representative from each of the following groups: (1) school district or community leader, (2) school building administrator (e.g., Principal, Assistant Principal), (3) school-employed supervisor/director (e.g., district school psychology supervisor), (4) community-employed supervisor/director (e.g., clinical director of a community behavioral health organization that provides SMH services), and (5) youth or family member or advocate. Applicants were asked to have the capability to access basic school system and/or behavioral health system data including student population, SMH service delivery, and school outcomes, as well as internet and phone access to participate in virtual learning experiences.

LC teams represented small, medium and large districts in rural, suburban, and urban areas (see Tables 1 and 2 for district characteristics). In total, these districts served 1446 schools, with each district serving anywhere from 2 to 680 schools. LC districts served approximately 815,222 students, with each district/school serving anywhere from 600 to 386,261 students. Although the RFA guidelines, application process, and dissemination strategy were identical for both cohorts, Cohort I teams represented primarily medium and large school districts and Cohort II teams represented primarily small and medium school districts (see Table 1).

Design

Our LC was an adapted version of the Institute for Healthcare Improvement Breakthrough Series Model (IHI BTS; see Fig. 1). The LC started with a pre-work conference call and a 2-day, in-person learning session. Action period calls were held each month, and for each call all teams were asked to submit progress measures and at least one Plan-Do-Study-Act (PDSA) cycle (see Fig. 2 for a sample). PDSAs were used to document specific changes in school mental health quality or sustainability. Teams did not address all drivers of SMH system quality with their PDSAs during the LC, which would not have been practical or realistic for the 15-month period. Instead, they focused, as encouraged, on those drivers of most importance for their district. Action period calls focused on reviewing and discussing observed changes in progress measures and reviewing PDSAs to facilitate shared learning and accelerate innovative and effective quality improvement cycles. Action period call agendas were set by CoIIN developers. Calls started with a “roll call” to track attendance and welcome participants, followed by approximately 5–10 min for shared learning on any special topics or resources the teams requested between calls or live during that agenda item. Next, 4–5 PDSAs were selected by CoIIN developers for teams to present and invite feedback from the group aloud or in the chat box function. PDSA selection was decided by the study team on a rotating basis to ensure all teams had the opportunity to present numerous times throughout the LC. PDSA selection was also based on ensuring exemplar quality improvement methods and diverse areas of school mental health innovation could be disseminated to the group. Run chart data were reviewed for some or all domains, depending on time and signals in the data to discuss. Approximately 25 LC participants attended each call (i.e., two team members per team), plus study team faculty and staff.

The most notable adaptation of our LC model as compared to the traditional IHI BTS model is that our follow-up learning sessions were held virtually and lasted 90 min, rather than being held as 2-day, in-person sessions. This was driven primarily by the limited time and financial resources of participating teams. Learning sessions focused on best practices of interest to teams by presenting in-depth didactic content from expert faculty as well as sharing practice-based evidence. As opposed to the action period calls, which focused on sharing and discussing PDSAs and data submissions, the learning sessions emphasized learning from expert faculty, with teams invited to present PDSAs or data submissions aligned with that topic. Expert faculty also held individual team calls as requested by teams, which ranged from 1 to 3 calls over the entire LC.

Measures

LC Engagement

Team engagement in the LC was assessed by three measures: (1) monthly call attendance, (2) virtual learning session attendance, and (3) data submission of PDSAs and progress measures. Participants received several reminders about monthly calls, learning sessions, and data submission to improve the likelihood of engagement in each type of activity. Attendance was counted when at least one team member joined a learning session. PDSA submission was defined as submitting a PDSA with at least the “plan” section completed.

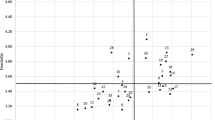

Progress measure submission was defined as completing at least one process or outcome measure per month. There were six quality process measures, one quality outcome measure, five sustainability process measures, and one sustainability outcome measure that were tracked in run charts and reviewed on calls (see Figs. 3 and 4 for sample run charts). Monthly measures included mean scores from the School Mental Health Quality Assessment (SMH-QA) and the School Mental Health Sustainability Assessment (SMH-SA). The SMH-QA and the SMH-SA assess district team self-reports of comprehensive SMH system performance on 12 key domains (Connors et al., 2016). Mean scores in the 1.0–2.9 range are considered “emerging,” 3.0–4.9 are considered “progressing,” and 5.0–6.0 are considered “mastery.” Teams completed the SMH-QA and SMH-SA on a web-based quality improvement system, The SHAPE System (www.theSHAPEsystem.com), where they could log into their team account, complete measures, and automatically view reports. The six quality process measures used for the LC were mean scores on each of the six SMH-QA domains, as follows: (1) teaming; (2) needs assessment/resource mapping; (3) screening; (4) evidence-based services and supports; (5) evidence-based implementation; and (6) data-based decision making. The quality outcome measure was the number of students receiving early intervention (Tier 2) or treatment (Tier 3) services and supports with any improvement (since their baseline assessment) in academic or psychosocial domains (selected by each team). The five sustainability process measures used for the LC were mean scores on each of the five SMH-SA domains, as follows: (1) funding and resources; (2) resource utilization; (3) system quality; (4) documentation and reporting of impact; and (5) system marketing and promotion. The sustainability outcome measure was the number of domains on the SMH-SA with a mean score of mastery (5.0–6.0).
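The score bands and the sustainability outcome measure described above can be illustrated with a short sketch. This is not the SHAPE System's implementation; the function names and the example domain means are hypothetical, and the handling of scores that fall between the published bands (e.g., 2.95) is an assumption made only for illustration.

```python
from typing import Dict


def classify_band(mean_score: float) -> str:
    """Map a domain mean score (1.0 to 6.0) to the band named in the text.

    Assumption: scores between published bands (e.g., 2.95) are assigned
    to the lower band; the source does not specify this.
    """
    if not 1.0 <= mean_score <= 6.0:
        raise ValueError("mean score must fall between 1.0 and 6.0")
    if mean_score < 3.0:
        return "emerging"
    if mean_score < 5.0:
        return "progressing"
    return "mastery"


def count_mastery_domains(domain_means: Dict[str, float]) -> int:
    """Sustainability outcome: number of domains with a mean score of mastery."""
    return sum(1 for score in domain_means.values()
               if classify_band(score) == "mastery")


# Hypothetical SMH-SA domain means for one team (illustrative values only).
example_sa_means = {
    "funding and resources": 2.8,
    "resource utilization": 4.1,
    "system quality": 5.2,
    "documentation and reporting of impact": 3.0,
    "system marketing and promotion": 5.0,
}

for domain, score in example_sa_means.items():
    print(f"{domain}: {score} ({classify_band(score)})")
print("Domains at mastery:", count_mastery_domains(example_sa_means))
```

In this hypothetical example, two of the five sustainability domains fall in the mastery band, so the sustainability outcome measure for that month would be 2.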

LC Experience

An online survey querying participants’ experiences of the LC was e-mailed to all individual members of district teams. The survey included questions about professional characteristics (i.e., degree, discipline, years in current position, years in mental health), ratings from 1 (“Strongly Disagree”) to 5 (“Strongly Agree”) on four items about LC objectives and open-ended questions about benefits, challenges, and recommendations for the LC. Survey administration time was approximately 20 min and up to five reminders were sent via email.

Analyses

Quantitative data were cleaned and checked for accuracy among data submission portals, data tracking forms, and call recordings. Descriptive statistics including percentages and frequencies were calculated as well as intercorrelations between the number of PDSA cycles and number of domains with reported improvement. Qualitative data were analyzed using a grounded theory approach (Charmaz & Bryant, 2007) with NVivo software. Open codes were applied by question type and collapsed into a refined set of focus codes. Data were re-coded in an iterative fashion as necessary during codebook refinement. Two coders were involved in coding, with one additional author reviewing codes and themes after they were developed.

Results

Learning Collaborative Engagement

Monthly Call Attendance

A total of 15 monthly calls were conducted. Across both cohorts, teams attended 11 calls (73%) on average; the median number of calls attended was 13 (87%), and the range of calls attended was 3 to 15.

Virtual Learning Session Attendance

Teams in Cohort I could attend up to three virtual learning sessions. Three Cohort I teams (25%) missed one session, but nine teams (75%) had 100% attendance across all three sessions. Teams in Cohort II were offered four sessions. Learning session attendance in Cohort II ranged from zero to four sessions (mean = 3 sessions). Five Cohort II teams (38%) had 100% attendance across all four sessions, four teams (31%) attended three (75%) sessions, two teams attended two (50%) sessions, and one team did not attend any of these sessions. Overall, learning session attendance for all participating teams across both cohorts was 78.3%.

PDSA and Measures Submission

Teams were asked to complete one PDSA per month, but some completed and submitted more. Over the 15-month LC, teams produced an average of 14.7 PDSAs across the various domains (range = 3 to 54, median = 12, mode = 8). In terms of PDSA content across teams and cohorts, teams submitted the most PDSAs in the screening domain (N = 68) and the least in evidence-based implementation (N = 9). For screening, two teams completed 12 and 14 PDSAs, respectively, thus positively skewing the number of PDSAs completed in that domain. Table 3 displays PDSAs by domain. Teams submitted measures an average of 9.7 times over the course of the LC (range = 1 to 14 submissions; median = 10; mode = 14). Five teams submitted progress measures for one to five months, which is less than 33% of the required progress measure data submissions.
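The engagement percentages reported in this article appear to be consistent with dividing each count by the 15 monthly opportunities in the LC. The sketch below shows that arithmetic under this assumption; the function name `engagement_rate` is hypothetical, and the 15-month denominator is inferred from the text rather than stated as the authors' formula.

```python
def engagement_rate(count: float, possible: int = 15) -> int:
    """Return count / possible as a whole-number percentage.

    Assumption: one call and one expected submission per month over the
    15-month LC, so the default denominator is 15.
    """
    if possible <= 0:
        raise ValueError("possible must be positive")
    return round(100 * count / possible)


# Counts taken from the text; labels are for illustration only.
reported_counts = {
    "mean monthly calls attended": 11,
    "median monthly calls attended": 13,
    "mean PDSAs submitted": 14.7,
    "median PDSAs submitted": 12,
    "mean progress measure submissions": 9.7,
}

for label, count in reported_counts.items():
    print(f"{label}: {engagement_rate(count)}%")
```

Note that the mean PDSA figure counts every PDSA a team submitted, so teams that submitted more than one per month raise the mean relative to a strict month-by-month submission rate.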

Domains with the highest number of teams reporting improvement were screening (N = 18, 75% teams with improvement), needs assessment/resource mapping (N = 21, 84%), teaming (N = 20, 80%), and data-driven decision making (N = 19, 76%). These were also the domains in which teams submitted the most PDSAs. Number of PDSA cycles was positively associated with number of domains with reported improvement (r = .435, p = .034) for the 25 teams with improvement. However, at the team level, the number of PDSAs submitted in each domain was not clearly linked to whether teams reported improvement in that domain during the collaborative. Twenty-four teams (96%) reported improvement in at least one domain by the end of the collaborative, and most teams reported improvement in numerous domains. None of the teams were able to report the quality outcome at the beginning of the LC. However, after building expertise and capacity around this metric, 14 teams (56%) were able to report the denominator of this measure (i.e., number of students receiving Tier 2 or Tier 3 services and supports), and six teams (24%) were also able to report the numerator (i.e., number of those students who had improvements in educational or psychosocial functioning).
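To make the numerator and denominator of the quality outcome concrete, the following sketch counts both from a list of student records. The `StudentRecord` structure, its field names, and the example data are hypothetical; districts' actual data systems and the teams' chosen improvement domains are not described at this level of detail in the source.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass
class StudentRecord:
    tier: int        # 1 = universal, 2 = early intervention, 3 = treatment
    improved: bool   # any improvement since baseline in a team-selected domain


def quality_outcome(records: Iterable[StudentRecord]) -> Tuple[int, int]:
    """Return (numerator, denominator) for the quality outcome.

    Denominator: students receiving Tier 2 or Tier 3 services and supports.
    Numerator: those students with any academic or psychosocial improvement.
    """
    served = [r for r in records if r.tier in (2, 3)]
    numerator = sum(1 for r in served if r.improved)
    return numerator, len(served)


# Hypothetical records for illustration only.
example_records = [
    StudentRecord(tier=1, improved=True),   # not counted: Tier 1 only
    StudentRecord(tier=2, improved=True),
    StudentRecord(tier=2, improved=False),
    StudentRecord(tier=3, improved=True),
]

numerator, denominator = quality_outcome(example_records)
print(f"{numerator} of {denominator} students served at Tier 2 or 3 improved")
```

A team that can count students served but has no outcome tracking in place could report only the denominator, which matches the pattern described above where more teams reported the denominator than the numerator.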

Learning Collaborative Experience

Forty-nine participants, representing 20 of the 25 LC teams, completed the LC Experience Survey. There was an average of 2.2 team members per team who completed the survey (range = 1 to 4).

Quantitative Results

Participants reported that LC participation led to improved practices or policies in at least one domain. On a scale of 1 (“Strongly Disagree”) to 5 (“Strongly Agree”), they indicated that completing monthly data on the performance measures helped them identify (M = 4.17, SD = 1.03) and monitor (M = 4.00, SD = 1.12) important areas of improvement. They also agreed that completing the PDSAs helped to produce real change in the identified domain (M = 4.22, SD = 0.88).

Qualitative Results

Theoretical coding resulted in four themes. Three themes included codes on both benefits and challenges. The top two benefits and challenges (i.e., at least five coded instances) for each theme are listed with code frequency, number of teams who endorsed the code, and an illustrative quote in Table 4. One theme, “SMH performance improvements,” only describes benefits. Top benefits with code frequency, number of teams who endorsed the code, and illustrative quotes are also provided in Table 4.

First, a wide variety of benefits and challenges related to team processes were reported. In terms of benefits, 10 teams reported improvements in their communication and/or coordination as a result of the LC, which also provided structure and efficiency to their work by offering a system for accountability, support, and shared focus. Other team process benefits noted were strengthened district-community partnerships (9 codes, 3 teams), assistance using data to inform decisions (8 codes, 8 teams), and facilitated shared learning among teams in the LC (6 codes, 6 teams) and within districts (5 codes, 3 teams). Within the team processes theme, the greatest challenge reported was limited time (21 references, 12 teams). This referred partly to LC activities but mostly to the time needed for teams to meet with one another to develop and run PDSAs, collect and use data, and keep their communication and momentum up in between LC calls. A need for more buy-in and/or engagement from the LC team, including some references to needing a larger team, was noted by 10 teams (10 codes). Other team process challenges were keeping teams connected, perhaps also due to time constraints (8 codes, 7 teams), team member turnover (2 codes, 2 teams) and a sense of stress on team members during participation (2 codes, 2 teams).

Second, LC methods and structure came up frequently in participants’ reports of benefits and challenges. Many teams reported that the quality improvement (QI) methods they learned were useful (10 codes, 9 teams) and that the LC offered valuable resources and technical assistance (TA; 9 codes, 8 teams). On the other hand, the QI methods such as PDSAs were quite new for most teams and took time to adopt (7 codes, 6 teams). Collecting and reporting monthly data was difficult due to data system constraints and the focus on specific metrics to evaluate quality (7 codes, 6 teams). Only one team member mentioned that LC teams all working on different goals was a challenge, and another mentioned that learning the language of the LC and the measures was new.

Third, school mental health system performance improvements in various aspects of quality and/or sustainability were noted by over half of reporting teams. Among those who reported the LC resulted in more or better SMH services (16 codes, 10 teams), some referred to general service effectiveness improvements, increasing the scope of services, improving reach to students in need and greater focus and/or improvements in specific areas (e.g., screening and triage procedures). Sustainability improvements were reported as benefits by 4 teams (7 codes), which ranged from strategies to leverage new resources and more effectively allocating existing resources to maximizing billing opportunities and identifying grant mechanisms.

Finally, several broader system factors were acknowledged as contributing to both benefits of and challenges to LC participation. On the one hand, the LC reportedly led to an enhanced recognition of the importance and value of SMH generally, as well as teams recognizing their own strengths and areas of growth related to their SMH system (10 codes, 7 teams). The LC also enhanced explicit support for SMH development and improvements from stakeholders throughout the district and local community (8 codes, 6 teams). However, some broader system factors also challenged LC teams, such as limited SMH funding, constraining their ability to make desired improvements (5 codes, 3 teams). Also, one team cited leadership changes as a challenge to their participation and another noted difficulty making changes within a large, bureaucratic school district system.

In terms of recommendations for improving the LC, participants’ most frequent request was for more technical assistance (TA). This ranged from general TA support requests such as “more training and resources,” individual calls with expert faculty, and more training on the QI process including designing improvement plans and PDSAs based on measures (12 codes, 8 teams). Participants also recommended additional feedback on and help with data (8 codes, 7 teams), including support with data collection and interpretation, using run charts “both as a self-assessment tool and also as a performance metric,” and “more clarity on [SMH-QA and SMH-SA] questions.” The other common recommendation centered around the in-person and virtual learning sessions. Specifically, teams recommended more interaction with fellow LC teams (4 codes, 4 teams) and/or another in-person learning session (3 codes, 2 teams). Various recommendations were also made to improve the virtual action period calls including technology improvements (e.g., chat box size, adding video capability) and focusing virtual calls on specific topics at a time (which was used toward the end of the LC).

Discussion

Schools hold great promise as a venue to promote youth mental health and to address mental health challenges early. However, established models of comprehensive school mental health systems (CSMHS) require an investment in the structures and practices necessary to support a full continuum of mental health supports and services with the partnership of students, families, and community-based organizations (Hoover et al., 2019). LCs are one approach that may be particularly feasible and effective to help school and district teams advance the comprehensiveness of their local school mental health systems. This study investigated the feasibility of applying a well-established LC model, the IHI BTS, to improve quality and sustainability of SMH in 25 districts across the USA.

Overall, our findings indicate that LC engagement was strong as measured by attendance in LC learning activities, use of PDSAs, and data submissions. LC participants regularly engaged in monthly calls and Virtual Learning Sessions (i.e., 73% average attendance across the 15 monthly calls and 78% average attendance on Virtual Learning Sessions). School districts participating in the LC also regularly used PDSAs as a method to plan, implement, and evaluate change ideas and drive quality improvement in their CSMHS; the median number of PDSAs submitted across the 15-month LC was 12 (80% submission). LC participating school districts also submitted data for most months, but this type of participation was more variable with a wide range (1 to 14 months) of data submissions across teams. However, we find the data submission rates impressive given that harnessing often unwieldy district-level data systems to collect and report actionable school mental health data is a complex endeavor that many districts face (Parke, 2012). Quantitative and qualitative results from this study underscore just how challenging it was for district teams to systematically collect and report numeric data about school mental health services and supports provided and associated student outcomes.

Notably, none of the teams were able to report the quality outcome at the outset of the LC, reportedly due to data system constraints. Several teams noted that reporting data, specifically the number of students screened and the quality outcome (i.e., percentage of students with psychosocial or academic progress as a result of an intervention), was difficult throughout the LC. However, over half of teams were able to report the denominator (i.e., number of students receiving Tier 2 or Tier 3 services and supports) and one quarter were able to report the numerator (i.e., number of students with improvement) by the end of the 15 months. Teams attributed their success in data reporting to having defined measures to focus on, structure and consistency of the LC, and technical assistance about how to use data to inform decisions. In some instances, it appears that a focus on data-driven processes did indeed support coordinated teamwork and communication among teams working to advance mental health in schools, as has been suggested in prior research (Lyon, Borntrager, Nakamura & Higa-McMillan, 2013). These findings highlight the research-to-practice gap within school mental health; scholars who have reviewed research demonstrating the impact of school mental health services on student academic and psychosocial outcomes suggest that, as a field, we must continue to demonstrate the relative effectiveness of evidence-based interventions at all tiers to ensure continued recognition of the value of school mental health and investment in the most effective services (Sanchez, Cornacchio, Poznanski, Golik, Chou & Comer, 2018; Suldo, Gormley, DuPaul, & Anderson-Butcher, 2014). Moreover, requirements for districts to track and report on the effectiveness of services provided are likely unreasonable without usable data systems, quality improvement structures, and ongoing support.

Overall, these LC engagement findings are extremely promising given the fast pace of the school environment, busy workloads of school and district personnel, current state of district data systems, and voluntary, unfunded participation. Even in this context, participating school districts were quite engaged in all LC activities throughout the entire cycle and demonstrated commitment to drive school mental health quality improvement.

In addition to strong engagement in LC activities, LC participating school districts reported that the LC helped them to identify, monitor, and improve key areas of SMH quality improvement. Qualitative results were embedded in the LC experience survey and collected sequentially to expand our understanding of participants’ quantitative responses (per mixed methods guidelines, this design is consistent with QUAN + QUAL, Function = Expansion and Process = Embed; Palinkas et al., 2011). Specifically, we learned that LC participation offered benefits in terms of improved team processes (communication, coordination, structure and efficiency), new skills using LC methods and resources, system-wide improvements to drive quality and sustainability, and an impact on broader system factors such as enhanced recognition of and support for SMH. Participants’ comments about these benefits provide useful insight into how and why they rated the LC as helping them identify, monitor and improve SMH quality.

Arguably, effecting change within school mental health systems may be more challenging than traditional health care delivery settings such as hospitals or outpatient health clinics given the necessary engagement of at least two systems (education and mental health) in improvement efforts (Kataoka, Rowan, & Hoagwood, 2009; Stephan, Brandt, Lever, Acosta-Price, & Connorsa, 2012; Waxman & Weist, 1999). While education systems are often locally driven with little influence or authority of state education agencies, mental health systems are often more state-driven related to Medicaid reimbursement and other state regulations. Differences in state and local influence and practices across mental health and education are often reflected in distinct technical assistance modalities and resource allocation. Conducting quality improvement efforts in a manner that leads to mutually beneficial results for both systems and positive outcomes for students can be challenging. Even deciding how funding should be allocated and the extent to which a given student’s mental health needs should be paid for by different systems remains problematic. School mental health LCs offer opportunities to bring education and mental health system partners together to identify shared values, recognize distinct system strengths and responsibilities, and collaborate to meet the comprehensive needs of students across a multi-tiered system of support.

To date, LCs have been proven effective in advancing quality improvement efforts in health care settings (American Diabetes Association, 2004; Nadeem et al., 2014). Applying key components of LCs, and particularly the IHI BTS model, to this SMH LC offered an established, well-researched process to enhance access, accountability, and quality in mental health prevention, early intervention, and treatment in schools. Several core features of LCs and their specific application to CSMHS proved essential to attaining quality improvement in comprehensive school mental health systems.

First, participating teams used a uniform mechanism for collecting and reporting progress measures. As previously referenced, existing education and mental health system data platforms are highly variable across communities and typically do not interface with each other even within districts. All multidisciplinary teams in the LC spanning education and behavioral health systems were able to utilize a national quality improvement performance measure, the School Mental Health Quality Assessment (SMH-QA) and an online system, the School Health Assessment and Performance Evaluation (SHAPE) System (www.theSHAPEsystem.com), to collect, report, track and share their progress throughout the LC.

Second, teams benefited from consistent methods and a curated set of resources to plan, test and evaluate their local quality improvement goals. Teams learned how to use Plan-Do-Study-Act (PDSA) cycles to organize their improvement goals into testable change ideas and had access to strategic planning guides and resources on The SHAPE System to inform their application of evidence-based approaches and innovations in their district. One particularly beneficial aspect of the PDSA method for participating teams was the principle of “starting small.” LC participants received training and coaching to test changes in small increments using PDSAs that could be accomplished in a week or less, allowing opportunities to modify and improve plans to address problems before scaling up. In many cases this was a new concept for districts that were used to a more “top down roll out” of implementation initiatives to schools, and it offered flexibility, creativity and innovation to test and observe the result of change ideas on a small scale for more durable long-term changes. For example, as districts tackled school mental health screening, they were encouraged to consider testing processes and practices with a small number of students before scaling up to classrooms, grades and schools.

Third, participating school districts meaningfully engaged in networking and shared learning opportunities to enhance quality improvement. Although 25 individuals may seem like many participants to navigate in a virtual meeting, discussion and participation were plentiful across all teams. Strategies to ensure meaningful participation included pre-requests for rotating teams to present on each call, and the facilitators’ regular practice of spontaneously “calling on” teams to share during each call. As a result of shared learning on virtual calls and other communication formats such as the listserv, quality improvement goals, PDSA cycles and even data collection strategies were informed by input from other teams and expert faculty supporting the LC. A key tenet of the IHI BTS model is the concept of “sharing seamlessly and stealing shamelessly.” The opportunity to learn from other districts with similar populations, needs, and strengths offered invaluable lessons and shared momentum to advance key aspects of SMH quality at a faster pace than likely would have been achieved without this network of support and ideas from other teams.

Collectively, this study demonstrated the feasibility of applying a well-established LC model, the IHI BTS, to improve quality and sustainability of SMH in 24 districts across the USA. Findings indicated that LCs can be used to rapidly translate expert knowledge and best practices to practical program change in school mental health. Across teams, the model fostered innovation and program changes that contributed to school mental health quality improvement.

Limitations

These findings suggest that LCs are a feasible strategy to improve SMH system quality but must be considered in the context of several limitations. Participating teams applied to join the LC and thus participants may have been more engaged than would have been observed with a representative sample of school districts with a wider range of commitment to and readiness for SMH quality improvement. However, our results of initial feasibility with this sample of motivated sites provide the foundation for future work to refine and test variations in the LC approach to support sites with a wider range of readiness and capacity.

Participation in the LC Experience Survey was voluntary and subject to some degree of response bias because a small number of teams that did not complete the LC Experience Survey may have had more difficulty engaging with the LC process overall. If we had 100% site participation in the Experience Survey, we may have uncovered more or different experiences with less engaged sites. However, our overall engagement metrics were promising enough across all teams that we do not feel this overly biases the results presented.

Future Directions

Continuing to use and expand the LC model with CSMHS is indicated given the feasibility, high levels of engagement, and promising quality improvement outcomes from these first two cohorts. There are several future directions we are particularly interested in as a result of our findings. First, future SMH LCs may consider broadening district team participation beyond education and behavioral health professionals by requiring and supporting student and family participation. We encouraged student and family feedback throughout this LC, but engagement of these stakeholders was variable based on the team and their quality improvement foci. We believe students and family members would be invaluable partners on district teams to help develop, implement, evaluate, and act on quality improvement goals and tests of change. Future SMH LCs could also be customized to expand the reach and dissemination of this model, as well as engage stakeholders who represent the broader systems supporting teams. Specifically, engagement of state agency personnel within departments of education, behavioral health, and other child-serving systems could provide additional support for teams participating in the LC and, ultimately, dissemination and spread to other teams within a state system. In fact, the current School Health Services Collaborative for Improvement and Innovation Network, supported by a cooperative agreement from the Health Resources and Services Administration, includes state agency sponsors of LC participation to oversee and coordinate participating sites at the school, district, region, local education authority (LEA), or tribal community level (Health Resources and Services Administration Maternal and Child Health Bureau, 2019). One purpose of engaging state departments of education and behavioral health in the LC is to build state leaders’ capacity to support participating sites to engage with the LC model as well as their potential to run future LCs with additional LEAs within their state. Finally, it would be important to gain a more refined understanding of the most active components of the LC model. Creating a learning collaborative is one of 73 discrete implementation strategies that may stand alone as a testable approach to drive SMH quality improvement (Powell et al., 2015). However, the LC approach is certainly multi-component, and understanding the impact of various other implementation strategies included in LCs (e.g., audit and provide feedback, centralize technical assistance, use an implementation advisor, provide ongoing consultation, model change) could improve our precision to tailor LC components to the needs of various sites.

Conclusion

LCs offer an incredible opportunity to move the needle in quality improvement for the school mental health field. Findings from the current study indicate that participating school district teams were able to actively engage in a 15-month LC including monthly calls, monthly data submission, team-specific quality improvement work in between scheduled LC activities (i.e., PDSAs), and several virtual learning sessions. Moreover, participants reported numerous benefits to participation including improvements to their team processes as well as access to and quality of services provided to students. LCs are notably resource intensive, with significant data and technical assistance support required, and it will prove useful to understand which teams are likely to most benefit from LC participation and how resources and support can be delivered most effectively, efficiently and economically. As the school mental health field continues to refine the application of LC frameworks to this work, we should consider how to understand and delineate the readiness factors needed for meaningful LC participation and how to best balance technical assistance with independent quality improvement planning and testing. Investigating these factors and considering dissemination factors, such as building state capacity to lead and expand LEA LCs, will support the field in more broadly disseminating this strategy to advance quality in school mental health across the nation.

Notes

1. This total is driven in part by two teams who produced a large proportion (N = 26) of the mental health screening PDSAs.

References

Algozzine, B., Barrett, S., Eber, L., George, H., Horner, R., Lewis, T., et al. (2017). School-wide PBIS Tiered Fidelity Inventory. Retrieved May 4, 2020 from https://www.pbis.org/resource/tfi.

American Diabetes Association. (2004). The breakthrough series: IHI’s collaborative model for achieving breakthrough improvement. Diabetes Spectrum, 17(2), 97–101.

Bruns, E. J., Walrath, C., Glass-Siegel, M., & Weist, M. D. (2004). School-based mental health services in Baltimore: Association with school climate and special education referrals. Behavior Modification, 28(4), 491–512. https://doi.org/10.1177/0145445503259524.

Charmaz, K., & Bryant, A. (2007). The SAGE Handbook of Grounded Theory. https://doi.org/10.4135/9781848607941.

Chung, H., Klein, M. C., Silverman, D., Corson-Rikert, J., Davidson, E., Ellis, P., et al. (2011). A pilot for improving depression care on college campuses: Results of the college breakthrough series-depression (CBS-D) project. Journal of American College Health, 59(7), 628–639. https://doi.org/10.1080/07448481.2010.528097.

Connors, E. H., Stephan, S. H., Lever, N., Ereshefsky, S., Mosby, A., & Bohnenkamp, J. (2016). A national initiative to advance school mental health performance measurement in the US. Advances in School Mental Health Promotion, 9(1), 50–69.

Conradi, L., Wilson, C., Agosti, J., Tullberg, E., Richardson, L., Langan, H., et al. (2011). Promising practices and strategies for using trauma-informed child welfare practice to improve foster care placement stability: A breakthrough series collaborative. Child Welfare, 90(6), 207–225.

Dix, K. L., Slee, P. T., Lawson, M. J., & Keeves, J. P. (2012). Implementation quality of whole-school mental health promotion and students’ academic performance. Child and Adolescent Mental Health, 17(1), 45–51. https://doi.org/10.1111/j.1475-3588.2011.00608.x.

Durlak, J. A., Weissberg, R. P., Dymnicki, A. B., Taylor, R. D., & Schellinger, K. B. (2011). The impact of enhancing students’ social and emotional learning: A meta-analysis of school-based universal interventions. Child Development, 82(1), 405–432.

Flannery, K. B., Fenning, P., Kato, M. M., & McIntosh, K. (2014). Effects of school-wide positive behavioral interventions and supports and fidelity of implementation on problem behavior in high schools. School Psychology Quarterly, 29(2), 111.

Ghandour, R. M., Flaherty, K., Hirai, A., Lee, V., Walker, D. K., & Lu, M. C. (2017). Applying collaborative learning and quality improvement to public health: Lessons from the collaborative improvement and innovation network (CoIIN) to reduce infant mortality. Maternal and Child Health Journal, 21(6), 1318–1326. https://doi.org/10.1007/s10995-016-2235-2.

Greenberg, M. T., Weissberg, R. P., O’Brien, M. U., Zins, J. E., Fredericks, L., Resnik, H., et al. (2003). Enhancing school-based prevention and youth development through coordinated social, emotional, and academic learning. American Psychologist, 58(6/7), 466–474. https://doi.org/10.1037/0003-066x.58.6-7.466.

Haine-Schlagel, R., Brookman-Frazee, L., Janis, B., & Gordon, J. (2013). Evaluating a learning collaborative to implement evidence-informed engagement strategies in community-based services for young children. Child & Youth Care Forum, 42(5), 457–473. https://doi.org/10.1007/s10566-013-9210-5.

Hanson, R. F., Self-Brown, S., Rostad, W. L., & Jackson, M. C. (2016). The what, when, and why of implementation frameworks for evidence-based practices in child welfare and child mental health service systems. Child Abuse and Neglect, 53, 51–63. https://doi.org/10.1016/j.chiabu.2015.09.014.

Health Resources and Services Administration Maternal and Child Health Bureau. (2019). Collaborative improvement & innovation networks (CoIINs). Retrieved August 28, 2019 from https://mchb.hrsa.gov/maternal-child-health-initiatives/collaborative-improvement-innovation-networks-coiins.

Hirai, A. H., Sappenfield, W. M., Ghandour, R. M., Donahue, S., Lee, V., & Lu, M. C. (2018). The collaborative improvement and innovation network (CoIIN) to reduce infant mortality: An outcome evaluation from the US South, 2011 to 2014. American Journal of Public Health. https://doi.org/10.2105/AJPH.2018.304371.

Hoagwood, K., Burns, B. J., Kiser, L., Ringeisen, H., & Schoenwald, S. K. (2001). Evidence-based practice in child and adolescent mental health services. Psychiatric Services, 52(9), 1179–1189. https://doi.org/10.1176/appi.ps.52.9.1179.

Hoover, S., Lever, N., Sachdev, N., Bravo, N., Schlitt, J., Acosta Price, O., et al. (2019). Advancing comprehensive school mental health: Guidance from the field. Baltimore, MD: National Center for School Mental Health. University of Maryland School of Medicine.

Hoover, S., Sapere, H., Lang, J. M., Nadeem, E., Dean, K., & Vona, P. (2018). Statewide implementation of an evidence-based trauma intervention in schools. School Psychology Quarterly, 33, 44.

Kase, C., Hoover, S., Boyd, G., West, K. D., Dubenitz, J., Trivedi, P. A., et al. (2017). Educational outcomes associated with school behavioral health interventions: A review of the literature. Journal of School Health, 87(7), 554–562.

Kataoka, S. H., Rowan, B., & Hoagwood, K. E. (2009). Bridging the divide: In search of common ground in mental health and education research and policy. Psychiatric Services, 60, 1510–1515. https://doi.org/10.1176/ps.2009.60.11.1510.

Kessler, R. C., Amminger, G. P., Aguilar-Gaxiola, S., Alonso, J., Lee, S., & Üstün, T. B. (2007). Age of onset of mental disorders: A review of recent literature. Current Opinion in Psychiatry, 20(4), 359–364. https://doi.org/10.1097/YCO.0b013e32816ebc8c.

Kieling, C., Rohde, L. A., Baker-Henningham, H., Belfer, M., Conti, G., Ertem, I., et al. (2011). Child and adolescent mental health worldwide: Evidence for action. The Lancet, 378(9801), 1515–1525. https://doi.org/10.1016/S0140-6736(11)60827-1.

Langley, A. K., Nadeem, E., Kataoka, S. H., Stein, B. D., & Jaycox, L. H. (2010). Evidence-based mental health programs in schools: Barriers and facilitators of successful implementation. School Mental Health, 2(3), 105–113.

Lendrum, A., Humphrey, N., & Wigelsworth, M. (2013). Social and emotional aspects of learning (SEAL) for secondary schools: Implementation difficulties and their implications for school-based mental health promotion. Child and Adolescent Mental Health, 18(3), 158–164. https://doi.org/10.1111/camh.12006.

Lyon, A. R., Borntrager, C., Nakamura, B., & Higa-McMillan, C. (2013). From distal to proximal: Routine educational data monitoring in school-based mental health. Advances in School Mental Health Promotion, 6(4), 263–279.

McIntosh, K., Kelm, J. L., & Canizal Delabra, A. (2016). In search of how principals change: A qualitative study of events that help and hinder administrator support for school-wide PBIS. Journal of Positive Behavior Interventions, 18(2), 100–110. https://doi.org/10.1177/1098300715599960.

Mental Health Technology Transfer Center Network. (2020). School mental health. Retrieved December 21, 2019 from https://mhttcnetwork.org/schoolmentalhealth.

Merikangas, K. R., He, J. P., Brody, D., Fisher, P. W., Bourdon, K., & Koretz, D. S. (2010). Prevalence and treatment of mental disorders among US children in the 2001–2004 NHANES. Pediatrics, 125(1), 75–81. https://doi.org/10.1542/peds.2008-2598.

Mold, J. W., Fox, C., Wisniewski, A., Lipman, P. D., Krauss, M. R., Robert Harris, D., et al. (2014). Implementing asthma guidelines using practice facilitation and local learning collaboratives: A randomized controlled trial. Annals of Family Medicine, 12(3), 233–240. https://doi.org/10.1370/afm.1624.

Nadeem, E., Olin, S. S., Hill, L. C., Hoagwood, K. E., & Horwitz, S. M. (2014). A literature review of learning collaboratives in mental health care: Used but untested. Psychiatric Services, 65(9), 1088–1099. https://doi.org/10.1176/appi.ps.201300229.

National Center for Healthy Safe Children. (2020). Retrieved June 6, 2019 from https://healthysafechildren.org/.

National Center for School Mental Health. (2020). School health assessment and performance evaluation system (SHAPE). Retrieved January 13, 2020 from http://theshapesystem.com/.

Nix, M., McNamara, P., Genevro, J., Vargas, N., Mistry, K., Fournier, A., et al. (2018). Learning collaboratives: Insights and a new taxonomy from AHRQ’s two decades of experience. Health Affairs, 37(2), 205–212. https://doi.org/10.1377/hlthaff.2017.1144.

Oyeku, S. O., Wang, C. J., Scoville, R., Vanderkruik, R., Clermont, E., McPherson, M. E., et al. (2012). Hemoglobinopathy learning collaborative: Using quality improvement (QI) to achieve equity in health care quality, coordination, and outcomes for sickle cell disease. Journal of Health Care for the Poor and Underserved, 23(3A), 34–48. https://doi.org/10.1353/hpu.2012.0127.

Palinkas, L. A., Aarons, G. A., Horwitz, S., Chamberlain, P., Hurlburt, M., & Landsverk, J. (2011). Mixed method designs in implementation research. Administration and Policy in Mental Health and Mental Health Services Research, 38(1), 44–53. https://doi.org/10.1007/s10488-010-0314-z.

Parke, C. S. (2012). Making use of district and school data. Practical Assessment, Research, and Evaluation, 17(1), 10.

Pinkelman, S. E., McIntosh, K., Rasplica, C. K., Berg, T., & Strickland-Cohen, M. K. (2016). Perceived enablers and barriers related to sustainability of school-wide positive behavioral interventions and supports. Behavioral Disorders, 40(3), 171–183. https://doi.org/10.17988/0198-7429-40.3.171.

Powell, B. J., Waltz, T. J., Chinman, M. J., Damschroder, L. J., Smith, J. L., Matthieu, M. M., et al. (2015). A refined compilation of implementation strategies: Results from the expert recommendations for implementing change (ERIC) project. Implementation Science, 10(1), 1. https://doi.org/10.1186/s13012-015-0209-1.

Proctor, E. K., Landsverk, J., Aarons, G., Chambers, D., Glisson, C., & Mittman, B. (2009). Implementation research in mental health services: an emerging science with conceptual, methodological, and training challenges. Administration and Policy in Mental Health and Mental Health Services Research, 36(1), 24–34.

Rones, M., & Hoagwood, K. E. (2000). School-based mental health services: A research review. Clinical Child and Family Psychology Review, 3(4), 223–241. https://doi.org/10.1023/A:1026425104386.

Sanchez, A., Cornacchio, D., Poznanski, B., Golik, A., Chou, T., & Comer, J. (2018). The effectiveness of school-based mental health services for elementary-aged children: A meta-analysis. Journal of the American Academy of Child and Adolescent Psychiatry, 57(3), 153–165.

School-Based Health Alliance. (2020). Quality counts: About the national quality initiative (NQI). Retrieved October 20, 2019 from https://www.sbh4all.org/current_initiatives/nqi/.

Splett, J., Owell, A., Perales, K., Eber, L., Barrett, S., Putnam, R., et al. (2016). Interconnected systems framework—Implementation Inventory (ISF-II). Columbia: University of South Florida, University of South Carolina.

Stephan, S., Brandt, N., Lever, N., Acosta-Price, O., & Connorsa, E. (2012). Key priorities, challenges and opportunities to advance an integrated mental health and education research agenda. Advances in School Mental Health Promotion, 5(2), 125–138. https://doi.org/10.1080/1754730X.2012.694719.

Stephan, S. H., Connors, E. H., Arora, P., & Brey, L. (2013). A learning collaborative approach to training school-based health providers in evidence-based mental health treatment. Children and Youth Services Review, 35(12), 1970–1978. https://doi.org/10.1016/j.childyouth.2013.09.008.

Stephan, S., Hurwitz, L., Paternite, C., & Weist, M. (2010). Critical factors and strategies for advancing statewide school mental health policy and practice. Advances in School Mental Health Promotion, 3(3), 48–58.

Stephan, S., Mulloy, M., & Brey, L. (2011). Improving collaborative mental health care by school-based primary care and mental health providers. School Mental Health, 3(2), 70–80. https://doi.org/10.1007/s12310-010-9047-0.

Stephan, S., Paternite, C., Grimm, L., & Hurwitz, L. (2014). School mental health: The impact of state and local capacity-building training. International Journal of Education Policy and Leadership, 9(7), n7.

Stoll, L., Bolam, R., McMahon, A., Wallace, M., & Thomas, S. (2006). Professional learning communities: A review of the literature. Journal of Educational Change, 7(4), 221–258. https://doi.org/10.1007/s10833-006-0001-8.

Suldo, S. M., Gormley, M. J., DuPaul, G. J., & Anderson-Butcher, D. (2014). The impact of school mental health on student and school-level academic outcomes: Current status of the research and future directions. School Mental Health, 6(2), 84–98.

Taylor, R. D., Oberle, E., Durlak, J. A., & Weissberg, R. P. (2017). Promoting positive youth development through school-based social and emotional learning interventions: A meta-analysis of follow-up effects. Child Development, 88(4), 1156–1171.

U.S. Department of Education, Office of Planning, Evaluation and Policy Development, Policy and Program Studies Service. (2018). Collaboration for safe and healthy schools: Study of coordination between school climate transformation grants and project AWARE. Retrieved from https://www2.ed.gov/rschstat/eval/school-safety/school-climate-transformation-grants-aware-full-report.pdf.

Waxman, R., & Weist, M. (1999). Toward collaboration in the growing education–mental health interface. Clinical Psychology Review, 19(2), 239–253.

Weist, M. D., & Evans, S. W. (2005). Expanded school mental health: Challenges and opportunities in an emerging field. Journal of Youth and Adolescence, 34, 3–6. https://doi.org/10.1007/s10964-005-1330-2.

Weist, M. D., Hoover, S., Lever, N., Youngstrom, E. A., George, M., McDaniel, H. L., et al. (2019). Testing a package of evidence-based practices in school mental health. School Mental Health, 11(4), 692–706. https://doi.org/10.1007/s12310-019-09322-4.

Weist, M., Lever, N., Stephan, S., Youngstrom, E., Moore, E., Harrison, B., et al. (2009a). Formative evaluation of a framework for high quality, evidence-based services in school mental health. School Mental Health, 1(4), 196–211. https://doi.org/10.1007/s12310-009-9018-5.

Weist, M. D., Mellin, E. A., Chambers, K. L., Lever, N. A., Haber, D., & Blaber, C. (2012). Challenges to collaboration in school mental health and strategies for overcoming them. Journal of School Health, 82(2), 97–105. https://doi.org/10.1111/j.1746-1561.2011.00672.x.

Weist, M. D., Paternite, C. E., Wheatley-Rowe, D., & Gall, G. (2009b). From thought to action in school mental health promotion. International Journal of Mental Health Promotion, 11(3), 32–41.

Acknowledgements

We are deeply grateful to the twenty-four school districts who participated in the quality improvement collaborative and contributed evaluation data to this project.

Funding

This study was funded by the Health Resources and Services Administration, Maternal Child Health Bureau, in partnership with the School-Based Health Alliance (Grant No. U45MC27804) and also by National Institute of Mental Health (Grant No. K08 MH116119).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Availability of Data and Material

Not applicable.

Code Availability

Not applicable.

Ethical Approval

This study was reviewed and approved by the University of Maryland Human Research Protections Office as exempt from Institutional Review Board review due to being considered Not Human Subjects Research. Participants were informed that their survey responses are confidential. This article does not contain any studies with animals performed by any of the authors.

Cite this article

Connors, E.H., Smith-Millman, M., Bohnenkamp, J.H. et al. Can We Move the Needle on School Mental Health Quality Through Systematic Quality Improvement Collaboratives?. School Mental Health 12, 478–492 (2020). https://doi.org/10.1007/s12310-020-09374-x
