Abstract

ChatGPT is a large language model that was trained by OpenAI. It is capable of generating human-like text and can be used for a variety of tasks such as text completion, text generation, and conversation simulation. It is based on the GPT (generative pre-trained transformer) architecture, which uses deep learning techniques to generate natural language text. The preceding lines were written by ChatGPT in response to a question, and when they were run through a plagiarism checker, the text came up as unique. So while the advantages are many, there are also significant disadvantages that could shake the very foundations of evidence-based medicine.

Introduction

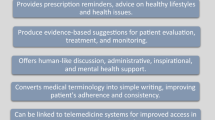

ChatGPT is an AI-powered chatbot developed by the artificial intelligence (AI) research company OpenAI and launched in November 2022. The chatbot uses a field of machine learning known as natural language processing (NLP) to generate responses to users’ questions and prompts. ChatGPT defines itself as follows: “Its AI is trained on a dataset of internet text and can generate human-like text in response to prompts. It can be used for a variety of natural language processing tasks such as language translation, text summarization, and question answering.” In short, ChatGPT works through a conversational interface with its user, responds to follow-up questions, admits and corrects mistakes, rejects inappropriate requests, and even challenges incorrect premises. GPT stands for “generative pre-trained transformer”, a type of model capable of processing and producing strings of complex thoughts and ideas. ChatGPT was trained on a very large body of text; when a prompt is entered, its transformer model draws on the relationships it has learned between different pieces of information and predicts what text belongs together in that context. One disadvantage is that ChatGPT may make cheating easier for students, since the AI needs only a few minutes to furnish a brand-new paper for a journal publication, project, or thesis. While ChatGPT has the potential to improve care for surgical patients, it is important to consider its limitations and use it as a supplement to, rather than a replacement for, human expertise and surgical judgment.
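The idea of guessing which text belongs together in a given context can be illustrated with a deliberately tiny sketch. This is not how ChatGPT is implemented: the sketch swaps the transformer for simple counts of adjacent word pairs (a bigram model), and the training sentences and the function names `train_bigrams` and `predict_next` are made up purely for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical toy corpus; a real model is trained on vastly more text.
corpus = (
    "the surgeon closes the wound "
    "the nurse dresses the wound "
    "the surgeon reviews the wound"
)
model = train_bigrams(corpus)
print(predict_next(model, "the"))  # prints: wound
```

Even this toy version shows why such a system can sound confident about a word it has simply seen most often, and why it has nothing to say about words that never appeared in its training text.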

ChatGPT in Anaesthesia

In anaesthesia, ChatGPT could support clinical decision-making by analysing the patient’s medical history, vital signs, pain tolerance, and other data, and on that basis suggesting the most appropriate anaesthetic agent or dosage for the surgical intervention and providing personalised recommendations for managing postoperative pain and other symptoms. ChatGPT could also help deliver preoperative education. Preoperative patients are often anxious and may have questions or concerns about their upcoming surgery, so ChatGPT could be used to provide personalised, evidence-based information to help them prepare mentally. This could include information about the risks, complications, and benefits of different anaesthetic agents, what to expect during the procedure, and how to manage postoperative pain and other symptoms (https://www.hoopcare.com/us-blog/the-potential-impact-of-chatgpt-on-anesthesia).

ChatGPT in Preoperative Surgical Care

ChatGPT can be used to streamline the scheduling of appointments between surgical patients and the surgeon concerned, to provide information on preoperative, intraoperative, and postoperative care, and to explain surgical wound care to the patient’s relatives after discharge. Patients and relatives will no longer need to call the hospital receptionist or hospital management to get this information. In essence, the hospital reception can handle a much larger patient load and respond faster. ChatGPT plays the role of a “virtual assistant”, especially for patients from rural or remote areas. These virtual assistants can be accessed through a variety of platforms, such as websites, mobile apps, Amazon’s Alexa, or Google Assistant.

ChatGPT During Hospital Admission

ChatGPT could be trained to transcribe patient medical records accurately and efficiently, freeing up healthcare professionals to spend more time interacting with patients and providing care. This could also help reduce the risk of errors in medical records and of a missed diagnosis, especially when a patient with limited literacy cannot clearly express the relevant history. Official letters, including requests for insurance approval or clearance prior to surgery, could also be drafted with ChatGPT, which would save a tremendous amount of time.

ChatGPT for Surgeons

ChatGPT is a powerful tool for preoperative planning of complex surgical interventions, giving surgeons new opportunities to plan procedures and anticipate potential complications. The technology can analyse vast amounts of medical literature in real time and summarise it, making it easier to identify patterns and trends and to flag quickly potential complications that might be missed by the traditional, tedious manual search through the literature. In one study, ChatGPT set out the pros and cons of using rapamycin in light of the preclinical evidence of potential life extension in animals [1].

ChatGPT as an Author in Surgical Publications

The potential of AI-assisted medical education was reviewed in a paper in which ChatGPT was a co-author [https://www.medrxiv.org/content/10.1101/2022.12.19.22283643v1.full]. ChatGPT demonstrated a high level of concordance and insight in its explanations. These results suggested that large language models may have the potential to assist with medical education and potentially clinical decision-making as well. With ChatGPT appearing as a co-author on papers in a number of medical journals, editors, researchers, and publishers are now debating the place of such AI tools in the published literature and whether it is appropriate to cite the bot as an author [2]. Publishers are racing to create policies for the chatbot, but the picture remains unclear and controversial. Cureus has called for a “Medical Journal Turing Test”, a contest for case reports written with the assistance of ChatGPT (https://www.cureus.com/newsroom/news/164).

ChatGPT as an Editor in Surgical Publications

For biomedical journal editors, ChatGPT should be extremely handy. It may be an excellent plagiarism detection tool. It could take care of language and syntax in manuscripts, and statistical checks could become easier. ChatGPT should be able to detect whether a manuscript is a human creation or the product of a machine or software. It may completely transform the way we edit journals, and there may be a new era in how scientific advances are disseminated. For example, while someone is operating with a new surgical technique, a camera and software equipped with GPT could put the innovation into the public domain or a scientific communication channel at that very instant. It will be “think it, publish it” or “blink it, publish it”.

ChatGPT as a Focused Learning Tool

Medical education today revolves around memorising and retaining information, and the capacity to retain is not infinite. AI systems like ChatGPT could help us shift the emphasis towards curating and applying medical knowledge. This transition will help medical students and physicians learn more efficiently, because complex concepts can be explained in language of varying complexity. Thus, an explanation of Tetralogy of Fallot can be tailored to the understanding of a 10th grader, a 1st-year MBBS student, or a cardiology DNB fellow, depending on who is asking.
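One way to picture this tailoring is as a prompt that names both the topic and the audience. The sketch below only builds the prompt text and does not call ChatGPT or any other service; the function name `build_explanation_prompt`, the `AUDIENCE_STYLES` table, and the style instructions in it are hypothetical choices made for illustration.

```python
# Hypothetical style instructions for the three audiences named in the text.
AUDIENCE_STYLES = {
    "10th grader": "Use everyday words and one simple analogy; avoid jargon.",
    "1st-year MBBS student": "Use basic anatomy and physiology terms and define each new term.",
    "cardiology DNB fellow": "Use specialist terminology and focus on haemodynamics and management.",
}


def build_explanation_prompt(topic, audience):
    """Return a prompt asking for an explanation of `topic` pitched at `audience`."""
    if audience not in AUDIENCE_STYLES:
        raise ValueError(f"Unknown audience: {audience!r}")
    return f"Explain {topic} to a {audience}. {AUDIENCE_STYLES[audience]}"


for audience in AUDIENCE_STYLES:
    print(build_explanation_prompt("Tetralogy of Fallot", audience))
```

The same topic yields three different prompts, and the quality of the answer will depend on how well the audience is described, which is the skill of writing a good prompt.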

Disadvantages of ChatGPT

But on this seemingly endless plane of progress, an imperfect tool is being deployed without the necessary guardrails in place. Although there may be acceptable uses of ChatGPT across medical education and administrative tasks, we cannot endorse the program’s use for clinical purposes, at least in its current form. Future versions of ChatGPT will certainly improve by leaps and bounds in accuracy and precision. Doctors need a seat at the table for any workflow improvement, and this one is no different. The possible uses of ChatGPT are limited only by our creativity and our ability to write a good chat “prompt”. What we fear is that the future will have two classes: one group that can use these tools seamlessly and effectively in their personal and work lives, and another that cannot and will struggle to keep pace.

“The development of full artificial intelligence could spell the end of the human race. It would take off on its own and redesign itself at an ever-increasing rate. Humans, who are limited by slow biological evolution, could not compete and would be superseded.” Stephen Hawking.

No matter how informative ChatGPT is, it lacks the ability to provide personalised feedback or have a real-time conversation with a patient. This can limit the learning experience and make it difficult for surgical patients to fully understand a concept or ask for clarification. ChatGPT relies on technology to function, which means it may not always be available or may experience technical difficulties.

The release of a powerful tool such as ChatGPT will instil awe in the public, but in medicine, it needs to elicit appropriate action to evaluate its capabilities, mitigate its harms, and facilitate its optimal use.

1. What will be its legal status? Will the optimum treatment offered by a doctor be compared to the alternative offered by ChatGPT in a court of law? This is a real concern because ChatGPT sometimes produces text that sounds plausible or convincing but is incorrect or nonsensical in practice. This phenomenon of “hallucination” can be catastrophic because a novice may treat the output as sacrosanct.

2. Scientists will have difficulty differentiating between real research and fake abstracts generated by ChatGPT. The risk of misinformation is even greater for patients, who might use ChatGPT to research their symptoms, as many currently do with Google and other search engines.

3. If the data used for training are biased, then the output of GPT can be biased. For example, the “medicine data” in certain fields may be limited, and as a result GPT may not be able to answer. Another limitation is that its data are accurate only up to the year 2021; GPT will not have any later events in its database. Keep in mind that GPT does not use the internet.

ChatGPT is only as knowledgeable as the information it has been trained on. It may not have access to the most up-to-date information or be able to provide a comprehensive understanding of a topic, because it feeds on human-generated texts that are not updated immediately. Researchers have also raised concerns about copyright infringement by ChatGPT because its outputs are based on human-generated texts. Unlike with Google, verifying the accuracy of ChatGPT’s output is effortful and time-consuming because it returns raw text without any links or citations.

At present, ChatGPT often fails to load or respond because of the high number of simultaneous requests, which is a significant problem. Users also need a high-speed internet connection to use it. In a nutshell, it tends to write plausible but incorrect content with confidence; it can only be used via an OpenAI endpoint, so the user becomes a “slave” of the product; and, finally, it is an extremely expensive model.

Conclusion

An AI that can write like a human but think and analyse like a machine is a revolutionary tool to have. But since it is a new kid on the block, users must remember that although it speaks confidently, the actual message can be erroneous and out of date. Substituting a chatbot to write our essays and journal articles would be a definite retrograde evolutionary step in medical publishing, and technology should evolve to circumvent this too. If it is used to simplify processes that consume a lot of hospital staff time and patience, and to make them more comfortable for the patient, then that would be a step in the right direction for the future.

References

1. ChatGPT Generative Pre-trained Transformer; Zhavoronkov A (2022) Rapamycin in the context of Pascal’s Wager: generative pre-trained transformer perspective. Oncoscience 9:82–84. https://doi.org/10.18632/oncoscience.571

2. O’Connor S (2023) ChatGPT. Open artificial intelligence platforms in nursing education: tools for academic progress or abuse? Nurse Educ Pract 66:103537. https://doi.org/10.1016/j.nepr.2022.103537

Author information

Contributions

Conception or design of the work: Aditya, Kaushik, and Neela; data collection: Aditya and Pankaj; data analysis and interpretation: Vipul, Neela, Sandeep, and Kumar; Drafting the article: Kaushik, Neela, Vipul, Pankaj, and Sandeep; Critical revision of the article: Aditya, Neela, and Vipul; Final approval of the version to be published: Aditya, Neela, Kaushik, Vipul, Pankaj, and Sandeep.

Ethics declarations

Conflict of Interest

The authors declare no competing interests.

About this article

Cite this article

Bhattacharya, K., Bhattacharya, A.S., Bhattacharya, N. et al. ChatGPT in Surgical Practice—a New Kid on the Block. Indian J Surg 85, 1346–1349 (2023). https://doi.org/10.1007/s12262-023-03727-x

DOI: https://doi.org/10.1007/s12262-023-03727-x