Abstract

High-yielding potato crops in Canterbury have achieved 90 t ha−1 or more. However, soil-borne diseases and poor soil structure are primary factors responsible for reducing this potential to an average of 55 t ha−1. The interaction between these factors was measured by growing the cultivars ‘Russet Burbank’ and ‘Innovator’ in 15 fields, grouped into four categories related to cropping history that influences soil health: soil-restorative (>7 years of grass) and non-restorative (>6 annual crops) with presence or absence of potato crops in the previous 10 years. Although predominantly grass histories with previous potato crops showed greater severity of soil-borne diseases than annual crop history without previous potatoes, this did not lead to yield losses. Improved soil quality increased yields by an average 10 t ha−1 regardless of disease pressure. Modelling suggested that early planting, maintaining crop canopies and managing water supply were also key factors for achieving high potato yields.

Resumen

Los cultivos de papa de alto rendimiento en Canterbury han alcanzado las 90 t ha−1 o más. No obstante, las enfermedades del suelo y la estructura pobre del suelo son los factores primarios responsables de la reducción de este potencial a un promedio de 55 t ha−1. Se midió la interacción entre estos factores mediante el cultivo de las variedades “Russet Burbank” e “Innovator” en 15 campos, agrupados en cuatro categorías relacionadas a la historia del cultivo que influencia la salud del suelo: restauración del suelo (>7 años de pasto) y no restauración (>6 cultivos anuales) con la presencia o ausencia de cultivos de papa en los 10 años previos. Aunque las historias del pasto predominante con cultivo de papa previo mostraron mayor severidad de las enfermedades del suelo que la historia de cultivos anuales sin papa previa, esto no condujo a pérdidas de rendimiento. El mejoramiento de la calidad del suelo aumentó los rendimientos en un promedio de 10 t ha−1 independientemente de la presión de enfermedades. El modelado sugirió que la siembra temprana, el mantenimiento del follaje y el manejo del suministro de agua, también fueron factores clave para lograr altos rendimientos de papa.


Introduction

Potato yields in the Canterbury region of New Zealand currently average 55 t ha−1, which is well below the measured potential of around 90 t ha−1 (Jamieson et al. 2003). Nutrient supply has been shown to be adequate for the current level of production (Reid et al. 2011, 2016), and crops are irrigated to supplement rainfall, so water should not be limiting to crop growth and yield. In a related study, Sinton et al. (2013) found that pests and diseases above-ground are usually well managed through regular foliar-applied pesticide sprays. However, the seed- and soil-borne diseases Rhizoctonia stem canker, Spongospora root galling and tuber powdery scab are not well controlled. These can reduce gross yields by as much as 36 t ha−1 in some crops when both diseases are widespread and severe. Sinton et al. (2013) also found that the yield of each potato crop was made up of a range of individual plant responses to localized biotic and abiotic stresses; plants unaffected by these yielded to potential, whereas disease-stressed plants suffered up to the equivalent of 69 t ha−1 yield loss. There was also some influence of soil conditions on crop performance. Hernandez-Ramirez et al. (2014) showed that there was a substantial effect of soil conditions on root performance, which would influence access to resources and therefore crop performance. That study was with barley which has much stronger roots than potato, so the expectation would be that potato crops would be affected more severely. Falloon et al. (2016) reported that potato plants inoculated with Spongospora subterranea, then grown in the field and laboratory, used less water than healthy plants, and subsequently had reduced growth rates and yield. An extensive review by Brierley et al. (2013) of the management of Rhizoctonia solani with respect to potato production quoted many instances of Rhizoctonia diseases causing yield reduction. Rhizoctonia solani and S. subterranea have broad host ranges, including species from at least 21 diverse plant families (Wright et al. 2017; Tsror 2010; Qu and Christ 2006; Arcila Aristizabal et al. 2013). In addition, S. subterranea resting spores can remain viable in the soil for many years (Harrison et al. 1997). Thus, infection from these pathogens is difficult to avoid when selecting fields to grow potatoes.

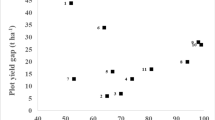

Previous field research has shown that moderately severe soil-borne diseases can reduce potato yields when crops are grown in degraded soils (Sinton et al. 2013), where degradation is often caused by annual cropping cultivation practices and lack of restorative (usually grass) phases. Beare et al. (2003) found that soil aggregate stabilities (a common measure of quality of soil physical structure) below 1.5 mean weight diameter (MWD) increased the risk of crops not reaching their regional average yields (Fig. 1). Soils with low aggregate stability are less able to withstand the impacts of cultivation, trafficking and water drops from irrigation or rainfall. This leads to dispersal of fine soil particles that can fill the surrounding soil pores, reducing the availability of water (and water holding capacity), air and nutrients needed for root and subsequent crop growth. Beare et al. (2003) found that pasture-based soils had greater average aggregate stability (2.36 MWD) than arable soils (1.72 MWD), where both had been in their respective land uses for longer than 9 years, and that compared with arable crops, long-term grass growth could create high volumes of high-quality organic matter.

Fig. 1 The relationship between soil aggregate stability (mean weight diameter, MWD, mm) and yield for 105 Canterbury arable crops. The arrows indicate a cut-off point (1.5 MWD, mm) where reduced soil quality risks achieving yields less than the regional average for that crop. Reproduced from Beare et al. (2003), with permission

In terms of land use in Canterbury, even on individual farms, potatoes are not a major crop: only 2400 ha of Canterbury land is devoted to vegetable production, compared with 210,000 ha in grain production and 2.25 million ha in grass/clover pastures for grazing animals (Statistics New Zealand 2016). There is therefore no need to use the same fields for potato production at frequent intervals, and intervals between potato crops may be 7 to 10 years.

The present study aimed to test the hypothesis that growing potato crops in fields with enhanced soil structure could be a simple way to elevate potato yields. Firstly, soil conditions conducive to root growth (because of reduced soil compaction) would cost-effectively increase resource availability, as well as reducing waste of current inputs. Secondly, vigorously growing plants may be less susceptible to soil-borne diseases, the causative pathogens of which are present in most of the arable soils in Canterbury. If the outcome was positive, then a practical method to quickly measure soil structure could be used by growers for informed field selection to maximize yields.

Materials and Methods

Seed Tuber Treatment

Because no potato seed tubers can be guaranteed to be free of tuber-borne diseases, this study used formaldehyde as a seed surface sterilant experimental treatment. Treating seed tubers with a formaldehyde solution reduces the severity of powdery scab on the daughter tubers at harvest (Falloon et al. 1996; Falloon 2008), and may also help reduce the severity of other seed tuber borne diseases. A greenhouse experiment was carried out to assess the effects that these pathogens may have had on subsequent disease in the crops, and to discern seed tuber borne inoculum from that potentially sourced from the field soils. These same seed lines were then planted in 15 fields categorized on the basis of whether they had predominantly grass or annual cropping histories (to produce a range of soil structural qualities), and that included previous potato crops or not (to influence the potential of the soils to harbor potato-related pathogens).

In early spring, whole seed tubers (weight range 80 to 150 g) from one line of each of the potato cultivars ‘Russet Burbank’ and ‘Innovator’ were removed from cool storage and placed into 25 kg lots in sacks, which were then arranged in wooden crates. Half of the sacks were dipped for 2 min in a tank containing 0.39% formaldehyde solution; the other half were not dipped. All dipped crates were then moved to a ventilated potato store and air-dried for 48 h.

Greenhouse Experiment

A random selection of the formaldehyde-treated and untreated ‘Russet Burbank’ and ‘Innovator’ seed tuber lines were grown in a greenhouse (10 tubers of each treatment, total 80 tubers) as individual plants in plastic planter bags (10 L capacity). The greenhouse trial was planted on 25 November 2016 (late spring) and harvested on 17 February 2017 (84 days after planting). The planter bags contained potting mix that had been tested negative for the presence of all soil-borne pathogen DNA, including S. subterranea and R. solani. The potting mix contained 70%, by volume, of aged bark and 30% of coarse sand, and contained 1.45 g L−1 zeolite, 3.2 g L−1 dolomite lime, 2.0 g L−1 gypsum, 3.2 g L−1 of a resin-coated 16–9–12–2 N:P:K:MgO fertilizer mix containing trace elements, 1.2 g L−1 superphosphate, 320 mg L−1 potassium sulphate and 320 mg L−1 calcium nitrate. The plants were frequently watered and the temperature was maintained at 17 °C in natural daylight, conditions likely to be conducive to development of soil-borne diseases. No black scurf or powdery scab lesions were visible on the tubers at planting. After plant growth, just before senescence, the stems of each plant were cut off at the soil surface, the plants were removed from their planter bags, and the tubers, roots and underground stems of each plant were washed free of potting mix. Shoots were assessed for any diseases, and underground stems and tubers were scored for diseases caused by R. solani and S. subterranea using the incidence and severity scores described in detail by Sinton et al. (2018). The stem canker severity score ranged from 0 (no disease) to 18 (stem death), and stem canker incidence was the number of stems per plant infected by the disease. Spongospora root gall severity score ranged from 0 (no disease) to 3 (more than 20 root galls per plant). Root gall incidence was recorded as presence/absence of the disease per plant. Plants were also checked for the presence of any other diseases.

Field Selection

All of the field experiment sites were on farms on the coastal plain of Canterbury in the South Island of New Zealand, between latitudes 43.8° and 44.4° S. Webb et al. (2000) stated that “The plains of the eastern South Island consist of a series of coalescing fans. The major variations in soil properties are related to the age of fan formation and to natural drainage conditions”. The individual sites were all on silt loams of similar geological origin, and the major variation between sites was the depth to underlying stones. This mostly affected water-holding capacity in the root zone. Particle size distributions vary little among the soils (Webb et al. 2000), with United States of America taxonomic descriptions of either Typic Dystrustepts or Udic/Typic Haplustepts (Table 1).

Each of the 15 selected fields was growing a commercial potato crop of either ‘Innovator’ or ‘Russet Burbank’, and fitted one of four cropping history categories. The fields were a combination of two conditions: “ex-potato” or “no potato”, respectively, one or no potato crop within the last 10 years; “more grass” or “less grass”, respectively, 7 or more years grass, or 6 or more years arable crops in the 10 year history. Thus, the four field categories were:

1. Ex-potato, more grass
2. Ex-potato, less grass
3. No potato, more grass
4. No potato, less grass

Seven of the 15 fields were located near Timaru in South Canterbury and eight in the Rakaia/Ashburton (mid Canterbury) area. All selected fields had topsoil depths of ≅400 mm, over compacted subsoil layers (often caused by cultivation) or gravels. Soil water supply to depth would depend on the ability of crop roots to penetrate the different compacted layers. Fields 1, 5 and 10 (Table 2) had stony layers starting at 400 mm depth, possibly limiting availability and storage of soil water for the potato crops during the season, even where irrigation was applied.

Not all crop history categories were equally available (Table 2). Only one field was of cropping history Category 1, six fields were of Category 2, four were of Category 3, and four were of Category 4. Seven fields had a previous potato crop within the last 10 years, eight fields had no previous potato crops, 10 fields had been predominantly cropped (6 to 9 years) and five fields had been predominantly in grass (7 to 10 years). The potato crops were planted between 11 October and 3 November 2016.

Field Experiment Setup, Planting and Management

The growers all used a generic approach for preparing the soil for potato crops, related to the need to create a fine tilth to reduce problems at harvest and during factory processing of harvested tubers. The fields were all power harrowed, formed into two-row beds and ‘destoned’ to remove stones and soil clods to channels beside the rows. Soil structural measurements were assessed once the potato crops reached full canopy stage.

Once bed forming in each of the 15 crops was completed by each grower, an experimental area was selected and its outer perimeter (six beds by 50 m) was marked out. At planting, the grower switched off the planting mechanism between the markers and applied only their fertilizer and pesticides to the experimental area. Within 10 days of the 15 commercial crops being planted, the four treatments (formaldehyde-treated and untreated for the two seed lines) were planted in a randomized four-plot design (each plot three beds by 20 m) with a two-row planter (row spacing 900 mm, in-row seed spacing 300 mm).

Management strategies for growing the 15 potato crops were guided by one agronomist, who used the most recent soil tests to calculate the nutrient requirement for each crop. Total nitrogen (di-ammonium phosphate at planting and urea and/or calcium ammonium nitrate for in-season side dressings) application ranged from 290 to 370 kg N ha−1 (standard deviation = 28 kg N ha−1). Phosphorus (di-ammonium phosphate, super phosphate and/or triple super) application rates ranged from 70 to 150 kg P ha−1 (standard deviation = 20 kg P ha−1). Potassium (potassium sulphate) application rates ranged from 190 to 360 kg K ha−1 (standard deviation = 43 kg K ha−1). Sulphur (potassium sulphate) application rates ranged from 90 to 215 kg S ha−1 (standard deviation = 40 kg S ha−1). A potato nutrient study (in the form of replicated field trials) from the same region in 2013 (Michel et al. 2013) determined that current nutrient supply was not limiting yields, and a crop survey conducted that same growing season showed that other factors, in particular soil-borne diseases and soil compaction, were the most likely yield-limiting factors.

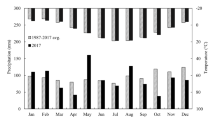

Natural rainfall amounts and timings in summer in the Canterbury region are usually inadequate for maximizing potato yield, and irrigation water is often shared with other crops on farms. Irrigation was based on a schedule provided by the agronomist but this could not always be followed. The aim was to apply water at approx. weekly intervals, with adjustments for rain. Individual crop management records were not analyzed but, despite irrigation, a major yield-limiting factor was likely to have been water shortage.

Soil Quality Measurements

Aggregate stability was measured, along with two simpler methods that farmers could use to determine soil quality. These were: ‘soil structural condition score’, a quick visual field assessment of the state of soil aggregation in relation to its suitability for crop growth (Beare et al. 2003); and ‘crop history score’, a cumulative 10-year score, related to the soil-restorative potential of each previous annual main crop (Sinton et al. 2018).

At the full canopy crop growth stage, a topsoil sample (150 mm3) was removed from one site (ridge) in each experiment for laboratory measurement of aggregate stability. Aggregates (2-4 mm diam.) were separated from the whole soil by non-forced sieving, and these were then air-dried at 25 °C before aggregate stability determination using a wet-sieving method (Kemper and Rosenau 1986). These dried aggregates (50 g) were sieved under water for 20 min on a nest of three sieves (2.0, 1.0 and 0.5 mm mesh sizes). The soil remaining on each sieve was weighed after oven drying at 105 °C. The weight of material remaining on the 2 mm sieve was corrected for 2 to 4 mm stone content. The aggregate stability of each sample was expressed as percent >1 mm, and as mean weight diameter (MWD), using the formula:

$$\mathrm{MWD} = \sum_{i} X_i W_i$$

where Xi is the mean diameter of adjacent sieves and Wi is the proportion of the total sample retained on a sieve.
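The MWD calculation can be illustrated with a minimal Python sketch that sums Xi × Wi over the size classes. The sieve sizes (2.0, 1.0 and 0.5 mm), the 2 to 4 mm starting aggregates and the 50 g sample come from the text; the function name, the treatment of material passing the 0.5 mm sieve as a 0 to 0.5 mm class, and the example masses are assumptions made for illustration only.

```python
# Sketch of a mean weight diameter (MWD) calculation: MWD = sum(X_i * W_i).
# ASSUMPTION: material passing the 0.5 mm sieve is treated as a 0-0.5 mm class.

# (lower, upper) bounds in mm for each size class, largest first.
SIZE_CLASSES_MM = [(2.0, 4.0), (1.0, 2.0), (0.5, 1.0), (0.0, 0.5)]


def mean_weight_diameter(retained_g, total_g=50.0):
    """Return MWD (mm) from oven-dry masses (g) retained on the
    2.0, 1.0 and 0.5 mm sieves, in that order."""
    if len(retained_g) != 3:
        raise ValueError("expected masses for the 2.0, 1.0 and 0.5 mm sieves")
    passed_g = total_g - sum(retained_g)
    if passed_g < 0:
        raise ValueError("retained mass exceeds total sample mass")
    masses = list(retained_g) + [passed_g]
    mwd = 0.0
    for (lower, upper), mass in zip(SIZE_CLASSES_MM, masses):
        x_i = (lower + upper) / 2.0  # mean diameter of adjacent sieves
        w_i = mass / total_g         # proportion of the total sample
        mwd += x_i * w_i
    return mwd


# Hypothetical sample (not data from the study): 20 g, 10 g and 8 g retained.
example_mwd = mean_weight_diameter([20.0, 10.0, 8.0])
print(f"MWD = {example_mwd:.2f} mm")
```

Under these assumptions a sample in which every aggregate survives on the 2 mm sieve has the maximum MWD of 3.0 mm, so measured values express how far the aggregates have broken down from that ceiling.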

Soil structural condition was assessed visually for each field at the full canopy crop growth stage, using a standard pictorial score card (Beare et al. 2003). This is a subjective field assessment of soil structure that examines the relative size, shape and porosity of aggregates, their cohesion and root development in and around the aggregates. The assessment broadly described the relative ability of each soil to host healthy root activity. The ‘soil structural condition score’ ranges from 1 to 10, where 1 = very poorly structured soil, reduced root activity predicted; and 10 = a high proportion of well-formed soil aggregates, increased root activity likely. At the full canopy crop stage, a spade was used to cut a segment of soil 150 mm deep and 200 mm wide from the center of a ridge out to mid-furrow. The soil was transferred to a large tray and carefully spread out. A soil condition score was determined by comparing the soil in the sample with standard photographs.

For the crop history score, crop history for each field was collated for the 10 year period of 2006/07 to 2015/16, and grass/annual crop types were aggregated. A simple ‘soil restorative’ score was then applied to each main annual crop in the 10-year period, depending on the annual crop’s ability to maintain or restore soil structure. Vigorously-rooted crops, including a range of grass species, were given the highest score (‘4’), cereals with moderately vigorous root systems scored ‘3’, brassicas, maize and sweet-corn scored ‘2’, weak-rooted crops such as potatoes, carrots or onions scored ‘1’, and fallow scored ‘0’ (Sinton et al. 2018). These ‘soil restorative’ scores were then aggregated for the 10-year history into a single ‘crop history score’. This scoring system resulted in a maximum score of 40 (10 years of grass) and a theoretical minimum score of 0 (a ten-year fallow). A high value indicated an improved soil structure with increased access for crop roots to air, water and nutrients.
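The scoring rule can be sketched in a few lines of Python. The scores per crop type follow the description above; the dictionary keys, the function name and the example ten-year sequence are hypothetical labels chosen for illustration, and pea is scored as weak-rooted because the Results classify it that way.

```python
# Sketch of the 10-year 'crop history score' (sum of annual soil-restorative scores).
# Crop labels are illustrative; scores follow the scheme described in the text.
RESTORATIVE_SCORE = {
    "grass": 4,                                  # vigorously rooted
    "cereal": 3,                                 # moderately vigorous roots
    "brassica": 2, "maize": 2, "sweetcorn": 2,
    "potato": 1, "carrot": 1, "onion": 1,        # weak-rooted
    "pea": 1,                                    # classed as weak-rooted in the Results
    "fallow": 0,
}


def crop_history_score(history):
    """Sum the restorative scores for a list of ten main annual crops."""
    if len(history) != 10:
        raise ValueError("expected one main crop per year for 10 years")
    return sum(RESTORATIVE_SCORE[crop] for crop in history)


# Ten years of grass gives the maximum score.
print(crop_history_score(["grass"] * 10))

# HYPOTHETICAL sequence: 2 years grass, 4 weak-rooted crops, 2 cereals, 2 brassicas.
hypothetical_history = ["grass", "grass", "pea", "potato", "carrot",
                        "onion", "cereal", "cereal", "brassica", "brassica"]
print(crop_history_score(hypothetical_history))
```

The hypothetical sequence is only one of several combinations that would sum to 22; it is not the recorded history of any field in the study.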

Disease Assessments

For the 15 field experiments, incidence and severity of the predominant potato diseases were assessed at four crop growth stages: i) plant emergence (when 50% of plants were emerged); ii) full canopy, maximum crop cover; iii) late canopy, midway between full canopy and senescence; and iv) crop senescence, 50% or more of the crop is dead, ≅ 20 weeks after planting. Above-ground stems and leaves from four plants per plot were checked in the field for any diseases and then discarded. All underground stems were removed to a laboratory, washed free of soil and then assessed for incidence and severity of diseases. The two main soil-borne diseases, Rhizoctonia stem canker and Spongospora root galling were assessed using the incidence and severity scores described by Sinton et al. (2018).

Yield Assessments

Final tuber harvest in each field experiment was carried out as near to crop senescence as possible, without compromising harvest of the surrounding crop. However, ‘Russet Burbank’ plots grown in ‘Innovator’ surrounding crops were most at risk of having their bulking time shortened by early harvest. The latest possible harvest times were negotiated with the growers. Tuber yield assessments were determined for a 5 m by 2 row middle section of each plot. All of the tubers were hand dug and sorted into size categories, to end-user specifications of ‘marketable’ (65–90 mm and > 90 mm diam.) and ‘unmarketable’ (<65 mm diam.). All tubers in these two categories were weighed, and yields were converted to tonnes per hectare (t ha−1). Gross yield (t ha−1) was the sum of marketable and unmarketable yields.
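The conversion from plot weights to t ha−1 can be sketched as follows. The 5 m by 2 row harvest section is from the text; the harvested area assumes each row occupies the 900 mm row spacing given in the planting description, which is an inference and not a stated method. Function names and the example weights are hypothetical.

```python
# Sketch: convert hand-dug plot weights (kg) to yields in t/ha.
# ASSUMPTION: harvested area = length x number of rows x row spacing (0.9 m).

def yield_t_per_ha(tuber_kg, length_m=5.0, n_rows=2, row_spacing_m=0.9):
    """Convert a plot tuber weight (kg) to tonnes per hectare."""
    area_m2 = length_m * n_rows * row_spacing_m
    # 1 kg per square metre is equivalent to 10 t per hectare.
    return tuber_kg / area_m2 * 10.0


def gross_yield_t_per_ha(marketable_kg, unmarketable_kg):
    """Gross yield is the sum of marketable and unmarketable yields."""
    return yield_t_per_ha(marketable_kg) + yield_t_per_ha(unmarketable_kg)


# Hypothetical plot weights (not data from the study).
print(f"{gross_yield_t_per_ha(marketable_kg=63.0, unmarketable_kg=9.0):.1f} t/ha")
```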

Predicting Potential Yields and the “Yield Gap”

Potential yield for each crop was calculated using the Potato Calculator (Jamieson et al. 2003), hereafter referred to as the “PC”. The PC is a simulation model based on calculating biomass accumulation from intercepted solar radiation (Monteith 1977), and includes functions that calculate the growth and loss of leaf area, the lifetime of the crop, and the effects of water deficits and nitrogen (N) supply (Jamieson et al. 2008). The model has been well-tested and shown to simulate potato crop yields accurately (Jamieson et al. 2003). For the present study, simulations used weather data collected at National Institute for Water and Atmospheric Research (NIWA) official weather stations at Ashburton and Timaru airports. These were between 1 and 15 km from the experiment sites. Simulations were set so that water and N-fertilizer were adequate and used actual (rather than optimal) planting dates to produce time-courses of leaf area, biomass accumulation, soil moisture and soil N status. The simulated yields were compared with observed yields to assess departures of observations from predictions. The “yield gap” was defined as the difference between the observed yield and the simulated yield predicted for production unconstrained by shortages of water or N, or by weeds, pests or diseases.

Statistical Analyses

All statistical analyses were implemented in GenStat (GenStat 17th edition 2017). For the field experiments, data of potato yields, yield gaps and disease incidence and severity were analyzed using a mixed model approach, fitted with REML. The disease incidence data (numbers of diseased stems and Spongospora root galls) were analyzed using a hierarchical generalized linear model approach (HGLM). For both modelling approaches, fixed effects in the model were cultivar, previous potato-growing history of a field, previous annual crop/grass history and formaldehyde seed tuber treatment, along with all treatment interactions. The order of the effects was considered, and the largest P-values for effects are reported. A nested random effect was included for both approaches to account for location and repeated measures.

For the greenhouse experiments, disease severity data were analyzed using a mixed model approach, fitted with REML, and disease incidence data were analyzed using HGLM. For both modelling approaches, fixed effects included in the models were cultivar, seed tuber treatment, and their interaction. The order of the effects was considered, and the largest P-values for effects are reported. A random effect was included to account for any blocking effects. No analysis was performed for incidence of stems with Rhizoctonia stem canker, as all stems were affected by this disease.

For the REML mixed models, interactions and main effects were assessed using ANOVA. Pairwise comparisons were made using least significant difference (LSD) at 5%. For the HGLM models, 95% confidence intervals were calculated at the highest level of interaction, and differences were considered significant where the intervals did not overlap.

Results

Soil Physical Quality

Aggregate stability (Table 3) ranged from 0.49 mm MWD (Field 8, 8 years of crop and 2 years grass) to 2.18 mm MWD (Field 1, 10 years of grass). Nine of the 15 fields had soils with an aggregate stability less than the yield-limiting threshold of 1.5 MWD. These were fields with arable crop-dominant histories (Fig. 2). Grass-dominant histories (more than 7 of 10 years in grass, ‘crop history score’ 34+) resulted in aggregate stabilities that were usually greater than 1.5 MWD.

Fig. 2 Average soil aggregate stabilities (mean weight diameter, MWD) for groups of fields in the four crop history categories examined in this study. The vertical line indicates the yield-limiting aggregate stability benchmark of 1.5 MWD, the circle symbol represents the field in crop history Category 1 (“ex-potato, more grass”), the square symbols represent the six fields in Category 2 (“ex-potato, less grass”), the diamond symbols represent the four fields in Category 3 (“no potato, more grass”), and the cross symbols represent the four fields in Category 4 (“no potato, less grass”)

Fields 1, 2 and 3 had the maximum possible crop history score of 40, as they each had 10-year histories of grass production. The lowest ‘crop history score’ of 22 was for Field 10, with a history of 8 years of annual crops (plus 2 years of grass), four of which were classified as weak rooted crops (pea, potato, carrot and onion). Soil structure condition scores ranged from 3 (Fields 10, 13, 14 and 15, all fields with at least 8 years of annual cropping), to 7 (Fields 2 and 3, both with 10 years of grass production).

Disease Assessments

Greenhouse Experiment

At plant maturity, there was no statistically significant interaction (F-statistic = 0.17; P > 0.687) between cultivar and formaldehyde seed tuber treatment for severity of Rhizoctonia stem canker on the resulting plants. However, stem canker severity was greater (F-statistic = 8.11; P = 0.009) for the ‘Innovator’ plants (mean severity score = 7.2) compared with ‘Russet Burbank’ (mean score = 3.8). The formaldehyde seed tuber treatment did not affect stem canker incidence, but tended to reduce mean stem canker severity score, from 6.5 to 4.6, although this effect was not significant at the 5% level (F-statistic = 3.05; P = 0.097). On average, there was 84% probability of stem canker occurring on the ‘Innovator’ plants and 42% probability of the disease for ‘Russet Burbank’.

Severity of Spongospora root galling was moderate (mean score = 1.6, equivalent to five to ten galls per plant) for the ‘Russet Burbank’ plants, and high for ‘Innovator’ (mean score = 2.8, ten to 20 galls per plant). All the ‘Innovator’ plants had Spongospora root galls (100% incidence), regardless of seed tuber treatment. Incidence of root galling on ‘Russet Burbank’ was 73%.

Field Experiments

The two main diseases observed in the field experiments were Rhizoctonia stem canker and Spongospora root galling. Other pests or diseases were either controlled, not widespread or not observed during the period of potato crop growth when the assessments were carried out.

Rhizoctonia Stem Canker

There were no statistically significant differences in mean Rhizoctonia stem canker incidence or severity (P = 0.37) resulting from the seed tuber treatments for either potato cultivar. Incidence of Rhizoctonia stem canker was greater in crops grown in ex-potato fields (probability of incidence = 92%) compared with potato-free fields (76% probability of incidence). Among fields with no previous potato crops, incidence of this disease was also greater in crops from ex-grass fields (83%) than in crops from ex-annual crop fields (70%). Stem canker severity increased with time in all of the field experiment crops, but was more severe where potato crops had been grown previously (mean score = 6.3) (Table 4), compared with crops that had no previous potato cropping history (mean score = 4.3; F-statistic = 3.69; P = 0.029).

Spongospora Root Galling

Formaldehyde treatment of seed tubers did not affect incidence or severity of Spongospora root galling in plants assessed from the field experiments (P = 0.90). Spongospora root galls were observed in 11 of the 15 field experiments, first appearing at the full canopy growth stage. Averaged for cultivar, there was either no risk (measured at the full canopy growth stage) or a small (5% at late canopy growth stage) risk of Spongospora root galls occurring where the potato crops were grown with a predominantly annual cropping history that excluded previous potato crops (Table 5). This compares with a 48% (full canopy) and a 44% (late canopy) risk for current potato crops grown from predominantly grass histories that excluded previous potato crops. In contrast, where potato crops had been grown within the previous 10 years, there was a 32% (full canopy) and a 61% (late canopy) chance of Spongospora root galls occurring after predominantly annual cropping, but a very high incidence of root galls occurred in the one ex-potato field with a long grass history.

Root gall severity was greater in the ex-potato fields (mean score = 1.8) than for potato-free fields (mean = 0.6; F-statistic = 4.7; P = 0.012). Severity of root galling was also greater from ex-grass fields (mean score = 1.6) than from ex-crop fields (mean = 0.7; F-statistic = 2.48; P = 0.089).

Crop Yields

There were no treatment interactions (annual cropping/grass history, potato growing history, cultivar and formaldehyde seed tuber treatment) for gross yields, marketable yields or any yield components. Since there was no yield response to formaldehyde seed tuber treatment, yields for this treatment were nested and the data re-analyzed.

Gross tuber yields (Table 6) for ‘Innovator’ (overall mean = 84 t ha−1) were greater (F-statistic = 31.94; P < 0.001) than for ‘Russet Burbank’ (76 t ha−1). Similarly, marketable yields for ‘Innovator’ (mean = 81 t ha−1) were greater (F-statistic = 68.81; P < 0.001) than for ‘Russet Burbank’ (67 t ha−1). ‘Innovator’ yielded more large tubers (>90 mm diam.) (56 t ha−1) than ‘Russet Burbank’ (30 t ha−1; F-statistic = 157.37; P < 0.001).

Potatoes (irrespective of cultivar) grown in the ex-grass fields yielded more (mean gross yield = 86 t ha−1) than those grown in ex-crop fields (75 t ha−1; F-statistic = 7.75; P = 0.018). For marketable yields, ex-grass fields yielded more (mean = 79 t ha−1) than those grown in ex-crop fields (69 t ha−1; F-statistic = 6.81; P = 0.024). The ex-grass potato crops also produced greater yields and greater proportions of tubers in the large size class (>90 mm diam.) (50 t ha−1, 58% of gross yield, F-statistic = 7.75; P = 0.018) than those grown in ex-crop fields (37 t ha−1, 49% of gross yield).

‘Innovator’ yield of the small marketable size class (60 to 90 mm diam.) was reduced (F-statistic = 7.01; P = 0.011) by an ex-grass history (21 t ha−1) compared with ex-crop history (28 t ha−1), in response to the increased yield of the >90 mm size class for this cultivar. ‘Russet Burbank’ yield of the small marketable size class (60 to 90 mm) was unaffected by cropping history. ‘Russet Burbank’ yielded more of the unmarketable size class (<60 mm) (11 t ha−1, F-statistic = 6.88; P = 0.012) where previous cropping history included potatoes, compared with no potatoes (7 t ha−1). ‘Innovator’ unmarketable yield was unaffected by potato history (average 3.7 t ha−1).

Potential yields calculated from PC simulations, observed yields and yield gaps were re-analyzed for treatment effects. Potential yields ranged from 83 to 112 t ha−1 for ‘Innovator’ and 80 to 107 t ha−1 for ‘Russet Burbank’. These cultivars have different growth parameters (thermal time to reach different developmental stages) in the simulations. Field 15 had the shortest present season potato crop duration, as the crop was planted late and sprayed off before natural senescence to fit with factory demand. This gave predicted yields of 80 t ha−1 for ‘Russet Burbank’ and 83 t ha−1 for ‘Innovator’. The actual yields for these two crops were 60 t ha−1 for ‘Russet Burbank’ and 70 t ha−1 for ‘Innovator’. Field 2 had the longest present season crop duration, was planted early and grew through until natural senescence, and was given a predicted yield of 106 t ha−1 for ‘Russet Burbank’ and 112 t ha−1 for ‘Innovator’. The actual yields for the two cultivars from this field were, respectively, 96 t ha−1 and 103 t ha−1.
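The yield gaps for the two fields described above can be worked through in a short Python sketch. The potential and observed yields are those quoted in the text for Fields 15 and 2; the sign convention (potential minus observed, so a positive gap means the crop fell short) is inferred from the reported ranges, and the data structure and function name are illustrative only.

```python
# Sketch: yield gap = simulated potential yield - observed yield (t/ha).
# ASSUMPTION: sign convention inferred from the reported gap ranges.

# (field, cultivar) -> (potential t/ha, observed t/ha), values quoted in the text.
FIELD_YIELDS = {
    ("Field 15", "Russet Burbank"): (80, 60),
    ("Field 15", "Innovator"): (83, 70),
    ("Field 2", "Russet Burbank"): (106, 96),
    ("Field 2", "Innovator"): (112, 103),
}


def yield_gap(potential, observed):
    """Return the shortfall of observed yield below simulated potential."""
    return potential - observed


gaps = {key: yield_gap(*values) for key, values in FIELD_YIELDS.items()}
for (field, cultivar), gap in gaps.items():
    print(f"{field}, {cultivar}: gap = {gap} t/ha")
```

On these figures the short-season Field 15 crops fell further below their lower potential (20 and 13 t ha−1) than the long-season Field 2 crops did below their higher potential (10 and 9 t ha−1).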

Although the crop yields were affected by planting and harvest dates, all but one crop failed to reach potential, indicating that factors other than crop duration were influencing yields. The “yield gap” from the individual fields ranged from −2 to 23 t ha−1 for ‘Innovator’, and from 8 to 30 t ha−1 for ‘Russet Burbank’. Re-analysis using these data (Table 7) still indicated that some of this variation may have been attributable to the field history treatments, with a moderately significant interaction (P = 0.082, maximum LSD = 19 t ha−1), but there was uncertainty as yield variability was high.
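The yield gap used here is the simulated potential yield minus the measured yield. As a minimal sketch, the gaps for the two fields described above can be recomputed from the rounded figures quoted in the text. The function and variable names are illustrative and are not part of the Potato Calculator.

```python
# Yield gap = simulated potential yield - measured yield (all in t/ha).
# Values are the rounded figures quoted in the text for Fields 2 and 15;
# the names used here are illustrative only.

def yield_gap(potential, actual):
    """Return the shortfall of measured yield below simulated potential."""
    return potential - actual

# (field, cultivar) -> (potential yield, measured yield)
crops = {
    ("Field 15", "Russet Burbank"): (80, 60),
    ("Field 15", "Innovator"): (83, 70),
    ("Field 2", "Russet Burbank"): (106, 96),
    ("Field 2", "Innovator"): (112, 103),
}

gaps = {key: yield_gap(p, a) for key, (p, a) in crops.items()}

for (field, cultivar), gap in gaps.items():
    print(f"{field}, {cultivar}: yield gap = {gap} t/ha")
```

On these figures the short-season Field 15 crops had gaps of 20 and 13 t ha−1, and the long-season Field 2 crops had gaps of 10 and 9 t ha−1, all within the ranges reported for each cultivar. A negative gap, as for the one crop at −2 t ha−1, means the measured yield exceeded the simulated potential.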

Discussion

The fields used for experiments in this study were initially selected using crop history records. According to in-season measurements of soil physical quality (aggregate stability and soil structural condition scores), these fields adequately fitted the desired soil physical structure categories. There were visually detectable and laboratory-measured differences in soil structural quality between the two categories. Relative aggregate stability levels reflected those found in the study of Beare et al. (2003), which were greatest with a grass dominant history, and at or below the yield-limiting threshold where there had been long-term annual cropping. There was a strong positive correlation between soil structural condition scores and crop history scores (P < 0.001, correlation coefficient r = 0.9331; Fig. 3), showing that either method, or both, could be used to estimate the suitability of fields for obtaining maximum potential potato yields. The R2 value (0.87) indicates the proportion of the variability in soil structural condition scores that could be attributed to crop history.
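For a simple linear relationship between two variables, the coefficient of determination is the square of the correlation coefficient, which is how the two values quoted above relate. A minimal check, using only the reported r and assuming a single-predictor linear fit:

```python
# Square the reported correlation coefficient to obtain R^2.
# This identity holds for a simple linear regression with one predictor,
# which is assumed here.
r = 0.9331
r_squared = r ** 2
print(f"R^2 = {r_squared:.2f}")
```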

Measured potato yields were consistently greater for ‘Innovator’ than ‘Russet Burbank’, which was expected. ‘Innovator’ is described as a determinate cultivar (grows to a pre-determined node number, independent of environmental conditions) that grows and develops more quickly than ‘Russet Burbank’ (indeterminate; node production can respond to changes in resource availability). ‘Russet Burbank’ also has a weak root system that can make it more susceptible to soil-borne disease through its longer growing period. Incidence and severity of the two main soil-borne diseases in the field experiments were high overall, which was expected given that these diseases are widespread throughout Canterbury. The greenhouse experiment showed that the seed tuber lines used were contaminated with viable S. subterranea and R. solani inoculum, and were probable contributors to disease in the field-grown crops, which were already at risk from existing soil-borne pathogens. Disease was also more severe where potatoes had been grown previously, or where fields had been in long-term grass. The initial yield analysis showed that there was an apparent 10 t ha−1 potato yield gain associated with improved soil structure resulting from a long-term grass phase. This agrees with several other findings. Johansen et al. (2015) reviewed the effect of soil compaction on potato yield and found several instances of reduced potato yields, with reductions of up to 37%. The initial analysis in the present study also showed that yield was not affected by previous cropping history that included a potato crop in the previous 10 years. Other biotic and abiotic factors may have had a greater influence on the yields obtained, and the possible extent of these was also investigated.

Potential yields were calculated for the actual planting dates of the crops in the present study. However, it is possible to vary the planting date in the PC simulations. A sensitivity test using the PC showed that, starting with the earliest planting and latest harvest dates practicable for the region, a potential yield of 112 t ha−1 was feasible, and there was an approximate 5 t ha−1 yield loss for each week that planting was delayed. The late planting of the crop in Field 15, combined with a harvest that was 10 days early, resulted in an eventual yield loss of 30 t ha−1, equating to a potential yield of 82 t ha−1 which was reduced but still above the measured yield. Apart from having more time to grow, the crop in Field 2 with the greatest potential had achieved full canopy cover by the longest day, thus making efficient use of available radiation. The late-planted crop, however, only had 56% cover by this date. The simulated rate of yield loss was slightly greater (7 t ha−1 per week) when canopy duration was cut short towards the end of the growing season, which interrupted the tuber bulking phase of crop development when resources are translocated from the canopy to tubers.
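The loss rates from the sensitivity test can be combined into a rough linear estimate of potential yield. The sketch below assumes the two losses are additive and linear in time, which is a simplification and not a description of how the PC simulations work. The function name and the back-calculated planting delay are illustrative and do not come from the study.

```python
# Rough linear approximation of the sensitivity results quoted in the text.
# Assumption: planting and harvest losses are additive and linear in time.

MAX_POTENTIAL = 112.0           # t/ha, earliest planting and latest harvest
PLANTING_LOSS_PER_WEEK = 5.0    # t/ha per week of delayed planting (approximate)
HARVEST_LOSS_PER_WEEK = 7.0     # t/ha per week of canopy duration cut short

def estimated_potential(weeks_planting_delay, days_early_harvest):
    """Estimate potential yield (t/ha) under the additive linear assumption."""
    planting_loss = PLANTING_LOSS_PER_WEEK * weeks_planting_delay
    harvest_loss = HARVEST_LOSS_PER_WEEK * days_early_harvest / 7.0
    return MAX_POTENTIAL - planting_loss - harvest_loss

# Field 15: the text gives a total loss of 30 t/ha and a harvest 10 days early.
# The planting delay is not stated, so it is back-calculated here (an inference).
total_loss = 30.0
harvest_loss = HARVEST_LOSS_PER_WEEK * 10 / 7.0
implied_planting_loss = total_loss - harvest_loss
implied_delay_weeks = implied_planting_loss / PLANTING_LOSS_PER_WEEK

print(f"Loss from early harvest: {harvest_loss:.0f} t/ha")
print(f"Implied loss from late planting: {implied_planting_loss:.0f} t/ha")
print(f"Implied planting delay: {implied_delay_weeks:.0f} weeks")
print(f"Estimated potential: {estimated_potential(implied_delay_weeks, 10):.0f} t/ha")
```

Under these assumptions, the 30 t ha−1 loss for Field 15 would correspond to roughly 10 t ha−1 from the early harvest and 20 t ha−1 from late planting, which implies a planting delay of about four weeks and returns the 82 t ha−1 potential quoted above. The delay itself is not reported in the text, so it should be read as an inference from the stated rates.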

PC simulations were conducted to help identify and quantify any other likely causes of yield variations, and to help explain why some of the monitored crops did not fit treatment-related trends. For example, Fields 1 and 5 both had low crop yields given their predominantly grass histories and correspondingly very good soil physical quality parameters. However, these crops were both grown on shallow soils. When root restrictions were applied in the PC to account for a shallow soil, crop efficiency was reduced, as plants can only access about 10% of the stored water in any one day, and the water storage capacity of these soils was low. This in turn reduced canopy growth and the subsequent maximum green area index and shoot biomass achieved. Additionally, according to the PC, the crop in Field 5 emerged 11 days late and was harvested 8 days before senescence. These restrictions resulted in a predicted yield of 85 t ha−1 for this crop, close to what was measured. In Fields 13 and 14, both ‘Innovator’ and ‘Russet Burbank’ plots were surrounded by an ‘Innovator’ crop. In contrast to the above cases, the ‘Innovator’ plots produced near-potential yields of 88 t ha−1, despite less grass in the cropping histories and low soil physical quality scores. For these fields, the crop manager noted that all inputs were timely and there was no crop damage caused by extreme weather events. Management skills were able to keep the crops growing at the maximum possible rate.

Conclusions

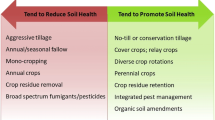

When attempting to isolate the effects of particular yield-influencing factors, other factors will nearly always confound the outcomes. From the evidence collected in this study, however, there is confidence that enhanced soil structure will increase the likelihood of achieving high potato yields, and could do so if no other biotic or abiotic factors cause significant yield reductions. Good soil structure increases root accessibility and water and nutrient storage and availability, and vigorously growing plants are better able to compensate for effects of diseases. Although grass-dominated field histories increased potato disease incidence and severity, yield increases associated with the improved soil physical quality more than compensated for disease effects. On average in this study, improved soil quality increased yields by 10 t ha−1 regardless of disease pressure.

Informed field choice may reduce costs by negating the need for pesticide treatments or other strategies for management of soil-borne pathogens, and also by reducing the need for intensive pre-planting cultivation.

References

Arcila Aristizabal, I.M., E.P. Gonzales, C.M. Zuluaga, and J.M. Cotes. 2013. Alternate hosts of Spongospora subterranea f.sp. subterranea in Colombia by bio-assay. Revista Facultad Nacional de Agronomía Medellín 66: 6987–6998.

Beare MH, Tregurtha CS, Lawrence EJ 2003. Quantifying the effects of management history on soil quality and crop performance using the soil quality management system. Pp 167-174. In: Tools for Nutrient and Pollutant Management: applications to agriculture and the environmental quality. (Eds L.D. Currie and J.A. Hanly) Occasional Report No. 17. Fertilizer & Lime Research Centre, Massey University, Palmerston North.

Brierley, J., S. Wale, J. Woodhall, A. Lees, J. Peters, D. Kiezebrink, L. Sparrow, and K. Ophelkeller. 2013. Informing Management of Potato Diseases through epidemiology and diagnostics. Rhizoctonia solani Reviews. http://www.potato.org.uk/sites/default/files/publication_upload/R422%20Final%20Report.pdf. Accessed 1 March 2019.

Falloon, R. 2008. Control of powdery scab of potato: Towards integrated disease management. American Journal of Potato Research 85: 253–260. https://doi.org/10.1007/s12230-008-9022-6.

Falloon, R.E., A.R. Wallace, M. Braithwaite, R.A. Genet, H.M. Nott, J.D. Fletcher, and W.F. Braam. 1996. Assessment of seed tuber, in-furrow and foliar chemical treatments for control of powdery scab (Spongospora subterranea f. sp. subterranea) of potato. New Zealand Journal of Crop and Horticultural Science 24: 341–353.

Falloon, R.E., U. Merz, R.C. Butler, D. Curtin, R.A. Lister, and S.M. Thomas. 2016. Root infection of potato by Spongospora subterranea: Knowledge review and evidence for decreased plant productivity. Plant Pathology 65: 422–434.

GenStat 17th edition. VSN International (2017). GenStat for Windows 17th Edition. VSN International, Hemel Hempstead, UK. Web page: GenStat.co.uk.

Harrison, J.G., R.J. Searle, and N.A. Williams. 1997. Powdery scab disease of potato – A review. Plant Pathology 46: 1–25.

Hernandez-Ramirez, G., E.J. Lawrence-Smith, S.M. Sinton, F. Tabley, A. Schwen, M.H. Beare, and H.E. Brown. 2014. Root responses to alterations in macroporosity and penetrability in a silt loam soil. Soil Science Society of America Journal 78: 1392–1403.

Jamieson, P.D., P.J. Stone, R.F. Zyskowski, and S. Sinton. 2003. Implementation and testing of the potato calculator: A decision support system for nitrogen and irrigation management. In Decision support systems in potato production: Bringing models to practice, ed. A.J. Haverkort and D.K.L. Mackerron, 85–99. Wageningen: Academic Publishers.

Jamieson, P.D., R.F. Zyskowski, F.Y. Li, and M.A. Semenov. 2008. Water and nitrogen uptake and responses in models of wheat, potatoes and maize. In Quantifying and understanding plant nitrogen uptake for systems modelling, ed. L.L. Ma, L.R. Ahuja, and T.W. Bruulsema, 127–146. Abingdon: CRC Press.

Johansen, T.J., M.G. Thomsen, A. Løes, and H. Riley. 2015. Root development in potato and carrot crops – Influences of soil compaction. Acta Agriculturae Scandinavica Section B Soil and Plant Science 65: 182–192. https://doi.org/10.1080/09064710.2014.977942.

Kemper, W.D., and R.C. Rosenau. 1986. Aggregate stability and size distribution. In Methods of soil analysis, part 1. Physical and mineralogical methods, ed. A. Klute, 2nd ed., 425–442. Madison: Soil Science Society of America.

Michel A, H. Brown, S. Sinton, E. Meenken, S. Dellow, S Pethybridge, B. Searle, J. Reid 2013. Potato yield gap investigation 2012–13. Part B: Effect of nutrient supply on yield. Report for: Potatoes New Zealand, McCain growers group and Ravensdown Fertiliser co-operative limited. Alexandre Michel - Part B - Effect of Nutrient Supply on Yield, https://potatoesnz.co.nz/library/?mdocs-cat=mdocs-cat-52&mdocs-att=null#. Accessed 20 August 2019.

Monteith, J.L. 1977. Climate and the efficiency of crop production in Britain. Philosophical Transactions. Royal Society of London B281: 277–294.

Qu, X., and B.J. Christ. 2006. The host range of Spongospora subterranea f. sp. subterranea in the United States. American Journal of Potato Research 83: 343.

Reid, J.B., B.P. Searle, and S. Sinton. 2011. Does my potato crop need fertiliser? A modelling approach. Agronomy New Zealand 41: 79–95.

Reid, J.B., B. Searle, S. Sinton, A. Michel, E. Meenken, H. Brown, A. Roberts, and M. Manning. 2016. Fertiliser practice and yield losses in process potato crops grown in Canterbury, New Zealand. New Zealand Journal of Crop and Horticultural Science 44: 1–17.

Sinton S, Falloon R, Brown H, Tregurtha C, Michel A, Dellow S, Reid J, Shah F, Pethybridge S, Searle, 2013. Potato yield gap investigation 2012–13. Part A: Factors limiting yield. Report for: Potatoes New Zealand, McCain Growers Group and Ravensdown Fertiliser Co-operative Limited. 0136 (8706) Potato Yield Gap Investigation 2012–13; Part A Factors Limiting Yield (1), https://potatoesnz.co.nz/library/?mdocs-cat=mdocs-cat-52&mdocs-att=null#. Accessed 20 August 2019.

Sinton S, Dellow S, Shah F, Richards K, Michel A, Linton J, 2018. P15–01: Increasing potato yield through understanding the impacts of crop rotations and soil compaction – Year 3. https://potatoesnz.co.nz/library/?mdocs-cat=mdocs-cat-52&mdocs-att=null#. Accessed 20 August 2019.

Statistics New Zealand, 2016. Agricultural and Horticultural Land Use. http://archive.stats.govt.nz/browse_for_stats/environment/environmental-reporting-series/environmental-indicators/Home/Land/land-use.aspx. Accessed 20 August 2019.

Tsror, L. 2010. Review: Biology, epidemiology and management of Rhizoctonia solani on potato. Journal of Phytopathology 158: 649–658.

Webb, T.H., J.J. Claydon, and S.R. Harris. 2000. Quantifying variability of soil physical properties within soil series to address modern land-use issues on the Canterbury Plains, New Zealand. Australian Journal of Soil Research 38: 1115–1129.

Wright, P.J., R.E. Falloon, and D. Hedderley. 2017. A long-term vegetable crop rotation study to determine effects on soil microbial communities and soilborne diseases of potato and onion. New Zealand Journal of Crop and Horticultural Science 45: 29–54.

Acknowledgements

This research was carried out by The New Zealand Institute for Plant and Food Research Limited, on behalf of the Foundation for Arable Research, the Sustainable Farming Fund (Ministry for Primary Industries) and Potatoes New Zealand. The participating growers are gratefully acknowledged for providing land, assisting with planting, field experiment maintenance and harvest. The New Zealand potato industry provided seed potatoes, access to seed tuber treatment equipment, and facilities for transport of harvested potatoes.

Ethics declarations

Disclaimer

The New Zealand Institute for Plant & Food Research Limited does not give any prediction, warranty or assurance in relation to the accuracy of or fitness for any particular use or application of, any information or scientific or other result contained in this paper. Neither Plant & Food Research nor any of its employees shall be liable for any cost (including legal costs), claim, liability, loss, damage, injury or the like, which may be suffered or incurred as a direct or indirect result of the reliance by any person on any information contained in this paper.

Cite this article

Sinton, S.M., Dellow, S.J., Jamieson, P.D. et al. Cropping History Affects Potato Yields in Canterbury, New Zealand. Am. J. Potato Res. 97, 202–213 (2020). https://doi.org/10.1007/s12230-020-09767-3

DOI: https://doi.org/10.1007/s12230-020-09767-3