Abstract

The concept of oscillator problems finds its indispensable presence in numerous dynamical systems. Machine learning techniques for handling dynamical systems is a challenging and rapidly expanding field of research. In this regard, a machine learning approach, namely Symplectic Artificial Neural Network model, has been used for handling the non-linear systems arising in dusty plasma models. The primary objective of this article is to investigate, the dynamics of Van der Pol-Mathieu-Duffing Oscillator problems for different excitation functions using the meshfree Symplectic Artificial Neural Network algorithm. The numerical simulations and graphical representations are carried out to establish the accuracy of the presented algorithm. Also, the obtained simulation results are compared with the existing numerical solutions. In addition, the statistical assessment studies at various testing points confirm an excellent agreement between the present simulation results and the existing results.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

In the present age, the machine learning techniques for handling multimodal dynamic systems have achieved a flurry of research and experienced rapid evolution. Dynamic analysis of multimodal systems is very crucial in engineering structures [1]. Most scientific problems are inherently non-linear in nature, so sometimes, traditional numerical methods fail to find the parametric solution of differential equations. Also, the existing traditional methods may sometimes be problem-dependent and require repetitions of the simulations. In contrast, a neural network-based approach provides the alternate and closed solution for said differential equations (DE), and the prediction using machine learning looks like a black box.

In 1943, McCulloch and Pitts come up with an idea of the first elementary model of the neural network [2]. However, it gets popular among mathematicians in the 1990’s after Lee and Kang [3] developed Hopfield neural network to solve first-order DE. DEs have been extensively investigated by researchers in the past few years [4,5,6,7,8]. Jiang et al. did a good amount of work to model the behaviour of dynamical systems [9] using DEs with respect to time series functions. The micro-level of modeling for DEs using machine learning algorithms has shown potential growth [10]. Deep learning solutions to stochastic DEs are frequently unstable and stuck in local optima [11], particularly for higher-order DE. Sirignano and Spiliopoulos [12] gave a meshfree algorithm of deep neural networks as PDE approximators, which converges as the number of hidden layers tends to infinity. Meade and Fernandez introduced B1 splines as a basis function in a feed-forward neural network (FFNN)2 [13]. This algorithm was employed to solve the linear and non-linear ODE numerically. Mall and Chakraverty [14] developed a single-layer Chebyshev orthogonal polynomial link functional neural model with regression-based weights to handle different non-linear DEs. In the last few decades, many optimization algorithms have emerged in both the research and application sectors. Verma and Kumar designed a multilayer network for solving dynamic models of mathematical physics using BFGS optimization algorithm [15]. Rizk‑Allah and Hassanien [16] developed a neural model using hybrid Harris hawks–Nelder–Mead optimization (HHO-NM) algorithm for solving spring-mass system problems.

In a pioneering work, Mosta and Sibanda [17] proposed a linearization technique for solving Van der Pol bimodal equation. The active control approach to solve the double-well Duffing–Van der Pol oscillator has been developed by Njah and Vincent [18]. Ibsen et al. [19] employed the homotopy analysis approach for solving the double-well and double hump Van der Pol–Duffing oscillator equations. Nourazar and Mirzabeigy [20] used modified transformation techniques to obtain an approximate solution of Van der Pol equations. Stability analysis of the Van der Pol equation was explored by Hu and Chung [21]. An algebraic method was presented by Akbari et al. [22] to find the solution of the Duffing equation. Chaotic behaviours of Van der Pol-Mathieu-Duffing Oscillator (VdPM-DO) system under different forms of excitations were presented by Kimiaeifar [23] excellently. For solving these celebrated non-linear oscillator equations, few other techniques have been developed [24,25,26,27] by the research community in recent years. Whatever numerical techniques are employed, they need stringent step size requirements and repeat of iterations for numerical precision, which will involve a significant amount of computing cost.

So to overcome these limitations, in the ongoing years, ANN models have also been simultaneously developed by researchers to approximate the solutions of dynamical problems. To predict neural solutions of boundary and initial value problems, different ODE and PDE solvers have been developed [28, 29]. Mall and Chakraverty designed a single-layer Hermite link ANN model to handle the Van der Pol-Duffing Oscillator (VdP-DO) equations [7]. Raja et al. [30] studied the power of intelligent computing to compute the solution to Mathieu’s systems. For detecting the weak signals in a strongly coupled Duffing-Van der Pol oscillator, Wang et al. used a long short-term memory (LSTM) neural network [31]. Yin et al. introduced a combination of fractional FLANN filters for solving VdP-DOE [32]. In recent research, Bukhari et al. introduced a non-linear autoregressive radial basic function (NAR-RBFs) neural network filters for solving the Van der Pol-Mathieu-Duffing Oscillator Equations (VdPM-DO) [33].

Motivated by the above considerations, it is natural to propose new and efficient ANN algorithms to understand the dynamical behaviour of linear/non-linear oscillator systems. In this regard, an unsupervised multi-layer neural model viz. Symplectic Artificial Neural Network (SANN) has been developed for solving the VdPM-DO equations for different excitation functions. The SANN is a novel model which guarantees that the network conserves energy when solving the DE [34]. It is more numerically precise and robust method to solve dynamical equations than standard NN and numerical methods. The main contribution of this paper is discussing the Symplectic Artificial Neural Network for solving the Van der Pol-Mathieu-Duffing equations for different values of the excitation function.

The remainder of this paper is organized as follows: Sect. 2 is devoted to the preparation of a multi-layer neural network and also describes the dynamics of VdPM-DO equations. The main result of this paper consists of the SANN algorithm, which is provided in Sect. 3. Section 4 presents several examples to illustrate the performance of our model. Lastly, the concluding remarks have been provided in Sect. 5.

2 Preliminaries

In this section, some preliminaries related to the architecture of multilayer Feed-forward Neural Network, governing equation and significance of Van der Pol-Mathieu-Duffing oscillator equations have been discussed.

2.1 Feed-forward neural networks: an overview

ANN is an exciting form of Artificial Intelligence (AI) that mimics the human brain’s training process to predict patterns from the given historical data. Neural networks are processing devices of mathematical algorithms that may be built by using computer languages [35].

Various learning procedures and parameters are required for modeling a neural network [36,37,38]. The neural network is made up of layers, and layers are made up of several neurons /nodes. Every ith and (i + 1)th layer neuron is interconnected by some signals known as synaptic weights. The weights are numerical values allocated to each link. Signals are received by the input layer, which is multiplied by the weights, then summed and sent to one or more hidden layers. The output layer receives linkages from hidden layers. Each node receives its net input and uses an activation function to process it. The input/output relation is given by

where \(\nabla (x)\) is the swish activation function, \(x_{i}\) denotes the given input, \(w_{qi}\) is weight from the input unit \(i\,\) to the hidden unit \(q\),\(\,\tilde{b}_{q}\) is bias.

ANN analyses data in the same way as the human brain does. It processes the training data and updates the weights to improve the accuracy of neural forecasted value. Epoch refers to a complete cycle of passing the training algorithm and updating the weights. The error is minimized by using the required epochs. The accuracy of a neural network is improved through hyperparameter tuning.

2.2 Van der Pol-Mathieu-Duffing oscillator equations

The governing equation of Van der Pol-Mathieu-Duffing oscillator equations can be written as [23]

It is noted that \(\mu ,\alpha ,\) and \(\sigma\) in the above equation are defined as constant values. Other notations represent different physical properties of the titled equation. This non-linear equation has been extensively used in various scientific problems like non-linear dusty plasma system [33], plasma physics models [30], inverted pendulum [39], radio frequency quadrupole [40], early mechanical failure signal [41], diffraction [42], weak signal detection [43], etc. This system may also be used to study the dynamical behaviour of limit cycles [44].

3 Neural formulation for differential equations

An autonomous system of nth-order ODE can be written as

where \(\upsilon \left( \xi \right)\) and \(\psi\) are the determined solution and discretized domain respectively.

Let \(\upsilon_{\iota } \left( {\xi ,\delta } \right)\) indicates the ANN approximate solution with adjustable parameters \(\delta\) (weights and biases). Then Eq. (3.1) can be rewritten as

The corresponding cost function of ANN can be obtained by converting Eq. (3.2) to an unconstrained optimization problem. It can be written as

The ANN approximate solution \(\upsilon_{\iota } \left( {\xi_{i} ,\delta } \right)\) can be written as the sum of two terms

where the first part of the approximate solution i.e. \(\alpha\) is associated with the initial or boundary conditions, without the adjustable parameters. The second part of the approximate solution \(NN(\xi ,\delta )\) is an output of the FFNN that consist \(M\) hidden layers, with \(k\) neurons in each hidden layer, and a linear output node for a given input \(\xi \, \in R^{n}\). Weights and biases of \(NN(\xi ,\delta )\) are adjusted to deal with the minimization of the cost function. The FFNN outcomes \(NN(\xi ,\delta )\) can be written as:

such that,

where \(\ell_{ji}\) and \(v_{j}\) represent the synaptic weights from the input unit \(i\,\) to the hidden unit \(j\) and the hidden unit \(j\) to the output unit respectively, and \(k\) represents the number of neurons.

3.1 Symplectic neural network algorithm

Symplectic neural network is a type of artificial neural network that is inspired by the mathematical structure of symplectic geometry used to describe the dynamics of physical problems. SANNs are more efficient than traditional neural networks because they use fewer parameters and require less training data, which makes them well-suited for problems in physics and other fields where the dynamics of systems are considered. In contrast, standard neural networks [4] do not have this constraint and can be applied for tasks such as prediction and classification. Practically, SANN is constructed in such a way that it conserves energy [34].

Let us consider the first-order ODE as

Consequently, the neural approximate solution can be written as

where \(NN(\xi ,\delta )\) is the output of the \(M^{{{\text{th}}}}\) hidden layer with one input data \(\xi\), and parameters \(\delta\). The ANN approximate solution \(\upsilon_{\iota } \left( {\xi_{i} ,\delta } \right)\) satisfies the initial conditions of the given DE. In order to find the loss function we may need to calculate gradient of the network \(NN(\xi ,\delta )\). It can be computed as follows:

Differentiating Eq. (3.8) we have

Thus the loss function for Eq. (3.7) is calculated as

Now, consider the second-order ODE as

Here, the neural approximate solution is written as

Weights associated with this multi-layer perceptron are updated by the back-propagation learning algorithm through optimizing the following loss function

During the training of the neural network the epochs will continue until the error is minimized. In each epoch, the Adam optimizer will update the parameters of the network without affecting the learning rates in order to find the optimum weights. Graphical abstract of SANN model for solving VdPM-DO equation is delineated in Fig. 1.

4 Robustness analysis in diverse scenarios and discussions

In this section, we have encountered the titled problem with different excitation functions for three cases and the robustness of the model has been discussed in detail.

4.1 Experimental settings

To show the efficacy of the SANN model, VdPM-DO equations are solved for two separate excitation functions under varied initial conditions. The accuracy of the suggested algorithm is demonstrated in the tables and graphs. Neural network training techniques are often iterative; therefore, the user must designate a starting point for iterations. Furthermore, training neural models is a challenging enough process and the initial guess has a significant impact on most approaches. The initial guess can decide whether or not the method converges at all, with certain initial points being so unstable that the algorithm encounters numerical difficulties during the training and fails altogether. One of the most prominent strategies for the parameter initialization is known as “break the symmetry” [45, 46]. So, the user may start with arbitrary, small and distinct real numbers as the initial weights of the network. As such, arbitrary real numbers in \(\left[ { - 1,1} \right]\backslash \left\{ 0 \right\}\) are considered as initial weights in this experiment.

In the next step, a training method is employed to tune the adjustable parameters of SANN, which is embedded in the approximate solution. The SANN is trained in order to predict the solutions of VdPM-DO equations for any point inside the given domain by unsupervised training, where the adjustable parameters are updated to minimize the cost function. We have taken Adam optimization with learning rate of 0.001 as it converges faster and is relatively stable. We have trained the network for 10,000 epochs for each problem solved here.

On the other hand, we observed that when the model converges, then weights also converge to a minimum in the vicinity of the initial set of weights. But, when the network is diverging, the updated weights move away from the initial weight configuration. More training will not be able to reduce the loss once it reaches this degree of divergence. In addition, Eq. (2.3) is taken as activation function [47] during the training of the model. However, after selecting the basic framework [29], the optimal number of hidden layers and the number of nodes in each hidden layer are selected after several experiments with a different number of hidden layers and nodes.

4.2 Simulation results

This section obtains solutions of VdPM-DO equation with different excitation functions through the SANN model. Following experiments are conducted in Python 3.0 under the Jupyter notebook environment. Moreover, to evaluate the performance of the model, different statistical measures such as MAE, MSE, RMSE and TIC have been calculated with respect to existing HAM [23] and RK4 [23] solutions as follows:

Here \(X(t)\) and \(\hat{X}\left( t \right)\) are numerical solutions (HAM/RK4) and obtained SANN solutions respectively.

Case-1 (For excitation function \(\zeta (t)\, = 1\)).

In Eq. (2.4), by setting the parametric excitation function \(\zeta (t)\, = 1,\,\overline{a} = 1,\overline{b} = 0\) and rewriting the equation, we have [23]

As discussed above, the neural approximate solution can be computed as follows

To solve this non-linear system, we construct a multi-layer network with a single input, single output, and three hidden layers, with \(k = 50\) nodes in each hidden layer. We have trained the network for 150 equidistant points from 0 to 3 s, which are randomly selected at the beginning of each epoch [44].

We have taken the values of parameters from the literature [23] as shown in nomenclature as \(\alpha = 0.1,\beta = 0.1,\varepsilon = 0.1,\mu = 0.5,\vartheta = 0.1,\varpi = 1,\sigma = 1,\Omega = 1\). Table 1 shows the comparison among existing RK4 [23], HAM [23], ANN solutions and the obtained SANN solutions. From the tabular values we found that MSE of SANN is 0.0003393.

The time series plot of SANN solutions are delineated in Fig. 2. Figure 3 demonstrates the curves of training and validation loss over 10,000 epochs for the given model. The accuracy rate of SANN is visualized from the graph of training loss and the validation loss against epochs. It may be perceived that the loss function is minimized as the training period increases. The absolute error between SANN and HAM as well as SANN and RK4 results are compared graphically in Fig. 4. It is worth mentioning that the CPU time for training and computation is 521.794780254364 s.

Case-2 (For excitation function \(\zeta (t)\, = 1\)).

Here we have taken the same equation as case-1 with different initial conditions \(\overline{a} = 0,\overline{b} = 1\)

In this case, the values of parameters \(\alpha = 0.1,\,\beta = 0.1,\,\varepsilon = 0.1,\mu = 0.5,\vartheta = 0.1,\,\varpi = 1,\sigma = 1,\,\Omega = 1\) are being fixed, and accordingly, we get the neural approximate solution as follows:

Here we have designed a multi-layer network having a single input and output node, and each network consists of three hidden layers with \(k = 50\) neurons per layer. Then the network has been trained for 150 equispaced points for \(t\, \in \left[ {0,3} \right]\,.\)

The training and validation loss against the epoch for the neural network is delineated in Fig. 5, and the time series of neural solutions are plotted in Fig. 6. Robustness of the model increases along with the decrease of the value of the loss function. Tabular results for each of the above cases at different testing points are demonstrated in Table 2. Furthermore, the error made using the current approach is calculated between the obtained SANN results and RK4 results. It may be observed from the box plot of absolute errors (Fig. 7) that AE ranges in 1E − 05 to 5E − 02. The time taken by CPU for training of network and computation is 524.65269826 s.

Case-3 (For excitation function \(\zeta (t)\, = t\)).

We conclude this simulation section by considering the following non-linear VdPM-DO equation with a different parametric excitation function, and the equation is of the form [23]:

We have fixed the values of parameters as the literature [23] \(\alpha = 0.2,\beta = 0.5,\varepsilon = 0.1,\mu = 0.5,\) \(\vartheta = 0.1,\,\varpi = 0.1,\sigma = 0.1,\Omega = 0.5\). Then the neural approximate solution Eq. (3.11) reduces to the following

A multi-layer network with a single input, single output, and three hidden layers, \(N = 50\) nodes in each hidden layer, has been designed to illustrate the effectiveness of the proposed method. We have trained the network for 150 equidistant points from 0 to 5 s.

A comparison among the results obtained by RK4 [23], HAM [23], ANN and SANN algorithms are given in Table 3. The loss function of training and validation is contemplated in Fig. 8. The time series of SANN results are portrayed in Fig. 9, and absolute errors between SANN and HAM as well as SANN and RK4 are compared graphically in Fig. 10. It may be noted that MSE of SANN and traditional ANN are found 0.0072385 and 0.0081525 respectively. The CPU time for training and computation is 557.0586869716644 s.

4.3 Discussion and analysis

In the proposed methodology, the independent variables of DE are used as NN input. A feed-forward pass on the network gives us the value of the dependent variables evaluated at that particular point. Since NN is differentiable, we can compute the derivatives of the dependent variable (outputs) w.r.t. the independent variable (inputs) in order to find different derivatives that appear in the original DE. An unsupervised training method has been considered for solving the DE without knowing any solutions in advance. In this regard, an error function has been derived from the given DE, the approximate function and their derivatives. It may be noted that NN output is indeed a solution of DE when the loss function tends to zero.

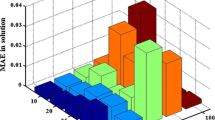

Different statistical measures such as ‘MAE’, ‘TIC’ and ‘RMSE’ are conducted for the titled problem and outcomes are graphically portrayed in the Fig. 11. The accuracy of SANN is witnessed through the comparative assessment of the SANN and existing numerical solutions. One may decipher that the mode of AE values lie around 1E − 04, 1E − 02 and 1E − 03 for Case-1, Case-2 and Case-3 respectively. Moreover, MSE values of Case-1, Case-2 and Case-3 lie in the close vicinity of 3E − 04, 7E − 04 and 7E − 03 that indicate the correctness, precision and efficacy of the SANN model.

It is well known that after training the neural model, it may be utilized as a black box to obtain numerical results of any arbitrary points in the given domain. In this experiment, we have considered three hidden layers with 50 neurons in each hidden layer for modeling of the network. One may consider more number hidden layers to construct a network, but it has been observed that by increasing the number of hidden layers and training a network for a long time, it loses its capacity to generalize. The error calculations, displayed graphs, and CPU processing time have been established to produce a complete robustness study for the SANN model. Based on these results, it is clear that the present unsupervised model is well validated.

5 Conclusion

In recent decades, the VdPM-DO equation has garnered a lot of attention from academics and scientists because of its various applications in mathematical physics and engineering applications. This investigation manifests that the presented SANN algorithm for solving the titled DE is promising. The accuracy of our model is demonstrated by the remarkable agreement of findings between SANN and existing numerical techniques HAM and RK4. As the graph of training loss and validation loss are converging, so it can be concluded that the obtained model is a robust model. Finally, it is worth mentioning that SANN is a reliable, computationally efficient and generic model. Also it can be considered as a powerful tool for the computation of non-linear oscillator problems.

The algorithm addressed in this article can be useful for solving different real-life problems and engineering applications such as modeling for the boundary of corneal model for eye surgery [48], astrophysical events [49], Stuxnet virus propagation [50], nervous stomach TFM model [51] and Bagley–Torvik fractional-order systems [52]. It also can be extended to functional link SANN for the alternate computing paradigm for solving dynamic models.

Abbreviations

- \(\tilde{b}\) :

-

Bias

- \(v,\ell\) :

-

Weight

- \(\nabla\) :

-

Activation function

- \(\zeta (t)\,\) :

-

Excitation function

- \(\vartheta\) :

-

Intensity of periodic coefficient

- \(\Omega\) :

-

Frequency of periodic coefficient

- \(\beta\) :

-

Coefficient of cubic nonlinearity term

- \(\gamma \left( t \right)\) :

-

Displacement from equilibrium position

- PDE:

-

Partial differential eq.

- MSE:

-

Mean squared error

- MAE:

-

Mean absolute error

- ODE:

-

Ordinary differential eq.

- ANN:

-

Artificial neural network

- RMSE:

-

Root mean squared error

- FFNN:

-

Feed-forward neural network

- HAM:

-

Homotopy analysis method

- SANN:

-

Symplectic artificial neural network

- VdP-DO:

-

Van der Pol-Duffing oscillator

- VdPM-DO:

-

Van der Pol-Mathieu-Duffing oscillator

References

Chakraverty S (2020) Mathematical methods in interdisciplinary sciences. Wiley, New York

Mcculloch WS, Pitts W (1943) A logical calculus of the ideas immanent in nervous activity. Bull Math Biophys 5(4):115–133

Lee H, Kang IS (1990) Neural algorithm for solving differential equations. J Comput Phys 91(1):110–131. https://doi.org/10.1016/0021-9991(90)90007-N

Lagaris IE, Likas AC, Papageorgiou DG (2000) Neural-network methods for boundary value problems with irregular boundaries. In: IEEE transactions on Neural Networks, vol 11, no. 5, pp. 1041–1049. https://doi.org/10.1109/72.870037

Mall S, Chakraverty S (2013) Comparison of artificial neural network architecture in solving ordinary differential equations. Adv Artif Neural Syst 2013:1–12. https://doi.org/10.1155/2013/181895

Mall S, Chakraverty S (2013) Regression-based neural network training for the solution of ordinary differential equations. Int J Math Modell Numer Optim 4(2):136–149

Chakraverty S, Mall S (2017) Artificial neural networks for engineers and scientists. Taylor and Francis, CRC Press, Boca Raton

Panghal S, Kumar M (2021) Optimization free neural network approach for solving ordinary and partial differential equations. Eng Comput 37(4):2989–3002. https://doi.org/10.1007/s00366-020-00985-1

Lu XJ, He PZ, Xu J (2019) Error compensation-based time-space separation modeling method for complex distributed parameter processes. J Process Control 80:117–126. https://doi.org/10.1016/J.JPROCONT.2019.05.001

Niska H, Hiltunen T, Karppinen A, Ruuskanen J, Kolehmainen M (2004) Evolving the neural network model for forecasting air pollution time series. Eng Appl Artif Intell 17(2):159–167. https://doi.org/10.1016/J.ENGAPPAI.2004.02.002

Salehizadeh SMA, Yadmellat P, Menhaj MB (2009) Local optima avoidable particle swarm optimization. In: 2009 IEEE Swarm Intelligence Symposium, SIS 2009—Proceedings, 2009, pp. 16–21. https://doi.org/10.1109/SIS.2009.4937839.

Sirignano J, Spiliopoulos K (2018) DGM: a deep learning algorithm for solving partial differential equations. J Comput Phys 375:1339–1364. https://doi.org/10.1016/J.JCP.2018.08.029

Meade AJ Jr, Fernandez AA (1994) Solution of nonlinear ordinary differential equations by feed forward neural networks. Math Comput Model 20:19–44

Chakraverty S, Mall S (2020) Single layer Chebyshev neural network model with regression-based weights for solving nonlinear ordinary differential equations. Evol Intel 13(4):687–694. https://doi.org/10.1007/s12065-020-00383-y

Verma A, Kumar M (2020) Numerical solution of third-order Emden–Fowler type equations using artificial neural network technique. Euro Phys J Plus 135(9). https://doi.org/10.1140/epjp/s13360-020-00780-3.

Rizk-Allah RM, Hassanien AE (2022) A hybrid Harris hawks-Nelder-Mead optimization for practical nonlinear ordinary differential equations. Evol Intel 15(1):141–165. https://doi.org/10.1007/s12065-020-00497-3

Motsa SS, Sibanda P (2012) A note on the solutions of the van der pol and duffing equations using a linearisation method. Math Problems Eng. https://doi.org/10.1155/2012/693453.

Njah AN, Vincent UE (2008) Chaos synchronization between single and double wells Duffing-Van der Pol oscillators using active control. Chaos Solitons Fractals 37(5):1356–1361. https://doi.org/10.1016/j.chaos.2006.10.038

Ibsen LB, Barari A, Kimiaeifar A (2010) Analysis of highly nonlinear oscillation systems using He’s max-min method and comparison with homotopy analysis and energy balance methods. Sadhana 35:433–448. https://doi.org/10.1007/s12046-010-0024-y

Nourazar S, Mirzabeigy A (2013) Approximate solution for nonlinear Duffing oscillator with damping effect using the modified differential transform method. Scientia Iranica 20(2):364–368. https://doi.org/10.1016/j.scient.2013.02.023

Hu K, Chung K (2013) On the stability analysis of a pair of van der Pol oscillators with delayed self-connection, position and velocity couplings. AIP Adv 3(1):112118. https://doi.org/10.1063/1.4834115.

Akbari M (2018) Nonlinear dynamical structures on coupled Duffing-Van der Pol Oscillators with two degrees of freedom by new approach AGM. Res Dev Mater Sci 7(4). https://doi.org/10.31031/RDMS.2018.07.000670.

Kimiaeifar A (2010) An analytical approach to investigate the response and stability of Van der Pol-Mathieu-Duffing oscillators under different excitation functions. Math Methods Appl Sci 33(13):1571–1577. https://doi.org/10.1002/mma.1269

Kalas J, Kadeřábek Z (2014) Periodic solutions of a generalized Van der Pol-Mathieu differential equation. Appl Math Comput 234:192–202. https://doi.org/10.1016/J.AMC.2014.01.161

Li X, Hou J, Chen J (2016) An analytical method for Mathieu oscillator based on method of variation of parameter. Commun Nonlinear Sci Numer Simul 37:326–353. https://doi.org/10.1016/J.CNSNS.2016.02.003

Fan Q, Leung AYT, Lee YY (Feb.2016) Periodic and quasi-periodic responses of Van der Pol-Mathieu system subject to various excitations. Int J Nonlinear Sci Numer Simulat 17(1):29–40. https://doi.org/10.1515/ijnsns-2015-0012

Jadoon I, Raja MAZ, Junaid M, Ahmed A, ur Rehman A, Shoaib M (2021) Design of evolutionary optimized finite difference based numerical computing for dust density model of nonlinear Van-der Pol Mathieu’s oscillatory systems. Math Comp Simulation 181:444–470. https://doi.org/10.1016/j.matcom.2020.10.004.

Lu L, Meng X, Mao Z, Karniadakis GE (2021) DeepXDE: Aa deep learning library for solving differential equations. SIAM Rev 63(1):208–228. https://doi.org/10.1137/19M1274067

Chen F et al (2020) NeuroDiffEq: A Python package for solving differential equations with neural networks. J Open Source Softw 5(46):1931. https://doi.org/10.21105/joss.01931.

Raja MAZ, Manzar MA, Shah FH, Shah FH (2018) Intelligent computing for Mathieu’s systems for parameter excitation, vertically driven pendulum and dusty plasma models. Appl Soft Comput J 62:359–372

Wang K, Yan X, Yang Q, Hao X, Wang J (2020) Weak signal detection based on strongly coupled Duffing-Van der Pol oscillator and long short-term memory. J Phys Soc Japan 89(1):014003. https://doi.org/10.7566/JPSJ.89.014003.

Yin KL, Pu YF, Lu L (2020) Combination of fractional FLANN filters for solving the Van der Pol-Duffing oscillator. Neurocomputing 399:183–192. https://doi.org/10.1016/j.neucom.2020.02.022

Bukhari AH, Sulaiman M, Raja MAZ, Islam S, Shoaib M, Kumam P (2020) Design of a hybrid NAR-RBFs neural network for nonlinear dusty plasma system. Alex Eng J 59(5):3325–3345

Mattheakis M, Protopapas P, Sondak D, di Giovanni M, Kaxiras E (2019) Physical symmetries embedded in neural networks [Online]. Available: http://arxiv.org/abs/1904.08991

Sahoo AK, Chakraverty S (2022) Curriculum learning-based artificial neural network model for solving differential equations. In: Chakraverty S (ed) Studies in Computational Intelligence, 1st edn, vol. 988. Springer, Singapore. https://doi.org/10.1007/978-981-16-4713-0_6.

Zurada JM (1992) Introduction to Artificial Neural Systems. West Publishing Co., USA

Chakraverty S, Jeswal SK (2021) Applied artificial neural network methods for engineers and scientists. World Scientific, Singapore. https://doi.org/10.1142/12097.

Chakraverty S, Sahoo DM, Mahato NR (2019) Concepts of soft computing. Springer, Singapore. https://doi.org/10.1007/978-981-13-7430-2.

Pippard A (1987) The inverted pendulum. Euro J Phys 8(3).

Sadat Kiai SM (1999) Confinement of ions in a radio frequency quadrupole ion trap supplied with a periodic impulsional potential. Int J Mass Spectrometry 188(3):177–182. https://doi.org/10.1016/S1387-3806(99)00019-6.

Hu NQ, Wen XS (2003) The application of Duffing oscillator in characteristic signal detection of early fault. J Sound Vib 268(5):917–931. https://doi.org/10.1016/S0022-460X(03)00002-6

Dartora CA, Zamboni-Rached M, Nóbrega KZ, Recami E, Hernández-Figueroa HE (2003) General formulation for the analysis of scalar diffraction-free beams using angular modulation: Mathieu and Bessel beams. Optics Communications 222(1–6):75–80. https://doi.org/10.1016/S0030-4018(03)01564-5

Zhihong Z, Shaopu Y, Tan ZH (2015) Application of van der Pol–Duffing oscillator in weak signal detection. Comp Electrical Eng 41(C):1–8. https://doi.org/10.1016/J.COMPELECENG.2014.11.007.

Pandey M, Rand RH, Zehnder AT (2008) Frequency locking in a forced Mathieu-van der Pol-Duffing system. Nonlinear Dyn 54(1–2):3–12. https://doi.org/10.1007/s11071-007-9238-x

Goodfellow I, Bengio Y, Courville A (2016) Deep learning. MIT press, Cambridge

Jesus RJ, Antunes ML, da Costa RA, Dorogovtsev SA, Mendes JFF, Aguiar RL (2021) Effect of initial configuration of weights on training and function of artificial neural networks. Mathematics 9(18). https://doi.org/10.3390/math9182246.

Ramachandran B, Zoph B, Le Qv (2017) Searching for activation functions. [Online]. Available: http://arxiv.org/abs/1710.05941

Umar M, Amin F, Wahab HA, Baleanu D (2019) Unsupervised constrained neural network modeling of boundary value corneal model for eye surgery. Appl Soft Comput J 85. https://doi.org/10.1016/j.asoc.2019.105826.

Sahoo AK, Chakraverty S (2022) Machine intelligence in dynamical systems: a state-of-art review. WIREs Data Min Knowl Discovery. https://doi.org/10.1002/widm.1461

Masood Z, Samar R, Raja MAZ (2019) Design of a mathematical model for the Stuxnet virus in a network of critical control infrastructure. Comp Security, 87. https://doi.org/10.1016/j.cose.2019.07.002.

Guerrero Sánchez Y, Sabir Z, Günerhan H, Baskonus HM (2020) Analytical and approximate solutions of a novel nervous stomach mathematical model. Discrete Dyn Nature Soc, https://doi.org/10.1155/2020/5063271.

Verma A, Kumar M (2021) Numerical solution of Bagley-Torvik equations using Legendre artificial neural network method. Evol Intel 14(4):2027–2037. https://doi.org/10.1007/s12065-020-00481-x

Acknowledgements

Council of Scientific and Industrial Research (CSIR), New Delhi, India, is thankfully appreciated for the support and funding to carry out the present research work (File no.: 09/983(0042)/2019-EMR-I).

Funding

No funding was received to assist with the preparation of this manuscript.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors have no conflicts of interest to declare.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Sahoo, A.K., Chakraverty, S. A neural network approach for the solution of Van der Pol-Mathieu-Duffing oscillator model. Evol. Intel. 17, 1425–1435 (2024). https://doi.org/10.1007/s12065-023-00835-1

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s12065-023-00835-1

Keywords

- Van der Pol-Mathieu-Duffing oscillator

- Symplectic artificial neural network

- Unsupervised

- Meshfree

- Excitation function