Abstract

In Smart cities surveillance is an extremely important feature required for ensuring the safety of citizens and also for deterring the crime. Hence, intelligent video surveillance (IVS) frameworks are by and large increasingly more famous in security applications. The investigation and acknowledgment of anomalous practices in a video succession has step by step attracted the consideration in the field of IVS, as it permits sifting through an enormous number of pointless data, which ensures the high productivity in the security assurance, and spare a great deal of human and material assets. Techniques are proposed in the literature for analyzing the IVS systems. Existing systems for video analysis, suffer with some limitations. The one of the major limitation is lack of real time response from the surveillance systems. In order to overcome this limitation, an IVS system design is proposed using convolution neural networks. In case of emergency like fire, thieves’ attacks, Intrusion Detector, the proposed system sends an alert for the corresponding services automatically. Experimentation has done on the number of datasets available for video surveillance testing. The results show that the proposed surveillance system achieves very low false alarm rates.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Vision-based human activity acknowledgement concern the undertaking of naturally interpreting a picture arrangement to choose what activity or action is being carried out by the persons in the picture. It is an important topic in computer vision, with down to earth applications, for example, video observation, human–computer collaboration, gaming, sports intervention, sports preparing, smart homes, life-care frameworks, among numerous others [1, 2] . Because of the enormous conceivable outcomes for viable application, human movement acknowledgement issues have gotten the consideration of specialists in the fields of computer vision, machine learning and AI.

Surveillance means close perception or supervision kept up over an individual or gathering. From this definition we could without much of a stretch feels that video is the best innovation to engage observation. Video observation offers individuals the chance to perceive what is happening in remote spots, in addition, it permits to watch a few remote places in the meantime. The survey of Intelligent Surveillance [3] bunches the advancement of observation frameworks into three ages. The first dependent on simple CCTV frameworks confronted every one of the confinements of simple systems for data circulation. The second era received advanced video revealing another universe of potential outcomes for interchanges and preparing. These potential outcomes increased the interest of cameras for various situations: air terminals, railroads, banks, grocery stores, even homes. The third era of observation frameworks confronted the difficulties made by new systems with a large number of cameras that could be checked from better places. Duplicating the quantity of sensors duplicates too the measure of data produced, therefore expanding significantly the prerequisites of transfer speed. Be that as it may, even with the up and coming of megapixel cameras, the greatest bottleneck isn’t identified with correspondences however to video preparing capacities. First and foremost the main video preparing unit was the administrator’s mind breaking down a lattice with pictures in screens, anyway gazing at a screen isn’t an errand people can execute proficiently amid a significant lot of time [4]. Proficient supervision of video requires visual consideration, a procedure by which the human cerebrum chooses the components that will be investigated. Checking focuses as a rule need to control handfuls, hundreds or even a large number of cameras. The test for wise video observation frameworks is to choose those destined to observe important occasions. There are a few conceivable outcomes for the choice criteria, however the vast majority of them can be assembled into one of these classes of strategies: movement location and example acknowledgement. Frameworks dependent on movement discovery should choose cameras where some component or components are moving. Frameworks dependent on example acknowledgement should choose cameras where a specific example has been perceived.

There are numerous kinds of Video Surveillance Systems, every one attempting to satisfy a bit of the marketplace. A few arrangements can be pinched. Thus, one can order Video Surveillance Systems dependent on the sort of imaging methodology obtained, creating classifications like “one camera frameworks”, “numerous camera frameworks”, “fixed camera frameworks”, “moving camera frameworks” and “crossover camera frameworks”. Another order can be founded on the applications which a Video Surveillance System offers, for example, object following, object acknowledgement, ID re-recognizable proof, redid occasion alarming, conduct examination and so on. At long last, Video Surveillance Systems can be ordered dependent on engineering a framework is based on, for example, independent frameworks, cloud-mindful frameworks and dispersed frameworks. For more often than not, observation frameworks have been latent and constrained in degree. In this specific situation, fixed cameras and other detecting gadgets, for example, security alerts have been utilized. These frameworks can follow people or to identify some sort of occasions (an individual breaking the entryway or the window), be that as it may, they have not been intended to foresee unusual practices for example. Amid the most recent years, there was a gigantic advancement in detecting gadgets, remote broadband innovations, top notch cameras, and information arrangement and investigation. Consolidating such innovations in a suitable manner will permit to grow new arrangements that expand the surveillance extent of the present frameworks and improve their productivity. Inside the setting of surveillance frameworks, proficiency improvement has two headings. To begin with, the improvement of the video handling calculations alongside the determined video examination will build the legitimacy and the precision of a surveillance framework and second the incorporation of observation frameworks with cloud foundations is required to improve dependability (for example create cautions under poor lighting conditions and so on.), diminish the upkeep expenses and increment the reaction time of the frameworks. Surveillance frameworks need to adapt to a few difficulties, including, yet not restricted to, algorithmic and foundation challenges. In this way, observation frameworks need to adjust with the developing system and foundation innovations, for example, cloud frameworks, so as to give increasingly strong and dependable administrations. This pattern will likewise request the joining of various surveillance frameworks for removing increasingly helpful learning. This coordination will require new correspondence conventions and information arranges between observation specialists, just as new surveillance adjusted databases and inquiry dialects. At long last, progressively exact calculations are required, particularly with regards to conduct examination and unusual exercises recognition.

In this paper we proposed an approach based on deep learning for abnormal behavior detection. A neural network which has a deep layered structure is used to find out helpful features from the given input data. We used convolution neural network (CNN) as the model which is to be constructed and used to detect abnormal behavior.

2 Related works

Vassilios et al. [5] carried out a survey on video surveillance systems current status and future trends. The main significant features and analytics are offered, and also the most general techniques for image/video quality enhancement. Distributed computational infrastructures are included like Cloud computing describing the pros and cons of each approach. The most essential deep learning techniques are discussed, alongside of the smart analytics they exploit.

Mabrouk et al. [6] are reviewed various degrees of a intelligent video surveillance system (IVVS). Accessible methodologies for anomalous conduct acknowledgment comparative with each degree of an IVVS are widely assessed. Testing datasets for IVVS assessment are displayed. Confinements of the strange conduct acknowledgment territory are examined.

Patrona et al. [7], A new system for analyzing the real motion is introduced. The contrived structure can perform activity location/acknowledgment and assessment dependent on movement catch information. Programmed and dynamic movement information weighting is presented, changing joint information noteworthiness dependent on activity inclusion going for increasingly effective activity location and acknowledgment. Activity assessment is performed and, abusing fluffy rationale, semantic input is naturally recovered proposing methods for activity execution improvement.

Núñez [8], addresses human action and hand signal acknowledgment issues by utilizing 3D information arrangements got from full-body and hand skeletons individually. For that purpose the author proposed a deep learning based methodology for fleeting 3D recognition issues by considering a mix of a convolution neural network (CNN) and a Long Short-Term Memory (LSTM) network. The author additionally presents a two-step training methodology which concentrates around CNN preparing and, besides, modifies the full strategy (CNN+LSTM). Exploratory testing showed that our preparation technique acquires preferable outcomes over a solitary stage preparing system. Furthermore, he proposed an information growth technique that has additionally been approved tentatively. At last, we play out a broad exploratory investigation on freely accessible information benchmarks. The outcomes got show how the proposed methodology arrives at cutting edge execution when contrasted with the techniques distinguished in the writing. The best outcomes were acquired for little datasets, where the proposed information growth technique has more noteworthy effect.

Early occasion identification is expected to signal an occasion as right on time as could be allowed, yet before it ends is presented by Fan [9]. It is the basic for recognizing on-going occasions in numerous applications for example, spotting perilous or criminal occurrences. He address this issue by changing over video clasps of a procedure occasion into alleged unique pictures, which are prepared to do at the same time catching both the appearance and worldly advancement of the event. By utilizing the dynamic pictures of two classifications of video cuts (total objective occasion as the positive set and arbitrary portions that don’t contain the objective occasion as the negative set), we propose a novel strategy for preparing a finder dependent on profound learning systems. The methodology is equipped for scoring incomplete occasions by observing the level of occasion consummation as it monotonically increments toward end. Specifically, we talk about tests on the discovery of people falling and the breakout of a battling. Analyses on a few datasets delineate the adequacy of the proposed technique.

High Dynamic Range (HDR) video has risen up out of research labs around the globe and entered the domain of customer hardware by Chalmers [10] . The dynamic range that a human can find in a scene with insignificant eye adaption (roughly 1,000,000:1) is inconceivably more noteworthy than conventional imaging innovation which can just catch around 8 f-stops (256:1). HDR innovation, then again, can possibly catch the full scope of light in a scene; significantly beyond what a human eye can see. In his paper, he inspects the field of HDR video from catch to show; past, present and future. Specifically the paper looks past the present promoting publicity around HDR, to demonstrate how HDR video later on can and, to be sure, ought to achieve a stage change in imaging, practically equivalent to the change from high contrast to shading.

Deep learning has as of late accomplished promising outcomes in a wide scope of regions, for example, PC vision, discourse acknowledgement and normal language preparing. It means to learn progressive portrayals of information by utilizing profound engineering models. In a savvy city, a ton of information (for example recordings caught from many appropriated sensors) should be consequently handled and broke down. Wang [11] surveyed the deep learning calculations applied to video investigation of keen city as far as various research subjects: object discovery, object following, face acknowledgement, picture grouping and scene marking.

In video surveillance systems the approaches for motion detection and people detection are used in number of applications. Motion detection algorithms are the general techniques to detect the attackers. Conventional techniques for motion detection are specified in [12] as background subtraction [13], temporal filtering [14], and optical flow [15].

Optical flow is estimation to picture movement characterized as the projection of speeds of 3D surfaces focuses onto the imaging plane of a visual sensor [16]. Distinctive optical flow strategies are given by Barron and Beauchemin in [16]. The majority of them are computationally intricate. One more significant shortcoming is that optical flow techniques are sensitive to noise, which is exceptionally normal in video from CCTV cameras [17].

Temporal filtering depends on temporal differencing [14]. This strategy utilizes a limit distinction of pixel between sequential pictures (a few) to separate the moving item, so it demonstrates high performance in execution. Anyway its discovery precision might be frail, failing in extricating all the important pixels of an objective item or parting holes within the moving articles. Background subtraction procedures are likely the most famous decision from sellers of motion detection frameworks, but at the same time is a repetitive point in scientific meetings. The thought is to concentrate forefront objects from a picture by subtracting a background model picture from the original one. The principle challenge is to produce background model quick and with hearty outcomes. The authors of [18] portray the fundamental difficulties for background subtraction (BS) techniques. The supposed camouflage effect causes BS to flop in foreground discovery. At the point when the quantity of cautions gets too enormous in an observing focus, the measure of work could end up inaccessible or the trust in the security framework could be basically hurt.

The authors of [13] thinks about execution and exactness of various background subtraction strategies, “Running Gaussian Average” has the most excellent speed execution while Mixture of Gaussians [20] or kernel density estimation” [21] give better precision. The authors of [22] categorize the demonstrating techniques in parametric and non-parametric techniques.

The authors of [19] analyze the various ways to deal with background and frontal area detachment which depends on disintegration into low-position in addition to additive matrices. The essential thought is that a data matrix, a pixel lattice on account of pictures, can be disintegrated into two parts: a low-rank matrix and a matrix that can be scanty. The previous is utilized to show the background, while connected inadequate anomalies from the later to represent front area objects. For low resolution pictures (144x176) the time execution is a long way from the consequences of techniques AGMM or ViBe. Convolution neural systems have been actualized to implement the separation of background and frontal area division.

The authors [23] proposed a technique with CNN architecture. In that technique CNN is prepared to figure out how to subtract the background from an input picture. Results are helpful yet the authors identifies that their strategy is certainly not a real-time or versatile method.

The author [24] indicates how the intensity of profound features can be utilized by an algorithm inserted in a camera. The consequences of a gaussian mixture model utilizing profound features outflank the one of RGB features however the running time is multiple times higher.

Another area of video surveillance is detection of people. It is an interesting field of research. The author Ogale [25] portrays number of techniques for human recognition in video, which are partitioned in two categories. The main category use background subtraction as a pre-handling approach, while the second category works on features extricated from picture or video fixes, the authors recommends that the last is better in more consideration than the previous.

The authors of [26] proposed an order of the best in class algorithms as indicated by the fundamental basic tasks of detecting the people: object recognition and person model. Classification of object recognition is like the one in [25] and partitions strategies among division and extensive search. Complex conditions are uncommonly trying for division based techniques, which may expand the quantity of false recognitions. Extensive search techniques are progressively strong to pivot, scale and posture changes, yet require higher computational expenses. The second errand characterizes identified objects into individual or no individual by utilizing a recently characterized or prepared individual model. They proposed two discriminative data sources: appearance and movement. Human acknowledgement may totally come up short when individuals make an effort not to be perceived by wearing costumes. At the same time movement based strategies are not all that effectively conned. Changing movement patterns might be progressively troublesome.

3 Proposed method

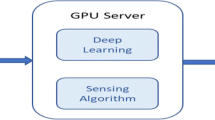

The main aim of a smart video surveillance system is to generate an alarm by identifying efficiently an abnormal event from a massive content of videos for avoiding the problematic situations or incidents. The proposed architecture for this task is shown in Fig. 1.

The first step in the proposed architecture is video data acquisition system. In this step the recorded video data from multiple sources are collected together and video sequences are converted into a sequence of images. This sequence of images contains images of type normal actions and abnormal actions. The RGB images are chosen manually using eye inspection related to normal behavior and abnormal behavior. The behavior is tagged as a label to the images. The next step in the proposed method is the feature extraction. In this step features are derived from the original images which are useful for subsequent learning. The third step is the feature description in which the extracted features are described. The last step which is most crucial is the video analytics in which a model is constructed from the input data with the extracted features. Based on the model constructed, we can identify the abnormal behavior in the given input video.

In the proposed method of this paper, after converting a video into a sequence of images then for all the remaining steps we used a CNN approach. The three main types of layers in CNN are input layer, hidden layers and output layer. Hidden layers are useful for detecting the important features from the given images which are helpful to detect whether the image contains normal or abnormal actions. Hence we used 9 hidden layers. To have unique representation for all the input images in the input layer, all the image sequences are converted to \(32 \times 32\) size. Softmax layer is used as output layer from which it is decided the whether the image belongs to normal action or abnormal action. After giving the image as input in the input layer it is passed onto the hidden layers. The hidden layers of CNN comprises with two main operations which are convolution, pooling. Among the 9 hidden layers, 6 are used as convolution and pooling layers and 3 are fully connected layers. Among the 6 layers, 4 are convolution layers and 2 are pooling layers i.e. after every 2 convolution layers a pooling layer has used. In all the convolution layers rectified linear unit (ReLU) activation function has used. It allows faster training in the network. The architecture of our CNN is shown in Fig. 2.

The main purpose of using convolution layer is to gradually reduce the size of the given input which further reduces the parameters and computations in the network. The convolution layer filters the image and retains certain features like edges, and corners. These are helpful to identify the kind of action being performed. The first and second convolution layers are used with 64 filters of size \(3\times 3\) (stride 1). Because of color images are used as input the filter size can be represented as \(3\times 3\times 3\). Padding is used with sufficient number of pixels to make the resultant image is of same as the input image. Followed by these two layers pooling layer is used with max pooling technique. The pooling layer scans each of the feature maps separately. Pooling layer is implemented with size \(2\times 2\). The stride for the pooling layer is considered as 2 pixels. Because of the stride is 2, the size of the image is reduced to \(16\times 16\) from \(32\times 32\). The combination of two convolution layers followed by pooling layer is repeated one more time for efficient feature extraction. The third and fourth convolution layers are used with 32 filters of size \(5\times 5\) (stride 1). Because of color images are used as input the filter size can be represented as \(5\times 5\times 3\). Padding is used with sufficient number of pixels to make the resultant image is of same as the input image. Followed by these two layers pooling layer is used with max pooling technique. The pooling layer scans each of the feature maps separately. Pooling layer is implemented with size \(2\times 2\). The stride for the pooling layer is considered as 2 pixels. Because of the stride is 2, the size of the image is reduced to \(8\times 8\) from \(16\times 16\). Subsequent to this feature extraction layers, 3 fully connected layers are used for classification. Each fully connected layer is implemented with 64 neurons. As an output layer, softmax layer is used to estimate the probability of each class in the classification task.

The weights in the convolution layer are initialized randomly using normal distribution. Binary cross entropy error function is used to evaluate the error between actual values and identified values. We implemented gradient descent technique to adjust the weights in the training phase of the network. The number of iterations is set to 500. The learning rate is set to 0.001 in gradient descent technique. An outline of the proposed CNN architecture parameters are shown in Table 1.

4 Experimental results

In this paper, an automated method is proposed to detect abnormal human behavior in smart surveillance systems. The main objective of this experiment is identifying the behavior of a human being by assuming that human behavior is composed of a sequence of static postures, and their temporal relationship which contributes to the person behavior. In order to improve the accuracy of the result in static pose recognition, a deep learning model which is convolution neural network is utilized to detect an object in real-time.

4.1 Datasets

Experiments are conducted with two public benchmark databases namely Peliculas Dataset (PEL) [27] and CMU Graphics Lab Motion Capture Database (CMU) [28] to evaluate the performance of proposed method.

The first public dataset used is the PEL dataset which consists of 368 images. There are 268 as abnormal behavior images and 100 normal behavior images. The dataset consists of movie fighting scenes. Based on the fighting behavior of persons in the images they were categorized as abnormal behavior and non-fighting behavior as normal behavior. Among the 268 abnormal behavior images, 188 are taken for training and 80 are taken for testing randomly. Among the 100 normal behavior images, 70 are taken for training and remain 30 are taken for testing. The images are RGB images of size \(352\times 240\) pixels which are resized to \(32\times 32\) pixels.

The second public dataset used is the CMU dataset consists of 11 videos. Among the 11 videos, 5 are abnormal behavior videos and 6 are normal behavior videos. General practices incorporate strolling, hand-shaking, and running. Strange practices incorporate unwilling or opposing actions and vicious signals. For instance, subject A pulls subject B by elbow however subject B opposes; A pulls B by hand yet B opposes; A and B fight with furious hand motions; A grabs a high stool and takes steps to toss at B. There are a total of 2477 pictures in 11 videos, with 1209 positive pictures and 1268 negative pictures. Among the 1209 positive pictures, 846 are taken for training the model and remaining for testing the model. Among the 1268 negative pictures, 888 and 380 are taken for training and testing the model respectively. The pictures are RGB images of size \(352\times 240\) pixels, which are then resized to \(32\times 32\) in order to reduce the training time. The characteristics of the dataset are summarized in the following Table 2.

4.2 Discussion on results

The datasets are applied to the experimentation to classify the images into binary classes: normal and abnormal behavior. The experimentation has done with different learning rates as 0.1 and 0.001. For each learning rate the model trained with different epochs. To evaluate the performance the trained model the considered measure is the accuracy of the model. In the experimentation, accuracy of the dataset is evaluated as percentage of the test set images that are classified by the trained CNN. The experimental results for both the datasets with different learning rates and different epochs are shown in Table 3.

For a learning rate of 0.01, the CMU dataset is trained with 20, 40, 60, 80 and epochs. With 80 and 100 epochs the trained CNN model has 100% accuracy. The accuracy of the model is 98.1, 86.3 and 78.1 for epochs 60, 40 and 20 respectively. For a learning rate of 0.1 also the CMU dataset is trained with 20, 40, 60, 80 and epochs. The accuracy of the model is 93.1, 89.4, 78.5, 72.6 and 68.1 for epochs 100, 80, 60, 40 and 20 respectively.

For a learning rate of 0.01, the PEL dataset is trained with 20, 40, 60, 80 and epochs. With 60, 80 and 100 epochs the trained CNN model has 100% accuracy. The accuracy of the model is 93.8 and 89.2 for epochs 40 and 20 respectively. For a learning rate of 0.1, the PEL dataset is trained with 20, 40, 60, 80 and epochs. The accuracy of the model is 94.5, 88.1, 82.4, 77.6 and 72.6 for epochs 100, 80, 60, 40 and 20 respectively.

The results clearly demonstrate that as the epoch’s increases the accuracy of the model also increases and as the learning increases the accuracy of the model decreases. So in order to get the high accuracy of the model, it would be better to train the model with low learning rate and high number of epochs.

5 Conclusion

Human action recognition in the recorded videos is the task of automatically analyzing the image sequence to identify the activity is being carried out by the persons in the scene. After identifying the activity, deciding whether that activity is normal or abnormal is also be an important task in video surveillance systems. If an abnormal activity id detected then an alarm has to be generated to the corresponding authorities in order to avoid that activity. Here we projected a deep learning based approach using convolution neural network for extracting the features and then for detecting the abnormal activity. The trained CNN is experimented with real datasets to prove its efficiency. The results prove that the proposed CNN accomplishes constructive performance. In the experimentation, the effect of different network configurations is also analyzed. The results demonstrate that the used learning rate for training the model should not be too high to circumvent from overshooting and not too low to avoid over fitting and low convergence of the network. The number of epochs is increased from a small value to achieve the highest accuracy.

In the future, the work can be extended from binary class identification to multi class identification and from single or two persons to a group of persons in different situations. This will help to design a more robust intelligent surveillance system that can tackle different types of practical situations.

Change history

06 December 2022

This article has been retracted. Please see the Retraction Notice for more detail: https://doi.org/10.1007/s12065-022-00809-9

References

Mishra P, Saroha GP (2016) A study on video surveillance system for object detection and tracking

Ovsenik L, Kolesárová AKŽ, Turán J (2010) Video surveillance systems. Acta Electrotechnica et Informatica 10(4):46–53

Valera M, Velastin SA (2005) Intelligent distributed surveillance systems: a review. IEE Proc Vis Image Signal Process 152(2):192–204

Rankin S, Cohen N, Maclennan-Brown K, Sage K (2012) CCTV op1355 Erator performance benchmarking. In: International carnahan conference on security technology, pp 325–330

Vassilios T, Tasos D (2017) Video surveillance systems-current status and future trends. Comput Electr Eng ISSN 0045-7906. https://doi.org/10.1016/j.compeleceng.2017.11.011

Mabrouk AB, Zagrouba E (2018) Abnormal behaviour recognition for intelligent video surveillance systems: a review. Expert Syst Appl 91:480–491. https://doi.org/10.1016/j.eswa.2017.09.029 ISSN 0957-4174

Patrona F, Chatzitofis A, Zarpalas D, Daras P (2018) Motion analysis: action detection, recognition and evaluation based on motion capture data. Pattern Recogn 76:612–622. https://doi.org/10.1016/j.patcog.2017.12.007 ISSN 0031 3203

Núñez JC, Cabido R, Pantrigo JJ, Montemayor AS, Vélez JF (2018) Convolutional neural networks and long short-term memory for skeleton-based human activity and hand gesture recognition. Pattern Recognit 76:80–94. https://doi.org/10.1016/j.patcog.2017.10.033. ISSN 0031-3203

Fan Y, Wen G, Li D, Qiu S, Levine MD (2018) Early event detection based on dynamic images of surveillance videos. J Vis Commun Image Represent 51:70–75. https://doi.org/10.1016/j.jvcir.2018.01.002 ISSN 1047-3203

Chalmers A, Debattista K (2017) HDR video past, present and future: a perspective. Signal Process Image Commun 54:49–55. https://doi.org/10.1016/j.image.2017.02.003 ISSN 0923-5965

Wang L, Sng D (2015) Deep learning algorithms with applications to video analytics for a smart city: a survey. arXiv eprint arXiv:1512.03131

Kim YS, Street WN (2004) An intelligent system for customer targeting: a data mining approach. Decis Support Syst 37(2):215–228

Piccardi M (2004) Background subtraction techniques: a review. IEEE Int Conf Syst Man Cybern 4:3099–3104

Lipton AJ, Fujiyoshi H, Patil RS (1998) Moving target classification and tracking from real-time video. In: Fourth IEEE workshop on applications of computer vision, pp 8–14

Barron JL, Fleet DJ, Beauchemin SS (1994) Performance of optical flow techniques. Int J Comput Vis 12(1):43–77

Beauchemin SS, Barron JL (1995) The computation of optical flow. ACM Comput Surv (CSUR) 27(3):433–466

Hu W, Tan T, Wang L, Maybank S (2004) A survey on visual surveillance of object motion and behaviors. IEEE Trans Syst Man Cybern Part C (Appl Rev) 34(3):334–352

Brutzer S, Hoferlin B, Heidemann G (2011) Evaluation of background subtraction techniques for video surveillance. In: IEEE conference on computer vision and pattern recognition, pp 1937–1944

Bouwmans T, Sobral A, Javed S, Jung SK, Zahzah E-H (2017) Decomposition into low-rank plus additive matrices for background/foreground separation: a review for a comparative evaluation with a large-scale dataset. Comput Sci Rev 23:1–71

Stauffer C, Grimson WEL (1999) Adaptive background mixture models for real-time tracking. In: Computer society conference on computer vision 1375 and pattern recognition, vol 2, pp 246–252

Elgammal A, Harwood D, Davis L (2000) Non-parametric model for background subtraction. In: Computer vision ECCV, pp 751–767

Xu Y, Dong J, Zhang B, Xu D (2016) Background modeling methods in video analysis: a review and comparative evaluation. CAAI Trans Intell Technol 1:43–60

Barnich O, Droogenbroeck MV (2011) ViBe: a universal background subtraction algorithm for video sequences. IEEE Trans Image Process 20(6):1709–1724

Shafiee MJ, Siva P, Fieguth P, Wong A (2016) Embedded motion detection via neural response mixture background modeling. In: IEEE conference on computer vision and pattern recognition workshops (CVPRW). IEEE, pp 837–844

Ogale NA (2006) A survey of techniques for human detection from video. Surv Univ Md 125(133):19

Garcıa Martın A, Hauptmann A, Martınez JM (2011) People detection based on appearance and motion models. In: International conference on advanced video and signal-based surveillance, pp 256–260

Peliculas Movies Fight Detection Dataset. http://academictorrents.com/details/ 70e0794e2292fc051a13f05ea6f5b6c16f3d3635/tech&h it=1&filelist=1. Accessed 2018/1/5.

CMU Graphics Lab Motion Capture Database. http://mocap.cs.cmu.edu/. Accessed 2018/1/2

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This article has been retracted. Please see the retraction notice for more detail: https://doi.org/10.1007/s12065-022-00809-9"

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Janakiramaiah, B., Kalyani, G. & Jayalakshmi, A. RETRACTED ARTICLE: Automatic alert generation in a surveillance systems for smart city environment using deep learning algorithm. Evol. Intel. 14, 635–642 (2021). https://doi.org/10.1007/s12065-020-00353-4

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s12065-020-00353-4