Abstract

As bibliographical classification of published journal items affects the denominator of the impact factor (IF) equation, we investigated how the numerator and denominator of the equation were generated for representative journals in two categories of the Journal Citation Reports (JCR). We performed a full text search of the 1st-ranked journal in the 2004 JCR category “Medicine, General and Internal” (New England Journal of Medicine, NEJM, IF = 38.570) and the 61st-ranked journal (Croatian Medical Journal, CMJ, IF = 0.690), the 1st-ranked journal in the category “Multidisciplinary Sciences” (Nature, IF = 32.182) and a journal with the same relative rank as CMJ (Anais da Academia Brasileira de Ciencias, AABC, IF = 0.435). Large journals published more items categorized by Web of Science (WoS) as non-research items (editorial material, letters, news, book reviews, bibliographical items, or corrections): 63% out of total 5,193 items in Nature and 81% out of 3,540 items in NEJM, compared with 31% out of 283 items in CMJ and only 2 (2%) out of 126 items in AABC. Some items classified by WoS as non-original contained original research data (9.5% in Nature, 7.2% in NEJM, 13.7% in CMJ and none in AABC). These items received a significant number of citations: 6.9% of total citations in Nature, 14.7% in NEJM and 18.5% in CMJ. IF decreased for all journals when only items presenting original research and citations to them were used for IF calculation. Regardless of the journal’s size or discipline, publication of non-original research and its classification by the bibliographical database have an effect on both numerator and denominator of the IF equation.

Introduction

From its beginning in 1955, when it was developed to ease the selection of journals into a bibliographical database [1], the impact factor (IF) of scientific journals has become the centerpiece of scientific enterprise. Although it was developed primarily as a bibliographical tool, IF is often used as a proxy for the quality of research and researchers [2], and is equally important to both authors and editors: authors depend on it for career promotion and research funding, and editors care about it because a high IF attracts more and better papers.

IF has been the subject of many heated debates [2–9]. A major criticism is that IF calculation is not transparent and that it is the property of a private company from the USA, Thomson Scientific, which releases journals’ annual IFs in its product Journal Citation Reports® (JCR) [5]. There have also been allegations that journals could manipulate their IF [5–7] by affecting the numbers that go into the IF equation: the ratio between the number of citations received in the current year by items a journal published in the two previous years and the number of articles published in those two years. The numerator in the IF formula includes all citations, regardless of whether they are to original research work or non-research items, such as letters, comments and editorials; the denominator includes only the journal items that are considered citable, i.e., published items categorized as “Article” or “Review” by the experts at Thomson Scientific [1, 3, 5, 7].
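The verbal definition above can be written compactly. The following is a sketch in notation introduced here for illustration only (the symbols $C_y(t)$ and $N_t$ are our labels, not JCR terminology):

$$
\mathrm{IF}_{y} = \frac{C_{y}(y-1) + C_{y}(y-2)}{N_{y-1} + N_{y-2}}
$$

Here $C_{y}(t)$ is the number of citations received in year $y$ by all items the journal published in year $t$, whatever their classification, and $N_{t}$ is the number of items published in year $t$ that are classified as “Article” or “Review”. For the 2005 IF examined in this study, $y = 2005$, so the numerator counts 2005 citations to items from 2003 and 2004. The asymmetry discussed in the text is visible directly: non-citable items can add to the numerator but never to the denominator.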

Although much has been written about the IF equation and how it can be affected [1–9], there has not been much evidence [2–4] that systematically addresses IF calculation across different journals. To provide the necessary evidence for this important debate, we analyzed the bibliographical classification of published items and elements of the IF equation for typical journals from two prestigious categories of the JCR: “Multidisciplinary Sciences” and “Medicine, General and Internal”. The analysis included the first-ranked, weekly published journals in the categories (Nature and New England Journal of Medicine) and a smaller journal from the middle of the IF ranking in each category (Anais da Academia Brasileira de Ciencias, a quarterly journal from Brazil, and Croatian Medical Journal, a bimonthly journal from Croatia, respectively).

Methods

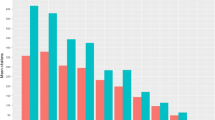

The study included the first and middle-ranking journals in two categories of the 2004 JCR, which was available at the start of the study (Fig. 1): (1) New England Journal of Medicine (NEJM, IF = 38.570) and Croatian Medical Journal (CMJ; IF = 0.690), the latter ranked 61st out of 103 journals in the “Medicine, General and Internal” category, and (2) Nature (IF = 32.182) and Anais da Academia Brasileira de Ciencias (AABC; IF = 0.435), the latter ranked 26th (the same relative rank as the CMJ) out of 45 journals in the “Multidisciplinary Sciences” category. Journals were available in print, except for AABC, which had full text available on-line (http://www.scielo.br/scielo.php?script=sci_serial&pid=0001-3765).

Fig. 1 Study protocol and data collection. *Indexing classification of all published items identified by hand search of printed journal issues was verified by a search in the Current Contents database via Gateway Ovid and by General Search of the Web of Science database. Citable items are those used in IF calculation and include “articles” and “reviews”. †One article could not be found in either database [10]

We first performed a full-text search of all items published in the 2003 and 2004 volumes of all four journals. Volumes 2003 and 2004 were selected because they served as the basis for calculating the 2005 IF, which had not yet been officially released at the time of our analysis. Each published item (3,640 items for NEJM, 290 for CMJ, 5,193 for Nature, and 126 for AABC; Fig. 1) was read and assessed for originality, which was defined as presentation of novel, previously unpublished research results expressed in a numerical or graphical form, regardless of the formal structure of the published item.

We then searched the Thomson Scientific electronic database Web of Science (WoS) (http://www.portal.isiknowledge.com/portal.cgi) to confirm indexing and identify the bibliographical classification of published items. All items identified by hand search of the journals were found in the database, except a single article [10]; this item was excluded from further analysis. Items were classified by WoS into the following categories: “Article”, “Review”, “Editorial Material”, “Letter”, “News Item”, “Bibliographical Item”, “Book Review” and “Correction”. Data on all 9,249 published items were entered into an electronic database, including the title of the article, authors’ names, source journal, and article classification according to (1) databases, (2) journal’s own categorization, and (3) presentation of original research results.
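The record kept for each published item can be pictured as a small data structure. The sketch below is only an illustration of the fields listed above; the class name `JournalItem`, its attribute names, and the `is_uncounted_research` helper are hypothetical and do not describe the database the study actually used.

```python
from dataclasses import dataclass
from typing import Tuple

# WoS document types named in the text; only the first two are "citable".
CITABLE_TYPES = frozenset({"Article", "Review"})
WOS_TYPES = CITABLE_TYPES | {
    "Editorial Material", "Letter", "News Item",
    "Bibliographical Item", "Book Review", "Correction",
}


@dataclass(frozen=True)
class JournalItem:
    """One published item with the three classifications recorded per item."""
    title: str
    authors: Tuple[str, ...]
    journal: str
    wos_type: str            # (1) classification assigned by the database
    journal_section: str     # (2) the journal's own categorization
    has_original_data: bool  # (3) outcome of the full-text assessment

    def __post_init__(self) -> None:
        if self.wos_type not in WOS_TYPES:
            raise ValueError(f"unknown WoS type: {self.wos_type!r}")

    @property
    def is_citable(self) -> bool:
        """True if the item would be counted in the IF denominator."""
        return self.wos_type in CITABLE_TYPES

    @property
    def is_uncounted_research(self) -> bool:
        """True for original research that is left out of the denominator."""
        return self.has_original_data and not self.is_citable
```

Keeping the three classifications as separate fields makes it possible to count, per journal, how often the database label and the research content disagree.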

Finally, citations for individual indexed items were identified by Cited Reference search of the WoS database. Data categorization and collection were performed in June 2006, when the data on 2005 citations to items published in 2003 and 2004 should have been entered into WoS but before the official release of the journals’ IFs for 2005 in the summer of 2006 (Fig. 1), in order to exclude any bias on our side related to the knowledge of 2005 JCR data and elements of the IF. Official data on IF were collected from the 2005 JCR edition, released in summer 2006. According to the JCR Notices (http://www.portal.isiknowledge.com/portal.cgi?DestApp=JCR&Func=Frame), there were no data adjustments in the JCR or changes in the IF or ranking for the four journals since the initial 2005 JCR release.

Results

Most of the published items in Nature and NEJM were non-research items, classified by the WoS database as editorial material, letters, news, book reviews, bibliographical items, or corrections (62.6% and 81.3%, respectively; Table 1). Smaller journals published fewer non-research items: 30.8% in CMJ and just 2 items (1.6%) in AABC (Table 1). The analysis of full-text articles showed that the bibliographical classification into citable items (articles and reviews) corresponded to the original research content of items only in AABC, which published almost exclusively original research articles and reviews. For the other journals, original research results were presented in bibliographical items that are not included in the IF equation, whereas some of the items classified by WoS as original articles did not contain original research data (Table 1). For Nature, original research data could be identified in 94.7% of items classified as original articles and in 9.5% of items classified as editorial material or letters. In NEJM, 92.2% of the original article items and 7.2% of editorial material or letters contained research data (Table 1). In CMJ, these percentages were 91.2% and 13.7%, respectively (Table 1).

The analysis of citations received in 2005 by items published in 2003 and 2004 showed that items classified as non-citable by WoS, and thus not included in the denominator of the IF equation, received a significant number of citations, which are included in the numerator of the IF equation: 6.95% of all citations in Nature, 14.7% in NEJM, 18.5% in CMJ and none in AABC (Table 1). Most of these citations were to items that did not present original research according to our analysis: 64.8% in Nature, 83.4% in NEJM, and 83.3% in CMJ.
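The share of citations going to non-citable items is a simple proportion. As a minimal sketch, assuming citation counts have already been tallied per WoS document type (the function name and the example counts below are invented for illustration and are not data from Table 1):

```python
from typing import Mapping

CITABLE_TYPES = frozenset({"Article", "Review"})


def noncitable_citation_share(citations_by_type: Mapping[str, int]) -> float:
    """Percentage of all citations that went to non-citable item types."""
    total = sum(citations_by_type.values())
    if total == 0:
        return 0.0
    noncitable = sum(
        count for wos_type, count in citations_by_type.items()
        if wos_type not in CITABLE_TYPES
    )
    return 100.0 * noncitable / total


# Invented counts, used only to show the arithmetic.
example = {"Article": 800, "Review": 50, "Editorial Material": 100, "Letter": 50}
print(f"{noncitable_citation_share(example):.1f}% of citations go to non-citable items")
```

Every citation counted in this share raises the numerator of the IF equation without a matching item in the denominator.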

In NEJM, the categories editorial material and letters, regardless of whether they contained original research data, received a total of 4,195 citations, which is considerably more than the 3,401 citations to all review articles (Table 1). In Nature, the majority of items classified as non-original by WoS but receiving a considerable number of citations were “Brief Communications”. Out of these 311 items, 90 (28.9%) were classified by WoS as “Articles”, and the rest were classified as “Editorial Material” or “Letters”, although 250 (80.4%) out of all Brief Communications contained original research results, and received 1,983 citations. In CMJ, non-original items that received many citations were essays written for the forum on the Revitalization of Academic Medicine, which ran for more than a year; the essays cited each other over this period.

The total number of citations retrieved by cited reference search of WoS was smaller than that declared by JCR for all journals except for CMJ, and comprised 95.5% (Nature), 87.6% (AABC) and 95.6% (NEJM) of total citations reported by JCR (Fig. 1). The denominator of the IF equation (items likely to receive citations) in the JCR differed from the number of such items identifiable in the WoS database for Nature and NEJM. For Nature, we could identify 1,935 items as “Articles” and “Reviews” in WoS, whereas JCR declared 1,737 items. NEJM had 679 such items registered in WoS, but 682 in JCR. The number of these items for AABC and CMJ was the same in WoS and JCR (Fig. 1 and Table 1).
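To put the denominator differences in proportion, the counts quoted above can be compared directly. The snippet is a small sketch; the item counts come from the paragraph above, while the function name and the choice of the WoS count as the reference value are our own assumptions.

```python
def relative_difference(jcr_count: int, wos_count: int) -> float:
    """Percentage by which the JCR count differs from the WoS count."""
    return 100.0 * (jcr_count - wos_count) / wos_count


# Numbers of "Article" and "Review" items as reported in the text.
citable_items = {
    "Nature": {"wos": 1935, "jcr": 1737},
    "NEJM": {"wos": 679, "jcr": 682},
}

for journal, counts in citable_items.items():
    diff = relative_difference(counts["jcr"], counts["wos"])
    print(f"{journal}: JCR vs WoS denominator {diff:+.1f}%")
# Nature: JCR vs WoS denominator -10.2%
# NEJM: JCR vs WoS denominator +0.4%
```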

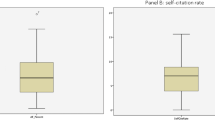

When we entered into the IF formula the number of published items with original data and the number of citations to these items from WoS database, the IFs decreased for all journals: 21.3% for Nature, 12.2% for AABC, 32.2% for NEJM, and 15.7% for CMJ (Table 2).
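The recalculation described above can be sketched as two ratios over the same list of items. This is an illustration under stated assumptions, not the authors' procedure: the `Item` type, the function names, and the toy data are invented, and the "conventional" ratio here uses one citation source for both variants, whereas the study compared official JCR figures with WoS-derived ones.

```python
from typing import List, NamedTuple

CITABLE_TYPES = frozenset({"Article", "Review"})  # counted in the denominator


class Item(NamedTuple):
    wos_type: str            # classification assigned by WoS
    has_original_data: bool  # outcome of a full-text assessment
    citations: int           # citations received in the census year


def conventional_if(items: List[Item]) -> float:
    """All citations divided by the number of citable items."""
    citable = sum(1 for it in items if it.wos_type in CITABLE_TYPES)
    if citable == 0:
        raise ValueError("no citable items")
    return sum(it.citations for it in items) / citable


def original_research_if(items: List[Item]) -> float:
    """Citations to original-research items divided by their number."""
    originals = [it for it in items if it.has_original_data]
    if not originals:
        raise ValueError("no original-research items")
    return sum(it.citations for it in originals) / len(originals)


def percent_change(old: float, new: float) -> float:
    return 100.0 * (new - old) / old


# Invented toy data, not taken from any journal in the study.
toy = [
    Item("Article", True, 10),
    Item("Article", True, 6),
    Item("Article", False, 2),
    Item("Editorial Material", True, 4),
    Item("Editorial Material", False, 3),
    Item("Letter", False, 1),
]
before = conventional_if(toy)
after = original_research_if(toy)
print(f"{before:.2f} -> {after:.2f} ({percent_change(before, after):+.1f}%)")
```

In the toy data the restricted ratio is lower because citations to non-research items leave the numerator while the number of counted items stays the same; with other data the direction of the change depends on how citations are distributed.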

Discussion

Our study showed that the IF equation is most relevant for journals that publish almost solely original research articles and reviews. When a journal publishes items other than research articles and reviews and these contain original research data or information relevant for science, these items get a significant number of citations, which increase the numerator of the IF equation. This is true for both large and small journals, and for different disciplines. In our study, the two first-ranked journals from two different JCR categories (Nature, the leading multidisciplinary journal, and New England Journal of Medicine, the leading general medical journal) and a small journal from the middle of its JCR category (Croatian Medical Journal) had a similar relative change in the IF because of the citations to items other than articles and reviews. Only Anais da Academia Brasileira de Ciencias, a journal that publishes almost exclusively research articles and reviews, was affected by the changes in the numerator of the IF equation.

Journal items that were classified as non-original or non-substantive items by Thomson Scientific contained results of original research and received considerable citations, thus increasing the IF. The editors at Thomson Scientific emphasize that errors may occur during bibliographical classification of items published in a journal and that they “attempt to count only the truly scientific papers and review articles” [3, 7]. This is also a limitation of our study because the judgment on the originality of the work presented in the journal item was made by individuals who could have been biased and could have made errors. We addressed this limitation by strict criteria for the originality of the research described in a journal item: research data presented in numbers, in the text and/or in a table or figure, and no citation to previous publication of these results. The latter criterion was defined as the absence of citation to the original work in the journal item; we did not verify this by full literature search so it is possible that some authors deliberately did not refer to the original publication. The assessment of journal items was performed by experienced medical doctors (MR and RG), who received formal and mandatory education in the types and structure of scientific articles and in bibliographical and citation databases [11]. In cases where the two investigators could not agree, they consulted the senior author (AM), and reached consensus on the item classification. Another limitation of the study is that it was restricted to only four journals. Because it would be very difficult to use a random sample of published items, as the analysis of the IF equation requires the number of published items in two full years, we chose to analyze typical journals from representative JCR categories: the most prestigious journals with high IF and “average” journals from the middle of the JCR IF ranking list of the category. Thus we analyzed 9,249 published items in journals of different size, influence and prestige, and from different scientific fields and JCR categories. Similarity of findings for both prestigious journals and the small medical journal that published items other than articles and reviews indicates that our findings are generalizable.

A few random errors were detected in the WoS database, such as the absence of a single NEJM item from the citation database. We obtained differences in the number of citable items and total citations between the output generated by searching the WoS database for individual articles and the numbers in the official JCR output. The number of items deemed citable (“Articles” and “Reviews”) was lower in JCR than in WoS for Nature, greater for NEJM and identical for CMJ and AABC. The total number of citations was greater in JCR than in WoS for all journals except for CMJ. These differences were probably random and did not greatly affect IF calculation. They may stem from errors in the reference lists of citing articles [8], errors in entering data into the database, or the timing of the citation entry into the database. It is also possible that the sources for the citations quoted in the JCR are not restricted to the WoS database.

The letters and editorial items published in NEJM were categorized as such and received a substantial number of citations that went into the IF equation. In Nature, Brief Communications, although they were original research items, were mostly classified as editorial material by Thomson Scientific. Thomson Scientific, in admitting the possibility of errors, welcomes advice from journals [3], and some journals claim that they negotiate IF elements with Thomson Scientific [3, 6, 7]. This is probably the reason for systematic error in the IF denominator, such as was the case for Nature’s Brief Communications. The outcome of such error is that journal items with obviously original work are classified as non-original by the journals. An illustrative example of misclassification of journal items is the study by Martinson et al. [12] on misbehavior among researchers. Their study, funded by the Office of Research Integrity and National Institutes of Health in the USA, was published as a Commentary in Nature in 2005. The article does not have the typical structure of a research article but has all relevant elements, including detailed methodology and a table with the results of the study survey. According to the reference list, this was an original publication, as there were no citations to the authors’ work on this topic. The article is classified as “Editorial Material” in WoS, and has received 62 citations as of October 2007.

Many large journals have adapted their content and classification to the “requirements” of the IF equation. For example, the last Brief Communication was published in Nature in December 2006. The Lancet, which started its Research Letters in 1997 and experienced a fall in the IF [3, 4], published fewer and fewer of these items, and finally discontinued them in December 2005. BMJ discontinued publishing its short research papers without an abstract, which were grouped with full articles under the section “Papers”, in December 2005. This section now carries the title “Research” and contains the same number of full research articles as before.

What is the solution to the problematic IF equation? Many researchers and journals would say that IF should be abandoned [8, 9, 13], but this is easier said than done, because many academic and research communities have incorporated IF firmly into the criteria for career advancement or research funding [5, 7]. Changing these criteria would need a consensus of many stakeholders and their active involvement in the change, which may not be realistic at the moment, when journals publish editorials and other items about the misuse of IF but still proudly market their IF and carefully supervise its calculation. Even the proposals for novel indicators, such as Y-factor or Eigenfactor (http://www.eigenfactor.org), which use an algorithm similar to Google’s PageRank, incorporate IF as an important element in calculation [14].

The solution may come from the IF producers themselves—Thomson Scientific is now offering a new database, Journal Performance Indicators, JPI [1, 15]. This database links each source item to its citations, something that was not possible in the JCR, and includes only citations to the items used in the IF denominator. This is a better equation than the current IF in the JCR [1], and journals may start using it as a more adequate representation of their value. Two problems remain. The first is that a new system, with a price tag attached to it, should be accepted by the research and academic communities—without it there is no way out of the IF vicious circle for authors and journals. The second and more important one is that it still is not clear which items are or should be in the denominator, which criteria will be used for their selection, and who will make a final decision.
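The principle described above, counting only citations to the items that also appear in the denominator, can be sketched as follows. This illustrates the idea as stated in the text and is not a description of how Journal Performance Indicators is implemented; the function names and toy numbers are hypothetical.

```python
from typing import Iterable, Tuple

CITABLE_TYPES = frozenset({"Article", "Review"})

# Each record is (WoS document type, citations received in the census year).
Record = Tuple[str, int]


def all_citations_ratio(records: Iterable[Record]) -> float:
    """Conventional ratio: every citation over the number of citable items."""
    records = list(records)
    citable = sum(1 for wos_type, _ in records if wos_type in CITABLE_TYPES)
    if citable == 0:
        raise ValueError("no citable items")
    return sum(cites for _, cites in records) / citable


def matched_citations_ratio(records: Iterable[Record]) -> float:
    """Citations to citable items only, over the number of citable items."""
    citable = [cites for wos_type, cites in records if wos_type in CITABLE_TYPES]
    if not citable:
        raise ValueError("no citable items")
    return sum(citable) / len(citable)


# Invented toy data, used only to show the difference between the ratios.
toy_records = [
    ("Article", 10), ("Article", 6), ("Review", 2),
    ("Editorial Material", 4), ("Letter", 2),
]
print(all_citations_ratio(toy_records), matched_citations_ratio(toy_records))
```

Matching numerator and denominator removes the asymmetry, but, as the text notes, it leaves open which items belong in the denominator in the first place.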

References

1. Garfield, E. (2006). The history and meaning of the journal impact factor. JAMA, 295, 90–93.

2. Seglen, P. O. (1997). Why the impact factor of journals should not be used for evaluating research. BMJ, 314, 498–502.

3. Joseph, K. S., & Hoey, J. (1999). CMAJ’s impact factor: Room for recalculation. CMAJ, 161, 977–978.

4. Joseph, K. S. (2003). Quality of impact factors of general medical journals. BMJ, 326, 283.

5. Adam, D. (2002). The counting house. Nature, 415, 726–729.

6. The PLoS Medicine Editors (2006). The impact factor game. PLoS Medicine, 3, e291.

7. Brown, H. (2007). How impact factor changed medical publishing—and science. BMJ, 334, 561–564.

8. Walter, G., Bloch, S., Hunt, G., & Fisher, K. (2003). Counting on citations: A flawed way to measure quality. MJA, 178, 280–281.

9. Lundberg, G. D. (2003). The “omnipotent” science citation index impact factor. MJA, 178, 253–254.

10. Nankivell, B. J., et al. (2003). The natural history of chronic allograft nephropathy. The New England Journal of Medicine, 349, 2326–2333.

11. Marusic, A., & Marusic, M. (2003). Teaching students how to read and write science: Mandatory course on scientific research and communication in medicine. Academic Medicine, 78, 1235–1239.

12. Martinson, B. C., Anderson, M. S., & de Vries, R. (2005). Scientists behaving badly. Nature, 435, 737–738.

13. Williams, G., & Hobbs, R. (2007). Should we ditch impact factors? BMJ, 334, 568–569.

14. Dellavalle, R. P., Schilling, L. M., Rodriguez, M. A., Van de Sompel, J., & Bollen, J. (2007). Refining dermatology journal impact factors using PageRank. Journal of the American Academy of Dermatology, 57, 116–119.

15. Thomson Scientific (2005). Journal performance indicators. Available at: http://www.scientific.thomson.com/products/jpi/. Accessibility verified October 19, 2007.

Acknowledgements

Contributions: AM, MM, and NK conceived and designed the study; MR and RG collected all data, and analyzed them with AM. AM wrote the manuscript and MR, RG, NK and MM revised it for important intellectual content. All authors approved the final version of the manuscript for submission. Funding: This study was supported by a grant from the Ministry of Science, Education and Sports of the Republic of Croatia, No. 108-1080314-0245 to MM. Competing interests: AM and MM are Coeditors in Chief of the Croatian Medical Journal. NK is Book Review Editor for the Croatian Medical Journal. None of them receives any pay for their work in the journal.

Additional information

Preliminary results of the study were presented at the 2006 ORI Research Conference on Research Integrity, Tampa, FL, December 1–3, 2006.

Cite this article

Golubic, R., Rudes, M., Kovacic, N. et al. Calculating Impact Factor: How Bibliographical Classification of Journal Items Affects the Impact Factor of Large and Small Journals. Sci Eng Ethics 14, 41–49 (2008). https://doi.org/10.1007/s11948-007-9044-3

DOI: https://doi.org/10.1007/s11948-007-9044-3