Abstract

This systematic review applied meta-analytic procedures to synthesize medication adherence interventions that focus on adults with hypertension. Comprehensive searching located trials with medication adherence behavior outcomes. Study sample, design, intervention characteristics, and outcomes were coded. Random-effects models were used in calculating standardized mean difference effect sizes. Moderator analyses were conducted using meta-analytic analogues of ANOVA and regression to explore associations between effect sizes and sample, design, and intervention characteristics. Effect sizes were calculated for 112 eligible treatment-vs.-control group outcome comparisons of 34,272 subjects. The overall standardized mean difference effect size between treatment and control subjects was 0.300. Exploratory moderator analyses revealed interventions were most effective among female, older, and moderate- or high-income participants. The most promising intervention components were those linking adherence behavior with habits, giving adherence feedback to patients, self-monitoring of blood pressure, using pill boxes and other special packaging, and motivational interviewing. The most effective interventions employed multiple components and were delivered over many days. Future research should strive for minimizing risks of bias common in this literature, especially avoiding self-report adherence measures.


Introduction

About half of people with hypertension (HTN) have uncontrolled blood pressure [1]. A significant cause of poor blood pressure control is inadequate medication adherence [2•, 3, 4•, 5–7]. Adherence to anti-hypertensive medications drops after initiating treatment, with about 10 % of patients missing a dose on any given day and around half of HTN patients stopping medication by 1 year after prescription [8]. Among patients with presumed resistant HTN, 43 to 65.5 % of them are medication nonadherent [4•, 9]. Patients with poor adherence to anti-hypertensives are at greater risk for coronary disease, cerebrovascular disease, and chronic heart failure [10•]. Poor medication adherence is associated with higher nondrug medical costs and constitutes a major barrier in reducing cardiovascular mortality [11, 12].

The problem of poor medication adherence (henceforth, adherence) has prompted investigators to conduct clinical trials testing interventions to improve medication taking among adults with HTN. The proliferation of these primary intervention trials has prompted a number of reviews that summarize and synthesize parts of this extant research. Some of these reviews are limited because they include only a few primary studies [13–16], or they synthesize blood pressure outcomes but do not directly analyze adherence [17]. Some reviews restrict their analysis of intervention effects to a specific type of adherence measurement method [3]. Other reviews have restricted their focus to specific types of interventions, for example, including only interventions conducted by specific clinic personnel [18, 19]. The present report attempts to provide a more comprehensive analysis of primary intervention studies aimed at increasing medication adherence in hypertensive patients. To accomplish this, multiple search strategies were employed to permit synthesis of adherence outcomes across as large a sample of primary studies as possible.

The following questions were addressed in this report: (1) What is the overall average effect of interventions designed to increase adherence among subjects with HTN? (2) Do effects of interventions vary depending on sample and study characteristics? (3) Do the effects vary depending on intervention features? (4) What risks of bias are present in studies, and what influence do they have on effect sizes?

Methods

Widely accepted systematic review and meta-analysis methods were used to conduct the project, and PRISMA guidelines were followed in preparing this report [20, 21].

Eligibility Criteria

Studies eligible for inclusion were those testing interventions designed to increase adherence among adults with HTN and reporting adherence as an outcome measure. Adherence was defined as the extent to which medication taking behavior is consistent with health care provider recommendations [22]. This project focused on implementation adherence (how accurately the patient follows the prescribed dosing regimen) because primary studies rarely reported persistence (continued administration over the intended course of therapy) [23]. The meta-analysis included primary studies with varied adherence measures (e.g., electronic bottle cap devices, pharmacy refill data, pill counts, self-report) because meta-analysis methods convert primary study outcomes to unitless indices [5, 24].

Studies with varied research methods were included, and risk of bias related to study design was assessed as described below [21]. Small-sample studies, which may be underpowered to detect differences in outcomes, were eligible for inclusion because meta-analyses do not rely on p values to determine effect sizes [24]. Effect sizes were weighted so larger studies had more influence on aggregate findings. Both published and unpublished studies were eligible for inclusion because the most consistent difference between published and unpublished research is the statistical significance of the findings [25, 26]. This article does not contain any studies with human or animal subjects performed by any of the authors for this meta-analysis.

Search Strategies and Information Sources

Multiple search strategies were employed to avoid bias that can result from narrow searches [26–28]. An expert health sciences librarian conducted searches in the following electronic databases: MEDLINE, PsycINFO, PUBMED, EBSCO, Cochrane Central Trials Register, CINAHL, Cochrane Database of Systematic Reviews, EBM Reviews, PDQT, ERIC, INDMed, International Pharmaceutical Abstracts, and Communication and Mass Media. The primary MeSH terms used in constructing search strategies were patient compliance and medication adherence. Patient compliance was used to locate studies published before 2009, and medication adherence was used to locate studies published after 2008, when the medication adherence MeSH term was introduced. Other MeSH terms used in search strategies were drugs, dosage forms, generic, prescription drugs, and pharmaceutical preparations. Text words used in searches were adherence, adherent, compliance, compliant, noncompliant, noncompliance, nonadherence, nonadherent, advocate, improve, promote, enhance, encourage, foster, influence, incentive, ensure, remind, optimize, increase, decrease, address, impact, prevent, prescription(s), prescribed, drug(s), medication(s), pill(s), tablet(s), and regimen(s).

Searches were also completed in 19 grant databases and clinical trial registries [29, 30]. Journal hand searches were conducted for 57 journals [31]. Abstracts from 48 conferences were evaluated for eligible studies. Author searches on eligible studies were used to extend the search. Ancestry searches on primary studies and extant reviews were conducted.

Study Selection

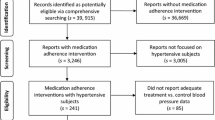

Potentially eligible studies identified through the various searching methods were imported into bibliographic software, and custom fields and term lists were used for study tracking and management. Figure 1 shows how potentially eligible studies flowed through the project [21]. Final eligibility was determined by at least two investigators. If primary studies contained inadequate data to calculate effect sizes, additional publications about the same project were sought or authors were contacted to secure the information. To ensure independence of the data, names of authors on each eligible study were compared against the author names of other eligible studies. Studies with authors in common were examined closely for possible sample overlap [32]. If uniqueness of samples was not clear from examination of the written reports, corresponding authors were contacted for clarification.

Data Collection

A coding frame to extract study data was created based on previous related meta-analyses and the research team’s expertise [33, 34]. This coding frame was pilot tested on 20 studies before implementation in the larger project. The coding frame was designed to capture report level features, participant demographics, intervention characteristics, study design attributes, and data to calculate adherence effect sizes. Year of distribution, publication status, and funding were recorded as report level features. Participant characteristics that were coded included gender, ethnicity, socioeconomic status, age, number of prescribed medications, and whether subjects were selected because of poor adherence.

Intervention characteristics coded from studies included theoretical basis of intervention, intervention delivery site (e.g., pharmacy, ambulatory care setting), delivery medium (e.g., face-to-face, telephone), interventionist profession (e.g., pharmacist, physician), days over which the intervention was delivered, and dose (i.e., duration of sessions and number of sessions). The intervention recipient (i.e., patient, health care provider) was recorded. Whether the intervention targeted adherence behavior alone or also included other health behaviors (e.g., physical activity) was coded.

The content of each intervention was coded in detail. Examples of coded content included specific strategies to address barriers to adherence, medication administration calendar, decisional balance activities, feedback about adherence, feedback about blood pressure, habit analysis and linking adherence with habits, improving health care provider communication with patients, increasing integration of care, motivational interviewing, special packaging of medications (e.g., blister packs, pill boxes), problem solving strategies, self-monitoring adherence behavior, self-monitoring blood pressure, social support for adherence, and written instructions. Other types of intervention content were coded but were reported too infrequently for analyses.

Study design features that were coded included random vs. nonrandom assignment to groups, allocation concealment, comparison group management (i.e., true control group or attention control group), data collector masking, intention-to-treat analyses, and percent attrition [21]. The method used to measure adherence (e.g., electronic medication event monitoring, pharmacy refills, pill counts, self-report) was recorded [5]. Outcome data coded included baseline and outcome sample sizes, means, measures of variability, change scores, t statistics, and success rates. All data used in the calculation of effect sizes were independently verified by a doctorally prepared researcher. If more than one report was available about the same samples, all reports were used in order to code as many details about studies as possible.

Summary Measures and Statistical Analysis

Standardized mean difference effect sizes (d) were calculated for each comparison [24, 35]. This represents the post-intervention difference between treatment and control participants divided by the pooled standard deviation for treatment vs. control two-group comparisons. A positive value for d indicates higher adherence for the treatment group compared to the control group. Pooled effect size estimates were obtained using random-effects models to acknowledge that variation in effect sizes results not only from participant-level sampling error but also from study-level sources of variation due to methodological and other differences [36, 37]. Individual effect sizes were weighted by the inverse of variance to give larger studies more influence in meta-analysis findings [35, 38]. Corresponding 95 % confidence intervals were constructed for each effect size and the overall mean effect size.
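The calculation described above can be illustrated with a minimal sketch. It assumes a DerSimonian-Laird estimate of the between-studies variance and no small-sample correction to d; the report does not state which estimator or correction was used, and the function names `smd` and `random_effects_pool` are hypothetical.

```python
import math


def smd(mean_t, sd_t, n_t, mean_c, sd_c, n_c):
    """Return (d, variance of d) for a two-group post-intervention comparison."""
    # Pooled standard deviation across treatment and control groups.
    pooled_sd = math.sqrt(
        ((n_t - 1) * sd_t ** 2 + (n_c - 1) * sd_c ** 2) / (n_t + n_c - 2)
    )
    d = (mean_t - mean_c) / pooled_sd
    # Large-sample approximation of the sampling variance of d.
    var_d = (n_t + n_c) / (n_t * n_c) + d ** 2 / (2 * (n_t + n_c))
    return d, var_d


def random_effects_pool(effects, variances):
    """Return (mean, standard error, 95% CI) under a random-effects model.

    Assumption: between-studies variance is estimated with the
    DerSimonian-Laird method of moments.
    """
    w = [1 / v for v in variances]  # inverse-variance (fixed-effect) weights
    fixed_mean = sum(wi * di for wi, di in zip(w, effects)) / sum(w)
    q = sum(wi * (di - fixed_mean) ** 2 for wi, di in zip(w, effects))
    df = len(effects) - 1
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0
    # Random-effects weights add the between-studies variance to each study.
    w_star = [1 / (v + tau2) for v in variances]
    mean = sum(wi * di for wi, di in zip(w_star, effects)) / sum(w_star)
    se = math.sqrt(1 / sum(w_star))
    return mean, se, (mean - 1.96 * se, mean + 1.96 * se)
```

In this sketch, a positive d again indicates higher adherence in the treatment group, and larger studies receive more weight because their sampling variance is smaller.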

Single-group pre-post overall mean effect sizes were also calculated for both treatment and control groups, but they were analyzed and reported separately from two-group treatment vs. control comparisons. The single-group analyses should be considered ancillary information to the more valid treatment vs. control comparisons. All effect size outcomes reported in this paper are for treatment vs. control comparisons unless otherwise specified.

Heterogeneity was expected because it is common in behavioral research [39]. This anticipated heterogeneity was managed in four ways: (1) random-effects models were used because they take into account heterogeneity beyond that which can be explained by moderator analyses, (2) both location and extent of heterogeneity were reported, (3) possible sources of heterogeneity were explored using moderator analysis, and (4) findings were interpreted in the context of discovered heterogeneity [39].

Cochran’s test of the conventional heterogeneity statistic Q was used to determine whether between-studies sampling error was statistically significant [40], and I² was computed to quantify the extent of heterogeneity beyond within-studies sampling error [24, 40].
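As a brief illustration of these two statistics, the sketch below computes Q and I² from effect sizes and their sampling variances using the conventional formulas. The function name `heterogeneity` is hypothetical and the example data are invented for illustration only.

```python
def heterogeneity(effects, variances):
    """Return (Q, degrees of freedom, I-squared as a percentage)."""
    w = [1 / v for v in variances]  # inverse-variance weights
    mean = sum(wi * di for wi, di in zip(w, effects)) / sum(w)
    # Q: weighted sum of squared deviations from the weighted mean.
    q = sum(wi * (di - mean) ** 2 for wi, di in zip(w, effects))
    df = len(effects) - 1
    # I-squared: share of total variation beyond within-study sampling error.
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return q, df, i2


# Invented example: two equally precise studies with different effects.
q, df, i2 = heterogeneity([0.1, 0.5], [0.01, 0.01])
```

The significance of Q is judged against a chi-square distribution with k - 1 degrees of freedom; one option for obtaining the p value is `scipy.stats.chi2.sf(q, df)`.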

Exploratory moderator analyses were conducted to detect patterns among studies related to sample, intervention, and methodological characteristics. Dichotomous moderators were tested by between-group heterogeneity statistics (Q_between) using a meta-analytic analogue of ANOVA [24]. Continuous moderators were analyzed by testing unstandardized regression slopes in a meta-analytic analogue of regression [24].
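The two moderator tests can be sketched as follows. This is a simplified illustration that uses fixed-effect inverse-variance weights; an analysis such as the one reported here would typically add an estimate of between-studies variance to each study's variance before weighting. The function names `q_between` and `weighted_slope` are hypothetical.

```python
import math


def q_between(groups):
    """Meta-analytic analogue of ANOVA.

    groups maps a moderator level to a list of (effect size, variance) pairs.
    Returns (Q_between, degrees of freedom).
    """
    totals = {}
    for label, studies in groups.items():
        weights = [1 / v for _, v in studies]
        weight_sum = sum(weights)
        mean = sum(w * d for w, (d, _) in zip(weights, studies)) / weight_sum
        totals[label] = (weight_sum, mean)
    grand_weight = sum(w for w, _ in totals.values())
    grand_mean = sum(w * m for w, m in totals.values()) / grand_weight
    # Weighted squared deviations of subgroup means from the grand mean.
    q_b = sum(w * (m - grand_mean) ** 2 for w, m in totals.values())
    return q_b, len(groups) - 1


def weighted_slope(x, effects, variances):
    """Meta-analytic analogue of regression for one continuous moderator.

    Returns (unstandardized slope, standard error, z statistic).
    """
    w = [1 / v for v in variances]
    sw = sum(w)
    x_bar = sum(wi * xi for wi, xi in zip(w, x)) / sw
    y_bar = sum(wi * yi for wi, yi in zip(w, effects)) / sw
    sxx = sum(wi * (xi - x_bar) ** 2 for wi, xi in zip(w, x))
    sxy = sum(
        wi * (xi - x_bar) * (yi - y_bar) for wi, xi, yi in zip(w, x, effects)
    )
    slope = sxy / sxx
    se = math.sqrt(1 / sxx)  # standard error when the weights are 1/variance
    return slope, se, slope / se
```

Q_between is compared against a chi-square distribution with one fewer degree of freedom than the number of moderator levels, and the slope is tested with the z statistic.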

Risk of Bias

To avoid introducing bias by including only easy-to-locate studies that may have larger effect sizes, comprehensive search strategies were employed [25, 41, 42]. This strategy permitted identification of eligible unpublished as well as published studies, which minimized inflation of overall effect sizes due to publication bias. To detect the presence of publication bias, funnel plots of effect sizes vs. sampling variance were visually examined [25]. Begg’s test using Kendall’s method was conducted to determine whether detected associations between effect size and variance were greater than might be expected due to chance [25].
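A rank-correlation test of this kind can be sketched as below. The sketch follows the usual Begg and Mazumdar formulation: effect sizes are standardized around the inverse-variance weighted mean and then correlated with their sampling variances using Kendall's tau. It assumes at least two studies, no tied values, and no continuity correction; the function name `begg_test` is hypothetical, and the report does not describe these implementation details.

```python
import math


def begg_test(effects, variances):
    """Return (Kendall's tau, z statistic, two-sided p value)."""
    k = len(effects)
    w = [1 / v for v in variances]
    sw = sum(w)
    mean = sum(wi * di for wi, di in zip(w, effects)) / sw
    # Standardized deviates: each effect minus the weighted mean, divided by
    # the standard deviation of that difference.
    deviates = [
        (d - mean) / math.sqrt(v - 1 / sw) for d, v in zip(effects, variances)
    ]
    # Concordant minus discordant pairs between deviates and variances.
    s = 0
    for i in range(k):
        for j in range(i + 1, k):
            product = (deviates[i] - deviates[j]) * (variances[i] - variances[j])
            if product > 0:
                s += 1
            elif product < 0:
                s -= 1
    tau = s / (k * (k - 1) / 2)
    # Normal approximation for the null distribution of s (no ties assumed).
    z = s / math.sqrt(k * (k - 1) * (2 * k + 5) / 18)
    p = math.erfc(abs(z) / math.sqrt(2))
    return tau, z, p
```

A positive and significant tau indicates that less precise studies tend to report larger effects, which is the funnel plot asymmetry pattern associated with publication bias.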

To assess risk of bias due to study quality, common indicators of methodological strength (random assignment, allocation concealment, data collector masking, use of attention controls, intention-to-treat analysis, low attrition) were examined via moderator analyses as a form of sensitivity analyses [21]. For all analyses, effect sizes were weighted so more precise estimates from larger sample sizes exerted proportionately more influence on findings [24], but they were not weighted by overall quality scores because existing quality instruments lack validity [43–45]. Effect sizes of control group baseline vs. outcome comparisons were also analyzed to determine whether subjects’ mere participation in a study could have biased the estimated overall treatment effect.

Results

Comprehensive searching located 101 eligible primary study reports [46–146]. Twenty-five additional papers were located that reported on the same studies; these were used as companion papers to enhance coded data. Five reports in Spanish were included. Eighty-eight reports were published articles, ten were dissertations, two were conference presentations, and one was an unpublished report. Seventy papers were published in 2000 or more recently; only 20 reports were published prior to 1990. The earliest article was published in 1973. Thirty-one reports did not report funding for studies.

The primary study reports provided information for 112 treatment vs. control comparisons, 48 treatment pre- vs. post-intervention comparisons, and 32 control baseline vs. outcome comparisons (k indicates the number of comparisons). Some reports had more than one treatment group; eight reports included two comparisons and six reports had three comparisons. All subsequent results are based on comparisons.

Table 1 provides descriptive characteristics of the included treatment vs. control comparisons. Median treatment and control group sample sizes were 56 and 51 participants, respectively. Median attrition rates were 8.1 %. Women were well represented in samples. Only k = 9 comparisons reported the number of prescribed medications taken by participants; for these comparisons, the median number of prescribed medications was 5.1.

Overall Effects of Interventions on Adherence Outcomes

Overall effect sizes for treatment vs. control and single-group pre-post comparisons are presented in Table 2. Effect sizes could be calculated for 112 treatment vs. control comparisons involving 34,272 subjects, but four comparisons were excluded as outliers from the pooled analysis. The estimated overall standardized mean difference effect size for the remaining 108 comparisons was d = 0.300. With the outliers included, the effect size was 0.421 (confidence interval (CI) 0.322, 0.520; Q = 1,429.559). Exclusion of the two largest studies involving more than 2,000 participants resulted in an overall mean effect size of 0.341 (CI 0.257, 0.425; Q = 673.440).

We calculated effect sizes for 47 treatment group pre-post comparisons involving 5,703 participants and for 32 control pre-post comparisons involving 4,603 participants. Three outliers each were excluded from the pooled analysis of the treatment pre-post comparison and control group pre-post comparisons. For treatment outcome vs. baseline comparisons, the overall mean effect size was 0.378, and for control group pre-post comparisons, the overall effect size was 0.096.

Analysis of Q statistics showed significant heterogeneity across studies for all three types of comparisons. The proportion of variation due to between-studies heterogeneity ranged from 78 to 87 %.

Report and Sample Characteristic Moderator Analyses

Moderator analysis was conducted to determine whether effect sizes were linked to study attributes or participant demographics. With respect to study attributes, those studies conducted more recently reported larger effect sizes than older studies (p < 0.001). Effect sizes were larger for unfunded studies (d = 0.454, k = 30) than funded studies (d = 0.253, k = 78), but the difference was not statistically significant.

Although effect sizes were not linked to study attributes, they were affected by participant demographics. Larger effect sizes were associated with higher mean participant age (p = 0.009, k = 85) and with higher proportions of women in the sample (p = 0.001, k = 93). There was no association between effect size and the proportion of participants in the sample belonging to underrepresented ethnic/racial groups (p = 0.974, k = 44). Effect sizes were lower for studies reporting inclusion of low-income participants (d = 0.133, k = 18) than studies not reporting low-income participants (d = 0.327, k = 90); this difference was statistically significant (Q_b = 7.294, p = 0.007).

The difference in effect sizes for studies of subjects recruited specifically because they had adherence problems (d = 0.479, k = 13) and studies that did not target such subjects (d = 0.282, k = 95) was not statistically significant (Q_b = 2.059, p = 0.151). The number of medications patients were prescribed was not analyzed as a moderator because only nine studies provided this information.

Moderator Analyses of Intervention Delivery Attributes

Moderator analyses were conducted on specific intervention delivery attributes reported by at least seven primary studies. Results of the analysis are shown in Table 3. Similar effect sizes were found regardless of whether interventions targeted adherence exclusively (d = 0.318) or addressed additional health behaviors (d = 0.292). Interventions delivered to health care providers teaching them how to elicit adherence behavior change in their patients were significantly less effective (d = 0.107) than interventions delivered directly to patients themselves (d = 0.316). Effects of interventions were similar whether delivered by physicians (d = 0.356) or pharmacists (d = 0.369), but neither was significantly more effective than interventions delivered by other personnel. Interventions delivered in ambulatory care facilities were similar in effect to interventions delivered in other settings (0.272 vs. 0.282). Although interventions delivered in pharmacies were somewhat more effective than interventions delivered in other settings (0.432 vs. 0.290), this difference did not achieve statistical significance. Interventions delivered face-to-face were not significantly more effective than mediated interventions (0.335 vs. 0.284).

Moderator analysis of theory-based interventions relative to studies not based in theory showed no difference in overall effect size (0.335 vs. 0.284). Effect size was 0.560 for the health promotion model (k = 3), 0.366 for social cognitive theory (k = 7), 0.152 for the health belief model (k = 3), and 0.118 for the transtheoretical model (k = 6). Given the small number of samples contributing to each of the pooled effect sizes, these findings should be regarded with caution.

Intervention dose was poorly reported. Only 29 comparisons reported a mean duration of intervention sessions; the median of those means was 20 min. A median of four intervention sessions was reported across 71 comparisons. The total duration of intervention sessions in minutes could be calculated from data reported in 26 studies, but regression analyses revealed no relationship between total minutes of intervention and effect size (p = 0.534). The median of the mean period of time over which interventions were delivered was 183 days. Interventions delivered over a longer time frame improved adherence more than those delivered over a shorter one (p < 0.001).

Intervention Content Moderator Analyses

Moderator analysis was conducted to determine whether effect size was influenced by specific types of intervention content. A pooled effect size for studies incorporating a particular intervention component was compared to the pooled effect size of studies lacking that component. Results of the moderator analyses are shown in Table 4.

Meta-regression showed that studies with more intervention components had larger effect sizes than studies with fewer components (p < 0.001). Among the 15 individual intervention components tested as moderators, two were found to have statistically significant influences on effect size. Studies that focused on increasing integration of a patient’s care across health care providers (d = 0.185) reported lower effect sizes than studies without integration (d = 0.344). Primary research that used adherence problem-solving strategies reported significantly smaller effect sizes (d = 0.152) than studies lacking this component (d = 0.334).

Self-monitoring of blood pressure is a common recommendation made by health care providers to patients with hypertension. Studies that incorporated blood pressure self-monitoring into interventions had slightly greater effect sizes than interventions lacking a self-monitoring component, but the difference was not significant (0.381 vs. 0.216, p = 0.160). Having patients self-monitor their medication taking also did not result in significantly greater effect sizes (0.381 vs. 0.280, p = 0.508). Studies in which patients received feedback about their blood pressure did not have significantly greater effect sizes than studies that did not incorporate blood pressure feedback (0.298 vs. 0.296, p = 0.974). Although effect sizes were larger for studies in which patients received feedback about their adherence compared to studies in which no adherence feedback was given (0.500 vs. 0.280), the difference was not statistically significant (p = 0.140).

Larger effect sizes were found for studies that linked patient habits with medication taking, employed motivational interviewing, or provided medication in special packaging or pill boxes, but the differences from studies lacking these components also failed to achieve statistical significance. The small number of studies available for analysis for some intervention components may have limited the statistical power of the tests to detect true differences, so findings should be regarded as exploratory.

Risk of Bias Sensitivity Analyses

Several risks of bias were identified in the studies included in the meta-analysis sample: lack of random assignment of subjects (k = 34), allocation not concealed (k = 90), data collectors not masked to group assignment (k = 79), and absence of intention-to-treat analysis (k = 85). Moderator analysis determined that adherence effect size was not linked to any of these risks (Table 5). Effect sizes were also not significantly associated with attrition rates (p = 0.201). Moderator analysis could not be conducted to assess for control bias because only two comparisons used attention control groups.

The method used to measure adherence is an important source of potential bias in this area of science. The largest effect sizes were reported among studies using electronic event monitoring of adherence (d = 0.621), followed by pharmacy refill data (d = 0.299) and pill counts (d = 0.299). The smallest effect was found among studies using self-report measures of adherence (d = 0.232).

The effect size for published studies was 0.310 compared to an effect size of 0.230 for unpublished reports. To further assess whether publication bias might be present, funnel plots of effect sizes vs. sampling variance were examined. Asymmetry in the distribution suggested publication bias, and this was confirmed by Begg’s test (p = 0.030). The funnel plot for treatment group pre-post comparisons also showed evidence for publication bias that was confirmed by Begg’s test (p = 0.002). No evidence of publication bias was detected in funnel plots of control group pre-post comparisons, and Begg’s test was not significant (p = 0.333).

Discussion

This project provides the first comprehensive review and meta-analysis of medication adherence intervention outcomes among adults with HTN. Multiple search strategies were employed to locate as large a sample of studies as possible. The overall mean effect size of 0.300, which was calculated across 108 treatment vs. control comparisons, documents that treatment subjects had significantly better medication adherence outcomes than control subjects. This value was comparable to effect sizes reported in meta-analyses of adherence interventions conducted in general populations (d = 0.18 to 0.37) [147, 148], among older adults (d = 0.33) [149], adults with coronary artery disease (d = 0.229) [150], adults with heart failure (d = 0.29) [151], patients with adherence problems (d = 0.301) [152], and in targeted populations of underrepresented racial/ethnic groups (d = 0.211) [153].

The 0.300 effect size is consistent with treatment subjects taking 4 % more of their prescribed daily doses than control subjects at outcome, a difference that may be clinically significant. Cardiovascular risk and outcomes are influenced by the extent of blood pressure control, which in turn is related to adherence to anti-hypertensive medications. Higher adherence rates to HTN medications are correlated with reduced risk for the development of congestive heart failure [154], cardiovascular disease [155], and acute cardiovascular events [156, 157]. Lowy and colleagues showed that an increase in adherence for prescribed HTN medication with similar pharmacokinetics improves the reduction in 10-year cardiovascular disease risk [155]. Thus, modest increases in medication adherence can help reduce cardiovascular disease risk through improved blood pressure control.

The modest effect sizes found in this report and previous meta-analyses demonstrate the difficulty in changing adherence behavior. Health care providers need to understand the challenges in changing adherence behavior and make this a priority in providing care. The finding that interventions delivered over more days were more effective than shorter interventions suggests that improved adherence behavior typically will not be achieved in a single visit or with a short-term intervention. Rather, health care providers will be more successful if they repeatedly address adherence across multiple clinic visits.

The drawback of interventions of longer duration is that they may require health policy changes to permit reimbursement for providers’ delivery of health behavior change intervention activities. However, the cost savings to the health care system in terms of prevented disease and reduced illness-related hospitalizations would offset the cost of reimbursing providers for helping patients to improve medication adherence [158, 159].

The moderator analyses of intervention characteristics provide promising directions for future work. The larger effect size for interventions having more components suggests providers need to use multiple strategies in their attempts to improve adherence. This increases the likelihood of an intervention component addressing the reasons for nonadherence for any given patient. Few interventions have attempted to tailor intervention approaches to patients’ reasons for nonadherence. Future intervention research will need to explore the potential benefits of tailored interventions, based on either patients’ type of nonadherence (e.g., intentional vs. unintentional; implementation vs. persistence) or their preferences for intervention delivery (e.g., face-to-face vs. technology-mediated).

The finding that mediated delivery of interventions was as effective as face-to-face delivery suggests mediated interventions such as text messaging could be tried. The results also suggest that interventions can target multiple health behaviors without adversely affecting adherence outcomes [150]. This is important information for hypertensive populations in which other health behaviors affecting cardiovascular health such as diet and exercise must also be addressed.

Interventions delivered directly to patients were more effective than those delivered to health care providers [151, 152]. Interventions to increase integration of care were less effective than interventions with other characteristics. Regarding specific content of interventions, exploratory findings suggest future attention to habit-based interventions, adherence feedback to patients, patients self-monitoring blood pressure, special packaging such as pill boxes, and motivational interviewing approaches [152, 160]. These moderator analyses indicated differences in effect sizes, but the differences did not reach statistical significance, likely due to too few studies using these approaches. In contrast, this meta-analysis found no support for specifically addressing adherence barriers or problem solving, medication administration calendars, decisional balance activities, and social support interventions.

The overall effect size reflects aggregate changes. Some individual patients likely experienced little or no adherence improvement while others ended studies with much higher adherence levels. The sample characteristic moderator analyses suggested interventions were more effective for older, female, and middle- or upper-income subjects. These findings suggest different interventions need to be developed for men, younger subjects, and adults with limited income [161].

Project limitations inherent to meta-analyses and specific to this topic must be considered. Some primary research may not have been retrieved despite comprehensive searching. Effect sizes were significantly heterogeneous, which was expected given the methodological variations among studies. Intervention content may have been incompletely coded due to lack of information provided in study reports. Inadequate description of interventions in some primary studies is a common problem in behavior sciences research reporting [11, 162, 163]. This project did not link adherence with blood pressure outcomes. The scarcity of information regarding the mean number of medications is a serious limitation in this area of science because adherence may be related to the numbers of medications taken or medication regimen complexity [149].

The primary intervention studies located for this meta-analysis focused exclusively on implementation adherence. Given evidence that persistence may be more important than implementation adherence for blood pressure control [164], future research should include persistence measures and consider separate reporting of implementation adherence and persistence outcomes.

Disparities in treatment and control of hypertension are related to the often intertwining influences of socioeconomic status, educational level (which may also be, at times, a proxy for health literacy), and race/ethnicity. Improved reporting in primary studies of the socioeconomic and racial/ethnic attributes of participants would permit more precise estimation of these factors in meta-analysis. This meta-analysis found income-associated differences in intervention effectiveness, but moderator analysis of the effect of educational level on adherence was not conducted because too few studies reported on the educational demographics of participants. Future comparative effectiveness research to identify interventions most suitable for individuals within specific socioeconomic and racial/ethnic groups would allow for improved blood pressure control across a more diverse spectrum of patient populations.

Potential sources of bias in primary studies diminish confidence in study findings [21]. More than half the studies in this meta-analysis failed to report the use of allocation concealment, data collector masking, or intention-to-treat analysis. Although moderator analysis found no linkage to effect size, the results should still be interpreted within the context of these methodological limitations. Publication bias was detected in this meta-analysis, suggesting calculated overall effect sizes overestimate true effects. Location of more unpublished studies might provide a truer estimate of overall effects of interventions on medication adherence.

Despite documented concerns about the validity of self-report measures in assessing adherence [2•, 11], 43 % of the primary studies relied on self-report to measure adherence. The smallest effect sizes were among studies with self-report adherence measures (d = 0.232), while the largest effects were among studies with electronic event monitoring adherence measures (d = 0.621). The low cost and ease of collecting self-report adherence data may be less important than collecting adherence data with adequate sensitivity to detect intervention effects. Improving methodological rigor in future research will enhance the quality of evidence provided by trials. New measures should be developed that combine the low cost of self-report with the greater sensitivity of electronic monitoring, both to improve the validity of clinical trial data and to support the potential use of adherence assessment in clinical practice as part of adherence-enhancing intervention efforts.
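One way to read the gap between d = 0.232 and d = 0.621 is to convert each standardized mean difference into a probability of superiority: the chance that a randomly chosen treatment subject has a better adherence score than a randomly chosen control subject. The sketch below assumes normally distributed outcomes with equal variances in both groups, an assumption made here for illustration and not a step reported in this review.

```python
from math import erf

def probability_of_superiority(d):
    """P(random treated score > random control score) = Phi(d / sqrt(2)).

    Assumes normal outcomes with equal variances in both groups.
    """
    # Phi(x) = 0.5 * (1 + erf(x / sqrt(2))), so Phi(d / sqrt(2)) = 0.5 * (1 + erf(d / 2))
    return 0.5 * (1.0 + erf(d / 2.0))

# Effect sizes reported above for the two adherence measurement approaches
for label, d in [("self-report", 0.232), ("electronic event monitoring", 0.621)]:
    p = probability_of_superiority(d)
    print(f"{label}: d = {d:.3f}, probability of superiority = {p:.3f}")
```

Under these assumptions the two effect sizes correspond to probabilities of roughly 0.57 and 0.67, against 0.50 for no effect, which illustrates how much of an intervention effect a less sensitive measure may fail to register.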

Conclusion

In summary, this comprehensive meta-analysis of interventions documented significant but modest post-intervention improvements in medication adherence among hypertensive patients. These improvements were comparable to findings of previous meta-analyses that examined effects of interventions on medication adherence in diverse chronic illness populations. Interventions to improve anti-hypertensive medication adherence were most effective among female, older, and moderate- or high-income participants. Clinicians should consider interventions that incorporate multiple different components and are delivered over many days. Promising intervention components include linking adherence behavior with daily habits, providing adherence feedback to patients, self-monitoring blood pressure, special packaging of medications, and motivational interviewing. Future research designs should strive to incorporate fewer threats of bias, especially avoiding self-report adherence measures.

References

Papers of particular interest, published recently, have been highlighted as: • Of importance

CDC. High blood pressure fact sheet. In: National Center for Chronic Disease Prevention and Health Promotion DfHDaSP, editor.: Centers for Disease Control and Prevention. 2015.

Burnier M. Managing ‘resistance’: is adherence a target for treatment? Curr Opin Nephrol Hypertens. 2014;23:439–43. This paper documents the importance of inadequate medication adherence in resistant hypertension.

Christensen A, Osterberg LG, Hansen EH. Electronic monitoring of patient adherence to oral antihypertensive medical treatment: a systematic review. J Hypertens. 2009;27:1540–51.

De Geest S, Ruppar T, Berben L, Schonfeld S, Hill MN. Medication non-adherence as a critical factor in the management of presumed resistant hypertension: a narrative review. EuroIntervention. 2014;9:1102–9. This article explains the role of nonadherence to medications in resistant hypertension.

Erdine S, Arslan E. Monitoring treatment adherence in hypertension. Curr Hypertens Rep. 2013;15:269–72.

Simpson SH, Eurich DT, Majumdar SR, Padwal RS, Tsuyuki RT, Varney J, et al. A meta-analysis of the association between adherence to drug therapy and mortality. BMJ. 2006;333:15.

Wofford MR, Minor DS. Hypertension: issues in control and resistance. Curr Hypertens Rep. 2009;11:323–8.

Vrijens B, Vincze G, Kristanto P, Urquhart J, Burnier M. Adherence to prescribed antihypertensive drug treatments: longitudinal study of electronically compiled dosing histories. BMJ. 2008;336:1114–7.

Jung O, Gechter JL, Wunder C, Paulke A, Bartel C, Geiger H, et al. Resistant hypertension? Assessment of adherence by toxicological urine analysis. J Hypertens. 2013;31:766–74.

Dragomir A, Cote R, Roy L, Blais L, Lalonde L, Berard A, et al. Impact of adherence to antihypertensive agents on clinical outcomes and hospitalization costs. Med Care. 2010;48:418–25. This study linked hypertensive medication adherence with vascular events, hospitalizations, and costs of healthcare.

Gwadry-Sridhar FH, Manias E, Lal L, Salas M, Hughes DA, Ratzki-Leewing A, et al. Impact of interventions on medication adherence and blood pressure control in patients with essential hypertension: a systematic review by the ISPOR medication adherence and persistence special interest group. Value Health. 2013;16:863–71.

Bramlage P, Hasford J. Blood pressure reduction, persistence and costs in the evaluation of antihypertensive drug treatment—a review. Cardiovasc Diabetol. 2009;8:18.

Lewis LM. Factors associated with medication adherence in hypertensive blacks: a review of the literature. J Cardiovasc Nurs. 2012;27:208–19.

Lewis LM, Ogedegbe C, Ogedegbe G. Enhancing adherence of antihypertensive regimens in hypertensive African-Americans: current and future prospects. Expert Rev Cardiovasc Ther. 2012;10:1375–80.

Matthes J, Albus C. Improving adherence with medication: a selective literature review based on the example of hypertension treatment. Deutsches Arzteblatt Int. 2014;111:41–7.

Takiya LN, Peterson AM, Finley RS. Meta-analysis of interventions for medication adherence to antihypertensives. Ann Pharmacother. 2004;38:1617–24.

Glynn LG, Murphy AW, Smith SM, Schroeder K, Fahey T. Interventions used to improve control of blood pressure in patients with hypertension. Cochrane Database Syst Rev. 2010;3, CD005182. doi:10.1002/14651858.CD005182.pub4.

Jayasinghe J. Non-adherence in the hypertensive patient: can nursing play a role in assessing and improving compliance? Can J Cardiovasc Nurs. 2009;19:7–12.

Morgado MP, Morgado SR, Mendes LC, Pereira LJ, Castelo-Branco M. Pharmacist interventions to enhance blood pressure control and adherence to antihypertensive therapy: review and meta-analysis. Am J Health Syst Pharm. 2011;68:241–53.

Cooper H, Hedges LV, Valentine JC, editors. The handbook of research synthesis and meta-analysis. 2nd ed. New York: Russell Sage; 2009.

Liberati A, Altman DG, Tetzlaff J, Mulrow C, Gotzsche PC, Ioannidis JP, et al. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: explanation and elaboration. J Clin Epidemiol. 2009;62:e1–34.

World Health Organization. Adherence to long-term therapies: evidence for action. Geneva: World Health Organization; 2003.

Vrijens B, De Geest S, Hughes DA, Przemyslaw K, Demonceau J, Ruppar T, et al. A new taxonomy for describing and defining adherence to medications. Br J Clin Pharmacol. 2012;73:691–705.

Borenstein M, Hedges L, Higgins JPT, Rothstein H. Introduction to meta-analysis. West Sussex: Wiley; 2009.

Sutton AJ. Publication bias. In: Cooper H, Hedges L, Valentine J, editors. The handbook of research synthesis and meta-analysis. 2nd ed. New York: Russell Sage; 2009. p. 435–52.

Rothstein HR, Hopewell S. Grey literature. In: Cooper H, Hedges L, Valentine J, editors. The handbook of research synthesis and meta-analysis. 2nd ed. New York: Russell Sage; 2009. p. 103–25.

White H. Scientific communication and literature retrieval. In: Cooper H, Hedges L, Valentine J, editors. The handbook of research synthesis and meta-analysis. 2nd ed. New York: Russell Sage; 2009. p. 51–71.

Dickersin K, Scherer R, Lefebvre C. Identifying relevant studies for systematic reviews. BMJ. 1994;309:1286–91.

Stern JM, Simes RJ. Publication bias: evidence of delayed publication in a cohort study of clinical research projects. BMJ. 1997;315:640–5.

Easterbrook PJ. Directory of registries of clinical trials. Stat Med. 1992;11:363–423.

Langham J, Thompson E, Rowen K. Identification of randomized controlled trials from the emergency medicine literature: comparison of hand searching versus MEDLINE searching. Ann Emerg Med. 1999;34:25–34.

Wood JA. Methodology for dealing with duplicate study effects in a meta-analysis. Orgn Res Methods. 2008;11:79–95.

Devine E. Issues and challenges in coding interventions for meta-analysis of prevention research. Meta-analysis of drug abuse prevention programs. Rockville: National Institute on Drug Abuse; 1997.

Orwin R, Vevea J. Evaluating coding decisions. In: Cooper H, Hedges L, Valentine J, editors. The handbook of research synthesis and meta-analysis. 2nd ed. New York: Russell Sage; 2009. p. 177–203.

Lipsey M, Wilson D. Practical meta-analysis. Thousand Oaks: Sage; 2001.

Raudenbush S. Random effects models. In: Cooper H, Hedges L, Valentine J, editors. The handbook of research synthesis and meta-analysis. 2nd ed. New York: Russell Sage; 2009. p. 295–315.

Hedges L, Vevea J. Fixed- and random-effects models in meta-analysis. Psychol Methods. 1998;3:486–504.

Hedges L, Olkin I. Statistical methods for meta-analysis. Orlando: Academic; 1985.

Conn VS, Hafdahl AR, Mehr DR, LeMaster JW, Brown SA, Nielsen PJ. Metabolic effects of interventions to increase exercise in adults with type 2 diabetes. Diabetologia. 2007;50:913–21.

Shadish W, Haddock C. Combining estimates of effect size. In: Cooper H, Hedges L, Valentine J, editors. The handbook of research synthesis and meta-analysis. 2nd ed. New York: Russell Sage; 2009. p. 257–77.

Nony P, Cucherat M, Haugh MC, Boissel JP. Critical reading of the meta-analysis of clinical trials. Therapie. 1995;50:339–51.

Helmer D, Savoie I, Green C, Kazanjian A. Evidence-based practice: extending the search to find material for the systematic review. Bull Med Libr Assoc. 2001;89:346–52.

Conn VS, Rantz MJ. Research methods: managing primary study quality in meta-analyses. Res Nurs Health. 2003;26:322–33.

de Vet HC, de Bie RA, van der Heijden GJ, Verhagen AP, Sijpkes P, Kipschild P. Systematic review on the basis of methodological criteria. Physiotherapy. 1997;1997:284–9.

Valentine J. Judging the quality of primary research. In: Cooper H, Hedges L, Valentine J, editors. The handbook of research synthesis and meta-analysis. 2nd ed. New York: Russell Sage; 2009. p. 129–46.

Adeyemo A, Tayo BO, Luke A, Ogedegbe O, Durazo-Arvizu R, Cooper RS. The Nigerian antihypertensive adherence trial: a community-based randomized trial. J Hypertens. 2013;31:201–7.

Aguwa CN, Ukwe CV, Ekwunife OI. Effect of pharmaceutical care programme on blood pressure and quality of life in a Nigerian pharmacy. Pharm World Sci. 2008;30:107–10.

Al Owaish RA. Compliance with, and effectiveness of, specialized care of hypertension in Kuwait [dissertation]. Chapel Hill, NC: University of North Carolina; 1985.

Alhalaiqa F, Deane KHO, Nawafleh AH, Clark A, Gray R. Adherence therapy for medication non-compliant patients with hypertension: a randomised controlled trial. J Hum Hypertens. 2012;26:117–26.

Amado Guirado E, Pujol Ribera E, Pacheco Huergo V, Borras JM. Knowledge and adherence to antihypertensive therapy in primary care: results of a randomized trial. Gac Sanit. 2011;25:62–7.

Austin DL. Selected nursing interventions for noncompliant hypertensive patients [dissertation]. Denton, TX: Texas Woman’s University; 1986.

Avanzini F, Corsetti A, Maglione T, Alli C, Colombo F, Torri V, et al. Simple, shared guidelines raise the quality of antihypertensive treatment in routine care. Am Heart J. 2002;144:726–32.

Becker LA, Glanz K, Sobel E, Mossey J, Zinn SL, Knott KA. A randomized trial of special packaging of antihypertensive medications. J Fam Pract. 1986;22:357–61.

Beeson SA. The effect of teaching by the nurse on patient knowledge of medications and compliant behavior [master’s thesis]. Greensboro, NC: University of North Carolina; 1977.

Beune EJ, van Charante EP M, Beem L, Mohrs J, Agyemang CO, Ogedegbe G, et al. Culturally adapted hypertension education (CAHE) to improve blood pressure control and treatment adherence in patients of African origin with uncontrolled hypertension: cluster-randomized trial. PLoS One. 2014;9:e90103.

Blenkinsopp A, Phelan M, Bourne J, Dakhil N. Extended adherence support by community pharmacists for patients with hypertension: a randomised controlled trial. Int J Pharm Pract. 2000;8:165–75.

Bogner H, de Vries H. Integration of depression and hypertension treatment: a pilot, randomized controlled trial. Ann Fam Med. 2008;6:295–301.

Boissel JP, Meillard O, Perrin-Fayolle E, Ducruet T, Alamercery Y, Sassano P, et al. Comparison between a bid and a tid regimen: improved compliance with no improved antihypertensive effect. Eur J Clin Pharmacol. 1996;50:63–7.

Bosworth HB, Olsen MK, Neary A, Orr M, Grubber J, Svetkey L, et al. Take Control of Your Blood Pressure (TCYB) study: a multifactorial tailored behavioral and educational intervention for achieving blood pressure control. Patient Educ Couns. 2008;70:338–47.

Burrelle TN. Evaluation of an interdisciplinary compliance service for elderly hypertensives. J Geriatr Drug Ther. 1986;1:23–51.

Carter BL, Doucette WR, Franciscus CL, Ardery G, Kluesner KM, Chrischilles EA. Deterioration of blood pressure control after discontinuation of a physician-pharmacist collaborative intervention. Pharmacotherapy. 2010;30:228–35.

Cooper LA, Roter DL, Carson KA, Bone LR, Larson SM, Miller 3rd ER, et al. A randomized trial to improve patient-centered care and hypertension control in underserved primary care patients. J Gen Intern Med. 2011;26:1297–304.

Erickson SR, Ascione FJ, Kirking DM. Utility of electronic device for medication management in patients with hypertension: a pilot study. J Am Pharm Assoc (2003). 2005;45:88–91.

Evans CE, Haynes RB, Birkett NJ, Gilbert JR, Taylor DW, Sackett DL, et al. Does a mailed continuing education program improve physician performance? Results of a randomized trial in antihypertensive care. JAMA. 1986;255:501–4.

Fernandez S, Scales KL, Pineiro JM, Schoenthaler AM, Ogedegbe G. A senior center-based pilot trial of the effect of lifestyle intervention on blood pressure in minority elderly people with hypertension. J Am Geriatr Soc. 2008;56:1860–6.

Fletcher SW, Appel FA, Bourgeois MA. Management of hypertension. Effect of improving patient compliance for follow-up care. J Am Med Assoc. 1975;233:242–4.

Friedberg JP, Rodriguez MA, Watsula ME, Lin I, Wylie-Rosett J, Allegrante JP, et al. Effectiveness of a tailored behavioral intervention to improve hypertension control: primary outcomes of a randomized controlled trial. Hypertension. 2015;65:440–6.

Gomez-Marcos M, Garcia-Ortiz L, Gonzalez-Elena LJ, Ramos-Delgado E, Gonzalez-Garcia AM, Parra-Sanchez J. Effectiveness of a quality improvement intervention in blood pressure control in primary care. Rev Clin Esp. 2006;206:428–34.

Gonzalez-Fernandez RA, Rivera M, Torres D, Quiles J, Jackson A. Usefulness of a systemic hypertension in-hospital educational program. Am J Cardiol. 1990;65:1384–6.

Hacihasanoğlu R, Gözüm S. The effect of patient education and home monitoring on medication compliance, hypertension management, healthy lifestyle behaviours and BMI in a primary health care setting. J Clin Nurs. 2011;20:692–705.

Harowski KJ. The effect of problem solving training on compliance to a medical regimen for hypertension [dissertation]. Missoula, MT: University of Montana; 1983.

Harper DC. Application of Orem’s theoretical constructs to self-care medication behaviors in the elderly. Adv Nurs Sci. 1984;6:29–46.

Hawkins DW, Fiedler FP, Douglas HL, Eschbach RC. Evaluation of a clinical pharmacist in caring for hypertensive and diabetic patients. Am J Hosp Pharm. 1979;36:1321–5.

Haynes RB, Sackett DL, Gibson ES, Taylor DW, Hackett BC, Roberts RS, et al. Improvement of medication compliance in uncontrolled hypertension. Lancet. 1976;1:1265–8.

Hess PL, Reingold JS, Jones J, Fellman MA, Knowles P, Ravenell JE, et al. Barbershops as hypertension detection, referral, and follow-up centers for black men. Hypertension. 2007;49:1040–6.

Hunt JS, Siemienczuk J, Pape G, Rozenfeld Y, MacKay J, LeBlanc BH, et al. A randomized controlled trial of team-based care: impact of physician-pharmacist collaboration on uncontrolled hypertension. J Gen Intern Med. 2008;23:1966–72.

Inui TS, Yourtee EL, Williamson JW. Improved outcomes in hypertension after physician tutorials. A controlled trial. Ann Intern Med. 1976;84:646–51.

Jafar TH, Hatcher J, Poulter N, Islam M, Hashmi S, Qadri Z, et al. Community-based interventions to promote blood pressure control in a developing country: a cluster randomized trial. Ann Intern Med. 2009;151:593–601.

Johnson AL, Taylor DW, Sackett DL, Dunnett CW, Shimizu AG. Self-recording of blood pressure in the management of hypertension. Can Med Assoc J. 1978;119:1034–9.

Kauric-Klein Z. Improving blood pressure control in end stage renal disease through a supportive educative nursing intervention. Nephrol Nurs J. 2012;39:217–28.

Kim KB, Han HR, Huh B, Nguyen T, Lee H, Kim MT. The effect of a community-based self-help multimodal behavioral intervention in Korean American seniors with high blood pressure. Am J Hypertens. 2014;27:1199–208.

Kim MT, Kim E, Han H, Jeong S, Lee JE, Park HJ, et al. Mail education is as effective as in-class education in hypertensive Korean patients. J Clin Hypertens. 2008;10:176–84.

Kobalava Z, Villevalde S, Isikova KH, Pavlova E. Impact of integrated approach on blood pressure control in non adherent motivated. 20th European Meeting on Hypertension; Oslo, Norway. pp. e329. Accessed.

Leung LB, Busch AM, Nottage SL, Arellano N, Glieberman E, Busch NJ, et al. Approach to antihypertensive adherence: a feasibility study on the use of student health coaches for uninsured hypertensive adults. Behav Med. 2012;38:19–27.

Levine DM, Green LW, Deeds SG, Chwalow J, Russell RP, Finlay J. Health education for hypertensive patients. J Am Med Assoc. 1979;241:1700.

Logan AG, Milne BJ, Achber C. A comparison of community and occupationally provided antihypertensive care. J Occup Environ Med. 1982;24:901–6.

Ma C, Zhou Y, Zhou W, Huang C. Evaluation of the effect of motivational interviewing counselling on hypertension care. Patient Educ Couns. 2014;95:231–7.

Magadza C, Radloff SE, Srinivas SC. The effect of an educational intervention on patients’ knowledge about hypertension, beliefs about medicines, and adherence. Res Social Adm Pharm. 2009;5:363–75.

Marquez Contreras E, Casado Martinez JJ, Celotti Gomez B, Gascon Vivo J, Martin de Pablos JL, Gil Rodriguez R, et al. Treatment compliance in arterial hypertension. A 2-year intervention trial through health education. Aten Primaria. 2000;26:5–10.

Marquez Contreras E, de la Figuera von Wichmann M, Gil Guillen V, Ylla-Catala A, Figueras M, Balana M, et al. Effectiveness of an intervention to provide information to patients with hypertension as short text messages and reminders sent to their mobile phone (HTA-Alert). Aten Primaria. 2004;34:399–405.

Marquez Contreras E, Martel Claros N, Gil Guillen V, Martin De Pablos JL, De la Figuera Von Wichman M, Casado Martinez JJ, et al. Non-pharmacological intervention as a strategy to improve antihypertensive treatment compliance. Aten Primaria. 2009;41:501–10.

Marquez Contreras E, Vegazo Garca O, Claros NM, Gil Guillen V, de la Figuera von Wichmann M, Casado Martinez J, et al. Efficacy of telephone and mail intervention in patient compliance with antihypertensive drugs in hypertension. ETECUM-HTA study. Blood Press. 2005;14:151–8.

Márquez-Contreras E, Martell-Claros N, Gil-Guillén V, Wichmann MF-V, Casado-Martínez JJ, Martin-de Pablos JL, et al. Efficacy of a home blood pressure monitoring programme on therapeutic compliance in hypertension: the EAPACUM-HTA study. J Hypertens. 2006;24:169–75.

Martin MY, Kim YI, Kratt P, Litaker MS, Kohler CL, Schoenberger YM, et al. Medication adherence among rural, low-income hypertensive adults: a randomized trial of a multimedia community-based intervention. Am J Health Promot. 2011;25:372–8.

McGillicuddy JW, Gregoski MJ, Weiland AK, Rock RA, Brunner-Jackson BM, Patel SK, et al. Mobile health medication adherence and blood pressure control in renal transplant recipients: a proof-of-concept randomized controlled trial. JMIR Res Protoc. 2013;2, e32. doi:10.2196/resprot.2633.

McKenney JM, Brown ED, Necsary R, Reavis HL. Effect of pharmacist drug monitoring and patient education on hypertensive patients. Contemp Pharm Pract. 1978;1:50–6.

McKenney JM, Slining JM, Henderson HR, Devins D, Barr M. The effect of clinical pharmacy services on patients with essential hypertension. Circulation. 1973;48:1104–11.

McKinstry B, Hanley J, Wild S, Pagliari C, Paterson M, Lewis S, et al. Telemonitoring based service redesign for the management of uncontrolled hypertension: multicentre randomised controlled trial. BMJ. 2013;346:f3030. doi:10.1136/bmj.f3030.

Mehos BM, Saseen JJ, MacLaughlin EJ. Effect of pharmacist intervention and initiation of home blood pressure monitoring in patients with uncontrolled hypertension. Pharmacotherapy. 2000;20:1384–9.

Migneault J, Dedier J, Wright J, Heeren T, Campbell M, Morisky D, et al. A culturally adapted telecommunication system to improve physical activity, diet quality, and medication adherence among hypertensive African–Americans: a randomized controlled trial. Ann Behav Med. 2012;1–12.

Mitchell ML. Effects of a self-efficacy intervention on adherence to antihypertensive regimens [dissertation]. Rochester, NY: University of Rochester; 1993.

Moore JM, Shartle D, Faudskar L, Matlin OS, Brennan TA. Impact of a patient-centered pharmacy program and intervention in a high-risk group. J Manag Care Pharm. 2013;19:228–36.

Morgado M, Rolo S, Castelo-Branco M. Pharmacist intervention program to enhance hypertension control: a randomised controlled trial. Int J Clin Pharm. 2011;33:132–40.

Muhlhauser I, Sawicki P, Didjurgeit U, Jorgens V, Berger M. Uncontrolled hypertension in type 1 diabetes: assessment of patients’ desires about treatment and improvement of blood pressure control by a structured treatment and teaching programme. Diabet Med. 1988;5:693–8.

Murray MD, Harris LE, Overhage JM, Zhou X, Eckert GJ, Smith FE, et al. Failure of computerized treatment suggestions to improve health outcomes of outpatients with uncomplicated hypertension: results of a randomized controlled trial. Pharmacotherapy. 2004;24:324–37.

Ogedegbe G, Chaplin W, Schoenthaler A, Statman D, Berger D, Richardson T, et al. A practice-based trial of motivational interviewing and adherence in hypertensive African Americans. Am J Hypertens. 2008;21:1137–43.

Ogedegbe G, Tobin JN, Fernandez S, Cassells A, Diaz-Gloster M, Khalida C, et al. Counseling African Americans to control hypertension: cluster-randomized clinical trial main effects. Circulation. 2014;129:2044–51.

Oliver S, Jones J, Leonard D, Crabbe A, Delkhah Y, Nesbitt S. Improving adherence with amlodipine/atorvastatin therapy: IMPACT study. J Clin Hypertens. 2011;13:598–604. doi:10.1111/j.1751-7176.2011.00478.x.

Oparah AC, Adje DUO, Enato EF. Outcomes of pharmaceutical care intervention to hypertensive patients in a Nigerian community pharmacy. Int J Pharm Pract. 2006;14:115–22.

Oser M. Evaluation of a bibliotherapy intervention for improving patients’ adherence to antihypertensive medications [dissertation]. Reno, NV: University of Nevada; 2008.

Park JJ, Kelly P, Carter BL, Burgess PP. Comprehensive pharmaceutical care in the chain setting. J Am Pharm Assoc. 1996;NS36:443–51.

Park YH, Chang H, Kim J, Kwak JS. Patient‐tailored self‐management intervention for older adults with hypertension in a nursing home. J Clin Nurs. 2013;22:710–22.

Patel S, Jacobus-Kantor L, Marshall L, Ritchie C, Kaplinski M, Khurana PS, et al. Mobilizing your medications: an automated medication reminder application for mobile phones and hypertension medication adherence in a high-risk urban population. J Diabetes Sci Technol. 2013;7:630–9.

Pertusa Martínez S, Quirce F, Saavedra MD, Merino J. Evaluation of 3 strategies to improve therapeutic compliance of patients with essential hypertension. Aten Primaria. 1998;22:670–1.

Pierce JP, Watson DS, Knights S, Gliddon T, Williams S, Watson R. A controlled trial of health education in the physician’s office. Prev Med. 1984;13:185–94.

Pippalla RS. An impact assessment of pharmacist counseling on pharmaceutical care of hypertensives: interrelationships of compliance, quality of life, and therapeutic outcomes, with some policy perspectives [dissertation]. Morgantown, WV: University of West Virginia; 1994.

Pladevall M, Brotons C, Gabriel R, Arnau A, Suarez C, Marquez E, et al. Multicenter cluster-randomized trial of a multifactorial intervention to improve antihypertensive medication adherence and blood pressure control among patients at high cardiovascular risk (the COM99 study). Circulation. 2010;122:1183–91.

Planas L, Crosby KM, Mitchell KD, Farmer KC. Evaluation of a hypertension medication therapy management program in patients with diabetes. J Am Pharm Assoc (2003). 2009;49:164–70.

Powell KW. Strategy to improve blood pressure control and medication adherence [dissertation]. Chicago, IL: Rush University; 2002.

Resnick B, Shaughnessy M, Galik E, Scheve A, Fitten R, Morrison T, et al. Pilot testing of the PRAISEDD intervention among African-American and low-income older adults. J Cardiovasc Nurs. 2009;24:352–61.

Rinfret S, Lussier M, Peirce A, Duhamel F, Cossette S, Lalonde L, et al. The impact of a multidisciplinary information technology-supported program on blood pressure control in primary care. Circulation. 2009;2:170–7.

Robinson JD, Segal R, Lopez LM, Doty RE. Impact of a pharmaceutical care intervention on blood pressure control in a chain pharmacy practice. Ann Pharmacother. 2010;44:88–96.

Roca-Cusachs A, Sort D, Altimira J, Bonet R, Guilera E, Monmany J, et al. The impact of a patient education programme in the control of hypertension. J Hum Hypertens. 1991;5:437–41.

Rodriguez MA. Is behavior change sustainable for diet, exercise, and medication adherence? [dissertation]. New York, NY: Yeshiva University; 2011.

Roumie CL, Elasy TA, Greevy R, Griffin MR, Liu X, Stone WJ, et al. Improving blood pressure control through provider education, provider alerts, and patient education: a cluster randomized trial. Ann Intern Med. 2006;145:165–75.

Ruppar TM. Randomized pilot study of a behavioral feedback intervention to improve medication adherence in older adults with hypertension. J Cardiovasc Nurs. 2010;25:470–9.

Saleem F, Hassali MA, Shafie AA, Ul Haq N, Farooqui M, Aljadhay H, et al. Pharmacist intervention in improving hypertension-related knowledge, treatment medication adherence and health-related quality of life: a non-clinical randomized controlled trial. Health Expect. 2013. doi:10.1111/hex.12101.

Santschi V, Rodondi N, Bugnon O, Burnier M. Impact of electronic monitoring of drug adherence on blood pressure control in primary care: a cluster 12-month randomised controlled study. Eur J Intern Med. 2008;19:427–34.

Saunders LD, Irwig LM, Gear JS, Ramushu DL. A randomized controlled trial of compliance improving strategies in Soweto hypertensives. Med Care. 1991;29:669–78.

Schroeder K, Fahey T, Hollinghurst S, Peters TJ. Nurse-led adherence support in hypertension: a randomized controlled trial. Fam Pract. 2005;22:144–51.

Sicras-Mainar A, Ibanez J, Firas X, Bulto C, Costa A, Majos N et al. Evaluation of Telemedicine Program (ITHACA): innovation in the treatment of arterial hypertension increasing the compliance and adherence. International Society for Pharmacoeconomics and Outcomes Research 17th Annual Meeting; Washington, D.C. pp. A528. Accessed.

Skaer TL, Sclar DA, Markowski DJ, Won JKH. Effect of value-added utilities in promoting prescription refill compliance among patients with hypertension. Curr Ther Res Clin Exp. 1993;53:251–5.

Solomon DK, Portner TS, Bass GE, Gourley DR, Gourley GA, Holt JM, et al. Clinical and economic outcomes in the hypertension and COPD arms of a multicenter outcomes study. J Am Pharm Assoc. 1998;38:574–85.

Sookaneknun P, Richards RM, Sanguansermsri J, Teerasut C. Pharmacist involvement in primary care improves hypertensive patient clinical outcomes. Ann Pharmacother. 2004;38:2023–8.

Stewart A, Noakes T, Eales C, Shepard K, Becker P, Veriawa Y. Adherence to cardiovascular risk factor modification in patients with hypertension. Cardiovasc J South Afr. 2005;16:102–7.

Stewart K, George J, Jackson SL, Peterson GM, Hughes JD, McNamara KP et al. Increasing community pharmacy involvement in the prevention of cardiovascular disease. 2008. http://www.5cpa.com.au/iwov-resources/documents/The_Guild/PDFs/CPA%20and%20Programs/4CPA%20General/2007%2008-10/RFT0810%20CVD%20Full%20Final%20Report%20FINAL.pdf. 2012.

Svarstad BL, Kotchen JM, Shireman TI, Brown RL, Crawford SY, Mount JK, et al. Improving refill adherence and hypertension control in black patients: Wisconsin TEAM trial. J Am Pharm Assoc (2003). 2013;53:520–9.

van de Steeg-van Gompel CHPA, Wensing M, De Smet PAGM. Implementation of adherence support for patients with hypertension despite antihypertensive therapy in general practice: a cluster randomized trial. Am J Hypertens. 2010;23:1038–45.

Vivian EM. Improving blood pressure control in a pharmacist-managed hypertension clinic. Pharmacotherapy. 2002;22:1533–40.

Walker CC. An educational intervention for hypertension management in older African Americans. Ethn Dis. 2000;10:165–74.

Wang J, Wu J, Yang J, Zhuang Y, Chen J, Qian W, et al. Effects of pharmaceutical care interventions on blood pressure and medication adherence of patients with primary hypertension in China. Clin Res Regul Aff. 2011;28:1–6.

Wentzlaff DM, Carter BL, Ardery G, Franciscus CL, Doucette WR, Chrischilles EA, et al. Sustained blood pressure control following discontinuation of a pharmacist intervention. J Clin Hypertens. 2011;13:431–7.

Werner RT, Sr. The effects of health education on the compliance of hypertensive patients to medical regimens [dissertation]. Philadelphia, PA: Temple University; 1979.

Wong MC, Liu KQ, Wang HH, Lee CL, Kwan MW, Lee KW, et al. Effectiveness of a pharmacist-led drug counseling on enhancing antihypertensive adherence and blood pressure control: a randomized controlled trial. J Clin Pharmacol. 2013;53:753–61. doi:10.1002/jcph.101.

Zang X, Liu J, Chai Y, Wong FKY, Zhao Y. Effect on blood pressure of a continued nursing intervention using chronotherapeutics for adult Chinese hypertensive patients. J Clin Nurs. 2010;19:1149–56.

Zillich AJ, Sutherland JM, Kumbera PA, Carter BL. Hypertension outcomes through blood pressure monitoring and evaluation by pharmacists (HOME study). J Gen Intern Med. 2005;20:1091–6.

Mullen PD, Green LW, Persinger GS. Clinical trials of patient education for chronic conditions: a comparative meta-analysis of intervention types. Prev Med. 1985;14:753–81.

Peterson AM, Takiya L, Finley R. Meta-analysis of trials of interventions to improve medication adherence. Am J Health Syst Pharm. 2003;60:657–65.

Conn VS, Hafdahl AR, Cooper PS, Ruppar TM, Mehr DR, Russell CL. Interventions to improve medication adherence among older adults: meta-analysis of adherence outcomes among randomized controlled trials. Gerontologist. 2009;49:447–62.

Chase J, Bogener J, Ruppar T, Conn V. The effectiveness of medication adherence interventions among patients with coronary artery disease: a meta-analysis. J Cardiovasc Nurs. 2015. doi:10.1097/JCN.0000000000000259.

Ruppar T. Improvement in heart failure mortality and readmission outcomes from medication adherence interventions: a meta-analysis. 2015.

Conn V, Ruppar T, Enriquez M, Cooper P. Medication adherence interventions that target subjects with adherence problems: systematic review and meta-analysis. Res Social Adm Pharm. In press.

Conn V, Enriquez M, Ruppar T, Chan K. Cultural relevance in medication adherence interventions with underrepresented adults: systematic review and meta-analysis of outcomes. Prev Med. 2014;69:239–47.

Perreault S, Dragomir A, White M, Lalonde L, Blais L, Berard A. Better adherence to antihypertensive agents and risk reduction of chronic heart failure. J Intern Med. 2009;266:207–18.

Lowy A, Munk VC, Ong SH, Burnier M, Vrijens B, Tousset EP, et al. Effects on blood pressure and cardiovascular risk of variations in patients’ adherence to prescribed antihypertensive drugs: role of duration of drug action. Int J Clin Pract. 2011;65:41–53.

Mancia G, Messerli F, Bakris G, Zhou Q, Champion A, Pepine CJ. Blood pressure control and improved cardiovascular outcomes in the International Verapamil SR-Trandolapril Study. Hypertension. 2007;50:299–305.

Mazzaglia G, Ambrosioni E, Alacqua M, Filippi A, Sessa E, Immordino V, et al. Adherence to antihypertensive medications and cardiovascular morbidity among newly diagnosed hypertensive patients. Circulation. 2009;120:1598–605.

Roebuck MC, Liberman JN, Gemmill-Toyama M, Brennan TA. Medication adherence leads to lower health care use and costs despite increased drug spending. Health Aff (Millwood). 2011;30:91–9.

Iuga AO, McGuire MJ. Adherence and health care costs. Risk Manag Healthc Policy. 2014;7:35–44.

Shimizu M, Shibasaki S, Kario K. The value of home blood pressure monitoring. Curr Hypertens Rep. 2006;8:363–7.

Alsabbagh MH, Lemstra M, Eurich D, Lix LM, Wilson TW, Watson E, et al. Socioeconomic status and nonadherence to antihypertensive drugs: a systematic review and meta-analysis. Value Health. 2014;17:288–96.

Conn VS, Groves PS. Protecting the power of interventions through proper reporting. Nurs Outlook. 2011;59:318–25.

Michie S, Prestwich A. Are interventions theory-based? Development of a theory coding scheme. Health Psychol. 2010;29:1–8.

Burnier M, Wuerzner G, Struijker-Boudier H, Urquhart J. Measuring, analyzing, and managing drug adherence in resistant hypertension. Hypertension. 2013;62:218–25.

Acknowledgments

The project was supported by Award Numbers 13GRNT16550001 (Conn-principal investigator) from the American Heart Association and R01NR011990 (Conn-principal investigator) from the National Institutes of Health. The content is solely the responsibility of the authors and does not necessarily represent the official views of the American Heart Association or the National Institutes of Health.

Ethics declarations

Conflict of Interest

Drs. Conn and Cooper report grants from the American Heart Association and the National Institutes of Health. Drs. Chase, Enriquez, and Ruppar have no conflicts of interest.

Human and Animal Rights and Informed Consent

This article does not contain any studies with human or animal subjects performed by any of the authors.

Additional information

This article is part of the Topical Collection on Prevention of Hypertension: Public Health Challenges

Cite this article

Conn, V.S., Ruppar, T.M., Chase, JA.D. et al. Interventions to Improve Medication Adherence in Hypertensive Patients: Systematic Review and Meta-analysis. Curr Hypertens Rep 17, 94 (2015). https://doi.org/10.1007/s11906-015-0606-5

DOI: https://doi.org/10.1007/s11906-015-0606-5