Abstract

Technology-delivered interventions can improve the health behaviors and clinical outcomes of persons with diabetes, but only if end users engage with these interventions. To summarize the current knowledge on engagement with technology-based interventions, we conducted a review of recent mobile- and web-delivered intervention studies for adults with type 2 diabetes published from 2011 to 2015. Among 163 identified studies, 24 studies satisfied our inclusion criteria. There was substantial variation in how intervention engagement was reported across studies. Engagement rates were lower among interventions with a longer duration, and engagement decreased over time. In several studies, older age and lower health literacy were associated with less engagement, and more engagement was associated with improvement in at least one outcome, including glycemic control. Future technology-based intervention studies should report on engagement, examine and report on associations between user characteristics and engagement, and aim to standardize how this is reported, particularly in longer trials.


Introduction

Suboptimal glycemic control can lead to a variety of complications including stroke, heart attacks, and death [1]. Adherence to recommended self-care behaviors (e.g., healthy eating, exercise, medication adherence, self-monitoring of blood glucose [SMBG]) can maintain optimal glycemic control [2, 3] and prevent complications [1], but self-care adherence is challenging, and, in turn, low among adults with type 2 diabetes (T2DM) [4, 5]. Technology-delivered interventions (e.g., mobile- and web-based) can effectively promote the self-care behaviors [6, 7] and improve the glycemic control of adults with T2DM [8, 9], but assume users will engage with interventions independently. The extent to which they do, however, is unclear [6, 10].

The degree to which users engage with an intervention can tell us why an intervention had an effect or not [6], yet technology-based interventions rarely report on users’ engagement [11]. Among studies that do, engagement is operationalized inconsistently. Schubart et al.’s [12] review of web-delivered self-care interventions found differences in how studies report on user engagement (e.g., frequency of website visits, number of users logging in at least once, and pages viewed). Such reporting differences are also common among mobile-delivered interventions (e.g., text message response rate [13], number of users who responded to text messages at least three times per week [14]).

Users’ level of intervention engagement varies widely across studies operationalizing engagement the same way (e.g., text message response rate, average number of website logins) [6]. In 2009, Fjeldsoe et al. [15] reviewed behavior change text messaging interventions and found substantial variability in users’ compliance with the intervention. Studies report that, on average, users respond to as few as 17 % [16] to as many as 84 % [13] of text messages sent during a study period. Web-delivered intervention studies also report wide variability in user engagement, with some studies reporting an average of one to five logins and others reporting more than ten logins [17]. Intervention engagement variability may be in large part due to the wide variability in mobile- and web-based intervention attributes (i.e., intervention duration, dosing, relevance, timing, user requirements) and/or user attributes (e.g., user age, socioeconomic status, or health literacy status).

Understanding the determinants of intervention engagement can help design interventions that optimize engagement; however, the factors influencing engagement in technology-delivered diabetes interventions are unclear. In their 2011 review of mobile interventions for patients with type 1 diabetes and T2DM, Mulvaney et al. [11] found insufficient reporting on engagement to associate intervention design features with engagement. This review included studies published through 2010 and did not include web-delivered interventions. In 2014, Cotter et al. [10] conducted a review of web-delivered diabetes interventions published through January 2013 and called for more research to understand under which conditions, and with what types of users, engagement changes.

Lastly, it is unclear whether engagement with a technology-delivered intervention is associated with changes in outcomes. In Fjeldsoe et al.’s [15] review, they reported on one study in which a subgroup of users who were more actively engaged in the intervention showed a trend toward greater glycemic control (A1c) improvement compared with users who were less engaged in the intervention. Moreover, in Cotter et al.’s [10] review, engagement in web-delivered interventions was inconsistently associated with diabetes outcomes.

In this review, we examine the recent literature to provide an updated account of studies reporting user engagement with both mobile- and web-delivered self-care interventions for adults with T2DM. The goals of our review are to describe the different ways engagement is operationalized, describe the extent to which T2DM users are engaging with technology-delivered interventions and how this varies by user characteristics, identify the design features that may facilitate or hinder engagement, and describe if and when engagement is associated with better outcomes.

Methods

Data Sources and Search Strategy

In November 2015, we searched PubMed for technology-delivered self-care interventions used by adults with T2DM. We searched for studies published between 2011 and 2015 to capture those studies not included in Mulvaney et al.’s [11] review. All searches included a term from each of three categories: (1) technology (e.g., mobile intervention, web program, cell phone program), (2) self-care (e.g., self-management, behavior), and (3) diabetes (i.e., type 2 diabetes adults). Terms were intralinked with “OR” and interlinked with “AND.”
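The OR-within, AND-between linking described above can be sketched as follows. This is a minimal illustration only: the term lists are just the examples quoted in the text, and the `build_query` helper and its quoting style are assumptions, not the exact search string used in the review.

```python
def build_query(categories):
    """Join terms within a category with OR, then join categories with AND."""
    groups = []
    for terms in categories:
        # Quote each term so multi-word phrases stay together.
        joined = " OR ".join(f'"{term}"' for term in terms)
        groups.append(f"({joined})")
    return " AND ".join(groups)


# Illustrative terms only, taken from the examples given in the text.
categories = [
    ["mobile intervention", "web program", "cell phone program"],  # technology
    ["self-management", "behavior"],                               # self-care
    ["type 2 diabetes adults"],                                    # diabetes
]

query = build_query(categories)
print(query)
```

Because every category contributes one parenthesized group, a record is retrieved only if it matches at least one term from each of the three categories.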

Study Eligibility

Eligible studies: (1) included adults with a diagnosis of T2DM (>50 % of the study sample), (2) tested a technology-delivered intervention designed to improve a diabetes self-care behavior, (3) asked participants to use the intervention on their own time (i.e., outside the lab or clinic setting), (4) tested interventions using <50 % telehealth or human interaction, and (5) reported on participants’ engagement with the intervention. We excluded studies focused on the design and development phase of the intervention and studies testing interventions designed to improve a psychological (e.g., depressive symptoms, distress) or psychosocial (e.g., self-care knowledge, self-efficacy) outcome. Additionally, we excluded studies if they were a systematic review, a meta-analysis, or were not published in English.

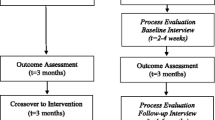

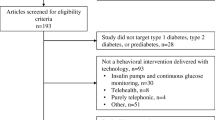

Our search terms identified 163 published articles. Authors LAN and TDC independently reviewed each abstract of the identified articles. Studies were excluded at this stage if both reviewers agreed eligibility criteria were not met. Both authors independently reviewed the full text of the remaining articles, excluding those not meeting eligibility criteria, resulting in 24 articles being included in our review (see Fig. 1).

Data Abstraction and Narrative Synthesis

Given variation in study designs, methodologies employed, and outcomes assessed between the 24 included studies, we used a narrative synthesis approach [18] to describe and identify patterns of engagement across included studies. We created two separate tables to report on both the general intervention characteristics (e.g., study design, sample characteristics, length of intervention, outcomes) (Table 1) and information specific to participants’ engagement with an intervention (e.g., engagement rates, change in engagement across time, and associations between engagement and outcomes) (Table 2). Although all studies in our review tested an intervention designed to improve at least one diabetes self-care behavior, many still reported psychological and psychosocial outcomes and are therefore included in Table 1 and in our results. In both tables, we organized the interventions into two groups based on whether they were primarily a mobile- or web-based intervention. Although some mobile interventions contained web components, all interventions used either mobile phones or the Internet as their dominant platform. The subsequent review represents information available about engagement with mobile- or web-based self-care interventions for adults with T2DM published in PubMed from 2011 to 2015.

Results

Of the 24 articles included in our review, 15 tested a mobile-delivered intervention and 9 tested a web-delivered intervention. The largest group of studies (n = 10) were formative research studies (i.e., pilot, feasibility, and usability studies), six were randomized controlled trials, four were non-experimental pre-post studies (i.e., no control group), three were studies reporting data from a larger trial, and one was a quasi-experimental study (i.e., used matched controls). More than half (58.3 %) of these articles were published in the past three years (i.e., 2013–2015).

Operationalizing Engagement

The studies in our review operationalized intervention engagement according to the types of technical tasks asked of users (e.g., visiting/logging in to a website, responding to text messages, and uploading blood glucose readings) and how engagement could be assessed based on these tasks (e.g., the average number of website logins or the number of users who logged in to a website). Interventions required users to perform a wide range of technical tasks, resulting in wide variability in how studies operationalized intervention engagement. In addition, there was wide variation in the extent to which studies described and reported on engagement. That is, some studies provided very basic information on the rate of engagement with a single task, whereas others provided detailed information on engagement with different parts of the intervention and during specific periods of time.

Mobile-Delivered Interventions

The 15 mobile-delivered intervention studies in our review reported on engagement with text messages (n = 14), phone calls (n = 5), mobile apps (overall, n = 1; feature-specific, n = 3), and/or a web-based portion of the intervention (n = 2). Six studies only reported the average number of tasks users engaged with (e.g., the rate of text message responses or interactive voice response [IVR] call completions), five studies only reported the number of users who engaged with a specific task (e.g., the number who responded to a text message, the number who opened an app), and four studies reported on both. In most studies (79 %), engagement was based on the full duration of the intervention study period, but in some cases, engagement was based on a specific period of time within the study period. For example, Katz et al.’s [25] 12-month intervention reported the average number of blood glucose uploads during the first and last 10 weeks of the study period, but not the full 12 months. When detailed engagement information was included, studies reported on users’ median time to respond to a text message [22, 26] and the number of users who met a minimum level of engagement (e.g., users who completed at least nine of 12 IVR calls, users who sent responses at least three times/week) [14, 28, 31]. Lastly, several studies categorized users by their level of engagement [21•, 25, 29]. For example, Bell et al. [21•] organized users into three categories based on how often they viewed daily videos sent via text messages (i.e., non-viewers, periodic viewers, and persistent viewers).
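The two most common mobile operationalizations described above (an average task completion rate, and a count of users meeting a minimum level of engagement) can be computed from the same per-user data. The sketch below assumes hypothetical records of (tasks completed, tasks requested) per user; the function names and the data are illustrative and do not come from any included study.

```python
def completion_rate(completed, requested):
    """Fraction of requested tasks (e.g., IVR calls) a user completed."""
    if requested == 0:
        return 0.0
    return completed / requested


def summarize(users, min_completed):
    """Return (mean completion rate, number of users meeting the minimum)."""
    if not users:
        return 0.0, 0
    rates = [completion_rate(done, asked) for done, asked in users]
    mean_rate = sum(rates) / len(rates)
    n_meeting = sum(1 for done, _ in users if done >= min_completed)
    return mean_rate, n_meeting


# Hypothetical data: (IVR calls completed, IVR calls scheduled) for four users.
users = [(12, 12), (9, 12), (6, 12), (0, 12)]

# Minimum level of engagement: at least nine of 12 calls, as in the example above.
mean_rate, n_meeting = summarize(users, min_completed=9)
print(f"mean completion rate: {mean_rate:.1%}; users meeting minimum: {n_meeting}")
```

The two summaries can diverge: a moderate average rate may hide a group of users who never engaged, which is one reason reporting both is more informative than reporting either alone.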

Web-Delivered Interventions

Of the nine web-delivered intervention studies in our review, most (67 %) reported the number of times users logged into the website. Because many web-delivered interventions asked users to complete specific tasks on a website, four studies (44 %) also reported engagement with these tasks (e.g., number of blood glucose uploads and discussion posts, how often goals were set) [36, 37•, 38, 39]. In addition, three studies (33 %) reported the frequency with which different sections of a webpage were viewed [32, 36, 40]. For example, Glasgow et al. [32] reported the exercise section of their website’s “Action Plan” area was visited more than any other self-care section. Two studies (22 %) reported the number of users who logged into a site based on a minimum frequency (i.e., at least monthly [34], and at least once during the study period [37•]), and two studies reported the total average time spent on the site [32, 35]. Similar to Bell et al. [21•], Yu et al. [40] grouped users based on the number of times they logged in per month (i.e., non-users, and infrequent, frequent, and heavy users), and Jernigan and Lorig [38] separated users into two groups by their level of website participation (i.e., sporadically vs. regularly).

Levels of Engagement

Due to wide variability in how engagement was operationalized across studies, we were unable to draw conclusions about how much users engage with mobile- or web-delivered interventions. We were, however, able to identify patterns for those studies using a common operationalization.

Mobile Phone Engagement

Among the four studies reporting the average response rate to daily, two-way text messages [13, 22, 23, 26], rates ranged from 52 to 84 %. The types of daily text messages varied with some requesting adherence information (e.g., whether users took their medication) and others requesting blood glucose readings. Additionally, the intervention length (i.e., the period of time users received text messages) varied. For the two studies reporting less engagement (57 and 52 %), the intervention length was 3 [23] and 6 months [26], respectively. Alternatively, for those reporting more engagement (80 and 84 %), the intervention length was 1 [22] and 3 months [13], respectively. Four studies [14, 21•, 23, 28] reported the number of users who did not engage at all or who did so very minimally; rates ranged from 5.8 to 24 %. Of the six studies reporting changes in engagement, engagement decreased in two studies [13, 25], remained relatively stable in three studies [24, 26, 30], and varied by the individual user in one study [29].

Although not reported often enough to identify patterns, there were instances when articles reported associations between user characteristics and intervention engagement. For users around 60 years of age, the probability of completing IVR calls [28] and responding to text messages [13] decreased with increasing age. Additionally, Nelson et al. [13] found users who were non-White and users with lower health literacy were less likely to participate in IVR calls compared with White users and users with higher health literacy, respectively. Lastly, Piette et al. [28] found less education was associated with less IVR participation. The majority of mobile-delivered intervention studies (n = 11) did not report on the associations between user characteristics and engagement or the lack thereof.

Website Engagement

For a web-delivered intervention length of at least 3 months, average logins per month ranged from 0.7 to 7 logins. Studies with fewer logins per month (0.7, 2.4, and 4) had intervention lengths of 9 [40], 12 [37•], and 13 months [39], respectively, whereas those with more logins per month (7 and 7) had intervention lengths of 3 [36] and 4 months [32].
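Comparing logins across interventions of different lengths requires putting them on a common per-month scale. As a minimal sketch, the hypothetical helper below normalizes a total login count by intervention length; the worked example uses the 21 logins over 12 weeks reported for Jennings et al. [36] and assumes 12 weeks is treated as 3 months.

```python
def logins_per_month(total_logins, months):
    """Normalize a total login count by intervention length in months."""
    if months <= 0:
        raise ValueError("months must be positive")
    return total_logins / months


# 21 logins over a 12-week intervention, treated here as 3 months (assumption).
rate = logins_per_month(21, 3)
print(rate)
```

Under that assumption the result matches the 7 logins per month listed above for the 3-month intervention [36].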

Two studies reported the average time spent on a website [32, 35]. Glasgow et al. [32] reported a total average time of 3 h spent on their website, or about 7 min/visit in a 4-month intervention. Heinrich et al. [35] reported users spent an average of 58 total minutes on their site during a 2-week study, or about 16.3 min per visit. Of the four studies reporting on changes in engagement [32, 33, 37•, 40], three described decreased engagement over time [32, 33, 37•], two of which described different timeframes from the same intervention [32, 33]; in one study, website logins peaked during weeks 10 and 27 of the intervention, which the authors attributed to an increase in blog use [40].

Only two web-delivered interventions reported associations between user characteristics and engagement [34, 38]. Glasgow et al. [34] reported users with higher health literacy and numeracy skills, and baseline levels of physical activity, were the most engaged (i.e., visited the website at least monthly) during the 12-month intervention period. Additionally, they found users who were non-smokers were more likely to visit the site at least monthly compared with smokers. Jernigan and Lorig [38] did not find differences between American Indian (AI)/Alaskan Native (AN) users and non-AI/AN users in the level of website usage, but reported AI/AN users were more likely to use the website during daytime hours compared with non-AI/AN users; the authors noted this may be because more AI/AN users had access to high-speed Internet in an office setting and dial-up Internet at home. Two studies reported no associations between user characteristics and engagement [32, 35], and five studies did not report on this [33, 36, 37•, 39, 40].

Facilitators and Barriers to Intervention Engagement

Our review identified intervention design features used to facilitate user engagement. We also identified barriers to user engagement.

The majority of interventions included in our review employed some form of tailoring (n = 22). However, the extent to which tailoring was used varied widely. The most common form of tailoring was providing users with personalized feedback based on information they provided [13, 27, 36, 39]. For example, Ryan et al.’s intervention [39] delivered educational and motivational messages based on users’ blood glucose uploads. On average, users uploaded data two times per week during a 13-month study period [39]. In Jennings et al.’s intervention [36], users received tailored weekly feedback based on completing an online logbook used to track exercise goals. Over 12 weeks, users logged in to the website an average of 21 ± 34 times [36]. Studies also provided tailored content based on users’ responses to assessments. In Osborn and Mulvaney’s intervention [27], daily text messages were tailored to address users’ self-reported barriers to medication adherence. Users responded to ~82 % of messages in 2-week rounds of testing [27] and 84 % of messages in a 3-month period [13]. In a web-delivered intervention, Jernigan and Lorig [38] tailored content to users’ responses to a baseline questionnaire (e.g., feedback on the meaning of their A1c); 85 % used the website regularly (i.e., about three times per week for 6 weeks). Other studies used very minimal tailoring. Bell et al. [21•] tailored their 6-month intervention by sending text messages at a time specified by the user; over one third of users did not view any video messages or only did so minimally at the start of the study. Similarly, Arora et al. [20] limited tailoring to users’ preference of receiving content in either English or Spanish. During a 3-week study, only 35 and 9 % of users responded to trivia and phone-linked messages, respectively [20]. Fisher et al. [24] also allowed users the choice of English or Spanish content and tailored information in appointment reminder messages (appointment time, date, and location). Over 3 months, users responded to 68 % of messages [24].

Additionally, there were vast differences in how interventions prompted, reminded, or incentivized users to engage with an intervention, which we are calling engagement promotion. In mobile-delivered interventions, intervention components can serve as reminders to use the intervention. For example, receipt of text messages and/or calls reminds users to engage. In two interventions with IVR, the IVR system made multiple attempts to reach users if they did not answer the call [19, 28]. Alternatively, in mobile interventions consisting of only an app and in web-delivered interventions consisting of only a website, users may be expected to engage without prompting. In order to foster continued use in these types of interventions, studies often sent weekly email reminders to visit a website [32, 36, 38, 40]. In one study, if engagement waned, staff members called users to inquire about their low responsiveness [26].

Studies also examined how support from a healthcare provider or peer impacted intervention engagement. In three studies (13 %) users said a provider’s involvement in the intervention (e.g., being able to communicate with a provider or knowing a provider was reviewing their messages) facilitated their engagement [19, 26, 37•]. Further, two RCTs compared engagement between a condition receiving only the technology and a condition receiving both the technology and human support. When reporting the 4-month results of their web-delivered intervention, Glasgow et al. [32] reported greater use of the website among users assigned to the condition with the website plus social support compared to the condition with website access only. In contrast, Torbjørnsen et al. [30] reported no difference in engagement between the condition with access to a mobile app versus the condition with access to the app plus health counseling. This suggests there may be inconsistencies in how providing human support as part of an automated technology impacts users’ engagement.

Studies also relied on users’ feedback to identify barriers to engaging with mobile- or web-delivered interventions. Three studies (13 %) reported technical and usability problems interfered with engagement [13, 37•, 40], and in three studies (13 %) users said an inability to afford a required cell phone plan, or having limited access to a computer or the Internet, hindered engagement [23, 25, 40]. To address cost barriers, Katz et al. [25] provided users with a reduction on their cell phone bill each month in exchange for continued engagement. At study end, 59 % who remained active in the program said this discount was needed for their participation. Lastly, there may be unique individual barriers preventing engagement. For example, Yu et al. [40] reported users’ sense of futility affected website use, while users in Jernigan and Lorig’s [38] study said a lack of time, illness, family obligations, and depression were reasons for not completing website activities. Information on these barriers could inform intervention design choices, such as using a technology that can be more easily integrated into users’ lives, requiring minimal deliberate effort. For example, if lack of time is a significant barrier, a text message-based intervention may encourage more engagement than one in which users must access a computer.

Associations Between Engagement and Outcomes

Among studies included in this review, the majority (n = 16) did not report whether there were associations between engagement and outcomes, but one (n = 1) reported a null association and others (n = 7) reported significant associations between engagement and outcomes. Heinrich et al. [35] reported a null association between engagement and diabetes knowledge; however, their web-delivered intervention was only 2 weeks long. Katz et al. [25] found users who remained active in a mobile intervention reduced their hospitalizations and emergency room visits during the 12-month study period compared with the 12 months before the study. Glasgow et al. [32] reported greater website usage was associated with improved eating patterns and physical activity, but not medication adherence at 4 months; notably, of the users whose outcomes improved and who visited the website monthly from months 5 to 12, 53.8 % maintained these outcomes at 12 months [33]. Ryan et al. [39] found more frequent engagement in chat messages and interactive activities were each associated with improved LDL cholesterol. Yu et al. [40] reported users of a diabetes self-care website had greater reductions in diabetes distress than non-users; however, users and non-users did not differ in their self-efficacy or self-care. Finally, in two studies, greater use of an intervention was associated with improved A1c [21•, 37•]. Bell et al. [21•] reported users who persistently viewed text message-delivered videos of diabetes-related tips and reminders experienced the greatest A1c improvement. Jethwani et al. [37•] reported users with more blood glucose uploads had the greatest A1c improvement, with those taking less time to upload their first blood glucose reading experiencing the greatest A1c improvement.

Discussion

With the surge of technology-delivered interventions aimed at improving diabetes outcomes, more research is needed to understand how users engage with these interventions. Unlike most face-to-face approaches, users are typically expected to use mobile- and web-delivered interventions on their own time; that is, the intervention relies on the assumption users will engage with the technology of their own volition. The extent to which users actually engage is critical to understanding how interventions affect outcomes. We conducted a narrative review of studies published between 2011 and 2015 reporting engagement with technology-delivered self-care interventions for adults with T2DM and found variability across studies in engagement reporting, lower rates of engagement in web-based interventions with a longer duration, older users and those with lower health literacy/less education being less engaged, and more engagement being associated with better outcomes in several studies. Based on our findings, we make recommendations for reporting and improving user engagement.

Our findings are consistent with Schubart et al.’s [12] review of web-delivered self-care interventions that also reported wide variation in how engagement is operationalized. In order to systematically combine and evaluate engagement findings, studies need to adopt uniform engagement reporting. Of the more common operationalizations used in our review, interventions using two-way text messaging should report users’ average text message response rate and web-delivered interventions should report average website logins and average time spent on a website. Further, studies should report whether there is an expectation for how much users should engage with an intervention to help identify gaps between intended and actual use. Studies should also report on engagement change by reporting usage at different time intervals throughout the study period (e.g., website logins each month) to identify the point at which engagement wanes. Additionally, rather than only reporting users’ engagement with one primary task, studies should report on engagement with various tasks. For example, reporting the number of different website tasks completed can reveal those parts of a website that were more or less engaging. Finally, dichotomizing users into high- and low-engagement groups and examining group differences in outcomes can identify at which levels of engagement users experience benefit.
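The final recommendation above, dichotomizing users by engagement and comparing outcomes between groups, can be sketched as a simple median split. The cutoff rule, function names, and all numbers below are made up for illustration and are not results from any included study; a real analysis would also test whether the group difference is statistically significant.

```python
from statistics import mean, median


def split_by_engagement(records):
    """Split (engagement, outcome_change) records at the median engagement."""
    cutoff = median(engagement for engagement, _ in records)
    low = [r for r in records if r[0] <= cutoff]
    high = [r for r in records if r[0] > cutoff]
    return low, high


def mean_outcome(group):
    """Average outcome change within a group."""
    return mean(outcome for _, outcome in group)


# Hypothetical data: (logins per month, change in A1c in percentage points).
records = [(1, -0.1), (2, 0.0), (4, -0.3), (6, -0.5), (8, -0.6), (10, -0.9)]

low, high = split_by_engagement(records)
print(f"low engagement:  n={len(low)}, mean A1c change={mean_outcome(low):.2f}")
print(f"high engagement: n={len(high)}, mean A1c change={mean_outcome(high):.2f}")
```

A median split is only one possible choice; predefined thresholds tied to the intended use of the intervention would make the groups easier to compare across studies.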

Despite variability in operationalizing engagement, we did observe some general patterns across studies. First, it appears engagement may be impacted by intervention length, particularly for web-delivered interventions in which lower rates of website use were reported in longer interventions. Additionally, our results support past reviews indicating engagement decreases over the course of an intervention [7, 10]. It may be the longer users participate in an intervention, the more they lose interest in it. However, because a longer intervention may be necessary to obtain effects, researchers should adopt strategies for boosting engagement throughout an intervention (e.g., tailoring and engagement promotion, discussed below). Lastly, although Cotter et al. [10] did not identify common user characteristics associated with engagement, our review suggests older adults may be less engaged with mobile interventions than younger adults, and individuals with lower health literacy/less education may be less engaged with both mobile- and web-based interventions compared with more health literate/educated individuals.

Based on patterns of engagement reporting, it appears interventions with more tailoring may be more engaging than those with less tailoring. Others have reported tailored, technology-delivered interventions are more effective at improving self-care than those using targeted or generic content [41, 42]. Most interventions in our review used some form of tailoring, although the type and extent of tailoring varied across interventions and was sometimes limited to users’ preferred time to receive elements of the intervention. One way to improve user engagement is to adapt intervention content to users’ individual needs [12]. Rather than only tailoring message delivery based on users’ preferences (e.g., timing and frequency of text messages), more interventions should tailor intervention content based on users’ unique concerns for improving self-care (e.g., content addressing barriers to self-care adherence [27]). Similarly, just-in-time interventions that provide immediate, tailored support based on user data (e.g., feedback on blood glucose uploads) may also improve engagement [43].

When comparing mobile- and web-delivered interventions in our review, we identified a need for more web-delivered interventions to use engagement promotion to remind and encourage users to engage. This finding aligns with both Connelly et al.’s [7] and Brouwer et al.’s [17] reviews that found additional components within the technology (e.g., emails) increase intervention engagement. Future research should explore additional forms of prompting in the form of rewards and/or new content to sustain users’ interest in an intervention over time [17]. This may be particularly important in longer interventions.

Technology-delivered interventions should also anticipate possible barriers to engagement. If working with a low-income population, for example, adopting a low-cost technology (e.g., basic cell phone technology: text messaging and voice communications) and/or providing a stipend to cover the cost of the technology and/or its service plan may improve engagement [20]. Additionally, conducting usability studies can help identify and address technical issues that may discourage engagement in a longer trial [44].

Our review compiled evidence suggesting engagement with a technology-delivered intervention is associated with improved outcomes. Among the eight studies reporting on the association between engagement and outcomes, seven found an association between more engagement and outcome improvement, including two studies reporting improved glycemic control [21•, 37•]. Bell et al. [21•] grouped users by their level of engagement to determine at what dose of the intervention users would benefit. Users who viewed >10 video messages per month experienced the greatest A1c improvement, but none of the users viewed messages daily [21•]. Considering users still benefited despite not engaging every day, Bell et al. [21•] suggest intermittent reinforcement (i.e., frequent, but not daily contact) may be an effective approach for sustaining users’ engagement and improving A1c. More research needs to examine how dosing level may explain the association between engagement and outcomes. Additionally, studies should examine whether dosing should be tailored to different types of users; that is, some users may get annoyed with daily messages and engage more regularly with weekly messages, whereas others may appreciate daily contact.

There are several limitations to our review. The majority of studies included in our review were feasibility, usability, and pilot studies. Because these types of studies tend to have a shorter duration, engagement may be different in longer trials testing the same intervention. Further, we excluded studies from our review testing interventions with >50 % telehealth because we were primarily interested in user engagement with automated interventions. Given the human-to-human element of telehealth, engagement with those interventions may be higher than with automated interventions. Several studies in our review reported a human’s involvement in the intervention was important to users’ engagement. Additionally, we categorized interventions as being primarily mobile- or web-based, but some mobile interventions used web components, which may have affected our results. As more interventions combine mobile and web platforms, research should explore how engagement differs between these combined interventions and those relying on just one platform. Despite our attempt to identify relevant articles, it is possible some were missed and publication bias led to an underreporting of data. Finally, the studies included in our review all had samples with >50 % T2DM, limiting the generalizability of our findings to other patient populations.

Conclusions

Although studies vary in how they report engagement and its associations, engagement remains difficult to sustain in technology-delivered self-care interventions for adults with T2DM, and can be affected by a variety of factors, including how the intervention promotes engagement and the characteristics of users. To advance our understanding of how engagement varies and affects outcomes, studies need to report on engagement in a standardized way, consistently report whether engagement is associated with user characteristics and outcomes, and report on engagement in longer-term RCTs. Exploring engagement as a mechanism by which an intervention affects outcomes, along with the optimal dose of engagement, will inform how and when users experience benefit.

References

Papers of particular interest, published recently, have been highlighted as: • Of importance

Centers for Disease Control and Prevention. Reports to Congress: Diabetes Report Card. 2014.

Osborn C, Mayberry L, Kim J. Medication adherence may be more important than other behaviors for optimizing glycemic control among low-income adults. J Clin Pharm Ther. 2016.

Gao J, Wang J, Zheng P, Haardörfer R, Kegler MC, Zhu Y, et al. Effects of self-care, self-efficacy, social support on glycemic control in adults with type 2 diabetes. BMC Fam Pract. 2013;14:1.

Kirkman MS, Rowan-Martin MT, Levin R, Fonseca VA, Schmittdiel JA, Herman WH, et al. Determinants of adherence to diabetes medications: findings from a large pharmacy claims database. Diabetes Care. 2015;38:604–9.

García-Pérez L-E, Álvarez M, Dilla T, Gil-Guillén V, Orozco-Beltrán D. Adherence to therapies in patients with type 2 diabetes. Diabetes Ther. 2013;4:175–94.

Holtz B, Lauckner C. Diabetes management via mobile phones: a systematic review. Telemed J E Health. 2012;18:175–84.

Connelly J, Kirk A, Masthoff J, MacRury S. The use of technology to promote physical activity in type 2 diabetes management: a systematic review. Diabet Med. 2013;30:1420–32.

Pal K, Eastwood SV, Michie S, Farmer A, Barnard ML, Peacock R, et al. Computer-based interventions to improve self-management in adults with type 2 diabetes: a systematic review and meta-analysis. Diabetes Care. 2014;37:1759–66.

Liang X, Wang Q, Yang X, Cao J, Chen J, Mo X, et al. Effect of mobile phone intervention for diabetes on glycaemic control: a meta-analysis. Diabet Med. 2011;28:455–63.

Cotter AP, Durant N, Agne AA, Cherrington AL. Internet interventions to support lifestyle modification for diabetes management: a systematic review of the evidence. J Diabet Complications. 2014;28:243–51.

Mulvaney SA, Ritterband LM, Bosslet L. Mobile intervention design in diabetes: review and recommendations. Curr Diab Rep. 2011;11:486–93.

Schubart JR, Stuckey HL, Ganeshamoorthy A, Sciamanna CN. Chronic health conditions and internet behavioral interventions: a review of factors to enhance user engagement. Comput Inform Nurs. 2011;29:TC9–20.

Nelson LA, Mulvaney SA, Gebretsadik T, Ho YX, Johnson KB, Osborn CY. Disparities in the use of a mHealth medication adherence promotion intervention for low-income adults with type 2 diabetes. J Am Med Inform Assoc. 2015.

Capozza K, Woolsey S, Georgsson M, Black J, Bello N, Lence C, et al. Going mobile with diabetes support: a randomized study of a text message-based personalized behavioral intervention for type 2 diabetes self-care. Diabetes Spectr. 2015;28:83–91.

Fjeldsoe BS, Marshall AL, Miller YD. Behavior change interventions delivered by mobile telephone short-message service. Am J Prev Med. 2009;36:165–73.

Hanauer DA, Wentzell K, Laffel N, Laffel LM. Computerized Automated Reminder Diabetes System (CARDS): e-mail and SMS cell phone text messaging reminders to support diabetes management. Diabetes Technol Ther. 2009;11:99–106.

Brouwer W, Kroeze W, Crutzen R, de Nooijer J, de Vries NK, Brug J, et al. Which intervention characteristics are related to more exposure to internet-delivered healthy lifestyle promotion interventions? A systematic review. J Med Internet Res. 2011;13:e2.

Popay J, Roberts H, Sowden A, Petticrew M, Arai L, Rodgers M, et al. Guidance on the conduct of narrative synthesis in systematic reviews. ESRC Methods Program. 2006;15:047–71.

Aikens JE, Rosland AM, Piette JD. Improvements in illness self-management and psychological distress associated with telemonitoring support for adults with diabetes. Prim Care Diabetes. 2015;9:127–34.

Arora S, Peters AL, Agy C, Menchine M. A mobile health intervention for inner city patients with poorly controlled diabetes: proof-of-concept of the TExT-MED program. Diabetes Technol Ther. 2012;14:492–6.

• Bell AM, Fonda SJ, Walker MS, Schmidt V, Vigersky RA. Mobile phone-based video messages for diabetes self-care support. J Diabetes Sci Technol. 2012;6:310–9. This study grouped users by their level of engagement in order to examine which group experienced the most improvement in A1c.

Dick JJ, Nundy S, Solomon MC, Bishop KN, Chin MH, Peek ME. Feasibility and usability of a text message-based program for diabetes self-management in an urban African-American population. J Diabetes Sci Technol. 2011;5:1246–54.

Dobson R, Carter K, Cutfield R, Hulme A, Hulme R, McNamara C, et al. Diabetes Text-Message Self-Management Support Program (SMS4BG): a pilot study. JMIR mHealth uHealth. 2015;3:e32.

Fischer HH, Moore SL, Ginosar D, Davidson AJ, Rice-Peterson CM, Durfee MJ, et al. Care by cell phone: text messaging for chronic disease management. Am J Manag Care. 2012;18:e42–7.

Katz R, Mesfin T, Barr K. Lessons from a community-based mHealth diabetes self-management program: “it’s not just about the cell phone”. J Health Commun. 2012;17 Suppl 1:67–72.

Nundy S, Dick JJ, Chou CH, Nocon RS, Chin MH, Peek ME. Mobile phone diabetes project led to improved glycemic control and net savings for Chicago plan participants. Health Aff. 2014;33:265–72.

Osborn CY, Mulvaney SA. Development and feasibility of a text messaging and interactive voice response intervention for low-income, diverse adults with type 2 diabetes mellitus. J Diabetes Sci Technol. 2013;7:612–22.

Piette JD, Valverde H, Marinec N, Jantz R, Kamis K, de la Vega CL, et al. Establishing an independent mobile health program for chronic disease self-management support in Bolivia. Front Public Health. 2014;2:95.

Tatara N, Arsand E, Bratteteig T, Hartvigsen G. Usage and perceptions of a mobile self-management application for people with type 2 diabetes: qualitative study of a five-month trial. Stud Health Technol Inform. 2013;192:127–31.

Torbjørnsen A, Jenum AK, Smastuen MC, Arsand E, Holmen H, Wahl AK, et al. A low-intensity mobile health intervention with and without health counseling for persons with type 2 diabetes. Part 1: baseline and short-term results from a randomized controlled trial in the Norwegian part of Renewing Health. JMIR mHealth uHealth. 2014;2:e52.

Wood FG, Alley E, Baer S, Johnson R. Interactive multimedia tailored to improve diabetes self-management. Nurs Clin North Am. 2015;50:565–76.

Glasgow RE, Christiansen SM, Kurz D, King DK, Woolley T, Faber AJ, et al. Engagement in a diabetes self-management Website: usage patterns and generalizability of program use. J Med Internet Res. 2011;13:e9.

Glasgow RE, Kurz D, King D, Dickman JM, Faber AJ, Halterman E, et al. Twelve-month outcomes of an Internet-based diabetes self-management support program. Patient Educ Couns. 2012;87:81–92.

Glasgow RE, Strycker LA, King DK, Toobert DJ. Understanding who benefits at each step in an internet-based diabetes self-management program: application of a recursive partitioning approach. Med Decis Mak. 2014;34:180–91.

Heinrich E, de Nooijer J, Schaper NC, Schoonus-Spit MH, Janssen MA, de Vries NK. Evaluation of the Web-based Diabetes Interactive Education Programme (DIEP) for patients with type 2 diabetes. Patient Educ Couns. 2012;86:172–8.

Jennings CA, Vandelanotte C, Caperchione CM, Mummery WK. Effectiveness of a Web-based physical activity intervention for adults with type 2 diabetes—a randomised controlled trial. Prev Med. 2014;60:33–40.

• Jethwani K, Ling E, Mohammed M, Myint UK, Pelletier A, Kvedar JC. Diabetes connect: an evaluation of patient adoption and engagement in a Web-based remote glucose monitoring program. J Diabetes Sci Technol. 2012;6:1328–36. This study provided a thorough description of Website engagement patterns and compared differences in outcomes between users who were more or less engaged.

Jernigan VB, Lorig K. The internet diabetes self-management workshop for American Indians and Alaska Natives. Health Promot Pract. 2011;12:261–70.

Ryan JG, Schwartz R, Jennings T, Fedders M, Vittoria I. Feasibility of an internet-based intervention for improving diabetes outcomes among low-income patients with a high risk for poor diabetes outcomes followed in a community clinic. Diabetes Educ. 2013;39:365–75.

Yu CH, Parsons JA, Mamdani M, Lebovic G, Hall S, Newton D, et al. A Web-based intervention to support self-management of patients with type 2 diabetes mellitus: effect on self-efficacy, self-care and diabetes distress. BMC Med Inform Decis Mak. 2014;14:117.

Lustria ML, Noar SM, Cortese J, Van Stee SK, Glueckauf RL, Lee J. A meta-analysis of Web-delivered tailored health behavior change interventions. J Health Commun. 2013;18:1039–69.

Head KJ, Noar SM, Iannarino NT, Grant Harrington N. Efficacy of text messaging-based interventions for health promotion: a meta-analysis. Soc Sci Med. 2013;97:41–8.

Klasnja P, Pratt W. Healthcare in the pocket: mapping the space of mobile-phone health interventions. J Biomed Inform. 2012;45:184–98.

Nelson LA, Bethune MC, Lagotte A, Osborn CY. The usability of diabetes MAP: a Web-delivered intervention for improving medication adherence. JMIR Human Factors. 2016.

Acknowledgment

Drs. Nelson and Osborn are supported by NIH/NIDDK R01-DK100694, and Dr. Osborn is also supported by K01-DK087894. Dr. Cherrington is supported by NIH/NIDDK P30-DK07962.

Ethics declarations

Conflict of Interest

Lyndsay A. Nelson, Taylor D. Coston, Andrea L. Cherrington, and Chandra Y. Osborn declare that they have no conflict of interest.

Human and Animal Rights and Informed Consent

This article does not contain any studies with human or animal subjects performed by any of the authors.

Additional information

This article is part of the Topical Collection on Psychosocial Aspects

Cite this article

Nelson, L.A., Coston, T.D., Cherrington, A.L. et al. Patterns of User Engagement with Mobile- and Web-Delivered Self-Care Interventions for Adults with T2DM: A Review of the Literature. Curr Diab Rep 16, 66 (2016). https://doi.org/10.1007/s11892-016-0755-1
