Abstract

One of the objectives of the Intergeo project was to provide European mathematics teachers with “good quality” pedagogical material supporting the use of dynamic geometry software in classrooms. With this aim in view, an online repository/platform was developed to gather various dynamic geometry resources, based on the idea of a teachers’ community feeding the repository, (re)using available resources and sharing practices related to the use of dynamic geometry in classrooms. The repository is thus open to contributions from any user, who can deposit, browse, download and use resources, which naturally raises the question of how to handle resource quality in such an open environment. This paper reports on the way we tackled this issue in the Intergeo project. We first explain what we mean by a “good quality” dynamic geometry resource. We then provide the rationale behind the design of a questionnaire, the main tool for resource quality reviews, which are at the core of the quality assessment process implemented in the repository. The paper also reports on several experiments carried out with groups of teachers in order to compare our research-based view of resource quality with that of teachers and to observe how teachers use the quality assessment process. The outcomes of these experiments highlight strengths and limitations of the resource quality assessment process. They also suggest that involving teachers in resource quality assessment is a promising way of stimulating the use of dynamic geometry in classrooms, provided that teachers receive support to make the quality process their own.

1 Introduction

Despite the availability and accessibility of information and communication technologies (ICT) and despite curricular recommendations to use them in many countries, teachers are still reluctant to use these technologies (Artigue 2002; Laborde & Sträßer 2010). One of the reasons for this resistance is certainly the shift that the introduction of ICT into classrooms causes in the image teachers have of their profession and of mathematical activity (Goos & Soury-Lavergne 2010; Hoyles & Lagrange 2010). Moreover, research studies point out the complexity of ICT integration processes. Efficient ICT integration requires designing new tasks in which the use of technology gives meaning to mathematical concepts (Laborde 2001; Monaghan 2004). However, as pointed out by Laborde (2008), the design of genuinely new tasks is rare; teachers rather adapt tasks given either in a paper–pencil or in a dynamic geometry environment. Teachers must themselves manipulate and analyze the tasks to take advantage of them. Analyzing a task with ICT, however, turns out to be highly complex, since the “technological” element adds a new dimension to be analyzed and also impacts all the others (epistemological, didactical and institutional). Teachers thus have to be able to solve tasks with technology, analyze the role of technology in the task and evaluate its relevance for learning, as well as the necessity, or the cost, of the new instrumented techniques (Laborde 2008). In order to implement these tasks in their classrooms, teachers have to anticipate the techniques that can be used to solve them, the learning outcomes they can lead to and the classroom settings favorable to the achievement of pedagogical goals (Trouche & Drijvers 2010). They have to manage the relationships between old and new knowledge (Assude & Gélis 2002), and between paper–pencil and computer-based techniques (Guin & Trouche 1999).

Considering the complexity of ICT integration, how can teachers be helped to use ICT in their classrooms? One would naturally think of training them to design adapted tasks and to manage the impact of ICT on students’ learning.

Traditional teacher training courses focusing on ICT use seem to have limited impact on teachers’ practices. They are short-term and usually consist in analyzing ready-made computer-based tasks that have been tested by teacher educators and proven effective (Little 1993). Moreover, they are not followed by any monitoring of teachers’ post-course efforts to use ICT in their classrooms.

Several teacher training initiatives, such as SFODEM (Guin et al. 2008) or Pairform@nce (Gueudet et al. 2009) in France, the ICTML project (Fugelstad et al. 2010) in Norway or AProvaME (Healy et al. 2009) in Brazil, have been launched in order to overcome the above-mentioned limitations. Training in these initiatives focuses on the collaborative design of resources by teachers and on long-term monitoring of teachers’ technology integration efforts. Although these initiatives have demonstrated a positive impact on teachers’ attitudes toward ICT and its integration, they are costly in time and human resources (e.g., in SFODEM, a group of researchers and teacher educators worked with small groups of teachers for 6 years, alternating face-to-face meetings with distance phases).

Another means of supporting ICT integration is to provide teachers with ready-made pedagogical resources, notably over the Internet. This is the purpose of learning object repositories (cf. Sect. 2.2). However, the availability of resources is not sufficient, as pointed out by Robertson (2006):

“Finding useful and credible resources, educational content of good quality and regularly updated very often comes close to being a utopia. Indeed, the user often faces a lack of support in searching (metadata versus keywords), a need of confidence regarding the quality of information. […] we would claim that appropriation by teachers of pedagogical and didactical materials, favouring students’ learning and complementary to printed resources (school textbooks in particular) seems still difficult, although technologies are available in schools since about twenty years.” (p. 13, authors’ translation).

Robertson stresses three main obstacles: (1) the difficulty of finding resources suited to a specific educational context, due to the lack of metadata accurately describing the resource content, (2) the lack of a quality guarantee for available resources, which makes it difficult for teachers to assess the adequacy of a resource to their specific context and its possible effects on students’ learning, and (3) the difficulty of resource appropriation by teachers, which is deemed one of the main impediments to the exploitation of existing resources.

This paper explores yet another way to stimulate ICT integration in teaching practices across Europe, focusing on dynamic geometry systems (DGS) and tackling the above-mentioned difficulties. It is based on the idea of involving teachers in the quality assessment of existing dynamic geometry (DG) resources.

This idea underlies the design and implementation of the quality assessment process in the i2geo platform (Footnote 1), an online repository gathering pedagogical material for the use of dynamic geometry in mathematics teaching and learning, developed in the framework of the Intergeo European project (Footnote 2). Intergeo addresses three main issues: (1) interoperability of the main existing DGS, i.e., the possibility of using, in any DGS, a file created with another one, thanks to a common file format based on open standards, (2) sharing pedagogical resources well described by a set of international metadata (Kortenkamp et al. 2009), and (3) a quality assessment process for dynamic geometry resources.

This article focuses on the design and development of the dynamic geometry resource quality assessment process for the i2geo platform. In Sect. 2, we make explicit the meanings of the core notions used in the paper, such as resource and quality evaluation. Section 3 describes the design of the quality assessment process and its theoretical background. The design followed a cyclical process: a questionnaire, the main tool for resource quality assessment, was developed and tested with teachers, and the test results led to refined versions of the questionnaire. Some of these experiments are reported in Sect. 4, followed by concluding remarks and perspectives for further research.

2 Resources and their quality

In this section, we present a literature review that serves as groundwork for the definitions of the core notions used in this paper: resource and resource quality.

2.1 Resources or learning objects?

Different expressions are used in the literature when addressing the issue of learning material for students, teachers or teacher educators, particularly in the context of its increasing availability over the Internet. One can thus encounter the terms “instructional components”, “learning objects”, “knowledge elements”, “pedagogical resources”, etc. In what follows, we focus on the terms “resource” and “learning object”, which have attracted particular interest in the mathematics education and instructional design communities and have therefore received more elaborate conceptualizations within specific theoretical approaches.

The concept of learning object emerged in the context of e-learning, drawing on the “object-oriented paradigm of computer science from the 60s that highly values the creation of components that can be reused” (Del Moral & Cernea 2005, p. 1). Although there is no consensus on the definition of a learning object, reusability seems to be its foremost feature. A learning object is thus defined as “any digital resource that can be reused to support learning” (Wiley 2000, p. 7). Metros (2005) stresses that a digital resource (e.g., a simulation, a video or audio snippet, a photo, a web page combining various media), although more or less related to learning, is not necessarily a learning object. What makes a resource a learning object is the presence of an instructional goal, in terms of knowledge or skills to be developed, as well as of a task to be accomplished. The literature suggests that learning objects should be searchable, granular, reusable, independent of the context of use and shareable, characteristics which make them key resources for educators.

The key role of resources in mathematics teachers’ practice has been widely recognized (Adler 2000; Gueudet & Trouche 2009). As with learning objects, there is no clear definition of the term “resource”. Some authors propose specific conceptualizations of the term. Adler (2000) suggests using the term “resource” beyond its common sense of a material object and thinking of it as “the verb ‘re-source’, to source again or differently”. The concept of resource also appears at the core of a recent theoretical approach, namely the documentational approach of didactics (Gueudet & Trouche 2009). In this perspective, which extends the instrumental approach (Rabardel 2002), a resource (or a set of resources) is considered as an artifact that has to be transformed into an instrument (or a document) by a teacher (Footnote 3) in a process that includes its use in a classroom.

In conclusion, although the terms resource and learning object may appear synonymous at first sight, assigning similar characteristics to teaching material, a few significant differences can be highlighted. First, the term resource has a broader meaning that goes beyond a material object (e.g., verbal interactions with colleagues or students’ productions may be considered as resources for a teacher’s activity). Furthermore, the conceptualization presented above confers an evolving character on resources; they are considered as living entities in continuous transformation through their usages. Finally, the most important difference lies in the fact that while learning objects are independent of the context of use, resources cannot be envisaged without their intended or effective usages. This view significantly influences our conceptualization of the i2geo platform content and its quality, as we explain below.

2.1.1 Resources of the i2geo platform

The platform does not impose constraints on the characteristics of uploaded content and welcomes all kinds of teaching material related to the use of dynamic geometry. As mentioned above, successful ICT integration presupposes using new tasks adapted to the technology. Since designing a new task is a complex process, teachers rather adapt existing ones. For this reason, mathematical tasks involving dynamic geometry, with an explicit educational goal and possibly with documentation for teachers, are the privileged kind of pedagogical material shared on the platform. These tasks are meant to be used by teachers, who will need to adapt them to their particular classroom contexts. Teachers will then be able to report on the learning outcomes and to suggest modifications leading to the improvement of the tasks or of the accompanying documentation. This is in line with the conceptualization of resources developed by Gueudet and Trouche (2009).

We will therefore use the term resource rather than learning object to refer to this particular kind of pedagogical material on the i2geo platform, in order to emphasize its foremost characteristics: its link with an intended use and its evolving character.

2.2 Quality of resources and its evaluation

The issue of resource quality has become highly important in the context of the rapid development of technologies and digital resources, as shown by the number of research studies devoted to it. This section draws on some of them.

Some of these studies address the issue of quality in the context of e-learning, i.e., online distance learning. They usually adopt a learner-centered perspective, so quality indicators are related to learners’ satisfaction with the e-learning content and to their learning. For example, the e-Quality project (Montalvo 2005) proposes a conceptual model of the quality of learning material in higher education from the students’ perspective, considering the following aspects as relevant quality indicators: presentation, relation between learning objectives and content, content, teaching and learning process, evaluation, links with other materials and technical aspects.

Other studies investigate the quality of resources collected within dedicated learning object repositories intended for both teachers and learners. Some of these repositories propose quality criteria that authors who wish to deposit a resource have to satisfy. For example, the JEM repository (Footnote 4) has elaborated quality guidelines proposing the following criteria: “educational quality of content”, deemed the most important characteristic of resources, “presentation of the content”, “technological quality” in terms of usability and portability of resources, “metadata accuracy”, and “availability of case studies” providing feedback from other users (Caprotti & Seppälä 2007).

Other repositories are more open and welcome resources without any a priori evaluation, relying instead on evaluation by peers. For example, quality evaluation in MERLOT (Footnote 5) follows three criteria: content quality, potential effectiveness as a teaching tool, and ease of use for both students and faculty.

Regarding the evaluation processes set up by various learning object repositories, Mahé and Noël (2006) have elaborated a typology of resource evaluation: (1) a priori evaluation by the repository owner; (2) validation of the conformity of the resource to the deposited content; (3) peer-review evaluation; (4) user (Footnote 6) evaluation; (5) cross-evaluation by both peers and users. Williams (2000, p. 16) stresses the importance of user evaluation of resources:

“what constitutes an actual learning object as part of instruction in a given context must be defined by the users of that learning object for it to have useful meaning for those users. Likewise, those same users need to be involved in the evaluation of the learning objects in the context of the instruction in which they learn from those objects.”

To sum up, the issue of resource quality evaluation turns out to be quite complex. There is no general model describing the quality of a resource. The criteria suggested by various researchers are rather ad hoc, depending on the nature of the resources (e.g., online learning courses, resources for educators, …). They also depend on the purpose of the quality evaluation (e.g., measuring students’ satisfaction with learning material, providing authors of resources with guidelines, …).

2.2.1 Quality of dynamic geometry resources

How can we characterize the quality of dynamic geometry resources in the i2geo platform? Drawing on previous research studies related to the quality issue, as well as on our epistemological position, which closely links a resource with its usages, we propose nine dimensions contributing to resource quality.

First, we consider content and technical aspects to be the most important characteristics of a resource. The content dimension covers not only the mathematical validity of the content, in line with the above-mentioned quality studies, but also its consistency with institutional expectations (Chevallard 1992). The technical dimension rates how well the resource can be exploited technically. Since the resource involves a DGS, two other dimensions have to be taken into account: the instrumental aspect and the dynamic geometry added-value. The former pertains to the coherence of the figures with the mathematical task at stake. Regarding the latter, the resource should take advantage of dynamic geometry potentialities (Artigue 2002; Laborde 2008) and propose tasks adapted to this technology (these aspects are further developed in Sect. 3.2). Since a resource is considered closely linked with its use, its didactical and pedagogical implementations constitute two further dimensions contributing to its quality, in line with the European Foundation for Quality in eLearning (EFQUEL, Footnote 7):

“In reality the quality of the resource only has any real mean when considered in context, i.e. in the situation where a resource is employed in a specific context through a specific learner, or teacher. Quality in such an understanding is constituted as a relation between a specific resource […] and the way it is used, perceived and valued through interaction in an educational context.”

Identifying the students’ prerequisites, both mathematical and technical, required for the resource to be used in a classroom is also essential to make sure that students can carry out the activity. Similarly, the learning outcomes of the resource should be likely to ground further learning. These two criteria rate the potential for integrating the resource into an ordinary teaching process, which is another dimension contributing to its quality. The last two dimensions concern ergonomics, rating the presentation and adaptability of the resource, and metadata accuracy, which facilitates resource searchability. This view of dynamic geometry resource quality has grounded the development of the Intergeo quality assessment process presented in the following section.

3 The i2geo quality assessment process

The choice of an open platform in Intergeo, where any user can contribute resources, is a way to overcome the scattering of resources and to rapidly build a large and rich collection (more than 3,300 resources in January 2011). But this choice also results in a very heterogeneous and unorganized collection of resources. Such a collection requires means not only to help users find resources that fit their aims and contexts of use, but also to frame the process of resource improvement. The quality assessment process described below has been developed and implemented with this aim in view.

The aim of the quality assessment process is to propose a shared vision of dynamic geometry resource quality and to frame the process of resource evaluation by the platform users leading to the improvement of resources in accordance with this vision.

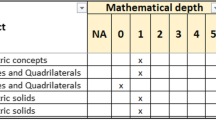

The main tool for resource quality assessment on the platform is a questionnaire organized around the nine above-mentioned dimensions. It is presented as a list of nine general items, one per dimension. Each item can be expanded into a set of criteria (Fig. 1), allowing a more detailed analysis of the corresponding dimension of the resource.

General items and associated criteria are formulated as statements to which reviewers respond by expressing their level of agreement on a four-level scale. These quantitative answers can be complemented by qualitative comments, which are crucial for resource improvement. Indeed, comments can contain criticism and advice enabling the resource author, or any other user, to subsequently improve the resource along its weaker dimensions, which is the main purpose of the quality assessment process. The process relies on analyses carried out both before and after a resource is tested in a classroom.
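To make the structure of a review more concrete, the following Python sketch models answers to the questionnaire: each answer pairs a statement (a general item or a detailed criterion) with a level on the four-level agreement scale and an optional comment. It is an illustration under stated assumptions, not the i2geo implementation: the level names, the short labels for the nine dimensions, and the rule that treats the two lower levels as marking a “weaker dimension” are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import IntEnum
from typing import Optional


class Agreement(IntEnum):
    """Four-level agreement scale (level names are hypothetical)."""
    STRONGLY_DISAGREE = 1
    DISAGREE = 2
    AGREE = 3
    STRONGLY_AGREE = 4


# Short labels for the nine quality dimensions (paraphrased, hypothetical).
DIMENSIONS = [
    "content",
    "technical",
    "instrumental",
    "dynamic geometry added-value",
    "didactical implementation",
    "pedagogical implementation",
    "integration into the teaching process",
    "ergonomics",
    "metadata",
]


@dataclass
class Answer:
    dimension: str                 # one of DIMENSIONS
    statement: str                 # general item or detailed criterion
    level: Agreement               # quantitative answer
    comment: Optional[str] = None  # qualitative comment guiding improvement


def weaker_dimensions(answers: list[Answer]) -> list[str]:
    """Dimensions with at least one answer on the two lower levels
    (a hypothetical rule), listed in questionnaire order."""
    weak = {a.dimension for a in answers if a.level <= Agreement.DISAGREE}
    return [d for d in DIMENSIONS if d in weak]


answers = [
    Answer("instrumental", "The figure shows no ill effect", Agreement.DISAGREE,
           "Rounded lengths hide unequal adjacent sides."),
    Answer("dynamic geometry added-value",
           "Dynamic geometry helps the user to explore, experiment and conjecture",
           Agreement.STRONGLY_AGREE),
]
print(weaker_dimensions(answers))  # ['instrumental']
```

Keeping the comment next to each quantitative answer reflects the text's point that comments, rather than levels alone, are what allow an author to improve a weak dimension.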

The quality assessment process involves all logged-in users of the platform, be they teachers, researchers or teacher educators. It combines peer and user reviews, which is rare in learning object repositories (cf. Sect. 2.2). The choice of a single questionnaire for all platform users was motivated by two reasons. First, the Intergeo project intends to develop a community of dynamic geometry users (teachers, software designers, teacher educators, researchers, …) all over Europe, and the platform is a means to support it. Tools shared by this community have to be designed to favor its development and cohesion. The quality questionnaire can belong to the shared repertoire of this community, providing reification mechanisms to express quality evaluations. Second, the questionnaire is also a means of leading teachers who use the platform and review resources to reflect on quality criteria and on various ways of using dynamic geometry in a classroom (Trgalová et al. 2009). Section 4 provides some evidence that the questionnaire can thus become a tool for teachers’ professional development.

In the following sections, we return to the design of the questionnaire that supports the resource quality assessment process and present the design methodology and the theoretical assumptions that have driven it.

3.1 Design methodology of the questionnaire

Considering the importance of interactions between the design of a tool and its usages (Rabardel 2002), the questionnaire has been elaborated in a cyclical process combining the design of successive versions and their subsequent tests with teachers, leading to progressive refinements of the tool. Moreover, the design of the questionnaire has been carried out in close collaboration between math education researchers and a group of experienced teachers (Footnote 8). This was a key feature of the design methodology, ensuring that the formulations of items and criteria are accessible to ordinary teachers.

The first step in elaborating the questionnaire consisted in listing various characteristics or elements of a dynamic geometry resource related to the nine dimensions (cf. Sect. 2.2) that we consider relevant indicators of resource quality, drawing on our theoretical and epistemological positions. These characteristics (around 60) have been grouped into classes corresponding to these dimensions, each class giving rise to the associated general item (Fig. 1).

The following section provides details of the design of some parts of the questionnaire as well as the theoretical considerations underpinning the design.

3.2 Theoretical underpinning of some items of the questionnaire

While designing the questionnaire, we tried to meet the challenge of drawing on research results related to the use of dynamic geometry in mathematics education and making them accessible to teachers. In what follows, we provide the rationale for the criteria referring to the instrumental dimension, the dynamic geometry added-value, and the didactical and pedagogical implementations.

3.2.1 Instrumental dimension of the resource content

Research studies focusing on students’ learning with ICT highlight that the conceptualization of mathematical notions is intertwined with students’ interactions with a learning environment (Artigue 2002). More precisely, the way a student uses the affordances of the environment and faces its constraints shapes her/his conceptualization of the objects s/he manipulates. Moreover, s/he does not build technological skills (how to use the tool) independently of mathematical ones (how to solve the mathematical task); the two aspects of the activity shape each other. Consider the following dynamic geometry example (Fig. 2): ABCD is a parallelogram, O is the intersection of its diagonals and AODE is another parallelogram. The fact that only three vertices of the parallelogram ABCD can be moved is not a mathematical result, but rather a constraint of the dynamic geometry figure that the student will experience when dragging the vertices around.

The interaction with the DG figure may produce phenomena that contradict the learning goal (Fig. 2, right). The segments (AO) and (OD) are both parts of the diagonals of ABCD and sides of AODE. Thus, the properties of the sides of AODE can be inferred from the properties of the diagonals of ABCD. While exploring the figure by dragging the vertices of ABCD, a student can easily obtain a parallelogram ABCD whose two adjacent sides are displayed as equal (which would make ABCD a rhombus) but whose diagonals are not perpendicular, which seemingly contradicts the mathematical theory. This contradiction is explained by the choice of displaying length and angle measurements rounded to the nearest integer. However, when analyzing a resource, it is necessary to consider the learning goal. Obviously, this phenomenon would not be dealt with in the same way if the learning goal were to discuss the issue of exact and approximate values. This shows that the quality of a resource cannot be considered independently of the context of its usage, as explained in Sect. 2.2.
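The rounding phenomenon can be reproduced with a little arithmetic. In a parallelogram with side vectors u = AB and v = AD, the diagonals are u + v and v − u, whose dot product is |v|² − |u|², so they are perpendicular exactly when the two adjacent sides are equal. The Python sketch below assumes sides of 4.6 and 5.4 units and a 70° angle at A (hypothetical values, not taken from Fig. 2); the function name is illustrative, and Python’s built-in `round` stands in for the display rounding, which an actual DGS may implement differently.

```python
from __future__ import annotations

import math


def displayed_measures(ab: float, ad: float, angle_a_deg: float) -> tuple[int, int, int]:
    """Return AB, AD and the angle between the diagonals of parallelogram ABCD,
    each rounded to the nearest integer as a hypothetical display would show them."""
    t = math.radians(angle_a_deg)
    u = (ab, 0.0)                              # vector AB
    v = (ad * math.cos(t), ad * math.sin(t))   # vector AD
    ac = (u[0] + v[0], u[1] + v[1])            # diagonal AC = AB + AD
    bd = (v[0] - u[0], v[1] - u[1])            # diagonal BD = AD - AB
    dot = ac[0] * bd[0] + ac[1] * bd[1]        # equals ad**2 - ab**2
    cos_theta = dot / (math.hypot(*ac) * math.hypot(*bd))
    theta = math.degrees(math.acos(cos_theta))
    return round(ab), round(ad), round(theta)


# Both sides are shown as 5, yet the diagonal angle is not shown as 90.
print(displayed_measures(4.6, 5.4, 70.0))
```

Here the difference |v|² − |u|² = 8 is invisible in the displayed lengths but clearly visible in the displayed angle, which is the kind of figure behavior a reviewer would need to notice and relate to the learning goal.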

The example above also shows that, when a resource includes a dynamic geometry file, it is necessary to check the coherence between the proposed task, the intended learning and the dynamic figure. For this reason, we propose the following criterion related to the instrumental dimension of the resource: “The figure behaves consistently with the activity”. In addition, the figure should behave as expected: “The figure shows no ill effect”. Indeed, if the figure does not behave in accordance with mathematical theory and didactical goals, the teacher should be warned. These criteria aim at helping the author or the user of a resource to become aware of such problems and possibly to improve the resource.

3.2.2 Dynamic geometry added-value

Numerous research studies on dynamic geometry put forward its potentialities and the diversity of its contributions to the learning of geometry (Laborde 2001) and proof (Mariotti 2000). Criteria related to this aspect aim first at evaluating how these potentialities are exploited, and more specifically to what extent dynamic geometry improves learning activities compared with the paper–pencil environment. Our main assumption is that new tasks have to be designed in order to take advantage of the learning potential of technologies (Laborde 2001; Artigue 2010). The criterion “The activity cannot be transposed to paper and pencil” addresses this aspect. The following ones address specific features of dynamic geometry offering an added value to the resource: “Dynamic geometry leads to understand geometrical relations rather than numerical values”, “Dynamic geometry helps the user to explore, experiment and conjecture”. Other criteria focus on the role of the drag mode. Different purposes of the drag mode, leading to different mathematical conceptualizations, have been identified (Restrepo 2010). Figure 3 shows a specific role of dragging, leading to soft constructions (Healy 2000), and its use to learn the distinction between the hypothesis and the conclusion of a theorem (Gousseau-Coutat 2005). In this example, a quadrilateral ABCD is drawn, together with the midpoint M of the diagonal [AC] and the midpoint N of the diagonal [BD] (Fig. 3a). A student is asked to drag the vertices of ABCD so that the points M and N coincide and to notice what happens to ABCD. The resulting parallelogram ABCD (Fig. 3b) is a soft construction because one of its constraints (its diagonals have the same midpoint) is fulfilled only temporarily (not always) and locally (for some positions of the vertices of ABCD). This constraint, standing for the hypothesis of a theorem, is materialized by the student’s action, while the consequence (ABCD being a parallelogram), standing for the conclusion of the theorem, occurs independently of her/his will. Through interactions with the figure, the student experiences the asymmetry between the hypothesis and the conclusion of a theorem.
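The logic of this soft construction can be sketched in Python under simplifying assumptions: A, B and C are fixed at integer coordinates (hypothetical values), and dragging D is approximated by scanning a finite grid of positions. The hypothesis M = N is tested as A + C = B + D (doubling both midpoints keeps the arithmetic exact), and the conclusion as the vector equality AB = DC. All function names are illustrative; the sketch only mirrors the temporary and local character of the constraint, not how a DGS implements dragging.

```python
from itertools import product
from typing import List, Tuple

Point = Tuple[int, int]


def midpoints_coincide(A: Point, B: Point, C: Point, D: Point) -> bool:
    """Hypothesis: midpoint M of [AC] equals midpoint N of [BD].
    Compared as A + C == B + D to stay in exact integer arithmetic."""
    return (A[0] + C[0], A[1] + C[1]) == (B[0] + D[0], B[1] + D[1])


def is_parallelogram(A: Point, B: Point, C: Point, D: Point) -> bool:
    """Conclusion: vector AB equals vector DC."""
    return (B[0] - A[0], B[1] - A[1]) == (C[0] - D[0], C[1] - D[1])


def drag_D(A: Point, B: Point, C: Point, grid=range(-5, 6)) -> List[Tuple[Point, bool]]:
    """Crude stand-in for dragging D: scan grid positions with A, B, C fixed and
    report where the hypothesis holds, together with whether the conclusion holds."""
    return [(D, is_parallelogram(A, B, C, D))
            for D in product(grid, repeat=2)
            if midpoints_coincide(A, B, C, D)]


print(drag_D((0, 0), (3, 1), (4, 3)))  # [((1, 2), True)]
```

Only D = A + C − B = (1, 2) satisfies the hypothesis, and there the conclusion holds as a consequence: the student produces the hypothesis by dragging, while the parallelogram appears without being aimed at directly.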

Thus, the criterion “Dragging is used to explore validity conditions of a theorem” refers to this specific use of the drag mode in soft constructions.

Clearly, a resource cannot benefit from all dynamic geometry potentialities. Nevertheless, we consider a resource that takes no advantage of dynamic geometry to be of rather poor quality. Moreover, since teachers mostly use dynamic geometry only to illustrate invariant properties (Ruthven 2005), the proposed criteria aim to make teachers acquainted with, and reflect on, other possible ways of using it.

3.2.3 Didactical implementation of the resource

Criteria associated with this aspect draw mainly on the theory of didactical situations (Brousseau 1997), which offers tools for analyzing students’ activity and the teacher’s role, and on the instrumental approach (Rabardel 2002), which provides a framework for analyzing students’ instrumented activity.

The notion of feedback provided by the “milieu” is a key idea of the theory of didactical situations. It derives from the assumption that learning results from the interaction between a learner and a “milieu”, knowledge being a characterization of the equilibrium of the system [learner ↔ milieu]. The “milieu” is defined as the material and non-material organization of a task that potentially reacts, on a mathematical level, to the decisions and actions of the learner. As the learner’s counterpart, the “milieu” is also defined by the learner’s level of knowledge, i.e., its feedback exists only if the learner is able to perceive it and give it some mathematical meaning. When a problem is solved with dynamic geometry, the software is part of the “milieu” (Laborde & Capponi 1994), because it reacts to the learner’s constructions and dragging. Thanks to relevant feedback, the learner can (in)validate her/his strategy independently of the teacher’s evaluation. Therefore, when teachers prepare to use a resource in their classrooms, they need to be aware of what can happen during the interactions between the students and the “milieu”. The notion of feedback underpins criteria such as “Feedback provided by the software and useful in the activity is discussed” or “Feedback provided by the software helps students in the problem solving activity”. Likewise, the notions of devolution and institutionalization (Brousseau 1997) underlie the items “Hints are given on ways to make students start solving the activity” and “Advices are given to determine how and when to synthesize findings”.

3.2.4 Pedagogical implementation of the resource

Trouche and Drijvers (2010) stress the fundamental role of the teacher in the successful integration of ICT. They propose a theoretical tool for analyzing the teacher’s activity, called instrumental orchestration and defined as

“the teacher’s intentional and systematic organization and use of the various artifacts available in a learning environment in a given mathematical task situation, in order to guide students’ instrumental genesis”.

One element of an instrumental orchestration is the didactical configuration, i.e., “a configuration of the teaching setting and the artifacts involved in it” (Drijvers et al. 2010). From the orchestration perspective, a quality resource should provide the teacher with information on classroom orchestration, suggesting didactical configurations conducive to the intended learning. The following criteria address this issue: “The possible configurations (one computer per student or projector…) are described” or “A schedule of the activity is proposed”.

3.3 Usage of the questionnaire

The quality criteria in the questionnaire are necessarily heterogeneous with respect to the expertise a user needs to understand them and to provide a sound answer. Users are thus not expected to evaluate all aspects of a resource, but rather to focus on those corresponding to their own expertise and their own representation of what a quality resource is. Given the length of the questionnaire, users are first offered a “light” version focusing on a few general items, one per quality aspect (cf. Sect. 3), rather than the complete version, whose length and complexity might deter them. Using the detailed criteria is left to each user’s discretion: at any time, a reviewer can deepen her/his analysis by answering more precise criteria related to the aspects s/he wishes to analyze further, according to her/his expertise. Moreover, s/he can return to the review repeatedly. By letting users choose freely how they contribute to resource reviewing, we hope to encourage all kinds of users to participate in the quality assessment process and thereby to help improve resources for the benefit of the whole dynamic geometry user community.
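To make the distinction between the light and the complete versions concrete, the following Python sketch models a single review: one general item per dimension, plus optional detailed criteria that a reviewer can add later. It is a minimal illustration under stated assumptions, not the i2geo platform’s actual data model; the `Review` class, the dimension keys, the criterion wording and the 1 to 4 rating scale are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set

Rating = int  # hypothetical scale: 1 (disagree) to 4 (agree)


@dataclass
class Review:
    """One reviewer's answers about one resource (illustrative model, not the i2geo schema)."""

    # One general item per quality dimension: {dimension: rating}.
    general: Dict[str, Rating] = field(default_factory=dict)
    # Optional detailed criteria, grouped by dimension: {dimension: {criterion: rating}}.
    detailed: Dict[str, Dict[str, Rating]] = field(default_factory=dict)
    comment: Optional[str] = None

    def answer_general(self, dimension: str, rating: Rating) -> None:
        """Answer (or revise) the single general item of a dimension."""
        self.general[dimension] = rating

    def deepen(self, dimension: str, criterion: str, rating: Rating) -> None:
        """Answer one detailed criterion; the reviewer may come back and do this at any time."""
        self.detailed.setdefault(dimension, {})[criterion] = rating

    def deepened_dimensions(self) -> Set[str]:
        """Dimensions for which at least one detailed criterion was answered."""
        return {dim for dim, answers in self.detailed.items() if answers}

    def is_light(self) -> bool:
        """A "light" review answers general items only."""
        return not self.deepened_dimensions()


# Usage: a reviewer starts with the light version, then deepens one dimension.
review = Review()
review.answer_general("mathematical_validity", 4)
review.answer_general("dg_added_value", 3)
print(review.is_light())             # True
review.deepen("dg_added_value", "dragging is needed to solve the task", 2)
print(review.is_light())             # False
print(review.deepened_dimensions())  # {'dg_added_value'}
```

Keeping the detailed answers in a separate, initially empty mapping mirrors the idea that deepening is optional and can happen at any later visit without altering the general answers.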

4 Experiments

As explained in Sect. 3.1, the questionnaire framing users’ analysis of resources was elaborated in a cyclical process in which successive versions were designed and then tested with teachers, leading to refinements of the questionnaire. Sect. 4.1 shows the crucial role these experiments played in the design process. Sect. 4.2 briefly reports on a long-term observation of online questionnaire usages.

4.1 Short-term experiments

The purpose of the two experiments reported in this section was twofold: (1) to analyze the relevance and clarity of the questionnaire items by observing teachers’ uses of the questionnaire, and (2) to identify what teachers regard as quality resources, in particular which dimensions of resources inform their selection and/or appropriation of resources for potential use in their classrooms. These experiments involved groups of users with specific profiles (teachers, teacher educators, teachers with different degrees of dynamic geometry integration, …), who were asked to analyze a set of resources carefully chosen with respect to the experimental goals.

4.1.1 First experiment: 22 Brazilian teachers

During this first empirical study, carried out in 2008, some criteria of the initial questionnaire presented in Mercat et al. (2008) were tested within an in-service teacher training course. A few open questions were added to make teachers articulate their views of what makes a good quality dynamic geometry resource and to identify the elements of a resource that they consider helpful for its appropriation and classroom use. This experiment and its methodology are reported elsewhere (Trgalová et al. 2009); here we only recall the main findings that helped us refine the quality criteria.

For the participants in this experiment, the quality of a resource lies in the availability of certain elements and information, such as a brief description of the sequence, considered as the resource’s “visit card”, a synthetic description of the sequence organization (inferred in this case from the student worksheet), and information about the teacher’s orchestration of the classroom (didactical and pedagogical implementation). The teachers analyzed the role of dynamic geometry and its added value in the task in response to the explicit open question asking for a comparison with paper–pencil. They stated that, without this question, they would not have paid attention to this dimension, even though it is essential in a dynamic geometry resource. Thus, the questionnaire helped teachers analyze the resource efficiently and identify the contribution of dynamic geometry to the students’ tasks.

In conclusion, the criteria set up for the questionnaire proved understandable to the teachers. Moreover, they found the dimensions of the resource addressed by the questionnaire important and helpful for analyzing it, which confirmed the choices made in the questionnaire design. The questionnaire appeared both as a tool for highlighting aspects to be improved (e.g., adding missing information about the teacher’s role) and as a possible means of training teachers to examine available resources with a critical mind and to understand their goals and content. Performing such an analysis can therefore contribute to the development of the professional skills teachers need for a sound use of resources.

4.1.2 Second experiment: 6 French teachers

The purpose of this experiment was to test a refined version of the questionnaire, which at that time addressed only the first eight dimensions; the ninth, ergonomic, dimension was added later. Three resources addressing the same mathematical notion (quadrilaterals) but with different characteristics were proposed to the teachers. All three had the same form: a text associated with a dynamic geometry file, the text being addressed to the teacher, the student or both. In addition, they suggested the same type of classroom organization: students solving the proposed tasks in a computer lab. Prior to the experiment, the researcher carried out an “expert” analysis of the resources for comparison with the participants’ responses.

The six French secondary school mathematics teachers involved in this experiment had between 5 and 15 years of professional experience and very heterogeneous levels of ICT integration. The resources were proposed to the teachers according to the following protocol: (1) before the experimental session, each teacher had to become individually acquainted with each resource; (2) during the session, the teachers filled in the questionnaire in pairs; and (3) each teacher had to decide individually whether s/he would use the resources in her/his classroom, explain her/his choice and possibly suggest improvements to the resources. The experimental session lasted approximately 3 h. Working in pairs was meant to favor the exchange and confrontation of different points of view. An observer was present to moderate discussions when appropriate and to help teachers overcome potential ambiguities arising while answering the questionnaire.

The data analysis first showed that some formulations of the quality criteria were ambiguous and, depending on their interpretation, could lead to positive or negative answers for the same resource. This allowed us to significantly improve the wording of the criteria. Regarding the factors determining whether teachers would choose a given resource, it turned out that valid mathematical content is a necessary condition for a resource to be eligible. Moreover, the tasks proposed in the resource must genuinely benefit from dynamic geometry; otherwise the resource is rejected. Teachers who were less familiar with using ICT in the classroom also considered the dimension addressing the didactical implementation of the resource to be one of the most important. These results confirm our choice of dimensions for evaluating resource quality.

The results of this experiment point to another interesting issue: the teacher pairs used the questionnaire itself differently. One pair used it to eliminate less suitable resources. Their analysis, framed by the questionnaire, aimed at highlighting unsatisfactory aspects of the resources. Once these were identified, they stopped, without analyzing the remaining dimensions, which could have constituted strengths of the resources. For example, one of the resources included a dynamic geometry file with an erroneous construction: a quadrilateral supposed to be a parallelogram was in fact just an arbitrary quadrilateral that looked like a parallelogram when the file was opened. This pair found it pointless to continue the analysis after identifying this weakness, namely the erroneous dynamic figure, as they claimed: “the first thing is the figure”. In contrast, the other two pairs provided a detailed analysis of all dimensions of this resource. When asked whether they would choose the resource for a possible implementation in their classrooms, some teachers could envisage using it provided that they improved the corresponding dimension. They seem to have set this inconsistency aside, as they mentioned: “we are going to do as if the figure were correct”. Thus, although all pairs found this weakness unacceptable, some of them suggested improving the resource by “modifying the figure itself so that the parallelogram resists to dragging”.

These results suggest various possible usages of the questionnaire. They correspond to different instrumental geneses leading to the development of different instruments of resource analysis, such as the following:

- focusing on a few essential dimensions, using detailed criteria to analyze these and only general items to evaluate the others;
- starting with a detailed analysis of one important dimension: if it is satisfactory, analyzing another one; if not, stopping the analysis and concluding that the resource is of “poor quality”;
- using only general items to analyze the resource;
- using detailed criteria to perform an in-depth analysis of all resource dimensions.
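The second strategy in the list is essentially an early-stopping procedure. Below is a minimal Python sketch, assuming that the reviewer’s detailed judgment of each dimension can be reduced to a yes/no answer; the function name, dimension labels and verdict strings are hypothetical and do not come from the study.

```python
from typing import Callable, Iterable, List, Tuple


def sequential_screening(
    dimensions: Iterable[str],
    is_satisfactory: Callable[[str], bool],
) -> Tuple[str, List[str]]:
    """Analyze dimensions one at a time, stopping at the first unsatisfactory one.

    dimensions:      dimensions in the reviewer's own order of importance
    is_satisfactory: stands in for the reviewer's detailed analysis of one dimension
    Returns a verdict and the list of dimensions actually analyzed.
    """
    analyzed: List[str] = []
    for dim in dimensions:
        analyzed.append(dim)
        if not is_satisfactory(dim):
            # Stop here: the remaining dimensions are never examined.
            return "poor quality", analyzed
    # This strategy can only conclude "poor quality"; otherwise no weakness was found.
    return "no weakness found", analyzed


# Usage, loosely modeled on the pair who stopped at the erroneous figure.
judgments = {
    "dynamic_figure": False,
    "mathematical_content": True,
    "didactical_implementation": True,
}
verdict, seen = sequential_screening(
    ["dynamic_figure", "mathematical_content", "didactical_implementation"],
    lambda dim: judgments[dim],
)
print(verdict, seen)  # poor quality ['dynamic_figure']
```

The sketch makes the trade-off visible: once a weakness is found, the remaining dimensions, which might be strengths of the resource, are never looked at.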

The way the questionnaire is used (in other words, the nature of the questionnaire as an instrument for resource analysis) is shaped by the teacher’s experience, but the purpose of the analysis also plays an important role in the instrumental genesis. For example, if the analysis aims at improving the resource quality, one would perform a detailed analysis of (almost) all its dimensions to highlight its weaknesses. If the aim is to get a deeper insight into the resource content prior to its implementation in a classroom, one would analyze in detail the dimensions one considers the most important and leave the others aside. If the aim is to choose a resource among a set of similar resources, one would focus the analysis on the important dimensions and reject resources for which any of these dimensions is unsatisfactory.
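The link between the purpose of the analysis and the dimensions examined in detail can be sketched as follows. This is an illustrative Python reading of the paragraph above, not a procedure used in the study; the purpose labels (`"improve"`, `"prepare"`, `"choose"`), the dimension names and the rule that an unjudged dimension counts as unsatisfactory are assumptions.

```python
from typing import Dict, List


def dimensions_to_deepen(purpose: str, all_dims: List[str], important: List[str]) -> List[str]:
    """Dimensions analyzed in detail, depending on the purpose of the analysis."""
    if purpose == "improve":
        # Improving a resource: detailed analysis of (almost) all dimensions.
        return list(all_dims)
    if purpose in ("prepare", "choose"):
        # Preparing a lesson or choosing among resources: important dimensions only.
        return [dim for dim in all_dims if dim in important]
    raise ValueError(f"unknown purpose: {purpose!r}")


def choose_among(resources: Dict[str, Dict[str, bool]], important: List[str]) -> List[str]:
    """Keep resources whose important dimensions are all judged satisfactory.

    A dimension without a judgment counts as unsatisfactory (an assumption).
    """
    return [
        name
        for name, judged in resources.items()
        if all(judged.get(dim, False) for dim in important)
    ]


dims = ["mathematical_validity", "dg_added_value", "didactical", "pedagogical"]
important = ["mathematical_validity", "dg_added_value"]
print(dimensions_to_deepen("prepare", dims, important))  # ['mathematical_validity', 'dg_added_value']

candidates = {
    "A": {"mathematical_validity": True, "dg_added_value": True},
    "B": {"mathematical_validity": True, "dg_added_value": False},
}
print(choose_among(candidates, important))  # ['A']
```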

4.2 Long-term observation of the i2geo platform usages

Although research on the usages of the quality assessment process and its efficiency in terms of resource improvement remains to be carried out, we offer some remarks on the questionnaire usages observed over the one-year period following the implementation of the current version of the online questionnaire in September 2009.

By September 5, 2010, 238 reviews of 139 different resources had been recorded on the platform. Both the light and the more complete versions of the questionnaire have been used: 181 reviews (76%) are based on the light version, i.e., the reviewers only answered general items, while only 55 reviews (23%) provide answers to more precise criteria for at least one dimension of a resource. Most reviews were done without a classroom test; only 13 reviews (5.4%) were completed after the resource had been tested. A qualitative analysis of the reviews reveals four tendencies, corresponding to four different ways of carrying out a review.
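The proportions reported above can be recomputed from the raw counts. A short Python check, using only the figures given in this section:

```python
TOTAL_REVIEWS = 238       # reviews recorded on the platform by September 5, 2010
RESOURCES_REVIEWED = 139  # distinct resources covered by these reviews

counts = {
    "light version only": 181,
    "detailed criteria for at least one dimension": 55,
    "completed after a classroom test": 13,
}


def share(n: int, total: int = TOTAL_REVIEWS) -> float:
    """Percentage of all reviews, rounded to one decimal place."""
    return round(100 * n / total, 1)


for label, n in counts.items():
    print(f"{label}: {n}/{TOTAL_REVIEWS} = {share(n)}%")

print(f"average reviews per reviewed resource: {TOTAL_REVIEWS / RESOURCES_REVIEWED:.1f}")
```

Rounded to one decimal, the shares are 76.1%, 23.1% and 5.5%; the 5.4% in the text corresponds to the same ratio (13/238 ≈ 5.46%) truncated rather than rounded. The two main categories add up to 236 reviews, so two of the 238 reviews are not covered by either description, and each reviewed resource received about 1.7 reviews on average.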

A small proportion of the reviews (around 10%) could be called “expert” reviews (Fig. 4). They are based on the complete version of the questionnaire and provide answers to (almost) all detailed items, some qualitative comments and sometimes even feedback from a classroom test. Such reviews are done mostly by teachers who collaborate closely with the Intergeo consortium and associate partners and who are deeply involved in the review process. They are highly valuable for improving resources, since they highlight both strengths and weaknesses.

A “fan” review consists of positive answers to most of the nine general items, without consideration of the more detailed criteria (Fig. 5). Sometimes a highly positive general comment is also provided. In this case, the user seems to have a general feeling of satisfaction about the resource under review: s/he likes it and wishes to promote it, without explaining why s/he considers it interesting or useful. Surprisingly, 74.6% of all reviews based on the light version of the questionnaire are of this type.

A “partially expert” review provides detailed answers for some dimensions of a resource, the other dimensions being either left aside or evaluated only with the general items (Fig. 6). Such reviews are as valuable as “expert” reviews, since the dimensions analyzed in more detail are those the reviewers consider weak points of the resource, which yields suggestions for improving them. However, contrary to our expectations (cf. Sect. 3.3), they are quite rare: 30 reviews, i.e., 12.6%.

Finally, “global” reviews are based on the light version of the questionnaire but, unlike “fan” reviews, they point out weaknesses of a resource by means of both quantitative answers to general items and constructive qualitative global comments (Fig. 7). Forty-six reviews (19.3%) are of this type.
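The four review types can be read as a rough decision procedure over what a review contains. The Python sketch below is a heuristic interpretation, not the classification method used in the study, which was qualitative; the thresholds, the rating scale and the `"unclassified"` fallback are assumptions. It also cross-checks the reported figures under the assumption that the four types partition the reviews, so the fan and expert counts it prints are derived, not reported.

```python
from typing import Dict, Optional

N_DIMENSIONS = 9  # the current questionnaire has nine quality dimensions


def classify_review(
    general: Dict[str, int],
    n_deepened: int,
    comment: Optional[str] = None,
    positive_from: int = 3,
) -> str:
    """Heuristic reading of the four review types; every threshold is an assumption.

    general:       rating per answered general item (hypothetical 1-4 scale)
    n_deepened:    number of dimensions with answered detailed criteria
    comment:       free-text comment, if any
    positive_from: lowest rating counted as a positive answer
    """
    if n_deepened >= N_DIMENSIONS - 1:
        return "expert"  # (almost) all dimensions analyzed in detail
    if n_deepened > 0:
        return "partially expert"  # only some dimensions analyzed in detail
    negatives = sum(1 for r in general.values() if r < positive_from)
    positives = len(general) - negatives
    if negatives > 0 and comment:
        return "global"  # light version, weaknesses pointed out and commented
    if positives > len(general) / 2:
        return "fan"  # light version, mostly positive answers
    return "unclassified"


# Cross-check of the reported counts, assuming light reviews are either "fan"
# or "global", and detailed ones either "expert" or "partially expert".
total, light, detailed = 238, 181, 55
global_reviews, partially_expert = 46, 30
fan = light - global_reviews
expert = detailed - partially_expert
print(fan, round(100 * fan / light, 1))        # 135 74.6
print(expert, round(100 * expert / total, 1))  # 25 10.5
```

Under that partition assumption, the derived figures agree with the text: 135 fan reviews are 74.6% of the light-version reviews, and 25 expert reviews are about 10.5% of all reviews, in line with the “around 10%” reported.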

The analysis of reviews presented above shows that the questionnaire is used in various ways. In terms of the instrumental approach, users develop different instruments for resource analysis from the questionnaire-artifact. Clearly, some of these instruments are more efficient and more helpful for improving resources than others. This raises the question of how to support reviewers so that they contribute not only to ranking resources but also to improving them, by helping them identify weak or problematic points. The experience of other learning object repositories suggests that guidelines for contributors, both authors and reviewers, may be helpful. Such guidelines make explicit the aims of the assessment process, which contributors need to know in order to adhere to its underlying ideas. These considerations raise the issue of building a community of practice around the i2geo platform (Wenger 1998).

5 Conclusion

The purpose of this paper was to report on the design and development of the i2geo quality assessment process for dynamic geometry resources.

The quality assessment process aims at enabling any platform user to review and comment on any resource available on the platform. It is built around a questionnaire that frames the analysis of a resource along various dimensions. The quality criteria for a dynamic geometry resource proposed in the questionnaire draw on the results of research studies on teaching and learning mathematics with ICT, and more specifically with dynamic geometry. In order to confront the research-based perspective on resource quality with that of teachers, the design of the questionnaire included test phases with in-service teachers and teacher educators, allowing for successive refinements of the quality criteria and their wording.

Empirical studies carried out with teachers have produced many significant results. As regards the questionnaire, teachers found the items and criteria accessible and useful for getting a deeper insight into a resource. It also appeared that, while some dimensions of a resource are considered crucial by the majority of teachers (mathematical validity, the instrumental dimension and the added value of dynamic geometry), others are assigned more or less importance depending on the teachers’ expertise. Overall, we observed a fairly substantial convergence between the teachers’ vision of resource quality and ours.

The outcomes of the experiments tend to show that the questionnaire may play a role in teachers’ professional development. For example, analyzing a resource involves an explicit check, framed by the questionnaire, of the added value of dynamic geometry compared with paper–pencil and of the role of the drag mode in the proposed tasks. Such checking is a professional skill needed for an efficient use of dynamic geometry, and the results of the experiments tend to confirm that using the questionnaire helps teachers develop it.

Various usages of the questionnaire have been identified, revealing different instrumental geneses shaped both by the teachers’ experience and by the purposes of the resource analysis. This result raises the issue of monitoring and supporting users’ instrumental geneses related to the use of the questionnaire and to their work with the resources available on the platform. Further studies are also needed to explore to what extent the reviews are actually taken into account by resource authors or other users, and to track the evolution of the reviewed resources.

Notes

This applies to any resource user (student, teacher, …). However, since the target audience of the Intergeo project is mathematics teachers, they are referred to as the principal users of i2geo resources.

“Users” here refer to the population for whom the repository was designed, be they teachers, teacher educators, researchers or students.

These teachers are associated with the National Institute for Pedagogical Research (INRP), Lyon, France, which was an associate partner of the Intergeo project.

By the end of January 2011, the number of resource reviews had more than doubled (695 reviews recorded on the platform), and the number of available resources had increased from around 2,600 to more than 3,300. This considerable increase in resources and reviews shows that the user community has remained active even after the end of the project.

References

Adler, J. (2000). Conceptualising resources as a theme for teacher education. Journal of Mathematics Teacher Education, 3, 205–224.

Artigue, M. (2002). Learning mathematics in a CAS environment: The genesis of a reflection about instrumentation and the dialectics between technical and conceptual work. International Journal of Computers for Mathematical Learning, 7, 245–274.

Artigue, M. (2010). The future of teaching and learning of mathematics with digital technologies. In C. Hoyles & J. B. Lagrange (Eds.), Digital technologies and mathematics. Teaching and learning: Rethinking the domain. Proceedings of the 17th ICMI Study (pp. 463–475). Hanoi 2006.

Assude, T., & Gélis, J.-M. (2002). La dialectique ancien-nouveau dans l’intégration de Cabri-géomètre à l’école primaire. Educational Studies in Mathematics, 50, 259–287.

Brousseau, G. (1997). Theory of didactical situations in mathematics. Dordrecht: Kluwer Academic Publishers.

Caprotti, O., & Seppälä, M. (2007). Evaluation criteria for eContent quality. JEM-Joining Educational Mathematics ECP-2005-EDU-038208, Deliverable D2.1. Retrieved August 31, 2010 from http://www.jem-thematic.net/.

Chevallard, Y. (1992). Concepts fondamentaux de la didactique: Perspectives apportées par une approche anthropologique. Recherches en Didactique des Mathématiques, 12(1), 77–111.

Del Moral, M. E., & Cernea, D. A. (2005). Design and evaluate learning objects in the new framework of the semantic web. Recent research developments in learning technologies. FORMATEX 2005. Retrieved August 30, 2010 from http://www.formatex.org/micte2005/357.pdf.

Drijvers, P., Doorman, M., Boon, P., Reed, H., & Gravemeijer, K. (2010). The teacher and the tool: Instrumental orchestrations in the technology-rich mathematics classroom. Educational Studies in Mathematics, 75, 213–234.

Fuglestad, A. B., Healy, L., Kynigos, C., & Monaghan, J. (2010). Working with teachers: Context and culture. In C. Hoyles & J. B. Lagrange (Eds.), Digital technologies and mathematics. Teaching and learning: Rethinking the domain. Proceedings of the 17th ICMI Study (pp. 293–310). Hanoi 2006.

Goos, M., & Soury-Lavergne, S. (2010). Teachers and teaching: Theoretical perspectives and classroom implementation. In C. Hoyles & J. B. Lagrange (Eds.), Digital technologies and mathematics. Teaching and learning: Rethinking the domain. Proceedings of the 17th ICMI Study (pp. 311–328). Hanoi 2006.

Gousseau-Coutat, S. (2005). Connaître et reconnaître les théorèmes de la géométrie. Petit x, 67, 12–32.

Gueudet, G., Soury-Lavergne, S., & Trouche, L. (2009). Soutenir l’intégration des TICE: quels assistants méthodologiques pour le développement de la documentation collective des professeurs? In C. Ouvrier-Buffet & M.-J. Perrin-Glorian (Dir.), Approches plurielles en didactique des mathématiques (pp. 161–173). Paris: LDAR.

Gueudet, G., & Trouche, L. (2009). Towards new documentation systems for mathematics teachers? Educational Studies in Mathematics, 71(3), 199–218.

Guin, D., Joab, M., & Trouche, L. (Dir.). (2008). Conception collaborative de ressources pour l’enseignement des mathématiques, l’expérience du SFoDEM (2000–2006), cédérom, INRP et IREM (Université Montpellier 2).

Guin, D., & Trouche, L. (1999). The complex process of converting tools into mathematical instruments. The case of calculators. International Journal of Computers for Mathematical Learning, 3(3), 195–227.

Healy, L. (2000). Identifying and explaining geometrical relationship: Interactions with robust and soft Cabri constructions. In T. Nakahara & M. Koyama (Eds.), Proceedings of the 24th PME Conference (pp. 103–117). Hiroshima, Japan.

Healy, L., Jahn, A. P., & Frant, J. B. (2009). Developing cultures of proof practices amongst Brazilian mathematics teachers. In Proceedings of the ICMI Study 19 Conference: Proof and Proving in Mathematics Education (pp. 196–201). Taipei, Taiwan: National Taiwan Normal University, May 2009.

Hoyles, C., & Lagrange, J. B. (Eds.). (2010). Digital technologies and mathematics. Teaching and learning: Rethinking the domain. Proceedings of the 17th ICMI Study. Hanoi 2006.

Kortenkamp, U., Blessing, A. M., Dohrmann, C., Kreis, Y., Libbrecht, P., & Mercat, C. (2009). Interoperable interactive geometry for Europe—First technological and educational results and future challenges of the Intergeo project. In V. Durand-Guerrier, et al. (Eds.), Proceedings of the Sixth CERME Conference (pp. 1150–1160). Jan 28–Feb 1 2009, Lyon, France.

Laborde, C. (2001). Integration of technology in the design of geometry tasks with Cabri-geometry. International Journal of Computers for Mathematical Learning, 6, 283–317.

Laborde, C. (2008). Multiple dimensions involved in the design of tasks taking full advantage of dynamic interactive geometry. In A. P. Canavarro, et al. (Eds.), Tecnologias e Educação Matemática (pp. 29–43). Lisboa: SEM/SPCE.

Laborde, C., & Capponi, B. (1994). Cabri-géomètre constituant d’un milieu pour l’apprentissage de la notion de figure géométrique. Recherches en Didactique des Mathématiques, 14(1–2), 165–210.

Laborde, C., & Sträßer, R. (2010). Place and use of new technology in the teaching of mathematics: ICMI activities in the past 25 years? ZDM—International Journal on Mathematics Education, 42, 121–133.

Little, J. W. (1993). Teachers’ professional development in a climate of educational reform. Educational Evaluation and Policy Analysis, 15(2), 129–151.

Mahé, A., & Noël, E. (2006). Description et évaluation des ressources pédagogiques: Quels modèles? ISDM, 25. Retrieved August 31, 2010 from http://isdm.univ-tln.fr/isdm.html.

Mariotti, M. A. (2000). Introduction to proof: The mediation of a dynamic software environment. Educational Studies in Mathematics, 44, 25–53.

Mercat, C., Soury-Lavergne, S., & Trgalova, J. (2008). Quality assessment. Deliverable no. 6.1, Intergeo Project, March 2008. Retrieved August 31, 2010 from http://i2geo.net/files/deliverables/D6.1.pdf.

Metros, S. E. (2005). Learning objects: A Rose by another name… EDUCAUSE Review, 40(4), 12–13. Retrieved September 15, 2010 from http://www.educause.edu/er.

Monaghan, J. (2004). Teachers’ activities in technology-based mathematics lessons. International Journal of Computers for Mathematical Learning, 9(3), 327–357.

Montalvo, A. (2005). Conceptual model for ODL quality process and evaluation grid, criteria and indicators. e-Quality Project 110231-CP-1-2003-FR-MINERVA-M, Deliverable D2.2. Retrieved August 31, 2010 from http://www.e-quality-eu.org/home.html.

Rabardel, P. (2002). People and technology—a cognitive approach to contemporary instruments. Retrieved August 31, 2010 from http://ergoserv.psy.univ-paris8.fr/.

Restrepo, A. M. (2010). Genèse instrumentale du déplacement en géométrie dynamique chez des élèves de 6ème. Editions Edilivre-Collection universitaire.

Robertson, A. (2006). Introduction aux banques d’objets d’apprentissage en français au Canada. Rapport pour le Réseau d’enseignement francophone à distance du Canada. Retrieved September 15, 2010 from http://www.refad.ca/.

Ruthven, K. (2005). Expanding current practice in using dynamic geometry to teach about angle properties. Micromath, 21(2), 26–30.

Trgalová, J., Jahn, A. P., & Soury-Lavergne, S. (2009). Quality process for dynamic geometry resources: The Intergeo project. In V. Durand-Guerrier, et al. (Eds.), Proceedings of the Sixth CERME Conference (pp. 1161–1170). Jan 28–Feb 1 2009, Lyon, France.

Trouche, L., & Drijvers, P. (2010). Handheld technology for mathematics education, flashback to the future. ZDM—International Journal on Mathematics Education, 42(7), 667–681.

Wenger, E. (1998). Communities of practice: Learning, meaning and identity. Cambridge: Cambridge University Press.

Wiley, D. A. (2000). Connecting learning objects to instructional design theory: A definition, a metaphor, and a taxonomy. In D. A. Wiley (Ed.), The instructional use of learning objects. Retrieved August 30, 2010 from http://reusability.org/read/.

Williams, D. D. (2000). Evaluation of learning objects and instruction using learning objects. In D. A. Wiley (Ed.), The instructional use of learning objects. Retrieved August 31, 2010 from http://reusability.org/read.

Acknowledgments

The authors would like to thank Luc Trouche and Paul Libbrecht for their valuable feedback on the first versions of the paper. The research presented in this paper was funded in part by the European Community within its eContentPlus programme. We are also grateful to CAPES for funding the involvement of one of the authors in this research.


Cite this article

Trgalova, J., Soury-Lavergne, S. & Jahn, A.P. Quality assessment process for dynamic geometry resources in Intergeo project. ZDM Mathematics Education 43, 337–351 (2011). https://doi.org/10.1007/s11858-011-0335-4
