Abstract

Additive manufacturing (AM) has attracted considerable attention in recent years. This technology overcomes the geometrical limits of workpieces produced with traditional subtractive methods and thus makes it possible to manufacture highly complex shapes. Unfortunately, the repeatability of the manufacturing process and the monitoring of quality are not yet reliable enough for mass production. Quality monitoring of AM processes in commercial equipment has been largely based on temperature measurements of the process zone or on high-resolution imaging of the layers. However, both techniques lack information about the physical phenomena taking place within the depth of the material, which limits their reliability in real-life applications. To overcome these restrictions, we propose to combine acoustic emission and reinforcement learning. The former captures information about the subsurface dynamics of the process. The latter is a branch of machine learning that allows the received data to be interpreted in terms of quality. Their combination is an original method for in situ and real-time quality monitoring. Acoustic data were collected during a real process using a commercial AM machine. The process parameters were selected to achieve three levels of quality, in terms of porosity concentration, while manufacturing a stainless steel 316L cuboid. Using our method, we demonstrated that each level of quality produced unique acoustic signatures during the build that were recognized by the classifier. The classification accuracy reached in this work indicates that the proposed method has high potential for in situ and real-time monitoring of AM quality.

Introduction

Additive manufacturing (AM) technology is viewed by many as an enabling technology to usher in the next industrial revolution (Ref 1). In contrast to subtractive technologies, AM is often considered ideal for rapid prototyping of unique workpieces, with very few geometrical limitations in terms of shape (Ref 1, 2). The AM sub-branch based on powder bed technology, known as Powder Bed Fusion Additive Manufacturing (PBFAM), has broad applications in the automotive and machine tool industries (Ref 3), aerospace (Ref 4), medical devices (Ref 5) and even turbines (Ref 6). However, despite all the advances in this technology, transitioning it to mass production remains a major challenge. The main obstacle is the lack of reproducibility of the process and of the quality from one workpiece to the next. Thus, a reliable and cost-effective in situ and real-time quality monitoring technology is in great demand (Ref 7,8,9).

During the last 5 years, the development of AM quality monitoring has focused on three main areas: (a) temperature measurement of the melt pool, either by pyrometers (Ref 10, 11) or by high-speed cameras (Ref 11, 12), (b) image analysis of the surface of each individual layer of the workpiece (Ref 13) and (c) x-ray phase-contrast imaging (XPCI) (Ref 14,15,16) and/or x-ray computed tomography (XCT) of the entire workpiece (Ref 17). Despite this diversity, each of the listed technologies has drawbacks that constrain its applicability in mass production (Ref 7,8,9). Temperature measurement of the melt pool is limited to the melt surface, and no information is available regarding the complex liquid movement and heat distribution throughout the depth. The image processing approach assesses the quality only after an entire layer is produced and can detect only defects at the surface of the layer being built, not defects created within the melt pool, such as pores. Regarding x-ray technologies, XPCI is used for in situ and real-time investigations only under laboratory conditions. It allows investigating the origin and propagation of defects, but the algorithmic processing of the acquired data is not suitable for real-time implementation. XCT is applied in industry in a limited number of cases due to its high cost, and it can only be performed after the build, once the workpiece has been removed from the build plate. Both x-ray methods are costly and time-consuming (Ref 14,15,16,17).

This work presents an original approach for in situ and real-time quality monitoring of the PBFAM process, combining acoustic emission (AE) and reinforcement learning (RL). The former captures information about the subsurface dynamics of the process, as evidenced in Ref 15, 16. The latter is a sub-branch of machine learning (ML). The attraction of AE lies in its reliable detection of numerous physical phenomena with practical, cost-effective hardware. These advantages are exploited in a number of practical applications (Ref 18). AE was, to some extent, successfully used by Ye et al. (Ref 19) to detect several defects, such as various states of balling and overheating, during PBFAM processes. Nonetheless, quality monitoring during a normal AM process remains to be addressed. The combination of AE and ML has been successfully applied in a number of fields, such as tribology (Ref 20, 21), fracture mechanics (Ref 22) and AM/laser welding (Ref 16, 23-25). These examples share similarities with the present work, since the processes involved are complex, highly dynamic and take place in noisy environments. They provided further motivation to apply the same techniques to the AM quality monitoring problem.

Experimental Setup, Material and Datasets

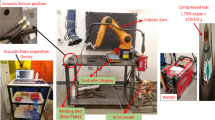

The details on the experimental setup, materials and dataset can be found in Shevchik et al. (Ref 24); only a summary is given here. An industrial Concept M2 PBFAM machine was used to collect the AE dataset and to reproduce industrial conditions. The Concept M2 was equipped with a fiber laser operating in continuous mode with a wavelength of 1071 nm, a spot diameter of 90 μm and a beam quality M² = 1.02. To detect the airborne AE signals generated during the AM process, a fiber Bragg grating (FBG) was installed in the machine as an optoacoustic sensor. More details about FBGs can be found in Kashyap (Ref 26). The workpiece was built from CL20ES stainless steel (1.4404/316L) powder with a particle size distribution ranging from 10 to 45 μm. A cuboid workpiece with dimensions 10 × 10 × 20 mm³ was manufactured. The laser power (P), the hatching distance (h) and the layer thickness (t) were kept constant during the experiment at P = 125 W, h = 0.105 mm and t = 0.03 mm. In contrast, three scanning velocities υ were used, 800, 500 and 300 mm/s, leading to three quality classes (different pore concentrations). The corresponding energy densities (Edensity), quality classes and porosities were (1) 800 mm/s, 50 J/mm³, poor quality, 1.42 ± 0.85%; (2) 500 mm/s, 79 J/mm³, high quality, 0.07 ± 0.02%; and (3) 300 mm/s, 132 J/mm³, medium quality, 0.3 ± 0.18%. The energy density was calculated with Eq 1 taken from Thijs et al. (Ref 27), whereas the pore concentrations were measured on cross sections by visual inspection of light microscope images. A general view of the manufactured piece (after a small piece was removed for the cross sections) and the corresponding quality, in terms of pore concentration inside the material, are given in Fig. 1.
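The three energy densities quoted above are consistent with the common volumetric definition Edensity = P/(υ·h·t), with P in W, υ in mm/s and h, t in mm. Assuming this is the form of Eq 1, the short check below reproduces them from the stated parameters; the function and constant names are illustrative.

```python
# Process parameters stated in the text (units: W, mm, mm).
P_W = 125.0    # laser power P
H_MM = 0.105   # hatching distance h
T_MM = 0.03    # layer thickness t

def energy_density(power_w: float, velocity_mm_s: float,
                   hatch_mm: float = H_MM, layer_mm: float = T_MM) -> float:
    """Volumetric energy density in J/mm^3, assuming Edensity = P / (v * h * t)."""
    return power_w / (velocity_mm_s * hatch_mm * layer_mm)

for v, label in [(800, "poor"), (500, "high"), (300, "medium")]:
    print(f"{v} mm/s ({label} quality): {energy_density(P_W, v):.0f} J/mm^3")
```

Rounded to the nearest integer, this gives 50, 79 and 132 J/mm³, matching the values listed for the three scanning velocities.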

Reprinted by permission from Elsevier License: Elsevier (Ref 24)

Fig. 1 (a) Test workpiece produced with three porosity contents; (b-d) typical light microscope cross-sectional images of the regions produced with (b) 300 mm/s, 132 J/mm³ (medium quality), (c) 500 mm/s, 79 J/mm³ (high quality) and (d) 800 mm/s, 50 J/mm³ (poor quality).

The AE signals were recorded throughout the entire manufacturing process using the FBG. The FBG was mounted inside the chamber, approximately 20 cm from the process zone. To increase its sensitivity, it was placed so that the longitudinal axis of the fiber was perpendicular to the acoustic wave, as shown in Fig. 2(a). The scheme of the FBG read-out system is presented in Fig. 2(b). The FBG sensor has several advantages over piezo sensors. It can be used either clamped on the machine or airborne. It is small (125 μm in total diameter and 1 cm in length), highly sensitive to acoustic signals (0-3 MHz), insensitive to dirt and magnetic fields, and provides sub-nanosecond time resolution (Ref 26), thus fitting the needs of real-life applications in dirty and noisy environments. Dedicated software from Vallen (Vallen GmbH, Germany) recorded the AE signals at an original sampling rate of 10 MHz. The signals were then downsampled to 1 MHz to match the frequency range of the process (0-200 kHz) (Ref 19, 24, 25). The AE signals recorded during the AM process were then classified according to the quality level.
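A minimal sketch of the 10 MHz to 1 MHz downsampling step, assuming a windowed-sinc FIR anti-aliasing filter (201 taps, Hamming window, cutoff at 400 kHz, below the new Nyquist frequency of 500 kHz) followed by keeping every 10th sample. The filter design and the helper names are illustrative assumptions; the anti-aliasing filter actually applied to the recordings is not specified.

```python
import numpy as np

FS_RAW_HZ = 10_000_000   # acquisition rate stated in the text
FS_OUT_HZ = 1_000_000    # rate after downsampling, stated in the text

def lowpass_fir(num_taps: int, cutoff_hz: float, fs_hz: float) -> np.ndarray:
    """Windowed-sinc low-pass FIR (Hamming window), normalised to unit DC gain."""
    n = np.arange(num_taps) - (num_taps - 1) / 2.0
    h = np.sinc(2.0 * cutoff_hz / fs_hz * n) * np.hamming(num_taps)
    return h / h.sum()

def downsample(x: np.ndarray, fs_in: int = FS_RAW_HZ, fs_out: int = FS_OUT_HZ,
               num_taps: int = 201) -> np.ndarray:
    """Anti-alias filter, then keep every q-th sample (q = fs_in // fs_out)."""
    q = fs_in // fs_out
    cutoff = 0.4 * fs_out              # keep a margin below fs_out / 2
    h = lowpass_fir(num_taps, cutoff, fs_in)
    return np.convolve(x, h, mode="same")[::q]
```

A 100 kHz tone inside the process band passes almost unchanged, while a component at a few MHz is strongly attenuated before decimation instead of aliasing into the 0-500 kHz range.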

Reprinted by permission from Elsevier License: Elsevier (Ref 24)

Fig. 2 (a) View of the FBG location inside the AM chamber with the optical feedthrough on the chamber panel (left) and the FBG read-out system (right); (b) scheme of the FBG read-out system.

Data Processing

This work investigates the applicability of reinforcement learning (RL) (Ref 28) to the AM quality monitoring problem. Among machine learning methods, RL is a separate paradigm, inspired by the human cognitive ability to learn from interaction with the surrounding world (Ref 28). The technique assumes that the knowledge (or classes) is only partially structured, or not structured at all, and that newly acquired information is structured efficiently through interaction with the surrounding environment (Ref 28). This interaction is the training process of the algorithm, in which the optimal interaction is the one that wins the maximum numerical reward (e.g., as defined by a cost function) (Ref 28, 29). In this work, we employed a general realization of RL from Silver and Huang (Ref 30) because of its potential for future AM quality monitoring systems. Its attractiveness lies in the fact that AM processes involve complex underlying physical phenomena with a great number of momentary events (heating, melting, solidification, etc.), each of which has a crucial effect on the process dynamics (Ref 8, 15, 16). This complicates the preparation of a detailed training dataset, which requires expensive and time-consuming data labeling (Ref 9, 16, 24). In this context, RL may establish, with minimal supervision, correlations between the acoustic emission signals and the detected momentary events. This brings two significant advantages in real-life applications. First, exploiting the self-learning capabilities of RL in future systems may reduce the cost of preparing training datasets (Ref 30). Second, the same capabilities promise efficient adaptation of already trained RL-based algorithms to new manufacturing conditions or materials.

In the present study, RL was trained in a supervised manner using a dataset collected in previous work (Ref 23, 24). The dataset was labeled and included three quality classes, represented by different porosity concentrations inside a manufactured workpiece, as described in section 2 and in Ref 23, 24. The objective of using this specific dataset was to test the ability of RL to recognize the differences between the AE spectrograms of the predefined classes.

Based on our previous work (Ref 24), all collected signals were divided into separate patterns, each spanning 160 ms. The relative energies of the frequency bands from the wavelet packet transform were extracted for each pattern individually. A typical example of an AE signal with a 160 ms time span and the corresponding wavelet spectrogram is shown in Fig. 3. The wavelet spectrogram is a time–frequency representation of the signal that contains information about the evolution of narrow frequency bands in time. There are three reasons for using wavelet spectrograms. First, the wavelet spectrogram is a sparse representation of the signal that reduces the amount of input data compared to the raw AE signal. Second, it preserves the classification accuracy obtained with the raw signal (Ref 24). Finally, it allows convenient noise reduction by selecting the frequency bands free of noise. These parameters were chosen via an exhaustive search in our previous work (Ref 24), which achieved a successful classification of the AE signals in terms of pore concentration using neural network classifiers. In particular, the 160 ms time span provided the best trade-off between classification accuracy and spatial resolution. The spatial resolution was estimated in terms of scanned distance (mm), processing area (mm²) and processed powder volume (mm³). The processing area was chosen because it is a function of spot diameter and scanning velocity, and the processed powder volume because it is a function of spot diameter, scanning velocity and laser power. The spatial resolutions for the different parameters are given in Table 1. Since the same time span and process parameters as in Ref 24 were used, the present results are directly comparable.
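The feature extraction can be sketched as follows: the 1 MHz signal is cut into 160 ms patterns, each pattern is split into time segments, and each segment yields the relative energies of its wavelet packet nodes, giving a (time × band) map. This assumes an orthonormal Haar wavelet packet, a user-chosen decomposition level and a user-chosen number of segments; the mother wavelet, level and segmentation used in Ref 24 are not given here, so all three, and the helper names, are hypothetical.

```python
import numpy as np

def haar_step(x: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """One orthonormal Haar analysis step: (approximation, detail)."""
    x = x[: len(x) // 2 * 2]                     # drop an odd trailing sample
    a = (x[0::2] + x[1::2]) / np.sqrt(2.0)
    d = (x[0::2] - x[1::2]) / np.sqrt(2.0)
    return a, d

def packet_relative_energies(x: np.ndarray, level: int) -> np.ndarray:
    """Relative energy of the 2**level wavelet packet nodes of x.

    Nodes are returned in natural (Paley) order, not sorted by frequency.
    """
    nodes = [np.asarray(x, dtype=float)]
    for _ in range(level):
        nodes = [part for node in nodes for part in haar_step(node)]
    energies = np.array([np.sum(node ** 2) for node in nodes])
    return energies / energies.sum()

def wavelet_spectrogram(pattern: np.ndarray, level: int, n_segments: int) -> np.ndarray:
    """Time-frequency map: one row of relative band energies per time segment."""
    segments = np.array_split(pattern, n_segments)
    return np.vstack([packet_relative_energies(s, level) for s in segments])

def split_patterns(x: np.ndarray, fs_hz: int = 1_000_000, span_s: float = 0.160) -> np.ndarray:
    """Non-overlapping 160 ms patterns (160,000 samples at 1 MHz); tail dropped."""
    n = int(round(span_s * fs_hz))
    k = len(x) // n
    return x[: k * n].reshape(k, n)
```

For example, 0.48 s of signal at 1 MHz yields three patterns, and `wavelet_spectrogram(patterns[0], level=5, n_segments=10)` returns a 10 × 32 map whose rows each sum to 1.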

Fig. 3 Typical light microscope cross-sectional images (left), the corresponding AE signals with a 160 ms time span (middle) and their wavelet spectrograms (right) for the regions produced with (a) 300 mm/s, 132 J/mm³ (medium quality), (b) 500 mm/s, 79 J/mm³ (high quality) and (c) 800 mm/s, 50 J/mm³ (poor quality)

The extracted wavelet spectrograms were the direct input to the RL algorithm. The complete dataset (training + test) contained 180 spectrograms, equally distributed among the three quality classes. In addition, the number of samples in the training set was gradually reduced to observe the performance of the algorithm. The details on the data processing are given in section 4.

Reinforcement Learning

We employed a general realization of RL; the details can be found in Ref 28 and 29. In our general setup, the interaction of an RL agent with the given environment is a Markovian process characterized by the tuple (S, A, P, R), where S is the state space of the agent; A is the action space, in which each action $a_i$ transfers the agent from state $s$ to $s'$; P is a Markov model that contains the transition probabilities $p(s, a, s')$ between any two states $s$ and $s'$ under the action $a_i$; and R is the space of rewards. An individual reward is assigned to any action $a_i$ taken at state $s_i$. Despite our limited dataset, we decided to employ the model-free approach (Ref 28, 29, 31), in which the Markov model and the actions are not known a priori and are estimated during training. The classification process sets the initial state to $s_0$, and the algorithm reaches the goal $s_g$ through the actions that win the maximum reward. The governing equation for the optimal reward is given by the following optimality criterion (Ref 28, 29):
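In the standard discounted formulation (Ref 28, 29), which is assumed here, the criterion can be written with $T^{\pi}$ denoting the expected cumulative reward collected by policy $\pi$ from the initial state $s_0$:

$$
\pi^{*} = \arg\max_{\pi} T^{\pi}(s_0), \qquad
T^{\pi}(s_0) = \mathbb{E}\left[\sum_{t=0}^{\infty} \lambda^{t}\, R\big(s_t, \pi(s_t)\big)\right] \tag{2}
$$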

where E is the expectation, $\lambda \in [0, 1)$ is the discount factor and $\pi(s_t)$ is a policy that maps states to actions. In Eq 2, all actions and their corresponding rewards T are defined for a specific policy. The search for the optimal policy is an iterative process: each iteration step $i$ computes $T^{\pi_i}$, where the superscript $\pi_i$ denotes the current policy, which determines the so-called Q-value according to Eq 3, defined in the framework of the Q-learning approach applied in this study (Ref 28):
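Assuming the usual policy-evaluation form (Ref 28), consistent with the symbols of Eq 2, the Q-value of the current policy reads as follows; in the model-free setting used here, $p(s, a, s')$ is not known, and Q is instead estimated from sampled transitions:

$$
Q^{\pi_i}(s, a) = R(s, a) + \lambda \sum_{s'} p(s, a, s')\, T^{\pi_i}(s') \tag{3}
$$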

The policy is then updated iteratively as $\pi_{i+1}(s) = \arg\max_{a} Q(s, a)$. The actions in the algorithm follow a specific strategy; we used the ε-greedy strategy (Ref 28), in which an action is selected randomly with a predefined fixed probability $\varepsilon \in [0, 1]$. At each step, a value is drawn at random from (0, 1), and the policy is chosen as follows: $\pi_i(s)$ is a random action from $A(s)$ if $\varepsilon$ is greater than this value; otherwise, $\pi_i(s) = \arg\max_{a} Q(s, a)$.
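The ε-greedy rule and the model-free Q-learning update can be illustrated with a minimal tabular sketch. The environment (a 1D chain of states with a reward received on entering each state), the learning rate `alpha` and all parameter values are hypothetical and chosen only for illustration; they are not the spectrogram-based environment of this study. `lam` plays the role of the discount factor λ.

```python
import random

def epsilon_greedy(q_row: list[float], epsilon: float, rng: random.Random) -> int:
    """Random action if epsilon exceeds a value drawn from [0, 1), else the greedy action."""
    if epsilon > rng.random():
        return rng.randrange(len(q_row))
    return max(range(len(q_row)), key=q_row.__getitem__)

def q_learning(rewards: list[float], start: int, goal: int, episodes: int = 500,
               alpha: float = 0.5, lam: float = 0.9, epsilon: float = 0.1,
               max_steps: int = 200, seed: int = 0) -> list[list[float]]:
    """Tabular Q-learning on a hypothetical 1D chain of states 0..n-1.

    Actions: 0 = step left, 1 = step right (moves are clipped at the ends).
    rewards[s] is received on entering state s; reaching `goal` ends an episode.
    """
    rng = random.Random(seed)
    n = len(rewards)
    q = [[0.0, 0.0] for _ in range(n)]
    for _ in range(episodes):
        s = start
        for _ in range(max_steps):
            a = epsilon_greedy(q[s], epsilon, rng)
            s_next = min(n - 1, max(0, s + (1 if a == 1 else -1)))
            r = rewards[s_next]
            # Model-free update: only the sampled transition is used, no p(s, a, s').
            target = r if s_next == goal else r + lam * max(q[s_next])
            q[s][a] += alpha * (target - q[s][a])
            s = s_next
            if s == goal:
                break
    return q

def greedy_policy(q: list[list[float]]) -> list[int]:
    """pi(s) = argmax_a Q(s, a) for every state."""
    return [max(range(len(row)), key=row.__getitem__) for row in q]
```

On a chain with rewards `[-1, -1, -1, -1, -1, 10]`, start 0 and goal 5, the learned greedy policy is expected to step right in every non-goal state.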

Additionally, we exploited tabu search from Glover and Laguna (Ref 32). In this framework, a near-optimal path is sought by analyzing a restricted subset of the state space, thus limiting exploration and saving computational time for large datasets (Ref 32).
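The tabu idea can be illustrated on a toy 2D cost map. The grid, the 4-neighbour moves, the tabu-list length and the aspiration rule (a tabu move is allowed if it beats the best cost found so far) are illustrative assumptions, not the configuration used in this study.

```python
from collections import deque

def tabu_search(cost: list[list[float]], start: tuple[int, int],
                iterations: int = 100, tabu_size: int = 10) -> tuple[int, int]:
    """Minimise cost[r][c] over a 2D grid with a simple tabu search.

    Each step moves to the best allowed 4-connected neighbour, even if it is
    worse than the current cell; recently visited cells are tabu (forbidden)
    unless they improve on the best cost found so far (aspiration).
    """
    rows, cols = len(cost), len(cost[0])
    current = best = start
    tabu = deque([start], maxlen=tabu_size)
    for _ in range(iterations):
        r, c = current
        neighbours = [(r + dr, c + dc) for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1))
                      if 0 <= r + dr < rows and 0 <= c + dc < cols]
        allowed = [n for n in neighbours
                   if n not in tabu or cost[n[0]][n[1]] < cost[best[0]][best[1]]]
        if not allowed:
            break
        current = min(allowed, key=lambda n: cost[n[0]][n[1]])
        tabu.append(current)
        if cost[current[0]][current[1]] < cost[best[0]][best[1]]:
            best = current
    return best
```

On the 1 × 5 cost map `[[3, 1, 4, 2, 0]]` starting at `(0, 0)`, a purely greedy descent stops at the local minimum `(0, 1)`, whereas this tabu search moves through the worse cells `(0, 2)` and `(0, 3)` and returns `(0, 4)`.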

In this investigation, the multi-class case was resolved using a one-against-all strategy with three agents, one for each class. Here, the environment of each agent was formed by the wavelet spectrograms (Ref 23, 24), which are 2D maps of the time–frequency space of the signals, built from the relative energies of narrow bands localized in time and frequency. In this context, the optimal policies were sought by constructing a cost over this domain.
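The one-against-all decision can be sketched as follows: each of the three agents returns a score for a sample, and the sample is assigned to the class whose agent scores it highest. The scoring function used here (negative squared distance to a class centroid) is a hypothetical stand-in for a trained RL agent, not the method of this study.

```python
from typing import Callable, Dict, Sequence

Scorer = Callable[[Sequence[float]], float]

def one_against_all(scorers: Dict[str, Scorer], x: Sequence[float]) -> str:
    """Assign x to the class whose one-vs-rest agent returns the highest score."""
    return max(scorers, key=lambda label: scorers[label](x))

def centroid_scorer(centroid: Sequence[float]) -> Scorer:
    """Hypothetical stand-in agent: score = minus squared distance to a class centroid."""
    def score(x: Sequence[float]) -> float:
        return -sum((xi - ci) ** 2 for xi, ci in zip(x, centroid))
    return score
```

With one scorer per class (poor, medium, high), adding or retraining a class only touches its own agent, which is the practical appeal of the one-against-all design.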

We also sought to determine the minimum number of elements in the wavelet spectrograms that could be used without degrading the classification accuracy. To do so, we reduced the number of elements in the wavelet spectrograms by excluding the decomposition levels that contained noisy frequency bands. The noise was generated by the moving mechanical parts of the industrial AM machine and was localized in wider frequency bands. The minimum number of frequency bands that provided acceptable results, defined in this work as classification accuracies above 70%, was 24 frequency bands per spectrogram. The tests were run on a PC with an i7 processor and 16 GB of RAM.
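A minimal sketch of the band-reduction step, assuming the spectrogram is stored as a (time segments × frequency bands) array and that the indices of the noisy bands are already known. Renormalizing the remaining relative energies so that each row sums to 1 is an assumption, not a step stated in the text; the function name is hypothetical.

```python
import numpy as np

def drop_noisy_bands(spectrogram: np.ndarray, noisy_bands: list[int]) -> np.ndarray:
    """Remove the noisy band columns and renormalise each row's relative energies."""
    keep = np.setdiff1d(np.arange(spectrogram.shape[1]), noisy_bands)
    reduced = spectrogram[:, keep]
    return reduced / reduced.sum(axis=1, keepdims=True)
```

For instance, dropping 8 of 32 bands leaves the 24 bands per spectrogram that the text reports as the minimum giving accuracies above 70%.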

Results and Discussions

As already mentioned in section 2, three quality classes were predefined. Figure 3 shows typical light microscope cross-sectional images of the three qualities (left), the corresponding AE signals with a 160 ms time span (middle) and their wavelet spectrograms (right). Two observations can be made from this figure. First, the AE signals are distinguishable: although their amplitudes are similar, the signal-to-noise ratio appears to increase with increasing scanning velocity. Second, clear differences can also be seen in the wavelet spectrograms, in particular in decomposition levels 4 to 12. We therefore used the wavelet spectrograms because of their higher robustness compared to the raw AE signals, as explained in section 3.

Each class was characterized by a dataset of 60 wavelet spectrograms, computed according to Shevchik et al. (Ref 24). The signals were split into two completely separate datasets: one for training and one for testing. It is important to emphasize that the test signals were never seen by the RL algorithm during training, thus imitating the arrival of new data in real-life conditions. For each class, the training dataset contained 40 spectrograms, while the remaining 20 spectrograms were used to test the RL algorithm. The spectrograms were selected randomly. Two hundred tests were carried out following a Monte Carlo approach (Ref 33); that is, for each of the two hundred tests, the training and test datasets were re-selected randomly from the originally collected dataset. More details about the Monte Carlo method can be found in Robert and Casella (Ref 33). This strategy varied the input conditions of the algorithm and allowed its performance to be investigated with different training/test combinations, yielding a reliable statistical assessment over the collection of AE signals. The accuracy of each test was computed as the number of true positives divided by the number of samples in the test dataset. The total accuracy was computed as the average value:
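With $\mathrm{Acc}_k$ denoting the accuracy of the $k$-th test, this average takes the form:

$$
\mathrm{Acc} = \frac{1}{N} \sum_{k=1}^{N} \mathrm{Acc}_k \tag{4}
$$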

where N = 200 is the total number of tests. The classification errors were computed, for each class, as the number of test samples assigned to another class divided by the number of test samples of that class. Increasing the number of tests further did not change the accuracy rates.
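The Monte Carlo evaluation can be sketched as repeated random 40/20 per-class splits, with the accuracy of each test averaged over N tests. The `classify_factory` interface (a function that receives the training data and returns a classifier) is a hypothetical stand-in; in the study, each split trains the RL agents instead.

```python
import random
from statistics import mean

def monte_carlo_accuracy(data, classify_factory, n_tests=200, n_train=40, seed=0):
    """Average accuracy over n_tests random per-class train/test splits.

    data: dict mapping class label -> list of samples (60 per class in the text).
    classify_factory: builds a classifier from {label: training samples}
    (hypothetical interface).
    """
    rng = random.Random(seed)
    accuracies = []
    for _ in range(n_tests):
        train, test = {}, []
        for label, samples in data.items():
            shuffled = samples[:]            # copy, so the original order is untouched
            rng.shuffle(shuffled)
            train[label] = shuffled[:n_train]
            test += [(x, label) for x in shuffled[n_train:]]
        classify = classify_factory(train)
        correct = sum(classify(x) == label for x, label in test)
        accuracies.append(correct / len(test))   # Acc_k of this test
    return mean(accuracies)                       # average over the N tests
```

With 60 samples for each of the three classes, every split yields 120 training and 60 test samples, matching the split described above.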

The classification results are presented in Table 2, where the classification accuracies range from 74 to 82% (diagonal cells, in bold). These results demonstrate the feasibility of our approach for quality monitoring of AM processes. It is noteworthy that these results were obtained with hardware that was not optimized for the process parameters, as discussed in Shevchik et al. (Ref 24). The main potential improvements are twofold. First, the setup and the spectral characteristics of the AE/FBG sensor can be improved. Adjusting the position, orientation and/or design of the FBG holder could enhance the coupling of the shockwave into the sensor. In parallel, by modifying the FBG refractive index profile, either its dynamic range or its sensitivity can be maximized. Second, the number of samples in the dataset could be increased, which is expected to improve the classification accuracy.

Analyzing the results in Table 2 in more detail, the highest accuracy is achieved for the poor quality (82%), followed by the medium quality (79%) and the high quality (74%). In addition, the structure of the classification errors can be evaluated from the off-diagonal cells of Table 2. Statistically, this error structure reflects the overlaps between the features of the predefined quality classes. For example, poor quality is classified correctly with 82% confidence. The highest classification error for this class (11%) is its overlap with the high-quality class, while the overlap with the medium quality leads to a 7% misclassification. Indeed, the test results in Table 2 indicate that, for the poor and medium qualities, the misclassification errors are higher between classes with smaller differences in laser scanning velocity (and vice versa). In other words, the misclassification errors increase as the difference in scanning velocity between the classes decreases. Accordingly, for the high quality, which has the intermediate scanning velocity (500 mm/s), the misclassification errors are split approximately equally between the medium quality (12%) and the poor quality (14%). At the same time, the medium and poor qualities showed fewer overlap errors with each other, since they had the largest difference in scanning velocity. The physical explanation may be that a slight change of the laser scanning velocity still provokes several similar physical events, whose acoustic patterns produce the overlaps between the predefined quality classes (see Table 2). Further investigation of AE classification will focus on minimizing the error rates and providing higher classification confidence. In addition, the FBG setup optimizations mentioned earlier should be considered. All of these are planned as a continuation of the present work.
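The error structure discussed above can be read from a row-normalized confusion matrix, in which each row corresponds to a true class, the diagonal holds the per-class accuracy and the off-diagonal cells hold the misclassification percentages. A minimal sketch; the function name and the labels in the usage note are illustrative:

```python
def confusion_matrix(true_labels, predicted_labels, classes):
    """Row-normalised confusion matrix in percent: rows = true class, columns = predicted."""
    counts = {t: {p: 0 for p in classes} for t in classes}
    for t, p in zip(true_labels, predicted_labels):
        counts[t][p] += 1
    matrix = {}
    for t in classes:
        total = sum(counts[t].values())
        # Empty rows (no test samples of that class) are reported as 0%.
        matrix[t] = {p: 100.0 * counts[t][p] / total if total else 0.0 for p in classes}
    return matrix
```

For example, if four "poor" test samples are predicted as poor, poor, poor and high, the "poor" row contains 75% on the diagonal and 25% in the "high" column.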

Conclusions

This work is a feasibility study for in situ and real-time monitoring of AM processes using a fiber Bragg grating (FBG) as an acoustic sensor and reinforcement learning (RL) for data processing. The data were collected during a real manufacturing process in a Concept M2 industrial machine equipped with a fiber laser (wavelength = 1071 nm, spot diameter = 90 μm, M² = 1.02) operating in continuous mode. The laser power was set to P = 125 W, the hatching distance to h = 0.105 mm and the layer thickness to t = 0.03 mm. Three different scanning velocities were used to produce a workpiece with three quality classes (poor, medium and high quality). After training of the RL-based algorithm, the quality was classified with accuracies between 74 and 82%. This initial result is promising and is likely to improve when the hardware and analysis algorithms are optimized. The presented approach appears to be a good first step toward useful in situ and real-time quality monitoring in additive manufacturing.

References

Y.W. Zhai, D.A. Lados, and J.L. Lagoy, Additive Manufacturing: Making Imagination the Major Limitation, JOM, 2014, 66(5), p 808–816. https://doi.org/10.1007/s11837-014-0886-2

N. Guo and M. Leu, Additive Manufacturing: Technology, Applications and Research Needs, Front. Mech. Eng., 2013, 8(3), p 215–243. https://doi.org/10.1007/s11465-013-0248-8

W.W. Wits, S.J. Weitkamp, and J. van Es, Metal Additive Manufacturing of a High-Pressure Micro-Pump, Proc. CIRP, 2013, 7, p 252–257. https://doi.org/10.1016/j.procir.2013.05.043

D.A. Türk, R. Kussmaul, M. Zogg, C. Klahn, A.B. Spierings, H. Könen, P. Ermanni, and M. Meboldt, Additive Manufacturing with Composites for Integrated Aircraft Structures, SAMPE Conference Proceedings, Long Beach, CA, 2016, https://doi.org/10.3929/ethz-a-010691526

M. Salmi, J. Tuomi, K.S. Paloheimo, R. Björkstrand, M. Paloheimo, J. Salo, R. Kontio, K. Mesimäki, and A.A. Mäkitie, Patient-Specific Reconstruction with 3D Modeling and DMLS Additive Manufacturing, Rapid Prototyp. J., 2012, 18(3), p 209–214. https://doi.org/10.1108/13552541211218126 (Special issue: Medical advances in Additive Manufacturing)

K. Kunze, T. Etter, J. Grässlin, and V. Shklover, Texture, Anisotropy in Microstructure and Mechanical Properties of IN738LC Alloy Processed by Selective Laser Melting (SLM), Mater. Sci. Eng. A, 2015, 620, p 213–222. https://doi.org/10.1016/j.msea.2014.10.003

G. Tapia and A. Elwany, A Review on Process Monitoring and Control in Metal-Based Additive Manufacturing, J. Manuf. Sci. Eng., 2014, 136(6), p 060801. https://doi.org/10.1115/1.4028540

K.S. Everton, M. Hirsch, P. Stavroulakis, R.K. Leach, and A.T. Clare, Review of In-situ Process Monitoring and In-situ Metrology for Metal Additive Manufacturing, Mater. Des., 2016, 95, p 431–445. https://doi.org/10.1016/j.matdes.2016.01.099

M. Grasso and B.M. Colosimo, Process Defects and In Situ Monitoring Methods in Metal Powder Bed Fusion: A Review, Meas. Sci. Technol., 2017, 28, p 044005. https://doi.org/10.1088/1361-6501/aa5c4f

T. Craeghs, S. Clijsters, J.P. Kruth, F. Bechmann, and M.C. Ebert, Detection of Process Failures in Layerwise Laser Melting with Optical Process Monitoring, Phys. Proc., 2012, 39, p 753–759. https://doi.org/10.1016/j.phpro.2012.10.097

S. Clijsters, T. Craeghs, S. Buls, K. Kempen, and J.P. Kruth, In Situ Quality Control of the Selective Laser Melting Process Using a High-Speed, Real-Time Melt Pool Monitoring System, Int. J. Adv. Manuf. Technol., 2014, 75, p 1089–1101. https://doi.org/10.1007/s00170-014-6214-8

S. Berumen, F. Bechmann, S. Lindner, J.P. Kruth, and T. Craeghs, Quality Control of Laser- and Powder Bed-Based Additive Manufacturing (AM) Technologies, Phys. Proc., 2010, 5, p 617–622. https://doi.org/10.1016/j.phpro.2010.08.089

L. Scime and J. Beuth, Anomaly Detection and Classification in a Laser Powder Bed Additive Manufacturing Process Using a Trained Computer Vision Algorithm, Addit. Manuf., 2018, 19, p 114–126. https://doi.org/10.1016/j.addma.2017.11.009

C. Zhao, K. Fezzaa, R.W. Cunningham, H. Wen, F. De Carlo, L. Chen, A.D. Rollett, and T. Sun, Real-Time Monitoring of Laser Powder Bed Fusion Process Using High-Speed x-ray Imaging and Diffraction, Sci. Rep., 2017, 7, p 3602. https://doi.org/10.1038/s41598-017-03761-2

T.L. Quang, S.A. Shevchik, B. Meylan, F. Vakili-Farahani, M.P. Olbinado, A. Rack, and K. Wasmer, Why Is In Situ Quality Control of Laser Keyhole Welding A Real Challenge? Proc. CIRP, 2018, 74C, p 649–653. https://doi.org/10.1016/j.procir.2018.08.055

K. Wasmer, T.L. Quang, B. Meylan, F. Vakili-Farahani, M.P. Olbinado, A. Rack, and S.A. Shevchik, Laser Processing Quality Monitoring by Combining Acoustic Emission and Machine Learning: High-Speed x-ray Imaging to Close the Gap, Proc. CIRP, 2018, 74C, p 654–658. https://doi.org/10.1016/j.procir.2018.08.054

A. Thompson, I. Maskery, and R.K. Leach, X-ray Computed Tomography for Additive Manufacturing: A Review, Meas. Sci. Technol., 2016, 27(7), p 072001. https://doi.org/10.1088/0957-0233/27/7/072001

C. Grosse and M. Ohtsu, Ed., Acoustic Emission Testing Basics for Research - Applications in Civil Engineering, 1st ed., Springer, Berlin, 2008

D. Ye, G.S. Hong, Y. Zhang, K. Zhu, J. Ying, and H. Fuh, Defect Detection in Selective Laser Melting Technology by Acoustic Signals with Deep Belief Networks, Int. J. Adv. Manuf. Technol., 2018, 96, p 2791–2801. https://doi.org/10.1007/s00170-018-1728-0

F. Saeidi, S.A. Shevchik, and K. Wasmer, Automatic Detection of Scuffing Using Acoustic Emission, Tribol. Int., 2016, 94, p 112–117. https://doi.org/10.1016/j.triboint.2015.08.021

S.A. Shevchik, F. Saeidi, B. Meylan, and K. Wasmer, Prediction of Failure in Lubricated Surfaces Using Acoustic Time-Frequency Features and Random Forest Algorithm, IEEE Trans. Ind. Informat., 2017, 13, p 1541–1553. https://doi.org/10.1109/TII.2016.2635082

S.A. Shevchik, B. Meylan, A. Mosaddeghi, and K. Wasmer, Acoustic Characterization of Solid Materials Pre-Weakening Using Electric Discharge, IEEE Access, 2018, 6(1), p 40313–40324. https://doi.org/10.1109/access.2018.2853666

K. Wasmer, C. Kenel, C. Leinenbach, and S.A. Shevchik, In Situ and Real-Time Monitoring of Powder-Bed AM by Combining Acoustic Emission and Artificial Intelligence, Industrializing Additive Manufacturing - Proceedings of Additive Manufacturing in Products and Applications - AMPA2017, M. Meboldt and C. Klahn, Ed., Springer, Cham, 2017, https://doi.org/10.1007/978-3-319-66866-6_20

S.A. Shevchik, C. Kenel, C. Leinenbach, and K. Wasmer, Acoustic Emission for In Situ Quality Monitoring in Additive Manufacturing Using Spectral Convolutional Neural Networks, Addit. Manuf., 2018, 21, p 598–604. https://doi.org/10.1016/j.addma.2017.11.012

F. Vakili-Farahani, J. Lungershausen, and K. Wasmer, Wavelet Analysis of Light Emission Signals in Laser Beam Welding, J. Laser Appl., 2017, 29(2), p 022424. https://doi.org/10.2351/1.4983507

R. Kashyap, Fiber Bragg Grating, 2nd ed., Elsevier Inc., London, UK, 2010

L. Thijs, F. Verhaeghe, T. Craeghs, J. Van Humbeeck, and J.P. Kruth, A Study of the Microstructural Evolution During Selective Laser Melting of Ti–6Al–4V, Acta Mater., 2010, 58(9), p 3303–3312. https://doi.org/10.1016/j.actamat.2010.02.004

R.S. Sutton and A.G. Barto, Reinforcement Learning: An Introduction, 2nd ed., The MIT Press, Cambridge, London, England, 2018

L.P. Kaelbling, M.L. Littman, and A.W. Moore, Reinforcement Learning: A Survey, J. Artif. Intell. Res., 1996, 4, p 237–285

D. Silver, A. Huang, et al., Mastering the Game of Go with Deep Neural Networks and Tree Search, Nature, 2016, 529, p 484–489. https://doi.org/10.1038/nature16961

Q.J.M. Huys, A. Cruickshank, and P. Seriès, Reward-Based Learning, Model-Based and Model-Free, Encyclopedia of Computational Neuroscience, D. Jaeger and R. Jung, Ed., Springer, New York, 2014, https://doi.org/10.1007/978-1-4614-7320-6_674-1

F. Glover and M. Laguna, Tabu Search, Kluwer Academic Publishers, 1997

C. Robert and G. Casella, Monte Carlo Statistical Methods, 2nd ed., Springer Science, New York, 2004, https://doi.org/10.1007/978-1-4757-4145-2

Acknowledgments

The authors would like to thank Dr. Christoph Kenel for manufacturing the workpiece and providing the optical images, and Dr. Christian Leinenbach for his assistance in the early stage of this work.

Cite this article

Wasmer, K., Le-Quang, T., Meylan, B. et al. In Situ Quality Monitoring in AM Using Acoustic Emission: A Reinforcement Learning Approach. J. of Materi Eng and Perform 28, 666–672 (2019). https://doi.org/10.1007/s11665-018-3690-2

DOI: https://doi.org/10.1007/s11665-018-3690-2