Abstract

In this paper, we introduce several inertial-like algorithms for solving equilibrium problems (EP) in real Hilbert spaces. The algorithms are constructed using the resolvent of the EP associated bifunction and combines the inertial and the Mann-type technique. Under mild and standard conditions imposed on the cost bifunction and control parameters strong convergence of the algorithms is established. We present several numerical examples to illustrate the behavior of our schemes and emphasize their convergence advantages compared with some related methods.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Let H be a real Hilbert space and C be a nonempty, closed and convex subset of H. Given a bifunction \(f:C\times C\rightarrow \mathfrak {R}\), the equilibrium problem with respect to f and C, in the sense of Blum and Oettli [7, 31], is formulated as follows:

The solution set of problem (EP) is denoted by EP(f, C). This problem is also known as the Ky Fan inequality [10] because of his early contributions to this field. Mathematically, problem (EP) unifies various fields of optimization problems, variational inequalities, fixed point problems, Nash equilibrium problem and many others, see e.g., [7, 11, 18, 19, 22]. Due to the applicability of EPs, during the last 20 years, problem (EP) has received a great interest by many authors who study the existence of solutions as well as developing iterative schemes for solving it, see for example [4, 12, 16, 17, 23, 26, 28, 30, 32, 35, 38].

One of the most popular method for solving problem (EP) is the proximal point algorithm (PPA). The algorithm was originally introduced by Martinet [25] for solving monotone variational inequalities, and later extended by Rockafellar [33] to monotone operators. Other researchers proposed generalizations and extensions to the PPA. For example, Moudafi [26] proposed a PPA-type method for solving EPs with monotone bifunctions and in [21], non-monotone bifunctions are considered.

Let us recall the proximal point algorithm for solving problem (EP). Choose a starting point \(x_0\in H\). Given the current iterate \(x_n\), find the next iterate \(x_{n+1}\) by solving the subproblem

By introducing the resolvent of the bifunction f (see, [9, 26]), defined by

the PPA can be written in a compact form of \(x_{n+1}=T_\lambda ^f(x_n)\), where \(\lambda >0\). It was known that under some suitable conditions, the sequence \(\left\{ x_n\right\} \) generated by the PPA weak converges to some solution of problem (EP) provided by the monotonicity of f. In the case when f is strongly monotone, then the \(\left\{ x_n\right\} \) converges strongly to the unique solution of problem (EP), see, e.g., [8, Theorem 12]. Recently, Iusem and Sosa [20] have studied and analyzed further a proximal point method for problem (EP) in a Hilbert space which improved upon previously known convergence results.

In the light of Antipin’s research [1,2,3, 12], Moudafi [27] introduced the following so-called inertial proximal point algorithm (IPPA): given \(x_{n-1}\), \(x_n\) and two parameters \(\alpha _n\in [0,1)\) and \(r_n>0\), find \(x_{n+1}\in C\) such that

i.e., \(x_{n+1}=T_{r_n}^f(y_n)\) with \(y_n=x_n+\alpha _n(x_n-x_{n-1})\). Clearly when \(\alpha _n=0\) then the IPPA reduces to the original PPA in [26]. Moudafi [27] proved that the sequence \(\left\{ x_n\right\} \) generated by the IPPA converges weakly to some solution of problem (EP) under the assumption

Very recently, in [14] the author has reconsidered the IPPA in incorporating with the Krasnoselski–Mann iteration and proposed a weakly convergent algorithm, namely the inertial-like proximal algorithm, for solving problem (EP). The convergence of the inertial-like algorithm in [14] is established without assuming the condition (*). The numerical results in [14] also suggest the faster convergence of the IPPA over others.

It can be seen that the inertial-like algorithms share a common feature and is that the next iterate \(x_{n+1}\) is constructed based on the previous two iterations \(x_{n-1}\) and \(x_n\). This is believed to cause an “inertial effect” which can improve the convergence rate of the original algorithm. Recently, Chbani and Riahi [8] introduced another kind of inertial term which is a convex combination of the two previous iterates, i.e., \(y_n=x_n-\alpha _n(x_n-x_{n-1})=(1-\alpha _n)x_n+\alpha _n x_{n-1}\). By combining the proximal point method, their inertial technique and the viscosity method, Chbani and Riahi have introduced an inertial algorithm, namely Algorithm (RIPAFAN). Under suitable conditions imposed on the control parameters and associated cost bifunction, the RIPAFAN converges either weakly or strongly. Some other inertial-like algorithms for solving problem (EP) can be found, for example, in [13, 15] and the many references therein.

Motivated and inspired by the aforementioned results above, in this paper we study and propose some inertial-like proximal point algorithms (IPPA) for solving EPs in areal Hilbert spaces with strong convergence property. Firstly, we consider a class of strongly monotone bifunctions and establish the rate of convergence (at least linearly) of the first IPPA. This algorithm is requires the knowledge of the modulus of strong monotonicity of the cost bifunction. Since this constant might be unknown or difficult to approximate in practice, we also introduce a second variant named the modified IPPA and does not require the knowledge of this constant. For this algorithm we also provide convergence theorem and rate of convergence. Last we introduce a so-called Mann-like IPPA for solving a wider class of EPs with monotone bifunctions and compared with the Krasnoselski-Mann IPPA in [14], we provide strong convergent. Several numerical results are reported and compared with related methods to illustrate the convergence and advantages of our proposed algorithms.

The rest of this paper is organized as follows: in Sect. 2, we recall some definitions and preliminary results for further use. Sects. 3 presents the algorithms for a class of strongly monotone bifunctions while Sect. 4 deals with the algorithm for monotone bifunctions. In Sect. 5, several numerical results are reported to illustrate the computational performance of the new algorithms over some existing algorithms.

2 Preliminaries

Let H be a real Hilbert space with the inner product \(\left\langle \cdot ,\cdot \right\rangle \) and the induced norm \(||\cdot ||\). Let C be a nonempty, closed and convex subset of H and \(f: C\times C\rightarrow \mathfrak {R}\) be a bifunction. Throughout this paper, we consider the following standard assumptions imposed on the bifunction f:

-

(A1)

\(f(x,x)=0\) for all \(x\in C\);

-

(A2a)

f is strongly monotone on C, i.e., there exists a constant \(\gamma >0\) such that

$$\begin{aligned} f(x,y)+f(y,x)\le -\gamma ||x-y||^2,\quad \forall x,y\in C; \end{aligned}$$ -

(A2b)

f is monotone on C, i.e.,

$$\begin{aligned} f(x,y)+f(y,x)\le 0,\quad \forall x,y\in C; \end{aligned}$$ -

(A3)

f(., y) is upper-hemicontinuous for each y, i.e., for all \(x,y,z\in C\),

$$\begin{aligned} \lim _{t\rightarrow 0}f(tz+(1-t)x,y)\le f(x,y); \end{aligned}$$ -

(A4)

f(x, .) is convex and lower semi-continuous for each \(x\in C\);

-

(A5)

EP(f, C) is nonempty.

It is obvious that the hypothesis (A2a) implies the hypothesis (A2b). Under the hypothesis (A1), (A2b), (A3) and (A4) then the resolvent \(T_\lambda ^f\) of the cost bifunction f is single-valued and \(EP(f,C)=Fix(T_\lambda ^f)\) (see, for example [9]) where \(Fix(T_\lambda ^f)\) is denoted by the set of fixed points of \(T_\lambda ^f\). Moreover, the solution set EP(f, C) of problem (EP) is closed and convex.

In any Hilbert space, we have the following result.

Lemma 2.1

[6, Corollary 2.14] For all \(x,y\in H\) and \(\alpha \in \mathfrak {R}\), the following equality holds,

The following technical lemmas are useful for our algorithms analysis in Sect. 4.

Lemma 2.2

[39] Let \(\left\{ a_n\right\} \) be a sequence of nonnegative real numbers. Suppose that

where the sequences \(\{\gamma _n\}\) in (0, 1) and \(\{\delta _n\}\) in \(\mathfrak {R}\) satisfy the conditions: \(\lim _{n\rightarrow \infty }\gamma _n=0\), \(\sum _{n=1}^\infty \gamma _n=\infty \) and \(\lim \sup _{n\rightarrow \infty }\delta _n\le 0\). Then \(\lim _{n\rightarrow \infty }a_n=0\).

Lemma 2.3

[24, Remark 4.4] Let \(\left\{ \epsilon _n\right\} \) be a sequence of non-negative real numbers. Suppose that, for any integer m, there exists an integer p such that \(p\ge m\) and \(\epsilon _p\le \epsilon _{p+1}\). Let \(n_0\) be an integer such that \(\epsilon _{n_0}\le \epsilon _{n_0+1}\) and define

for all \(n\ge n_0\). Then \(0\le \epsilon _n \le \epsilon _{\tau (n)+1}\) for all \(n\ge n_0\). Furthermore, the sequence \(\left\{ \tau (n)\right\} _{n\ge n_0}\) is non-decreasing and tends to \(+\infty \) as \(n\rightarrow \infty \).

3 Inertial proximal point algorithms for strongly monotone bifunctions

In this section, we present two inertial proximal point algorithms for a special class of strongly monotone bifunctions. The first one, namely IPPA, is presented when the modulus of strong monotonicity of the cost bifunction is known. In this case, the rate of convergence (at least linearly) of the algorithm is established under some mild conditions on the control parameters. Afterwards we introduce a modified inertial proximal point algorithm (MIPPA) where this the modulus is not known in advance and convergence rate is also established. Throughout this section, under the hypotheses (A1), (A2a), (A3)–(A4), problem (EP) has a unique solution, see, e.g., [29, Proposition 1]. This unique solution is denoted by \(x^\dagger \).

3.1 IPPA with the prior knowledge of the modulus of strong pseudomonotonicity

The analysis of the algorithm is next.

Theorem 3.1

Under the hypotheses (A1), (A2a), (A3)–(A4), the sequence \(\left\{ x_n\right\} \) generated by Algorithm 3.1 converges strongly (at least linearly) to the unique solution \(x^\dagger \) of problem (EP). Moreover, there exists \(M>0\) such that

where \(\gamma \) is the modulus of strong monotonicity of bifunction f.

Proof

It follows from the definitions of \(x_{n+1}\) and \(T_\lambda ^f\) that

which, with \(y=x^\dagger \in C\), leads to

Since \(x^\dagger \) is the solution of problem (EP) and \(x_{n+1}\in C\), \(f(x^\dagger ,x_{n+1})\ge 0\). Thus \(f(x_{n+1},x^\dagger )\le -\gamma ||x_{n+1}-x^\dagger ||^2\) because f is strongly monotone. This together with relation (1) implies that \( -\gamma \lambda ||x_{n+1}-x^\dagger ||^2+\left\langle x_{n+1}-w_n,x^\dagger -x_{n+1}\right\rangle \ge 0 \) or

Applying the equality \(2\left\langle a,b\right\rangle =||a+b||^2-||a||^2-||b||^2\) for \(a=x_{n+1}-w_n,~b=x^\dagger -x_{n+1}\) to relation (2), we obtain

Thus

Moreover, it follows from the definition of \(w_n\) and Lemma 2.1 that

and

Combining relations (3)–(5), we obtain

Thus

or

It is obvious that

Since \(0\le \theta \le \frac{1}{3+4\lambda \gamma }\), we obtain \(0\le \theta (3+4\lambda \gamma )\le 1\). Thus \(2\theta (1+2\lambda \gamma )\le 1-\theta \), which implies that

Combining relations (7)–(9), we obtain

or

where

It follows from relation (11), by the induction, that

We have

Thus, from the definition of \(a_{n+1}\) [see (12) with \(n=n+1\)], we obtain

where the last inequality follows from the facts that \(0\le \theta \le \frac{1}{3+4\lambda \gamma }< \frac{1}{3}\) and \(\lambda \gamma >0\). This together with (13) implies that

where \(M=3a_1\) and

Finally, it follows from relation (11) that the sequence \(\left\{ a_n\right\} \) converges Q-linearly. This together with the definition of \(a_n\) follows the R-linear convergence of \(\left\{ ||x_n-x_{n-1}||^2\right\} \). Hence, we obtain immediately that the sequence \(\left\{ x_n\right\} \) converges R-linearly. Theorem 3.1 is proved. \(\square \)

Remark 3.1

We can introduce the following relaxed version of Algorithm 3.1 where the next iterate \(x_{n+1}\) might be more computable.

An example for the sequence \(\left\{ \varDelta _n\right\} \) satisfying condition (H2) is \(\varDelta _n=\frac{1}{(1+2\lambda \gamma )^{pn}}\), where \(p\ge 1\). In the case when \(\varDelta _n=0\) (and thus \(\delta _n=0\)), the aforementioned relaxed algorithm becomes Algorithm 3.1. This relaxed version allows more flexibility in numerical computation.

In this case, the conclusion of Theorem 3.1 is still true. Indeed, by arguing similarly to relation (10), we also obtain

which, from the definition of \(\delta _n\) in (H2), follows that

where \( {\bar{a}}_n=||x_n-x^\dagger ||^2-\frac{\theta }{1+2\gamma \lambda }||x_{n-1}-x^\dagger ||^2+\frac{1-\theta }{1+2\gamma \lambda }||x_n-x_{n-1}||^2+2\lambda \varDelta _n. \) Thus, we obtain by the induction that

As in relation (14), we get

Thus, from relation (17), one obtains

where \({\bar{a}}_n=||x_1-x^\dagger ||^2-\frac{\theta }{1+2\gamma \lambda }||x_{0}-x^\dagger ||^2+\frac{1-\theta }{1+2\gamma \lambda }||x_1-x_{0}||^2+2\lambda \varDelta _1.\)

3.2 IPPA without the prior knowledge of the modulus of strong pseudomonotonicity

In this section we present an IPPA without the prior knowledge of the modulus of strong pseudomonotonicity, called the MIPPA).

In the case, \(\varDelta _n=0\) (and thus \(\delta _n=0\)) then \(x_{n+1}\) can be shortly rewritten as \(x_{n+1}=T_\lambda ^f(w_n)\). An example for the sequences \(\left\{ \lambda _n\right\} \) and \(\left\{ \varDelta _n\right\} \) satisfied conditions (H3)–(H5) is \(\lambda _n=\frac{1}{n^q}\) and \(\varDelta _n=\frac{1}{a^{pn}}\), where \(0<q\le 1\) and \(p\ge 1\). We have the following result.

Theorem 3.2

Under the hypotheses (A1), (A2a), (A3)–(A4), the sequence \(\left\{ x_n\right\} \) generated by Algorithm 3.2 converges strongly to the unique solution \(x^\dagger \) of problem (EP). Furthermore, there exist numbers \(n_0\ge 1\) and \(M>0\) such that

Proof

Since \(\lambda _n\rightarrow 0\), \(a>1\) and \(0\le 3\theta <1\), there exists a number \(n_0\ge 1\) such that

and \(3\theta +4\gamma \theta \lambda _{n-1}<1\). Thus, \(2\theta (1+2\gamma \lambda _{n-1}) < 1-\theta \) or

Repeating the proof of relation (6), we obtain

It follows from relation (19) and the non-increasing property of \(\left\{ \lambda _n\right\} \) that

Combining this inequality with (21), and noting the fact \(\theta \ge \frac{\theta }{1+2\gamma \lambda _{n-1}}\) and relation (20), we come to the following estimate,

or

where \(\widetilde{a}_n=||x_n-x^\dagger ||^2-\frac{\theta }{1+2\gamma \lambda _{n-1}}||x_{n-1}-x^\dagger ||^2+\frac{1-\theta }{1+2\gamma \lambda _{n-1}}||x_n-x_{n-1}||^2+2\lambda _n\varDelta _n\). Thus, by the induction, we obtain for all \(n\ge n_0\) that

As mentioned above, we also see that

Thus

where \(M=3\widetilde{a}_{n_0}\). Hence, since \(\sum _{n=1}^\infty \lambda _n=+\infty \), we obtain that \(||x_{n+1}-x^\dagger ||^2\rightarrow 0\) or \(\left\{ x_n\right\} \) converges strongly to \(x^\dagger \) which solves uniquely problem (EP). \(\square \)

Remark 3.2

Although the formulation of Algorithm 3.2 does not require the prior knowledge of the modulus \(\gamma \), from the proof of Theorem 3.2 we see that the convergence rate depends strictly on this constant.

Remark 3.3

In order to prove the strong convergence of Algorithm 3.2 (also for the algorithm proposed in Remark 3.1), we only need to take a sequence \(\left\{ \delta _n\right\} \subset [0,+\infty )\) such that \(\sum _{n=1}^\infty \delta _n<\infty \). However, in view of conditions (H2) and (H5), we use a sequence \(\left\{ \delta _n\right\} \) having a special structure. This is the aim in order to obtain estimate (18) of the convergence rate of the algorithm. The special structure of \(\left\{ \delta _n\right\} \) is technically used in estimate (22).

4 Mann-like IPPA for monotone bifunctions

In this section, we introduce a third inertial-like proximal point algorithm with strong convergence property for solving EPs with monotone bifunctions.

Remark that the parameter \(\gamma _n\) with the properties in (H6) can be considered as a parameter of Tikhonov, see, in [5, Theorem 7.4] which plays an important role in establishing the strong convergence of the sequence \(\left\{ x_n\right\} \) to the minimum-norm solution of problem (EP). The convergence analysis of the algorithm is presented next.

Theorem 4.1

Under the hypotheses (A1), (A2b), (A3)–(A5), the sequence \(\left\{ x_n\right\} \) generated by Algorithm 4.3 converges strongly to the minimum-norm solution \(x^\dagger \) of problem (EP).

Proof

We divide the proof into the following four steps.

Claim 1 The following estimate holds for all \(n\ge 0\)and \(x^*\in EP(f,C)\),

Indeed, by arguing similarly to relation (3) for the monotone bifunction f, we obtain

Hence

From the definition of \(x_{n+1}\) and a straightforward computation, the iterate \(x_{n+1}\) can be rewritten as

Thus, applying the inequality \(||x+y||^2\le ||x||^2+2 \left\langle y,x+y\right\rangle \) and relation (25), we get

in which the second inequality follows from the convexity of \(||.||^2\) and the last inequality comes from relation (25) and the fact \((1-\gamma _n)^2\le 1-\gamma _n\). Thus, Claim 1 is proved.

Claim 2 The sequences \(\left\{ x_n\right\} \), \(\left\{ w_n\right\} \)are bounded.

Indeed, since \(\left\{ \beta _n \right\} \subset [a,b] \subset (0,1)\) and \(\gamma _n \rightarrow 0\), there exists \(n_0 \ge 1\) such that \(1-\beta _n-\gamma _n \in (0,1)\) for all \(n \ge n_0\). Without loss of generality, throughout the proof of Theorem 3.1, we can assume that \(1-\beta _n-\gamma _n \in (0,1)\) for all n. From the definitions of \(x_{n+1}\), \(w_n\) and relation (25), we have

It follows from conditions (H6) and (23) that \((1-\gamma _n)\frac{\theta _n}{\gamma _n}||x_n-x_{n-1}||\rightarrow 0\) as \(n\rightarrow \infty \). Thus, this sequence is bounded. Set \(K{=}||x^*||+\sup \left\{ (1{-}\gamma _n)\frac{\theta _n}{\gamma _n}||x_n{-}x_{n-1}||:n\ge 1\right\} \). By relation (26), we obtain

which, by the induction, implies that \(||x_{n+1}-x^*||\le \max \left\{ ||x_0-x^*||,K\right\} \). Thus, the sequence \(\left\{ x_n\right\} \) is bounded. The boundedness of \(\left\{ w_n\right\} \) follows from its definition. This completes the proof of Claim 2.

Claim 3 We have the following estimate for all \(n\ge 0\)and \(x^*\in EP(f,C)\),

Indeed, it follows from the definition of \(w_n\) that

Thus, from the definition of \(x_{n+1}\), the convexity of \(||\cdot ||^2\) and relation (24), we obtain from the definitions of \(x_{n+1}\) and \(w_n\), we have

which completes the proof of Claim 3.

Claim 4 The sequence \(\left\{ x_n\right\} \)converges strongly to the minimum-norm solution \(x^\dagger \)of problem (EP).

Indeed, applying Claim 3 for \(x^*=x^\dagger \) and set \(\varGamma _n=||x_n-x^\dagger ||^2\), we obtain

Now, we consider two cases.

Case 1 There exists a number \(n_0\ge 1\) such that \(\varGamma _{n+1}\le \varGamma _n\) for all \(n\ge n_0\). In this case, the limit of the sequence \(\left\{ \varGamma _n\right\} _{n}\) exists. Moreover, from condition (H6) and (23), we obtain \(\theta _n||x_n-x_{n-1}||\rightarrow 0\) and \(\gamma _n\rightarrow 0\) as \(n\rightarrow \infty \). Thus, it follows from (28) and \(\beta _n\ge a>0\) that

Let \(\omega _w(x_n)\) denote the set of weak cluster points of the sequence \(\left\{ x_n\right\} \). It follows from the definition of \(\left\{ w_n\right\} \) that \(\omega _w(x_n)=\omega _w(w_n)\). Moreover, it follows from (29) that \(\omega (w_n)\subset EP(f,C)\) (see, for example, a proof for this conclusion in [14, Theorem 3.1]). Now, using Claim 1 with \(x^*=x^\dagger \), we obtain

It follows from the definition of \(w_n\) that

where \(M=\sup \left\{ ||x_n-x_{n-1}||,||x_n-x^\dagger ||:n\ge 1\right\} \). Thus, from relations (30) and (31), we get

where

Note that \(\frac{\theta _n}{\gamma _n}||x_n-x_{n-1}||\rightarrow 0\) as \(n\rightarrow \infty \). Thus, from relations (29) and (33), we see that

in which the last inequality follows from the facts that \(x^\dagger =P_{EP(f,C)}(0)\) and \(\omega _w(x_n)\subset EP(f,C)\). Thus, Lemma 2.2, together with relations (32), (34) and condition (H6), ensures that \(\varGamma _n\rightarrow 0\) or \(x_n\rightarrow x^\dagger \) as \(n\rightarrow \infty \).

Case 2 There exists a subsequence \(\left\{ \varGamma _{n_i}\right\} \) of \(\left\{ \varGamma _{n}\right\} \) such that \(\varGamma _{n_i}\le \varGamma _{n_i+1}\) for all \(i\ge 0\). In that case, it follows from Lemma 2.3 that

where \(\tau (n)=\max \left\{ k\in N:n_0\le k \le n: \varGamma _k\le \varGamma _{k+1}\right\} \). Moreover the sequence \(\left\{ \tau (n)\right\} \) is non-decreasing and \(\tau (n)\rightarrow +\infty \) as \(n\rightarrow \infty \). It follows from relations (28) and (35) that

From the definition of \(\varGamma _n\), we have

Thus, from (36), we obtain

Passing to the limit in (37) and noting that \(\theta _{\tau (n)}||x_{\tau (n)}-x_{{\tau (n)}-1}|| \rightarrow 0\) and \(\beta _{\tau (n)}\ge a>0\), we obtain

Thus, as in Case 1, we obtain \(\omega _w(x_{\tau (n)})=\omega _w(w_{\tau (n)})\subset EP(f,C)\) and

where

From (38), we obtain \(\gamma _{\tau (n)}\varGamma _{\tau (n)}\le \varGamma _{\tau (n)}-\varGamma _{\tau (n)+1}+\gamma _{\tau (n)} \delta _{\tau (n)}\), which from (35), implies that \(\gamma _{\tau (n)}\varGamma _{\tau (n)}\le \gamma _{\tau (n)} \delta _{\tau (n)}\). Thus

because \(\gamma _{\tau (n)}>0\). By arguing similarly to Case 1, we also obtain \(\limsup _{n} \delta _{\tau (n)}\le 0\). Thus, from (40), we get \(\limsup _{n} \varGamma _{\tau (n)}\le 0\), which together with relation (38), implies that

Hence \(\varGamma _{\tau (n)+1}\rightarrow 0\). Consequently, \(\varGamma _n\rightarrow 0\) because relation (35) or \(x_n\rightarrow x^\dagger \) as \(n\rightarrow \infty \). This completes the proof. \(\square \)

Observe that when \(\theta =0\) then \(\theta _n={\bar{\theta }}_n=0\) and we obtain the following result which follows directly from Theorem 4.1.

Corollary 4.1

Suppose that the assumptions (A1), (A2b), (A3)–(A5) hold. Choose two sequences \(\left\{ \beta _n\right\} \) and \(\left\{ \gamma _n\right\} \) as in Algorithm 4.3. Then, the sequence \(\left\{ x_n\right\} \) generated by

converges strongly to the minimum-norm solution \(x^\dagger \) of problem (EP).

Now, we consider the constrained optimization problem

where \(\phi : C \rightarrow \mathfrak {R}\) is a proper convex, lower semicontinuous function. We assume that the solution set \(\arg \min _C \phi \) of problem (OP) is nonemtpy. For each \(x,~y \in C\), set \(f(x,y)=\phi (y)-\phi (x)\). In this case, the resolvent \(T_\lambda ^f\) is the proximal mapping \(\mathrm{prox}_{\lambda \phi }\) of \(\phi \) with the parameter \(\lambda \), i.e.,

Thus, we obtain immediately the following corollary from Theorem 4.1

Corollary 4.2

Suppose that \(\phi : C \rightarrow \mathfrak {R}\) is a proper convex, lower semi-continuous function, and that the solution set \(\arg \min _C \phi \) of problem (OP) is nonempty. Choose \(x_0,~x_1\in H\) and the parameters \(\lambda >0\), \(\theta \in [0,1)\), \(\left\{ \gamma _n\right\} \subset (0,+\infty )\), \(\left\{ \epsilon _n\right\} \subset [0,+\infty )\), \(\left\{ \beta _n\right\} \subset [a,1]\subset (0,1]\), \(\left\{ \theta _n\right\} \) as in Algorithm 4.3. Then, the sequence \(\left\{ x_n\right\} \) generated by

converges strongly to the minimum-norm solution \(x^\dagger \) of problem (OP).

5 Numerical illustrations

In this section, we present numerical experiments and comparison with related algorithms illustrating the behavior of our proposed algorithms. In case that the solution of problem (EP) is unknown, we’ll use the sequence

as a measure to describe the computational performance of the algorithms. Recall that \(\mathrm{prox}_{\lambda f}\) is denoted by the proximal mapping of the function f with \(\lambda >0\), i.e.,

Clearly \(D_n=0\) if and only if \(x_n\) is the solution of problem (EP). So, the convergence of \(D_n\) to 0 can be considered as if \(x_n\) converges to the solution of the problem. Otherwise, when the solution \(x^*\) of the problem is known, we use the sequence

All the programs are written in Matlab 7.0 with the Optimization Toolbox and computed on a PC Desktop Intel(R) Core(TM) i5-3210M CPU 2.50 GHz, RAM 2.00 GB.

Example 1 In this example, we focus in \(\mathfrak {R}^m\) (\(m=100,~150\)) with the bifunction

where \(q\in \mathfrak {R}^m\) and P, Q are two \(m\times m\) matrices such that Q is symmetric positive semi-definite and \(Q-P\) is symmetric negative semi-definite. All the entries of Q, P, and q are in \((-2,2)\) and the feasible set is

Experiment 1 Here the data is randomly generated such that \(Q-P\) is negative definite. We have

where \(\gamma \) is the smallest eigenvalue of \(Q-P\). In this case, the bifunction f is strongly monotone with the constant \(\gamma \). Then, the aim of this experiment is to describe the numerical behavior of the relaxed version of Algorithm 3.1 (shortly, IPPA) and Algorithm 3.2 (MIPPA). We choose \(\lambda =1\) for IPPA and \(\lambda _n=1/(n+1)\) for MIPPA. The starting point is \(x_0=x_1\) and generated randomly in the interval [0, 1]. For computing an approximation of the resolvent \(T_\lambda ^f\) of bifunction f we use the regularization algorithm [30, Algorithm A1] (a inner loop over iteration). This means that we find \(x_{n+1}\) in problem (AEP) in Remark 3.1 as a \(\delta _n\)-solution at each iteration (where \(\delta _n=\varDelta _n-a\varDelta _{n+1},~ a=2, \varDelta _n=\frac{1}{a^n}\)). These computations are obtained easily thanks to the Lipschip-type condition of f (see, [30, Lemma 5.1] for more details).

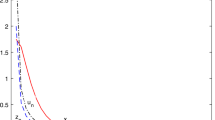

The numerical results are shown in Figs. 1 and 2 for different inertial parameters. In these figures, the y-axis represents for the value of \(D_n\) in Log-scale while the x-axis is for the execution time elapsed in seconds. We see that IPPA preforms better than MIPPA, but we need to keep in mind the theoretical advantages of MIPPA, as explained in the previous sections.

Experiment 2 In this experiment, we consider three settings.

Case 1 Choose \(P=Q=diag(1,2,\ldots ,m)\) and \(q=0\). In this case, the solution of problem (EP) is \(x^*=(0,0,\ldots ,0)^T\).

Case 2 Choose \(P=Q\) as full, symmetric and positive define matrix and \(q=0\). The solution of problem (EP) is again \(x^*=(0,0,\ldots ,0)^T\).

Case 3 The entries of P, Q and q are generated randomly such that Q is symmetric positive semi-definite and \(Q-P\) is symmetric negative semi-definite. The solution of problem (EP) is unknown.

In all above the cases, the bifunction f is monotone. We compare Algorithm 4.3 (shortly, MaIPPA) with four strongly convergent algorithms, namely algorithm RIPAFAN introduced in [8], the hybrid projection proximal point algorithm (HPPA1) proposed in [36, Corollary 3.1], the hybrid projection proximal point algorithm (HPPA2) suggested in [37, Theorem 3.1] and the shrinking projection proximal point algorithm (SPPA) presented in [34, Corollary 3.6].

The parameters are chosen as follows: \(\theta =0.5\), \(\beta _n=n/(2n+1)\), \(\gamma _n=1/(n+1)\), \(\epsilon _n=1/(n+1)^{1.1}\) for MaIPPA; \(\gamma _n=0.5\), \(\delta _n=\frac{1}{(n+1)^{1.1}}\), \(\beta _n=\frac{n}{2n+1}\), \(\alpha _n=1-\beta _n-\frac{1}{(n+1)^{1.1}}\), \(g=x_0\) for RIPAFAN; \(r_n=\lambda =1\) for all the algorithms. The starting point is randomly chosen as in the previous experiment.

We emphasize here that in both Cases 1 and 2, since P is symmetric, we get that \(\left\langle Px,y\right\rangle =\left\langle Py,x\right\rangle \). Thus, from the fact \(P=Q\), we obtain

Hence, our problem (EP) becomes the optimization problem on C with the optimization function \(g(x)=x^TPx+q^Tx\). Thus, the resolvent \(T_\lambda ^f(x)\) of the bifunction f is the proximal mapping \(\mathrm{prox}_{\lambda g}(x)\) of the function g(x). Thus, \(T_\lambda ^f(x)\) can be computed effectively by the Optimization Toolbox in Matlab. In the last case, the resolvent \(T_\lambda ^f(x)\) is found similarly to Experiment 1. The numerical results are shown in Figs. 3, 4, 5, 6, 7 and 8 and suggest that MaIPPA (Algorithm 4.3) preforms better than the other algorithms. In Figs. 3, 4, 5, 6, 7 and 8, as in Experiment 1, the y-axis represents for the value of \(D_n\) and \(E_n\) in Log-scale and the x-axis is for the execution time elapsed in seconds.

Example 2 Let \(C \subset \mathfrak {R}^m\) be a polyhedral convex set given by

where A is a matrix of size \(10 \times m\) and b is vector in \(\mathfrak {R}^m\) with their entries generated randomly in \((-2,2)\). Consider the bifunction f in [32] of the form \(f(x,y)=\left\langle F(x)+Qy+q,y-x\right\rangle \) where Q is a randomly generated symmetric positive semidefinite matrix, \(q \in \mathfrak {R}^m\), and \(F: \mathfrak {R}^m \rightarrow \mathfrak {R}^m\) is a mapping. For experiments, we suppose that F is of the form \(F(x)=M(x)+Dx+c\), where

and \(c=(-1,-1,\ldots ,-1)\), D is a square matrix of order m, given by

Experiment 3 In this experiment, we choose the parameters as in Experiment 2. For finding an approximation of \(T_\lambda ^f\), we use a linesearch procedure presented in [32, Algorithm 2] to find a \(\delta _n\)-approximation solution (where \(\delta _n=\varDelta _n-a\varDelta _{n+1},~ a=2, \varDelta _n=\frac{1}{a^n}\)). The using of a linesearch in general is time-consuming. The results are illustrated in Figs. 9 and 10.

6 Conclusions

In this paper, we have presented some algorithms which provide the strong convergence for solving an monotone equilibrium problem in a Hilbert space. The algorithms can be considered as the combinations between the (relaxed) proximal point method, the inertial method and the Mann-like iteration. The strong convergence of the algorithms are established under several standard conditions imposed on cost bifunction and control parameters. The using of inertial technique is the aim to improve the convergent properties of the algorithms. Several experiments are performed to support the theoretical results. The numerical results have illustrated the fast convergence of the new algorithm over some existing algorithms.

References

Antipin, A.S.: Second order controlled differential grradient methods for equilibrium problems. Differ. Equ. 35, 592–601 (1999)

Antipin, A.S.: Equilibrium programming: proximal methods. Comput. Math. Phys. 37, 1285–1296 (1997)

Antipin, A.S.: Second-order proximal differential systems with feedback control. Differ. Equ. 29, 1597–1607 (1993)

Anh, P.N., Hieu, D.V.: Multi-step algorithms for solving EPs. Math. Model. Anal. 23, 453–472 (2018)

Balhag, A., Chbani, Z., Riahi, H.: Existence and continuousdiscrete asymptotic behaviour for Tikhonov-like dynamical equilibrium systems. Evol. Equ. Control Theory 7, 373–401 (2018)

Bauschke, H.H., Combettes, P.L.: Convex Analysis and Monotone Operator Theory in Hilbert Spaces. Springer, New York (2011)

Blum, E., Oettli, W.: From optimization and variational inequalities to equilibrium problems. Math. Program. 63, 123–145 (1994)

Chbani, Z., Riahi, H.: Weak and strong convergence of an inertial proximal method for solving Ky Fan minimax inequalities. Optim. Lett. 7, 185–206 (2013)

Combettes, P.L., Hirstoaga, S.A.: Equilibrium programming in Hilbert spaces. J. Nonlinear Convex Anal. 6, 117–136 (2005)

Fan, K.: A minimax inequality and applications. In: Shisha, O. (ed.) Inequality III, pp. 103–113. Academic Press, New York (1972)

Facchinei, F., Pang, J.S.: Finite-Dimensional Variational Inequalities and Complementarity Problems. Springer, Berlin (2002)

Flam, S.D., Antipin, A.S.: Equilibrium programming and proximal-like algorithms. Math. Program. 78, 29–41 (1997)

Hieu, D.V., Cho, Y.J., Xiao, Y.-B.: Modified extragradient algorithms for solving equilibrium problems. Optimization 67(11), 2003–2029 (2018). https://doi.org/10.1080/02331934.2018.1505886

Hieu, D.V.: An inertial-like proximal algorithm for equilibrium problems. Math. Meth. Oper. Res. 88(3), 399–415 (2018). https://doi.org/10.1007/s00186-018-0640-6

Hieu, D.V.: New inertial algorithm for a class of equilibrium problems. Numer. Algor. 80(4), 1413–1436 (2018). https://doi.org/10.1007/s11075-018-0532-0

Hieu, D.V.: Strong convergence of a new hybrid algorithm for fixed point problems and equilibrium problems. Math. Model. Anal. 24, 1–19 (2019)

Hieu, D.V., Strodiot, J.J.: Strong conve rgence theorems for equilibrium problems and fixed point problems in Banach spaces. J. Fixed Point Theory Appl. 20, 131 (2018)

Hieu, D.V., Anh, P.K., Muu, L.D.: Modified extragradient-like algorithms with new stepsizes for variational inequalities. Comput. Optim. Appl. 73, 913–932 (2019)

Hieu, D.V., Cho, Y.J., Xiao, Y.-B.: Golden ratio algorithms with new stepsize rules for variational inequalities. Math. Meth. Appl. Sci. (2019). https://doi.org/10.1002/mma.5703

Iusem, A.N., Sosa, W.: On the proximal point method for equilibrium problems in Hilbert spaces. Optimization 59, 1259–1274 (2010)

Konnov, I.V.: Application of the proximal point method to nonmonotone equilibrium problems. J. Optim. Theory Appl. 119, 317–333 (2003)

Konnov, I.V.: Equilibrium Models and Variational Inequalities. Elsevier, Amsterdam (2007)

Konnov, I.V., Ali, M.S.S.: Descent methods for monotone equilibrium problems in Banach spaces. J. Comput. Appl. Math. 188, 165–179 (2006)

Maingé, P.E.: A hybrid extragradient-viscosity method for monotone operators and fixed point problems. SIAM J. Control Optim. 47, 1499–1515 (2008)

Martinet, B.: Régularisation d’inéquations variationelles par approximations successives. Rev. Fr. Autom. Inform. Rech. Opér. Anal. Numér. 4, 154–159 (1970)

Moudafi, A.: Proximal point algorithm extended to equilibrum problem. J. Nat. Geom. 15, 91–100 (1999)

Moudafi, A.: Second-order differential proximal methods for equilibrium problems. J. Inequal. Pure Appl. Math. 4, Art. 18 (2003)

Mordukhovich, B., Panicucci, B., Pappalardo, M., Passacantando, M.: Hybrid proximal methods for equilibrium problems. Optim. Lett. 6, 1535–1550 (2012)

Muu, L.D., Quy, N.V.: On existence and solution methods for strongly pseudomonotone equilibrium problems. Vietnam J. Math. 43, 229–238 (2015)

Muu, L.D., Quoc, T.D.: Regularization algorithms for solving monotone Ky Fan inequalities with application to a Nash–Cournot equilibrium model. J. Optim. Theory Appl. 142, 185–204 (2009)

Muu, L.D., Oettli, W.: Convergence of an adative penalty scheme for finding constrained equilibria. Nonlinear Anal. TMA 18, 1159–1166 (1992)

Quoc, T.D., Muu, L.D., Nguyen, V.H.: Extragradient algorithms extended to equilibrium problems. Optimization 57, 749–776 (2008)

Rockafellar, R.T.: Monotone operators and the proximal point algorithm. SIAM J. Control Optim. 14, 877–898 (1976)

Saewan, S., Kumam, P.: A modified hybrid projection method for solving generalized mixed equilibrium problems and fixed point problems in Banach spaces. Comput. Math. Appl. 62, 1723–1735 (2011)

Santos, P., Scheimberg, S.: An inexact subgradient algorithm for equilibrium problems. Comput. Appl. Math. 30, 91–107 (2011)

Tada, A., Takahashi, W.: Weak and strong convergence theorems for a nonexpansive mapping and an equilibrium problem. J. Optim. Theory Appl. 133, 359–370 (2007)

Takahashi, W., Zembayashi, K.: Strong and weak convergence theorems for equilibrium problems and relatively nonexpansive mappings in Banach spaces. Nonlinear Anal. 70, 45–57 (2009)

Vuong, P.T., Strodiot, J.J., Nguyen, V.H.: Extragradient methods and linesearch algorithms for solving Ky Fan inequalities and fixed point problems. J. Optim. Theory Appl. 155, 605–627 (2012)

Xu, H.K.: Another control condition in an iterative method for nonexpansive mappings. Bull. Austral. Math. Soc. 65, 109–113 (2002)

Acknowledgements

The authors would like to thank the Associate Editor and the two anonymous referees for their valuable comments and suggestions which helped in improving the original version of this paper. This paper is supported by Vietnam National Foundation for Science and Technology Development (NAFOSTED) under Grant No. 101.01-2017.315.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflicts of interest

There are no conflicts of interest to this work.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Van Hieu, D., Gibali, A. Strong convergence of inertial algorithms for solving equilibrium problems. Optim Lett 14, 1817–1843 (2020). https://doi.org/10.1007/s11590-019-01479-w

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11590-019-01479-w