Abstract

We describe an iterative method for solving absolute value equations. The result gives a sufficient condition for unique solvability of these equations for arbitrary right-hand sides. This sufficient condition is compared with that one by Mangasarian and Meyer.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The absolute value equation

(where \(A,B\in \mathbb{R }^{n\times n}\) and \(b\in \mathbb{R }^n\)) has been recently studied by several authors, cf. e.g. Caccetta, Qu and Zhou [1], Hu and Huang [3], Karademir and Prokopyev [4], Mangasarian [5, 6], Mangasarian and Meyer [7], Prokopyev [9], Rohn [11, 12], and Zhang and Wei [13]. The reason for recent interest in this new topic consists probably in the fact that some frequently occurring optimization problems, as e.g. linear complementarity problem, linear programming, or convex quadratic programming can be equivalently reformulated in the form (1) and thus solved as absolute value equations (Rohn [10], Murty [8]).

In the above-quoted papers various methods for solving absolute value equations were proposed, based mostly on nonlinear optimization techniques. Little attention, however, has been paid so far to iterative methods for solving (1). In this paper we formulate such a method (Theorem 1) working under assumption of existence of matrices \(M\) and \(R\) satisfying certain matrix inequality. In Theorem 2 we show how a specific solution of this inequality can be found. This theorem implies that the condition

(\(\varrho \) denotes the spectral radius) guarantees unique solvability of the equation

for arbitrary right-hand side \(b\). In Sect. 5 we compare this condition with the Mangasarian–Meyer condition

(\({\sigma }_{\max }\) denotes the largest singular value) and we show that they are independent of each other. In the last Theorem 4 we propose merging the two conditions into a single one. Some brief comments about possibility of finding a necessary and sufficient condition of unique solvability of an absolute value equation for each right-hand side conclude the paper.

2 General condition

Our main result in Sect. 3 will be delivered under assumption of existence of matrices \(M,R\in \mathbb{R }^{n\times n}\) satisfying

(where \(I\) denotes the identity matrix). As this inequality looks complicated at the first glance, we shall bring it to some simpler, albeit two-line form. To this end, let

then \(G\) is nonnegative and the condition (2) can be equivalently written as

Now, since the right-hand side of (4) is nonnegative, we have that \(M\ge 0\), hence \(|M|=M\) and (4) implies

Conversely, if (5), (6) hold, then \(M=|M|\) and (5) turns into (4), which is (2). Hence, under notation (3), the condition (2) can be written either in one-line form (4), or in equivalent two-line form (5), (6). The former option is shorter whereas the latter is more descriptive.

3 Iterative method under general condition

The following theorem is the basic result of this paper. \({\sigma }_{\max }(A)\) stands for the largest singular value of \(A\).

Theorem 1

Let \(M\) and \(R\) satisfy (2). Then the sequence \(\{x^i\}_{i=0}^{\infty }\) given by \(x^0=Rb\) and

converges to the unique solution \(x^*\) of (1) and for each \(i\ge 0\) there holds

and

proof

For clarity, we divide the proof into several steps.

(a) Let (2) have a solution \(M\) and \(R\). Using the equivalent conditions (5), (6), we have

and postmultiplying this inequality by \(G\) and adding \(I\) to both sides we obtain

and by induction

for \(k=0,1,2,\ldots \). In view of nonnegativity of \(M\), this shows that the nonnegative matrix series \(\sum _{j=0}^{\infty }G^j\) satisfies

hence it is convergent, so that \(G^j\rightarrow 0\) and consequently

Now we have

hence

Since \(\varrho (I-RA)<1\), the matrix

is nonsingular, which gives that both \(R\) and \(A\) are nonsingular.

(b) Let \(i\ge 1\). Subtracting the equations

we get

and for each \(m\ge 1\) by induction

From the final inequality

in view of the fact that \(G^{i-1}\rightarrow 0\) as \(i\rightarrow \infty \) due to (10), we can see that the sequence \(\{x^i\}\) is Cauchian, thus convergent, \(x^i\rightarrow x^{*}\). Taking the limit in (7) we get

which gives

and employing the above-proved nonsingularity of \(R\), we can see that \(x^*\) is a solution of (1). The estimation (8) follows from the above-established inequality

by writing \(i+1\) instead of \(i\) and taking \(m\rightarrow \infty \).

(c) To prove (9), let us subtract the Eq. (7) from the equation \(Ax^*+B|x^*|=b\) written in the form

Then we have

and taking Euclidean norm we obtain

(d) To prove uniqueness, assume that (1) has a solution \(x^{\prime }\). From the equations

written as

we obtain

hence

and by (9),

which shows that \(x^{\prime }=x^*\).

This completes the proof. \(\square \)

4 Iterative method under specific condition

Let us write the condition (2), as before, in the form

where

Obviously, such \(M\) and \(R\) do not exist always because their existence implies nonsingularity of \(A\) (Proof of Theorem 1, (a)). We show how \(M\) and \(R\) satisfying (11), (12) can be found. \(\varrho (A)\) stands for the spectral radius of \(A\).

Theorem 2

Let \(A\) be nonsingular and let

hold. Then the sequence \(\{x^i\}_{i=0}^{\infty }\) given by \(x^0=A^{-1}b\) and

converges to the unique solution \(x^*\) of (1) and for each \(i\ge 0\) there holds

and

proof

As is well known, the condition (13) is equivalent to

(Horn and Johnson [2]). Then the matrices

satisfy (11), (12). Indeed, \(M\ge 0\) by assumption, and because \(G=|A^{-1}B|\), we have \(M(I-G)=M(I-|A^{-1}B|)=I\). Then Theorem 1 applies. \(\square \)

5 Comparison of two sufficient conditions

In this section we shall be interested in sufficient conditions for existence of a unique solution, putting aside the computational aspects. From Theorem 2 we immediately obtain this sufficient condition.

Theorem 3

If (13) holds, then for each right-hand side \(b\) the absolute value equation

has a unique solution.

Mangasarian and Meyer studied in [7] an absolute value equation in a simplified form

which is a special case of our Eq. (15) for \(B=-I\). In [7, Prop. 3] they proved that the Eq. (16) is uniquely solvable for each \(b\) if

holds, where \({\sigma }_{\min }\) denotes the smallest singular value. Since

(assuming nonsingularity of \(A\)), we can bring the condition (17) to an equivalent form

which is similar to our condition (13) which in the case of Eq. (16) reads

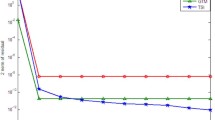

To compare the two sufficient conditions, we created the following MATLAB function.

As it can be seen, it generates \(j\) randomly generated matrices of size \(n\times n\), where \(n\) varies randomly between \(2\) and \(m\). For each such a matrix \(A\), the values

are computed and the numbers of occurrences of the four cases

are stored in variables \(rs1, r1s, s1r\), and \(ors\), respectively. We ran the function using the command

i.e., on 10,000 randomly generated matrices of random sizes varying between \(2\times 2\) and \(100\times 100\). The results were as follows:

Hence, both conditions (19) and (18) were satisfied in 1985 out of 10,000 examples, in 483 cases the condition (19) was satisfied whereas (18) was not, in 79 cases the two conditions reversed their roles, and in 7453 cases (almost 75 %) neither of them worked. This example reveals an important fact that the two conditions (19), (18) are independent of each other, i.e., none of them implies the second one; this is so because both cases \(r<1<s, s<1<r\) can occur. To be perfectly clear, we show two specific examples illustrating occurrences of the two cases.

Here \(r<1<s\).

Here, on the contrary, \(s<1<r\).

In all computed sets of examples it was \(r1s>s1r\), sometimes \(r1s\) was greater by an order of magnitude. This shows that generally the condition (19) should be preferred. But we can merge both conditions into a single one, making use of both of them.

Theorem 4

If \(A\) is invertible and

holds, then for each right-hand side \(b\) the absolute value equation

has exactly one solution.

6 Conclusion

From our iterative method we have derived a sufficient condition for unique solvability of an absolute value equation for each right-hand side, and we have compared it with a sufficient condition by Mangasarian and Meyer. Of much greater theoretical importance would be to find a necessary and sufficient condition. What we can only say at this moment is that any necessary and sufficient such a condition would be difficult to verify considering the fact that the problem of solvability of absolute value equations is NP-hard, as proved by Mangasarian and Meyer [7].

References

Caccetta, L., Qu, B., Zhou, G.: A globally and quadratically convergent method for absolute value equations. Comput. Optim. Appl. 48(1), 45–58 (2011). doi:10.1007/s10589-009-9242-9

Horn, R.A., Johnson, C.R.: Matrix analysis. Cambridge University Press, Cambridge (1985)

Hu, S.L., Huang, Z.H.: A note on absolute value equations. Optim. Lett. 4(3), 417–424 (2010). doi:10.1007/s11590-009-0169-y

Karademir, S., Prokopyev, O.A.: A short note on solvability of systems of interval linear equations. Linear Multilinear Algebra 59(6), 707–710 (2011). doi:10(1080/03081087).2010.486403

Mangasarian, O.: Absolute value equation solution via concave minimization. Optim. Lett. 1(1), 3–8 (2007). doi:10.1007/s11590-006-0005-6

Mangasarian, O.: A generalized Newton method for absolute value equations. Optim. Lett. 3(1), 101–108 (2009). doi:10.1007/s11590-008-0094-5

Mangasarian, O.L., Meyer, R.R.: Absolute value equations. Linear Algebra Appl. 419(2–3), 359–367 (2006). doi:10.1016/j.laa.2006.05.004

Murty, K.G.: Linear complementarity, linear and nonlinear programming. Heldermann, Berlin (1988)

Prokopyev, O.: On equivalent reformulations for absolute value equations. Comput. Optim. Appl. 44(3), 363–372 (2009). doi:10.1007/s10589-007-9158-1

Rohn, J.: A theorem of the alternatives for the equation \({A}x+{B}|x|=b\). Linear Multilinear Algebra 52, 421–426 (2004)

Rohn, J.: An algorithm for solving the absolute value equation. Electron. J. Linear Algebra 18, 589–599 (2009). http://www.math.technion.ac.il/iic/ela/ela-articles/articles/vol18_pp589-599.pdf

Rohn, J.: An algorithm for solving the absolute value equation: an improvement. Technical Report 1063, Institute of Computer Science, Academy of Sciences of the Czech Republic, Prague (2010). http://uivtx.cs.cas.cz/~rohn/publist/absvaleqnreport.pdf

Zhang, C., Wei, Q.: Global and finite convergence of a generalized Newton method for absolute value equations. J. Optim. Theory Appl. 143(2), 391–403 (2009). doi:10.1007/s10957-009-9557-9

Acknowledgments

The authors thank the referee for helpful suggestions that resulted in essential improvement of the text of the paper.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Rohn, J., Hooshyarbakhsh, V. & Farhadsefat, R. An iterative method for solving absolute value equations and sufficient conditions for unique solvability. Optim Lett 8, 35–44 (2014). https://doi.org/10.1007/s11590-012-0560-y

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11590-012-0560-y