Abstract

In this paper, we further elaborate on a methodology for modeling geotechnical data to be used as input to numerical simulation and TBM performance codes. The expression “geotechnical data” refers collectively to the spatial variability and uncertainty exhibited by the boundaries and by the mechanical or other parameters of each geological formation filling a prescribed 3D domain. Apart from commercial design and visualization software, such as AutoCAD Land Desktop® and 3D solid modelling and meshing pre-processors, the new tools employed in this methodology include relational databases of soil and rock test data, Kriging estimation and simulation methods, and a fast algorithm for forward or backward analysis of TBM logged data. The latter enables the continuous upgrade of the soil or rock mass geotechnical model during underground construction, based on feedback from the excavation machines, so that the uncertainty of predictions in unsampled areas is continuously reduced. The approach presented here is non-intrusive, since it may be used in conjunction with a commercial or any other available numerical tunneling simulation code. The application of these tools is demonstrated in the Mas-Blau section of the L9 tunnel in Barcelona.


1 Introduction

Deterministic numerical tunneling simulation models relying on the Boundary Element Method (BEM), Finite Element Method (FEM), Finite Difference Method (FDM), Distinct Element Method (DEM) or others, with soil or rock parameters averaged over very large volumes (geological units or sections) and assigned uniformly to each building “brick” (element) of the model, are relatively well developed and studied. For example, the recent paper [13] investigates the effect of permeability on the deformations of shallow tunnels, and [12] investigates the effect of the soil constitutive model on the ground deformation behavior around a tunnel. However, past studies [6, 7, 11, 21] have shown that the inherent variability of soil or rock masses, viewed at the scale of the core or of the elements needed for numerical simulations (mesoscale), can produce ground deformation behaviors that are qualitatively different from those predicted by conventional deterministic analyses in which mechanical properties are averaged over large volumes, such as an entire geological unit (macroscale). Hence, there could be cases in which the latter approach leads to significant errors and consequently to wrong decisions. Also worth mentioning are the concerns of Tunnel Boring Machine (TBM) and Roadheader (RH) developers regarding the estimation of the spatial distribution of rock or soil strength and wear parameters inside the geological domain, and in particular along the planned chainage of the tunnel, before excavation begins.

In this work, we aim to transform the conceptual, qualitative geological model, together with the collected geotechnical data, into a 3D geotechnical or ground model that exhibits variability at the mesoscale. As shown in the flowchart of Fig. 1, the approach starts with the creation of the Discretized Solid Geological Model (DSGM) together with the Digital Terrain Model (DTM) (for shallow excavations). The procedure for accomplishing this task using CAD techniques for the discretization of solid models is illustrated in Sect. 2. In the next stage, the soil and rock mechanical parameters calibrated on laboratory or in situ test data, together with the spatial coordinates of the samples, are stored in a relational database [4]. The new concept introduced in this database scheme is that it contains not only all the parameters of a given soil or rock derived by assuming a certain constitutive law, but also, in a transparent manner, the data reduction and model calibration methodology. Apart from collecting, organizing and processing the experimental data, this approach is also useful for calibrating the micromechanical properties of discrete element or particle models that could subsequently be employed to simulate rock cutting with disc cutters, knives or cutting picks [18].
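The database concept described above, in which each stored parameter carries its sample coordinates together with the constitutive law and calibration procedure used to derive it, can be sketched as a small relational schema. The following is a minimal illustration using Python's built-in `sqlite3`; all table names, column names and values are hypothetical and are not the schema of the database in [4].

```python
import sqlite3

# Hypothetical schema: sample -> test -> parameter, so every parameter value
# is traceable to its location and to the method used to derive it.
SCHEMA = """
CREATE TABLE sample (
    id INTEGER PRIMARY KEY,
    borehole TEXT NOT NULL,
    x REAL, y REAL, z REAL,        -- spatial coordinates of the sample
    formation TEXT                 -- e.g. QL2, QL3
);
CREATE TABLE test (
    id INTEGER PRIMARY KEY,
    sample_id INTEGER NOT NULL REFERENCES sample(id),
    test_type TEXT NOT NULL        -- e.g. triaxial, SPT
);
CREATE TABLE parameter (
    id INTEGER PRIMARY KEY,
    test_id INTEGER NOT NULL REFERENCES test(id),
    name TEXT NOT NULL,            -- e.g. friction_angle
    value REAL NOT NULL,
    unit TEXT,
    constitutive_model TEXT,       -- law assumed when deriving the value
    calibration_method TEXT        -- data reduction / calibration procedure
);
"""

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
# Hypothetical records, for illustration only.
conn.execute("INSERT INTO sample VALUES (1, 'BH-01', 100.0, 250.0, -12.5, 'QL3')")
conn.execute("INSERT INTO test VALUES (1, 1, 'triaxial')")
conn.execute(
    "INSERT INTO parameter VALUES (1, 1, 'friction_angle', 32.0, 'deg', "
    "'Mohr-Coulomb', 'linear fit of peak stresses in p-q space')"
)
# Retrieve a parameter with its coordinates and calibration provenance.
rows = conn.execute(
    """SELECT s.x, s.y, s.z, p.name, p.value, p.constitutive_model, p.calibration_method
       FROM parameter p
       JOIN test t ON p.test_id = t.id
       JOIN sample s ON t.sample_id = s.id
       WHERE s.formation = ? AND p.name = ?""",
    ("QL3", "friction_angle"),
).fetchall()
print(rows)
```

Keeping the calibration method as data, rather than only the resulting number, is what allows the query above to return the provenance alongside the value and coordinates needed for geostatistical interpolation.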

Herein, the geometry of a given lithological unit or of the groundwater table, as well as the value of a mechanical parameter (e.g. modulus of elasticity, uniaxial and tensile strengths, etc.) or of the permeability, is considered to be a Random Function (RF) or random field. The term RF means that at each location (i.e. at a grid node or inside a grid cell of the discretized model) the considered parameter behaves like a Random Variable (RV), that is, like a function that may take different values (outcomes) with given probabilities. The theory of stochastic processes and RFs has long been used to solve problems of interpolation and filtering. In the literature, the term stochastic process is commonly used when x varies in 1D space, and the term RF when the space has more than one dimension. Geostatistical theory [1, 2, 5, 10, 14–16] is used as the tool for assigning lithological, hydrogeological and mechanical parameters to each finite element (unit cell) of the DSGM. The basic ingredients of this theory, especially the Kriging estimation and Simulated Annealing (SA) techniques, are outlined in Sect. 3. The same section also presents the basic features of the upgraded KRIGSTAT code [5], which includes these two methods and is dedicated to the interpolation, averaging or filtering of a given material or geological parameter or of TBM excavation logged data.

In Kriging, the experimental semivariogram is first computed from a set of data. Then, a model semivariogram is calibrated on the experimental one, and the best linear unbiased estimate of the parameter at any grid cell of the DSGM is found. In the random field generation scheme (SA) of KRIGSTAT, on the other hand, n different input files (i.e. n realizations) of the random field or fields (elastic moduli, cohesion, internal friction angle, etc.) may be generated and assigned to the grid cells of the DSGM. These input files contain all the information needed by the deterministic FEM, FDM or BEM programs, i.e. the assigned material type and associated parameters in each grid cell. Thus, in contrast to the popular spectral stochastic finite element method [8], no programming effort is required to modify the existing FEM or BEM code. These input files can be executed automatically in the FEM or BEM code with the aid of the KRIGSTAT software. Once the solution process is finished, post-processing can be carried out to extract all the response statistics of interest. The non-intrusive stochastic analysis scheme is shown in Fig. 1. The ground model is completed by specifying the in situ stresses and the groundwater table (see Fig. 1).
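The non-intrusive loop described above can be sketched as follows: one unmodified deterministic solver run per realization, followed by statistics over the responses. This is a minimal illustration under explicit assumptions. `run_deterministic_solver` is a hypothetical stand-in for writing an input file and calling an external FEM, FDM or BEM code, its toy "settlement" response is purely illustrative, and the lognormal cell moduli are uncorrelated, unlike the spatially correlated realizations that SA would produce.

```python
import numpy as np


def run_deterministic_solver(cell_moduli):
    """Hypothetical stand-in for an external deterministic code.

    Returns a toy response inversely proportional to the harmonic mean
    of the cell moduli (illustrative only, not a tunneling model).
    """
    harmonic_mean = len(cell_moduli) / np.sum(1.0 / cell_moduli)
    return 1.0e4 / harmonic_mean


def non_intrusive_analysis(realizations):
    """Run the unchanged solver once per realization; return response statistics."""
    responses = np.array([run_deterministic_solver(r) for r in realizations])
    return responses.mean(), responses.std(ddof=1), responses


rng = np.random.default_rng(seed=1)
n_realizations, n_cells = 15, 200
# Hypothetical, spatially uncorrelated lognormal moduli (one row per realization).
fields = rng.lognormal(mean=np.log(50.0), sigma=0.3, size=(n_realizations, n_cells))
mean_resp, std_resp, all_resp = non_intrusive_analysis(fields)
print(f"mean response = {mean_resp:.2f}, std = {std_resp:.2f}")
```

The solver is treated as a black box that only receives cell parameters, which is the property that makes the scheme non-intrusive.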

As shown in Fig. 1, the 3D Kriging estimation or stochastic ground model may also be used as an input file for the TBM performance model. The forward TBM performance model is analytical and has been implemented in the CUTTING_CALC code. This fast code, outlined in Sect. 4, predicts the Specific Energy (SE) consumption or the net advance rate along the planned tunnel alignment, given the ground strength properties, in situ stresses, groundwater pore pressure, cutterhead design and TBM operational parameters such as rotational speed and penetration depth per revolution. The same algorithm has another subroutine that performs similar SE calculations for Roadheaders (RH). The backward TBM model, also a subroutine of the code, is used to estimate the ground strength properties from the measured power consumption, thrust, rotational speed and penetration depth per revolution of the TBM cutterhead. Back-analysis is straightforward because the TBM performance model is analytical. Hence, the estimated strength properties may be used as feedback to upgrade the initial ground model, and so forth, in the manner illustrated in the flowchart of Fig. 1. The numerical model is used to compute the ground deformations induced by tunneling in each spatially heterogeneous realization of this random field, where a realization denotes the estimated or simulated values of a certain variable within the domain. This non-intrusive scheme does not require the user to modify existing deterministic numerical simulation codes.

2 Discretized solid geological modeling

The three-step procedure proposed here for creating the DSGM and DTM, which could subsequently be used directly as input to a numerical code, is illustrated with geological and borehole exploration data from the Mas-Blau tunnel of L9 in Barcelona, and is as follows:

1. The geological model of the Mas-Blau section was created by modeling each interface between the four layers through interpolation of borehole lithological data (see Fig. 2a) on a specified grid, using the geostatistical code KRIGSTAT [3, 5] outlined in Sect. 3. More specifically, the surfaces between adjacent soil formations were derived with the Ordinary Kriging (OK) subroutine, by interpolating the elevation data (z values) identified in the 15 boreholes (Fig. 2a) on a pre-specified (x, y) grid inside the 3D model.

Fig. 2 Example application of Line 9 Barcelona Metro, Mas-Blau section: a Longitudinal view of the exploratory boreholes indicating the lithological units along them (courtesy of GISA); b solid geological model, and c discretized geological model on a mesh with tetrahedral elements and lithological data assigned to grid points (example of MIDAS pre-processor ready for implementation into the numerical simulation code)

2. After the DTM has been constructed in DXF format, the Solid Geological Model (SGM) of each geological unit is created with AutoCAD Land Desktop software in ACIS format (Fig. 2b).

3. Subsequently, each SGM corresponding to a given soil formation (R-QL1, QL2, QL3 and QL4) is imported into a geometrical solid modeling pre-processor [9, 19] and discretized into tetrahedral elements, thus creating the final DSGM. In this manner, a given lithology is assigned to each grid cell (see Fig. 2c). It is worth noting that modeling each layer separately permits one to perform geostatistical analysis inside each layer, as shown in the next section, by assuming statistical homogeneity of each considered soil parameter.

Before proceeding to the next step of assigning material parameters to each grid cell, several possibilities for exploiting such a model may be explored. For example, given the tunnel alignment and diameter, the SGM of the tunnel could be isolated from the global SGM, as shown in Fig. 3a. It then becomes possible to infer the probability that the tunnel face lies in the QL3 formation by using the Indicator Kriging (IK) subroutine of KRIGSTAT. In such an IK process, a binary RV (1 for QL3, 0 otherwise) is associated with each grid cell, and the probability of a particular tunnel face intersecting QL3 is derived by averaging the outcomes over the cells of that face. Figure 3b plots the variation of this probability along the chainage of the tunnel, together with the SE consumed by the TBM as computed with the CUTTING_CALC algorithm explained in Sect. 4, for comparison purposes. It is clear from this figure, as well as from Fig. 3c, that the geological conditions influence the SE.
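The indicator coding and face averaging described above can be sketched as follows. This is a minimal illustration that assumes equal-volume cells on each face and represents each face simply as an array of cell values; when IK estimates are used instead of raw indicators, they are clipped to [0, 1] before averaging. The chainages and lithologies are hypothetical, and the Kriging of the indicators itself is not shown.

```python
import numpy as np


def indicator(lithology, target="QL3"):
    """Binary indicator coding: 1 where a cell belongs to the target formation, else 0."""
    return (np.asarray(lithology) == target).astype(float)


def face_probability(faces):
    """Average the indicator (or IK-estimated probability) over the cells of each face.

    `faces` maps a chainage value to the array of cell values on that face;
    equal cell volumes are assumed, and values are clipped to [0, 1].
    """
    return {ch: float(np.mean(np.clip(v, 0.0, 1.0))) for ch, v in faces.items()}


faces = {  # hypothetical chainages (m) and cell lithologies on each face
    100.0: indicator(["QL2", "QL3", "QL3", "QL2"]),
    120.0: indicator(["QL3", "QL3", "QL3", "QL3"]),
    140.0: indicator(["QL4", "QL4", "QL2", "QL3"]),
}
print(face_probability(faces))  # {100.0: 0.5, 120.0: 1.0, 140.0: 0.25}
```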

Fig. 3 a Solid geological model of the tunnel that displays mixed-face conditions along a significant part of it and single-face conditions close to its end, b plot of the SE consumed by the TBM with respect to the probability of the tunnel face lying in the QL3 layer, c dependence of SE on the probability of the tunnel intersecting the QL3 formation, and d dependence of SE on N SPT

Since the standard penetration test (SPT) blow count, designated N SPT, also reflects the penetration resistance of the soil, and such data were available for the Mas-Blau section, it is logical to look for a possible correlation between this number and the SE. This is attempted in Fig. 3d, where the SE is plotted against N SPT. The N SPT values were interpolated with the OK method on the grid points of the DSGM of the tunnel from the experimental data along the boreholes. From Fig. 3d, some linear correlation between these two parameters may be observed; however, caution is needed because of the subjectivity involved in estimating the in situ N SPT and the smoothing effect induced by the Kriging estimation method.
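A linear correlation such as the one examined in Fig. 3d can be quantified with a least-squares line and the Pearson correlation coefficient. The sketch below uses NumPy's `polyfit` and `corrcoef`. The data values are hypothetical placeholders, not the Mas-Blau measurements.

```python
import numpy as np


def linear_correlation(x, y):
    """Least-squares line y = slope * x + intercept and Pearson coefficient r."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    slope, intercept = np.polyfit(x, y, deg=1)
    r = np.corrcoef(x, y)[0, 1]
    return slope, intercept, r


# Hypothetical face-averaged values, for illustration only.
n_spt = [12.0, 18.0, 25.0, 31.0, 40.0]
se = [2.1, 2.9, 3.6, 4.5, 5.6]
slope, intercept, r = linear_correlation(n_spt, se)
print(f"SE = {slope:.3f} * N_SPT + {intercept:.3f}, r = {r:.3f}, r^2 = {r**2:.3f}")
```

Because Kriged N SPT values are smoothed, a coefficient computed this way describes the smoothed estimates rather than the raw in situ blow counts, which is one reason for the caution expressed above.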

3 Geostatistical ground model

3.1 Basic preliminaries

Geostatistics [1, 2, 10, 14–16] and Random Field theory [22] provide the mathematical tools needed to estimate spatially or temporally correlated variables from sparse sample data. For clarity of presentation, the basic concepts of Geostatistics are outlined here.

Let the capital letter Z denote an RV, and the lower case letter z its outcome values, which are called “realizations”. Often, the probability density function (pdf) of Z is location dependent; hence it is denoted as \( Z(\underset{\sim}{x}) \), where \( \underset{\sim}{x} \) is the position vector (the curly underline denotes a vector) in a fixed Cartesian coordinate system. The cumulative distribution function (cdf) of a continuous RV \( Z(\underset{\sim}{x}) \) is defined as \( F(\underset{\sim}{x}; z) = \operatorname{Prob}\{Z(\underset{\sim}{x}) \le z\} \). When the cdf is made conditional on a particular information set (n), it is called a conditional cdf (ccdf), \( F(\underset{\sim}{x}; z \mid (n)) = \operatorname{Prob}\{Z(\underset{\sim}{x}) \le z \mid (n)\} \). The ccdf is a function of the location \( \underset{\sim}{x} \), of the sample size and spatial distribution of the prior data locations \( \underset{\sim}{x}_{a},\ a = 1, \ldots, n \), and of the n sample values \( z(\underset{\sim}{x}_{a}),\ a = 1, \ldots, n \). The cdf describes the uncertainty about the unsampled value \( Z(\underset{\sim}{x}) \), whereas the ccdf characterizes the reduced uncertainty after taking into account the information set (n).

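It is usual to describe the statistical behavior of an RV by its first two moments, namely its mean (expectation) m and its variance σ², i.e. the mean squared deviation from m:

\[ m = E[Z] = \int_{-\infty}^{+\infty} z\, f(z)\, dz, \qquad \sigma^{2} = E\big[(Z - m)^{2}\big] = \int_{-\infty}^{+\infty} (z - m)^{2}\, f(z)\, dz \quad (1) \]

where f(z) denotes the pdf of Z.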

Other useful moments are the coefficients of skewness (3rd moment) and of kurtosis or flatness (4th moment). A useful dimensionless ratio expressing the degree of uncertainty of Z is obtained by normalizing the standard deviation σ (the square root of the variance) by the “local” mean m; this ratio, COV = σ/m, is called the Coefficient of Variation. In the case of an RF, these statistical properties are functions of the space coordinates, i.e. \( m = m(\underset{\sim}{x}) \), etc. In Geostatistics, most of the information related to an unsampled value \( z(\underset{\sim}{x}) \) comes from sample values at neighboring locations \( \underset{\sim}{x}^{\prime} \). Thus, it is important to model the degree of correlation or dependence between any number of RVs \( Z(\underset{\sim}{x}_{a}) \), a = 1, …, n. The concept of an RF allows such modeling and the updating of prior cdfs to posterior ccdfs.
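The moments listed above can be estimated from a set of test results as sketched below. This is a minimal illustration using the usual sample estimators (unbiased variance for σ, population-form central moments for skewness and kurtosis); the input values are hypothetical N SPT counts. The COV is only meaningful for quantities with a positive mean.

```python
import numpy as np


def describe(z):
    """Sample mean, standard deviation, COV, skewness and kurtosis of a data set."""
    z = np.asarray(z, dtype=float)
    m = z.mean()
    s = z.std(ddof=1)                  # square root of the unbiased sample variance
    d = z - m
    m2 = np.mean(d ** 2)               # central moments in population form
    skew = np.mean(d ** 3) / m2 ** 1.5
    kurt = np.mean(d ** 4) / m2 ** 2   # equals 3 for a Gaussian distribution
    return {"mean": m, "std": s, "cov": s / m, "skewness": skew, "kurtosis": kurt}


stats = describe([10.0, 12.0, 14.0, 16.0, 18.0])  # hypothetical N_SPT values
print(stats)
```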

An RF is a set of RVs defined inside some region V of interest, i.e. \( \{Z(\underset{\sim}{x}), \underset{\sim}{x} \in V\} \), and may also be denoted as \( z(\underset{\sim}{x}) \). Just as an RV is characterized by a univariate cdf, an RF is characterized by the set of all its K-variate cdfs for any number K and any choice of the K locations \( \underset{\sim}{x}_{k}, k = 1, \ldots, K \):

\[ F(\underset{\sim}{x}_{1}, \ldots, \underset{\sim}{x}_{K}; z_{1}, \ldots, z_{K}) = \operatorname{Prob}\{Z(\underset{\sim}{x}_{1}) \le z_{1}, \ldots, Z(\underset{\sim}{x}_{K}) \le z_{K}\} \quad (2) \]

Of particular interest in Geostatistics is the bivariate (K = 2) cdf of any two RVs \( Z(\underset{\sim}{x}_{1}) \) and \( Z(\underset{\sim}{x}_{2}) \), that is

\[ F(\underset{\sim}{x}_{1}, \underset{\sim}{x}_{2}; z_{1}, z_{2}) = \operatorname{Prob}\{Z(\underset{\sim}{x}_{1}) \le z_{1},\ Z(\underset{\sim}{x}_{2}) \le z_{2}\} \quad (3) \]

The covariance of two correlated values (two-point covariance) in the same “statistically homogeneous” random field (i.e. a field whose joint probability density functions remain the same when the set of locations \( \underset{\sim}{x}_{1}, \underset{\sim}{x}_{2}, \ldots \) is translated, but not rotated, in the parameter space) is defined as the expectation of the product of the deviations from the mean:

\[ R(h) = E\big\{[Z(\underset{\sim}{x}_{1}) - m]\,[Z(\underset{\sim}{x}_{2}) - m]\big\}, \qquad h = |\underset{\sim}{x}_{2} - \underset{\sim}{x}_{1}| \quad (4) \]

where h is the distance between the sampling locations \( \underset{\sim}{x}_{1} \) and \( \underset{\sim}{x}_{2} \), so that the covariance depends only on their separation and not on the locations themselves. A field with this property is called stationary or homogeneous. Dividing R by the variance σ² gives the dimensionless coefficient of correlation ρ between \( Z(\underset{\sim}{x}_{1}) \) and \( Z(\underset{\sim}{x}_{2}) \):

\[ \rho(h) = \frac{R(h)}{\sigma^{2}} \quad (5) \]

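In most cases, the mean is not known beforehand but needs to be inferred from the data; to avoid this, it may be more convenient to work with the semivariogram, which is defined as

\[ \gamma(h) = \tfrac{1}{2}\, E\big\{[Z(\underset{\sim}{x}_{2}) - Z(\underset{\sim}{x}_{1})]^{2}\big\} \quad (6) \]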

Using the semivariogram instead of the covariance function to analyze the spatial correlation structure of a geotechnical parameter means that a constant population mean m is assumed. This is the so-called “intrinsic hypothesis” of Geostatistics [16].
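Two practical points made in this subsection can be checked on synthetic data: the semivariogram is computed from data increments without first estimating the mean, whereas the covariance requires it, and for a stationary field the two are linked by the relationship stated next. The sketch below uses a first-order autoregressive (AR(1)) series as a swapped-in example of a stationary field with unit variance and correlation ρ(k) = φ^k; it is not part of the KRIGSTAT workflow.

```python
import numpy as np


def ar1_series(n, phi, rng):
    """Stationary 1D series with unit variance and correlation rho(k) = phi**k."""
    eps = rng.standard_normal(n)
    z = np.empty(n)
    z[0] = eps[0]                        # start from the stationary distribution
    scale = np.sqrt(1.0 - phi ** 2)      # keeps the variance equal to 1
    for t in range(1, n):
        z[t] = phi * z[t - 1] + scale * eps[t]
    return z


def gamma_and_covariance(z, lag):
    """Experimental semivariogram (no mean needed) and covariance (mean needed)."""
    head, tail = z[lag:], z[:-lag]
    gamma = 0.5 * np.mean((head - tail) ** 2)
    m = z.mean()
    cov = np.mean((head - m) * (tail - m))
    return gamma, cov


rng = np.random.default_rng(0)
phi = 0.8
z = ar1_series(200_000, phi, rng)
sigma2 = z.var()
for lag in (1, 3, 10):
    g, c = gamma_and_covariance(z, lag)
    # Columns: lag, gamma, sigma^2 - R(h), theoretical 1 - rho(h)
    print(lag, round(g, 3), round(sigma2 - c, 3), round(1.0 - phi ** lag, 3))
```

The second and third printed columns agree closely, and both approach the theoretical value 1 − ρ(h) for this unit-variance field.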

It can also easily be shown that, for a stationary RF, the following relationship holds:

\[ \gamma(h) = \sigma^{2} - R(h) = \sigma^{2}\,[1 - \rho(h)] \quad (7) \]

3.2 Kriging estimation

The basic statistical and geostatistical procedures have been implemented in the KRIGSTAT algorithm for exploratory data analysis and spatial estimation of geotechnical model parameters over the defined study area V. KRIGSTAT may be used as a stand-alone application or as an add-on to a commercial numerical code or risk analysis software. The estimation procedure starts with exploratory data analysis, normality tests, searching for trends, declustering to correct for spatial bias, etc. Kriging assumes that the estimate \( z^{*}(x_{k}) \) of RV Z at location \( x_{k} \) can be interpolated as a weighted linear combination of the known values:

\[ z^{*}(x_{k}) = \sum_{i=1}^{M} \lambda_{i}\, z(x_{i}) \quad (8) \]

where \( z(x_{i}) \) is the known value of variable Z at point \( x_{i} \), \( \lambda_{i} \) is the interpolation weight, which depends on the interpolation method, and M is the total number of points used in the interpolation. Apart from being simple and fast, this technique gives the Best Linear Unbiased Estimate (BLUE) at any location between sampling points. Kriging relies on a semivariogram model, fitted to the experimental semivariogram, for the interpolation between spatial points. For isotropic conditions and statistical homogeneity, the experimental semivariogram is

\[ \gamma^{*}(h) = \frac{1}{2n} \sum_{i=1}^{n} \big[z(x_{i} + h) - z(x_{i})\big]^{2} \quad (9) \]

where n is the total number of pairs of observations separated by the distance (lag) h. In practice, Eq. (9) is evaluated for evenly spaced values of h using a lag tolerance Δh, and n is the number of pairs whose separation falls between h − Δh and h + Δh. A typical semivariogram of a stationary random field levels off at a constant value (the sill) at a distance called the “range”, beyond which there is no correlation between spatial points. In theory, γ(h) = 0 when h = 0; however, the semivariogram often exhibits a nugget effect at very small lag distances, which usually reflects measurement errors and size effects. Experimental semivariograms can be fitted with spherical, exponential, Gaussian, Bessel, cosine or hole-effect, mixed semivariogram models, etc. from the KRIGSTAT library. The fitted model is then validated using residuals or another suitable method (e.g. jack-knife, leave-one-out or cross-validation). Based on an isotropic semivariogram model, OK determines the coefficients \( \lambda_{i} \) by solving the following system of M + 1 equations:

\[ \sum_{j=1}^{M} \lambda_{j}\, \gamma(x_{i} - x_{j}) + \beta = \gamma(x_{i} - x_{k}), \quad i = 1, \ldots, M; \qquad \sum_{j=1}^{M} \lambda_{j} = 1 \quad (10) \]

where β is a Lagrange multiplier. The isotropic semivariogram model used in Eq. (10) can be generalized to anisotropic cases, in which the data depend not only on distance but also on direction. In practice, anisotropic semivariograms are determined by partitioning the data into directional conical-cylindrical bins of predefined geometry. Anisotropy is detected when the range or sill changes significantly with direction, and it may be handled efficiently with KRIGSTAT. The present analysis assesses the error of spatial interpolation using the minimum error variance (e.g. [1]). The estimated interpolation error at location \( x_{k} \) is the variance of the estimation error (the Kriging variance):

\[ \sigma_{K}^{2}(x_{k}) = \sum_{i=1}^{M} \lambda_{i}\, \gamma(x_{i} - x_{k}) + \beta \quad (11) \]

As may be observed from Eq. (11), the Kriging variance does not depend on the sample values themselves, but only on their locations. Therefore, the Kriging variance measures the uncertainty at the estimation location due to the spatial configuration of the data available for its estimation, rather than the dispersion of the values. The Kriging variance could be used to conduct a risk analysis of the project, as well as to propose optimal locations for additional exploratory drill holes inside the geological domain of interest.
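The Kriging workflow of this subsection (experimental semivariogram with a lag tolerance, a fitted model, then the OK system with its Lagrange multiplier and the Kriging variance) can be sketched in a few lines of NumPy. This is a minimal illustration, not the KRIGSTAT implementation. It assumes an isotropic exponential model with the common "practical range" convention (factor 3 in the exponent) and the standard semivariogram form of the OK equations; the coordinates, values and model parameters are hypothetical.

```python
import numpy as np


def experimental_semivariogram(coords, values, lags, tol):
    """Isotropic experimental semivariogram, Eq. (9): half the mean squared
    difference over all pairs whose separation lies within h +/- tol."""
    coords = np.asarray(coords, dtype=float)
    values = np.asarray(values, dtype=float)
    i, j = np.triu_indices(len(values), k=1)          # every pair counted once
    dist = np.linalg.norm(coords[i] - coords[j], axis=1)
    sq = (values[i] - values[j]) ** 2
    out = []
    for h in lags:
        mask = np.abs(dist - h) <= tol
        out.append(0.5 * sq[mask].mean() if mask.any() else np.nan)
    return np.array(out)


def exponential_model(h, sill, a_range, nugget=0.0):
    """Exponential semivariogram with practical range a_range; gamma(0) = 0."""
    h = np.asarray(h, dtype=float)
    g = nugget + (sill - nugget) * (1.0 - np.exp(-3.0 * h / a_range))
    return np.where(h > 0.0, g, 0.0)


def ordinary_kriging(coords, values, target, model):
    """Solve the OK system of Eq. (10): one equation per data point plus the
    unbiasedness constraint. Returns estimate, Kriging variance, weights."""
    coords = np.asarray(coords, dtype=float)
    values = np.asarray(values, dtype=float)
    m = len(values)
    d = np.linalg.norm(coords[:, None, :] - coords[None, :, :], axis=2)
    a = np.ones((m + 1, m + 1))
    a[:m, :m] = model(d)              # gamma(x_i - x_j); last column multiplies beta
    a[m, m] = 0.0                     # last row enforces sum(lambda) = 1
    b = np.ones(m + 1)
    b[:m] = model(np.linalg.norm(coords - np.asarray(target, dtype=float), axis=1))
    sol = np.linalg.solve(a, b)
    lam, beta = sol[:m], sol[m]
    estimate = float(lam @ values)
    variance = float(lam @ b[:m] + beta)   # Kriging variance, as in Eq. (11)
    return estimate, variance, lam


def model(h):
    # Hypothetical fitted parameters, for illustration only.
    return exponential_model(h, sill=20.0, a_range=30.0)


pts = [[0.0, 0.0], [10.0, 0.0], [0.0, 10.0], [10.0, 10.0]]
vals = [12.0, 18.0, 15.0, 25.0]
est, var, lam = ordinary_kriging(pts, vals, [5.0, 5.0], model)
print(est, var, lam)  # equal weights by symmetry, so the estimate is the data mean
```

Note that the values in `vals` enter only the estimate, never the matrix or the variance, which is the property of the Kriging variance discussed above.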

Spatial interpolation of all the Standard Penetration Test (SPT) blow counts N SPT (M = 140) conducted in the exploratory boreholes of the Mas-Blau tunnel section on the 3D grid of the DSGM was carried out with the OK technique. The experimental semivariogram, which exhibits a geometric anisotropy (i.e. the range along the vertical direction is 0.35 of the range along any horizontal direction), and the fitted exponential model are shown in Fig. 4a. The estimated N SPT values are presented separately for each formation in Fig. 4b. The average N SPT values on the tunnel face obtained from this model were used to construct the diagram presented in Fig. 3d.
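A geometric anisotropy of this kind is commonly handled by rescaling the lag vector so that a single isotropic model applies in every direction. The sketch below assumes horizontal isotropy (as stated above for "any horizontal direction") and divides the vertical lag component by the reported range ratio of 0.35. The sill and horizontal range are hypothetical, not the values fitted in Fig. 4a.

```python
import numpy as np

VERTICAL_TO_HORIZONTAL_RANGE = 0.35  # range ratio reported for the N_SPT semivariogram


def reduced_distance(dx, dy, dz, ratio=VERTICAL_TO_HORIZONTAL_RANGE):
    """Isotropic-equivalent lag: the vertical component is divided by the
    range ratio, so one horizontal range applies in every direction."""
    dx, dy, dz = (np.asarray(v, dtype=float) for v in (dx, dy, dz))
    return np.sqrt(dx ** 2 + dy ** 2 + (dz / ratio) ** 2)


def exponential_semivariogram(h, sill, horizontal_range, nugget=0.0):
    """Exponential model; with zero nugget it reaches about 95% of the sill
    at h = horizontal_range (practical range convention)."""
    h = np.asarray(h, dtype=float)
    g = nugget + (sill - nugget) * (1.0 - np.exp(-3.0 * h / horizontal_range))
    return np.where(h > 0.0, g, 0.0)


# Hypothetical model parameters, for illustration only.
sill, a_h = 60.0, 100.0
g_horizontal = exponential_semivariogram(reduced_distance(100.0, 0.0, 0.0), sill, a_h)
g_vertical = exponential_semivariogram(reduced_distance(0.0, 0.0, 35.0), sill, a_h)
# A 35 m vertical lag is as "far" as a 100 m horizontal lag: both values agree.
print(float(g_horizontal), float(g_vertical))
```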

3.3 Simulated annealing

KRIGSTAT also includes an adaptive method that starts from a standard simulation matching, for example, only the histogram and the covariance (or semivariogram), and adapts it iteratively until it matches the imposed constraints. This method is simulated annealing (SA). It is an optimization method rather than a simulation method, and its use for constructing geostatistical simulations rests on arguments that are more heuristic than theoretical. The name and inspiration come from annealing in metallurgy, a technique involving heating and controlled cooling of a material to increase the size of its crystals and reduce their defects. The heat causes the atoms to become unstuck from their initial positions (a local minimum of the internal energy) and wander randomly through states of higher energy; the slow cooling gives them more chances of finding configurations with lower internal energy than the initial one.

Stochastic simulation is the process of drawing alternative, equally probable models of the spatial distribution of \( z(\underset{\sim}{x}) \), with each such realization denoted by the superscript r, i.e. \( \{z^{(r)}(\underset{\sim}{x}), \underset{\sim}{x} \in V\} \). The simulation is said to be “conditional” if the resulting realizations honor the hard data values at their locations, that is \( z^{(r)}(\underset{\sim}{x}_{a}) = z(\underset{\sim}{x}_{a})\ \forall r \). The RV \( Z(\underset{\sim}{x}) \) may be “categorical”, e.g. indicating the presence or absence of a particular geological formation, or it may be a continuous property such as the porosity, permeability or cohesion of a soil or rock formation.

Simulation differs from Kriging or any other deterministic estimation method (e.g. inverse squared distance, nearest neighbor, etc.) in the following two major aspects:

1. Most interpolation algorithms give the best local estimate \( z^{*}(\underset{\sim}{x}) \) of each unsampled value of the RV \( Z(\underset{\sim}{x}) \) at a grid of nodes, without regard to the resulting spatial statistics of the estimates \( z^{*}(\underset{\sim}{x}), \underset{\sim}{x} \in V \). In contrast, in simulations, the global texture and statistics of the simulated values \( z^{(r)}(\underset{\sim}{x}), \underset{\sim}{x} \in V \) take precedence over local accuracy.

2. As already mentioned, for a given set of sampled data, Kriging provides a single numerical model \( z^{*}(\underset{\sim}{x}), \underset{\sim}{x} \in V \) that is the “best” in some local accuracy sense. Simulation, on the other hand, provides many alternative numerical models \( \{z^{(r)}(\underset{\sim}{x}), \underset{\sim}{x} \in V\}, r = 1, \ldots, R \), each of which is a “good” representation of reality in some “global sense”. The differences among these R alternative models or realizations provide a measure of joint spatial uncertainty.

In the SA technique, random numbers are first generated via Monte Carlo simulation from a specified statistical distribution of the field parameter under investigation. For “training simulations,” conditioning data are relocated to the nearest grid nodes. The initial image is then modified by swapping the values in pairs of grid nodes. A swap is accepted if the objective function is lowered. In this framework, a global optimization is achieved, in contrast to Kriging, in which a local optimization scheme is followed. The objective function (OF) is defined from the squared differences between the experimental semivariogram \( \gamma^{*}(h) \) of the current image and the model semivariogram \( \gamma(h) \), summed over the lags h:

\[ OF = \sum_{h} \frac{\big[\gamma^{*}(h) - \gamma(h)\big]^{2}}{\gamma(h)^{2}} \quad (12) \]

The division by the square of the model semivariogram value at each lag h standardizes the units and gives more weight to closely spaced (low semivariogram) values.

Not all swaps that raise the objective function are rejected. The success of the method depends on a slow “cooling” of the image, controlled by a temperature parameter T that decreases as the iterations proceed. The higher the temperature (whose initial value T 0 is a control parameter), the greater the probability that an unfavorable swap will be accepted. The simulation ends when the image is frozen, that is, when further swaps do not reduce the value of the objective function. “Hard data” values are relocated to grid nodes and are never swapped, which allows their exact reproduction.

The following procedure is employed to create an image that matches the cdf F(z), the model semivariogram γ(h) and other possible conditioning data:

- For each grid node of the DSGM that does not coincide with a conditioning datum, an outcome is randomly drawn from the cdf via Monte Carlo (MC) simulation. This generates an initial realization that matches the conditioning data and the cdf, but most likely does not match the model semivariogram γ(h).

- The next aim is for the experimental semivariogram γ*(h) of the simulated realization to match the predefined model γ(h). This is achieved by minimizing the objective function (12), as explained previously.

- The initial image is modified by swapping pairs of nodal values \( z_{i} \) and \( z_{j} \) chosen at random, neither of which is a conditioning datum. After each swap, the objective function (12) is updated.

- As the metallurgical analogy suggests, the image must be cooled slowly (annealed) rather than rapidly (quenched). In other words, accepting swaps that increase the objective function makes it possible to escape from a local minimum. Whether a given perturbation is accepted is decided according to the Boltzmann distribution:

\[ P\{\text{accept}\} = \begin{cases} 1, & OF(i) \le OF(i-1) \\ \exp\!\left[\dfrac{OF(i-1) - OF(i)}{T}\right], & \text{otherwise} \end{cases} \quad (13) \]

All favorable perturbations (OF(i) ≤ OF(i − 1)) are accepted, whereas unfavorable perturbations are accepted with the exponential probability given by Eq. (13). The term “simulation cycle” refers to the swapping process carried out with the same simulated annealing parameters (i.e. at a fixed temperature). The selection rule proposed here compares the probability of acceptance P of a successful swapping cycle with P acc = 0.95. The specification of how T is decreased is called the “annealing schedule”. The following parameters describe this schedule: T 0 = the initial temperature; λ = the reduction factor (0 < λ < 1); N acc = the counter of accepted combinations (when it exceeds its upper limit, the selection rule must be tightened by proceeding to the next cycle, i.e. by multiplying the current temperature T by the reduction factor λ); N accmax = the upper limit of accepted successful swapping combinations, with a proposed value of 10 times the number of estimation points; and N max = the maximum number of swapping combinations at any given temperature (of the order of 100 times the number of nodes). The simulation is terminated when the current cycle N c reaches the total number of cycles N cmax, with a proposed value N cmax = 3.

The flowchart of this subroutine is shown in Fig. 5.
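The swap-based SA procedure described above can be sketched on a regular 1D grid as follows: an initial image that honors the histogram and the hard data, the normalized objective function of Eq. (12), the Boltzmann acceptance rule of Eq. (13), and a schedule that multiplies T by λ after N accmax acceptances or N max attempts, for a fixed number of cycles. This is a minimal illustration, not the KRIGSTAT subroutine. The P acc = 0.95 selection rule and the "frozen" test are omitted, and all parameter values, the Gamma-distributed initial image and the conditioning values are hypothetical.

```python
import numpy as np


def semivariogram_1d(z, max_lag):
    """Experimental semivariogram of a regularly spaced 1D image (lags 1..max_lag)."""
    return np.array([0.5 * np.mean((z[h:] - z[:-h]) ** 2) for h in range(1, max_lag + 1)])


def objective(z, gamma_model):
    """Squared mismatch normalised by the squared model value, summed over lags."""
    g = semivariogram_1d(z, len(gamma_model))
    return float(np.sum((g - gamma_model) ** 2 / gamma_model ** 2))


def simulated_annealing(image, hard, gamma_model, t0=1.0, lam=0.1,
                        n_accmax=500, n_max=2000, n_cmax=5, seed=0):
    """Swap-based SA. `image` already honours the histogram and the hard data;
    nodes flagged in `hard` are never swapped, so they are reproduced exactly."""
    rng = np.random.default_rng(seed)
    z = np.array(image, dtype=float)                 # work on a copy
    free = np.flatnonzero(~np.asarray(hard, dtype=bool))
    of = objective(z, gamma_model)
    t = t0
    for _ in range(n_cmax):                          # one cycle per temperature
        n_acc = 0
        for _ in range(n_max):                       # attempted swaps at temperature t
            i, j = rng.choice(free, size=2, replace=False)
            z[i], z[j] = z[j], z[i]
            new_of = objective(z, gamma_model)
            # Boltzmann rule: accept improvements; accept deteriorations
            # with probability exp(-(new_of - of) / t).
            if new_of <= of or rng.random() < np.exp((of - new_of) / t):
                of = new_of
                n_acc += 1
                if n_acc >= n_accmax:
                    break                            # enough acceptances: cool down
            else:
                z[i], z[j] = z[j], z[i]              # undo the rejected swap
        t *= lam                                     # annealing schedule T <- lambda * T
    return z, of


# Hypothetical usage: Gamma-distributed initial image and three fixed hard data.
rng0 = np.random.default_rng(42)
n_nodes = 100
z0 = rng0.gamma(shape=4.0, scale=5.0, size=n_nodes)
hard = np.zeros(n_nodes, dtype=bool)
hard[[0, 50, 99]] = True
z0[[0, 50, 99]] = [18.0, 25.0, 12.0]
lags = np.arange(1, 11)
# Swaps preserve the histogram, so the sill is set to the image variance.
gamma_model = z0.var() * (1.0 - np.exp(-3.0 * lags / 20.0))
z_sim, of_final = simulated_annealing(z0, hard, gamma_model)
print(f"OF before: {objective(z0, gamma_model):.3f}, after: {of_final:.3f}")
```

Because the algorithm only permutes values, the histogram of the initial MC image is preserved exactly, while the swaps progressively impose the target spatial correlation.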

MC simulation is used to model the spatial distribution of N SPT in a vertical longitudinal section along the chainage of the tunnel (Fig. 6a), starting from the statistical distribution shown in Fig. 6b. Conditional simulation is then used to rearrange the data so as to satisfy the input model semivariogram. The mean SA spatial estimate obtained from R = 15 alternative realizations and a single Kriging estimate between two boreholes in the QL2 formation are displayed in Fig. 6c (data locations inside the boreholes are shown as dots), whereas the standard deviation of the simulations and the standard deviation σ SK of the Kriging estimation are compared in Fig. 6d. The smoothing effect of the Kriging estimation can be observed in these figures. The results of the two methods inside all formations are compared in Fig. 6e, f, respectively; the two methods give similar results but, as expected, Kriging gives smoother predictions.

Fig. 6 a Vertical longitudinal section following the chainage of the tunnel, b fitting of the histogram of sampled N SPT values with a Gamma pdf, c comparison of the mean of 15 SA realizations (left) with the Kriging estimate (right) between two exploratory boreholes, d comparison of the standard deviations of the SA (left) and Kriging (right) techniques, e SA spatial model in all formations, and f Kriging model in all formations

4 TBM performance model

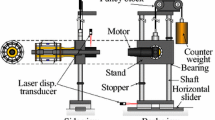

The operation of an Earth Pressure Balance (EPB) machine depends on many parameters related to the various operations performed by the machine, the in situ stresses, the groundwater pore pressure and the strength properties of the soil itself. The most important, however, are the parameters linked to the cutterhead, because this is the part of the machine in direct contact with the soil through which the tunnel passes; more specifically, the disc cutters and drag picks (or knives) transmit the forces to the soil.

Penetration rate (PR) is defined as the distance excavated divided by the operating time during a continuous excavation phase. Advance rate (AR) is the actual distance mined and supported divided by the total time, and therefore includes downtime for TBM maintenance, machine breakdown and tunnel failure. When using a TBM, it is necessary to choose a PR that matches the rate of debris removal and support installation. In conventional operations, the standard PR is decided beforehand and the other operations are timed to match this rate.

In soft soil, disc cutters play a minor role and most tools act as knives. Rippers, knives and buckets accomplish the main excavation task, so these parts must be designed for efficient operation. The total jack thrust force F should equal the sum of the positive penetration resistance F_C of the cutterhead and the frictional force F_F between the cutterhead/concrete lining and the tunnel wall. During soil cutting, a force F_c is imparted on each knife. Let F_n and F_s denote the components of this force that are, respectively, normal and parallel to the tunnel face. The mean normal force F_n acting on the knives may be determined, to a first approximation, from the cutterhead contact normal force and the number N of knives attached to the cutterhead as follows

For sharp knives, the torque T is the sum of the products of the radial position of each knife and the drag cutting force F_s. To this sum are added the torque T_CF due to the frictional contact forces on the cutterhead, the torque T_LF due to the lifting forces of the buckets, and the torque T_CM of the mixers at the rear of the cutting wheel, which move the material to prevent segregation or sedimentation. For knives with wear flats, a further term should be added to account for the additional friction. The mean drag (or tangential) force F_s may be estimated through the approximate formula

in which D denotes the cutterhead diameter [L]. The penetration depth per revolution p is given by the formula

$$p = \frac{PR}{\omega}$$

where PR denotes the (net) cutterhead penetration rate and ω the cutterhead rotational speed [1/T].
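The rate definitions, the thrust balance F = F_C + F_F, the torque decomposition and the relation p = PR/ω can be evaluated with a few lines of arithmetic. This is a minimal sketch under stated assumptions. All knives are taken to carry equal forces, so that F_n ≈ F_C/N and T − T_CF − T_LF − T_CM = F_s Σ r_i, and the wear-flat term is neglected. These simplified expressions are illustrative and are not the approximate formulae of the paper. The knife layout, forces, torques and times are hypothetical.

```python
def penetration_rate(distance_m, operating_time_h):
    """PR: distance excavated / operating time of continuous excavation (m/h)."""
    return distance_m / operating_time_h

def advance_rate(distance_m, total_time_h):
    """AR: distance mined and supported / total time including downtimes (m/h)."""
    return distance_m / total_time_h

def penetration_per_rev_mm(pr_m_per_h, rpm):
    """p = PR / omega, with PR in m/h and omega in rev/min; returns mm/rev."""
    return pr_m_per_h * 1000.0 / (rpm * 60.0)

def mean_knife_normal_force(F, F_F, n_knives):
    """Thrust balance F = F_C + F_F gives F_C; equal sharing among the
    N knives (F_n ~ F_C / N) is an illustrative assumption."""
    return (F - F_F) / n_knives

def mean_drag_force(T, T_CF, T_LF, T_CM, radii):
    """Mean F_s from T = F_s * sum(r_i) + T_CF + T_LF + T_CM, assuming sharp
    knives that all carry the same drag force (wear-flat term neglected)."""
    return (T - T_CF - T_LF - T_CM) / sum(radii)

# Hypothetical ring: 1.5 m excavated in 0.5 h of cutting, 1.25 h in total
pr = penetration_rate(1.5, 0.5)                  # 3.0 m/h
ar = advance_rate(1.5, 1.25)                     # 1.2 m/h
p = penetration_per_rev_mm(pr, 1.0)              # 50.0 mm/rev at 1 rpm

# Hypothetical layout: 36 knives at radii 0.125, 0.25, ..., 4.5 m
radii = [0.125 * k for k in range(1, 37)]
F_n = mean_knife_normal_force(9000.0, 5400.0, len(radii))      # 100.0 kN
F_s = mean_drag_force(2132.5, 800.0, 200.0, 300.0, radii)      # 10.0 kN
```

Keeping each relation in its own function makes it easy to replace the equal-sharing assumptions with a more detailed knife layout when one is available.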

A parameter of major importance is the specific energy (SE) of cutting, defined as the energy consumed per unit volume of material removed, where V denotes the volume removed. There are two definitions of SE. The first, proposed in [17], is denoted herein as SE1

in which ℓ_T is the total length traveled by the knife and S is the spacing between neighboring knife cuts. Only the tangential force of the knives enters the definition of SE, because the tangential cutting direction consumes almost all of the cutting energy while the energy expended in the normal direction is comparatively negligible. This follows directly from the fact that far more cutter travel takes place tangential to the face than normal to it, even though the normal force is much higher than the tangential force.
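Take the volume removed by a knife over its travel to be V = p S ℓ_T (penetration depth per revolution p × spacing × length). The energy F_s ℓ_T per unit volume then reduces to SE1 = F_s/(p S), because ℓ_T cancels. This closed form is our reading of the definition above, and the numbers in the sketch are hypothetical. The sketch converts everything to SI units.

```python
def specific_energy_se1(F_s_kN, p_mm, S_mm):
    """SE1 = F_s * l_T / V with V = p * S * l_T, i.e. SE1 = F_s / (p * S).
    Inputs in kN, mm, mm; returns MJ/m^3 (numerically equal to MPa)."""
    F_s = F_s_kN * 1e3          # N
    p = p_mm * 1e-3             # m
    S = S_mm * 1e-3             # m
    return F_s / (p * S) / 1e6  # J/m^3 -> MJ/m^3

# Hypothetical knife: 10 kN drag force, 50 mm/rev, 100 mm spacing
se1 = specific_energy_se1(10.0, 50.0, 100.0)   # about 2.0 MJ/m^3
```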

Another SE, denoted SE2, may also be obtained as the ratio of the energy consumed by the TBM to the excavated volume [20],

where η denotes the efficiency of energy transfer from the motor to the cutterhead, P is the registered power, t denotes time and V is the excavated volume.
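With the power P logged at discrete times, SE2 can be computed by integrating P over the excavation of a ring. The sketch below assumes SE2 = η ∫P dt / V, which is our reading of the definition above, and approximates the integral with the trapezoidal rule. The efficiency, power readings and ring volume are hypothetical.

```python
def specific_energy_se2(times_s, power_kW, volume_m3, eta):
    """SE2 = eta * integral(P dt) / V, with the integral by the trapezoidal rule.
    Times in s, power in kW (kW*s = kJ), volume in m^3; returns MJ/m^3."""
    energy_kJ = 0.0
    for k in range(1, len(times_s)):
        dt = times_s[k] - times_s[k - 1]
        energy_kJ += 0.5 * (power_kW[k] + power_kW[k - 1]) * dt
    return eta * energy_kJ / 1e3 / volume_m3

# Hypothetical ring: three power readings over one hour, 150 m^3 excavated
se2 = specific_energy_se2([0.0, 1800.0, 3600.0], [900.0, 1100.0, 1000.0],
                          150.0, eta=0.8)     # about 19.68 MJ/m^3
```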

For the Mas-Blau case study, plenty of TBM logs were collected with the EPB machine during tunnel excavation. These data were recorded at a high frequency within each concrete ring, which was not appropriate for the purposes of this analysis, and were stored in separate files. Therefore, in a first stage of the analysis, all files were merged into a single Excel spreadsheet and the data were reduced so that a single row of logged data corresponds to each concrete ring.
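The merge-and-reduce step described above was carried out in an Excel spreadsheet. An equivalent step can be sketched in Python on CSV exports of the log files. The column names are hypothetical. Averaging the high-frequency records within each ring is an assumed reduction rule, and the median or the end-of-ring value could be substituted just as easily.

```python
import csv
import io
from collections import defaultdict
from statistics import fmean

def reduce_to_rings(csv_texts, ring_col="ring"):
    """Merge several logged-data files and return one averaged row per ring.
    Column names are hypothetical; non-ring columns are assumed numeric."""
    groups = defaultdict(lambda: defaultdict(list))
    for text in csv_texts:
        for row in csv.DictReader(io.StringIO(text)):
            ring = int(row[ring_col])
            for key, val in row.items():
                if key != ring_col and val != "":
                    groups[ring][key].append(float(val))
    return {ring: {k: fmean(v) for k, v in cols.items()}
            for ring, cols in sorted(groups.items())}

# Two hypothetical log files; ring 102 is split across both
file_a = "ring,thrust_kN,torque_kNm\n101,18000,2900\n101,18400,3100\n102,19000,3000\n"
file_b = "ring,thrust_kN,torque_kNm\n102,19400,3200\n103,20000,3300\n"
rings = reduce_to_rings([file_a, file_b])
```

Grouping by ring number before averaging means a ring whose records are split across files, like ring 102 here, still yields a single row.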

The equivalence of the two definitions of SE is clearly shown in the diagram of Fig. 7a, which validates formulae (14) to (17) for the calculation of SE1. The benefit of using SE1 is that one may estimate the mean forces F_n, F_s exerted on the knives. An example is given in Fig. 7b, where the tangential force acting on the knives is plotted against the penetration depth per revolution. The logged data follow a more or less linear relationship, as expected from cutting theory for drag tools.
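The roughly linear trend of Fig. 7b can be quantified with an ordinary least-squares fit of F_s against p on the ring-averaged data, with R² as a measure of how linear the relationship is. The sketch is generic. The data below are synthetic and do not reproduce Fig. 7b.

```python
def linear_fit(x, y):
    """Ordinary least squares y = a + b*x; returns intercept a, slope b and R^2."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx
    a = my - b * mx
    r2 = sxy * sxy / (sxx * syy)
    return a, b, r2

# Synthetic ring data: penetration depth per revolution (mm) and drag force (kN)
p_mm = [10.0, 20.0, 30.0, 40.0, 50.0]
F_s_kN = [9.0, 17.0, 25.0, 33.0, 41.0]
a, b, r2 = linear_fit(p_mm, F_s_kN)
```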

The TBM performance model also includes analytical models for soil cutting with knives and picks and for rock cutting with disc cutters. These models are based on the limit theorems (upper and lower bound) of the theory of plasticity and have been implemented in the CUTTING_CALC code for forward and backward analysis of TBM logged data.

5 Concluding remarks

Modeling and visualization of the geological and geotechnical parameters, as well as of the performance of excavation machines (TBMs and RHs), are the most important tasks in tunneling design. The tunneling design process should take into account the risk associated with the soil quality and the design of the excavation machine. The geological and geotechnical parameters are evaluated from observations, boreholes, tests, etc. at certain locations, and interpolation is necessary to model these parameters at the grid elements of the DSGM. We have shown that the proposed spatial analysis approach provides an interesting alternative to more traditional approaches, since:

1. It provides a consistent, repeatable and transparent approach to constructing a spatial model of any geotechnical parameter needed for a subsequent numerical simulation or TBM performance model.

2. It takes into account the spatial continuity of variables.

3. Apart from the estimate of a variable, it provides a measure of uncertainty that can be used for risk assessment or for improving the exploration program (new boreholes in areas of high uncertainty).

4. In general, it leads to a better model, since local interpolation over restricted neighborhoods represents the ground better than zoning the model into homogeneous geotechnical volumes or sections and averaging.

5. It provides input data directly to any deterministic numerical tunneling simulation code that can assign different properties to each element.

Furthermore, the TBM model outlined above may be used in conjunction with the spatial analysis model to explore possible relations between excavation performance (expressed here through SE) and geotechnical or geological parameters. If such relations are found, they may be used to update the geotechnical model as the TBM advances [5].

References

1. Chiles JP, Delfiner P (1999) Geostatistics: modeling spatial uncertainty. Wiley, New York

2. Deutsch CV, Journel AG (1997) Geostatistical software library and user's guide. Oxford University Press, New York

3. Exadaktylos G, Stavropoulou M (2008) A specific upscaling theory of rock mass parameters exhibiting spatial variability: analytical relations and computational scheme. Int J Rock Mech Min Sci 45:1102–1125

4. Exadaktylos G, Liolios P, Barakos G (2007) Some new developments on the representation and standardization of rock mechanics data: from the laboratory to the full scale project. In: Rock mechanics data: representation and standardisation, 11th ISRM congress, 12 July 2007, Lisbon Congress Centre, Lisbon, Portugal

5. Exadaktylos G, Stavropoulou M, Xiroudakis G, de Broissia M, Schwarz H (2008) A spatial estimation model for continuous rock mass characterization from the specific energy of a TBM. Rock Mech Rock Eng 41:797–834

6. Fenton GA, Griffiths DV (1995) Flow through earth dams with spatially random permeability. In: Proceedings of the 10th ASCE engineering mechanics conference, Boulder, CO, USA, pp 341–344

7. Fenton GA, Griffiths DV (2002) Probabilistic foundation settlement on spatially random soil. ASCE J Geotech Geoenv Eng 128(5):381–390

8. Ghanem RG, Spanos PD (1991) Stochastic finite elements: a spectral approach. Springer, New York

9. GiD (2006) GiD: the personal pre- and postprocessor. CIMNE, Barcelona. http://www.gidhome.com

10. Goovaerts P (1997) Geostatistics for natural resources evaluation. Oxford University Press, New York

11. Griffiths DV, Fenton GA (2004) Probabilistic slope stability analysis by finite elements. ASCE J Geotech Geoenv Eng 130(5):507–518

12. Hejazi Y, Dias D, Kastner R (2008) Impact of constitutive models on the numerical analysis of underground constructions. Acta Geotech 3:251–258

13. Hoefle R, Fillibeck J, Vogt N (2008) Time dependent deformations during tunnelling and stability of tunnel faces in fine-grained soils under groundwater. Acta Geotech 3:309–316

14. Isaaks EH, Srivastava RM (1989) Introduction to applied geostatistics. Oxford University Press, Oxford

15. Journel AG, Huijbregts CHJ (1978) Mining geostatistics. Academic Press, London

16. Kitanidis PK (1997) Introduction to geostatistics: applications in hydrogeology. Cambridge University Press, Cambridge

17. Kutter H, Sanio HP (1982) Comparative study of performance of new and worn disc cutters on a full-face tunnelling machine. In: Tunnelling '82, IMM, London, pp 127–133

18. Labra C, Rojek J, Oñate E, Zárate F (2008) Advances in discrete element modelling of underground excavations. Acta Geotech 3:317–322

19. MIDAS GTSII (2006) Geotechnical and tunnel analysis system. MIDASoft Inc. (1989–2006). http://www.midas-diana.com

20. Snowdon RA, Ryley MD, Temporal J (1982) A study of disc cutting in selected British rocks. Int J Rock Mech Min Sci Geomech Abstr 19:107–121

21. Stavropoulou M, Exadaktylos G, Saratsis G (2007) A combined three-dimensional geological-geostatistical-numerical model of underground excavations in rock. Rock Mech Rock Eng 40(3):213–243

22. Vanmarcke E (1983) Random fields: analysis and synthesis. The MIT Press, Cambridge

Acknowledgments

The authors gratefully acknowledge the financial support of the EC 6th Framework Project TUNCONSTRUCT (Technology Innovation in Underground Construction), Contract Number NMP2-CT-2005-011817, http://www.tunconstruct.org. They also thank Mr. Henning Schwarz of GISA for kindly providing geological, exploratory and TBM data from Mas-Blau.


Cite this article

Stavropoulou, M., Xiroudakis, G. & Exadaktylos, G. Spatial estimation of geotechnical parameters for numerical tunneling simulations and TBM performance models. Acta Geotech. 5, 139–150 (2010). https://doi.org/10.1007/s11440-010-0118-z
