Abstract

There are good reasons for higher education institutions to use learning analytics to risk-screen students. Institutions can use learning analytics to better predict which students are at greater risk of dropping out or failing, and use the statistics to treat ‘risky’ students differently. This paper analyses this practice using normative theories of discrimination. The analysis suggests the principal ethical concern with the differing treatment is the failure to recognize students as individuals, which may impact on students as agents. This concern is cross-examined drawing on a philosophical argument that suggests there is little or no distinctive difference between assessing individuals on group risk statistics and using more ‘individualized’ evidence. This paper applies this argument to the use of learning analytics to risk-screen students in higher education. The paper offers reasons to conclude that judgment based on group risk statistics does involve a distinctive failure in terms of assessing persons as individuals. However, instructional design offers ways to mitigate this ethical concern with respect to learning analytics. These include designing features into courses that promote greater use of effort-based factors and dynamic rather than static risk factors, and greater use of sets of statistics specific to individuals.

Introduction

Institutions offering higher education are interested in identifying students at greater risk of failing or not completing their course of study, and intervening before this happens. To facilitate this goal, institutions can use learning analytics: statistical analyses of data gathered on students to better support desired education outcomes for students, as individuals and as groups. The Open University, for example, describes its learning analytics practice as using ‘raw and analysed student data to proactively identify interventions which aim to support students in achieving their study goals. Such interventions may be designed to support individual students and/or the entire cohort’ (Open University Sep 2014, p.1). Computer tracking and analysis of large amounts of data from all students can identify factors statistically correlated with worse performance, especially failing or dropping out. Predictive risk modelling can be used to assign a risk categorization to individual students (see, e.g., OAAI 2012; Jia 2014; Open University Oct 2014; Wagner and Hartman 2013). Institutions can then treat ‘high risk’ individuals differently, with the aim of ensuring they do not end up counting as negative statistics for completion.

There is no single agreed definition of ‘learning analytics’ used by education researchers and institutions. Long and Siemens (2011) proposed restricting the term ‘learning analytics’ to use of data focussed on the learning process, as opposed to data analysis for business intelligence at the institutional level. It is worth noting Sclater’s 2014 report on learning analytics in the UK, which suggests institutions see learning analytics data more broadly as a resource for various stakeholders ‘from individual students and their tutors to educational researchers, to unit heads and to senior management’ (Sclater 2014b, p.4). However, it seems fair to assume that instructional design theorists and practitioners are more interested in the learning process and learning environment than in, for example, use of analytics to target fundraising efforts (see Campbell et al. 2007). Accordingly, this paper uses a learner-focussed definition of learning analytics from Slade and Prinsloo (2013). Learning analytics is ‘…the collection, analysis, use and appropriate dissemination of student-generated, actionable data with the purpose of creating appropriate cognitive, administrative and effective support for learners’ (Slade and Prinsloo 2013, p.4). This will include both demographic data and course engagement data from students.

Implementing learning analytics requires three steps: systematically collecting and analysing large amounts of data to identify risk factors across students; classifying a student as a member of risk-bearing groups or categories (see Jia 2014); and then subjecting ‘risky’ students to different treatment (interventions). Outside the education context, this process is not uncommon. Applicants for a bank loan, or for insurance, are screened, and the organization forms some kind of risk profile for the applicant. In the higher education context, the practice of learning analytics is typically expected to involve screening students on group risk factors for the purposes of designing and targeting interventions. The risk factors might include, for example, part-time status; gender; ethnicity; nationality; number of years of prior education; highest level of educational qualification; student engagement with courses; accessing library resources (see, e.g., Jia 2014; Open University Sep 2014). Bichsel (2012) reports that Purdue University’s learning analytics system uses grade information, demographics, and existing data on student engagement. Sclater (2014b) reports both Nottingham Trent University and the Open University have identified accessing library resources as a predictor of student success.

Interventions for ‘risky’ students could include restrictions on the level of course studied; restriction on the number of courses studied, or imposition of prerequisites, which might mean a requirement to take extra courses (such as a bridging course). Interventions could also simply involve offers of additional support, such as extra phone calls to encourage engagement, or referrals to academic (or other) support services. However, even these less restrictive interventions may direct ‘risky’ students to do more work, or impress on them an expectation that they do more work. Interventions may be appropriate for some of the group of ‘risky’ students, but there may be individuals in the group who would be better off without the intervention (Sclater 2014b), in which case it is a burden on them. An institution could also try to discourage a student from continuing with study (see Open University Sep 2014, p.9), or avoid putting resources into a student the institution thinks is not going to succeed (Bichsel 2012).

Using analytics to identify, and intervene with, students at greater risk of not completing their course of study is increasingly seen as an economic and pedagogical imperative for education institutions (Kay et al. 2012; Slade and Prinsloo 2013). This imperative will be particularly acute for distance education institutions offering courses online (Prinsloo and Slade 2014). Online distance education learners continue to have poorer retention rates than class-based learners (Lokken and Mullins 2015), and online education provides huge opportunities for computer tracking and analysis of data on students’ online behaviour. Together, these factors suggest institutions that provide online education have strong motivations to use group-risk analytics to screen and categorize students. Proposed benefits of learning analytics include increases in student performance and student retention (Bichsel 2012; Sclater 2014b), through highlighting patterns of success or disengagement in students (Oblinger 2012) that can ‘[inform] the design of more effective, appropriate and, importantly, more cost-effective student support’ (Prinsloo and Slade 2014, n.p.). Importantly, the risk statistics and predictions for students generated through the use of learning analytics can provide more information, resulting in more systematic and effective interventions (see Sclater 2014b).

Ethical concerns raised by the deployment and intended deployment of learning analytics have recently been the subject of increasing discussion (see, e.g., Campbell et al. 2007; Simpson 2009; Kay et al. 2012; Slade and Prinsloo 2013; Johnson 2014; Pardo and Siemens 2014; Polonetsky and Tene 2014; Prinsloo and Slade 2014; Sclater 2014a; Willis 2014; Willis and Pistilli 2014; Sclater and Bailey 2015). These discussions tend to take existing ethical/legal principles surrounding use of data, such as transparency, consent, choice, accountability, privacy and security, and translate them to the education analytics context. There is less discussion of the ethics of subjecting an individual to intervention on the basis of information about group risk. This paper contributes to this topic, drawing on philosophical accounts of wrongful discrimination to examine in what sense intervention on the basis of learning analytics might be ethically concerning.

The principal concern identified is for the assessment of students as individuals, and impacts on the individual agency of students. This concern is cross-examined through arguments put forward by Frederick Schauer (2003) that suggest there is little conceptual difference between what we think of as ‘individualized’ assessments and classification of people using group-risk statistics directly. These arguments are translated into a learning analytics context, and Schauer’s argument is challenged through identification of a notable difference between individual examination and direct use of risk-statistics. This difference impacts on the recognition of students as individuals and inclusion of students as individual agents in the learning process. Fortunately—given the potential benefits from the use of learning analytics—I argue that there are some ways to facilitate individual student agency in the use of learning analytics, and that instructional designers have a key role to play in enabling these. I conclude with a note of caution regarding an institutional focus on students as bearers of risk.

Conceptions of the ethics of learning analytics

Theorists discussing the newly-emerging topic of the ethics of learning analytics have looked to the ethical principles established for existing practices that may be related to learning analytics practices. For instance, as learning analytics involves gathering and analyzing information from students, it could be seen as a type of research on students. Hence, some researchers have considered whether analytics has a normative basis in the principles of research ethics (see, e.g., Pardo and Siemens 2014; Kay et al. 2012). However, Kay et al. (2012) note several differences between the contexts for research and for analytics that render the ‘research ethics’ conception of the principles less relevant. For example, ‘consent’ in a research context is specific to a particular research project being undertaken, and the intent of the use of the data in research is ‘directed toward an agreed outcome’ (Kay et al. 2012, p.22). Use of learning analytics does not typically involve such discrete, well-defined research projects. This does not invalidate consent as an ethical principle for learning analytics; instead, it suggests the practices constituting acceptable consent in research ethics do not easily transfer to a learning analytics context.

The desired context for learning analytics is continual data collection and processing for ongoing educational intervention and innovation. Unsurprisingly then, education theorists have conceived of the ethics of learning analytics in terms of the institutional collection of data from individuals. Slade and Prinsloo (2013), for example, identify three broad areas of ethical issues for learning analytics: the location and interpretation of data; informed consent, privacy and the de-identification of data; and the management, classification and storage of data. Sclater’s comprehensive review of the literature on legal and ethical issues for learning analytics identifies the top four ethical foci as: “transparency, clarity, respect for users and user control” and notes that “[c]onsent, accountability and access also feature prominently” (Sclater 2014a, p.59). Pardo and Siemens (2014) start with a research ethics base and focus on privacy and personalization in learning analytics, yet even their four principles of transparency; student control over the data; right of access and security; and accountability and assessment largely fit under the data collection concerns. Most of these concerns echo recommendations from legal guidelines for data protection and privacy (see, e.g., the Information Commissioner’s Office’s Data protection principles, n.d.). Because these guidelines embed ethical principles for practitioners, the focus in the current literature on transparency, consent, choice, accountability, privacy and security of data is well-placed for guiding institutions’ considerations of the ethics of learning analytics.

Implementing an extensive learning analytics system is not just another use of data with privacy implications, however. At the heart of the practice is the concept of categorizing students according to the statistical risk that can be attached to them, and subjecting students to different treatment on this basis. This has given rise to some additional ethical concerns for the practice of learning analytics: concerns about discrimination, identity and the agency of students. The spectre of discrimination is raised by Polonetsky and Tene (2014); Sclater and Bailey (2015) and Slade and Prinsloo (2013). Identity and agency concerns are raised by Johnson (2014), Prinsloo and Slade (2014), Sclater (2014a) and Slade and Prinsloo (2013); and are addressed by the Open University (Sep 2014). However, in comparison with, for example, the ethics of big data (see, e.g., Crawford and Schultz 2013; IWGDPT 2014; Richards and King 2014) there is less focus on these concerns and how they interact. Moreover, the recommendations regarding agency tend to be to inform students that learning analytics are used and get their consent. This paper contributes to the literature by employing normative theories of discrimination to analyze the use of learning analytics. The paper draws links to concerns for students as individual agents, and suggests how instructional design can help support recognition of students as individual agents in learning-analytical assessment.

Discrimination

Discussions of discrimination in the literature on the ethics of learning analytics have focused on the concern that students may be stereotyped and mistreated in some way. This includes the concern that students may be at risk of being subject to prejudiced interactions with the staff of the institution. The Open University’s policy on ethical use of learning analytics warns that ‘Analysis based on the characteristics of individual students at the start of their study must not be used to limit the University’s or the students’ expectations of what they can achieve’ (Open University Sep 2014, p.8). Differential treatment of individuals on the basis of groups they belong to may be considered discrimination when individuals are disadvantaged in some way. For interventions based on learning analytics, the disadvantage could include being required or encouraged to engage in unnecessary extra work that could also be an added expense (for example, being directed to take a bridging course). Philosophers have not reached a consensus on how to specify the moral wrong(s) of discrimination. The following selection of different normative perspectives on wrongful discrimination covers a broad range of positions currently argued by philosophers working in the field.

Larry Alexander (1992) sees actions as wrong (when they are wrong) simply if they are likely to produce worse overall consequences than alternative actions. However, he distinguishes wrongful discrimination as involving a false belief in the moral inferiority of some people. Similarly, Arneson (2006) argues that wrongful discrimination shows flawed beliefs or attitudes: defective conduct based on ‘unjustified hostile attitudes toward people perceived to be of a certain kind or faulty beliefs about the characteristics of people of that type’ (Arneson 2006, p.779). However, the practice of learning analytics does not feature attitudes or beliefs about the moral worth of the groups targeted. Suppose students are identified on the basis of a set of group characteristics correlated with completion risk such as gender, a certain level of prior education, and ethnicity; and are then treated differently. Neither the process of identification by the institution nor the differing treatment is likely to be based in beliefs or attitudes that members of these groups are morally inferior, nor motivated by hostility. So these agent-based accounts of discrimination would see learning analytics as ethically unproblematic.

Hellman (2008) argues that the moral wrong in discrimination is not about the beliefs or attitudes of the agent, but instead what the discriminatory act expresses. Morally wrongful discrimination is a form of demeaning a person. ‘Demeaning’ acts or policies express a disregard for the moral equality of those being discriminated against. The social context gives the action its demeaning quality, along with the agent being in a position of power relative to the person subject to the discrimination. Tertiary education institutions are certainly in a position of power relative to students. But under Hellman’s definition, use of learning analytics is only morally wrong if it brings into question the moral equality of those treated differently, in a way that demeans them. This is clearly not the intent of learning analytics; while it is possible that something about the practice of it could demean students, this seems unlikely.

Lippert-Rasmussen (2006) defines discrimination as intentionally treating members of some socially salient groups better than others. Socially salient groups are broad social categories of different ethnicities, genders, disabilities, sexual orientations and so on. Lippert-Rasmussen (2006) takes a consequentialist view of wrongful discrimination: the badness of any discriminatory act is due to its bad consequences, compared with alternatives. This is basically a utilitarian position on ethics, where actions are morally good if they produce the most good consequences overall, considering everyone affected. But Lippert-Rasmussen (2006) modifies this utilitarian position with rules to prioritize the worse-off and to accommodate desert. His basic suggestion is that acts are morally good the more they increase well-being; where there is a choice, we should prioritize the well-being of the worse-off; where there is a choice, we should prioritize well-being for those that deserve it.

Lippert-Rasmussen’s position suggests we should consider the consequences for the total amount of welfare, and distribution of welfare, in our assessment of differential treatment due to learning analytics. In fact, the distribution of the benefits of learning analytics is a key feature distinguishing it from risk-screening in other areas such as lending and insurance. In these commercial settings, decision-makers want to identify applicants belonging to riskier groups to exclude them from a good, or give them worse conditions to access it. So an insurer might deny insurance or impose higher premiums; and a bank might deny loans or impose more restrictions on loans. In contrast, institutions using learning analytics aim to benefit the groups of students who are identified as bigger risks (for failing or dropping out), targeting them for interventions and extra resources. If so, learning analytics will produce good consequences. Moreover, it may produce a higher proportion of good consequences for worse-off students, if students identified as bigger risks for failing or dropping out are unlikely to have been financially and socially well-supported for education, and more likely to have suffered from general welfare disadvantages. This may promote a better distribution of good consequences.

There is one potential exception to this picture: institutions might use learning analytics to identify high risk students in order to exclude them from a program of study, from extra academic support, or even from the institution. Bichsel (2012) reports the suggestion from an institutional research professional that

[…] it is very expensive to bring a student to campus who’s not going to succeed, and so you have to be doing things all the way along the line to make sure they’re going to succeed or recognize early enough before you’ve made a big investment that they’re not going to succeed. (my italics, Bichsel 2012, p.15).

Suppose learning analytics can deliver a very accurate prediction of a very high likelihood of failure for some students. Higher education is an expense for students, both in terms of the fees paid and the opportunities missed through the time spent studying. From a consequentialist perspective, we must consider if it could be to the overall benefit of particularly high risk students—and the institution—to exclude them from higher education study at a particular point in time. Certainly, not attending could be better for such high risk students, as a group, than to be admitted but offered no extra academic support, with many of the group then dropping out (Woodley and Simpson 2014, p.474). However, the definition of learning analytics offered earlier is not compatible with continuing the enrolment of students while excluding them from academic support, given the purpose of learning analytics is to create ‘appropriate cognitive, administrative and effective support for learners’ (Slade and Prinsloo 2013, p.4).

It is concerning to think of oneself being perceived and treated as a ‘costly risk’ by institutions due to one’s group membership. This concern is brought out in Sophia Moreau’s (2010, 2013) definition of wrongful discrimination as differential treatment of individuals that impacts on an individual’s ‘deliberative freedoms’. A deliberative freedom is a capacity to access core life opportunities without the concern that certain socially-recognized traits will be counted as costs against oneself. Relevant traits may include skin color, gender or religious tradition: any traits that we think decision-makers ought not to mark negatively in distributing opportunities to access some goods and services. Consider, for example, the non-White tenancy applicant who is more likely to be told “the place is already let” once the letting agent sees the color of their skin. Moreau (2010) writes that ‘….[wrongful] discrimination involves a violation of someone’s right to a certain deliberative freedom [….] denying them the chance to make a choice without having to think about this trait of theirs in the given context’ (p.138). Discrimination thus impacts on liberty and autonomy.

In the higher education context, if students know they will be treated differently based on risks attached to certain traits they have, such as ethnicity or gender, this would potentially impact on their deliberative freedoms, and thus on their liberty and autonomy. However, as noted previously, education institutions that use learning analytics to mark students as risky are not typically trying to exclude students from an opportunity, but rather to intervene to try to foster student success. The higher education context also presents a complication regarding autonomy. Higher education intends to enhance a student’s autonomy, and institutions may implement learning analytics so that they can do a better job of educating students, and thus a better job of enhancing their autonomy. This raises the possibility of a potential trade-off between recognition or respect for the autonomy of each student on the one hand, and benevolent (if perhaps paternalistic) efforts to increase autonomy for groups of students on the other.

Finally, Eidelson (2013) suggests part of the badness of discrimination is simply the failure to treat a person properly as an individual, ‘…by failing to treat him as in part a product of his own past efforts at self-creation, and as an autonomous agent whose future choices are his own to make’ (p.395). If a decision-maker treats a student differently just on the basis of their membership in a particular group, the decision-maker takes no account of how the student differs from the other members of the group. This also entails that the decision-maker selects which feature of the student (namely, the feature of belonging to that particular group) will predominate in the decision-maker’s assessment of the student. This is action on the basis of stereotyping, and stereotyping is a prime example of not treating a person as an individual. Eidelson’s position does not sit easily with the use of learning analytics based on the characteristics of groups, a use that takes little account of students as individuals. Assessing students based on group-risk statistics, and treating ‘risky’ students differently, does appear to count as discrimination on this account. This is an ethical concern if we value treating people as individuals (at least to some extent), rather than stereotypes.

To conclude, using learning analytics to treat some groups of students differently is importantly different from other discrimination. It does not stem from hostile attitudes or false beliefs about the worth of the groups identified, and is not likely to demean the groups identified (not in the way that we think other discrimination demeans people). It is also not obvious that it is typically likely to impact negatively on the freedom to deliberate about one’s core life opportunities, or promote bad consequences for a socially salient group, in the way other discriminatory actions might. Indeed, learning analytics aims to produce benefits for the groups it identifies as at risk of poorer outcomes. The one ethical concern from the accounts of wrongful discrimination that does characterize learning analytics in higher education is the failure to recognize a person as an individual, and any attendant effects on students as individual agents.

Group risk statistics and individuals

The idea that people have an ethical claim to be recognized or treated as particular individuals, rather than merely on the basis of statistical generalizations about groups, is intuitively appealing. However, it is challenged by arguments put forward by Schauer (2003). Schauer (2003) uses an example of treating pit bull dogs differently due to breed statistics showing elevated risk of violence, to argue that what we think of as ‘individualized’ assessments really just reflect statistical generalizations from groups. I will rework Schauer’s example for the higher education context. The basic question is what a decision-maker in an education institution should make of any statistical evidence that some student groups present significantly more ‘risk’ in terms of failure and dropout than others. Should a decision-maker arrange to have students examined individually and assessed against a certain threshold for likelihood of failure and dropout, and require interventions with extra work only for those who exceed the threshold? Or should a decision-maker simply sort students on the basis of group-risk statistics and require intervention with extra work for all the ‘statistically risky’ students, without examination of them as individuals?

Suppose that institution A uses six characteristics to assess whether a student is a ‘high risk’ for failing or dropping out, namely gender, nationality, school socio-economic status, years of education, part-time status and highest prior qualification. Institution B instead gives each individual student some kind of pre-entry examination to assess whether they are likely to pass a program. Institution B’s method is a more ‘individualized’ assessment—it focusses squarely on what the individual has done and is capable of. However, Schauer (2003) would argue that assessing individual students against an examination norm also involves assessing the individual students against a group generalization; namely, a generalization from the groups of students that, over time, were used as the basis for developing the examination norm. It is on the basis of aggregate evidence from past events involving these other students that a current student’s performance on the examination is ‘probabilistically predictive’ (Schauer 2003, p.68) of failing or dropping out. Schauer (2003) argues that what we think of as ‘individual assessment’ is grounded in generalizations from assessment-performance to real-life behavior. His argument would suggest that using individual examination to predict an individual’s failure or dropout is not fundamentally different from using group-risk statistics as a predictor of an individual’s failure or dropout.

Schauer (2003) would also note that errors in prediction can still be expected with both institutions’ methods. Institution A’s six broad characteristics will be an inaccurate predictor. Some students with these characteristics, given the chance, would succeed as well as other ‘less risky’ students without interventions requiring them to do extra work. But examination procedures are imperfect too, and so will also inaccurately predict for some cases: some students who pass an examination procedure will not prove adequate to the program of study. Why should an institution use the results of an examination to intervene and impose extra work on a student, rather than using the fact that the student possesses a particular set of characteristics that means they fit a ‘risky’ profile? Schauer (2003) would conclude that what distinguishes the two methods is only that one requires a closer inspection of the student than the other.
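The point that both methods err can be made concrete with a toy illustration. The following is a minimal sketch only: the four students, the threshold of four risk characteristics, the pass mark of 50, and the field `would_complete` (what would happen without any intervention, which no institution knows in advance) are all hypothetical assumptions introduced for illustration, not data or methods from any institution discussed here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Student:
    name: str
    risk_traits: int       # hypothetical: how many of the six group characteristics apply (0-6)
    exam_score: float      # hypothetical pre-entry examination score (0-100)
    would_complete: bool   # hypothetical outcome without intervention, unknown at decision time


def group_screen(student: Student, min_traits: int = 4) -> bool:
    """Institution A (sketch): flag a student as 'high risk' from group characteristics alone."""
    return student.risk_traits >= min_traits


def exam_screen(student: Student, pass_mark: float = 50.0) -> bool:
    """Institution B (sketch): flag a student as 'high risk' from their own examination result."""
    return student.exam_score < pass_mark


def errors(students, screen):
    """Return (names flagged unnecessarily, names not flagged who do not complete)."""
    false_alarms = [s.name for s in students if screen(s) and s.would_complete]
    misses = [s.name for s in students if not screen(s) and not s.would_complete]
    return false_alarms, misses


# Entirely made-up cases chosen so that each method errs at least once.
students = [
    Student("A", risk_traits=6, exam_score=72.0, would_complete=True),
    Student("B", risk_traits=5, exam_score=40.0, would_complete=False),
    Student("C", risk_traits=1, exam_score=45.0, would_complete=True),
    Student("D", risk_traits=0, exam_score=65.0, would_complete=False),
]

print(errors(students, group_screen))  # (['A'], ['D'])
print(errors(students, exam_screen))   # (['C'], ['D'])
```

In this sketch each screen imposes extra work on one student who did not need it and misses one student who did, which is all Schauer's observation about error requires. The only structural difference between the two functions is the input each consults: `group_screen` reads nothing but the count of group characteristics, while `exam_screen` reads something the particular student has done.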

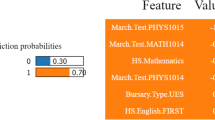

Schauer’s analysis thus suggests that in terms of generalizing, an institutional decision-maker using membership in some ‘risky’ groups to make a judgment of a particular student does nothing fundamentally different from an institutional decision-maker who does an individual examination of a particular student. However, this analysis misses something. Suppose that the following image is of a particular student, “Pat”, who has the six characteristics that put him in a ‘high risk’ category.

[Image: Biagio D’Antonio. Samuel H Kress Collection, 1939.1.179. Retrieved from https://images.nga.gov]

Suppose too that the next image, reproduced multiple times, represents the category of high risk students.

[Image: Jan Steen, Widener Collection. 1942.9.81. Retrieved from https://images.nga.gov]

Finally, suppose that this image represents an examination norm:

Schauer’s alternatives seem to be presented as: ‘Pat put in the category of high risk students’ versus ‘Pat facing individual examination’. This makes it look like we are considering Pat and the category of high risk students, versus considering Pat and an examination norm.

On Schauer’s view, if using a categorization of high risk students and using an examination are both generalizing at base, then we could say the institutional decision-maker’s judgment of Pat is based on generalizations either way. However, the initial conceptualization in Fig. 1 misrepresents the situation. If the decision-maker, when considering imposing an intervention requiring extra work, uses a classifier of the six characteristics as indicating a higher risk of failure or dropout, Pat the student is not judged. This is how Fig. 1 should look:

The crucial point is that beyond being put in the ‘high risk’ category, Pat the student is given no consideration at all.

Any institutional decision imposing interventions requiring extra work would be applied to individual students without being about the particular student. Claims of group-risk probability extend over (or quantify over) an individual but are not about the individual (qua individual). While people do apply probabilistic claims to individuals, this does not mean they are picking out a feature of the individual rather than a feature of a group. The application of an examination, by contrast, does consider the particular student that is Pat, and is about Pat. There is thus a notable distinction between examination-based and group-risk-based assessing; the former is about the individual in a way the latter is not. Judging individuals on the basis of group-risk statistics does differ from the examination-based assessments, and the difference involves a failure to deal with persons as individuals.

When decision-makers in higher education institutions fail to consider the student they are dealing with as an individual, their decisions will feature only their own participation (or that of the principals on whose behalf they act). There is little sense in which the individual student is recognized in the decision. The institutional decision-makers are the only ‘selves’ present in the decision. The decision-makers get to spread their ‘selves’ over actions that affect individual students, without the inclusion of students’ ‘selves’. This inhibits the extent to which the students being dealt with are included as agents in the process. This can also mean the decision unilaterally represents the decision-maker’s perspective and interests. If so, this is a substantial exercise of power on the part of the decision-maker. Furthermore, in those cases where individual students suffer extra work unnecessarily due to institutional use of risk profiling, this may seem unfair.

Higher education institutions, however, have good reason to use learning analytics to make judgments about students. As noted initially, use of learning analytics has the potential to make decision-makers’ judgments for targeting student support more effective and efficient, with benefits to students and the institution. Moreover, Frederick Schauer (2003) argues that even if an overall beneficial practice of using group generalizations to judge individuals results in unfair decisions for some individuals, it can still be a good practice, and that it can be wrong to try to fix the unjust decisions. Schauer argues that whoever is deciding which unfair results to correct and how to correct them may be less reliable on average than letting a generalization hold, with its inevitable instances of unfair judgments. Basically, even expert judges make mistakes when assessing individual information, and they might make things worse.

In the instructional design context, this point receives support from a study by Vuong et al. (2011) on teachers’ use of Cognitive Tutor, an electronic program of mathematics problems that provides feedback to individual students based on how they deal with the problems. The problems are organized in a sequence of modules, in accordance with research on appropriate mathematics pedagogy. Students must demonstrate mastery of the problems in one module before they can move to the next module; hence each module is a prerequisite for the next. However, teachers (our expert judges) are able to decide whether it would be better for their students if modules were skipped or taken in a different order, and can bypass the program to effect this. As it happens, 85% of teachers did this. As noted by Baker and Siemens (2014), the results of the research suggested that students were disadvantaged when teachers used their professional judgment to intervene and bypass the Cognitive Tutor sequencing. This supports Schauer’s general point that allowing individual experts to intervene in the use of a system may not improve it, even when the experts have access to the relevant risk statistics.

In view of the foregoing, what reason have institutions to do anything other than judge students on the basis of the probabilities attached to certain groups of which the student is a member? One reason lies in the value we place on assessing people as individual agents. Edmonds (2006) examines practices of generalizing on the basis of group features. He argues that our judgments of the moral acceptability of the resulting discrimination hinge on an interplay of our assessments of causation and our assessments of responsibility or desert. We particularly value assessing people based on what they do—on their choices, efforts and achievements—where these relate to the issue in question. Allowing students to sit a test or looking at their recent academic work, for example, does better at capturing effort and achievement than the use of characteristics that do not testify to effort or achievement, such as ethnicity, age or gender. The question is, how can we capture this valuing of individual agency whilst employing learning analytics?

Kasper Lippert-Rasmussen (2011) draws on Schauer (2003) to support his claim that we have no fundamental right to be treated purely as individuals. Lippert-Rasmussen argues that if generalization from groups is somewhere in the background of our assessments of people, as it is when we use an examination that has been normed on others’ outcomes, then people cannot have a fundamental right to be treated fully as individuals, where this means judging people without reference to any generalizations. However, rather than accepting nihilism about the possibility of treating people as individuals, Lippert-Rasmussen (2011) suggests instead that part of treating someone as an individual could involve using information that categorizes them as part of a group, if we are also careful not to ignore other information that is particular to that individual. This would suggest that learning-analytical assessment of students using group risk statistics could still treat students as individuals—or at least more as individuals—if it can incorporate more information particular to individuals.

For instructional design theorists and practitioners, the practical ethical question is thus whether there are ways for learning analytics data to include more information particular to individual students, where this concerns students’ choices, efforts and achievements. If so, this could be a way to help embed recognition of students’ individual agency in institutions’ use of learning analytics. I will briefly explain three paths that could be especially promising for instructional designers to explore, before concluding with a note of caution regarding focusing on students as individuals.

Nature of the risk factors: choices, efforts and achievements

Can the factors used in learning analytical assessment of students include more focus on individual choices, efforts and achievements by students that may be correlated with risk? For a general example, suppose that students who complete an online study timetable prior to starting a course are at lower risk of dropping out of their courses. Instructional designers could check whether students have the option of completing a study timetable for their courses, and if not, build this into the design of the course. A more specific initiative would require consideration of student choice or effort factors correlated with success in particular types of courses. Suppose that success for students studying humanities courses correlates with accessing library resources more than it does in science courses (see, e.g., Sclater 2014b). Instructional designers working on humanities courses can thus build in learning activities that require students to effectively access library resources across these courses. Including activities of this sort would help incorporate risk information that draws more on the choices and effort of individual students, in contrast to risk statistics for factors such as ethnicity or nationality.

Nature of the risk factors: static or dynamic

The second question on the nature of the risk factors is whether the risk factors are static or dynamic. Static risk factors are largely historical; they do not change, or change little and only slowly (such as aging) (Witt et al. 2011). Examples of static risk factors include factors from childhood, ethnicity or gender. Dynamic risk factors can change relatively quickly, such as a student’s current courses of study or health status. What is ethically salient here is that static risk factors are not usually easily amenable to change. A student assessed as high-risk on static risk factors, if assessed 6 months later on the same factors, will still be classed as high risk, regardless of any risk-reducing efforts they have made over this time. Static factors tend to be quicker and cheaper to assess, whereas dynamic factors can be more expensive. But assessing only or primarily static factors will bypass possibilities for active agency on the part of the individual student. Instructional designers working at a program level can check whether there are avenues for students to signal changes to dynamic risk factors, and how these may change the prompts for teachers (or courseware) to introduce academic intervention.
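The contrast can be made concrete with a minimal Python sketch. Everything in it is an assumption made for illustration: the factor names, the point weights and the additive scoring rule are hypothetical, and are not drawn from any institution’s actual risk model. The sketch simply shows that a score built only on static factors cannot register a student’s efforts between two assessments, whereas a score that also includes dynamic factors can.

```python
from dataclasses import dataclass

# Hypothetical factors and point weights, chosen only for illustration.
STATIC_POINTS = {"static_factor_1": 3, "static_factor_2": 2}
DYNAMIC_POINTS = {"dynamic_factor_1": 3, "dynamic_factor_2": 2}


@dataclass
class Assessment:
    static: dict   # factors that do not change between assessments
    dynamic: dict  # factors a student can change relatively quickly


def risk_points(assessment: Assessment, include_dynamic: bool) -> int:
    """Add up the points for every risk factor currently present."""
    total = sum(p for f, p in STATIC_POINTS.items() if assessment.static.get(f))
    if include_dynamic:
        total += sum(p for f, p in DYNAMIC_POINTS.items() if assessment.dynamic.get(f))
    return total


static_profile = {"static_factor_1": True, "static_factor_2": True}

# The same (hypothetical) student assessed at enrolment and six months later,
# after acting to remove both dynamic risk factors.
enrolment = Assessment(static_profile, {"dynamic_factor_1": True, "dynamic_factor_2": True})
six_months_later = Assessment(static_profile, {"dynamic_factor_1": False, "dynamic_factor_2": False})

static_only = (risk_points(enrolment, False), risk_points(six_months_later, False))
with_dynamic = (risk_points(enrolment, True), risk_points(six_months_later, True))

print("static only: ", static_only)    # unchanged between assessments
print("with dynamic:", with_dynamic)   # falls after the student's efforts
```

On the static-only score the student’s classification is identical at both assessments; only the score that includes dynamic factors leaves room for the student’s own efforts to count.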

Use of statistics specific to individuals

Schauer (2003) depicts institutional decision-makers as having two avenues for assessing a person’s risk, namely, expert examination of an individual or evidence from group risk statistics. There is a third avenue, however, for institutions that capture data on individual student behaviour that can be correlated with outcomes for that student. Individual behavioural analytics uses a collection of behavioural data across time from the same individual. We see this, for example, in the computer-based Cognitive Tutor program that tracks individual student answers and responds with tailored content and activities (Mayer-Schönberger and Cukier 2014), or in higher education institutions that offer online learning that tracks learners’ behaviors at a more general level (see, e.g., Sclater 2014b; Wagner and Hartman 2013). Obviously, instructional design is crucial to the performance of individual content-responsive programs such as Cognitive Tutor, and this is a key area where instructional design can support institutions to respond to students more as individuals. At the more general level, instructional designers can check whether online course pages can measure and display to students the amount of time a student has spent actively engaged on the page, and correlate this with the student’s test score for items relating to the topic covered on the page. This could provide a useful basis for students to reflect on their learning and study behaviours.
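As a rough sketch of the time-on-page suggestion, the following Python fragment correlates one student’s active minutes per topic page with that same student’s test score on the topic. The log format, the topic names and all the numbers are hypothetical and invented purely for illustration; a real course platform would supply its own data, and a correlation over so few points would be only a prompt for reflection, not evidence of cause.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences.

    Returns None when either sequence has no variation, since the
    correlation is undefined in that case.
    """
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length, at least 2 items")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        return None
    return sxy / sqrt(sxx * syy)


# Hypothetical log for a single student:
# (topic page, active minutes on the page, test score on that topic out of 10)
pat_log = [
    ("topic_1", 10, 4),
    ("topic_2", 20, 6),
    ("topic_3", 30, 8),
    ("topic_4", 40, 10),
]

minutes = [m for _, m, _ in pat_log]
scores = [s for _, _, s in pat_log]

r = pearson(minutes, scores)
print(f"time-on-page vs. topic score for this student: r = {r:.2f}")
```

The statistic here is computed only from the student’s own record, so whatever it shows is about that student’s study behaviour rather than about a group to which the student belongs.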

Comparing the three methods for assessing individual students to predict their future performance, we see that: one involves experts examining individual students; the second involves assessment using statistical evidence derived from extending other students’ behaviour over an individual student; and the third is assessment based on individual analytics, using statistical evidence derived from extending the individual student’s past behaviour over that student. This third approach is a use of evidence that is statistical yet specific to the individual student (see Colyvan et al. 2001). I suggest that the more individual behavioural analytics can be incorporated in risk-assessment of students, the more learning-analytical risk judgments should be seen as recognizing and including students as individual agents.
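The difference between the second and third methods is a difference in which records the statistic is drawn from. The Python sketch below is an illustrative assumption only: the cohort, the ‘high_risk’ profile label, the student’s history and the simple frequency estimates are all hypothetical, and stand in for whatever predictive model an institution might actually use.

```python
def group_estimate(cohort, profile):
    """Share of OTHER students with the same profile who did not complete."""
    matches = [s for s in cohort if s["profile"] == profile]
    if not matches:
        return None
    return sum(1 for s in matches if not s["completed"]) / len(matches)


def individual_estimate(history):
    """Share of the student's OWN past assessments that were not passed."""
    if not history:
        return None
    return sum(1 for passed in history if not passed) / len(history)


# Hypothetical records of previous students, used for the group statistic.
cohort = [
    {"profile": "high_risk", "completed": False},
    {"profile": "high_risk", "completed": False},
    {"profile": "high_risk", "completed": True},
    {"profile": "high_risk", "completed": True},
    {"profile": "low_risk", "completed": True},
]

# Hypothetical record of one student's own past assessments (True = passed).
pat_history = [True, True, True, False]

group_rate = group_estimate(cohort, "high_risk")  # drawn from other students
own_rate = individual_estimate(pat_history)       # drawn from the student alone

print("estimate from group membership:", group_rate)
print("estimate from own history:     ", own_rate)
```

Both figures are statistical, but only the second is computed from what this particular student has done, which is the sense in which individual analytics can be statistical yet specific to the individual.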

Institutions of higher education have the power to decide what risk features to measure for dealing with the problem of student dropout and failure. This paper has focused on data where risks are imputed to students; however, this should not be taken to suggest that the risk of student dropout or failure is generated solely by students. Instructional designers should be aware that how institutions choose to structure themselves also contributes to the risk of students not completing. For example, Kovacic (2010) found that two of the three biggest factors predictively distinguishing successful from unsuccessful students at a distance education institution were the program students were enrolled in and the trimester in which they were enrolled. Institutions can choose the extent to which they restrict their measuring of risk to the risks attributable to students, or instead include also statistics relating to their own programs, courses, schools or departments, and general processes and structure. This choice will impact on who will bear the burden of addressing the risks: students or the institution.

The burdens involved for institutions to address institutional risk factors associated with increased student dropout or failure are the same as those for any organizational change. These include, for example, the financial costs of reorganizing institutional structures, and staff feeling stressed or unhappy about the change. The possible burdens for ‘risky’ students could include extra work or financial cost from requirements to take bridging courses, or restrictions on the number of courses, level of courses or pathways through a course. Ideally, from the point of view of increasing student success, instructional designers might hope institutions would focus on multifaceted interventions addressing both student and institutional risk factors. However, institutions will no doubt have a significant focus on the costs to themselves of any potential interventions. Richardson et al. (2012) suggest that cognitive changes in students such as increased effort regulation and elevated grade goals may be more cost-effective than multifaceted interventions, even if the latter might be academically better. Given the position of power of institutions relative to students, it is possible that it will be mostly students who will bear the burdens of addressing risk.

Conclusion

In the education literature, current discussion on the ethics of the use of learning analytics has focussed particularly on principles drawn from existing ethical guidelines on institutional use of data. The discussion covers many of the ethical concerns learning analytics raises, especially the issues of transparency, consent, choice, accountability, privacy and security of data. This discussion has produced some excellent recommendations for ethical practice, including detailed policy recommendations (see, e.g., Open University Oct 2014). One ethical concern that has been less well-covered is that of treating individuals differently on the basis of group categorization, particularly where individuals are burdened due to group-based risk statistics. Schauer (2003) argues there is no significant difference between judgments based on ‘individualized’ evidence, and judgments based on statistics on group risk. This paper has explored and challenged this argument. This critical exploration has resulted in the identification of distinctive ethical concerns regarding individuality and agency. As higher education institutions have good reason to use learning analytics to statistically assess risk regarding students’ learning, this paper proposes instructional design approaches that may mitigate the ethical concerns. These are to consider the nature of the factors used in analytics and, where possible, to incorporate more use of factors involving individual effort, and dynamic rather than static factors, and to make greater use of statistics specific to individual students.

References

Alexander, L. (1992). What makes wrongful discrimination wrong? Biases, preferences, stereotypes and proxies. University of Pennsylvania Law Review, 141(1), 149–219.

Arneson, R. J. (2006). What is wrongful discrimination? San Diego Law Review, 43, 775–808.

Baker, R. S., & Siemens, G. (2014). Educational data mining and learning analytics. In K. R. Sawyer (Ed.), Cambridge handbook of the learning sciences (2nd ed., pp. 253–272). New York: Cambridge University Press.

Bichsel, J. (2012). Analytics in higher education: benefits, barriers, progress, and recommendations (Research Report). Louisville, CO: EDUCAUSE Center for Applied Research. Retrieved August 2012 from http://www.educause.edu/ecar.

Campbell, J., deBlois, P., & Oblinger, D. (2007). Academic analytics: A new tool for a new era. EDUCAUSE Review, 42(4), 41–57.

Colyvan, M., Regan, H., & Ferson, S. (2001). Is it a crime to belong to a reference class? Journal of Political Philosophy, 9(2), 168–181.

Crawford, K., & Schultz, J. (2013). Big data and due process: Toward a framework to redress predictive privacy harms. NYU School of Law. Public Law Research Paper No. 13-64, 93-128.

Edmonds, D. (2006). Caste wars: A philosophy of discrimination. London & New York: Routledge.

Eidelson, B. (2013). Treating people as individuals. In D. Hellman & S. Moreau (Eds.), The philosophical foundations of discrimination law (pp. 354–395). Oxford: Oxford University Press.

Hellman, D. (2008). When is discrimination wrong? Cambridge: Harvard University Press.

Information Commissioner’s Office (n.d.). Data protection principles. Retrieved November 2015 from https://ico.org.uk/for-organisations/guide-to-data-protection/data-protection-principles/.

International Working Group on Data Protection in Telecommunications (IWGDPT). (2014). Working paper on big data and privacy: Privacy principles under pressure in the age of big data analytics. Retrieved June 26, 2015 from http://dzlp.mk/sites/default/files/u972/WP_Big_Data_final_clean_675.48.12%20%281%29.pdf.

Jia, P. (2014). Using predictive risk modeling to identify students at high risk of paper non-completion and program non-retention at university. MBus thesis. Auckland University of Technology.

Johnson, J. A. (2014). The ethics of big data in higher education. International Review of Information Ethics, 21, 3–10.

Kay, D., Korn, N., & Oppenheim, C. (2012). Legal, risk and ethical aspects of analytics in higher education. CETIS Analytics Series, 1(6), 1–30.

Kovacic, Z. (2010). Early prediction of student success: Mining students enrolment data. Proceedings of informing science & IT education conference (InSITE) 2010.

Lippert-Rasmussen, K. (2006). Private discrimination: A prioritarian, desert-accommodating account. San Diego Law Review, 43, 817–856.

Lippert-Rasmussen, K. (2011). “We are all different”: Statistical discrimination and the right to be treated as an individual. The Journal of Ethics, 15, 47–59.

Lokken, F., & Mullins, C. (2015). ITC 2014 distance education survey results. Retrieved November 2015 from http://www.itcnetwork.org/membership/itc-distance-education-survey-results.html.

Long, P., & Siemens, G. (2011). Penetrating the fog: Analytics in learning and education. EDUCAUSE Review, 46(5), 31–40.

Mayer-Schönberger, V., & Cukier, K. (2014). Learning with big data. Boston/New York: Houghton Mifflin Harcourt.

Moreau, S. (2010). Discrimination as negligence. Canadian Journal of Philosophy, 40(sup1), 123–149.

Moreau, S. (2013). In defense of a liberty-based account of discrimination. In D. Hellman & S. Moreau (Eds.), The philosophical foundations of discrimination law (pp. 71–86). Oxford: Oxford University Press.

OAAI, Marist College (2012). Open academic analytics initiative. Retrieved November 2015 from https://confluence.sakaiproject.org/pages/viewpage.action?pageId=75671025.

Oblinger, D. (2012). Let’s Talk … Analytics. EDUCAUSE Review, 47(4), 10–13.

Open University Oct. (2014). Ethics use of student data for learning analytics policy FAQs. Retrieved October 2015 from http://www.open.ac.uk/students/charter/sites/www.open.ac.uk.students.charter/files/files/ecms/web-content/ethical-student-data-faq.pdf.

Open University Sep. (2014). Policy on ethical use of student data for learning analytics. Retrieved October 2015 from http://www.open.ac.uk/students/charter/sites/www.open.ac.uk.students.charter/files/files/ecms/web-content/ethical-use-of-student-data-policy.pdf.

Pardo, A., & Siemens, G. (2014). Ethical and privacy principles for learning analytics. British Journal of Educational Technology, 45, 438–450.

Polonetsky, J., & Tene, O. (2014). The ethics of student privacy: Building trust for ed tech. International Review of Information Ethics, 21, 25–34.

Prinsloo, P., & Slade, S. (2014). Educational triage in open distance learning: Walking a moral tightrope. The International Review of Research in Open and Distance Learning, 15(4), 306–331.

Richards, N., & King, J. (2014). Big data ethics. Wake Forest Law Review, 49, 393–432.

Richardson, M., Abraham, C., & Bond, R. (2012). Psychological correlates of university students’ academic performance: a systematic review and meta-analysis. Psychological Bulletin, 138(2), 353–387. doi:10.1037/a0026838.

Schauer, F. (2003). Profiles, probabilities and stereotypes. Cambridge: Harvard University Press.

Sclater, N. (2014a). Code of practice for learning analytics: A literature review of the ethical and legal issues. London: Jisc.

Sclater, N. (2014b). Learning analytics: The current state of play in UK higher and further education. JISC. Retrieved October 2015 from http://repository.jisc.ac.uk/5657/1/Learning_analytics_report.pdf.

Sclater, N., & Bailey, P. (2015). Code of practice for learning analytics. JISC, London. Retrieved October 2015 from https://www.jisc.ac.uk/guides/code-of-practice-for-learning-analytics.

Simpson, O. (2009). Open to people, open with people: Ethical issues in open learning. In U. Demiray & R. C. Sharma (Eds.), Ethical practices and implications in distance learning (pp. 199–215). New York: Information Science Reference.

Slade, S., & Prinsloo, P. (2013). Learning analytics: Ethical issues and dilemmas. American Behavioral Scientist, 57(10), 1509–1528.

Vuong, A., Nixon, T., & Towle, B. (2011). A method for finding prerequisites in a curriculum. In M. Pechenizkiy, T. Calders, C. Conati, S. Ventura, C. Romero, & J. Stamper (Eds.), Proceedings of the 4th international conference on educational data mining. Eindhoven, The Netherlands, July 6-8, 2011 (pp. 211–216).

Wagner, E., & Hartman, J. (2013). Welcome to the era of big data and predictive analytics in higher education. SHEEO (State Higher Education Executive Officers Association) Higher Education Policy Conference 2013 [presentation]. Retrieved November 2015 from http://www.sheeo.org/sites/default/files/0808-1430-plen.pdf.

Willis, J. E. (2014). Learning analytics and ethics: A framework beyond utilitarianism. EDUCAUSE Review. Retrieved October 2015 from http://er.educause.edu/articles/2014/8/learning-analytics-and-ethics-a-framework-beyond-utilitarianism.

Willis, J. E., & Pistilli, M. D. (2014). Ethical discourse: Guiding the future of learning analytics. EDUCAUSE Review. Retrieved October 2015 from http://www.educause.edu/ero/article/ethical-discourse-guiding-future-learning-analytics.

Witt, P. H., Dattilio, F. M., & Bradford, J. M. W. (2011). Sex offender evaluations. In E. Drogin, F.M. Dattilio, R.L. Sadoff, & T. G. Gutheil (Eds.), Handbook of forensic assessment: Psychological and psychiatric perspectives. Wiley Online.

Woodley, A., & Simpson, O. (2014). Student Dropout: The elephant in the room. In O. Zawacki-Richter & T. Anderson (Eds.), Online distance education: towards a research agenda (pp. 459–483). Edmonton: AU Press.

Ethics declarations

Conflict of interest

The author declares that she has no conflict of interest.

Cite this article

Scholes, V. The ethics of using learning analytics to categorize students on risk. Education Tech Research Dev 64, 939–955 (2016). https://doi.org/10.1007/s11423-016-9458-1

DOI: https://doi.org/10.1007/s11423-016-9458-1