Abstract

This paper reports on a study of teacher support in experimental computer-supported collaborative learning (CSCL) settings where students engage with graphs in real-time labs within the context of school science. Real-time labs are digital devices and software connected to student-controlled sensors or probes that can measure and visualize data graphically. The empirical setting was a science project about ocean acidification (OA) where lower secondary school students conducted measurements of the pH value of water with increased concentrations of CO2. The analytical focus is on student–teacher interaction during group-work activities where the students carried out, reviewed and reported on the real-time lab experiment. The analyses show that students needed additional support from the teacher in interpreting the real-time graphs and in making connections between the graphic representation, the practical undertakings of the experiment and the underlying scientific phenomena. Most importantly, the study demonstrates the complexity of teacher support in CSCL settings and how this type of support intersects with the support provided by digital resources, peer collaboration and applied instructional design.

Introduction

In recent decades, several studies have provided important insight into students’ learning processes in computer-supported collaborative learning (CSCL). This research has highlighted several productive aspects of CSCL settings as means for enhancing students’ conceptual learning, epistemic skills, and collaboration skills. However, studies have also shown that students face a range of conceptual, social, and emotional challenges in CSCL, especially when working in small-group settings (Järvelä et al. 2016). These findings suggest that, even in well-designed CSCL settings, “gaps” often occur between the technology design, peer collaboration, and the surrounding instructional design. Acknowledging that the teacher plays an important role in bridging these types of gaps, several studies within the field of CSCL have emphasized the significance of additional support provided by teachers (Ludvigsen 2016). However, most CSCL studies that have directed attention towards the role of the teacher have focused on how various forms of digital tools can support teachers’ orchestration of learning activities (Dillenbourg 2013; Erkens et al. 2016; Schwarz et al. 2018). Few studies have analysed teacher support in the form of student–teacher interaction (Furberg 2016). By taking a holistic and multi-layered approach, this paper aims to contribute to the emerging body of literature focusing on the role of support provided by teachers in naturalistic CSCL settings (Ludvigsen and Arnseth 2017; White 2018). Following previous studies with a sociocultural approach to teacher support in CSCL settings (cf. Furberg 2016; Strømme and Furberg 2015), the present study explores the significance of teacher support and how it intersects with the digital resources in use, peer collaboration, and the surrounding instructional design.

Our empirical point of departure is a CSCL setting involving students’ engagement with digital learning devices often referred to as “real-time labs”. Typically, real-time labs embed software and measuring devices, such as probes or sensors, to enable collection and graphical visualization of data from science experiments. The intention behind a real-time lab is to assist students in performing investigations and practical work similar to how scientists conduct experiments, as well as to support students in using graphical representations to interpret and communicate results from their experiments (Linn and Eylon 2011; Nakhleh 1994; van Joolingen et al. 2007). Because commonly used real-time labs are not designed as CSCL environments, support in the form of task design, peer collaboration, and teacher support must be facilitated through the instructional design. For that reason, a learning activity involving students’ collaborative engagement with real-time labs forms an interesting analytical starting point for exploring the significance of support provided by teachers.

The empirical setting for this study was a science project about OA in which lower secondary school students (aged 14–15 years) and their teacher performed an experiment using a sensor-based real-time lab to measure the pH value of water with increasing concentrations of CO2. The analytical focus is on student–teacher interactions that took place as the teacher was making rounds (Greiffenhagen 2012) during group-work activities in which the students carried out the real-time lab experiment and subsequently processed and reported on their results. More specifically, we attend to interactions that occurred when students summoned the teacher, because these interactions display both the challenges faced by students and how the teacher responds to these challenges (Furberg 2016).

We argue for the value of adding a dialogic approach when examining student–teacher interaction (Linell 2009; Mercer 2004; Säljö 2010). The applied analytical procedure is interaction analysis involving a sequential analysis of the talk and interaction between interlocutors (Furberg 2016; Jordan and Henderson 1995). Based on microanalyses of student–teacher interactions during small-group work, we aim to demonstrate the complexity of facilitating students’ development of conceptual understanding in these settings. On a general level, this study contributes to our understanding of teacher support in CSCL settings by displaying how teacher guidance intersects with support provided by the digital resources, peer collaboration, and the applied instructional design. On an empirical level, the study aims to provide insight into productive and challenging aspects of students’ engagement with graphical representations in real-time labs, as well as the support provided by the teacher in this type of CSCL setting.

In the following, we review previous studies. First, we present findings from studies focusing on students’ engagement with graphical representations and real-time labs. Second, we present findings from relevant studies focusing on the role of teacher support in CSCL settings.

Previous studies: Students’ engagement with graphs and teacher support in CSCL settings

Students’ engagement with graphs in computer-supported science learning

Graphic representations are frequently used to display and explore statistical data, measurements, and calculations and to communicate scientific knowledge within educational settings and in socio-scientific discourses (Roth and McGinn 1997; Tytler et al. 2013). As such, being able to interpret, understand, and use graphs in ways specific to a scientific discipline is an important aspect of developing scientific literacy (Knain 2015; Lemke 1998). Graphs are also important tools in inquiry-based activities where students explore trends and patterns in data collected during their own observations and experiments to learn about various phenomena (Wu and Krajcik 2006). Despite the central role of graphs in science learning, many studies have reported challenging aspects of students’ comprehension of graphs (Leinhardt et al. 1990). For instance, students often struggle to treat graphs as abstract representations, to connect graphs of experiment data to the physical event they represent, and to link graph patterns to the underlying concepts and phenomena (Glazer 2011).

The last decades’ technological advances have provided digital tools designed to support students’ graphing practices in science. Several studies have scrutinized how various forms of digital tools can support students’ graphing skills and their understanding of the conceptual issue in focus (Ainsworth 2006; Ares et al. 2009; Mitnik et al. 2009; White and Pea 2011). Of particular interest for our research are studies focusing on students’ engagement with graphs in real-time labs, which consist of student-controlled software connected to sensors that measure and visualize data. Many experimental and quasi-experimental studies have demonstrated positive effects of engaging with real-time labs on students’ graphing skills and conceptual understanding of scientific phenomena (Friedler and McFarlane 1997; Linn et al. 1987; Mokros and Tinker 1987; Nicolaou et al. 2007). A quasi-experimental study by Mokros and Tinker (1987) involving middle school students’ engagement with real-time labs in science experiments showed significant gains in the students’ graphing skills, which were explained in terms of specific features of the real-time lab. Among the features highlighted as supportive of students’ graph interpretation skills was the real-time pairing of the practical undertakings of the lab experiment and the graphic representation. This finding is supported by a qualitative study based on dialog-based interviews in which Nemirovsky et al. (1998) investigated primary school students’ engagement with real-time labs about motion. The findings showed that students developed graphing skills by exploring how the movements of the motion sensor materialized in the graph, which gradually enabled them to predict and create specific, intended graph patterns. In an intervention study involving real-time labs in a physics unit about heat and temperature, Linn et al. (1987) investigated middle school students’ graphing skills. Analyses of students’ pre- and post-test scores showed significant gains in students’ conceptions of graphical representations related to heat and temperature. Most interestingly, the results also showed gains in students’ generic understanding of graphical representations, implying that they were able to apply their graphical skills to other science concepts.

Other studies have demonstrated that real-time labs are productive learning resources in collaborative learning settings (Kelly and Crawford 1997; Lindwall and Ivarsson 2011). In a comparative study conducted by Lindwall and Ivarsson (2011) involving science student teachers, two student groups were assigned to reproduce a specific motion graph: one group with a real-time lab with motion sensors and the other group with a simulation-based graphing tool. Analyses of student interaction showed that students in the real-time graph condition explicated and verbalized their conceptual reasoning to a greater extent than students in the simulation condition. The differences in the students’ reasoning were explained by the features of the two graphing tools; specifically, producing graphs in real-time labs required that the students explicate the relationship between the actions undertaken with the motion sensor and the pattern generated in the graph display, whereas the simulation enabled a trial-and-error strategy that eliminated the need for explicit reasoning. Kelly and Crawford (1997) investigated secondary school students’ collaborative engagement with real-time labs in a physics course and found that the graphs served as a “stand in” for the physical events of the experiments in the subsequent activity where the students were to interpret and discuss the results. However, Kelly and Crawford also found differences between groups concerning task performance and discussion quality and argued for the significance of teacher guidance to support peer collaboration and guide students in interpreting the real-time graphs.

Along with studies demonstrating the benefits of real-time labs in supporting students’ graphing practices, researchers have also reported challenging aspects. One challenge concerns students’ difficulty with interpreting trends in real-time graphs (Lindwall and Lymer 2008; Testa et al. 2002). In an interview study, Testa et al. (2002) investigated students’ interpretation of real-time graphs and found that the high level of detail typical of real-time graphs was challenging for students and that many students perceived irregular curves as an indicator of poor graph quality or measurement errors. Based on analyses of physics student teachers’ interactions during a real-time lab about motion, Lindwall and Lymer (2008) showed that many students experienced difficulties in separating the relevant pattern from “noise” in the graph display, which made it challenging to identify the graph patterns as representations of functional relationships. Another challenge arises from the real-time labs’ automatic generation of graphs, which blackboxes central operations in graph production (e.g., plotting, organizing data and choosing scale) that are significant aspects of both graphing practices and students’ understanding of graphs (Glazer 2011; Roth 1996). In an experimental study comparing secondary biology students’ ability to construct and interpret line graphs after working in either a real-time lab condition or a traditional condition, Adams and Shrum (1990) found that students in the traditional condition outperformed students in the experimental group on graph construction skills.

The challenging aspects of students’ learning with real-time labs documented in prior studies demonstrate the need for teacher support. In naturalistic classroom settings where students engage with real-time labs, teachers will usually be present, providing additional guidance in the intersection of digital resources, peer collaboration, and applied instructional design. In the following, we turn our attention to findings from previous studies focusing on teacher support.

Research on teacher support in computer-supported science learning

Few studies have explicitly scrutinized teacher support in real-time lab settings; therefore, we widen our scope to include studies focusing on the role of teacher support in CSCL settings in science more generally. The aforementioned study by Lindwall and Lymer (2008) explored student–teacher interaction during dyad work and found that the teacher provided crucial support in directing the students’ attention towards specific features of the graph, which helped students distinguish relevant features and come to see the graph pattern as a linear relationship. Additionally, Kelly and Crawford (1997) explored the role of teacher support during an instance where one group of students struggled with identifying an acceleration curve during a real-time lab. The analyses showed that the teacher played an important role in guiding the students in making sense of the graphs. The teacher prompted, confirmed, and elaborated on students’ interpretations and explanations, thereby supporting students in reading the line graphs as representations and helping them link the physical events and the corresponding motion concepts.

Widening the scope of the review to include studies on teacher support in experimental CSCL settings involving representational forms other than graphs, several studies have shown the importance of procedural and conceptual support provided during whole-class sessions and during group work. Several studies have demonstrated the significance of procedural support, or helping students regulate their work processes, in students’ exploratory work in CSCL settings (Strømme and Furberg 2015; Urhahne et al. 2010). In the context of inquiry-based CSCL in physics, Mäkitalo-Siegl et al. (2011) conducted an experimental study examining the influence of support in whole-class settings. The findings showed that students receiving procedural instructions at the beginning of each inquiry phase, support during group work, and evaluations in plenary sessions sought less help during group-work activities but showed higher learning gains than students assigned to the control group. In a study involving 12 middle school classrooms, Warwick et al. (2013) investigated student–teacher interaction taking place within various forms of CSCL settings in science where the digital resources were designed by the teacher. Analyses of instructional trajectories demonstrated the importance of procedurally oriented instructions provided by the teacher, both in the form of direct instructions given during whole-class sessions prior to group-work activities and in the form of “indirect scaffolds” displayed on the board during group work, such as instructions, hints, and reminders of spoken instruction.

Other studies have demonstrated the significance of teacher support aimed at scaffolding students’ conceptual understanding in experimental CSCL settings (Furberg 2016; Gillen et al. 2008; Jornet and Roth 2015). In a study of secondary school students’ engagement with energy transformation conducted by Jornet and Roth (2015), analyses of student–teacher interaction showed that teacher-prompted questions in whole-class sessions following a group-based experimental setting supported the students’ sensemaking of their experiences from the practical experiments, providing important resources in subsequent group-work activities. Based on data from a similar context, Arnseth and Krange (2016) analysed student–teacher interaction in group-work settings and showed that important aspects of teacher support included prompting students to explain their reasoning based on the representations and linking the students’ use of spontaneous terms to more scientific terms and concepts when attempting to explain the phenomena observed. Other studies have demonstrated the significance of conceptual teacher support in CSCL settings where students engage with inquiry learning and virtual labs. Strømme and Furberg (2015) investigated the role of teacher support in an inquiry project about heat loss where students’ inquiry process was guided by various types of digital representations, including a simulation. The findings showed that the teacher provided significant support by helping students to link the simulation and its underlying concepts. Furberg’s (2016) study produced similar findings on the role of teacher support in a setting where secondary school students engaged with a virtual lab aimed at supporting their processing of a physical lab experiment. Analyses of student–teacher interactions during group activities showed that teacher support was crucial for the students to link the practical procedures of the lab experiment, the reflection tasks, and the experiment’s underlying scientific principles.

The review of relevant previous studies offers a valuable background for understanding productive and challenging aspects of students’ collaborative engagement with graphs in real-time labs and the significance of procedural and conceptual support from a teacher in CSCL settings. Nevertheless, the review also reveals that few studies have directed analytical attention to teacher support in the context of real-time lab work. Acknowledging the relevance of these studies and the gaps in the literature, this study aims to contribute to this body of research by examining the complexity of teacher support in a particular CSCL setting involving real-time labs and graphical representations and how this support intersects with digital resources, peer collaboration and applied instructional design.

The present study – Research questions

Based on the reported findings about the challenges experienced by students while working with graphic representations in real-time labs and our interest in exploring the significance of support provided by a teacher in these types of CSCL settings, we direct our analytical attention towards student–teacher interaction taking place during two group-work activities in which students conducted and reviewed the results from their real-time lab experiments before they processed and reported on their findings. First, analyses of student–teacher interactions taking place in these types of settings will provide insight into the challenges students faced and how the teacher responded. Second, analysing these types of interactions over time and across the two different activities will enable us to explore the specific possibilities and challenges occurring in the different phases of the real-time lab experiment. To grasp both the student and the teacher perspectives on student–teacher interactions and to understand the significance of teacher support, we have formulated the following guiding research questions:

- RQ1: How do the students and the teacher make sense of the real-time lab experiment and its associated graphical representations, and what challenges are displayed in the student–teacher interactions?
- RQ2: How is teacher support enacted during the student–teacher interactions?

Before providing a discussion of methodological issues, we will briefly account for the theoretical premises underlying our understanding of digital resources and graphical representations, as well as our conceptual understanding of support provided by teachers.

Approaching teacher support from a sociocultural perspective

Sociocultural perspectives conceptualize learning as the appropriation of knowledge and skills through the adoption of cultural tools (Vygotsky 1978; Wertsch 1998). Teaching and learning are regarded as dialogic and dynamic processes of meaningful tool use taking place in the social interaction between interlocutors (Linell 1998, 2009). Among the cultural tools that can serve as mediational means in social interaction, language is assigned a special role, providing a “social mode of thinking” (Mercer and Littleton 2007; Wells 1999). Teaching and learning are also highly dependent on the use of material tools or artifacts where knowledge and social practices are inscribed and stored (Säljö 2010). These tools serve as important carriers of meaning potentials, developed and shaped over time through discursive practices (Ivarsson et al. 2009). In the context of schooling, instructional tools (e.g., textbooks, digital learning environments, worksheets, lab guidelines) are developed to support students’ appropriation of specific subject content, methods and procedures. Other commonly used tools are disciplinary tools (e.g., technical equipment, inquiry procedures, scientific concepts, and representations) developed in the practices of particular subject domains. While both instructional and disciplinary tools serve as important resources in students’ learning, their meaning potentials in relation to goal-directed activities are not readily available to students (Roth and McGinn 1998; Säljö and Bergqvist 1997). In particular, disciplinary tools need to be re-contextualized to support student learning processes in institutional settings like school science (Bezemer and Kress 2008; Roth and Tobin 1997).

In naturalistic CSCL settings, the teacher is responsible for selecting resources, designing learning activities and providing support that guides students’ engagement in relation to specific purposes during classroom activities (Mortimer and Scott 2003; Wells 1999). Thus, the teacher holds an important position as an “expert” in the sense of mastering the tools and knowing the purposes for which they are used. Vygotsky’s (1978, 1986) notion of the “Zone of Proximal Development” (ZPD) provides a conceptualization of the window in which support of various kinds is needed and productive in students’ learning processes. Vygotsky (1978) defined the ZPD as “the distance between the actual developmental level as determined by independent problem solving and the level of potential development as determined through problem solving under adult guidance or in collaboration with more capable peers” (p. 86). Following Wells (1999), we approach ZPD as being “created in interaction between the students and the co-participants in an activity, including the available tools and the selected practices” (p. 318). A CSCL perspective on ZPD stresses the value of both social resources in the form of collaboration between peers and technological resources in the form of designed digital artefacts as support measures that can lead students beyond what they can do alone (Pea 2004). Thus, the “limits” to what students can accomplish as individuals or as collaborating groups are constituted not only by the availability of social and material resources in the specific learning setting but also by the nature and quality of interactions. However, because students bring different experiences to the setting, their goals and orientations when engaging with social and technological resources might differ from those intended by the teacher or the instructional design (Furberg et al. 2013). Therefore, an important task of the teacher involves responding to students’ concerns and orientations as these emerge in situ in the course of the goal-directed activities.

In sum, a sociocultural perspective provides a lens through which we can approach teacher support in CSCL settings as a situated and dialogic endeavour, constituted in the interaction between participants, purposes and available tools. Seen from this perspective, instances of tensions and breakdowns in students’ collaborative sensemaking might provide valuable access points to scrutinize teacher support as it is initiated and enacted at the intersection of available support structures. Conceptualizing teaching and learning as mediated processes in goal-directed activities, this perspective implies that the teacher’s position as expert and provider of institutional practices must be acknowledged in order to understand the role of teacher support in naturalistic CSCL settings.

Research design

Participants and educational setting

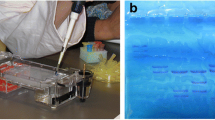

The data were produced during a science project about climate change, which took place in eight school lessons over the course of three weeks in October 2016. The participants were one class of 25 lower secondary school students, aged 14 to 15 years, and their science teacher. The project comprised several subunits covering topics like the carbon cycle, OA, sea level rise, and the greenhouse effect. The activities within each subunit were designed around various forms of textual and visual digital representations, such as diagrams, models, simulations, and graphs. Throughout the project, students documented their work in online workbooks using the application Padlet (Footnote 1). The empirical basis for this study was a three-lesson unit about OA where the students performed and reported on a physical experiment. The science teacher team, which included the teacher in focus here, decided to organize this unit around the use of real-time labs, as the school had just bought digital pH sensors for their PASCO labs (Footnote 2). In this unit, students used the sensors with an application called SPARKvue (Footnote 3) to investigate the effects of CO2 on the pH level of water. The students used SPARKvue as a real-time graphing tool to measure the changes in the pH level of water under two conditions. First, they measured the changing pH level in a glass of water while breathing through a straw placed in the water to infuse CO2 (see Fig. 1). Second, as a control, they measured the pH of plain tap water in another glass for 20 seconds. The intention behind the experiment was to simulate the process of OA due to the rising level of CO2 in the atmosphere and to use the experiment as a starting point for discussing the environmental consequences of OA.

SPARKvue is a sensor-based data-logging tool consisting of lab software for iPads and other platforms. SPARKvue allows users to collect, analyse and visualize different kinds of scientific data through wireless sensors, in this case a pH sensor. The software offers several options for visualizing measurements. The students used a simple display of a graph showing pH as a function of time. Next to the graph, the display showed the measured pH value as a decimal number, which allowed students to monitor changes in real time. The students used the application’s default mode, in which the time axis was set to 0 to 100 seconds and the pH axis to 0 to 13.5. The measurements were started and stopped from a control panel in the graph display.

Table 1 provides an overview of the activities in the OA unit. The teacher began with a brief introduction to OA that connected increased concentrations of CO2 in the atmosphere to the process of OA. The teacher also demonstrated the effects of OA on calcifying organisms: he submerged two eggs in two glasses, one containing water and the other vinegar, and explained that the egg in vinegar represented a calcifying organism and that the class would review it the next day. As homework, the teacher instructed the students to prepare for the upcoming experiment by watching a teacher-produced instructional video showing how to use SPARKvue. The second lesson, taking place the next day, started with the students and the teacher examining the eggs and hypothesizing about what had happened to the egg in vinegar. The teacher then demonstrated how to use the application by measuring the pH value in the glass containing the egg and vinegar before showing students how to read the measured pH value from the graph display. Following this demonstration, the teacher gave a short presentation of the pH scale before going through the practical instructions on how to perform the experiment. Before the experiment started, the teacher also distributed a handout with instructions and asked students to read it carefully. The experiment was followed by four follow-up activities designed to help the students attend to the scientific processes underlying the experiment and to make connections between representations and real-world phenomena. Three of the follow-up activities concerned documenting and processing the results from the experiment. The fourth and most time-consuming follow-up activity took place in Lesson 3, where the student groups were to discuss and provide written answers to four reflection tasks (see Fig. 5) about the experiment and how it was linked to the real-world phenomenon of OA.

The science project that contained the OA unit took place in the context of a larger intervention conducted as part of the research project Representations and Participation in School Science (REDE), where researchers (including the authors of this paper) and two science teachers (one of whom is in focus here) collaborated on developing instructional units. However, the science teacher team, including the teacher in focus here, designed the OA unit as a first trial of the use of pH sensors with SPARKvue labs. In planning sessions, the researchers provided feedback on the design of the unit, but the teacher did not receive any specific instructions regarding his role as a teacher in this unit, and he was fully responsible for implementing the instructional design without interference from the observing researchers.

Data and analytical procedures

In this study, the main data material consisted of 95 minutes of transcribed video recordings of all student–teacher interactions during the OA unit. Ethnographically inspired observation notes from classroom observations, video recordings of student–student interactions, transcripts from interviews, teacher-prepared instructional resources, and student-produced materials (photocopies or screengrabs) provided supplementary contextual data for the analyses of the participants’ interactions (Derry et al. 2010). The video data were collected using one hand-held video camera recording all student–teacher interactions during whole-class and group-work activities, as well as three video cameras capturing interactions at the group level. Data from the group cameras complemented data from the hand-held camera by providing additional camera angles and sound sources.

During the two group-work activities related to the real-time lab experiment, a total of 49 student–teacher interactions took place while the teacher was making rounds in the classroom. In his encounters with the student groups, the teacher either responded to questions or intervened to check on the students’ progress. Of these interactions, 22 took place during the activity where the students carried out the experiment, and 27 took place the next day, in the phase where the students processed and reported on their findings. The interactional sequences ranged in length from a few seconds to 3 minutes. We analysed the student–teacher interactions in two steps. The initial analysis involved examining all 49 interactions, which made it possible to identify general patterns, in this case the challenges and issues that the students and the teacher addressed most frequently. To explore these challenges and issues, as well as how the teacher addressed them, we selected three excerpts of student–teacher interaction for detailed interactional analysis, which constitutes the second step. Two excerpts were from interactions during the activity where students carried out and reviewed the results from the pH experiment (Lesson 2): Excerpt 1 is from the initial part of the experimental setting, and Excerpt 2 is from its last part. Excerpt 3 is from the setting where the students discussed the results from the experiment in the context of real-world issues (Lesson 3).

We selected the analysed student–teacher excerpts based on four criteria. Firstly, in line with our theoretical perspectives, we selected the excerpts to serve as empirical manifestations of the phenomena under scrutiny. Secondly, in accordance with our research questions, the selected sequences involved student–teacher interactions where the interlocutors focused on issues, challenges or concerns related to conceptual issues, experimental procedures, or understanding the graphic representations. A third criterion, which related to the internal validity of the study, was that the issues and challenges addressed in the selected excerpts should reflect the most frequent issues and challenges during the different phases of the targeted activity. The fourth criterion concerned interactional transparency in the sense that the interlocutors’ verbal and physical contributions were characterized by a certain degree of explicitness (Linell 2009; Mercer 2004). Based on these criteria, the selected settings display typical interactional patterns of student–teacher interactions taking place during the group-work activities within this empirical setting.

The applied analytical procedure is interaction analysis, involving sequential analysis of the talk and interaction between interlocutors, including the artefacts in focus (i.e., graphical or other representations; Jordan and Henderson 1995; Linell 2009). A sequential analysis implies that each utterance in a selected excerpt is considered in relation to the preceding utterances in the ongoing interaction. As a result, the focus is not on the meaning of single utterances but on how meaning is created through the exchange of utterances. To make sense of how the students and the teacher addressed and used digital and material artefacts, our analysis attended not only to discourse but also to non-verbal modes and the conjunction of modes in interaction. In addition to the detailed examination of the interaction sequences, we used ethnographic information about the institutional setting as a background resource for understanding what was going on. This procedural guideline ensures that the participants’ concerns and their actual activities, not only the researchers’ intentions and predefined interests, are scrutinized. By analytically scrutinizing student–teacher interactions during the lab activities, we can provide insight into the challenges encountered when students collaboratively produced and interpreted graphs representing a scientific phenomenon, as well as into how the teacher, in response to these challenges, supported students’ conceptual sensemaking when working with such representations.

We transcribed the video recordings according to an adaptation of Jeffersonian transcription notations (Jefferson 1984). Table 2 in the Appendix provides a description of the transcript notations. We translated the conversations, which took place in Norwegian, into English and gave pseudonyms to the teacher and the students appearing in the excerpts. The data sequences and analyses have been presented in data analysis seminars with national and international colleagues, whose critical comments and joint analysis efforts have strengthened the validity of the empirical analysis.

Results

The following sections present and analyse three excerpts of student–teacher interaction, drawn from the three group activities in the OA unit. Excerpt 1 is from the setting where the students conducted the experiment. Excerpt 2 is also from the experimental setting, but from a later stage when the students were reviewing their results. The final excerpt, Excerpt 3, is from the follow-up activity in Lesson 3, where the students reported on their results and discussed the findings in the context of the environmental consequences of OA. While conducting the experiment and reviewing the results, the students worked in groups of four, each group sharing an iPad. Throughout the group activities, the teacher circulated among the groups.

Activity 1: Conducting the experiment and exploring graphs

While the students prepared the experiment equipment and set up the SPARKvue lab, the teacher assigned one student in each group to read the instructions he had handed out before the experiment. Figure 2 provides an overview of the experiment instructions.

According to the instructions, the students were to produce two measurement curves: one representing the experiment measurement and one representing the control measurement. By blowing air through a straw into the cup of tap water, the students infused CO2 into the water, resulting in a gradual decrease in the water’s pH. Ideally, this should have produced a decreasing curve in the graph display. However, even though the students followed the detailed experiment instructions, their first measurement generated what appeared to be a flat, straight line. Puzzled by this unexpected result, most groups summoned the teacher for help, which kept him busy during the first part of the experiment, moving from group to group to attend to the challenges they encountered. The teacher soon realized that the default y-axis range in the SPARKvue setup was much larger than the range of the pH data being represented, which made the curve appear as a straight line, and that zooming in would reveal a decreasing line. Furthermore, the prescribed 15 seconds of blowing into the water did not seem sufficient for the added CO2 to produce a considerable change in pH, so the teacher instructed the students to blow for a longer period, thus producing an additional measurement curve.
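The scaling problem can be made concrete with a little arithmetic. The sketch below computes how much of the plot height a given pH change occupies for a given y-axis range. The 0.2-unit drop, the 300-pixel plot height and the zoomed window of 6.75 to 7.25 are hypothetical values chosen for illustration, not the students’ measurements, and the code makes no attempt to model how SPARKvue actually scales its axes.

```python
# Illustrative sketch only: all numbers are hypothetical, not the students'
# measurements, and SPARKvue's own axis scaling is not modelled.

def visible_fraction(change: float, y_min: float, y_max: float) -> float:
    """Fraction of the plot height spanned by a change of `change` units
    when the y-axis is drawn from y_min to y_max."""
    if y_max <= y_min:
        raise ValueError("y_max must be greater than y_min")
    return abs(change) / (y_max - y_min)


def pixel_height(change: float, y_min: float, y_max: float, plot_height_px: int) -> float:
    """On-screen height (in pixels) of the change for a plot of the given height."""
    return visible_fraction(change, y_min, y_max) * plot_height_px


PLOT_HEIGHT_PX = 300  # hypothetical plot height
PH_DROP = 0.2         # hypothetical pH decrease during blowing

# Default view: the whole pH scale (0 to 14) on the y-axis.
full_scale = pixel_height(PH_DROP, 0.0, 14.0, PLOT_HEIGHT_PX)
# Zoomed view: a 0.5-unit window around the readings.
zoomed = pixel_height(PH_DROP, 6.75, 7.25, PLOT_HEIGHT_PX)

print(f"full scale: {full_scale:.1f} px, zoomed: {zoomed:.1f} px")
# full scale: 4.3 px, zoomed: 120.0 px
```

Because the on-screen height of a change is inversely proportional to the y-axis range, narrowing the axis from the full 0–14 pH scale to a 0.5-unit window magnifies the same change 28-fold, which corresponds to the effect of the teacher’s zoom gesture in Excerpt 1.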

Excerpt 1 displays the challenges the students experienced during this first phase of the experiment and how the teacher, John, addressed them. Like most groups, Serena, Andy, Cory and Steven summoned the teacher when the graph line appeared flat in the graph display.

Excerpt 1 | ||

1. | Serena: | John? We can’t see (.) a difference ((showing the iPad to the teacher)) |

2. | Teacher: | Uhm you must- Don’t give up (.) Just continue blowing ((bending down facing the students at the end of their desks holding the iPad screen towards the students)) |

3. | Andy: | ((holding the straw)) Should I blow? |

4. | Teacher: | Yes just blow a little bit more |

((The students start another measurement with Andy blowing into the water, but the app seems to be lagging. When no curve appears in the graph while the second measurement is running, the teacher tells the students to stop, replace the water and start over again)) | ||

5. | Teacher: | But consider this ((pointing at the screen)) (2) Here you’ve got the whole pH scale ((pointing up and down along the y-axis)) |

6. | Serena: | Yes |

7. | Teacher: | It might be a good idea to zoom in a bit ((using his fingers to zoom in on the y-axis; see Fig. 3a and b)) |

8. | Cory: | Oh yes |

9. | Teacher: | Because if we zoom in we can actually see that something has happened |

10. | Serena: | Yes |

11. | Teacher: | It’s not completely straight ((indicating the curve)) (2.0) |

12. | Serena: | [Right] ((looking at the curve)) |

13. | Teacher: | [And I-] What do we see if we look from there and onwards? ((pointing at the last of the two peaks and sliding his finger along the decreasing curve; see Fig. 3c)) What do we see (.) actually? ((using his fingers to zoom further in)) |

14. | Andy: | It went up? ((referring to the peaks in the curve)) |

15. | Teacher: | If we look from there- ((pointing from the last peak and onwards)) It is kind of going down (.) Maybe these are your inhalations? ((looking from Andy to the screen while pointing at the last peak, and demonstrating inhaling)) |

16. | Andy: | Oh yes |

17. | Teacher: | And then you have started blowing again ((pointing from the last peak and along the decreasing curve)) (2) So what really happens when you start to add CO2? ((looking at all the students)) |

18. | Cory: | It rises again? |

19. | Serena: | No it decreases |

20. | Teacher: | ((nods at Serena)) Yes when you start to blow. So- |

21. | Andy: | Oh yes ((mumbling inaudible)) |

22. | Teacher: | ((looking at Andy)) So if we give it some more time- |

23. | Serena: | Oh there’s the green one ((pointing at the iPad, where the second measurement curve has finally turned up in the graph display)) |

24. | Teacher: | There it is (.) Wow perfect (2) ((scrolling down to make the new curve visible)) Now you can go ahead and take a look at that ((leaves the group)) |

In the opening of Excerpt 1, Serena summons the teacher and tells him that they cannot see a difference in the graph, and the teacher encourages the students to keep blowing (lines 1–4). He then demonstrates the zooming function on the touchscreen, which causes the apparently straight line to unfold as a slightly decreasing curve with two small, yet visible, peaks (lines 5–11). Further, the excerpt displays some of the challenges the students experience in interpreting and making sense of the graph. In line 13, the teacher prompts the students to explain what they see and tries to orient their attention towards the slight decrease in the graph by sliding his index finger from the last peak along the decreasing line (see Fig. 3c). Andy’s response, “It went up” (line 14), signals that his focus is on the peak rather than on the decrease outlined by the teacher. Trying to reorient the students’ attention, the teacher repeats his finger gesture while describing the decreasing pattern (line 15). He then turns their attention towards the peak and suggests that it might represent Andy’s inhalations (line 15). Although Andy accepts the teacher’s interpretation of the peaks (line 16), the teacher again prompts the students for an explanation, this time by asking, “So what really happens when you start to add CO2?” (line 17). Cory’s and Serena’s divergent replies indicate that they focus on different graph features: Cory on the peak (line 18) and Serena on the decrease (line 19). By looking at Serena and nodding, the teacher once more tries to draw the students’ attention towards what he considers the most salient and relevant graph feature: the decline (line 20). As the second measurement curve finally appears in the graph and the teacher leaves the group, the students are left with interpretive resources for mapping between curve features and measurement actions (lines 22–24).

The analysis of the interaction in Excerpt 1 shows some interesting aspects of how the students and the teacher made sense of the graph and its features. Firstly, the analysis displays challenges related to generic features in graphing; in this case, adjusting the scale on the y-axis to a proper range to disclose features of the graph. Secondly, the analysis displays that the teacher and the students initially did not attend to the same graph features; the teacher was clearly attending to the decrease, while the students seemed to be attending to the peaks. This suggests that seeing the curve pattern as a decreasing line is not a straightforward action, but rather a matter of perspective. Thirdly, the analysis displays the challenge and significance of linking the particular features of the graph, its peaks and decline, to the actions undertaken in the experiment.

Shifting the analytical perspective to how the teacher responded to the challenges encountered, the analysis shows that he provided support in the form of eliciting the students’ understanding of the graph. As can be seen in the second half of Excerpt 1, he enacted this support by directing the students’ attention towards salient features and prompting them to account for these. He used his gaze, pointing, and hand movements to indicate where the students should focus, and these cues served as crucial resources when he posed questions and elaborated on the students’ responses. The teacher also provided important support by modelling how to link the graph features to the practical undertakings of the experiment, both by prompting for and by providing accounts of what was done during the measurement to produce specific shapes in the curve.

Summing up, the analysed student–teacher interaction in Excerpt 1 illustrates typical challenges encountered in the initial phase of conducting the experiment. One aspect was the complex and dynamic nature of the graphing tool and the crucial role of scaling functions when exploring the graphs. A second aspect concerned making sense of the graph and the significance of establishing a shared perspective when attending to curve patterns. The analysis shows how the students and the teacher negotiated their orientations and how students needed a considerable amount of teacher support before they could attend to what the teacher emphasized to be the most important features. Furthermore, the analysis shows how the teacher balanced an eliciting strategy with providing relevant interpretations when supporting students in mapping between the curve features and the practical undertakings of the experiment. However, as we will see in the next excerpt, other conceptual challenges emerged once the experiment activity progressed into conducting comparative measurements and making sense of the final graphs.

Activity 2: Reviewing the results and comparing graph curves

Towards the end of Lesson 2, most groups had successfully conducted their measurements and were reviewing their final results. According to the original instructions, the students should have generated two measurement curves: one representing the experiment measurement and one representing the control measurement. In this phase, the students were to interpret the patterns displayed in their final graphs. To read the graph pattern as a difference between the experiment and control measurements, the students had to construe the experiment measurement curve as decreasing and the control measurement curve as a flat line. However, because of the technical problems and the adjusted length of the measurements, several groups ended up with three measurement curves in their final graph. As might be expected, small differences in the conditions of each measurement resulted in inconsistencies that were also visible in the graph. For instance, students sometimes performed the measurements without replacing the water in the experiment glass or without rinsing the probe before submerging it in the control glass. As a consequence, some groups ended up with control curves that did not appear as a flat line, or with three measurement curves, none of which overlapped. These issues gave rise to challenges when interpreting the results.

The following excerpt illustrates how the teacher and students attended to these issues in student–teacher interaction during this phase. The students were to compare the measurement curves they produced and report their conclusions and findings. We enter the interaction in Excerpt 2 where the teacher is summoned by the students Nina, Marcus, Rachel, and Thomas.

Excerpt 2 | ||

1. | Teacher: | ((leaning over, looking at the iPad; see Fig. 4.)) Well look at this! |

2. | Nina: | Uhm (.) We don’t really get this because the green one is when we just put it in the water |

3. | Teacher: | Yes that one is quite straight |

4. | Nina: | The red one ((referring to the middle line in Fig. 4b)) is when I was blowing (.) And I blew much harder than Thomas |

5. | Teacher: | Uhum (.) Yes ((leaning closer, resting his elbows on the desk)) |

6. | Nina: | And the red one ((referring to the upper line in Fig. 4b)) was when Thomas was blowing |

7. | Marcus: | Or longer |

8. | Nina: | Longer too |

9. | Teacher: | Yes but this is- ((scrolling slowly towards the end of the measurements)) Wow this looks really good (3) |

10. | Marcus: | But why did the green one ((referring to the lower line in Fig. 4b)) change? |

11. | Nina: | Yes we were wondering about that too |

12. | Teacher: | Yes what could have happened? (2) ((looking from the iPad, pointing at it with his hand, to Marcus)) What could’ve happened? |

13. | Marcus: | Moved it? |

14. | Teacher: | Yes it might just be that you have moved it- Made it go slightly above the water- ((gesturing moving the sensor above the water surface)) Slightly above the water ((looking at the students)) That might be the cause (.) of such small changes ((scrolling in the graph)) but I think that in total it looks quite straight (.) But I think it is- The graphs here- ((points at the first and second measurement curves, looks at Nina and Rachel)) What does this tell us? |

15. | Marcus: | That it becomes more acidic |

16. | Thomas: | First up a bit up and then it becomes more acidic |

17. | Nina: | [I was blowing harder] |

18. | Teacher: | [Yes and my guess is that] I guess that you started blowing here ((points at the graph at 20 seconds)) |

19. | Nina: | Oh (.) No I started blowing here ((scrolls left)) |

20. | Teacher: | Yeah maybe you took a breath |

21. | Nina: | Yes I did (.) I had to breathe a little bit ((laughs)) |

22. | Teacher: | Yes that might be related to that jump (2) Still it is quite- When we zoom in enough there ((pointing up and down the y-axis)) I think there is a considerable change here |

Excerpt 2 shows that the students were challenged in attending to the relevant differences in the graph patterns. In the opening of the excerpt, the students begin explaining the differences between the curves in terms of the practical undertakings of the lab experiment, emphasizing how Nina was blowing longer or harder than Thomas (lines 2, 4, and 6–8). The teacher confirms the students’ remarks but does not pursue or elaborate on them. Instead, he concludes that their result “looks really good” (line 9). Marcus then draws attention to the change within the control measurement curve (lower curve in Fig. 4b) and asks for an explanation (line 10). The teacher and the students settle on the explanation that moving the sensor slightly above the water is a probable cause (lines 12–14) before the teacher restates that the control curve “looks quite straight” and invokes the first and second measurement curves as a contrast (line 14). The interaction in the first part of the excerpt indicates a difference between the participants’ orientations: the students’ attention seems oriented towards irregularities in the control measurement curve and the differences between the first and second measurement curves, whereas the teacher emphasizes the overall trends of the curves and strives to foreground the differences between the control measurement curve and the other two curves.

Another challenge displayed in Excerpt 2 involves making sense of what the graphs represent. When prompted to explain what the graph shows, Marcus and Thomas immediately suggest that “it becomes more acidic” (lines 15–16). Nina, however, does not take up the teacher’s prompt and continues to focus on curve patterns and practical undertakings, stating “I was blowing harder” (line 17). Thus, Nina does not display her understanding of what the graph represents scientifically. Although the teacher’s response in line 18 can be seen as a confirmation of all the students’ suggestions, he chooses to follow up on Nina’s account of the relation between the graph and the practical undertakings of the experiment rather than on the underlying scientific principles. The students’ eagerness to display their understanding of the relation between curve patterns and practical undertakings can be seen in relation to the support the teacher provided in Excerpt 1, where he modelled mapping between graph features and practical undertakings as a means of interpreting the graph. However, although the interaction in Excerpt 2 demonstrates the students’ uptake of this strategy, it also shows the limits of its productivity: it pushed the students’ attention towards irregularities that are difficult to explain with reference to the practical undertakings of the experiment.

Shifting the focus towards how the teacher responded to the students’ challenges, the excerpt shows how he strove to guide their sensemaking in several ways. Firstly, he tried to orient their attention towards the salient features of the graphs. For instance, he tried to lead the students’ attention away from the details of single measurement lines, or the differences between the first and second measurements, towards the overall trends of the curves, thereby signalling which features were salient (lines 3, 14, and 22). Secondly, the teacher tried to enable the students to make sense of what the graph represents. This can be seen in his attempt to prompt them to account for what the graphs’ overall declining tendency might represent (line 14). However, as in Excerpt 1, the interaction in Excerpt 2 displays a challenge that teachers commonly face: balancing scientific perspectives and concerns with the students’ intuitive perspectives and concerns. In this setting, the teacher was the “expert” in interpreting graphs, and he knew the correct outcome of the experiment. He therefore had a clear sense of the graphs’ salient features and what they would represent. Nevertheless, to both address the students’ intuitive concerns and guide them in the intended direction, he strove to balance the scientific concerns with the students’ concerns.

Summing up, the analysis of the interaction in Excerpt 2 illustrates the challenges encountered in the phase where the students reviewed their experiment results. A central aspect concerned deciding which graph features were salient and attending to the relevant changes. The analysis shows how the students focused on interpreting the graph in terms of the practical undertakings of the experiment, and it highlights how the teacher balanced support for the students’ linking of the graph and measurement actions with orienting their attention towards the underlying scientific principles. In response to the concerns encountered during this last phase of the experiment, the teacher decided to organize a recapping activity in the following lesson to allow more time to develop a scientific perspective on the pH experiment. The final excerpt is from this follow-up activity, where the students processed and reported their results from the experiment.

Activity 3: Processing and contextualizing the experiment results

The following day, Lesson 3 centred on recapping and contextualizing the results from the pH experiment. In this activity, the students worked in dyads on four questions aimed at facilitating reflection on how to link the experiment to real-world issues such as increasing CO2 emissions, changes in ocean acidity and potential effects on calcifying organisms. The questions were displayed on the board during the whole activity, next to a picture of the graph that one of the groups had produced (see Fig. 5). The students discussed the four questions and formulated written responses, which they would later review and rewrite in their digital workbooks in the original groups of four.

While the students worked, the teacher circulated, helped students and monitored the groups’ progress. The teacher and students spent the most time on the second question: “What did we simulate? Can we connect what we did yesterday to a real challenge in nature?” (see Fig. 5). The question prompted students to reflect on the relation between the results of the experiment and the real-world phenomenon it was supposed to illustrate, as well as prompting a reflection about OA. However, students found it challenging to provide an account of these connections, not least because the teacher required them to produce a written account, something that demanded a high degree of verbal and scientific accuracy. The interaction in Excerpt 3 illustrates how student–teacher interactions addressed the challenge of making these connections. In the following setting, Steven and Andy have just started their work with the second question when they summon the teacher for guidance.

Excerpt 3 | ||

1 | Steven: | What did we stimulate? |

2 | Teacher: | What we simulated? |

3 | Steven: | Yeah |

4 | Teacher: | Well that actually means- We did an experiment in the classroom (.) But we did it because we wanted to demonstrate something in nature |

5 | Steven: | Yes but what did we demonstrate? ((laughing)) |

6 | Teacher: | What do you think the water represents? |

7 | Students: | The ocean |

8 | Teacher: | Right (.) And why did we blow into the straw? What was that supposed to illustrate? |

9 | Steven: | It- |

10 | Andy: | It is- (.) aciding of water ((referring to the term acidification)) |

11 | Teacher: | Yes that is what it actually is |

12 | Andy: | Aciding |

13 | Teacher: | And when we blow into the water (.) what are we actually doing? What are we inducing? |

14 | Students: | CO2 |

15 | Teacher: | Right ((pointing at Steven)) |

16 | Steven: | And then (.) watch what happens |

17 | Teacher: | And what has that got to do with the ocean? |

18 | Andy: | Well if it goes down (.) it might endanger calcifying organisms |

19 | Teacher: | Yes that’s right (.) That is number three there ((pointing at the board, referring to the third question: “What are the consequences for calcifying organisms living in the ocean when this happens?”)) But of course when the CO2 levels in the atmosphere increase- what happens to the CO2 level in the ocean? |

20 | Steven: | That- (.) The pH value goes down |

21 | Teacher: | Yes and the CO2 level in the ocean will also increase (.) Right so when we blow into the straw into the water we demonstrate what actually happens when the CO2 levels of the ocean increase (.) Are you with me on that? |

22 | Steven: | ((nods)) Uhum |

Excerpt 3 shows that the students were challenged by the task of contextualizing the actions undertaken during the experiment. The excerpt begins with Steven asking the teacher what the experiment demonstrated (lines 1–5). The teacher responds by posing a series of cued questions that prompt the students to account for the objects and actions that comprised the experiment and what they signified (lines 6, 8, 13, and 17). The subsequent interaction (lines 7–15 and 17–20) shows that the students successfully connect the central components of the experiment with their corresponding real-world phenomena when elicited by the teacher’s cued questions. The second part of the excerpt shows that relating the experiment to real-world issues is a more challenging aspect of accounting for it. This can be seen from line 17 onwards, where the teacher requests an account of the link between CO2 induction, acidification and the ocean, as identified by the students (lines 7, 10, 12, and 14). Andy’s response in line 18 (“if it goes down, it might endanger calcifying organisms”) suggests that he construes the experiment as a representation of the effects of acidification. However, his reply does not address changes in the CO2 level. The teacher’s follow-up question (line 19) specifically prompts an account of the relation between CO2 concentrations in the atmosphere and CO2 levels in the ocean. Steven’s response (line 20) again targets the decrease in pH, not the increase in CO2 level. In line 21, the teacher confirms and elaborates: “And the CO2 level in the ocean will also increase”.
The interaction in the last part of the excerpt suggests that the students, although demonstrating that they can link the experiment to the real-world issue of OA, bypass the crucial point that an increase in CO2 in the atmosphere leads to an increase in CO2 in the ocean. The teacher, conversely, strives to elicit an account of the role of CO2. Upon realizing that the students are not attending to the relationship between CO2 concentrations in the atmosphere and in the ocean, he explicates the relationship himself.
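The chain of reasoning the teacher was trying to elicit in lines 17–21 can be summarized with standard textbook carbonate chemistry. It is added here only as a reference for the reader; the transcripts do not show equations of this kind being used in the lesson. In nature the first step is exchange between the atmosphere and the ocean; in the classroom experiment, exhaled breath played the role of the CO2 source.

```latex
\begin{align}
\mathrm{CO_2(g)} &\rightleftharpoons \mathrm{CO_2(aq)} \\
\mathrm{CO_2(aq)} + \mathrm{H_2O} &\rightleftharpoons \mathrm{H_2CO_3} \rightleftharpoons \mathrm{H^+} + \mathrm{HCO_3^-} \\
\mathrm{pH} &= -\log_{10}[\mathrm{H^+}]
\end{align}
```

Read from top to bottom: more CO2 above the water shifts the first equilibrium to the right, more dissolved CO2 yields more H+, and because pH is a negative logarithm, more H+ means a lower pH (a tenfold increase in [H+] lowers the pH by one unit). The first step is the link the students bypassed in Excerpt 3. Strictly, pH is defined in terms of hydrogen-ion activity; using concentration is the usual approximation.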

Looking at how the teacher responded to the students’ challenges, Excerpt 3 illustrates how he supported them in contextualizing the experiment. Firstly, the teacher provided support by eliciting the students’ understanding of the experiment as a representation of real-world phenomena. From line 6 onwards, he prompted the students to link the central elements of the experiment (tap water, blowing through the straw and acidification of the water) with the corresponding real-world phenomenon, OA, thereby establishing the central connections needed to reason about the significance of the experiment. Secondly, the teacher tried to guide the students’ conceptual reasoning by confirming and elaborating on their accounts and by posing cued questions to help them connect and integrate prior contributions. This can be seen in line 17, where the teacher, probing for accounts of the changing CO2 levels in the ocean, challenged the students to connect the information they had provided in previous utterances. However, the following exchanges show that the guiding questions did not provide enough support for the students to attend to the change in CO2 levels, and the teacher eventually provided the targeted information himself in line 21.

Summing up, the analysis of the interaction in Excerpt 3 shows the challenges encountered when students were working to produce written accounts of what the experiment demonstrated. An important aspect concerned establishing connections between elements in the experiment and the real-world phenomenon of OA. Another challenging aspect was establishing an understanding of the role of CO2 in the pH experiment and the relation between CO2 and pH levels. Altogether, the analysis of the three excerpts from the different phases of the students’ engagement with the real-time lab and the graphical representations shows the crucial work of the teacher in eliciting students’ understanding through probing and cueing questions, guiding students’ reasoning, and targeting potential gaps in students’ conceptual understanding. In the following section, we discuss the main empirical findings of the analysis in relation to findings from previous studies.

Discussion

The aim of this study is twofold. On a general level, it aims to provide insight into the role of teacher support in naturalistic CSCL settings and to examine how teacher support intersects with other available support structures. On an empirical level, it aims to provide insight into productive and challenging aspects of students' engagement with graphical representations in real-time labs, as well as into the kind of support the teacher enacts in this particular type of CSCL setting. In the following sections, we discuss our central empirical findings in relation to previous research, as well as their potential implications for teacher support and instructional design in naturalistic CSCL settings.

In this article, we have chosen student–teacher interaction during small-group activities as our analytical access point for exploring the role and significance of support provided by a teacher. The main reason for this choice is that such interactions provide insight into the various challenges students might encounter during their work with real-time labs, as well as into how the teacher addresses these challenges. In a sense, this implies that we have intentionally been "looking for trouble" rather than focusing on the situations in which everything went smoothly. The focus on "trouble" does not, however, imply that the educational setting examined here was unproductive or unsuccessful.

Before turning our attention towards teacher support, we discuss our findings in relation to prior research on students' engagement with real-time labs. Several studies have shown that students' engagement with graphic representations in real-time labs can be productive in the sense of enhancing students' graphing skills and conceptual understanding of scientific phenomena (Friedler and McFarlane 1997; Linn et al. 1987; Mokros and Tinker 1987; Nicolaou et al. 2007), as well as supporting students' shared reasoning (Kelly and Crawford 1997; Lindwall and Ivarsson 2011; Nemirovsky et al. 1998). Despite these promising findings, studies have also documented more challenging aspects of students' engagement with graphical representations in real-time labs, such as coping with the high level of detail typical of graphs from real-time experiments, identifying the relevant graph patterns and interpreting trends in real-time graphs (Lindwall and Lymer 2008; Testa et al. 2002). The empirical findings of this study confirm and supplement findings from previous research. In particular, our study provides deeper insight into challenging aspects of interpreting graphical representations. For instance, the analyses of student–teacher interactions show that students' attention tended to be oriented towards less significant graph features, such as the peaks rather than the slopes (Excerpt 1), and insignificant differences within curves rather than the differences between the control curve and the other curves (Excerpt 2). This tendency made it difficult for the students to identify relevant shapes and patterns within the graphs. Another interpretational challenge demonstrated by our analyses relates to the students' difficulty in seeing the connection between the graphic representations and the practical undertakings of the experiment (Excerpts 1 and 2).

Another type of challenge displayed in our analyses of student–teacher interactions concerns the contextualization of the experiment. Prior studies of lab work and other experimental settings have documented that students often struggle to see the relation between experiments and their underlying scientific principles or corresponding real-world phenomena, a finding documented across different types of CSCL settings (Furberg 2016; van Joolingen et al. 2007). The present study yields similar findings in the context of engaging with graphic representations in real-time labs. Our analyses show that students found it challenging to link the declining pH curve produced during the experiment to the underlying scientific principles of pH change. Furthermore, the analysis reveals that the students struggled to link the experiment to the overall scientific phenomenon it represented: the issue of OA (Excerpt 3).

With these challenges as a backdrop, we turn our attention towards the support provided by the teacher. As seen in our review of prior research, several CSCL studies have demonstrated the significant role of procedural and conceptual support from the teacher in students' engagement in inquiry and lab work settings (Furberg 2016; Mäkitalo-Siegl et al. 2011). Concerning procedural support (i.e., guidance that aids students in regulating their work process), the initial analysis of student–teacher interactions during the lab work activities showed that relatively few of these interactions involved procedurally oriented issues. One possible reason is that the teacher provided a broad range of instructional materials, such as lab guideline sheets, descriptions of work distribution in the groups, an instructional video and reflection tasks. In addition, the teacher provided procedural instructions during whole-class sessions, demonstrating how to use the experiment equipment and explaining the guideline sheet. Taken together, these measures provided a considerable amount of procedural support, and additional procedural support was required mainly in the first phase of the lab experiment activities. In particular, the teacher needed to show students how to use the scaling functions to adjust the scales in the graph display (Excerpt 1). Thus, our study demonstrates both the productive aspects of scaffolding devices in the form of material resources and instructions provided upfront, and the significance of the teacher providing support that fills in the gaps in the instructional design.

Previous studies of students' engagement with lab experiments and representational resources in CSCL settings have also demonstrated the importance of conceptual support provided by teachers, that is, guidance that targets students' understanding of the scientific content and concepts (Arnseth and Krange 2016; Furberg 2016; Jornet and Roth 2015). The initial analyses of student–teacher interactions during the lab work activities show that most of the challenges expressed by the students concerned conceptually oriented issues. The microanalyses of the selected student–teacher interactions reveal that the teacher provided conceptual support through an eliciting strategy and an elaboration strategy. The eliciting strategy became evident on the representational level, where the teacher guided students' attention towards specific graph features and elicited their perceptions of these, and on an inter-representational level, where the teacher prompted student ideas about connections between the experiment and the graphic representation in order to develop a shared understanding of this relation. As displayed in Excerpts 1 and 2, eliciting at these levels was characterized by the use of discursive devices, such as prompts and cued questions, in conjunction with non-verbal devices, such as gestures, gaze and hand movements, to actively engage students in shared reasoning. In this sense, our analyses also demonstrate that the graphic representations served as productive shared resources through which students could make their interpretations, understandings and challenges visible and available to the teacher. Further, our analyses of interaction at the representational level demonstrate the significance of the teacher's deictic movements as a means to invoke disciplinarily relevant orientations towards the graphs.

On a contextual level, where the teacher guided students' reasoning about the relation between the experiment and real-world issues, the teacher's eliciting strategy consisted mainly of cued questions, reframing, and elaborating on student accounts. This is illustrated by Excerpt 3: because no visual representation of the lab experiment setup was available, the teacher resorted to discursive devices to elicit students' accounts of the links between the experiment and the corresponding scientific phenomena and concepts. Finally, our analyses also display instances across the three levels where the teacher departed from the eliciting strategy and used an elaboration strategy, meaning that he elaborated on students' inquiries or on his own guiding questions by providing the crucial information himself. Examples of this strategy can be seen in Excerpt 2, where the teacher provided a final interpretation of the graph pattern as demonstrating a change in pH, and in Excerpt 3, where the teacher elaborated on students' accounts of the experiment, highlighting the role of increased CO2 levels.

The shift in the teacher's guiding strategy aligns with findings from other CSCL studies demonstrating that a central concern for teachers in CSCL settings is to balance their efforts to help students draw on their own knowledge against taking a source position and providing crucial information (Strømme and Furberg 2015). The teacher's role in collaboration will always be influenced by the teacher's status as an expert and as the provider of the instructional design (Wells 1999). In the present study, as in almost any experimental science setting, the teacher knew the results of a correctly performed experiment as well as what to look for when interpreting the graphs. Conversely, the students were in an "inquiry mode" because they did not know the expected results of their experiment or which graph features were salient. The shift in the teacher's strategy can be seen in light of what Mortimer and Scott (2003) described as the need to balance a dialogic approach to students' contributions, in which exploring students' orientations and facilitating collaborative reasoning are central, with the need to provide authoritative support in the form of confirmations, validations and making explicit information that students had not attended to.

Returning to the notion of the ZPD, a central aim in CSCL is to design learning environments where peers, digital resources and instructional designs provide support that enables students to accomplish tasks they would not be able to solve alone (Pea 2004). However, several studies have demonstrated that even in well-designed CSCL settings, "gaps" between the technology design, peer collaboration and the surrounding instructional design do occur (Furberg 2016; Järvelä et al. 2016; Mäkitalo-Siegl et al. 2011; Strømme and Furberg 2015). These findings demonstrate the significance of additional support from the teacher in bridging these gaps to keep students in the ZPD. The present study provides further insight into the functions of teacher support in CSCL settings, demonstrating that the teacher has an important role to play both as an orchestrator who invokes and interweaves digital and social resources and as a guide who provides direction in students' collaborative reasoning.

Implications for instructional design

Regarding implications for teaching practice and instructional design in CSCL settings, and in real-time lab settings in particular, several points can be made on the basis of our findings. Firstly, the teacher in our study provided students with a considerable amount of procedural support prior to the lab experiment activities, both in the form of instructional materials designed to scaffold students' work during the lab experiment and through direct instructions during whole-class sessions upfront. Our study demonstrates the importance of designing scaffolding devices that provide structure for students' work. However, our analyses also show that students needed additional support in using the scaling function in the SPARKvue tool. Thus, we suggest that addressing the scaling function in instructional materials or in the teacher's demonstrations prior to the lab experiment activities might have benefited the students and freed up time for other issues in student–teacher interaction during the first phase of the experiment.

Regarding conceptual support, the findings of the present study demonstrate that the material and digital resources did not provide sufficient support for student groups to overcome conceptual difficulties without additional teacher support. Further, we found that conceptually oriented support was mainly provided through student–teacher interaction in group-work settings. In line with studies that have shown the potential role of whole-class sessions aimed at consolidating students' experiences from group-work settings (Jornet and Roth 2015; Mäkitalo-Siegl et al. 2011), we argue that whole-class discussions might also have been an alternative means of addressing some of the conceptually oriented issues that arose while conducting and reviewing the real-time experiment. We propose that whole-class dialogues could be organized either as pauses in group work at points where several student groups are struggling with similar issues, or as consolidation activities at the end of each activity, providing an opportunity to revisit and draw conclusions on central issues. Either way, invoking the contributions of several student groups might allow different student orientations to become resources in collaborative reasoning, which in turn might produce productive interactions and reduce the need to shift from an eliciting to an elaboration strategy.