Abstract

Answer set programming (ASP) is a knowledge representation and reasoning paradigm with high-level expressive logic-based formalism, and efficient solvers; it is applied to solve hard problems in various domains, such as systems biology, wire routing, and space shuttle control. In this paper, we present an application of ASP to housekeeping robotics. We show how the following problems are addressed using computational methods/tools of ASP: (1) embedding commonsense knowledge automatically extracted from the commonsense knowledge base ConceptNet, into high-level representation, and (2) embedding (continuous) geometric reasoning and temporal reasoning about durations of actions, into (discrete) high-level reasoning. We introduce a planning and monitoring algorithm for safe execution of plans, so that robots can recover from plan failures due to collision with movable objects whose presence and location are not known in advance or due to heavy objects that cannot be lifted alone. Some of the recoveries require collaboration of robots. We illustrate the applicability of ASP on several housekeeping robotics problems, and report on the computational efficiency in terms of CPU time and memory.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Answer set programming (ASP) [3, 18] is a knowledge representation and reasoning paradigm with high-level expressive logic-based formalism, and efficient solvers (e.g., iClingo [13]). Due to these advantages, it has been applied in many domains, such as systems biology [12, 26], biomedical informatics [7], wire routing [9], space shuttle control [22], etc. The idea is to formally represent the computational problems (e.g., actions of robots and planning problems) in ASP, and use the state-of-the-art ASP solvers to compute models of the formulation that characterize the solutions (e.g., discrete plans) of the given problem. In this paper, we illustrate how computational methods/tools of ASP can be used effectively for the housekeeping robotics problem (i.e., multiple autonomous robots cleaning a house collaboratively).

Advantages of using ASP for representing and reasoning about housekeeping robotics domains can be summarized along with the main contributions of this paper as follows. First, one of the challenges of housekeeping robotics domain is the necessity of commonsense knowledge for the robots to behave intelligently tidying a house. For instance, in a tidy house, books are in the bookcase, dirty dishes are in the dishwasher, pillows are in the closet; some objects, like glasses, are fragile, whereas some others, like pillows are not. Using ASP computational methods, we can automatically extract such commonsense knowledge from the commonsense knowledge base ConceptNet [19], and embed it in the high-level representation of robots’ actions in ASP.

Second, in the spirit of [8], we embed continuous geometric reasoning (e.g., to avoid robot–robot, robot–stationary object and robot–moveable object collisions) also in the high-level discrete representation of robots’ actions in ASP. In this way, not only the ASP solver guides the motion planner by providing a discrete task plan that satisfies some given complex temporal goals (e.g., some actions like carrying heavy objects should be postponed to later steps in a plan) or by conveying information as to how some actions should be executed (e.g., a fragile object should be carried slowly), but also, in return, the motion planner guides the ASP solver by providing information about the configurations that would lead to collisions; so that the ASP solver can find feasible plans. Also, for multiple autonomous robots to complete cleaning a house by a given time, durations of actions should be taken into account. However, this brings about further challenges. Since the robots can help each other, when a help request is received, the robot should be able to autonomously decide whether she has enough time to complete her tasks and help the other robot by the given deadline. Since it takes time for robots to change locations so that they can help each other, transportation delay (depending on the length of the continuous trajectory) should also be considered. To address these challenges, we embed temporal reasoning about durations of actions into high-level representation and reasoning.

Once every robot finds a discrete plan using the ASP solver iClingo, the robots start executing these plans. However, a plan execution may fail, for instance, due to collisions with movable objects whose presence and location are not known in advance or due to heavy objects that cannot be lifted alone. Also, a failure may cause a delay and put the given time constraints at stake. In such a case, a robot may need assistance of another robot to meet its deadline. We introduce a planning and monitoring algorithm, so that when such incidents occur, robots can identify the cause of the failure and act/collaborate accordingly.

The paper is organized as follows: Sect. 2 gives a summary of the related works, and Sect. 3 provides an overview of ASP, while a sample housekeeping domain is represented using ASP in Sect. 4. Sections 5 and 6 detail embedding of background/commonsense knowledge bases and geometric/temporal reasoning into high-level ASP representation, respectively. Section 7 discusses solution of hybrid planning problems using ASP, while execution and monitoring of the computed hybrid plans are addressed in Sect. 9. Section 10 concludes the paper.

2 Related work

Another line of work that studies housekeeping robotics using a formal knowledge representation and reasoning framework is presented in [1, 2]. Different from our ASP-based approach to housekeeping robotics, Aker et al. represent the robots’ actions and planning problems in the high-level action description language \(\mathcal{C}+\) [15] and solve the task planning problems using the causal reasoner CCalc [21]. Although Aker et al. discuss embedding commonsense knowledge in high-level representation of housekeeping domain, the automatic extraction of such knowledge from a commonsense knowledge base, like ConceptNet, is not discussed. Aker et al. assume that actions are instantaneous, whereas we consider a more involved housekeeping domain where durations of actions (and temporal constraints on the total duration of plans) are taken into account.

In addition to these differences, there are also variations between these two approaches based on the difference between the two formalisms and the related automated reasoners. ASP allows some useful constructs that make it easier to formalize some concepts: aggregates (e.g., to find the maximum of the durations of concurrently executed actions), cardinality constraints (e.g., to specify that a heavy object can be carried by at least 2 robots), defaults and exceptions (e.g., to specify that, in an untidy house, the movable objects are not normally at their desired locations). Furthermore, ASP allows us to solve optimization problems by means of maximize/minimize constructs (e.g., to minimize the total cost of actions). In addition to such advantages of ASP formalism, ASP solvers also have some advantages. For instance, the ASP solver iClingo computes an answer to a planning/prediction problem incrementally, and thus uses less memory compared to the causal reasoner CCalc. We observe these advantages of ASP also in the experiments that we performed over some housekeeping problems instances. On the other hand, for the housekeeping robotics domain, we observe that the computation times are comparable; in that sense, our ASP-based approach can be viewed as complementary to Aker et al.’s approach.

There is another line of work that utilizes the action description language \(\mathcal{C}+\) and the causal reasoner CCalc, but in a different robotic application: robotic manipulation [8]. This work is similar to Aker et al.’s work in that they formalize the domain in \(\mathcal{C}+\) and solve task planning problems using CCalc. Similar to our ASP-based approach to housekeeping robotics, all these related works [1, 2, 8] embed geometric reasoning in the high-level representation of domains, to be able to find feasible task plans; they can also include temporal constraints in the high-level descriptions of planning problems. Though, our ASP-based approach to embedding temporal reasoning in high-level representation and reasoning is more general in that we consider durative actions. In particular, we can estimate durations of actions (e.g., going from one location to another) by utilizing geometric reasoning (e.g., based on the length of the continues trajectory computed by a motion planner), and use these estimates to decide for the feasibility of a task plan with respect to the given time constraints.

Among these related works that utilize the high-level action description language \(\mathcal{C}+\) and the causal reasoner CCalc, a planning and monitoring algorithm is presented in [1]. Our planning and monitoring algorithm is more general in that it considers durations of actions, and delays due to failures.

3 Answer set programming

The idea of ASP is to represent knowledge (e.g., actions of multiple robots) as a “program” and to reason about the knowledge (e.g., find a plan of robots’ actions) by computing models, called “answer sets” [14], of the program using “ASP solvers” like iClingo.

An (ASP) program is a finite set of rules of the form

where \(m \ge k \ge 0, L_0\) is a literal (a propositional atom \(p\) or its negation \(\lnot p\)) or \(\bot \), and each \(L_i\,(i>0)\) is a literal. In such a rule, \(L_0\) is called the head and \(L_1, \ldots , L_k, { not}\ L_{k+1}, \ldots , { not}\ L_{m}\) is called the body of the rule. If the head of a rule is \(\bot \), then the rule is called a constraint; and \(\bot \) is simply dropped from the head. Note the two sorts of negation: classical negation \(\lnot \) as in classical logic, and default negation \({ not}\). Intuitively, \(\lnot p\) means that “it is known that \(p\) is not true” whereas \({ not}\ p\) means that “it is not known that \(p\) is true”. Default negation is useful in expressing default values of atoms. For instance, \(closed \leftarrow { not}\ \lnot closed\) expresses that a door is closed by default (i.e., unless it is not known that it is open).

Intuitively, an “answer set” for an ASP program is a “minimal” (consistent) set of literals that satisfies the program. If an atom \(p\) is included in an answer set, then \(p\) is known to be “true”. If \(\lnot p\) is included in an answer set, then \(p\) is known to be “false”. Otherwise, neither \(p\) nor \(\lnot p\) is in an answer set, \(p\) is unknown. Due to its semantics, ASP is different from Prolog. For a precise definition of an answer set, we refer the reader to [14].

When we represent a problem in ASP, we use special constructs of the form \(l \{L_1,\ldots ,L_k\} u\) (called cardinality expressions) where each \(L_i\) is a literal and \(l\) and \(u\) are nonnegative integers denoting the “lower bound” and the “upper bound” [24]. Programs using these constructs can be viewed as abbreviations for normal programs [10]. Such an expression describes the subsets of the set \(\{L_1,\ldots ,L_k\}\) whose cardinalities are at least \(l\) and at most \(u\). Such expressions when used in heads of rules generate many answer sets whose cardinality is at least \(l\) and at most \(u\), and when used in constraints eliminate some answer sets.

A group of rules that follow a pattern can be often described in a compact way using “schematic variables”. For instance, we can write the program

as follows

The auxiliary predicate \({ index}(i)\) is introduced to describe the ranges of variables. ASP solvers compute an answer set for a given program that contains variables, after “grounding” the program. The “definitions” of such auxiliary predicates tell the ASP solver how to substitute specific values for variables in schematic expressions. Variables can be also used “locally” to describe the list of formulas. For instance, the rule \(1\{p_1,\ldots ,p_7\}1\) can be represented as follows: \(1\{p(i):{ index}(i)\}1.\)

4 Representing housekeeping domain in ASP

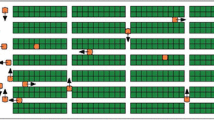

Consider a house consisting of three rooms: a bedroom, a living room and a kitchen as shown in Fig. 1. There are three cleaning robots in the house. The furniture is stationary and their locations are known to the robots a priori. Other objects are movable. There are three types of movable objects: books (yellow pentagon shaped objects), pillows (red triangular objects) and dishes (blue circular objects). The locations of the movable objects are not known to the robots a priori. The goal is for the cleaning robots to tidy the house collaboratively in a given amount of time.

We represent the housekeeping domain in ASP and then solve planning problems using the ASP solver iClingo. In the following, we describe how the housekeeping domain is represented in ASP.

4.1 Fluents and actions

To represent the robots’ actions and the change in housekeeping domain, we think of the world as being in one of many states. To be able to describe the states of the world, we need to introduce fluents (atoms whose truth values depend on time).

We view the house as a grid; so the robots and the endpoints of objects are located at grid-points. Here are some of the fluents introduced for housekeeping domain: \(at(th,x,y,t)\) (“thing \(th\) is at \((x,y)\) at time step \(t\)”), \(connected(r,ep,t)\) (“robot \(r\) is connected to endpoint \(ep\) of an object at time step \(t\)”).

We introduce the following elementary actions to represent robots’ actions: \(goto(r,x,y,t)\) (“robot \(r\) goes to \((x,y)\) at time step \(t\)”), \(detach(r,t)\) (“robot \(r\) detaches from the object it is connected to, at time step \(t\)”), and \(attach(r,t)\) with an attribute \(attach\_point(r,ep,t)\)(“robot \(r\) attaches to an object at its endpoint \(ep\) at time step \(t\)”).

4.2 Direct effects of actions

We describe the direct effects of the actions above by ASP rules. For instance, the following rule expresses that the direct effect of the action of a robot \(r\) going to a location \((x,y)\):

Similarly, we can describe the direct effects of the action of a robot \(r\) detaching from the endpoints of an object it is connected to:

and the direct effect of the action of a robot \(r\) attaching to an endpoint of an object:

4.3 Preconditions of actions

We describe preconditions of actions using constraints. For instance, at any time step \(t\), we can describe that a robot \(r\) cannot go to a location \((x,y)\) if the robot is already at that location, as the following:

To describe that a robot \(r\) cannot go to a location \((x,y)\) if that location is already occupied by a stationary object, we need to know in advance the locations of stationary objects in the house. Such knowledge does not change in time, and is independent from the rest of the domain representation. Therefore, we represent it as a part of the “background knowledge” using atoms of the form \(occupied(x,y)\), and make use of it as follows:

4.4 Ramifications

If the robot is holding an object, then the location of the object changes as the location of the robot changes. In that sense, the change of the location of the object is a ramification of the robot’s action. ASP allows us to represent this ramification as follows:

4.5 Constraints

ASP allows us to represent constraints on the states of the world. For instance, the following constraint expresses that, at any time step \(t\), two objects \(ep\) and \(ep1\) do not reside at the same location by the constraint

According to the formulation above, actions can be executed concurrently. To ensure that some actions cannot be executed concurrently, we make use of constraints as well. For instance, the following rules express that a robot cannot attach to an object while moving to a location:

5 Embedding commonsense knowledge in planning

In the housekeeping domain, the robots need to know that books are expected to be in the bookcase, dirty dishes in the dishwasher, and pillows in the closet. Moreover, a bookcase is expected to be in the living-room, dishwasher in the kitchen, and the closet in the bedroom. In addition, the robots should have an understanding of a tidy house to be able to clean a house autonomously: tidying a house means that the objects are at their desired locations. Also, while cleaning a house, robots should pay more attention while carrying fragile objects; for that they should have an understanding of what a fragile object is. Such commonsense knowledge is formally represented already in commonsense knowledge bases, such as ConceptNet.

ASP allows us to extract and embed commonsense knowledge from these knowledge bases by means of “external predicates.” External predicates are not part of the signature of the domain description (i.e., they are not declared as fluents or actions). They are implemented as functions in some programming language of the user’s choice, such as C++ or Prolog. External predicates take as input not only some parameters from the domain description (e.g., the locations of robots) but also detailed information that is not a part of the action domain description (e.g., commonsense knowledge). They are used to externally check some conditions.

5.1 Expected locations of objects

We represent the expected locations of objects by a new fluent \(at\_desired\_location(ep,t)\) describing that an object \(ep\) is at its expected position in the house at time step \(t\). Unlike the fluents above, we can define \(at\_desired\_location(ep,t)\) in terms of the others (much like a “derived predicate”):

The second rule expresses that normally the movable objects in an untidy house are not at their desired locations. The first rule formalizes that the object \(ep\) is at its desired location if it is at some “appropriate” position \((x,y)\) in the right room. Here \(in\_place\) is not a fluent, but an external predicate; it is defined externally in Prolog as follows:

Here belongs(EP,OBJ), type_of(OBJ,Type) describe the type Type of an object Obj that the endpoint EP belongs to, and el(Type,Room) describes the expected room of an object of type Type. The rest of the body of the rule above checks that the endpoint’s location (X,Y) is a desired part of the room Room.

5.2 Acquiring commonsense knowledge

In order to acquire the knowledge of expected rooms of objects, which is represented by el(Type,Room), we make use of existing commonsense knowledge bases. Specifically, we use ConceptNet.

Using Python API of ConceptNet 4.0, we can easily query which objects are likely to be located at a specific room. The network provides a well-suited relation for our purpose, called “At Location”. As the name itself suggests, it is a relation denoting the locations of objects.

For instance, we query the objects which are likely to be located in the bedroom, and automatically generate a list of facts as follows:

Here atLocation represents the “At Location” relation, and bedroom is representing the “Bedroom” concept. The query outcome denoted by result is a set of assertions. An assertion in ConceptNet is simply an object which includes two related concepts, and a relation connecting these two concepts. Assertions also have some intrinsic properties like the language of the assertion, and the frequency in which the given two concepts are related to each other by the given relation. “Score” is one of these intrinsic values which denotes the reliability of an assertion. In order to eliminate unreliable assertions, we filter out the ones with a score less than the value of threshold. The value of threshold is determined empirically, and is equal to \(5\) for this case.

After obtaining the query result, we simply represent it in Prolog using atoms of the form el(Type,Room). Here are some assertions about what is expected in the bedroom:

We could have obtained a much longer list of facts if the threshold had been set to a lower value; but then we would have jeopardized the integrity of the facts. ConceptNet is generated automatically using the data gathered collaboratively by Open Mind Common Sense Project, and as a result, contains some unreliable knowledge. Still, eliminating the unreliability is possible as described above, with the help of the easy-to-use API of ConceptNet.

Note that the expected location of an object depends on where it is: for instance, the expected location of a book on the floor of the kitchen is the exchange area between the kitchen and the living room (so that the robot whose goal is to tidy the living room can pick it up and put it in the bookcase); on the other hand, the expected location of a plate on the floor of the kitchen is the dishwasher. Therefore, the predicate area(Room,Xmin,Xmax,Ymin,Ymax) describes either an exchange area (if the object does not belong to the room where it is at) or a deposit area (if the object belongs to the room where it is at). Note also that knowledge about specific objects in a room as well as specific deposit and exchange areas are not common knowledge to all robots; each robot has a different knowledge of objects in their room.

5.3 Tidy house

Once we define the expected locations of objects in a house as described above, we can introduce another fluent \(tidy\) to define a tidy house:

The second rule above expresses that the house is normally tidy. The first rule above describes the exceptions: when an object is not at its expected location, the house is untidy.

5.4 Other sorts of commonsense knowledge

Our method of embedding commonsense knowledge is general enough to embed all sorts of commonsense knowledge. For instance, we can extract from the commonsense knowledge base ConceptNet which types of objects have property of being fragile and define an external predicate to specify the endpoints of fragile objects:

and then describe that fragile objects should be handled more carefully by adding an “attribute” of the action of attaching to an object:

5.5 Exceptions to commonsense knowledge

There may be some exceptions to the commonsense knowledge extracted from the commonsense knowledge bases. For instance, books normally are expected to be in the living room; however, cookbooks are exceptions (since they are expected to be in the kitchen). Such exceptions can be represented in ASP in a natural way, since its formalism allows representation of “defaults”. To be able to handle such exceptions about the locations of objects, we need to replace the rule

with the rule

and say that cookbooks are exceptions:

6 Embedding geometric reasoning and temporal reasoning in planning

We can embed geometric reasoning in causal reasoning by making use of external predicates as well. For instance, suppose that the external predicate \(path\_exists(x,y,x1,y1)\) is implemented in C++ utilizing Rapidly exploring Random Trees (RRTs) [16]; so it returns 1 if there is a collision-free path between \((x,y)\) and \((x1,y1)\), and it returns 0 if there is no collision-free path between \((x,y)\) and \((x1,y1)\). Then we can express that the robot \(r\) cannot go from \((x1,y1)\) to \((x,y)\) where \(path\_exists(x,y,x1,y1)\) does not hold, by the following constraint:

For multiple autonomous robots to complete cleaning a house by a given time, durations of actions should also be taken into account. Since the robots can help each other, when a help request is received, the robot should be able to autonomously decide whether she has enough time to complete her tasks and help the other robots by the given deadline. Since changing locations to be able to help each other takes some time, transportation delay (depending on the length of the continuous trajectory) should also be taken into account.

To represent duration of actions, we introduce another fluent \(robot\_time(r,d,t)\) (“duration of the action performed by robot \(r\) at time step \(t\) is \(d\)”). Then we define effects of actions on these fluents accordingly. For instance, the rule below expresses that the action of a robot \(r\) attaching to an object takes 1 unit of time:

Every attach performed by the robots is nearly identical; therefore, it is reliable to assign a constant value for the duration of this action. But the idea may not be applicable to other actions, such as changing the location. Since the path traveled by the robot is the main factor contributing to the duration of a movement of the robot, we tried to obtain an estimate by taking the length of the path into account using the following rule:

Here \(time\_estimate(x1,y1,x2,y2)\) is an external predicate implemented in C++. It calls a motion planner to find a trajectory from an initial position \((x1,y1)\) to a final position \((x2,y2)\), and then returns a duration estimate between 1 and 4, based on the total length of this trajectory. Therefore, the rule expresses that if the robot \(r\) goes from one point to another, the execution time is proportional to the length of the path it follows.

There is no obligation that every robot should perform an action at every state. Therefore, we define the default value for the duration of an action to be 0:

While \(robot\_time(r,d,t)\) estimates the duration of a single action, we also need a fluent to keep track of the total amount of time starting from the initial state. For that we introduce a fluent \(elapsed\_time(d,t)\) (“it takes duration \(d\) to reach time step \(t\)”), and define it essentially by accumulating the durations of each action.

7 Hybrid planning using ASP

Once the housekeeping domain is represented, and both the background/commonsense knowledge and geometric/temporal reasoning are embedded in the high-level representation, we can solve planning problems using ASP.

Since geometric reasoning and temporal reasoning (via a motion planner) are embedded in the computation, the calculated plans can be seen as hybrid plans integrating discrete ASP planning and continuous motion planning. Hybrid plans help computation of plans that are geometrically feasible as well as temporally feasible. Let us show these two advantages of hybrid planning with examples.

Consider Scenario 1, depicted in Fig. 2, in which one of the nightstands together with the bed forms a narrow passage possibly blocking the robot to reach the north part of the bedroom where the red pillow is located. Note that this kind of geometric feasibility checks can only be performed using full geometric models of the robot and the room at a continuous level, and cannot be abstracted at the grid level without loosing completeness. Therefore, without geometric reasoning, naive task planning may lead to infeasible plans.

Indeed, at the grid-level, preconditions prohibit the robot to go to the locations occupied by the robot itself and occupied by the stationary objects; so, in Scenario 1, the robot can go to where the red pillow is, and given the following planning problem

naive task planning using iClingo without geometric feasibility checks computes the following geometrically infeasible plan.

However, our hybrid planning approach identifies that such a planning problem is infeasible (i.e., iClingo returns that no solution exists) thanks to the geometric reasoning embedded into high-level representation.

The second example shows the usefulness of embedding temporal reasoning in planning, by means of estimating the durations of actions based on the length of paths (computed by motion planners), to identify infeasible plans due to temporal constraints. Consider Scenario 2, described by the following planning problem

We compute two shortest plans (of length 16) for this problem using iClingo: one without any temporal constraints, and one with the temporal constraint where the total duration of the plan is limited to 25 units of time. For the latter problem we add the following line to the planning problem description above:

Table 1 presents these two plans. Note that, even though both plans last 16 time steps at the discrete level, the plan computed with the duration estimation obeys the temporal constraint of 25 time units, while the plan computed without duration estimation lasts for 26 time units, violating the temporal constraint. Indeed, according to the continuous trajectories computed for each “go” action, the total length of the trajectory for the plan computed without temporal constraints is 55 units, whereas the total length of the trajectory for the plan computed with temporal constraints is 51 units; assuming a constant velocity of 3 units leads to duration estimates of 26 and 25 units, respectively.

Note that the hybrid planning approach can also be extended to allow for collaborative actions of multiple housekeeping robots [1, 2]. In particular, consider Scenario 3, a variation of Scenario 2 where there exists a second robot that is available to help Robot 2 in the kitchen. A collaborative plan for these two robots calculated by iClingo is presented in Table 2. Note that the collaboration of robots decrease the total plan duration to 14 time units.

8 Experimental evaluation

To compare the computational efficiency (in terms of program size, CPU time and memory consumption) of our ASP-based approach to housekeeping, with the \(\mathcal{C}+\)-based approach of [1, 2], we have experimented with 14 different problem instances in housekeeping domain. We have used iClingo Version 3.0.2, CCalc Version 2.0 with the SAT solver miniSAT [6] Version 2.2. Experiments are performed at a workstation with two 1.60 GHz Intel Xeon E5310 Quad-Core Processor and 6 GB RAM.

Since the initial states are completely specified in these planning problems, we could make several simplifications in the ASP formulation. For instance, ensuring that the location of every thing \(th\) (i.e., every robot and every object) is specified by adding the rule

\(\leftarrow \{at(th,i,j,t) : x\_coord(i) : y\_coord(j) \} 0,\)

we have dropped the rule defining \(\lnot at\_desired\_location\) in Sect. 5.

For each problem, Table 3 presents its description (in terms of the number of robots, the number of movable objects, the length of a shortest plan) and the program size (in terms of the number of atoms and the number of clauses for CCalc, and in terms of the number of atoms and the number of rules for iClingo). The table also presents the computation times (in terms of CPU seconds), and the memory consumption (in MB).

For instance, Problem 2 is a planning problem where one robot is expected to tidy a room with two movable objects. Once this problem is given to CCalc, for plan length \(k=0,1,2,...\), CCalc prepares the input for miniSAT, and calls miniSAT to find a plan of length \(k\), until it reaches the shortest plan length \(k=8\). The size of the planning problem for \(k=8\) can be described in terms of the size of the formulation given as input to miniSAT: a set of 287,390 clauses over 37,450 atoms. The whole process of CCalc’s computing a shortest plan takes 98.64 CPU seconds, and consumes 2,703 MB of memory.

Similarly, once Problem 2 is given to iClingo, for plan length \(k=0,1,2,\ldots \), iClingo prepares the input for clasp, and calls clasp to find a plan of length \(k\), until it reaches the shortest plan length \(k=8\); though the computation is done incrementally as the value of \(k\) increases. The size of the planning problem for \(k=8\) can be described in terms of the size of the formulation given as input to clasp: a set of 156,468 rules over 3,983 atoms. The whole process of iClingo’s computing a shortest plan takes 93.04 CPU seconds, and consumes 140 MB of memory.

According to the experimental results presented in Table 3, in many cases, the timings are comparable between iClingo and CCalc, and proportional to program sizes (except for Problems 10 and 13). We have also observed that CCalc subsumes much more memory than iClingo does. This is most probably due to the incremental computation of a plan by iClingo. On the other hand, note that iClingo also consumes more memory as the program size gets larger (e.g., for Problems 13 and 14).

It is important to note here that, to find a shortest plan of length \(k\), both iClingo and CCalc solve \(k+1\) planning problems with plan lengths ranging from \(0\) to \(k\); and classical planning is NP-hard for plans of polynomially bounded length [4]. In that sense, the increase in computation times and memory consumption in proportion with the increase in problem/program size is not surprising.

9 Execution and monitoring of hybrid plans

Suppose that, once each robot obtains the current state of the world from the sensor information, she autonomously finds a hybrid plan to achieve her tasks in a given time, using the ASP-based hybrid planning approach as presented in Sect. 7. Then each robot starts executing her plan. However, a plan execution may fail, for instance, due to the unpredictable interference of a human. A human may relocate an object which is to be carried by robot, or bring a new movable object to the room. Since the robots are unaware of these dynamic changes, they may collide with these obstacles. Furthermore, while most of the moveable objects are carried with only one robot, some of these objects are heavy and their manipulation requires two robots. The robots do not know in advance which objects are heavy, but discover a heavy object only when they attempt to move it. Also, a failure may cause a delay and put the time constraint at stake. In such a case, a robot may need assistance of another robot to meet its deadline. When such incidents occur, robots can identify the cause of the failure and act accordingly, e.g., according to the planning and monitoring algorithm illustrated by the flowchart in Fig. 3.

In particular, for each cleaning robot, a plan is computed and executed according to Algorithm 1 (execute&monitor). First, each robot obtains the current state \(s\) of the world from the sensor information. After that, each robot computes a plan \(\mathcal P\) of length less than or equal to a given nonnegative integer \(k\), from the observed state \(s_0\) to a goal state (that satisfies the given goal \(g\) which includes a time constraint \(tc\)) in a world described by a given action domain description \(\mathcal D\). Plans are computed using iClingo as described in Sect. 7, by the function findP. Once a plan \(\mathcal P\) is computed, each robot starts executing it according to Algorithm 8 (execute). If a plan execution fails or is interrupted, the relevant robot can identify the cause of the failure/interrupt and act accordingly.

-

When a robot collides with an unknown movable object while following its planned trajectory for a transition \(\langle s,A,s^{\prime } \rangle \) (i.e., the cause of the failure is UNKNOWN-OBJECT), Algorithm 2 (caseUnknownObject) is invoked. In particular, the robot tries to calculate a new, collision-free trajectory \(\pi \) to reach the next state \(s^{\prime }\) from its current configuration. Such a trajectory \(\pi \) is computed by the function findT, which implements a motion planner based on RRTs. If no such trajectory is calculated by the motion planer, the robot goes to a safe state \(s\) (possibly the previous state) and asks iClingo to find a new plan \(\mathcal P\) to reach the goal from \(s\) taking into account the recently discovered moveable objects.

-

When a plan fails because a robot attempts to carry an object which is relocated by another agent (i.e., the cause of the failure is OBJECT-NOT-FOUND), then following Algorithm 3 (caseObjectNotFound), the robot observes the world and asks iClingo to find a new plan \(\mathcal P\) to reach the goal from the current state \(s\) taking into account the recently relocated moveable objects.

-

When a plan fails because a robot attempts to manipulate a heavy object (i.e., the cause of the failure is HEAVY-OBJECT), the robot has to ask for assistance from other robots so that the heavy object can be carried to its destination [see Algorithm 4 (caseHeavyObject)]. However, in order not to disturb the other robots while they are occupied with their own responsibilities, the call for help is delayed as much as possible. With the observation that the manipulation of the heavy object takes 4 steps (get to the heavy object, attach to it, carry it, detach from it), this is accomplished by asking iClingo to find a new plan \(\mathcal P\) that manipulates the heavy object within the last \(i=4,5,6,...\) steps of the plan only. Once such a plan is computed, the robot single-handedly follows the first part of the plan without asking for any help.

-

As the execution continues, the robot eventually encounters a concurrent action involving two robots (i.e., the cause of the interrupt is CALL-ROBOT). At this point, the execution is paused and the robot notifies the other robots that it needs help [see Algorithm 5 (caseCallRobot)], and gives them a time estimate for how long the helping process will take by looking at the plan \(\mathcal P\).

-

Once the other robots’ plan execution is interrupted by such a request (i.e., the cause of the interrupt is HELP-REQUESTED), they accept or reject the request of help-seeking robot by taking their own deadlines into account, and their possible behaviors can be described as follows [see Algorithms 6 (caseHelpRequested) and 7 (caseHelpOffered)]:

-

If one of the robots decides that it can assist the help-seeking robot without violating its own time constraint by, it replies back to the help request affirmatively, pauses the execution of its own plan, goes to the entrance of the other room, and waits for the commands of the help-seeking robot. After they execute the collaborative part of the plan, the helper robot goes back its room, exactly to the location where it was interrupted in the first place, and continues the execution of its own plan.

-

If all of the robots reject the help request due to the fact that they cannot meet their deadlines otherwise, then help-seeking robot offers help to the other robots. The idea is that by offering help, the help-seeking robot may save some time to another robot. Then, the help-seeking robot can ask for help one more time, and this second attempt is much more likely to be successful.

-

-

If a robot’s plan execution is interrupted by such a help offer (i.e., the cause of the interrupt is HELP-OFFERED), then the robot accepts the offered help if that would save the robot some time.

Message passing for asking for help, offering help, helping other robots and listening to messages are detailed in Algorithms 10 (askforHelp)–13 (listen).

Note that if a human brings some objects into the room, and these objects are not in collision course with the robots, they cannot be discovered until the robot observes the world. Therefore, in Algorithm 1 (execute&monitor), after the execution of the plans, a final observation instance is triggered comparing current state of the room with its goal state. If there are any discrepancies, then the robots ask for new plans to reach the goal.

Plans are executed according to Algorithm 8 (execute) as follows. For each transition \(\langle s,A,s^{\prime } \rangle \) of the history of the plan:

-

If the action \(A\) is not a concurrent action then the robot executes it according to Algorithm 9 (executeAction).

-

Otherwise, \(A\) is a concurrent action, the robot finds a helper to collaborate with and then the robots execute the concurrent action according to Algorithm 9 (executeAction).

Given the current state \(s\) and the next state \(s^{\prime }\), an action \(A\) is executed at \(s\) according to Algorithm 9 (executeAction) as follows.

-

If \(A\) is a primitive action of the form goto(R,X, Y,T), then a trajectory is computed from the current configuration (at \(s\)) to the next configuration (at \(s^{\prime }\)) and the relevant robot follows that trajectory.

-

If \(A\) is a primitive action of some other form (e.g., attach(R,T) or detach(R,T)), then the action is performed by the relevant robot.

-

If \(A\) is a concurrent action of the form \(\{A_1,A_2\}\) to be executed by two robots independently, then the actions are executed in parallel by recursively invoking Algorithm 8 (execute).

-

If \(A\) is a concurrent action of the form \(\{A_1,A_2\}\) to be executed by two robots collaboratively (i.e., each \(A_i\) is of the form goto(R,X, Y,T) and both robots are attached to the same object according to \(s\)), then a trajectory for each robot is computed from the current configuration (at \(s\)) to the next configuration (at \(s^{\prime }\)) and the robots follow these trajectories.

Table 4 shows the execution of plans calculated in Sect. 7 by Robots 1 and 2. In particular, Robot 1 starts executing its plan in the Bedroom, but at time step 1, it encounters a heavy object. Then, Robot 1 asks Robot 2 for help by giving it the duration of help process, which is estimated to be \(9\). Considering the help duration and the cost of a round trip travel between the bedroom and the kitchen (which is \(2 \times 2=4\)), Robot 2 declines Robot 1’s request, since otherwise it will miss its own deadline. Then, Robot 1 asks Robot 2 “If I help you in the kitchen, will you be able to help me back?”. Robot 2 evaluates this help offer and verifies that after receiving help from Robot 1, it can, not only complete its own task on time, but also offer help to Robot 1 to complete its task. Hence, it accepts Robot 1’s help offer. They tidy the kitchen together, then Robot 1 goes back to the bedroom and asks for help one more time. This time Robot 2 is unoccupied and willing to help Robot 1. The help request is accepted and the heavy object in the bedroom is carried collaboratively. Both robots successfully complete their tasks without violating their deadlines.

We have shown the applicability of the ASP-based approach to hybrid planning, in such a planning and monitoring framework with a simulation of a housekeeping domain. The domain is modeled using VRML 2.0 and the execution of the actions is implemented in Matlab/Simulink. Video clips illustrating this simulation can be found at https://www.dropbox.com/s/4slielakahfoswl/two_rooms_with_cooperation.flv.

10 Conclusion

We have shown the usefulness of the knowledge representation and reasoning paradigm, ASP, in housekeeping robotics from the point of view of three perspectives: formal representation of the domain, automated reasoning and planning over this domain, and execution and monitoring of computed plans.

In particular, we have formalized a housekeeping domain that involves multiple autonomous robots, in ASP. We have illustrated how to embed three sorts of semantic knowledge into high-level representation of housekeeping domain for intelligent reasoning: (1) commonsense knowledge automatically extracted from the commonsense knowledge base ConceptNet, (2) feasibility check of plans via (continuous) geometric reasoning (e.g., RRT-based motion planning), and (3) estimated durations of actions computed also by means of motion planning algorithms.

We have shown how hybrid plans can be computed using the ASP solver iClingo over this domain formalized in ASP, taking into account temporal constraints. We have also performed some experiments that illustrated the advantage of using ASP and iClingo over the action language \(\mathcal{C}+\) and the reasoner CCalc, in terms of memory consumption. On the other hand, we have observed that the computation times are comparable; in that sense, our ASP-based approach can be viewed as complementary to the other approach that utilizes \(\mathcal{C}+\) and CCalc.

We have also introduced a planning and monitoring framework so that robots can recover from failures during execution of the hybrid plans. In particular, we have considered execution failures due to (1) collisions with movable objects whose presence and location are not known in advance, and (2) heavy objects that cannot be lifted alone, and introduced algorithms so that robots can identify the cause of failures and act/collaborate accordingly to recover from these failures.

A related work on the use of AI planning for housekeeping with multiple robots is [20], which considers a domain with no concurrency, no obstacles and no human intervention. On the other hand, it considers heterogenous robots with different functionalities (e.g., sensors, actuators), and take their functionalities into account while planning. The extension of our approach to handle collaborations of such heterogenous robots can be done by adding some more preconditions of actions. For instance, we can ensure that only robots with two grippers can carry dishes, by the following ASP rule

where \(has\_gripper\) is specified as background knowledge.

Our approach to planning actions of multiple housekeeping robots can be viewed as a kind of multi-agent planning: tasks need to be allocated among these robots, robots need to collaborate with each other to complete the given tasks, and the robots need to communicate with each other for collaboration. Since our focus in this paper is more oriented towards embedding of background/commonsense knowledge and geometric/temporal reasoning in high-level representation and reasoning, these three aspects of multi-agent planning are kept as simple as possible: we assume that tasks are already allocated among the robots, the robots collaborate with each other only when they cannot complete their tasks due to a failure (for instance, when one robot cannot carry a heavy object), and we assume that the robots communicate with each other by means of requesting/offering help. However, thanks to the modular structure of our planning and monitoring framework and the generality of the methods used for hybrid planning, these three aspects of housekeeping robotics domain can be extended with the existing approaches in multi-agent planning (as described in the survey paper [28]). For instance, tasks can be allocated among the robots using a method for deciding for compatible agents, such as Contract Net Protocols [25], or using a method based on auctions, such as Vickrey Auctions [27]; coordination of robots can be allowed at the stage of hybrid planning even when failures do not occur as in [5, 17]; and the communication between robots can be extended possibly via an Agent Communication Language, such as KQML [11] or FIPA ACL [23].

References

Aker E, Erdogan A, Erdem E, Patoglu V (2011) Causal reasoning for planning and coordination of multiple housekeeping robots. In: Proceedings of LPNMR’11

Aker E, Erdogan A, Erdem E, Patoglu V (2011) Housekeeping with multiple autonomous robots: representation, reasoning and execution. In: Proceedings of commonsense’11

Brewka G, Eiter T, Truszczynski M (2011) Answer set programming at a glance. Commun ACM 54(12):92–103

Bylander T (1994) The computational complexity of propositional STRIPS planning. Artif Intell 69(1–2):165–204

Decker K, Lesser V (1992) Generalizing the partial global planning algorithm. Int J Intell Coop Inf Syst 1:319–346

Eén N, Sörensson N (2003) An extensible sat-solver. In: Proceedings of SAT, pp 502–518

Erdem E, Erdem Y, Erdogan H, Oztok U (2011) Finding answers and generating explanations for complex biomedical queries. In: Proceedings of AAAI

Erdem E, Haspalamutgil K, Palaz C, Patoglu V, Uras T (2011) Combining high-level causal reasoning with low-level geometric reasoning and motion planning for robotic manipulation. In: Proceedings of ICRA

Erdem E, Wong MDF (2004) Rectilinear steiner tree construction using answer set programming. In: Proceedings of ICLP, pp 386–399

Ferrein A, Fritz C, Lakemeyer G (2005) Using GOLOG for deliberation and team coordination in robotic soccer. Künstliche Intelligenz 1

Finin TW, Fritzson R, McKay DP, McEntire R (1994) KQML as an agent communication language. In: Proceedings of CIKM, pp 456–463

Gebser M, Guziolowski C, Ivanchev M, Schaub T, Siegel A, Thiele S, Veber P (2010) Repair and prediction (under inconsistency) in large biological networks with answer set programming. In: Proceedings of KR

Gebser M, Kaminski R, Kaufmann B, Ostrowski M, Schaub T, Thiele S (2008) Engineering an incremental ASP solver. In: Proceedings of ICLP, pp 190–205

Gelfond M, Lifschitz V (1991) Classical negation in logic programs and disjunctive databases. New Gener Comput 9:365–385

Giunchiglia E, Lee J, Lifschitz V, McCain N, Turner H (2004) Nonmonotonic causal theories. Artif Intell 153:49–104

Lavalle SM (1998) Rapidly-exploring random trees: a new tool for path planning. Tech. rep

Lesser V, Decker K, Carver N, Garvey A, Neimen D, Prassad M, Wagner T (1998) Evolution of the GPGP domain independent coordination framework. Tech. rep., University of Massachusetts

Lifschitz V (2008) What is answer set programming? In: Proceedings of AAAI

Liu H, Singh P (2004) ConceptNet: a practical commonsense reasoning toolkit. BT Technol J 22

Lundh R, Karlsson L, Saffiotti A (2008) Autonomous functional configuration of a network robot system. Robot Auton Syst 56(10):819–830

McCain N, Turner H (1997) Causal theories of action and change. In: Proceedings of AAAI/IAAI, pp 460–465

Nogueira M, Balduccini M, Gelfond M, Watson R, Barry M (2001) An a-prolog decision support system for the space shuttle. In: Proceedings of PADL. Springer, Berlin, pp 169–183

O’Brien PRN (1998) FIPA–towards a standard for software agents. BT Technol J 16(3):51–59

Simons P, Niemelä I, Soininen T (2002) Extending and implementing the stable model semantics. Artif Intell 138:181–234

Smith RG (1980) The contract net protocol: high-level communication and control in a distributed problem solver. IEEE Trans Comput 29(12):1104–1113

Tari L, Anwar S, Liang S, Hakenberg J, Baral C (2010) Synthesis of pharmacokinetic pathways through knowledge acquisition and automated reasoning. In: Proceedings of PSB, pp 465–476

Vikrey W (1961) Computer speculation, auctions, and competitive sealed tenders. J Finance 16:8–37

de Weerdt MM, Clement B (2009) Introduction to planning in multiagent systems. Multiagent Grid Syst 5:345–355

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Erdem, E., Aker, E. & Patoglu, V. Answer set programming for collaborative housekeeping robotics: representation, reasoning, and execution. Intel Serv Robotics 5, 275–291 (2012). https://doi.org/10.1007/s11370-012-0119-x

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11370-012-0119-x