Abstract

The accuracy of an adopted cohesive zone model (CZM) can significantly affect the simulated fracture response. The CZM has usually been obtained from a global experimental response, e.g., load versus either crack opening displacement or load-line displacement. However, deducing a local material property from a global response does not provide full confidence in the adopted model. The difficulties are: (1) fundamentally, stress cannot be measured directly and the cohesive stress distribution is non-uniform; (2) an accurate measurement of the full crack profile (crack opening displacement at every point) is difficult to obtain experimentally. An attractive feature of digital image correlation (DIC) is that it allows relatively accurate measurement of the whole displacement field on a flat surface. It has been utilized to measure the mode I traction-separation relation. A hybrid inverse method based on the combined use of DIC and the finite element method is used in this study to compute the cohesive properties of a ductile adhesive, Devcon Plastic Welder II, and a quasi-brittle plastic, G-10/FR4 Garolite. Fracture tests were conducted on single edge-notched beam (SENB) specimens under four-point bending. A full-field DIC algorithm was employed to compute a smooth and continuous displacement field, which was then used as input to a finite element model for inverse analysis through an optimization procedure. The unknown CZM is constructed using a flexible B-spline without any “a priori” assumption on the shape. The inversely computed CZMs for both materials yield consistent results. Finally, the computed CZMs are verified through fracture simulation, which shows good agreement with the experiments.

Introduction

As investigated by Barenblatt and Dugdale [1, 2], and later extended and referred to as fictitious crack model by Hillerborg et al. [3], the cohesive zone model (CZM) is an idealized model to address nonlinear fracture behavior. The CZM has been successfully applied to various types of materials including concrete [4], functionally graded materials [5], asphalt [6], welded joints [7] and so on. Specifically, the CZM assumes a material-level constitutive relation at the fracture process zone. The fracture process zone is located ahead of the traction-free crack, and it can be due to plasticity, inelasticity, microcracking and/or crack bridging by aggregate or fiber.

The CZM is usually used in numerical simulation of mode I or mixed-mode fracture. For mode I fracture, the CZM assumes a relation between normal traction and crack opening displacement (COD) (Fig. 1), while for pure mode II, the relation is between shear traction and sliding displacement. In Fig. 1, the stress distribution is shown in both the cohesive zone and the elastic zone, where ∆n denotes the COD, σ denotes the cohesive stress, ∆nc and σc are the critical values of ∆n and σ, respectively, and σ(∆n) describes the traction-separation relation. Notice the continuity of the stress distribution from the cohesive zone to the elastic zone. The basic description of the CZM includes ∆nc, σc and the shape of the curve. Two assumptions are made in the present work: the cohesive zone is localized on the crack surfaces only, and the material outside the cohesive zone is elastic [8].

In the finite element model, elastic deformation is represented by bulk elements while cohesive fracture behavior is described by cohesive surface elements. Both “intrinsic” models [9, 10] and “extrinsic” models [11–13] have been developed. Moreover, the CZM concept has also been implemented in conjunction with extended and generalized FEM (X-FEM and GFEM) [14, 15]. Despite advancements in the numerical capability using CZM concept, a proper CZM, based on physical behavior, must be provided to yield accurate prediction of the fracture process. The primary parameters that determine the fracture response are ∆nc and σ c. However, the shape of the CZM may have significant influence too. Shah et al. [16] reviewed various shapes of σ(∆n) described by linear, bilinear, trilinear, exponential and power functions, and concluded that the local fracture behavior is sensitive to the selection of the shape of σ(∆n). Recently, independent investigations by Volokh [17] and Song et al. [18] demonstrated that the shape of the CZM can significantly affect the results of fracture analysis.

Experimentally, the uniaxial tension test is considered a fundamental method to determine σ(∆n) [19, 20] because it measures the traction-separation relation directly, without additional analysis. However, because a uniform distribution of tensile stress through the specimen cross-section is difficult to ensure experimentally [16, 19], researchers have looked for indirect methods. One common approach assumes a simple shape of σ(∆n) with a few model parameters and computes those parameters from experimental results [21]. Van Mier [22] summarized the common procedure of inverse analysis: model parameters are adjusted at each iteration by comparing computational and experimental outcomes. This method is not computationally efficient, since a complete simulation, which is a nontrivial nonlinear problem, must be carried out at each iteration. Recently, Elices et al. [23] summarized the main streams of indirect methods used to determine σ(∆n). These indirect methods share a common characteristic: they all use the global experimental response, i.e. load (P) versus either displacement (δ) or crack mouth opening displacement (CMOD), from either popular notched beams or compact specimens, as the basis of the inverse parametric fitting analysis. They do so because global responses are usually the outcomes that experiments can readily provide. The limitations are therefore evident: these methods are semi-empirical in that the CZMs are assumed a priori and, in general, it is hard to validate the CZMs at the local level. This also means that the uniqueness of the obtained CZMs may not be guaranteed. Nevertheless, the CZMs obtained from these methods can still yield satisfactory predictive capability in finite element (FE) simulation of fracture [4, 24–26].

The difficulties in directly measuring cohesive properties are: (1) fundamentally, the cohesive stress cannot be measured directly; instead, it is deduced or computed from other experimental measurements or computational techniques; (2) the complete crack profile is hard to measure accurately because of its fine scale. Furthermore, if the deduction of stress and the measurement of the crack profile are independent of each other, a correlation between cohesive stress and COD must be established to obtain the CZM. Recent advances in a specific experimental technique, digital image correlation (DIC), enable relatively accurate measurement of the surface displacement field. Thus, a complete description of the COD in the fracture process zone is now possible. From the gradient of the displacement field of the bulk material next to the crack surface, one can estimate the cohesive traction through the constitutive relation (e.g. elastic). By correlating the COD with the estimated cohesive stress, one can obtain the CZM at the local level [27, 28]. If the cohesive traction is treated as a boundary traction, it is possible to apply more sophisticated techniques, e.g. those used in inverse elastostatics problems, to compute the distribution of the cohesive traction, assuming that the bulk material remains linear elastic. Such techniques are based on a framework of either the boundary element method (BEM) [29] or the FEM [30]. Recently, a technique combining DIC with the FEM to compute the CZM was proposed by the authors of the current paper [31]. The key idea is to use the continuous displacement field measured by DIC to compute the CZM inversely in an FEM framework. The distinctive features of the technique are the use of a flexible shape parameterization of the CZM, and the direct computation of the CZM shape parameters through a derivative-free optimization method, the Nelder-Mead method. Exhaustive numerical tests and associated issues have been presented and discussed in [31]. The current paper demonstrates this technique using actual experimental results for two materials: one adhesive and one plastic.

In the following, two DIC correlation algorithms, the subset and full-field algorithms, are first compared. Then, the proposed hybrid DIC-FEM method is explained. Afterwards, the use of the full-field DIC algorithm to measure the bulk elastic properties of PMMA and Garolite G-10/FR4 is presented. This is followed by the DIC results for the fracture testing and the inverse CZM computation for the adhesive, Plastic Welder II, and the plastic, Garolite G-10/FR4. Finally, some concluding remarks are provided.

Digital Image Correlation (DIC)

Digital image correlation (DIC) is a non-contact optical technique that is capable of measuring full-field two-dimensional or three-dimensional surface deformations [32–36]. Over the years, with the rapid advancement of computer vision technology, and with the availability of cheaper and more powerful digital image acquisition devices, DIC has gained increasing popularity among researchers in different fields. The rich information from DIC shows great potential in the applications of inverse identification problems [37, 38] and experimental fracture analysis [27, 28, 39]. Fracture characterization of concrete materials has been considered difficult due to their heterogeneous material microstructure. However, DIC has been demonstrated to be an effective tool for the study of fracture of concrete materials too. For example, Choi et al. [40] used DIC to measure the deformation data of concrete under fracture. Their measurement obtained accuracy within the micron range. Sokhwan and Shah [41] studied the fracture of quasi-brittle cement paste using DIC under compression. Corr et al. [42] used DIC to examine the bond between carbon fiber reinforced polymers (CFRP) and concrete substrates and measured the softening and fracture behavior at interfacial transition zone of plain concrete.

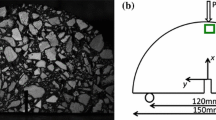

A recent comprehensive review [43] indicates that DIC is in fact a subset of the research area of image registration. Essentially, DIC compares two digital images: a reference image corresponding to the undeformed state, and a deformed image. The DIC algorithm searches for the one-to-one correspondence of the points (pixels) in both images by matching the pixel intensities in an area with a unique image pattern. Such correspondence is described by displacements only, or by a combination of displacements and their gradients. The most popular type of image used in DIC is a gray-scale image with random speckles. The gray-scale image can be 8-bit, 12-bit, 16-bit or 32-bit depending on the image acquisition device. The random speckles are usually very fine, on the scale of 1–100 microns. They can be black speckles on a white background or vice versa. Figure 2 shows a typical speckle pattern used in the current study, where the speckles are black. Usually the speckle pattern is generated by spraying either black or white paint with a fine airbrush. In this study, the speckle patterns were generated using a Paasche® single-action, external-mix, siphon-feed airbrush.

Subset DIC Algorithm

In the subset DIC algorithm, a grid of points (nodes) is first selected on the reference image. A subset is the set of pixels with a pre-selected node at its center (Fig. 3). Usually the subset is a square consisting of (2m+1) × (2m+1) pixels, where m is an integer. At each correlation, the subset centered on a node is mapped from the reference image to the deformed image by a mapping function

\[ \tilde{x} = x + u(x,y), \qquad \tilde{y} = y + v(x,y) \qquad (1) \]

where (x,y) and \( \left( {\tilde{x},\tilde{y}} \right) \) refer to the same point before and after deformation, and u and v are displacement components defined by a set of mapping parameters. With the prerequisite that the displacement field within the subset is continuous and smooth, it is usually approximated by Taylor series expansion from zero-order (rigid-body motion) up to second-order (arbitrary deformation) [44].
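As an illustration of the Taylor-series approximation mentioned above, a first-order expansion about a subset center could be written as follows. The center coordinates \( (x_P, y_P) \) and the parameter names \( u_0, v_0, u_x, u_y, v_x, v_y \) are notation assumed here for illustration; they are not taken from the formulation in [44].

\[ u(x,y) \approx u_0 + u_x\,(x - x_P) + u_y\,(y - y_P), \qquad v(x,y) \approx v_0 + v_x\,(x - x_P) + v_y\,(y - y_P) \]

In this sketch the zero-order mapping keeps only \( u_0 \) and \( v_0 \) (rigid-body translation), while the first-order mapping has six parameters in total.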

Fig. 3 Subset DIC algorithm, where each image subset is correlated independently (cf. Fig. 4 for the full-field DIC)

Let \( I_r(x,y) \) and \( I_d(x,y) \) denote the intensity functions of the reference and the deformed images, respectively. If the mapping functions within each subset are correct, then ideally, we have

\[ I_r(x,y) = I_d\left( {\tilde{x},\tilde{y}} \right) \qquad (2) \]

Usually, seeking the right mapping parameters is done by minimizing a correlation coefficient [32]:

where \( R_p \) represents any single pixel in the subset domain \( \Omega_P \) of the reference image centered at node “P”, and λ represents the vector of mapping parameters. If a linear change of illumination is an issue, the more robust zero-mean normalized cross-correlation criterion should be used [45, 46].
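The difference between a plain intensity-mismatch criterion and a zero-mean normalized one can be sketched as below. This is a minimal illustration, not the form of equation (3): the function names `ssd` and `zncc`, the synthetic 41 × 41 subset, and the gain and offset values are all assumptions made for the example.

```python
import numpy as np

def ssd(ref, cur):
    """Sum of squared intensity differences between two equally sized subsets."""
    ref = np.asarray(ref, dtype=float)
    cur = np.asarray(cur, dtype=float)
    return float(np.sum((ref - cur) ** 2))

def zncc(ref, cur):
    """Zero-mean normalized cross-correlation; 1.0 indicates a perfect match."""
    ref = np.asarray(ref, dtype=float)
    cur = np.asarray(cur, dtype=float)
    r = ref - ref.mean()  # remove the mean intensity (offset)
    c = cur - cur.mean()
    # Normalizing by the intensity norms removes the effect of a gain factor.
    return float(np.sum(r * c) / np.sqrt(np.sum(r ** 2) * np.sum(c ** 2)))

# Hypothetical subset: same pattern, but with a linear illumination change
# (gain 1.2, offset 10) and no deformation.
rng = np.random.default_rng(0)
subset_ref = rng.integers(0, 256, size=(41, 41)).astype(float)
subset_cur = 1.2 * subset_ref + 10.0

print("SSD :", ssd(subset_ref, subset_cur))   # large, although nothing moved
print("ZNCC:", zncc(subset_ref, subset_cur))  # close to 1
```

In this example the squared-difference measure reports a large mismatch caused purely by lighting, whereas the zero-mean normalized measure is unaffected by the gain and offset.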

The displacements measured from all subsets form the whole displacement field. However, the subset DIC algorithm does not consider continuity between adjacent subsets. Thus, the discrete displacements at the nodes may be noisy while the strains are always much noisier. Complex treatment of the displacement field can be employed so that smooth strain field can be obtained from the treated displacement field [47]. In addition, the selection of the subset size also affects the accuracy. In general, the optimal size of the subset for a particular speckle pattern can be chosen by trial and error [48] or through a parameter defined for the optimal selection of the subset size [49]. The accuracy of subset algorithm is also sensitive to the quality of the speckle pattern [50], therefore high quality speckle pattern is usually required.

Full-Field DIC Algorithm

In contrast to the subset DIC algorithm, the full-field DIC algorithm correlates the entire image (Fig. 4). Similarly to equation (3), the full-field DIC algorithm seeks to minimize the dissimilarity between the reference image and the deformed image:

where \( C_{\rm img} \) is the correlation function, \( \Omega_{\rm img} \) is the domain of the region of interest (ROI) on the image, and \( \#\Omega_{\rm img} \) is the total number of pixels in \( \Omega_{\rm img} \). Here λ is the set of parameters that determine the deformation field for the whole ROI.

Fig. 4 Full-field DIC algorithm, where the whole image is correlated (cf. Fig. 3 for the subset DIC)

As the ROI on the image may have an inhomogeneous deformation field, e.g. near a crack tip, approximating the deformation field with a truncated Taylor series is inadequate. For the case of continuous elastic deformation, a smooth parametric function is employed to represent the inhomogeneous field. The B-spline surface representation is attractive in that it is smooth (C2 continuous), flexible, and requires a relatively small number of unknown parameters. As an example, Fig. 5 shows a single bicubic B-spline surface described by

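where \( \beta^3(x) \) is the B-spline of degree 3 according to the expression

\[ \beta^3(x) = \begin{cases} \dfrac{2}{3} - |x|^2 + \dfrac{|x|^3}{2}, & 0 \leqslant |x| < 1 \\[4pt] \dfrac{\left( 2 - |x| \right)^3}{6}, & 1 \leqslant |x| < 2 \\[4pt] 0, & |x| \geqslant 2 \end{cases} \qquad (6) \]

Similar expressions can be developed for \( \beta^3(y) \).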
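For readers who want to experiment, the standard centered cubic B-spline and its tensor product can be evaluated with a few lines of code. This is a minimal sketch; the function names `beta3` and `bicubic` are chosen here for illustration, and the code assumes the usual closed form of the degree-3 B-spline with support |x| < 2.

```python
def beta3(x):
    """Centered cubic B-spline (standard closed form, nonzero for |x| < 2)."""
    ax = abs(x)
    if ax < 1.0:
        return 2.0 / 3.0 - ax ** 2 + 0.5 * ax ** 3
    if ax < 2.0:
        return (2.0 - ax) ** 3 / 6.0
    return 0.0

def bicubic(x, y):
    """Tensor-product (bicubic) B-spline surface built from beta3."""
    return beta3(x) * beta3(y)

# Integer shifts of beta3 sum to one at any position (partition of unity).
total = sum(beta3(0.3 - k) for k in range(-3, 4))
print(beta3(0.0), beta3(1.0), total)
```

The partition-of-unity property is what lets a set of equal coefficients represent a uniform displacement exactly.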

A uniform grid is first applied to the ROI on the specimen surface image (Fig. 4). The spacing between the grid points can be arbitrary and need not be the same in the Cartesian x- and y-directions. The smaller the spacing between grid points, the more detail of the displacement field can be represented. Each grid point is associated with a bicubic B-spline surface of a certain height. The complete representation of the displacement field is a linear combination of all the bicubic B-splines expressed by

where m and n are the numbers of grid points in the x- and y-directions, \( h_x \) and \( h_y \) denote the grid spacing, and \( c_{x,i,j} \) and \( c_{y,i,j} \) are the coefficients associated with the two bicubic B-spline surfaces at grid point (i, j) for displacements u and v, respectively. Equation (4) can be rewritten as

where the optimal unknowns \( c_{x,i,j} \) and \( c_{y,i,j} \) yield the best estimate of u(x), and therefore the minimum \( C_{\rm img} \).
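The linear combination of bicubic B-splines can be sketched in code as follows. The sketch assumes a particular convention that is not confirmed by the text: grid point (i, j) sits at \( (i\,h_x, j\,h_y) \) with zero-based indices, and each basis function is \( \beta^3(x/h_x - i)\,\beta^3(y/h_y - j) \). The function name `displacement` and all numerical values are hypothetical.

```python
def beta3(t):
    """Centered cubic B-spline (standard closed form)."""
    a = abs(t)
    if a < 1.0:
        return 2.0 / 3.0 - a ** 2 + 0.5 * a ** 3
    if a < 2.0:
        return (2.0 - a) ** 3 / 6.0
    return 0.0

def displacement(x, y, cx, cy, hx, hy):
    """Evaluate (u, v) at (x, y) from coefficient grids cx[i][j] and cy[i][j]."""
    u = v = 0.0
    for i, (row_x, row_y) in enumerate(zip(cx, cy)):
        bx = beta3(x / hx - i)
        if bx == 0.0:
            continue  # this grid column does not influence the point
        for j, (cxij, cyij) in enumerate(zip(row_x, row_y)):
            w = bx * beta3(y / hy - j)
            u += cxij * w
            v += cyij * w
    return u, v

# Hypothetical 8 x 8 grid with spacings of 10 and 20 pixels.
m = n = 8
hx, hy = 10.0, 20.0
cx = [[float(i) for _ in range(n)] for i in range(m)]  # u grows linearly with x
cy = [[-0.25 for _ in range(n)] for _ in range(m)]     # uniform v
print(displacement(35.0, 70.0, cx, cy, hx, hy))
```

Away from the grid boundary, coefficients that vary linearly with the grid index reproduce a linear displacement field, and equal coefficients reproduce a uniform one, which makes for a convenient sanity check of any implementation.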

In equation (8), the deformation to be correlated only includes displacement, whereas for the subset DIC algorithm the deformation parameters may include derivatives of the displacement. However, the B-spline form of u(x) in equation (7) allows one to compute a smooth and continuous gradient field efficiently, simply by differentiating the continuous displacement field function of equation (7). Therefore, the strain fields are also analytical functions, so that the strain at any point can be computed immediately. Naturally, the computed gradient field is not as smooth as the displacement field, because the gradient of u(x) is more sensitive than u(x) itself to changes in the unknown parameters \( c_{x,i,j} \) and \( c_{y,i,j} \). To enhance the smoothness of the computed strain field, an additional regularization term [51]

can be used, where \( {{\mathbf{D}}^2} = \left( {\frac{{{\partial^2}}}{{\partial {x^2}}},{ 2}\frac{{{\partial^2}}}{{\partial x\partial y}},{ }\frac{{{\partial^2}}}{{\partial {y^2}}}} \right) \) is the second-order total differential operator, and \( \left\| \cdot \right\| \) denotes the Euclidean norm. Combining equations (4) and (9), and assigning different weights to the two terms, one obtains the new objective function to be minimized, i.e.

where \( w_c \) and \( w_g \) are scalar weights. In the above equation, the first term measures the mismatch between the reference and deformed images, and the second term is a regularization term. The relative values of \( w_c \) and \( w_g \) depend on the characteristics of the field and the noise level. When the noise level is high, a higher value of \( w_g \) may be set. It is generally observed that the computed displacement field is not sensitive to \( w_g \) if the value of \( w_g \) is not very high. The Newton-Raphson procedure used to minimize the correlation coefficient C can also be used to minimize Ψ. Cheng et al. and Sorzano et al. [51, 52] have proposed using the Levenberg-Marquardt optimization method as the solver to improve computational efficiency.

While full-field DIC is able to provide a smoother displacement field than subset DIC, it has its own drawbacks. Compared to subset DIC, full-field DIC is much more difficult to implement, harder to converge, and computationally much more intensive. In addition, full-field DIC requires the deformation field to be continuous, while subset DIC can actually be used to find displacement jumps, i.e. in images containing cracks.

Assessment of the Full-Field DIC

Assessing the accuracy of DIC requires at least one pair of images with a known displacement transformation between them. Usually, numerically transformed images are used [38, 44, 52], or the transformation can be made by experimental means [53]. In the latter case, the transformations are usually limited to being uniform in one degree of freedom, e.g. translation, uniaxial stretching or compression, or rigid body rotation. If a numerical transformation is used, more complicated transformations, e.g. an arbitrary combination of translation, stretching and rotation, can be applied. For our assessment, we use a complicated numerical transformation to generate the deformed image from a reference image, which is a real speckle image. Such a transformation is expected to simulate the more general case of inhomogeneous deformation. Equation (11) gives the horizontal and vertical displacement functions for the image transformation:

where \( (x_0, y_0) \) are the coordinates of the origin of the reference image, and W and H are the width and height of the reference image in pixels. The image transformation involves evaluating subpixel intensities from the reference image, which is done through bicubic interpolation. The image used for the assessment of the in-house DIC is shown in Fig. 6, which also shows the deformed image and the displacement field of the transformation.
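A numerically deformed image of this kind can be produced with a short resampling routine. The sketch below is an assumption-laden illustration rather than the in-house procedure: it uses SciPy's cubic-spline interpolation (`order=3`) as a stand-in for bicubic interpolation, the function name `warp_image` is hypothetical, and it evaluates the displacement at the deformed pixel position, which is exact for a uniform shift but only approximate for an inhomogeneous field.

```python
import numpy as np
from scipy.ndimage import map_coordinates

def warp_image(ref, u, v):
    """Resample `ref` so that deformed(row, col) ~ ref(row - v, col - u).

    u, v: horizontal and vertical displacement arrays in pixels, with the
    same shape as `ref`. Subpixel intensities come from cubic-spline
    interpolation (order=3).
    """
    ref = np.asarray(ref, dtype=float)
    rows, cols = np.indices(ref.shape, dtype=float)
    return map_coordinates(ref, [rows - v, cols - u], order=3, mode="nearest")

# Hypothetical usage: shift a random "speckle" image by 0.4 pixel to the right.
rng = np.random.default_rng(1)
reference = rng.random((32, 32))
shift_u = np.full(reference.shape, 0.4)
shift_v = np.zeros(reference.shape)
deformed = warp_image(reference, shift_u, shift_v)
```

Pixels near the image border depend on how the interpolator extends the image, which is one reason boundary points deserve separate scrutiny in an accuracy assessment.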

A uniform grid of 21 × 21 points over the entire reference image was used as the sites for displacement evaluation in both the subset and full-field algorithms. For the subset DIC, a subset size of 41 × 41 pixels was selected and the subset centers were located directly at the grid nodes. A second-order mapping function with 13 parameters, one of which accounts for the constant illumination change, was used as the subset mapping function [44]. For the full-field DIC, a grid of 33 × 33 nodes was used. The displacements by full-field DIC can be computed from equation (7) at the evaluation sites (the 21 × 21 points). The weights in equation (10) were set to \( w_c = 1 \) and \( w_g = 5 \). The displacements measured by subset DIC and full-field DIC are compared with the displacements calculated from equation (11). The errors of the horizontal displacement are plotted against the displacement in Fig. 7, in which \( u_{\rm thr} \) denotes the theoretical displacement and \( u_{\rm DIC} \) denotes the displacement measured by DIC.

As Fig. 7 shows, on average, full-field DIC yields better measurements than subset DIC. Notice that the error level does not depend on the displacement value. This is contrary to what has been observed for simple transformations, where the mean errors are small when the displacement is close to a whole number of pixels. Similar results can be found for the vertical displacement. It was found, however, that the problematic points with higher errors in both the subset and full-field DIC measurements are located at the image boundary, as demonstrated by Fig. 8. It is clear that all larger errors occur at the boundary. Away from the boundary, subset and full-field DIC seem to have a similar level of accuracy. Subset DIC is prone to errors near the boundary because only a partial subset can be used for the correlation at a boundary grid point, while for full-field DIC there is no such issue. The imperfect interpolation near the image boundary in the transformation may be the reason that relatively larger errors also occur at the boundary for full-field DIC.

Strain components can be obtained directly from both subset and full-field DIC. As the strain field is not used in the current study, it is not evaluated here. Finally, Table 1 summarizes the statistics of the displacement errors. One must note that this single evaluation is far from sufficient to draw definite conclusions about the performance of the two DIC algorithms. The choice of DIC algorithm depends on the nature of the application.

Hybrid DIC-FEM Optimization Method

In a general fracture mechanics problem, the geometry of the solids, the constitutive parameters for the bulk materials and the cohesive properties, and a set of well-posed boundary conditions are known or given. The solutions of the problem are the displacement, strain and stress fields. Such fracture problems are referred to as direct problems. The FEM formulation of these direct problems, based upon the principle of virtual work, can be expressed as [10]:

where Ω represents the specimen domain, Γ ext represents the boundary on which the surface traction T ext is applied, Γ coh represents the cohesive surface where the cohesive traction T coh and the crack opening displacement (COD) ∆u are present, σ is the Cauchy stress tensor, E is the Green strain tensor, and u is the displacement vector. Applying the Galerkin discretization procedure with FEM shape functions leads to the standard FEM system of equations

where \( {{\mathbf{K}}_{\rm{b}}} \) is the global stiffness matrix of the bulk material, \( {{\mathbf{K}}_{\rm{c}}} \) is the global cohesive stiffness matrix, u is the global displacement vector, λ is the set of parameters associated with the CZM, and \( {{\mathbf{F}}^{\rm{ext}}} \) is the global external force vector. In a forward nonlinear fracture simulation, λ is known, while u is the response to be computed directly. In contrast, the inverse problem for equation (13) is to identify or estimate λ, with u known from experimental measurements (DIC in the current study).

For inverse parameter identification problems, various methods have been developed, namely the finite element model updating (FEMU) method [54, 55], the virtual fields method (VFM) [56], the constitutive equation gap method [57], and the equilibrium gap method [58]. These methods have been reviewed recently [38, 59]. The comparison between these methods shows that the FEMU and the VFM yield consistently accurate estimates of the target constitutive parameters. The VFM is a non-updating method, which is computationally efficient, yet it requires accurate whole-field displacement measurement. The FEMU uses an updating approach, which begins with an initial guess and iteratively updates the constitutive parameters by minimizing a prescribed cost function. Usually, the cost function for the FEMU is a least-squares difference between the measured displacement field and its computed counterpart. A whole-field displacement is not necessarily required for the FEMU approach, but its availability may improve the accuracy of the identification.

Nonlinear Least Square Optimization

In this study, the FEMU approach is adopted. Usually, the FEMU approach is used to identify the elastic constitutive parameters of a solid. For the current inverse problem, the target is the set of nonlinear cohesive constitutive parameters on the fracture surface. Therefore, a modified procedure and solution strategy are used. Using the optimization approach, the inverse problem can be formulated as

where M is the number of input parameters, Φ(λ) is called the objective function or the cost function, \( {\Omega_\lambda } \) is the feasible domain within which a physically valid CZM shape can be constructed, \( {\mathbf{\bar{u}}} \) is the displacement vector representing the whole displacement field obtained from DIC, and \( {{\mathbf{u}}^*}(\lambda ) \) is computed by

where

A flexible spline with either linear or cubic interpolation can be used to construct the CZM without any assumption on the shape of the CZM curve [31]. Figure 9 shows the parameterization of the CZM curve through a spline, where \( P_i \) are the control points, \( {\Delta_{{\rm{n,}}i}} \) are the CODs and \( \sigma_i \) are the tractions. The unknown λ is then associated with the control points of the spline:

Evidently, the feasible region for λ is the domain in which the COD is positive and the traction is tensile. Another requirement is that the COD control points must be sequential so that the curve can be constructed. Written in mathematical form, \( {\Omega_\lambda } \) is

Barrier functions can be easily incorporated into (14) to ensure the condition that \( \lambda \in {\Omega_\lambda } \) is satisfied [31, 60, 61]. The barrier term

where \( 0 < \theta_b \ll 1 \) and \( N_b \gg 1 \), is used to satisfy \( \lambda_i > 0 \). Evidently, \( \beta_1(\lambda) \) is negligible when \( \sigma_i > \theta_b \) but becomes a numerical barrier when \( \sigma_i < \theta_b \). The barrier term for the condition that \( {\Delta_{{\rm{n,}}1}} < {\Delta_{{\rm{n,}}2}} < ... < {\Delta_{{\rm{n,}}\mu + 1}} \) is

where

is the normalized horizontal distance of point i from the midpoint of the two adjacent points i – 1 and i + 1. When \( \xi_i < 1 \), the condition \( {\Delta_{{\rm{n,}}i - 1}} < {\Delta_{{\rm{n,}}i}} < {\Delta_{{\rm{n,}}i + 1}} \) is satisfied. Again, \( \beta_2(\lambda) \) is negligible when \( 0 \leqslant {\xi_i} < 1 - {\theta_b} \) but becomes a numerical barrier when \( {\xi_i} > 1 - {\theta_b} \). Now the objective function becomes

and the favorable condition for optimization is established:

Notice that each time \( {{\mathbf{u}}^*}(\lambda ) \) is updated through (15) and (16), only \( {{\mathbf{K}}_{\rm{c}}}\left( {{\mathbf{\bar{u}}};\lambda } \right) \) needs to be reconstructed. Such reconstruction is not computationally expensive, as \( {{\mathbf{K}}_{\rm{c}}}\left( {{\mathbf{\bar{u}}};\lambda } \right) \) is only associated with the elements at the crack surface, the number of which is only a small fraction of the total number of FEM elements. Although a change of the cohesive zone parameters only changes a small number of components in \( {{\mathbf{K}}_{\rm{c}}} \), equations (15) and (16) show that the force boundary condition \( {{\mathbf{\hat{F}}}^{\rm{ext}}}\left( {{\mathbf{\bar{u}}};\lambda } \right) \) along the crack surface changes. Thus, the deformation field in the bulk material near the changed boundary condition will be affected. The evaluation of (15) is made efficient by first factorizing the constant matrix \( {{\mathbf{K}}_{\rm{b}}} \), so that the factorization can be reused at every update.
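The spline parameterization and the feasibility conditions discussed in this subsection can be illustrated with a small piecewise-linear example. This is a sketch under stated assumptions: the helper names, the control-point values, and the choice of non-negative (rather than strictly positive) bounds are hypothetical, the normalization of \( \xi_i \) by the half-span of the neighbouring points is one choice consistent with the description above, and the barrier terms themselves are not reproduced.

```python
from bisect import bisect_right

def is_feasible(cod, trac):
    """Non-negative CODs and tractions, with strictly increasing CODs."""
    ordered = all(a < b for a, b in zip(cod, cod[1:]))
    return ordered and min(cod) >= 0.0 and min(trac) >= 0.0

def ordering_measure(cod, i):
    """Normalized distance of point i from the midpoint of its neighbours.

    Assumes cod[i-1] < cod[i+1]; the value is below 1 exactly when
    cod[i-1] < cod[i] < cod[i+1].
    """
    mid = 0.5 * (cod[i - 1] + cod[i + 1])
    half_span = 0.5 * (cod[i + 1] - cod[i - 1])
    return abs(cod[i] - mid) / half_span

def czm_traction(opening, cod, trac):
    """Piecewise-linear traction at a given opening (clamped at both ends)."""
    if opening <= cod[0]:
        return trac[0]
    if opening >= cod[-1]:
        return trac[-1]
    k = bisect_right(cod, opening) - 1
    t = (opening - cod[k]) / (cod[k + 1] - cod[k])
    return (1.0 - t) * trac[k] + t * trac[k + 1]

# Hypothetical control points (opening, traction), for illustration only.
cod_pts = [0.0, 0.01, 0.03, 0.08]
trac_pts = [20.0, 25.0, 8.0, 0.0]

print(is_feasible(cod_pts, trac_pts))
print(ordering_measure(cod_pts, 1))
print(czm_traction(0.02, cod_pts, trac_pts))
```

A control point that drifts past one of its neighbours during the optimization gives an ordering measure above 1, which is the situation a barrier term is meant to prevent.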

Regularization

It is well known that most inverse problems are ill-posed, and therefore the uniqueness and stability of the solutions are not guaranteed [62]. The difficulty of solving the current inverse problem arises primarily from the use of the spline as the parameterization of the CZM. While the spline has total flexibility in constructing any possible curve, the drawback is that the independent, freely moving control points may generate a CZM curve without physical meaning. The situations that may hinder the optimization or cause it to fail are the formation of cluster, spike or tail points [Fig. 10(a)]. In any of these situations, one or more control points are trapped and become ineffective for the CZM shape construction. Numerical tests have shown that the cluster and tail points form more frequently during the optimization process, and often the curve is locked into an apparently incorrect CZM representation.

If a polynomial function is used to define the CZM, such issues may not exist, but the generality is also lost [30]. To overcome the ill-posedness due to the spline parameterization, regularization must be applied. Regularization involves introducing prior or additional information in order to solve an ill-posed problem effectively or efficiently. Because the nonlinear inverse problem is solved iteratively, simple algorithms with pre-defined criteria can be implemented to monitor the control points of the CZM curve computed at each iteration and detect whether any of the adverse situations appears. The cluster points can be redistributed uniformly along the currently computed CZM curve, while the spike or tail points can be removed and additional control points can be added at other locations on the curve [Fig. 10(b)]. After the control points are adjusted, the optimization is restarted with a better-estimated and well-conditioned initial guess. Numerical examples in reference [31] have demonstrated the efficient performance of such “ad hoc” regularization for various cases. The major advantage of this approach is that it does not need explicit regularization terms, so the difficult question of how to choose appropriate regularization weights does not arise. However, if the current hybrid inverse technique is extended to solve other inverse problems in fracture, the “ad hoc” method may not be applicable and more general regularization methods, such as the widely used Tikhonov regularization method, may have to be used. The investigation of regularization effects is the immediate topic extending the current study.

Nelder-Mead Solver

Usually, efficient Newton-Raphson or Newton-like algorithms are used for minimizing the cost function. This is because the cost functions for the usual inverse problems in elastostatics have been proved to be convex [59]. For our inverse problem, it is hard to prove that the solution space is convex. In addition, it may be very difficult to obtain an explicit form for the gradient of our cost function. Therefore, a derivative-free optimization method is desired. Due to its simplicity and effectiveness, the Nelder-Mead optimization method [63] is used to solve (14). Numerical experiments have demonstrated the effectiveness of the proposed scheme. In the numerical examples presented in [31], “synthetic” displacement data from numerical fracture simulation were used. In the current study, the displacement field measured by DIC, which naturally includes experimental errors, is used.
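The flavor of a derivative-free identification can be conveyed by a toy problem. The sketch below is not the FE-based cost function of this study: it fits two parameters of a hypothetical exponential softening law to synthetic, noise-free traction data using SciPy's Nelder-Mead implementation. The law, the parameter values, the scaling by the initial guess, and the crude penalty for infeasible parameters are all assumptions made for the example.

```python
import numpy as np
from scipy.optimize import minimize

def traction(opening, sigma_c, delta_c):
    """Hypothetical exponential softening law used only for this illustration."""
    return sigma_c * np.exp(-opening / delta_c)

# Synthetic "measurements" generated from known parameters.
true_params = np.array([30.0, 0.05])
openings = np.linspace(0.0, 0.2, 25)
measured = traction(openings, *true_params)

guess = np.array([20.0, 0.07])  # initial guess, also used to scale the unknowns

def cost(p):
    """Least-squares mismatch; p holds the parameters divided by `guess`."""
    sigma_c, delta_c = p * guess
    if sigma_c <= 0.0 or delta_c <= 0.0:
        return 1e12  # crude penalty keeping the search in the feasible region
    return float(np.sum((traction(openings, sigma_c, delta_c) - measured) ** 2))

result = minimize(cost, x0=np.ones(2), method="Nelder-Mead",
                  options={"xatol": 1e-10, "fatol": 1e-14, "maxiter": 5000})
estimate = result.x * guess
print(estimate)
```

Only cost-function values are needed, so no gradient of the cost function has to be derived; the price is typically a larger number of function evaluations than a gradient-based solver would require.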

Limitations of the Current Hybrid DIC-FEM Method

The proposed hybrid DIC-FEM method, at its current stage of development, assumes that the bulk material remains elastic; no plasticity is considered, so the method applies only to quasi-brittle materials. For quasi-brittle materials, the out-of-plane deformation is dominated by Poisson’s effect, which is expected to have a negligible effect on the in-plane displacement field. The plane stress assumption may be sufficient, so that the in-plane displacement field measured at the surface may accurately represent the in-plane displacement through the material depth. In other words, the 3D effect is assumed negligible for quasi-brittle materials and is not considered.

From the basic formulation, equations (14) and (15), the larger the area whose displacement field is sensitive to the cohesive zone, the more likely the method is to succeed. There are two important conditions in the proposed method [31, 53]. First, the cohesive zone shall be relatively large so that more bulk material near the cohesive zone is directly affected. Second, the ratio of cohesive traction to bulk elastic modulus shall be large enough that the displacement field is not smeared by the rigidity of the bulk material. Both conditions are limited only by the resolution of the displacement measurement. Higher displacement resolution allows the method to be applied to materials with a shorter cohesive zone and lower cohesive traction. In addition, the current numerical development applies only to fracture tests with a well-defined crack path in which only mode-I fracture is present. The test materials in this study were selected on the basis of the above discussion.

Measurement of Elastic Properties

The full-field DIC algorithm is used for this study. In the inverse procedure to extract the CZM, the bulk elastic properties are assumed known. In practice, the elastic properties of conventional materials are usually known, or can be measured reliably with universal testing machines instrumented with displacement or strain sensors. The purpose of using DIC to measure the elastic properties here is to demonstrate the accuracy of the DIC technique.

Materials

Two plastics, polymethylmethacrylate (PMMA) and Garolite G-10/FR4 (GL), are used for this study. PMMA is an amorphous glassy polymer. Garolite is a high-performance type of fiberglass composed of dense woven glass cloth in an epoxy resin medium. The mechanical properties of these two plastics vary, and the available ranges of their common mechanical properties are listed in Table 2.

Experimental Setup

Two compressive prisms of PMMA with a nominal dimension 11.5 mm × 23 mm × 75 mm, two 4-point bend PMMA specimens of 11.5 mm × 24 mm × 120 mm, and two 4-point bend GL specimens of 10 mm × 25.5 mm × 160 mm are prepared. The test set-up is shown in Fig. 11.

Black and white enamel-based paints are used to prepare the speckle pattern. A Paasche® single-action, external-mix, siphon-feed airbrush is used to first spray the white paint on the specimen as background. The amount of white paint sprayed is just enough to uniformly and fully cover the specimen. Then black paint is sprayed to generate the random speckle pattern. Figure 11 also shows the ROIs that are analyzed using DIC. For the PMMA compression prisms, a ROI away from both ends is used to avoid end effects. For the bending specimens, the ROI is selected in the pure bending region away from the loading points. The digital image resolutions for the test specimens are listed in Table 3.

The camera used to take the images for DIC is a Canon EOS 400D. It has a 10.1 megapixel, 22.2 × 14.8 mm CMOS (complementary metal–oxide–semiconductor) sensor with 8-bit depth digitization for grey scale images. The lens is a Sigma 150 mm f/2.8 telephoto macro lens with 1:1 magnification. The optimal aperture for the sharpest image is found to be around f/8. The camera-to-specimen distance is around 400 mm, so an out-of-plane movement of 1 mm would result in a relative in-plane displacement error of around 1/400. Considering the rigidity of the specimen, the actual out-of-plane movement is much smaller than 1 mm. Therefore, out-of-plane movement is not a concern for the accuracy of measurement in the current study.
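The 1/400 estimate is consistent with a simple pinhole-camera argument, sketched here under the assumption that the image magnification M scales inversely with the camera-to-specimen distance Z. The symbols M, Z and ΔZ are introduced only for this illustration and do not appear elsewhere in the paper.

```latex
M \propto \frac{1}{Z}
\quad\Longrightarrow\quad
\left|\frac{\Delta M}{M}\right| \approx \frac{\Delta Z}{Z}
= \frac{1\ \text{mm}}{400\ \text{mm}} = 2.5 \times 10^{-3}
```

Under this assumption, a change in magnification appears in the correlation as a spurious uniform in-plane strain of the same relative size, which is why a small ratio ΔZ/Z is needed.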

Uniaxial Compression

The PMMA prisms are loaded within the elastic range. Figure 12 shows the load versus crosshead displacement for the compression test of both specimens. Surface speckle images are taken at each loading point. The reference image for DIC is the image taken at zero load. The correlated displacement fields u_x and u_y for the load P = 1900 N are shown in Fig. 13; they are computed using a grid of 17 by 17 spline nodes. The positive x- and y-directions are horizontal to the right and vertical downward, respectively, for all displacement and strain plots in this paper. Notice that the displacement is measured in pixels. The corresponding strain fields ε_x and ε_y are shown in Fig. 14. They are computed directly by taking derivatives of equation (7). The displacement u_y is due to the compressive loading, while the displacement u_x is due to Poisson’s effect. Notice that the origin of the y-axis in the field plots is at the top and the y-axis direction is reversed. This conforms to the image processing convention that the origin of an image is usually at the upper-left corner.

The strain fields ε_x and ε_y shown in Fig. 14 are reasonably uniform. The compressive stress, which the uniform strain justifies treating as uniformly distributed, can be calculated from the measured load. The Young’s modulus is calculated as the ratio of the average stress to the average strain. The average values of ε_x and ε_y are also used to compute the Poisson’s ratio directly. The measured Young’s modulus and Poisson’s ratio are summarized in Table 4, which shows consistency among the results obtained for each material. The bounds shown in Table 4 for each specimen are computed from measurements at different load steps: five load steps in compression and four load steps in bending.
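The arithmetic behind this estimate can be sketched as follows. The load and the nominal prism dimensions are taken from the text; the loaded cross-section (11.5 mm × 23 mm), the mean strain values, and the function name are assumptions chosen for illustration and are not measured data from this study.

```python
def elastic_constants_from_compression(load_n, area_mm2, mean_eps_axial, mean_eps_lateral):
    """Return (Young's modulus in MPa, Poisson's ratio) from a uniaxial test.

    mean_eps_axial is the average strain along the loading direction
    (negative in compression); mean_eps_lateral is the average transverse strain.
    """
    stress_mpa = load_n / area_mm2  # N/mm^2 = MPa, magnitude of the compressive stress
    youngs_modulus_mpa = stress_mpa / abs(mean_eps_axial)
    poissons_ratio = -mean_eps_lateral / mean_eps_axial
    return youngs_modulus_mpa, poissons_ratio


# P = 1900 N on an assumed 11.5 mm x 23 mm cross-section;
# the two mean strains below are hypothetical values.
E_mpa, nu = elastic_constants_from_compression(
    load_n=1900.0,
    area_mm2=11.5 * 23.0,
    mean_eps_axial=-2.11e-3,
    mean_eps_lateral=0.80e-3,
)
```

Because strain is dimensionless, the fact that the DIC displacements are expressed in pixels does not affect the result.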

Four-Point Bending

Figure 15 shows the loading points at which the DIC images are recorded. Typical displacement plots, u_x and u_y, at P = 1500 N are shown in Fig. 16 (2D contour plots). A grid of 17 by 17 spline nodes is used in the full-field DIC. It can be seen that the displacement field conforms to beam theory. The mean displacements in both the x- and y-directions have been subtracted in the plots in Fig. 16 to eliminate the recorded rigid-body motion and to allow better visualization of the fields. Rigid-body motion is unavoidable during actual experiments. However, it does not affect the analysis, as only the relative displacements are needed to obtain E and ν. The zero u_x isolines in the plots show the symmetry plane (vertical) and the beam neutral axis (horizontal). The compression above and the tension below the neutral axis can be readily inferred from the relative movement of pixels. The vertical displacement field of a beam under pure bending is rarely seen in the literature because it is usually of little or no interest.

Figure 17 shows the strain fields ε_x and ε_y corresponding to Fig. 16. Notice the symmetry of the compression (negative ε_x) and tension (positive ε_x) regions about the neutral axis indicated by the zero-strain isoline. As presumably no vertical stress exists in pure bending, ε_y is due to Poisson’s effect only. This is confirmed by the following observations: (1) ε_y has the opposite sign of ε_x; (2) ε_y is proportional to ε_x; (3) the ε_y plots reveal the same location of the neutral axis.

Again, with the presumption that the bending stress distribution through the beam depth is linear, the correlation between stress and DIC-measured strain can be obtained to estimate the Young’s modulus. The Poisson’s ratio is better estimated by comparing the average gradient of ε_y to the average gradient of ε_x, both in the y-direction. The estimated Young’s modulus and Poisson’s ratio for PMMA from the two bending specimens are listed in Table 4. They are consistent with the measurements from compression tests.
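A minimal sketch of the gradient-based estimate is given below, assuming linear strain profiles through the depth and simple beam theory (bending stress = M y / I). The nominal PMMA cross-section and the load P = 1500 N come from the text; the 30 mm distance between a support and the nearest load point, the synthetic strain profiles, and all function names are hypothetical.

```python
def least_squares_slope(y, values):
    """Slope of the best-fit straight line of `values` against `y`."""
    n = len(y)
    mean_y = sum(y) / n
    mean_v = sum(values) / n
    num = sum((yi - mean_y) * (vi - mean_v) for yi, vi in zip(y, values))
    den = sum((yi - mean_y) ** 2 for yi in y)
    return num / den


# Nominal PMMA bend specimen cross-section (width x depth, mm).
width_mm, depth_mm = 11.5, 24.0
second_moment_mm4 = width_mm * depth_mm ** 3 / 12.0

# Four-point bending: constant moment M = (P / 2) * a between the load points.
# P = 1500 N as in the text; a = 30 mm is a hypothetical shear-span length.
moment_nmm = (1500.0 / 2.0) * 30.0

# Synthetic strain profiles through the depth (y in mm, positive downward
# from the neutral axis), standing in for DIC-derived strain averages.
y_mm = [-10.0, -5.0, 0.0, 5.0, 10.0]
eps_x = [5.0e-4 * y for y in y_mm]           # bending strain, tension below the axis
eps_y = [-0.38 * 5.0e-4 * y for y in y_mm]   # Poisson contraction/expansion

d_eps_x_dy = least_squares_slope(y_mm, eps_x)  # curvature, 1/mm
d_eps_y_dy = least_squares_slope(y_mm, eps_y)

nu_bend = -d_eps_y_dy / d_eps_x_dy
E_bend_mpa = moment_nmm / (second_moment_mm4 * d_eps_x_dy)
```

Using gradients rather than point values makes the estimate insensitive to any constant strain offset, for example from a slightly misplaced neutral axis.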

The same procedures for measuring the Young’s modulus and Poisson’s ratio with four-point bend specimens are applied to GL and are not presented here. GL’s Young’s modulus and Poisson’s ratio are also listed in Table 4.

Fracture Tests

SENB specimens of PMMA bonded by adhesive and GL are prepared for fracture testing. The specimen geometry is shown in Fig. 18. The actual dimensions of the test specimens are listed in Table 5.

The adhesive used to bond the PMMA is Devcon® Plastic Welder™ II (PW-II). It is a toughened structural acrylic adhesive formulated for bonding difficult-to-bond substrates, e.g., the PMMA used in this study. It has high shear strength and resistance to peeling. Its tensile peeling stress is higher than 24 MPa. The effectiveness of this adhesive in bonding PMMA, together with its ductility, resulted in the desired cohesive failure of the adhesive. The procedure for bonding PMMA is as follows. The surfaces to be bonded are first roughened with coarse sandpaper and then cleaned with acetone. A very thin layer of PW-II is applied to both surfaces to ensure full coverage, after which an even, thick layer is applied to one of the surfaces. The two PMMA prisms are then assembled, and firm pressure is applied to the adhesive layer before setting to eliminate gaps and enhance contact. The final widths of the PW-II layer for both specimens are 0.6 ± 0.05 mm, which is within the thickness range recommended by the product manual for optimum adhesion, 0.38 mm to 0.76 mm.

The notches for the SENB specimens are first cut below the desired length by an ordinary band saw, and then a teeth-sharpened thin band saw is used to produce the final sharp notch tip (Fig. 19). The notch tip radius is estimated to be less than 50 µm by using an optical microscope.

The fracture testing is conducted on a servo-hydraulic Instron testing frame. A clip gage is installed on the bottom of the SENB specimen to measure the crack mouth opening displacement (CMOD) (Fig. 19). The loading rate is CMOD controlled and is set to 0.1 mm/min before peak load and increased to 0.2 to 0.5 mm/min during the softening part. Figure 20 shows the load versus CMOD curves for both PMMA and G10/FR4 Garolite SENB specimens. The locations where images are taken for DIC analysis are also shown.

Figure 21 shows the fracture surfaces. The cohesive failure, which ran through the thin PW-II adhesive layer, can be clearly seen. The rough fracture surface of G10/FR4 Garolite shows the rupture of the fine fibers. The crack in GL ran along the symmetry plane of the SENB specimen, which may indicate high uniformity of the GL properties. The well-formed crack paths ease the DIC analysis and the subsequent finite element inverse analysis.

Deformation Field

Using the image at P = 0 N as reference, two ROIs right above the initial notch tip, one on either side of the crack path, are selected as the reference images (Fig. 18). After applying DIC with a grid of 33 by 33 spline nodes, the displacement fields on the two ROIs are combined for each recorded image. Figures 22 and 23 show the u_x deformation at different loads for one PW-II bonded PMMA specimen and one G10/FR4 Garolite SENB specimen, respectively. The pixel resolution is 9.93 µm/pixel for PWII-3 and 8.32 µm/pixel for GL-3. In both figures, the left side shows surface plots of the crack profiles and the right side shows the corresponding contour plots of the u_x values. This full displacement field enables calculation of the crack opening displacement (COD). The crack length and the crack tip location can be easily seen on either the surface plot or the contour plot in Figs. 22 and 23. The crack tip location depends on the combined resolving power of the imaging system and the DIC algorithm, and it can be defined as the location along the crack path at which the COD becomes larger than a specified threshold value. This threshold value can be selected to be a few times the resolving power. For our system, the estimated error is on the order of 10 µm/pixel × 0.015 pixel = 0.15 µm. This does not mean that the crack tip location can be determined to within 0.15 µm. A small variation of the threshold value may move the crack tip location by a distance many times the threshold value, because the COD varies slowly around the crack tip. It is found that a threshold value between 0.2 and 5 µm does not affect the results, though the specified crack tip location may vary by a distance of a few surface elements. For the portion of the crack path with a COD less than the threshold value, a roller boundary condition is specified (Fig. 24), and the computed nodal force or surface stress there can be either tensile or compressive.
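The threshold-based definition of the crack tip can be sketched in a few lines. The function name, the assumption that the COD decreases monotonically away from the notch, and the sample COD profile are illustrative only; the pixel size and DIC resolution are the order-of-magnitude figures quoted above.

```python
def locate_crack_tip(positions_um, cod_um, threshold_um):
    """Return the farthest position from the notch tip at which the COD still
    exceeds the threshold, scanning outward from the notch.

    Assumes the COD decreases monotonically along the crack path.
    Returns None if no point exceeds the threshold.
    """
    tip = None
    for position, opening in zip(positions_um, cod_um):
        if opening > threshold_um:
            tip = position
        else:
            break
    return tip


# Resolving power estimated as pixel size times DIC displacement resolution.
resolving_power_um = 10.0 * 0.015

# Hypothetical COD profile along the crack path, measured from the notch tip.
positions_um = [0.0, 100.0, 200.0, 300.0, 400.0, 500.0]
cod_um = [12.0, 6.0, 2.0, 0.6, 0.1, 0.02]

tip_low_threshold = locate_crack_tip(positions_um, cod_um, threshold_um=0.5)
tip_high_threshold = locate_crack_tip(positions_um, cod_um, threshold_um=1.0)
```

In this made-up profile, raising the threshold by 0.5 µm moves the tip by 100 µm, which illustrates why the tip location is far less certain than the COD resolution itself.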

Inverse Analysis

In-house MATLAB code is developed for the inverse analysis, including the finite element model. A 2D FE model representing the left half of the beam is used in the inverse analysis. The fully integrated four-node quadrilateral (Q4) plane stress element is used to model the bulk material. The elastic properties used in the model are based on Table 4: for PMMA, E = 3.4 GPa, ν = 0.38 and for Garolite G-10/FR4, E = 18 GPa, ν = 0.20. The number of Q4 elements along the crack line is 600, equivalent to a cohesive element size of 42.5 µm. The measured DIC displacements at the nodal locations are the averages of the displacements on the left and right sides of the crack (mirror ROIs about the crack, shown in Fig. 18). Figure 24 shows the Q4 mesh with zoom-in detail. In this study, nodes that are within a horizontal distance of 0.2(H – a_0) of the crack path and within the vertical range from the crack tip to the top of the specimen are used. Within this region, there are enough measurements/nodes that noise can be averaged out. Exploring the feasible and optimal region of interest for inverse analysis is beyond the scope of the current study.
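The node-selection rule can be written as a simple filter. This sketch assumes a coordinate system with x measured horizontally from the crack path and y measured upward from the bottom of the beam, which differs from the image convention used for the plots; the function name, the notch length, the crack tip height and the sample nodes are hypothetical.

```python
def select_nodes(nodes, height, notch_length, y_tip):
    """Keep nodes within 0.2 * (H - a_0) of the crack path horizontally and
    between the crack tip and the top of the specimen vertically.

    nodes: list of (x, y) with x measured from the crack path and
    y measured upward from the bottom of the beam (assumed convention).
    """
    half_width = 0.2 * (height - notch_length)
    return [(x, y) for x, y in nodes
            if abs(x) <= half_width and y_tip <= y <= height]


# Hypothetical geometry in mm: H = 25.5, a_0 = 8.0, current crack tip at y = 10.0.
sample_nodes = [(-1.0, 12.0), (-4.0, 12.0), (-2.0, 9.0), (-3.0, 25.0)]
selected = select_nodes(sample_nodes, height=25.5, notch_length=8.0, y_tip=10.0)
```

With these numbers the selection band is 3.5 mm wide on the modeled side of the crack, so the second node is rejected for being too far from the crack path and the third for lying below the crack tip.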

Based on the numerical examples in [31], an initial guess for the CZM curve that lies below the exact CZM curve converges faster (in fewer optimization iterations) to the exact CZM. The simplest initial guess can be a linear softening CZM. From the DIC-measured COD profile, a small fraction of the maximum COD, e.g. any number between 0.1 and 0.5, can be used as the critical COD in the initial guess. Likewise, a small fraction of the estimated tensile strength can be used as the initial guess of the critical stress in the CZM. The initial guesses of the CZM curves for both materials used in the inverse analysis are shown in Fig. 25. Five control points and cubic Hermite interpolation are used to construct the CZM.
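A linear softening initial guess with five control points might be generated as in the sketch below. The function name, the default fractions, and the sample maximum COD and tensile strength are assumptions for illustration; the cubic Hermite interpolation between control points is not reproduced here, since collinear points already describe the straight softening line.

```python
def linear_softening_guess(max_cod_um, tensile_strength_mpa,
                           cod_fraction=0.3, stress_fraction=0.5, n_points=5):
    """Return n_points (separation, traction) control points on a straight
    softening line from (0, critical stress) to (critical COD, 0).

    The fractions scale the measured maximum COD and the estimated tensile
    strength down, so that the guess tends to lie below the true CZM curve.
    """
    critical_cod = cod_fraction * max_cod_um
    critical_stress = stress_fraction * tensile_strength_mpa
    points = []
    for i in range(n_points):
        t = i / (n_points - 1)
        points.append((t * critical_cod, (1.0 - t) * critical_stress))
    return points


# Hypothetical inputs: maximum measured COD of 100 um, tensile strength of 40 MPa.
initial_guess = linear_softening_guess(100.0, 40.0)
```

The five returned points would then serve as the starting values of the unknowns passed to the optimizer.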

For both materials, the inverse analyses are carried out for all displacement data measured at the different post-peak loadings (Fig. 20). This is because the cohesive fracture process zone can form completely only after the peak load has been reached. In fact, the inverse analyses at post-peak loading points that are still above about 70% of the peak load do not converge to a complete CZM, whereas those at post-peak points below about 70% of the peak load all converge to similar CZM curves. This observation indicates that the complete cohesive fracture process zone forms not right after the peak, but at a lower post-peak load. An example of the evolution of the objective function value is shown in Fig. 26. The three curves are for images of PWII-3 taken after peak loading. The convergence time is on the order of a few tens of minutes on an ordinary personal computer. The size of the FEM model affects the time used in each iteration, while the complexity of the CZM shape and the noise level of the displacement data affect the number of iterations to convergence.

Fig. 26 Evolution of the objective function during optimization for PWII-3 for the three post-peak images shown in Fig. 20

The computed CZMs (three inverse analysis results at different post-peak loadings for each material) for PW-II and Garolite G-10/FR4 are shown in Fig. 27. The computed CZMs of both PW-II and Garolite G-10/FR4 show softening behavior. More variation is seen in the computed CZMs of PW-II, while the computed CZMs of Garolite are more consistent. This may be because the PMMA SENB specimens are bonded manually with PW-II in our laboratory, in which case variations in bond strength may not be negligible. In contrast, the Garolite is a continuously manufactured piece, so the cohesive properties along the crack are expected to be more homogeneous. The computed PW-II CZMs have a critical cohesive strength of about 15 MPa, in comparison to the manufacturer-provided peel strength of 24 MPa. The maximum computed critical cohesive stress for Garolite G-10/FR4 is 250 MPa, which is close to the lower bound of its tensile strength, 262 MPa. Surprisingly, the critical separations of the CZMs do not vary as seen in [27]. This may be due to the enhanced smoothness of the DIC measurement obtained with the full-field correlation algorithm.

Verification of the Extracted CZMs

We verify the computed CZMs in two ways: (1) by examining the fracture energies, and (2) by comparing FEM simulations that use the computed CZMs with the experiments. The fracture energy of the DIC-FEM computed CZMs (dashed lines in Fig. 27) can be calculated directly as the area under the traction-separation curve,

$$G_f = \int_0^{\Delta_c} \sigma(\Delta)\,\mathrm{d}\Delta \qquad (23)$$

where G_f is the fracture energy, σ(Δ) is the cohesive traction at separation Δ, and Δ_c is the critical separation. The fracture energy can also be estimated from the area under the experimental curves of load versus load-line displacement (two specimens for each material). The load-line displacement is the load-head (crosshead) displacement. The fracture energies computed from equation (23) using the extracted CZMs and from the experimental load versus load-line displacement curves are compared in Table 6, which demonstrates good agreement.
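The fracture energy of a discretely sampled CZM is the area under its traction-separation curve, which can be approximated with the trapezoidal rule as sketched below. The 15 MPa peak matches the order of the PW-II cohesive strength reported above, but the linear shape, the 100 µm critical separation and the function name are assumptions for illustration only.

```python
def fracture_energy(separation_um, traction_mpa):
    """Trapezoidal area under a sampled traction-separation curve.

    With separation in micrometers and traction in MPa, the result is in
    J/m^2, because 1 MPa * 1 um = 1e6 N/m^2 * 1e-6 m = 1 N/m.
    """
    total = 0.0
    for i in range(1, len(separation_um)):
        mean_traction = 0.5 * (traction_mpa[i] + traction_mpa[i - 1])
        total += mean_traction * (separation_um[i] - separation_um[i - 1])
    return total


# Hypothetical linear softening curve: 15 MPa peak, 100 um critical separation.
separation_um = [0.0, 25.0, 50.0, 75.0, 100.0]
traction_mpa = [15.0, 11.25, 7.5, 3.75, 0.0]

G_f = fracture_energy(separation_um, traction_mpa)
```

For this straight-line curve the trapezoidal rule is exact and gives half the product of peak traction and critical separation, 750 J/m².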

To use the computed CZMs in the direct FEM fracture simulation, they are averaged to obtain a simple abstracted curve for convenient use. For PW-II, the computed CZMs form a band and vaguely show a “kink” point; therefore, we use a bilinear softening curve to abstract the computed curves (Fig. 27). For Garolite G-10/FR4, we found that a power-law curve with power index 1.92 fits the computed CZMs well.
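The two abstracted curve shapes can be sketched as simple functions. The functional form assumed for the power law, traction = peak × (1 − separation / critical separation)^index, is a common choice but is not stated in the text; the kink coordinates and the critical separations below are hypothetical, while the index 1.92 and the peak values of 250 MPa and 15 MPa are taken from the results above.

```python
def power_law_traction(separation, peak, critical_separation, index=1.92):
    """Power-law softening (assumed form): peak * (1 - s / s_c) ** index."""
    if separation >= critical_separation:
        return 0.0
    return peak * (1.0 - separation / critical_separation) ** index


def bilinear_traction(separation, peak, kink_separation, kink_traction,
                      critical_separation):
    """Bilinear softening: two straight segments joined at the kink point."""
    if separation <= kink_separation:
        return peak + (kink_traction - peak) * separation / kink_separation
    if separation < critical_separation:
        return (kink_traction * (critical_separation - separation)
                / (critical_separation - kink_separation))
    return 0.0


# Garolite-like curve: 250 MPa peak, hypothetical 50 um critical separation.
gl_mid = power_law_traction(25.0, peak=250.0, critical_separation=50.0)

# PW-II-like curve: 15 MPa peak, hypothetical kink at (20 um, 5 MPa),
# hypothetical 100 um critical separation.
pw_mid = bilinear_traction(60.0, peak=15.0, kink_separation=20.0,
                           kink_traction=5.0, critical_separation=100.0)
```

Either function can be sampled at the cohesive element openings to supply tractions to a forward fracture simulation.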

The extracted CZMs for both materials are then used as the input to the FEM model, of which the system of equations is described in equation (13), to compute the global response, i.e. P versus CMOD curve. The FEM simulated P versus CMOD curves are compared with experimental results and are shown in Fig. 28.

Notice that globally the simulated results have good agreement with experiments both in elastic range and the softening range. The agreement in the initial slope of the P versus CMOD curves illustrates the accurate measurement of the Young’s modulus. Moreover, the good fit around the peak load and for the softening part of P versus CMOD may imply that the shapes of the computed CZMs are accurately estimated.

Conclusions

In this study, a hybrid FEMU-DIC inverse technique was used to extract the cohesive fracture properties of an adhesive, Devcon® Plastic Welder™ II (PW-II), and a plastic, Garolite G-10/FR4. Single edge-notched beam (SENB) specimens were fabricated for fracture testing. For PW-II, PMMA was used as substrate. The DIC technique used in this study features a full-field correlation algorithm in contrast to conventional sub-set correlation algorithm.

First, DIC was used to estimate the elastic properties of the bulk materials. The full-field correlation algorithm yielded a displacement field smooth enough to be reliably used to derive the strain field for simple load configurations, such as the uniaxial compression and bending specimens demonstrated in this paper. The strain field was used to estimate the Young’s modulus, E, and Poisson’s ratio, ν. The DIC-estimated E and ν from the compression and bending tests are consistent with each other and are in good agreement with published material properties.

For the inverse problem constructed to identify the cohesive properties, the derivative-free Nelder-Mead optimization method was adopted in the FEMU formulation, which enabled a flexible construction of the mode-I cohesive traction-separation relation. With the Nelder-Mead method, convexity near the solution is not required, which avoids the search for a close-enough initial guess needed by Newton-like solvers. This is particularly useful for nonlinear problems in elasticity such as the fracture problems demonstrated in this paper. The current study shows great potential in this active research field: constitutive damage properties can be quantitatively evaluated through continuous kinematic fields.

References

Barenblatt GI (1959) The formation of equilibrium cracks during brittle fracture. General ideas and hypotheses. Axially-symmetric cracks. J Appl Math Mech 23:622–636

Dugdale DS (1960) Yielding of steel sheets containing slits. J Mech Phys Solids 8:100–104

Hillerborg A, Modéer M, Petersson PE (1976) Analysis of crack formation and crack growth in concrete by means of fracture mechanics and finite elements. Cem Concr Res 6:773–781

Roesler J, Paulino GH, Park K, Gaedicke C (2007) Concrete fracture prediction using bilinear softening. Cem Concr Compos 29:300–12

Shim D-J, Paulino GH, Dodds RH Jr (2006) J resistance behavior in functionally graded materials using cohesive zone and modified boundary layer models. Int J Fract 139:91–117

Song SH, Paulino GH, Buttlar WG (2006) Simulation of crack propagation in asphalt concrete using an intrinsic cohesive zone model. ASCE J Eng Mech 132:1215–1223

Lin G, Meng XG, Cornec A, Schwalbe KH (1999) Effect of strength mis-match on mechanical performance of weld joints. Int J Fract 96:37–54

Gdoutos EE (2005) Fracture mechanics: an introduction. Springer, Dordrecht

Xu XP, Needleman A (1994) Numerical simulations of fast crack growth in brittle solids. J Mech Phys Solids 42:1397

Zhang Z, Paulino GH (2005) Cohesive zone modeling of dynamic failure in homogeneous and functionally graded materials. Int J Plast 21:1195–254

Camacho GT, Ortiz M (1996) Computational modeling of impact damage in brittle materials. Int J Solids Struct 33:2899–938

Ortiz M, Pandolfi A (1999) Finite-deformation irreversible cohesive elements for three-dimensional crack-propagation analysis. Int J Numer Methods Eng 44:1267–1282

Zhang Z, Paulino GH (2007) Wave propagation and dynamic analysis of smoothly graded heterogeneous continua using graded finite elements. Int J Solids Struct 44:3601–3626

Moes N, Belytschko T (2002) Extended finite element method for cohesive crack growth. Eng Fract Mech 69:813–33

Wells GN, Sluys LJ (2001) A new method for modeling cohesive cracks using finite elements. Int J Numer Methods Eng 50:2667–2682

Shah SP, Ouyang C, Swartz SE (1995) Fracture mechanics of concrete: applications of fracture mechanics to concrete, rock and other quasi-brittle materials. Wiley, New York

Volokh KY (2004) Comparison between cohesive zone models. Commun Numer Methods Eng 20:845–856

Song SH, Paulino GH, Buttlar WG (2008) Influence of the cohesive zone model shape parameter on asphalt concrete fracture behavior. In: Paulino GH, Pindera M-J, Dodds RH Jr et al (eds) Multiscale and functionally graded materials. AIP, Oahu Island, pp 730–735

van Mier JGM, van Vliet MRA (2002) Uniaxial tension test for the determination of fracture parameters of concrete: state of the art. Fracture of Concrete and Rock 69:235–247

Yang J, Fischer G (2006) Simulation of the tensile stress-strain behavior of strain hardening cementitious composites. In: Konsta-Gdoutos MS (ed) Measuring, monitoring and modeling concrete properties. Springer, Dordrecht, pp 25–31

Kwon SH, Zhao Z, Shah SP (2008) Effect of specimen size on fracture energy and softening curve of concrete: Part II. Inverse analysis and softening curve. Cem Concr Res 38:1061–9

van Mier JGM (1997) Fracture processes of concrete: assessment of material parameters for fracture models. CRC, Boca Raton

Elices M (2002) The cohesive zone model: advantages, limitations and challenges. Eng Fract Mech 69:137–163

Song SH, Paulino GH, Buttlar WG (2006) A bilinear cohesive zone model tailored for fracture of asphalt concrete considering viscoelastic bulk material. Eng Fract Mech 73:2829–48

Song SH, Paulino GH, Buttlar WG (2005) Cohesive zone simulation of mode I and mixed-mode crack propagation in asphalt concrete. Geo-Frontiers 2005 ASCE, Reston, VA, Austin, TX, U.S.A, pp 189–198

Alfano M, Furgiuele F, Leonardi A, Maletta C, Paulino GH (2007) Cohesive zone modeling of mode I fracture in adhesive bonded joints. Key Eng Mater 348–349:13–16

Tan H, Liu C, Huang Y, Geubelle PH (2005) The cohesive law for the particle/matrix interfaces in high explosives. J Mech Phys Solids 53:1892–917

Abanto-Bueno J, Lambros J (2005) Experimental determination of cohesive failure properties of a photodegradable copolymer. Exp Mech 45:144–52

Zabaras N, Morellas V, Schnur D (1989) Spatially regularized solution of inverse elasticity problems using the BEM. Commun Appl Numer Methods 5:547–553

Schnur DS, Zabaras N (1990) Finite element solution of two-dimensional inverse elastic problems using spatial smoothing. Int J Numer Methods Eng 30:57–75

Shen B, Stanciulescu I, Paulino GH (2008) Inverse computation of cohesive fracture properties from displacement fields. Submitted for journal publication

Dally JW, Riley WF (2005) Experimental stress analysis. College House Enterprises, Knoxville

Peters WH, Ranson WF (1982) Digital imaging techniques in experimental stress analysis. Opt Eng 21:427–31

Sutton MA, Wolters WJ, Peters WH, Ranson WF, McNeill SR (1983) Determination of displacements using an improved digital correlation method. Image Vis Comput 1:133–139

Chu TC, Ranson WF, Sutton MA, Peters WH (1985) Applications of digital-image-correlation techniques to experimental mechanics. Exp Mech 25:232–44

Bruck HA, McNeill SR, Sutton MA, Peters WH III (1989) Digital image correlation using Newton-Raphson method of partial differential correction. Exp Mech 29:261–267

Bonnet M, Constantinescu A (2005) Inverse problems in elasticity. Inverse Probl 21:1–50

Avril S, Bonnet M, Bretelle A-S, Grediac M, Hild F, Ienny P, Latourte F, Lemosse D, Pagano S, Pagnacco E, Pierron F (2008) Overview of identification methods of mechanical parameters based on full-field measurements. Exp Mech 48:381–402

Abanto-Bueno J, Lambros J (2002) Investigation of crack growth in functionally graded materials using digital image correlation. Eng Fract Mech 69:1695–1711

Choi S, Shah SP (1997) Measurement of deformations on concrete subjected to compression using image correlation. Exp Mech 37:307–313

Choi S, Shah SP (1998) Fracture mechanism in cement-based materials subjected to compression. ASCE J Eng Mech 124:94–102

Corr D, Accardi M, Graham-Brady L, Shah SP (2007) Digital image correlation analysis of interfacial debonding properties and fracture behavior in concrete. Eng Fract Mech 74:109–21

Zitova B, Flusser J (2003) Image registration methods: a survey. Image Vis Comput 21:977–1000

Lu H, Cary PD (2000) Deformation measurements by digital image correlation: implementation of a second-order displacement gradient. Exp Mech 40:393–400

Tong W (2005) An evaluation of digital image correlation criteria for strain mapping applications. Strain 41:167–75

Pan B, Qian K, Xie H, Asundi A (2009) Two-dimensional digital image correlation for in-plane displacement and strain measurement: a review. Meas Sci Technol 20:062001 (17 pp.)

Pan B, Xie H, Guo Z, Hua T (2007) Full-field strain measurement using a two-dimensional Savitzky-Golay digital differentiator in digital image correlation. Opt Eng 46:033601

Lecompte D, Sol H, Vantomme J, Habraken A (2006) Analysis of speckle patterns for deformation measurements by digital image correlation. In: Proceedings of SPIE, vol 6341. International Society for Optical Engineering, Bellingham, WA, United States, Nimes, France, pp 63410E1-6

Pan B, Xie H, Wang Z, Qian K, Wang Z (2008) Study on subset size selection in digital image correlation for speckle patterns. Opt Express 16:7037–7048

Lecompte D, Smits A, Bossuyt S, Sol H, Vantomme J, Van Hemelrijck D, Habraken AM (2006) Quality assessment of speckle patterns for digital image correlation. Opt Lasers Eng 44:1132–1145

Sorzano COS, Thevenaz P, Unser M (2005) Elastic registration of biological images using vector-spline regularization. IEEE Trans Biomed Eng 52:652–663

Cheng P, Sutton MA, Schreier HW, McNeill SR (2002) Full-field speckle pattern image correlation with B-spline deformation function. Exp Mech 42:344–352

Shen B (2009) Functionally graded fiber-reinforced cementitious composites—manufacturing and extraction of cohesive fracture properties using finite element and digital image correlation, Ph.D. thesis. University of Illinois at Urbana-Champaign, United States

Genovese K, Lamberti L, Pappalettere C (2005) Improved global-local simulated annealing formulation for solving non-smooth engineering optimization problems. Int J Solids Struct 42:203–37

Molimard J, Le Riche R, Vautrin A, Lee JR (2005) Identification of the four orthotropic plate stiffnesses using a single open-hole tensile test. Exp Mech 45:404–11

Grediac M, Pierron F, Avril S, Toussaint E (2006) The virtual fields method for extracting constitutive parameters from full-field measurements: a review. Strain 42:233–253

Geymonat G, Pagano S (2003) Identification of mechanical properties by displacement field measurement: a variational approach. Meccanica 38:535–545

Roux S, Hild F (2008) Digital image mechanical identification (DIMI). Exp Mech 48:495–508

Avril S, Pierron F (2007) General framework for the identification of constitutive parameters from full-field measurements in linear elasticity. Int J Solids Struct 44:4978–5002

Bezerra LM, Saigal S (1993) A boundary element formulation for the inverse elastostatics problem (IESP) of flaw detection. Int J Numer Methods Eng 36:2189–202

Schnur DS, Zabaras N (1992) An inverse method for determining elastic material properties and a material interface. Int J Numer Methods Eng 33:2039–57

Tarantola A (2005) Inverse problem theory and methods for model parameter estimation. Society for Industrial and Applied Mathematics, Philadelphia

Nelder JA, Mead R (1965) Simplex method for function minimization. Comput J 7:308–313

Acknowledgement

The authors gratefully acknowledge the support from the National Science Foundation under the Partnership for Advancing Technology in Housing Program (NSF-PATH Award #0333576). The technical support by Dr. Grzegorz Banas for the fracture testing in Newmark Civil Engineering Laboratory is greatly appreciated.

Shen, B., Paulino, G.H. Direct Extraction of Cohesive Fracture Properties from Digital Image Correlation: A Hybrid Inverse Technique. Exp Mech 51, 143–163 (2011). https://doi.org/10.1007/s11340-010-9342-6

DOI: https://doi.org/10.1007/s11340-010-9342-6