Abstract

Objectives

Even in the presence of clinical signs such as pain, swelling, discoloration, and loss of function, determining the exact size or location of acute apical lesions is challenging. Chronic apical periodontitis, which is usually asymptomatic, is even harder to detect. In such circumstances, dental radiography is especially beneficial. However, radiographs are not sufficient by themselves and require interpretation by a well-trained dental specialist, and even specialists can misdiagnose, which may lead to incorrect treatment. This study aimed to introduce an automated methodology for detecting dental apical lesions by assessing changes in hard tissue structures. The system consists of modules for jaw separation, tooth segmentation, root localization, and lesion detection.

Methods

Panoramic radiographs are used to support diagnosis. Unlike the column-sum methodology used in previous studies, the upper and lower jaws are separated using discrete wavelet transformation combined with polynomial regression, which yields a better jaw separation curve. Angular radial scanning is then used to segment the teeth and locate the tooth roots. In the last step, region growing is performed around each detected root to detect possible lesions surrounding the root apices.

Results

Results on the test samples indicate that these methods, combined with the proposed threshold selection, effectively discriminate lesions from anatomic structures, which is the main concern of this study.

Conclusions

The findings suggest that the proposed methodology can serve as an efficient assistant in the examination of radiographs.

Introduction

Root canal systems harbor multiple irritants after pulp necrosis. These irritants spread into the adjacent tissues and bone through the root apices, triggering defensive reactions that usually initiate apical pathologies. Unfortunately, the host's attempts to eradicate the infection cause resorption of the adjacent bone, and this bone loss appears as radiolucent areas around the root apices. This radiographic appearance is key to diagnosis, especially in chronic apical periodontitis, which usually develops without symptoms and can remain silent for a long time. Periodic radiographic examination is therefore crucial for diagnosis and appropriate treatment. However, radiographs are not sufficient by themselves and must be interpreted by a dentist with specialized training. Even then, the evaluation may be unreliable because of the human factor, and misinterpretation can lead to a wrong diagnosis and mistreatment that directly affect the patient's quality of life. On the other hand, with the increasing use of digital X-ray imaging, dental radiographs are now electronically accessible, which facilitates archiving of patients' dental records and makes computer-aided analysis possible.

In oral radiology, computer-aided detection systems mainly focus on tasks such as segmentation of oral structures and identification of individuals from dental radiographs. Lin et al. [1] focused on tooth segmentation, combining adaptive power law transformation, local singularity analysis, connected component analysis, and snake boundary tracking into a scheme for segmenting teeth in periapical radiographs. With a similar aim, Rad et al. [2] detected a region of interest for each tooth and extracted distinguishing features to determine the rates of density accumulation, which gave them specific features of oral structures for segmentation. Taking a different direction, Lin et al. [3] focused on human identification using teeth and dental works. They used the Hausdorff distance to compare the contours of teeth from different radiographs and presented an alignment-invariant frequency feature to compensate for errors caused by imperfect alignment of teeth. Similarly, Flint et al. [4] proposed a registration algorithm that corrects variations in projection geometry between radiographs to be compared for human identification. They proposed a heuristic threshold for subtraction analysis of radiographs and stated that this threshold would vary with the anatomical region of the mouth examined. Nassar et al. [5] designed a neural network-based system for matching radiographs. For feature extraction, they used nonlinear filters that mapped the region of interest of each tooth to a feature set, which was treated as a feature image of the corresponding tooth and formed the outputs of the network. These outputs were fed into a Bayesian classifier for matching with test samples, and promising experimental results were reported.

The superior intensity discrimination capability of computers has made tasks such as automated lesion detection practicable. Li et al. [6] proposed a semiautomatic lesion detection technique that uses a level set method to segment the image into three meaningful pathological regions: a normal region, a potentially abnormal region, and an abnormal/background region. An analysis scheme was then applied to evaluate the extracted regions. In another study, Lin et al. [7] used segmentation by a variational level set method to detect possible lesions in a radiograph. They initialized the level set with three contours obtained by the thresholding method of Otsu [8] to segment the area into three classes: normal areas, possible lesions, and serious lesions. Sogur et al. [9] also studied lesion detection, focusing on qualitative and quantitative analyses of bone density and architecture. They calculated the fractal dimension (FD) of dental structures using a box-counting method [10] and stated that the FD can be used as an indicator for early detection of periapical lesions.

In this study, we aimed to introduce a system for analyzing and locating lesions in panoramic dental radiographs. The main functions of the proposed system are jaw separation, tooth segmentation, root localization, and detection of potential periapical lesions. Unlike the jaw separation process in previous studies, horizontal details extracted by a discrete wavelet transformation are fed into a polynomial regression as input observations to determine a jaw separation curve, C, that remains consistent even in cases with absent teeth. Tooth segmentation and root localization are achieved using an angular radial scanning technique that operates on a group of lines drawn from C to the end of each tooth with varying angles. After tooth segmentation and root localization, the pixels surrounding the apices of each root are examined using a region-growing technique for the existence of a potential lesion.

The remainder of the paper is organized as follows: details of the image processing and machine learning methodologies are presented in the "Materials and methods" section; step-by-step results of the proposed methodology are given in the "Results" section; and critical comments are provided in the "Discussion" section.

Materials and methods

Wavelet transformation

Wavelet transformation is a useful technique for extracting the spatial and frequency characteristics of an input signal, t. It represents t using small, localized functions called “wavelets”, rather than the sinusoids of varying frequency used in the Fourier transformation. The method employs two functions: a scaling function and a wavelet function. When applied to the pixel intensities of an image I, combining these functions along rows and columns provides a multi-resolution analysis of the input, with details in the horizontal, vertical, and diagonal directions.

When wavelets are sampled discretely rather than continuously, the methodology is termed the discrete wavelet transformation (DWT), which is more practical for images. Many types of wavelet functions, such as the Haar wavelet, have been proposed [11]. In this study, the Haar wavelet and scaling coefficients are used.
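To make the sub-band terminology concrete, the following is a minimal NumPy sketch of one level of the 2D Haar DWT, written out by hand. It is not the authors' code. The function name `haar_dwt2` and the sign convention of the detail bands are our own choices; libraries such as PyWavelets may differ by a sign. The split into an approximation and horizontal, vertical, and diagonal details follows the standard orthonormal Haar construction.

```python
import numpy as np


def haar_dwt2(img):
    """One level of the orthonormal 2D Haar DWT.

    Returns (approx, (horizontal, vertical, diagonal)). An odd trailing
    row or column is dropped so the image splits into 2 x 2 blocks.
    """
    img = np.asarray(img, dtype=float)
    h, w = img.shape[0] // 2 * 2, img.shape[1] // 2 * 2
    img = img[:h, :w]
    a = img[0::2, 0::2]  # top-left pixel of each 2 x 2 block
    b = img[0::2, 1::2]  # top-right
    c = img[1::2, 0::2]  # bottom-left
    d = img[1::2, 1::2]  # bottom-right
    approx = (a + b + c + d) / 2      # low-pass along both axes
    horizontal = (a + b - c - d) / 2  # top row minus bottom row: horizontal edges
    vertical = (a - b + c - d) / 2    # left column minus right column: vertical edges
    diagonal = (a - b - c + d) / 2    # remaining diagonal component
    return approx, (horizontal, vertical, diagonal)


# A single horizontal edge (dark top row, bright below) appears only
# in the horizontal-detail sub-band.
img = np.array([[0, 0, 0, 0],
                [10, 10, 10, 10],
                [10, 10, 10, 10],
                [10, 10, 10, 10]], dtype=float)
approx, (horizontal, vertical, diagonal) = haar_dwt2(img)
```

Because the transform is orthonormal, the total energy of the four sub-bands equals that of the input. The tooth tips along the occlusal gap are dominated by horizontal edges, which is why the horizontal band is the useful one for jaw separation.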

Polynomial regression

Polynomial regression is a curve-fitting methodology that can predict the output \(t_{N+1}\) of an input \(x_{N+1}\) from previous outputs \(T = \{t_1, t_2, \ldots, t_N\}\) and the corresponding inputs \(X = \{x_1, x_2, \ldots, x_N\}\). This is achieved by estimating the coefficients of the polynomial, typically by least squares.

In this study, polynomial curve fitting is used to predict points on the jaw separation curve. After DWT is applied, regions may appear where no horizontal details (tooth tips) are present. In such areas, the traditional column-sum methodology would be insufficient to detect a gap valley between the jaws. A polynomial of order 2 or 3, however, is sufficient to predict the shape of the jaws, and accordingly the jaw separation curve, where sample points are lacking.
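A minimal sketch of the gap-filling idea follows, assuming NumPy's least-squares `np.polyfit`. The sample points are synthetic. They are generated from the second-order curve reported for Fig. 4, with a hypothetical stretch of columns that has no horizontal details.

```python
import numpy as np


def fit_separation_curve(xs, ys, order=2):
    """Least-squares polynomial fit through the available sample points.

    Returns an np.poly1d, so the curve can be evaluated anywhere,
    including columns where no horizontal details were found.
    """
    coeffs = np.polyfit(xs, ys, order)  # highest power first
    return np.poly1d(coeffs)


# Hypothetical sample points: columns 0-200 and 400-600 have details,
# columns 201-399 (e.g. missing teeth) have none.
xs = np.r_[np.arange(0, 201, 10), np.arange(400, 601, 10)].astype(float)
ys = -0.001 * xs**2 + 0.763 * xs + 64.377
curve = fit_separation_curve(xs, ys)
gap_estimate = curve(300.0)  # predicted gap row inside the empty stretch
```

The same callable also extends C beyond the first and last detail, because it can be evaluated at any column.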

Region growing

Region growing is a widely used image segmentation algorithm that clusters pixels into meaningful areas. In our case, these are lesions located around the apices of tooth roots. Apart from the input image, the method requires two fundamental parameters. The first is the “initial seed”, the pixel from which the expansion begins. In semiautomated systems, the coordinates of this point are entered manually, whereas a fully automated system, such as the one in this study, detects the seed autonomously. The second parameter is the “threshold” value, which limits the expansion: any new pixel with an intensity lower than the threshold is considered part of the detected region. Together with the initial seed, this parameter plays a vital role in the accuracy of the result.
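The following generic sketch illustrates threshold-based region growing. The function name `region_grow` and the choice of 4-connectivity are assumptions for illustration. The paper's own stopping rule, based on the region's mean intensity, is described in the lesion detection step.

```python
from collections import deque

import numpy as np


def region_grow(img, seed, threshold):
    """4-connected region growing from `seed` = (row, col).

    A pixel joins the region if its intensity is lower than `threshold`,
    i.e. it is darker, as radiolucent lesions are.
    """
    img = np.asarray(img)
    h, w = img.shape
    region = np.zeros((h, w), dtype=bool)
    if not img[seed] < threshold:
        return region                    # the seed itself does not qualify
    region[seed] = True
    queue = deque([seed])
    while queue:                         # breadth-first expansion
        r, c = queue.popleft()
        for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < h and 0 <= nc < w
                    and not region[nr, nc] and img[nr, nc] < threshold):
                region[nr, nc] = True
                queue.append((nr, nc))
    return region


img = np.array([[200, 200, 200, 200],
                [200, 50, 60, 200],
                [200, 200, 70, 200],
                [40, 200, 200, 200]])
mask = region_grow(img, (1, 1), 100)  # the dark pixel at (3, 0) is not connected
```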

Results

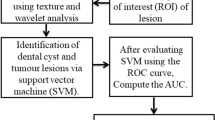

A general overview of the system is shown in Fig. 1, and step-by-step results of the proposed methodology are described below.

Image enhancement

Because the raw input data are noisy, noise reduction and image enhancement must first be applied to the input image. In image processing, noise can be defined simply as pixels whose intensities are inconsistent with those of their neighbors. Ideally, intensities vary smoothly between neighboring pixels rather than shifting abruptly. Dental radiographs are subject to noise caused by the different responses of oral structures to incoming X-rays, which in some cases yields a grainy image that is difficult to work with. To address this, each radiograph introduced to the system is smoothed using a 5 × 5 Gaussian mask with a standard deviation of σ = 1.
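The smoothing step can be sketched in NumPy as follows. This sketch assumes the mask is sampled from a continuous Gaussian and normalized to unit sum, and that image borders are handled by replicating edge pixels. The source does not specify either detail.

```python
import numpy as np


def gaussian_kernel(size=5, sigma=1.0):
    """Normalized size x size Gaussian mask (size must be odd)."""
    r = size // 2
    y, x = np.mgrid[-r:r + 1, -r:r + 1]
    k = np.exp(-(x**2 + y**2) / (2.0 * sigma**2))
    return k / k.sum()


def gaussian_smooth(img, size=5, sigma=1.0):
    """Convolve img with the Gaussian mask; border pixels are replicated."""
    img = np.asarray(img, dtype=float)
    k = gaussian_kernel(size, sigma)
    r = size // 2
    padded = np.pad(img, r, mode="edge")
    h, w = img.shape
    out = np.zeros_like(img)
    # The mask is symmetric, so correlation and convolution coincide.
    for dy in range(size):
        for dx in range(size):
            out += k[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out
```

An isolated bright pixel (a typical grain) is spread over its neighbors and its peak drops to a fraction of its original value, while uniform regions are left unchanged.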

Noise reduction is necessary but not sufficient. Structures in the mouth such as teeth, bones, gums, and gaps should be clearly identifiable so that they can be discriminated from one another. The accuracy of this discrimination depends strongly on the differences between the pixel intensities of these structures: the larger the difference, the easier it is to identify each structure. Therefore, in the next step, contrast enhancement is performed to increase these intensity differences, using top-hat and bottom-hat transformations on the input image I.

The top-hat transformation is the difference between I and its morphological “opening” and reveals the lighter regions of I. The bottom-hat transformation is the difference between the morphological “closing” of I and I itself and reveals the darker regions. Adding the top-hat result to I and subtracting the bottom-hat result (Eq. 1) yields a new image, \(I_C\), with much more contrast between the structures it contains:

\[ I_C = I + (I - I \circ S) - (I \bullet S - I) \qquad (1) \]

Here, \(\bullet\) and \(\circ\) denote the closing and opening operations, respectively, and S is the structuring element used. The results of the process can be seen in Fig. 2.
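The enhancement \(I_C = I + \text{top-hat}(I) - \text{bottom-hat}(I)\) can be sketched with a flat square structuring element, implementing grayscale erosion and dilation as sliding-window minima and maxima. The size of S is not given in the source, so it is left as a parameter here. The output is not clipped, so values may leave the 0–255 range and may need clipping before display.

```python
import numpy as np


def _window_reduce(img, size, reducer):
    """Apply `reducer` (np.min or np.max) over a flat size x size window."""
    r = size // 2
    padded = np.pad(img, r, mode="edge")
    h, w = img.shape
    windows = [padded[dy:dy + h, dx:dx + w]
               for dy in range(size) for dx in range(size)]
    return reducer(np.stack(windows), axis=0)


def opening(img, size):
    """Grayscale erosion followed by dilation with a flat square S."""
    eroded = _window_reduce(img, size, np.min)
    return _window_reduce(eroded, size, np.max)


def closing(img, size):
    """Grayscale dilation followed by erosion with a flat square S."""
    dilated = _window_reduce(img, size, np.max)
    return _window_reduce(dilated, size, np.min)


def enhance_contrast(img, size):
    """I + top-hat(I) - bottom-hat(I); `size` is the side of S (odd)."""
    img = np.asarray(img, dtype=float)
    top_hat = img - opening(img, size)     # bright details smaller than S
    bottom_hat = closing(img, size) - img  # dark details smaller than S
    return img + top_hat - bottom_hat


bright = np.zeros((5, 5)); bright[2, 2] = 10.0
dark = np.full((5, 5), 10.0); dark[2, 2] = 0.0
brighter = enhance_contrast(bright, 3)  # small bright detail gets brighter
darker = enhance_contrast(dark, 3)      # small dark detail gets darker
```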

Jaw separation

Working on panoramic radiographs instead of bitewing images adds complexity, because the upper jaw must be separated from the lower jaw before further processing. Previous studies used the intensities of the pixels in each column of the input image to find regions where the pixel intensities fall below a pre-chosen threshold. Regions located close to one another are then grouped to form a “gap valley”, which is assumed to lie between the upper and lower teeth, and this valley is used to draw a rough curve separating the jaws. However, in cases of absent or partial teeth or implants, the shape of this curve can be severely distorted. To avoid this problem, the proposed system applies discrete wavelet transformation and uses the horizontal details (Fig. 3b) of the image to form a better jaw separation curve (C).

As shown in Fig. 3b, c, the thresholding method of Otsu [8] is applied to exclude weak details and obtain a more generalized form of C. After thresholding, the strongest details, around the brightest structures (especially implants), may remain, while the weaker details from relatively darker structures (teeth without implants) may vanish, leaving gaps between the horizontal details. The shape of C in these gaps must be predicted from the existing details, and polynomial regression provides an effective solution. As shown in Fig. 4, the existing horizontal details (red marks) are sufficient to form C as the second-order polynomial \(y(x) = -0.001x^2 + 0.763x + 64.377\).
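Otsu's method, used here to discard weak horizontal details, picks the histogram split that maximizes the between-class variance. A self-contained sketch follows. The bin count and the synthetic detail magnitudes are illustrative assumptions.

```python
import numpy as np


def otsu_threshold(values, bins=256):
    """Otsu's two-class threshold on a histogram of `values`.

    Returns a value t such that values above t form the strong class.
    """
    values = np.asarray(values, dtype=float).ravel()
    hist, edges = np.histogram(values, bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2
    p = hist / hist.sum()
    omega = np.cumsum(p)              # weight of the lower class per split
    mu = np.cumsum(p * centers)       # first moment of the lower class
    mu_t = mu[-1]                     # global mean
    with np.errstate(divide="ignore", invalid="ignore"):
        between = (mu_t * omega - mu) ** 2 / (omega * (1.0 - omega))
    between = np.nan_to_num(between, nan=0.0, posinf=0.0)
    k = int(np.argmax(between))       # lower class = bins 0..k
    return edges[k + 1]


# Hypothetical |horizontal detail| magnitudes: many weak, few strong.
weak = np.linspace(2, 8, 500)
strong = np.linspace(45, 75, 50)
t = otsu_threshold(np.r_[weak, strong])
```

The columns and rows of the details above `t` would then serve as the sample points fed to the polynomial fit.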

As shown in Fig. 4, the length of C depends strictly on the locations of the first and last horizontal details. If the curve does not span the full length of the gap between the jaws, it can easily be extended at both ends because y(x) is known. Once C is known, assistive upper and lower borders (blue curves) with the same curvature as C can be formed to determine a rough boundary for the ends of all teeth. These borders, \(C_{\text{upper}}\) and \(C_{\text{lower}}\), are positioned by shifting C vertically in both directions and finding the optimal shift at which the mean intensity of the pixels on \(C_{\text{upper}}\) or \(C_{\text{lower}}\) falls below a predetermined threshold. Alternatively, a ratio between the highest and lowest mean pixel intensities in each direction can serve as a dynamic threshold. For the test subjects, a ratio of 10–20 % was found to be suitable.
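One possible reading of the border search is sketched below. The source does not say how the search handles the dark gap at C itself. This sketch therefore assumes the shift must first pass through the teeth (mean intensity at or above the threshold) before the first shift with a mean below the threshold is returned. The synthetic image and threshold are hypothetical.

```python
import numpy as np


def find_border_shift(img, curve, xs, direction, threshold):
    """Vertical shift of C that gives a rough tooth-end border.

    direction = -1 shifts the curve upward (toward C_upper), +1 downward.
    Assumption: the search starts inside the dark gap, so it first waits
    until the mean intensity on the shifted curve reaches `threshold`
    (the curve is inside the teeth) and then returns the first shift at
    which the mean falls below `threshold` again. None if never found.
    """
    img = np.asarray(img, dtype=float)
    height = img.shape[0]
    xs = np.asarray(xs, dtype=int)
    base_rows = np.rint(curve(xs)).astype(int)
    entered_teeth = False
    for shift in range(1, height):
        rows = base_rows + direction * shift
        inside = (rows >= 0) & (rows < height)
        if not inside.any():
            break
        mean = img[rows[inside], xs[inside]].mean()
        if mean >= threshold:
            entered_teeth = True
        elif entered_teeth:
            return shift
    return None


img = np.full((100, 40), 60.0)  # bone/background
img[20:45, :] = 200.0           # upper teeth
img[56:81, :] = 200.0           # lower teeth
img[45:56, :] = 20.0            # gap between the jaws
curve = np.poly1d([50.0])       # a flat C at row 50
xs = np.arange(40)
up = find_border_shift(img, curve, xs, -1, 100.0)
down = find_border_shift(img, curve, xs, +1, 100.0)
```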

Tooth segmentation and root apex localization

The next step is segmentation of the teeth and localization of the root apices. Although the horizontal details extracted by DWT are useful for separating the jaws, the vertical details are not sufficient for tooth segmentation. Instead, an angular radial scanning method is employed. The slope of the curve at each point on C is determined, and perpendicular lines are drawn from these points to the upper and lower border curves (\(C_{\text{upper}}\) and \(C_{\text{lower}}\)). The intensities of the pixels on each line then form signals for interpretation. However, because teeth are not always oriented perpendicular to C, perpendicular lines are generally not effective for finding optimal gaps between teeth (tooth segmentation) or channels passing through teeth (root apex localization). To avoid this issue, rather than a single perpendicular line, 2α + 1 lines with angles in the range 90° ± α° are drawn from each point on C to the border curves. To keep computation manageable, α is set to 5.

For tooth segmentation, the line with the lowest mean pixel intensity among the 2α + 1 lines at each point on C is chosen as a candidate segmentation line passing between adjacent teeth. Conversely, at the same position, the line with the highest mean pixel intensity is chosen as a candidate root line running along the tooth to the root apex. Figure 5a, b depict the mean pixel intensity signals used for tooth segmentation and root apex localization, respectively, in the upper jaw of the radiograph shown in Fig. 4. The minima in Fig. 5a correspond to gaps between teeth and indicate a separation line starting from that point on C. The original pixel intensities (range 0–255) are used for this task. In contrast, the maxima in Fig. 5b correspond to lines containing the pixels with the highest intensity values. These lines are called root detection lines and are assumed to pass through the root apices because of their high mean intensity. Heuristic trials showed that thresholded values (0 or 1) from the binary (black–white) version of the input image (I) give better root apex localization results than the original pixel intensities.
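The fan of 2α + 1 lines can be sketched as follows. The sketch assumes nearest-pixel sampling at unit steps along each line and 1° spacing between lines. The function name `radial_scan` is hypothetical. The candidate segmentation line is the one with the lowest mean, and the candidate root line is the one with the highest.

```python
import numpy as np


def radial_scan(img, x0, y0, slope, length, alpha=5, upward=True):
    """Mean intensities along 2*alpha + 1 lines fanning out from (x0, y0).

    The lines make angles of (90 + k) degrees, k = -alpha..alpha, with the
    tangent of C, whose slope dy/dx at (x0, y0) is `slope` in image
    coordinates (rows grow downward). Each line is sampled at `length`
    unit steps. Returns (k values, mean intensity per line).
    """
    img = np.asarray(img, dtype=float)
    height, width = img.shape
    tangent = np.arctan(slope)
    sign = -1.0 if upward else 1.0  # -1 walks toward smaller row indices
    t = np.arange(1, length + 1)
    offsets = np.arange(-alpha, alpha + 1)
    means = np.full(len(offsets), np.nan)
    for j, k in enumerate(offsets):
        theta = tangent + np.deg2rad(90 + k)
        cols = np.rint(x0 + sign * t * np.cos(theta)).astype(int)
        rows = np.rint(y0 + sign * t * np.sin(theta)).astype(int)
        inside = (cols >= 0) & (cols < width) & (rows >= 0) & (rows < height)
        if inside.any():
            means[j] = img[rows[inside], cols[inside]].mean()
    return offsets, means


# Synthetic check: a bright vertical "root" at column 10 above (10, 45).
img = np.zeros((50, 21))
img[:, 10] = 255.0
offsets, means = radial_scan(img, x0=10, y0=45, slope=0.0, length=40)
root_offset = offsets[np.nanargmax(means)]  # candidate root line
gap_offset = offsets[np.nanargmin(means)]   # candidate segmentation line
```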

Figure 5a, b raise the question of which minima and maxima should be taken as indicators of segmentation and root detection lines. For each minimum (maximum), the total vertical shift relative to the previous and next maxima (minima) can answer this question. The calculated shifts are sorted in descending order. Then either the top K shifts or all shifts above a predetermined threshold are chosen to locate the segmentation or root detection lines. The former approach is suitable when no teeth are absent from the radiograph, whereas the latter also works on radiographs with absent teeth. For the test samples used in this study, the latter approach is preferred.
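The shift-based ranking of extrema can be sketched in plain Python. This is a hypothetical helper. For each local minimum, it measures the drop from the neighboring maximum on the left plus the rise to the neighboring maximum on the right. The usage lines show both the threshold rule and the top-K rule.

```python
def minima_shifts(signal):
    """(index, total vertical shift) for every local minimum of `signal`.

    The shift is the drop from the neighbouring local maximum on the left
    plus the rise to the neighbouring local maximum on the right. For root
    detection lines, pass the negated signal so maxima become minima.
    """
    s = [float(v) for v in signal]
    result = []
    for i in range(1, len(s) - 1):
        if s[i] < s[i - 1] and s[i] <= s[i + 1]:
            left = i
            while left > 0 and s[left - 1] >= s[left]:
                left -= 1                 # climb to the maximum on the left
            right = i
            while right < len(s) - 1 and s[right + 1] >= s[right]:
                right += 1                # climb to the maximum on the right
            result.append((i, (s[left] - s[i]) + (s[right] - s[i])))
    return result


shifts = minima_shifts([5, 1, 5, 4, 5, 0, 6])
above_threshold = [i for i, d in shifts if d >= 5]                # latter approach
top_k = [i for i, _ in sorted(shifts, key=lambda p: -p[1])[:2]]  # former, K = 2
```

The shallow dip at index 3 is rejected by both rules, which is the intended behavior for small intensity fluctuations inside a tooth.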

Once the locations of the tooth segmentation lines are determined, the teeth can be segmented as shown in Fig. 6. It should be noted that the accuracy of this process is strictly dependent on C, α, and tooth orientations.

Unlike tooth segmentation, root localization requires further evaluation after the initial coordinates of the root detection lines are determined. Because a binarized (black and white) version of the input image is used for this task, the first continuous run of black pixels along a root detection line indicates the root apex. The spatial coordinates of this point can be determined from its distance to C and the angle of the root detection line. As with tooth segmentation, the accuracy of this process depends on C, α, and tooth orientations. Figure 7 depicts the results of the root apex localization step.
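A sketch of the apex search on a binarized profile follows, together with the polar-to-Cartesian conversion from the distance to C and the line angle. Skipping leading black pixels (the dark gap around C) is an assumption added here, as is the helper name `root_apex`.

```python
import math

import numpy as np


def root_apex(binary_profile, x0, y0, theta):
    """Root apex along a root detection line in a binarized image.

    binary_profile[k] is the binary value (1 = white, 0 = black) of the
    pixel at distance k + 1 from the point (x0, y0) on C, walking in
    direction theta (radians, image coordinates). Assumption: leading
    black pixels (the gap at C) are skipped, and the apex is the start of
    the first black run after the tooth. Returns (distance, (x, y)) or None.
    """
    p = np.asarray(binary_profile).astype(bool)
    white = np.flatnonzero(p)
    if white.size == 0:
        return None                             # line never meets a tooth
    first_tooth = white[0]
    black = np.flatnonzero(~p[first_tooth:])
    if black.size == 0:
        return None                             # line never leaves the tooth
    distance = int(first_tooth + black[0]) + 1  # index -> distance from C
    x = x0 + distance * math.cos(theta)
    y = y0 + distance * math.sin(theta)
    return distance, (round(x), round(y))


# Straight-up line from (10, 50): gap, tooth, then black at distance 7.
apex = root_apex([0, 0, 1, 1, 1, 1, 0, 0, 1], 10, 50, -math.pi / 2)
```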

Lesion detection

Once the root apices are localized, the next step is to search around each apex for potential lesions, which are darker than the gums. Region growing is used for this task. Before applying it, however, two questions must be answered: how to select the seed point for region growing, and how to decide whether a detected darker area is a lesion.

The intensities of the pixels on a root detection line provide useful information for detecting the seed point of the region-growing algorithm. If the first minimum after the root apex is below threshold \(t_1\) and above threshold \(t_2\), the spatial coordinates of this minimum are used as the seed point of the region-growing process. To determine \(t_1\) and \(t_2\) automatically, the intensities of the pixels in a 10 × 10 area taken below each root apex are fed into a three-class within-class variance minimization problem, defined in Eq. 2:

\[ \sigma_w^2(t_1, t_2) = \sum_{i=1}^{3} \omega_i \, \sigma_i^2 \qquad (2) \]

All pixels taken from the square areas below the root apices are grouped into three classes: gum pixels, lesion pixels, and air gap pixels. Gum pixels are lighter than lesion pixels and can be separated from them by threshold \(t_1\). Similarly, lesion pixels are lighter than air gap pixels and can be separated from them by threshold \(t_2\). Here, \(\sigma_w^2\) is the total within-class variance, which is minimized by the optimal values of \(t_1\) and \(t_2\); \(\sigma_i^2\) is the variance of the pixels in the ith class; and \(\omega_i\) is the probability that a pixel belongs to the ith class.
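Because there are only 256 gray levels, the within-class variance \(\sum_i \omega_i \sigma_i^2\) of Eq. 2 can be minimized by brute force over all pairs \(t_2 < t_1\). The sketch below illustrates the idea and is not the authors' solver. It assumes 8-bit intensities and uses cumulative histogram sums so that each class weight, mean, and variance costs constant time. The pixel values form a hypothetical 10 × 10 patch.

```python
import numpy as np


def three_class_thresholds(pixels):
    """Exhaustive minimization of the three-class within-class variance.

    Classes for 8-bit intensities v: air gap (v <= t2), lesion
    (t2 < v <= t1) and gum (v > t1). Returns (t1, t2) with t2 < t1.
    """
    v = np.clip(np.asarray(pixels).ravel(), 0, 255).astype(int)
    p = np.bincount(v, minlength=256) / v.size      # gray-level probabilities
    levels = np.arange(256, dtype=float)
    # Prefix sums: c0 -> weight, c1 -> first moment, c2 -> second moment.
    c0 = np.concatenate(([0.0], np.cumsum(p)))
    c1 = np.concatenate(([0.0], np.cumsum(p * levels)))
    c2 = np.concatenate(([0.0], np.cumsum(p * levels**2)))

    def weighted_var(lo, hi):
        """omega_i * sigma_i^2 for the class of gray levels lo..hi-1."""
        w = c0[hi] - c0[lo]
        if w <= 0:
            return 0.0
        mean = (c1[hi] - c1[lo]) / w
        return (c2[hi] - c2[lo]) - w * mean**2

    best = (np.inf, 0, 0)
    for t2 in range(255):
        for t1 in range(t2 + 1, 255):
            sw = (weighted_var(0, t2 + 1)
                  + weighted_var(t2 + 1, t1 + 1)
                  + weighted_var(t1 + 1, 256))
            if sw < best[0]:
                best = (sw, t2, t1)
    return best[2], best[1]


# Hypothetical patch: air gap ~20, lesion ~90, gum ~160.
pixels = np.r_[np.full(30, 20), np.full(40, 90), np.full(30, 160)]
t1, t2 = three_class_thresholds(pixels)
```

The double loop visits about 32,000 threshold pairs, which is fast enough for a 10 × 10 patch per apex. A library routine for multi-level Otsu thresholding could be substituted.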

The sample intensity signal shown in Fig. 8 illustrates this selection process. The root apex is the 74th pixel on the root detection line, and the first minimum satisfying the threshold criteria is chosen as the seed point. This point has the spatial coordinates (611, 362) in the input radiograph.
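The seed rule can be sketched as a scan along the root detection line. The helper name `find_seed` and the toy profile are hypothetical.

```python
def find_seed(profile, apex_index, t1, t2):
    """First local minimum after the root apex with t2 < value < t1.

    `profile` holds the pixel intensities along a root detection line and
    `apex_index` is the apex position on it. Returns the index of the seed
    on the line, or None if no minimum satisfies the criteria.
    """
    s = [float(v) for v in profile]
    for i in range(apex_index + 1, len(s) - 1):
        is_minimum = s[i] <= s[i - 1] and s[i] <= s[i + 1]
        if is_minimum and t2 < s[i] < t1:
            return i
    return None


profile = [200, 200, 190, 150, 100, 120, 80, 130, 140]
seed_index = find_seed(profile, apex_index=1, t1=140, t2=90)
```

The returned index is a distance along the line. It would be converted to image coordinates from the line's origin on C and its angle, in the same way as the apex.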

After the coordinates of the seed point are detected, the region-growing algorithm is applied. At each iteration, the mean pixel intensity of the detected region is computed, and the growing process is terminated if this mean drops below \((t_1 + t_2)/2\). On termination, a potential lesion region is extracted along with its borders and size. However, this search may still detect false regions as lesions. Therefore, as the last step, each candidate region is checked for whether it extends along the root of the corresponding tooth. A candidate region is designated a lesion only if it extends along the root for at least TL/10 pixels from the root apex, where TL is the length of the tooth. TL can be roughly defined as the position of the root apex on the root detection line. Figure 9a depicts the output of this process, and Fig. 9b–d show results for some of the other test samples.
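The growth and acceptance rules can be sketched as follows. The source does not state which pixels may join the region, so this sketch assumes breadth-first growth over 4-connected neighbors darker than \(t_1\). The acceptance check counts how many consecutive root-line pixels, starting at the apex, lie inside the region. Both helpers are hypothetical.

```python
from collections import deque

import numpy as np


def grow_lesion(img, seed, t1, t2):
    """Region growing from seed (row, col) with a mean-based stop rule.

    Assumption: 4-connected neighbours darker than t1 are added one at a
    time (breadth-first); growth stops as soon as the region's mean
    intensity drops below (t1 + t2) / 2. Returns a set of (row, col).
    """
    img = np.asarray(img, dtype=float)
    h, w = img.shape
    stop_level = (t1 + t2) / 2
    region = {seed}
    total = img[seed]
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
            n = (r + dr, c + dc)
            if n in region or not (0 <= n[0] < h and 0 <= n[1] < w):
                continue
            if img[n] < t1:
                region.add(n)
                total += img[n]
                if total / len(region) < stop_level:
                    return region      # mean fell below the stop level
                queue.append(n)
    return region


def is_lesion(region, line_from_apex, tooth_length):
    """Accept if the region covers >= tooth_length / 10 consecutive
    root-line pixels, starting at the apex and moving toward the crown."""
    covered = 0
    for p in line_from_apex:
        if p not in region:
            break
        covered += 1
    return covered >= tooth_length / 10


img = np.full((5, 5), 200.0)      # gum
img[1:4, 1:4] = 100.0             # hypothetical radiolucent area
region = grow_lesion(img, (2, 2), t1=140, t2=60)
root_line = [(2, 2), (1, 2), (0, 2)]  # apex first, then toward the crown
```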

The proposed methodology was applied to nine test samples, the details of which are shown in Table 1.

For all teeth in each sample, thresholds \(t_1\) and \(t_2\) were determined, and regions with a mean pixel intensity between these thresholds were considered to be lesions (positive output). The outputs of the system were evaluated, and the true-positive (TP), true-negative (TN), false-positive (FP), and false-negative (FN) values shown in Table 2 were obtained.

The specificity and sensitivity values obtained for the test subjects were 89 and 70 %, respectively.
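For reference, the reported rates follow the standard definitions in terms of the counts in Table 2:

```latex
\text{Sensitivity} = \frac{TP}{TP + FN}, \qquad
\text{Specificity} = \frac{TN}{TN + FP}
```

A sensitivity of 70 % thus means that 70 % of the actual lesions were flagged by the system. A specificity of 89 % means that 89 % of the lesion-free cases were correctly reported as negative.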

Discussion

In endodontics in particular, diagnosis and treatment monitoring require data obtained from oral radiographs. Digital radiographs are now used for this task instead of conventional radiography, and panoramic dental radiographs are the images most preferred by dentists. However, this type of radiography projects three-dimensional oral structures onto two-dimensional images, which makes osseous changes difficult to detect by eye. Combined with complex anatomy, this loss of information may lead to misdiagnosis and incorrect treatment that can directly affect the patient's quality of life. In this study, we aimed to reduce this risk by applying computer vision techniques to the analysis of two-dimensional panoramic radiographs. Because the proposed methodology performs tooth segmentation and root apex localization, it can also serve as a preprocessing tool for matching dental radiographs in identification tasks.

Previous studies used the column-sum approach to locate the gap valley between the upper and lower jaws, but this technique is vulnerable to absent teeth. In our trials, discrete wavelet transformation combined with polynomial regression provided consistent jaw separation curves that were robust to implants and absent teeth. Forming the correct curve with a polynomial is crucial, because each point on the curve supplies the reference angle for angular radial scanning. Once the curve is available, radial scanning searches for gaps between teeth (tooth segmentation) or the lightest lines passing through teeth (root apex localization). After the root apices are detected, region growing from the apex positions detects possible lesions together with their sizes, shapes, and expansion directions. The trials also showed a tradeoff between segmentation accuracy and runtime that depends on factors such as α and the number of sample points on C. In addition, radiographs of adequate contrast and quality are important, and abnormal tooth alignment can degrade performance.

The results suggest that the proposed methodology can assist in evaluating radiographs. However, identifying complex anatomic structures and distinguishing abnormal formations in panoramic radiographs remain open problems. In future work, we aim to use probabilistic methods to improve the assessment of detected lesions.

References

Lin PL, Huang PY, Huang PW, Hsu HC, Chen CC. Teeth segmentation of dental periapical radiographs based on local singularity analysis. Comput Methods Programs Biomed. 2014;113:433–45.

Rad AE, Rahim MSM, Kumoi R, Norouzi A. Dental X-ray image segmentation and multiple feature extraction. In: 2nd world conference on innovation and computer sciences; 2012 May 10–14; Kusadasi, Turkey; 2012. pp 188–97.

Lin PL, Lai YH, Huang PW. Dental biometrics: human identification based on teeth and dental works in bitewing radiographs. Pattern Recogn. 2012;45:934–46.

Flint DJ, Dove SB, Brumit PC, White M, Senn DR. Computer-aided dental identification: an objective method for assessment of radiographic image similarity. J Forensic Sci. 2009;54:177–84.

Nassar DEM, Ammar HH. A neural network system for matching dental radiographs. Pattern Recogn. 2007;40:65–79.

Li S, Fevens T, Krzyzak A, Jin C, Li S. Semi-automatic computer aided lesion detection in dental X-rays using variational level set. Pattern Recogn. 2007;40:2861–73.

Lin PL, Huang PY, Huang PW. An automatic lesion detection method for dental X-ray images by segmentation using variational level set. In: International conference on machine learning and cybernetics; 2012 July 15–17; Shaanxi, China; 2012. pp 1821–5.

Otsu N. A threshold selection method from gray-level histograms. IEEE Trans Syst Man Cybern. 1979;9:62–6.

Sogur E, Baksi BG, Grondahl HG, Sen BH. Pixel intensity and fractal dimension of periapical lesions visually indiscernible in radiographs. J Endod. 2013;39:16–9.

White SC, Rudolph DJ. Alterations of the trabecular pattern of the jaws in patients with osteoporosis. Oral Surg Oral Med Oral Pathol Oral Radiol Endod. 1999;88:628–35.

Mallat S. A wavelet tour of signal processing. The sparse way. Third ed. Burlington: Academic Press; 2008.

Acknowledgments

This work was supported by the Scientific Research Projects Coordination Unit of Istanbul University under Grant BAP-37275.

Ethics declarations

Conflict of interest

Ramiz Gorkem Birdal, Ergun Gumus, Ahmet Sertbas, and Ilda Sinem Birdal declare that they have no conflict of interest.

Ethical statement

This article does not contain any studies with human or animal subjects performed by any of the authors.

About this article

Cite this article

Birdal, R.G., Gumus, E., Sertbas, A. et al. Automated lesion detection in panoramic dental radiographs. Oral Radiol 32, 111–118 (2016). https://doi.org/10.1007/s11282-015-0222-8

DOI: https://doi.org/10.1007/s11282-015-0222-8