Abstract

Bandwidth aggregation is a key research issue in integrating heterogeneous wireless networks, since it can substantially increase the throughput and reliability for enhancing streaming video quality. However, the burst loss in the unreliable wireless channels is a severely challenging problem which significantly degrades the effectiveness of bandwidth aggregation. Previous studies mainly address the critical problem by reactively increasing the forward error correction (FEC) redundancy. In this paper, we propose a loss tolerant bandwidth aggregation approach (LTBA), which proactively leverages the channel diversity in heterogeneous wireless networks to overcome the burst loss. First, we allocate the FEC packets according to the ‘loss-free’ bandwidth of each wireless network to the multihomed client. Second, we deliberately insert intervals between the FEC packets’ departures while still respecting the delay constraint. The proposed LTBA is able to reduce the consecutive packet loss under burst loss assumption. We carry out analysis to prove that the proposed LTBA outperforms the existing ‘back-to-back’ transmission schemes based on Gilbert loss model and continuous time Markov chain. We conduct the performance evaluation in Exata and emulation results show that LTBA outperforms the existing approaches in improving the video quality in terms of PSNR (Peak Signal-to-Noise Ratio).

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

During the past few years, we have witnessed the breakthrough of wireless access technologies with diverse transmission features and capabilities. For instance, cellular networks such as general packet radio service (GPRS) and universal mobile telecommunications system (UMTS) cover a wide area but support a low transmission rate while wireless local area network (WLAN) covers a relatively small area but supports a high transmission rate. In addition, worldwide interoperability for microwave access (WiMAX), high speed downlink packet access (HSDPA), CDMA2000 1X evolution data optimized (EV-DO), etc. are widely deployed for various application services over wireless environments. Expectedly, more wireless networks will emerge to provide users with better networking services and these networks will coexist in the future as they do now. The heterogeneity in different access technologies, architectures and specifications coins the idea of a “Heterogeneous Wireless Network” [1].

With the rapid development of wireless infrastructures, mobile video streaming (e.g., Youtube [2] and Hulu [3]) has become one of the most popular applications during the past few years. According to the Cisco Visual Networking Index [4], video streaming accounts for 57 % of mobile data usage in 2012 and will reach 69 % by the year 2017. Global mobile data is expected to increase 13-fold between 2012 and 2017.

Sometimes, a single wireless network has difficulties in supporting the high bandwidth-demanding video applications [5, 6]. On one hand, the WLAN systems can provide relatively higher available bandwidth while they are not robust to user mobility. On the other hand, the cellular networks perform better in sustaining user mobility but their bandwidth will be limited as the wireless spectrum is shared by multiple users. To deliver high-quality video services, it becomes vital to consider aggregating the bandwidth of heterogeneous wireless networks. To aggregate the bandwidth of different access networks, the mobile clients ought to be equipped with multihoming capability, i.e., they have multiple radio interfaces (3G, WLAN, etc.). Special mobile devices have already emerged to provide aggregated bandwidth capacity to mobile business or vehicular users through multiple wireless broadband subscriptions [7]. The IETF MONAMI6 working group, which is focused on enhancing MIPv6 with multihoming support, has identified the benefits that multihomed mobile hosts offer to both end users and network operators [8]. Therefore, the bandwidth aggregation in heterogeneous wireless networks has attracted considerable attentions [6, 9, 10] recently, especially for improving the quality of streaming video.

However, the burst loss in the unreliable wireless environments is a severely challenging problem which can result in heavy packet loss and significantly degrade the user-perceived video quality. Existing solutions [11, 12] mainly address the critical problem by reactively increasing the forward error correction (FEC) redundancy. Nonetheless, the increased redundancy will in turn impose a higher load on networks, which is often not tolerable in the bandwidth-limited wireless channels. In this paper, we propose a loss tolerant bandwidth aggregation (LTBA) approach, which proactively leverages the channel diversity in heterogeneous wireless networks for fighting against the burst loss. First, we allocate the FEC data packets according to the ‘loss-free’ bandwidth of each wireless network. Second, we deliberately insert intervals between the packets’ departure in each physical path to the multihomed client while respecting the delay constraint for FEC block. The detailed descriptions of the proposed LTBA will be presented in Sect. 4.

This research makes the following contributions:

-

1.

We propose a loss tolerant bandwidth aggregation (LTBA) approach, which proactively leverages the channel diversity in heterogeneous wireless networks to overcome the burst loss.

-

2.

We carry out analysis based on Gilbert loss model and continuous time Markov chain, proving that the proposed LTBA outperforms existing FEC packet scheduling approaches over heterogeneous wireless networks in minimizing end-to-end video distortion.

-

3.

We evaluate the performance of the proposed approach through emulations in Exata. The experimental results demonstrate that: (1) LTBA improves the average video PSNR by up to 0.78, 4.38, and 5.53 dB compared to the D-EMS [11], S-EMS [11], and EDPF [9], respectively. (2) LTBA guarantees 97 % of the video frames are delivered within the decoding deadline averagely while the percentage for D-EMS, S-EMS and EDPF is 95, 89 and 83 %, respectively. (3) LTBA is able to handle the highly lossy channel with a loss probability of 40 %.

The rest of this paper is organized as follows: In Sect. 2, we briefly introduce and discuss the related work. In Sect. 3, we show the system model and problem formulation. Sect. 4 describes the proposed solution in detail. In Sect. 5, we present performance evaluation based on Exata emulations. Conclusion remarks are given in Sect. 6. The basic notations used throughout this paper are listed in Table 1.

2 Related Work

In the literature, there are extensive researches on bandwidth aggregation in heterogeneous wireless networks and a general conclusion can be found in [13]. The authors conclude that aggregating effective offered bandwidth from individual wireless networks can significantly improve the performance in terms of throughput, load balancing and reduced latency. Furthermore, most of the existing bandwidth aggregating solutions consider a single metric to adapt to varying traffic and network conditions. However, the wireless channel is characterized by multiple attributes, e.g., delay, loss, bandwidth, etc. The existing studies can be divided into three branches: (1) rate allocation policies, e.g., [5, 14] and [15]; (2) packet scheduling approaches, e.g., [9] and [10]; (3) forward error correction schemes, e.g., [11, 12] and [16]. The proposed LTBA can be regarded as a solution that deals with rate allocation in concert with packet scheduling.

Zhu et al. [14] study the distributed rate allocation policies for multihomed video streaming over heterogeneous wireless networks and conclude that the media-aware rate allocation policies outperform the heuristic AIMD-based schemes in improving video quality. Song et al. [15] propose a probabilistic multipath transmission (PMT) scheme, which sends video traffic bursts over multiple available channels based on a probability generation function of the packet delay. PMT is not robust to the client mobility as it does not dynamically adjust the flow splitting probability according to the time-varying channel status. In [5], Jurca et al. proposed physical path selection and source rate allocation schemes to efficiently satisfy media-specific QoS for stored video streaming over multipath networks. The experimental results show that video streaming through only certain reliable physical paths gives better video quality than that through all possible physical paths.

In the EDPF [9] algorithm, it takes into account the available bandwidth, propagation delay and packet size for estimating the arrival time and aims to find an earliest path for delivering the video packet. However, EDPF is not robust to bandwidth fluctuations, which usually occur in the unreliable environments. The load balancing algorithm (LBA) [10] performs stream adaption in response to the varying channel status by only transmitting those packets which are estimated to arrive at the client within the decoding deadline and conserves bandwidth by dropping packets that cannot be decoded because they rely on previous packets that have been dropped. A packet prioritization scheme in LBA gives a higher weighting to I frames over B and P frames and also to base layer packets over enhancement layer packets. The LBA scheduler sorts packets according to priority weighting, and sacrifices lower priority packets to ensure the delivery of those with a higher priority.

Jurca et al. [16] research on the optimal FEC scheme and layer selection in multi-path scenario. This approach uses a multi-layer coded video stream, and the base-layer stream is protected by duplicated transmission using multiple physical paths during the handoff. The multi-path loss tolerant (MPLOT) [12] is a transport protocol which focuses on maximizing the application throughput, yet it does not address tight delay constraints. While MPLOT can greatly benefit applications with bulk data transfer, a live video streaming source cannot effectively exploit such throughput benefits because its streaming rate is typically fixed or bounded, e.g., by the encoding schemes.

In addition to above researches, Yousaf et al. [17] propose an end-to-end architecture that provides the vertical handover and bandwidth aggregation services to TCP applications. The proposed architecture focused on the throughput gain while the burst loss is not addressed.

To summarize, our work is distinct from previous studies in that we design a framework which jointly exploits the optimal FEC packets allocation and path interleaving (i.e., spread-out the packets’ departures) to overcome the burst loss. Furthermore, the existing works mainly focus on the throughput and delay performance while the goal of the proposed LTBA is to maximize the video quality.

3 System Model and Problem Formulation

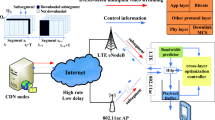

We consider a case in which a multi-homed client is receiving real-time video streaming from a remote video server as presented in Fig. 1. The client is equipped with multihoming capability and the video streaming can be concurrently transmitted via more than one wireless network. Without loss of generality, we assume that \(\mathcal {R}\) networks are involved and the \(r\)th wireless network is referred to as \(P_r, 1\le r\le \mathcal {R}\).

3.1 Network Model

Each wireless network represents a physical path from the video server to the multihomed client. We assume the paths to be independent of each other and each path is associated with three positive metrics:

-

The available bandwidth \(\mu _r\), expressed in the unit of Kbps [18] to keep in accordance with that of video streaming (encoding) rate.

-

The path propagation delay \(t_r\), which includes the propagation delay of the wired and wireless domain along the \(r\)th path.

-

The loss rate \(\pi _B^r\), assumed to be an i.i.d process, independent of the streaming rate.

The above metrics can be obtained by end-to-end measurement solutions, e.g., the pathLoad [19] and pathChirp [20] algorithm. In this paper, we apply the pathChirp algorithm for achieving the goal. Interested readers could refer to [20] for its detailed descriptions and source code. Besides, we model burst loss on each path by the continuous time Gilbert model [21]. It is a two-state stationary continuous time Markov chain. The state \(\mathcal {X}_{r}(t)\) at time \(t\) assumes one of two values (see Fig. 2): \(G\) (Good) or \(B\) (Bad). If a packet is sent at time \(t\) and \(\mathcal {X}_{r}(t)=G\) then the packet can be successful delivered; if \(\mathcal {X}_{r}(t)=B\) then the packet is lost. We denote by \(\pi ^{r}_G\) and \(\pi ^{r}_B\) the stationary probabilities that the path to \(P_{r}\) is good or bad. Let \(\xi ^{r}_B\) and \(\xi ^{r}_G\) represent the transition probability from \(G\) to \(B\) and \(B\) to \(G\), respectively. In this work, we adopt two system-dependent parameters to specify the continuous time Markov chain packet loss model: (1) the average loss rate \(\pi _B^r\), and (2) the average loss burst length \(1/\xi _B^r\). Then, we will have

3.2 Video Distortion Model

We introduce a generic video distortion model [22] in this subsection. The video distortion perceived by the end user can be represented as the sum of the source distortion (\(D_{src}\)) and the channel distortion (\(D_{chl}\)). The expected end-to-end video distortion comprises of the following two terms:

In other words, the streaming video quality depends on both the distortion due to a lossy encoding of the media information and the distortion due to losses experienced in the network. The source distortion is mostly driven by the source or streaming rate, and the media sequence content, whose characteristics influence the performance of the encoder (e.g., for the same bit rate, the more complex the sequence, the lower the quality). The source distortion decays with the increase in video encoding rate; the decay is quite steep for low bit rate values, but it becomes very slow at high bit rate. The channel distortion is dependent on the average loss probability, and the sequence characteristics. It is roughly proportional to the number of video frames that cannot be decoded correctly, and an increase in loss probability augments the channel distortion. Hence, we can explicitly formulate the end-to-end distortion (expressed in mean square error, MSE) as:

where \(\omega , R_0\) and \(\beta \) are parameters which depend on the video sequence. \(\pi _{agg}\) denotes the aggregate loss rate of all the wireless networks to the multihomed client.

3.3 Forward Error Correction

The systematic Reed–Solomon (RS) erasure code has been widely used as FEC code to protect data packets against losses in packet erasure networks. In RS\((n,k)\) code, for every \(k\) source packets, \(n-k\) parity packets are introduced to make up a codeword of packets. As long as a client receives at least \(k\) out of the \(n\) packets, it can recover all the source packets. If the received packet number is less than \(k\), the received source packets can still be used, because they have been kept intact by the systematic RS encoding process.

Let \(c\) denote a \(n-\)tuple that represents a particular failure configuration. If the \(i\)th FEC packet in a block is lost on path \(P_{r}\), then \(c_{i}^r=B,\,1\le i\le n,\,1\le r\le \mathcal {R}\) and vice versa. By considering all the possible configurations \(c\) we can compute the aggregate loss rate \(\pi _{agg}\) as

where \(0\le \mathcal {L}(c)\le k\) denotes the number of lost data packets after the FEC recovery process for a given \(c\). For the FEC(\(n,k\)) encoding, we can have

Let \(\mathbb {P}(c_{i}^{r})\) denote the probability of a failure on path \(P_{r}\) in the transmission of the \(i\)th packet. The derivation of \(\mathbb {P}(c_{i}^{r})\) for the continuous Gilbert loss model is straightforward. We denote by \(p_{i,j}^r(\theta )\) the probability of transition from state \(i\) to \(j\) on \(P_{r}\) in time \(\theta \)

For the classic Markov chain analysis, we can have:

in which \(\gamma =\exp \left[ -(\xi ^{r}_B+\xi ^{r}_G)\cdot \theta \right] \). Now, we can compute \(\mathbb {P}(c_{i}^{r})\), e.g., for \(n=3,\, c_3^r=B|c_2^r=B|c_1^r=G\) we have

in which \(\theta _{i}^r=T_{i+1}^r-T_{i}^r\) is the time interval between the \(i\)th and \((i+1)\)th transmission packet on path \(P_{r}\). In general, \(\mathbb {P}(c^r)\) can be computed as

Finally, after a sequence of algebraic manipulations, we have

3.4 Problem Formulation

As the source distortion is determined by the video sequence and encoding parameter, the goal of the proposed LTBA is to find the optimal scheduling for minimizing channel distortion. The optimization problem can be stated as follows: given the physical path properties and for wireless networks to the multihomed client, the FEC parameters and maximal FEC block transmission delay, find the scheduling that minimizes the channel distortion

in which \(\pi _{agg}\) can be obtained with Eq. (10). The optimization problem satisfies to the following conditions: (1) all the FEC data packets should arrive at the client before the delay constraint (\(d_{\text {FEC}}\)), and (2) the allocated FEC data packets should not exceed the estimated capacity of each wireless network. We approach this problem in two steps: (1) we find the optimal solution for FEC packets allocation with regard to the channel status diversity (available bandwidth, propagation delay and loss rate) in heterogeneous wireless networks, and (2) we insert intervals between packets’ departure in each physical path while respecting the delay constraint.

4 Proposed Solution

In this section, we firstly describe the proposed solution in detail. Then, we prove that the proposed LTBA outperforms existing approaches in minimizing end-to-end video distortion.

4.1 Loss Tolerant Bandwidth Aggregation

First, we calculate the transmission delay of a FEC packet through the \(r\)th physical path by

in which \(\varDelta \) denotes the FEC packet payload size and \(\mu _r(1-\pi _r)\) is the ‘loss-free’ bandwidth of the \(i\)th wireless network. Then, the maximum number of packets that can be transmitted through the \(i\)th within the deadline \(d_{\text {FEC}}\) can be obtained with

where \(\lfloor x \rfloor \) denotes the largest integer less than \(x\). The number of FEC packets allocated for each path can be computed as

The above steps are intended to determine the allocation of FEC packets for each wireless network. Then, in order to take full advantage of channel resources, we design to spread the departure of FEC data packets while respecting the delay constraint. Let \(\psi _{ir}\) denote the departure time for the \(i\)th FEC packet dispatched onto \(P_r\). Finally, the departure time for each FEC packet can be obtained with

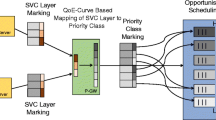

Assume that there exists \(\mathcal {R}=2\) available wireless networks and the FEC(\(5,3\)) encoding is applied, the scheduling process of the proposed loss tolerant bandwidth aggregation is presented in Fig. 3.

4.2 Comparison with ‘Back-to-Back’ Transmission Schemes

The ‘back-to-back’ transmission schemes represent the approaches used in [9, 11, 12]. As depicted in Fig. 4, in the ‘back-to-back’ transmission, the FEC data packets depart from the sender side without interval or the intervals can be neglected. According to Eq. (10), the expected aggregate loss rate can be computed as the initial state of the departure time of the transmission, i.e.,

In Eq. (10), for the departure interval \(\theta ^r>0\), the transition probabilities satisfy to \(p_{i,j}^r(\theta ^r)<1\). Then, we can have \(\prod _{i=1}^{n-1}\left( p^{r}_{c_{i}^{r},c_{i+1}^{r}}\left( \theta _{i}^r\right) \right) <1\). Now, we can compare the aggregate loss rate of the proposed LTBA and the ‘back-to-back’ transmission schemes by

According to Eq. (3), under the same source distortion, the end-to-end video distortion is determined by the aggregate loss rate. Then, we can arrive at the conclusion LTBA outperforms the ‘back-to-back’ transmission schemes in minimizing the end-to-end distortion

5 Performance Evaluation

We evaluate the performance of the proposed LTBA by comparing it with existing bandwidth aggregation solutions in heterogeneous wireless networks. First, we introduce the emulation methodology that includes the emulation setup, reference schemes, performance metrics and emulation scenario. Then, we present and discuss the emulation results in detail.

5.1 Emulation Methodology

5.1.1 Emulation Setup

-

Network emulator. We evaluate different transmission schemes using Exata 2.1 [23] emulations. Exata is an advanced edition of QualNet [24] in which we can perform semi-physical emulations. The system architecture for performance evaluation is presented is Fig. 5. In the emulation topology, the video server has one wired network interface and the mobile client has WLAN and HSDPA network interfaces. We can construct an end-to-end connection to a specific wireless network interface by binding a pair of IP addresses from the server and the client, e.g., \(P_{1}\) is constructed by \(\{IP_{S},IP_{D}^{1}\}\).

-

Video codec. We adopt the H.264/SVC reference software JSVMFootnote 1 9.18 [26] as the video codec. In order to implement the real video streaming based emulations, we integrate the source code of JSVM [as Object File Library (.LIB)] with Exata and develop an application layer protocol of “Video Transmission”. The interested readers can refer to Exata Programmers’ Guide [23] for the detailed descriptions of the development steps. The tested video sequences are City, Crew, Harbor and Soccer downloaded from [27]. Each video sequence is concatenated to itself to be 3,000 frame-long so as to obtain statistically meaningful results. The video streaming is encoded at 30 fps (frames/s) and a GoP consists of 8 frames. The video encoding rate is 1,000 Kbps and the decoding deadline for each video frame is set to be 200 ms.Footnote 2

5.1.2 Reference Schemes

We compare the performance of the proposed LTBA with the following two schemes:

-

EDPF [9]. We apply the Earliest Delivery Path First algorithm in the video server, taking into account the available bandwidth, link delay and FEC packet size. Then, the arrival time is estimated based on the above metrics. A FEC data packet is always dispatched onto the wireless network with the minimal estimated end-to-end delay.

-

EMS [11]. The Encoded Multipath Streaming splits the load based on the loss rate of each path and this approach includes two main components: (1) online load splitting and (2) FEC redundancy adaption. In order to fully compare its performance with the proposed LTBA, we disable its FEC redundancy adaption function and this scheme is named Static EMS (S-EMS). The EMS with dynamic FEC redundancy adaption is dubbed D-EMS. When the FEC redundancy gets large, the optimal load splitting becomes inversely proportional to the channel loss rate.

5.1.3 Performance Metrics

We adopt the following performance metrics to evaluate the proposed scheme against the two competing schemes:

-

PSNR. PSNR [29] (Peak Signal-to-Noise Ratio) is a standard metric of video quality and is a function of the mean square error between the original and the received video frames. If a video frame is dropped or past the deadline, it is considered lost and is concealed by copying from the last received frame before it.

-

Ratio of video frames past decoding deadline. PSNR measures video quality based on the received video frames regardless of the deadline of video frames. However, the video frames past the deadline can not be used to display real-time streaming media. We measure the ratio of packets which miss the decoding deadline to reflect the real-time video streaming quality. Note that this ratio includes the lost video frames due to channel loss.

-

Aggregate loss rate. As described in Sect. 3.3, the aggregate loss rate is the ratio of lost packets to total number of packets for a specific transmission scheme. This metric directly impacts on the channel distortion.

5.1.4 Emulation Scenario

We conduct emulations under client mobility. In the emulation scenario, the client is set to move at walking speed along a predefined mobile trajectory. To emulate the burst channel loss behavior, both the WLAN and HSDPA wireless links are set to have a random fault. The average burst loss lengths of both WLAN and HSDPA wireless links are set to be 25 ms. The path propagation delays of WLAN and HSDPA are set to be 40 and 70 ms, respectively. In all the emulations, the FEC group size is set to be 32 and the redundancy set contains the four elements: (22, 10), (24, 8), (26, 6), and (28, 4).

For the confidence results, we repeat each set of emulations more than 5 times and present the averaged results with a 95 % confidence interval, except for the microscopic results, where we present the results of a single run with finer granularity. In the results of time series analysis, as the raw data is often very noisy, we also show the smoothed data to make the plots readable and useful.

5.2 Emulation Results

First, we depict the aggregate throughput and the available bandwidth of each wireless network to the mobile client in Fig. 6a, b. It can be inferred from Fig. 6a, b that HSDPA supports a relatively steady link while the WLAN experiences severe bandwidth fluctuations. Figure 6c, d plot the loss rate of the HSDPA and WLAN wireless links estimated by the implemented physical path monitoring process.

5.2.1 PSNR

We plot the average PSNR values for all the compared approaches under different FEC settings in Fig. 7. Besides, the PSNR values of encoded video without loss impairment (named “Encoded video”) are also depicted to show the best achievable quality. The average PSNR values are obtained from all the video test sequences. Figure 7 indicates that LTBA achieves the highest mean PSNR values and outperforms other reference schemes under the FEC redundancy settings of \((24,8)\) and \((26,6)\). In the redundancy of \((22,10)\) and \((28,4)\), D-EMS achieves higher PSNR values than LTBA because the redundancy settings are either too low or too high to overcome the channel losses. The excessively redundant packets will only lead to larger end-to-end delay of video streaming [11]. The results demonstrate that the amount of FEC redundancy which is ‘just enough’ for overcoming the channel loss is able to achieve higher video quality. In order to have a microscopic view of the results, we also depict the average PSNR values and standard deviation for all the tested video sequences under the FEC redundancy setting of \((26,6)\) in Table 2. LTBA achieves higher PSNR values while still maintaining lower deviations than the competing approaches. Figure 8 shows the PSNR values for each video frame during the interval of \([80,100]\) seconds. It can be observed from Fig. 8 that D-EMS, S-EMS and EDPF obtain lower PSNR values and higher variations than LTBA.

5.2.2 Ratio of Video Frames Past Decoding Deadline

First, we depict the average end-to-end video frame delay in Fig. 9. We can see from Fig. 9 that EDPF achieves lower average delay than LTBA, D-EMS and S-EMS because it always dispatches the data packets onto the path with minimal estimated end-to-end delay. However, as the loss rate is not taken into account in EDPF, the allocated FEC data packets may encounter severe channel losses during the transmission. Figure 10 shows the percentage of received video frames that miss their deadlines. Although EDPF gains better delay performance, it is not able to effectively deal with the loss rate and thereby results in more FEC data packets encounter channel loss. In general, LTBA guarantees more video frames delivery within their deadlines than the reference schemes because it can allocates the packets with regard to the delay constraint while still taking into account the loss property of each wireless channel. The results indicate the ‘loss-free’ bandwidth is a more reasonable metric in the FEC packets allocation than just only the loss, delay or available bandwidth.

5.2.3 Aggregate Loss Rate

Figure 11 shows the noisy and smoothed data of mean aggregate loss rate for all the compared schemes. Figure 11b illustrates that LTBA significantly outperforms D-EMS, S-EMS and EDPF in reducing the aggregate loss rate. This verifies our theoretical inductions in Sect. 4.2. The improvements of LTBA can be attributed for : (1) LTBA assigns less transmission rates to the wireless networks with higher loss rates, and (2) LTBA spreads out the packets’ departure so that the risk of consecutive packet loss can be minimized. EMS achieves better performance than EDPF as it dynamically splits the traffic load based on the loss rate of each wireless network, allocating less FEC data packets to the loss-prone channel. Note that during the intervals of \([55,70]\) and \([75,85]\) s the proposed LTBA achieves significantly lower aggregate loss rate than the reference schemes. This indicates that LTBA exhibits superiority in handling highly lossy channels effectively.

6 Conclusion and Discussion

In this paper, we have presented a novel Loss Tolerant Bandwidth Aggregation (LTBA) approach. Instead of reactively increasing the FEC redundancy, the proposed LTBA leverages the channel diversity to overcome the burst loss in heterogeneous wireless networks. In the future, we shall consider: (1) introduce dynamic FEC redundancy adaption into LTBA based on the loss rate of wireless networks, and (2) employ the video source rate adaption to handle the case in which the aggregate bandwidth of all wireless networks is insufficient compared to the video streaming rate.

Notes

We choose the JSVM in convenience for the source code integration as both Exata and JSVM are developed using C++. However, the H.264/AVC JM [25] software is developed using C language.

References

Oliveira, T., Mahadevan, S., & Agrawal, D. P. (2011). Handling network uncertainty in heterogeneous wireless networks. In Proceedings of IEEE INFOCOM.

YouTube, http://www.youtube.com/.

Hulu, http://www.hulu.com/.

Cisco company (2013). Cisco visual networking index: Global mobile data traffic forecast update, 2012–2017. May 2013 (online). Available: http://www.cisco.com/en/US/solutions/collateral/ns341/ns525/ns537/ns705/ns827/white_paper_c11-520862.html.

Jurca, D., & Frossard, P. (2009). Media flow rate allocation in multipath networks. IEEE Transactions on Multimedia, 9(7), 1227–1240.

Han, S., Joo, H., Lee, D., & Song, H. (2011). An end-to-end virtual path construction system for stable live video streaming over heterogeneous wireless networks. IEEE Journal on Selected Areas Communications, 29(5), 1032–1041.

Mushroom Networks Inc. (2013). Wireless broadband bonding network appliance (online). Available: http://www.mushroomnetworks.com.

Ernst, T., Montavont, N., Wakikawa, R., & Kuladinithi, K., (2008). Motivations and scenarios for using multiple interfaces and global addresses. Internet-Draft, IETF MONAMI6 Working Group.

Chebrolu, K., & Rao, R. (2006). Bandwidth aggregation for real-time applications in heterogeneous wireless networks. IEEE Transactions on Mobile Computing, 5(4), 388–403.

Jurca, D., & Frossard, P. (2007). Video packet selection and scheduling in multipath networks. IEEE Transactions on Multimedia, 9(3), 629–641.

Chow, A., Yang, H., Xia, C., Kim, M., Liu, Z., & Lei, H. (2010). EMS: Encoded multipath streaming for real-time live streaming applications. In Proceedings of IEEE ICNP.

Sharma, V., Kalyanaraman, S., Kar, K., Ramakrishnan, K., & Subramanian, V. (2009). MPLOT: A transport protocol exploiting multipath diversity. In Proceedings of IEEE INFOCOM.

Ramaboli, A., Falowo, O., & Chan, A. (2012). Bandwidth aggregation in heterogeneous wireless networks: A survey of current approaches and issues. Journal of Networks and Computer Applications, 35(6), 1674–1690.

Zhu, X., & Boronat, F. (2009). Distributed rate allocation policies for multihomed video streaming over heterogeneous access networks. IEEE Transactions on Multimedia, 56(12), 2912–2933.

Song, W., & Zhuang, W. (2012). Performance analysis of probabilistic multipath transmission of video streaming traffic over multi-radio wireless devices. IEEE Transactions on Wireless Communications, 11(4), 1554–1564.

Jurca, D., Frossard, P., & Jovanovic, A. (2009). Forward error correction for multipath media streaming. IEEE Transactions on Circuits and Systems for Video Technology, 19(9), 1315–1326.

Yousaf, M., Qayyum, A., & Malik, S. A. (2012). An architecture for exploiting multihoming in mobile devices for vertical handovers & bandwidth aggregation. Wireless Personal Communications, 66(1), 57–79.

Quantities and units—part 13: Information science and technology. ISO/TC12 WG12, IEC/TC25, Geneva, Switzerland, ISO/IEC 80000–13: 2008, 2008 (online). Available: http://www.iso.org/iso/catalogue_detail?csnumber=31898.

Jain, M., & Dovrolis, C. (2002). Pathload: A measurement tool for end-to-end available bandwidth. In Proceedings of passive and active measurement workshop.

Ribeiro, V., Riedi, R., Baraniuk, R., Navratil, J., & Cottrell, L. (2003). pathChirp: Efficient available bandwidth estimation for network paths. In Proceedings of passive and active measurement workshop.

Konrad, A., Zhao, B., Joseph, A., & Ludwig, R. (2003). A Markov-based channel model algorithm for wireless networks. Wireless Networks, 9(3), 189–199.

Stuhlmüller, K., Färber, N., Link, M., & Girod, B. (2000). Analysis of video transmission over lossy channels. IEEE Journal on Selected Areas in Communications, 18(6), 1012–1032.

Exata (2013). http://www.scalable-networks.com/exata.

QualNet (2013). http://www.scalable-networks.com/qualnet.

H.264/AVC Reference Software, JM 18.2 (2012). (online). Available: http://iphome.hhi.de/suehring/tml/.

H.264/SVC reference software, JSVM 9.1.3 (2012). (online). Available: http://ip.hhi.de/imagecom-G1/savce/downloads/SVC-Reference-Software.htm.

Video Test Media (2013). (Online). Available: http://media.xiph.org/video/derf/.

Tu, W., & Jia, W. (2005). APB: An adaptive playback buffer scheme for wireless streaming media. IEICE Transactions on Communications, E88.B(10), 4030–4039.

ANSI T1.TR.74-2001 (2001). Objective video quality measurement using a peak-signal-to-noise-ratio (PSNR) full reference technique. Draft technical report, American National Standards Institute, Ad Hoc Group on Video Quality Metrics, Washington, DC, USA, Tech. Rep. T1.TR.74-2001, 2001 (online). Available: http://webstore.ansi.org/RecordDetail.aspx?sku=T1.TR.74-2001.

Acknowledgments

This research is supported by the National Grand Fundamental Research 973 Program of China under Grant Nos. 2011CB302506, 2013CB329102, 2012CB315802; National Key Technology Research and Development Program of China “Research on the mobile community cultural service aggregation supporting technology” (Grant No. 2012BAH94F02); Novel Mobile Service Control Network Architecture and Key Technologies (2010ZX03004-001-01); National High-tech R&D Program of China (863 Program) under Grant No. 2013AA102301; National Natural Science Foundation of China under Grant Nos. 61003067, 61171102, 61001118, 61132001; Program for New Century Excellent Talents in University (Grant No. NCET-11-0592); Project of New Generation Broadband Wireless Network under Grant No. 2011ZX03002-002-01; Beijing Nova Program under Grant No. 2008B50. The authors would like to express their sincere gratitude for the anonymous reviewers who provide the suggestions to improve the paper quality.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Wu, J., Shang, Y., Cheng, B. et al. Loss Tolerant Bandwidth Aggregation for Multihomed Video Streaming over Heterogeneous Wireless Networks. Wireless Pers Commun 75, 1265–1282 (2014). https://doi.org/10.1007/s11277-013-1422-3

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11277-013-1422-3