Abstract

This paper focus on the drought monitoring and forecasting for semi-arid region based on the various machine learning models and SPI index. Drought phenomena are crucial role in the agriculture and drinking purposes in the area. In this study, Standardized Precipitation Index (SPI) was used to predicted the future drought in the upper Godavari River basin, India. We have selected the ten input combinations of ML model were used to prediction of drought for three SPI timescales (i.e., SPI -3, SPI-6, and SPI-12). The historical data of SPI from 2000 to 2019 was used for creation of ML models SPI prediction, these datasets was divided into training (75% of the data) and testing (25% of the data) models. The best subset regression method and sensitivity analysis were applied to estimate the most effective input variables for estimation of SPI 3, 6, and 12. The improved support vector machine model using sequential minimal optimization (SVM-SMO) with various kernel functions i.e., SMO-SVM poly kernel, SMO-SVM Normalized poly kernel, SMO-SVM PUK (Pearson Universal Kernel) and SMO-SVM RBF (radial basis function) kernel was developed to forecasting of the SPI-3,6 and 12 months. The ML models accuracy were compared with various statistical indicators i.e., root mean square error (RMSE), mean absolute error (MAE), relative absolute error (RAE), root relative squared error (RRSE), and correlation coefficient (r). The results of study area have been showed that the SMO-SVM poly kernel model precisely predicted the SPI-3 (R2 = 0.819) and SPI-12 (R2 = 0.968) values at Paithan station; the SPI-3 (R2 = 0.736) and SPI-6 (R2 = 0.841) values at Silload station, respectively. The SMO-SVM PUK kernel is found that the best ML model for the prediction of SPI-6 (R2 = 0.846) at Paithan station and SPI-12 (R2 = 0.975) at the Silload station. The compared with SVM-SMO poly kernel and SVM-SMO PUK kernel was observed, these models are best forecasting of drought (i.e. SPI-6 and SPI-12), while SVM-SMO poly kernel is good for SPI-3 prediction at both stations. The results have been showed the ability of the SVM-SMO algorithm with various kernel functions successfully applied for the forecasting of multiscale SPI under the climate changes. It can be helpful for decision making in water resource management and tackle droughts in the semi-arid region of central India.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Deficiency of water is larges problems in worldwide expensive pressures to ecologies, an especially in various areas with different climatic types (Mokhtar et al. 2021; Yaseen et al. 2021; Kushwaha et al. 2022a). Dry is a spatiotemporal occurrence that develops when rainfall levels fall beyond normal for a prolonged period of time, presenting an ecology risks (Ihinegbu and Ogunwumi 2021; Kushwaha et al. 2022c). It crucially affects the different sectors related ti ecological components as a water resources availability, vegetation, agricultural, industrial production, wildlife, river basin systems, and socio-economic structures (Ahmed et al. 2019; Abdo 2020; Hateffard et al. 2021). However, global literatures on drought assessment indicates that extreme drought episodes are predicted to an increase problems about the climate change (Deo et al. 2018; Malik et al. 2021). Therefore, the process of predicting and modelling characteristics of drought, including duration, intensity, and frequency, which is a vital and urgent mechanism in the context of adequate water resources management to agricultural, industrial and service development.

Since the last five decades, current area are always facing the biggest problem of metrological and agriculture drought in the semi-arid region. Overall climate change factors impact on the entire global climate, and main important factors affected on the increasing temperature and decreasing agriculture crop (World Bank 2003). During the year 2013, severe drought is occurred due to low rainfall measured during monsoon season in Maharashtra, India. It was worst in the last 40 years. Almost 60% of agriculture depend on the rain water, and no any other source of water for crops during a drought situation in India. Indeed, "Indians know that Monsoon is India's real finance minister," the ecological activist Sunita Narian has been claimed. India faces its worst-ever water crisis in a drought period (Udmale et al. 2014). NITI Aayog, the leading policy investigation centre, says that demand for water in the country is projected to double its supply by 2030. Millions of farmers are now struggling to live with drought and crop failure in different parts of the country. Drought has been declared to be affected by more than 80% of districts in the Karnataka state and 70% in Maharashtra (Udmale et al. 2014; Sreekesh et al. 2019).

Researchers and scientist have developed and available many mathematical indicators in worldwide, which is measuring and an evaluating drought incidents, and crop moisture index (CMI) (Palmer 1968); Palmer Drought Severity Index (PDSI) (Palmer 1965); and Standardized Precipitation Index (SPI) (McKee et al. 1995; Shukla and Wood 2008). SPI is one of the most reliable indicators are used to globally assessment of meteorological drought, since its suitability and capabilities to assess on multiple temporal scales (1-, 3-, 6-, 9-, and 12-month timescales) with relevant constructive interpretations (Dutta et al. 2013; Bonaccorso et al. 2015; Ionita et al. 2016; Komasi et al. 2018). However, SPI is a probabilistic index that used rainfall variability as a crucial indicator influencing drought severity and duration, ranges between -3 (severe drought) to + 3 (wet conditions) (Dutta et al. 2013; Barker et al. 2016; Saada and Abu-Romman 2017; Ali et al. 2019b).

SPI is a non-linear method based on the drought prediction by conventional statistical methods with great uncertainty. Recently, the implementation of machine learning (ML) algorithms was used to SPI estimation and based on the support vector regression (SVR) (Belayneh et al. 2014; Borji et al. 2016), an extreme learning machine (ELM) (Park et al. 2016; Ali et al. 2019a; Liu et al. 2021), linear genetic programming (LGP) (Mehr et al. 2014), adaptive regression spline (MARS) (Deo et al. 2017), an extremely randomized tree (ERT) (Rhee and Im 2017), adaptive neuro-fuzzy inference system (ANFIS) (Nguyen et al. 2015; Gocić et al. 2015), artificial neural network (ANN) (Deo and Şahin 2015; Banadkooki et al. 2021), M5 Tree (M5T) (Yaseen et al. 2021; Elbeltagi et al. 2022b), and random forest (RF) (Masroor et al. 2021; Elbeltagi et al. 2022b) have presented huge potentials with promising results for an estimating and modeling drought compared with conventional methods.

Super vector machine (SVM) is a novel machine-learning algorithm has been reported as a reliable method dealing with complex sorting issues (Suess et al. 2015; Borji et al. 2016; Kushwaha et al. 2021). Moreover, the implementation of SVM has essential features such as advanced validation, geometric explanation, and precise statistical tracking with a low number of a training data sets (Zhang and Wu 2012; Achirul Nanda et al. 2018). In the SVM models, the kernel functions are presented a worthy tool because it aids in determining the dataset to a more an excellent proportional environment to acquire a reliable an explanation at classification method (Achirul Nanda et al. 2018; Sihag et al. 201.8). prediction of drought is must for the understanding of the intensity of drought in future, which plans best for controlling and decreasing less impact by drought condition and climate changes in future. In this context, developed some models for forecasting of drought in the semi-arid region (Sakaa et al. 2022). These machine learning models can be also good predicated the information by using the correct information of input variables (Buttafuoco et al. 2015). In India every year, any part of country is suffering drought but no one given the future predication of drought and intensity, this is a so long research gap in the drought research and also forecasting models (Pande et al. 2022). Machine learning models can be given development of various models for forecasting of drought in the semi- arid region. Various models present but researchers are not applied for the development of drought forecasting in the India study area (Pande et al. 2022). Some of researchers is not believed on machine learning models but in future such kind of study can be very helpful for quick development of machine learning model for forecasting of values (Tan et al. 2019). Now in this adopted this model have been given more accurate results depend on the 30 year SPI data (Rhee and Im 2017). Compared with previous studies and current study directly shown a very big research gap related to forecasting of drought and machine learning models in the semi-arid condition. This current research results are a cover an entire research gap filled in the view of drought forecasting and development of ML model to prediction of metrological and agriculture drought. Despite the wide variety of drought prediction algorithms, the generalization of a model suitable for all global and regional climatic characteristics is still a necessary procedure. Importantly, studies related to testing the potential of Kernal functions in modeling and predicting drought are still limited. Novelty of this research, we have developed the forecast model for semi-arid condition based on the 30-year rainfall data and processing entire datasets and development model in the python programming. We have found the best models for forecasting of drought particularly in the dry land area. Such type of research works first time introduced and suggested to policy makers, future plans and governments. However, projected results can be used to preparation of drought policy and agriculture purposes in the semi-arid region. This is main novelty and objective has been achieved for sustainable development planning and management. The present study focuses to improve the performance of SVM algorithm using sequential minimal optimization (SMO) under various kernel functions i.e., SMO-SVM poly kernel, SMO-SVM Normalized poly kernel, SMO-SVM PUK (Pearson Universal Kernel) and SMO-SVM RBF (radial basis function) kernel for prediction multiscale droughts at the upper Godavari River basin in central India, affected by several droughts.

2 Material and Methods

2.1 Study area

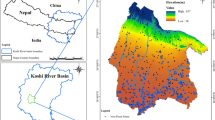

The upper Godavari River basin is located in the central India of Maharashtra state. It is located between 19°00′00′′ and 20°30′00′′ N latitudes and 73°20′00′′ and 75°40′00′′ E longitudes (Fig. 1). This basin area is in the semi-arid zone with basaltic rock. The average rainfall is 700-850 mm with minimum and maximum temperature observed is 25° to 40 °C. The agricultural land is most dependent on rainwater and groundwater. The weather stations information is presented in Table 1. It comes from Trimbakeshwar, which is located at a height of 1067 m in Maharashtra's of Nashik district. It mostly passes through to the eight states of Maharashtra, Telangana, Andhra Pradesh, Karnataka, Madhya Pradesh, Chhattisgarh, Odisha, and Puducherry as it flows into the Bay of Bengal. The Upper Godavari river basin, which is wholly inside the state of Maharashtra, is also a part of the study region. The basin is usually dry in other seasons because the monsoon season is when it rains the most. The basin of water resources is primarily used to agriculture purposes. In this study area has been found basaltic rock overall basin. This river basin area is most important for agriculture crops such as onion, sugarcane, vegetables, wheat and horticulture production, however most of the crops are cash crops in surrounding basin area.

2.2 Methodology

The present study was evaluated the best subset regression model and sensitivity analysis, which is selected best combinations of variables as an input to build of machine learning models. Four machine-learning algorithms were considered to develop in MATLAB software varying in various kernel functions: poly kernel, Normalized poly kernel, PUK and RBF. The adopted methodology is presented in Fig. 2.

2.2.1 Support Vector Machine Algorithm

Support vector machine (SVM) is a classification and regression supervised learning technique. SVM is determined the extrication hyperplane that separations vector space in vectors sub-set assign to a collection of p-dimensional vectors space; every divided sub-set (data set) is allocated to one class. The SVM algorithm was introduced and created by Vladimir Vapnik and Alexey Chervonenkis in 1963 (Cortes and Vapnik 1995). SVM has been proposed through kernel technique on largest boundary hyperplanes to create non-linear classifiers (Boser et al. 1992; Cortes and Vapnik 1995). Linear SVP is given in Eq. (1)

where \({y}_{1},{y}_{1}\) are also 1 and -1, for every showing the type, which the point \({x}_{1},{x}_{1}\) be appropriate. Every \({x}_{1},{x}_{1}\) is a \(\rho \rho\)-dimensional actual vector. We wish to discover the highest hyperplane that splits the class of points \({x}_{1},{x}_{1}\) for where \({y}_{1},{y}_{1}=1\) from the class of sites for which \({y}_{1}=-1\), which is specified so that the distance between the hyperplane and the nearest point \({x}_{1},{x}_{1}\) by either category is greatest.

Any hyperplane is written as the set of points xx filling with Eq. (2)

where is the normal vector to the support vectors (not necessarily normalized), this is similar to Hesse normal form, only it isn't always a unit vector.

During the training of support-vector machines, the quadratic programming (QP) problem can be solved using the sequential minimum optimization (SMO) approach (SVM). Support vector machines (SVMs) are frequently trained using SMO, which is implemented by the well-liked LIBSVM tool. The SMO algorithm was announced in 1998, and the SVM community was quite happy about it because earlier methods for SVM training were far more difficult and expensive third-party QP solvers were necessary. The aforementioned optimization problem is addressed by the iterative SMO algorithm. SMO breaks down this problem into a number of its tiny sub-problems, and then analytically resolves each one. Due of the linear equality requirement involving them, the lowest problem involves two Lagrange multipliers.

2.2.2 Poly kernel

The kernel functions are used to integrate the original pure (linear/non-linear) dataset into a higher dimensional space is required to convert it to a linear dataset.

A polynomial kernel is given in below

wherever x and y are input vector space, i.e. feature vectors derived using training or testing samples, c ≥ 0 can factor interchange off an effect of greatest-order against lower-order define in the polynomial. When c = 0, the kernel is called as homogeneous (Shashua 2009).

An additional general poly kernel xTyxTy by a user-particular scalar factor

An example of the nature φ can be observed. If we set Let d = 2, we become the quadratic kernel's different case. After reforming and multinomial theorem (double-the outermost use is the binomial theorem).

From this, it monitors that the feature is provided by:

2.2.3 Normalize Poly Kernel

Normalize a poly kernel by dividing every element by the sum over all kernel elements, resulting in a normalized kernel with a sum including all elements equal to unity. This assures that the average pixel in the changed image is the same brightness as the original data.

Normalize poly kernel is expressed as

2.2.4 PUK (Pearson Universal Kernel)

The Pearson VII function for the fitted graph (Sihag et al. 2018) is given by;

where H is peak height at its center, x0x0, and x is the single variable. The half-width and investigation parameters of the peak are controlled by the parameters x and ω. A function, on the other hand, is considered legitimate if the kernel matrix relating to it is homogeneous and positive semi-fixed. Üstün et al. (2006) rewrote the preceding equation into a function of two vectors to show that the PUFK does indeed satisfy these constraints (Zhang and Ge 2013; Sihag et al. 2018).

where \({x}_{i},{x}_{i}\) and \({x}_{j}{x}_{j}\) are both vector arguments. The peak off-set define \({x}_{0}{x}_{0}\) in Eq. (8) Without losing generality, the maximum height H is eliminated and simply replaced by 1. For every random pair of numbers, the Pearson VII function kernel will result in a matrix with ones on the diagonal as well as all values range of 0 and 1 (\({x}_{i},{x}_{i}\) and \({x}_{j}{x}_{j}\)). It is a strong equivalent or even more mapping power than conventional kernel functions, resulting in SVM performance that is equal or better than standard kernel functions.

2.2.5 RBF (Radial Basis Function) Kernel

The kernel function is used in several machine learning algorithms. The concepts underpinning kernel functions are quite complex, thus understanding them is essential. Various kernel functions are regularly utilized. The radial basis function (RBF) kernel is most prevalent (at least for this study). The RBF is expressed as an equation:

Here, K view points for RBF kernel. The x and x’ are vectors where x’ is a “point of reference” vector and the || term is Euclidean distance. The RBF kernel function returns a number that is always between 0.0 and 1.0. RBF gives 1.0 if x and x' are equal. The lesser the value of RBF becomes as x and x' become more apart, approaching, but not quite reaching, 0. As a result, the RBF kernel is a similarity measure.

The Partial Auto Correlation Function (PACF), which also regresses the values of the time series at all shorter lags, provides the partial correlation between a stationary time series and its own lagged values, which can be used to choose the best input variables for the developed machine learning models. The autocorrelation function, in contrast, does not take into account extra lags. The examination of data to determine the amount of lag in an autoregressive (AR) model depends on this function. This function was initially used to find the required lags by graphing the partial auto correlative functions in the Box-Jenkins technique for modeling time series.

2.3 Performance Indicators

The actual data of SPI and modeled values were compared through 30 year datasets. To evaluate the accuracy of developed hybrid support vector machine models, the following statistical indicators have been used, i.e., Root Mean Square Error (RMSE), Coefficient of Determination (R2), and Mean Absolute Error (MAE) (Elbeltagi et al. 2022a, c; Kushwaha et al. 2022b). This indicators are well-defined as:

-

1.

Root Mean square error (RMSE)

$$\mathrm{RMSE }=\sqrt{\frac{1}{\mathrm{N}}{\sum }_{i=1}^{N}{{(SPI}_{A}^{i}-{SPI}_{P}^{i})}^{2}}$$(11) -

2.

Coefficient of determination (R.2)

$${\mathrm{R}}^{2}= {\left[\frac{{\sum }_{i=1}^{N}{(SPI}_{A}^{i}-{\overline{SPI} }_{A}){(SPI}_{P}^{i}-{\overline{SPI} }_{P})}{\sqrt{{\sum }_{i=1}^{N}{{(SPI}_{A}^{i}-{\overline{SPI} }_{A})}^{2}}\sqrt{{\sum }_{i=1}^{N}{{(SPI}_{P}^{i}-{\overline{SPI} }_{P})}^{2}}}\right]}^{2}$$(12) -

3.

Mean absolute error (MAE)

$$\mathrm{MAE}=\frac{1}{\mathrm{N}}{\sum }_{i=1}^{N}{|SPI}_{P}^{i}-{SPI}_{A}^{i}|$$(13)

In which, \({SPI}_{A}^{i}\) is an observed or actual value, \({SPI}_{P}^{i}\) is simulated or forecasted value,\(\overline{{SPI }_{A}}\) and \(\overline{{SPI }_{P}}\) are the mean values of observedand forecasted samples, and N is the total number of data points.

3 Results and Discussion

3.1 Best Subset Regression and Sensitivity Analysis

3.1.1 Input Selection Using Best Subset Model for the SPI 3, 6, and 12 Months of Selected Stations

The finest input grouping was certain by six statistics analysis norms (MSE, determination coefficients (R2), Adjusted R2, Mallows' Cp, Akaike's AIC and Amemiya's PC) at two stations and outputs are shown in Tables 2(A–C) and 3(A–C) for SPI-3, SPI-6, and SPI-12 respectively at Paithan and Silload stations. From Table 2(A), it can be seen that at Paithan station, the bolded blue of input combination is found as a better combination input variables, it is given best results of arithmetical norms like MSE, determination coefficients (R2), Adjusted R2, Mallows' Cp, Akaike's AIC and Amemiya's PC as 0.517, 0.726, 0.720, 2.180, -151.13, -130.29, 0826, respectively of among all input combinations for prediction of SPI-3 model. For SPI 6, the lowest values of MSE (0.436) and lowest values of Amemiya's PC of 0.172 among all input combinations. This input combination was achieved a high equal value for R2 (0.831) and a high Adj- R2 of 0.834. Furthermore, the lowest values of Mallows' Cp (-0.837) and lowest values of Amemiya's PC of 0.065 among all input combinations in SPI 12.

Similarly, at the Silload station, the bolded blue input grouping having the lowest value of Mallows' Cp (0.363) and lowest value of Amemiya's PC (0.280) among all selected input combinations as the best input combination for SPI-3 and it has a high equal value for R2 (0.725) and a high Adj- R2 of 0.729. For SPI-6, the lowest values of Mallows' Cp (2.66) and lowest values of Amemiya's PC of 0.179 among all input combinations. This input combination was achieved a high equal value for R2 (0.824) and a high Adj- R2 of 0.828. Furthermore, the lowest values of Mallows' Cp (-2.02) and lowest values of Amemiya's PC of 0.073 among all input combinations for SPI-12. We have used 30-years data sets are divided into two sections i.e. 75% training and 25% testing dataset, which are measured for validation of the ML models.

3.1.2 Sensitivity Analysis

In this analysis of input combinations are obtained using regression investigation for classify the more important input factors used to forecast of multiscale SPI by machine learning models. The acquired result from regression proces at the Paithan station can be seen in Table 4(A–C) and Fig. 3a–c. The outputs of accomplished regression process based on whole input variables has been showed of SPI (t-1), SPI (t-4), SPI (t-5), SPI ((t-6), and SPI (t-9) have the highest absolute standard coefficients (β = 0.892, -0.103, 0.134, -0.615 and 0.101) recognized as more important input variables for simulation of SPI-3. In SPI 6 simulation, the conclusions of done regression analysis based on input variables have been proved that SPI (t-1), SPI (t-2), SPI (t-6) and SPI (t-7) by having highest absolute standard coefficients (β = 0.993, -0.071, -0.140 and 0.128) were observed as more significant input parameters, respectively. In addition, our results stated that SPI (t-1), SPI (t-3) and SPI (t-6) by having the highest absolute standard coefficients (β = 1.000, 0.086 and -0.157) were identified as the more significant input variables, which is used to estimating of SPI-12.

The Table 5(A–C) and Figs. 4a–c listed the outcomes of regression analysis to identify the most effective input parameters in predictive data-driven models at the Silload station. The results of performed regression analysis on all input parameters proved that SPI (t-1), SPI (t-3), SPI (t-4), and SPI (t-10) having the highest absolute standard coefficients (β = 0.946, -0.269, 0.105, and 0.087) were found as the most significant initial parameters, respectively for simulation of SPI-3. As well, for SPI 6 simulation, an observed of performed regression process on whole input variables have been proved of SPI (t-1), SPI (t-2), SPI (t-3), SPI (t-5), and SPI (t-9) by having highest absolute standard coefficients (β = 1.002, -0.144, 0.082, -0.107, and 0.086) are found as most powerful input factors. Similarly, the result showed that SPI (t-1) and SPI (t-8) by having the highest absolute standard coefficients (β = 1.012 and -0.092) were found as the important input factors, which is computed of SPI-12.

3.2 Evaluation Machine Learning Models

The multiscale SPI at both various stations were computed by using applied four ML models. The performances of used to models were assessed with associated using different performance metrics (i.e., MAE. RMSE, RAE, RRSE and r). The model with a high r and lowest value of MAE, RMSE, RAE, and RRSE with close to 0 is considered the larger accurateness for assessment of SPI. The common trend MAE. The models performance indicators R, RMSE, RAE, RRSE are obtainable in Tables 4 and 5(A–C). The algorithm SMO-SVM with two kernels i.e. SMO-SVM poly kernel and SMO-SVM PUK kernel has possible to assess the SPI with better accuracy as associated with another selected kernels.

3.2.1 Estimation of Multiscale SPI at Paithan Station

The algorithm was used to SMO-SVM with four kernel functions, i.e. Poly kernel, normalized poly kernel, PUK kernel and RBF kernel, was calculated using statistics indicators (MAE, RMSE, RAE, RRSE, and r) at Paithan station and obtainable in Table 6(A–C). It is clear from table of SMO-SVM poly kernel model performed better during the training and testing period in predicting SPI-3. The results of r, MAE, RMSE, RAE (%) and RRSE (%) for model SMO-SVM poly kernel were obtained as 0.773 and 0.905, 0.699 and 0.329, 0.979 and 0.493, 75.38 and 40.52, 70.52 and 42.88, respectively during training and testing stages for SPI-3. In case of SPI-6, the punk kernel improves the performance of SMO-SVM as it gave the best performance criteria values, MAE = 0.387, RSME = 0.555, RAE = 47.17%, RRSE = 40.10%, r = 0.920 during the testing period. Similarly, for SPI-12, the SMO-SVM algorithm with Poly kernel was superior over the other selected kernel function and gave the values of r, MAE, RMSE, RAE (%) and RRSE (%) as 0.961 and 0.984, 0.254 and 0.187, 0.543 and 0.361, 26.22 and 18.77, 28.70 and 20.01, respectively during training and testing phases. Therefore, it revealed that the SMO-SVM algorithm performed better with poly kernel for SPI-3 and SPI-12, while PUK kernel improved the performance of the SMO-SVM model during the prediction of SPI-6.

The temporal variation between estimated and observed multiscale SPI and their scatter plots are presented in Fig. 5a–c. In scatter graphs, the regression line delivered the highest coefficient of determination (R2) as 0.819 for the SMO-SVM poly kernel (SPI-3), 0.846 for the SMO-SVM PUK kernel (SPI-6), and 0.968 for the SMO-SVM poly kernel (SPI-12), respectively [Fig. 5a–c]. The regression line (RL) was above the best fit (1:1) for all the applied models, which means these models overestimated the multiscale SPI values concerning the observed SPI values. The SMO-SVM poly kernel and the SMO-SVM PUK kernel models provided that RL is good fitted line and presented a superior performance.

In addition to the above, the relative analysis of models was done with help of Taylor diagram. According to Fig. 6a–c, the SMO-SVM RBF kernel model was located furthest. The SMO-SVM poly kernel model for SPI-3 & SPI-12 and SMO-SVM PUK kernel model for SPI-6 were located nearest the observed point depending on the standard deviation, correlation, and RMSE. This showed SMO-SVM RBF kernel as the worst model and SMO-SVM poly kernel and SMO-SVM PUK kernel as the best model among the selected models for multiscale SPI estimation.

3.2.2 Estimation of Multiscale SPI at Silload Station

The results obtained for multiscale SPI estimation at Silload station is summarized in Table 7(A–C). It revealed that the SMO-SVM poly kernel model was superior over the other models with r = 0.858, MAE = 0.421, RMSE = 0.644, RAE = 45.17% and RRSE = 51.60% during the testing period in the estimation of SPI-3. Similarly, Table 7(B) summarized that the best performance of SMO-SVM poly kernel model was for projecting the SPI-6 at Silload station with the best performance criteria values, MAE = 0.359, RSME = 0.486, RAE = 45.11%, RRSE = 39.71%, r = 0.917 during the testing period. As noted from Table 7(B), the SMO-SVM performance was observed to be the best with PUK kernel functions for SPI-12 and having the best performance indicator values (MAE = 0.191, RSME = 0.338, RAE = 16.64%, RRSE = 18.71%, r = 0.987) during the testing span for projection of drought condition.

The temporal disparity between the projected and calculated values for multiscale SPI by the SMO-SVM with four kernel functions i.e. Poly kernel, normalized poly kernel, PUK kernel and RBF kernel models during the testing span at Silload station are presented in Figs. 7a–c. As seen from these Figures, the line of regression of SMO-SVM poly kernel was quite near the best-fit line and the highest coefficient of determination (R2) as 0.736 and 0.841 for SPI-3 and SPI-6 respectively. The SMO-SVM PUK kernel showed the regression line close to the best-fit line for SPI-12 and gave the value of R2 of 0.975.

Accordingly, the spatial design of projected and calculated values of multi-scalar SPI for the SMO-SVM with four kernel functions i.e. Poly kernel, normalized poly kernel, PUK kernel and RBF kernel models were measured by utilizing the Taylor diagram (TD) as a polar graph for accomplishing a graphical judgment of model performance based on standard deviation, correlation coefficient, and RMSE. Figures 8a–c shows the TD of applied models for the testing span. Consequently, Fig. 8a–b shows that the SMO-SVM with the chosen poly kernel can be utilized for SPI projection at 3 and 6-month time spans and Fig. 8c displays that the SMO-SVM with PUK kernel for the 12-months.

4 Discussion

The performance of the SMO-SVM algorithm and four various kernel functions was assessed of both stations, (Paithasn and Silload) for the multiscale prediction of SPI (i.e. 3, 6 and 12 month). The results have been obtained highlighted the suitability of machine learning algorithms for the prediction of multiscale SPI, especially. Figures 5, 6 and 7 represented the temporal variation between predicted and calculated SPI values and their scatter plots for SPI-3, SPI-6, and SPI-12 months. In scatter graphs, the regression line has given the high value of the coefficient of determination (R2) regarding the SMO-SVM with poly kernel and PUK kernel at both stations for SPI-3, SPI-6, and SPI-12 months. As seen from the Figures, the meta-heuristics algorithms performed better in a longer time scale. The Taylor Diagrams (Figs. 6, 7 and 8) showed a more comparable depiction of model performance in predicting monthly SPI values. The SMO-SVM RBF kernel model was located furthest, and the SMO-SVM poly kernel model was located nearest to the observed point based on the standard deviation, correlation, and RMSE for SPI-3, 12 at Paithan and SPI-3, 6 at Silload stations. This showed that the SMO-SVM poly kernel has higher accuracy in predicting monthly SPI than other kernel-based predictions.

The findings from this study are also associated with the recent work shown in a different location in the world (Deo et al. 2017; Rahmati et al. 2020; Malik et al. 2021; Elbeltagi et al. 2022b). Deo et al. (2017) applied machine learning models for predicting SPI values for eastern Australia. The result showed that M5Tree models were the best model and this model is a lower value of RSME and MAE and a high R2 value. A similar attempt was made by Rahmati et al. (2020) and compared the machine learning algorithms random forests (RF), multivariate adaptive regression splines (MARS), flexible discriminant analysis (FDA), boosted regression trees (BRT), and support vector machines (SVM) for spatial modelling of agricultural droughts in the south-east region of Australia. They found RF models yielded the highest accuracy than the other models. Malik et al. (2021) predicted SPI using Multi-layers Perceptron Neural Network (MLPNN), Co-Active Neuro-Fuzzy Inference System (CANFIS), and Multiple Linear Regression (MLR), in the Garhwal, Uttarakhand, India. They found that CANFIS model has higher accuracy in the prediction of SPI-3, 6 and 12 in Dehradun; SPI-1, 3, 6, 9 and 12 months in Chamoli and Tehri Garhwal; SPI-1, 3, 6, and 9 months in Haridwar and Pauri Garhwal; SPI-1, 3, and 6 months Rudraprayag and SPI-3 in Uttarkashi station, respectively. Özger et al. (2020) modelled the self-calibrated Palmer Drought Severity Index (SC-PDSI) values through the application of machine learning techniques (i.e., ANFIS, SVM, and M5 models) and their hybrid with empirical mode decomposition (i.e., EMD-ANFIS, EMD-SVM, EMD-M5) and wavelet decomposition (i.e., WD-ANFIS, WD-SVM, WD-M5). They reported that WD hybrid models such as WD-ANFIS, WD-SVM, WD-M5 have better performance than the EMD hybrid models. Aiyelokun et al. (2020) assessed the presentation of Support Vector Machine (SVM) with various kernel classes with ANN depending on the various neural network structures used for the forecasting of multiscale SPI values at Minna, North Central Nigeria. The SVM with Radial Basis kernel outperformed other SVM types with R2 (0.64) and ANN with two hidden layers gave the best result. The present study also unravelled that of all the kernel types considered for the SMO-SVM algorithm, the algorithm with poly kernel and PUK kernel has high accuracy than the Normalized and RBF kernels in the prediction of monthly SPI at both the stations. The study further revealed that the performance of Poly kernel and PUK kernel based-SMO-SVM were close to each other in the prediction of long time droughts (i.e., SPI-6 and SPI-12). Low precipitation, significant oscillations in average rainfall, and climate change, particularly as a result of regional and global warming, all have an impact on the occurrence of drought. Therefore, it is imperative to create drought management policies and successfully carry them out with the help and support of public and private entities. With the use of three months' worth of SPI data, the findings of the current study can be used to understand the water availability for the entire year in advance, which can then be used to develop an effective climate-smart agriculture strategy with ramifications for the world economy.

5 Conclusion

Assessment of extreme conditions in the semi-arid region plays an important role in water resource management. One of the most expensive risks to environments worldwide is drought, particularly in areas with complex climate patterns. Drought is a spatial–temporal phenomenon that happens when precipitation levels fall below average for a given time period and become dangerously low. The study was evaluated the improved support vector machine based on sequential minimal optimization (SVM-SMO) under various kernel functions (i.e., SMO-SVM poly kernel, SMO-SVM Normalized poly kernel, SMO-SVM PUK kernel and SMO-SVM RBF kernel) in predicting the SPI-3,6, and 12 months at the upper Godavari river basin, India. Data with extra variability have been selected and used for training and testing model performance. Ten input combinations were used and the best combinations were selected using the method of subset regression and sensitivity analysis. The performance was assessed using statistical criteria and visual graphics. The results revealed that at Paithan station, the SVM-SMO poly kernel model performed well in capturing SPI-3 (RSME = 0.493, and r = 0.905) and SPI-12 (RSME = 0.361, and r = 0.984), while predicting SPI-6, the SMO-SVM at PUK kernel performed superior (RSME = 0.555, and r = 0.920). Similarly, at the Silload station, the SVM-SMO with poly kernel function showed the best result for SPI-3 (r = 0.858) and SPI-6 (r = 0.917) and SVM-SMO algorithm with PUK kernel precisely predicted SPI-12 with a lower value of RMSE (0.338) and high value of correlation coefficient (0.987). This showed the efficacy of applied algorithms in predicting the SPI drought index. Based on the result obtained, it can be concluded that the SVM-SMO algorithm with poly and PUK kernels showed competitive results in the prediction of SPI- 6 and SPI-12. While predicting SPI-3, the SVM-SMO algorithm with a poly kernel was on the top during the testing period at both stations. It has been observed that the performance of the applied algorithms with various kernel functions was increased when predicting the long-term drought conditions. Finally, the poly and PUK kernels function significantly enhanced the performance of SMO-SVM algorithms by improving the correlation coefficient and decreasing the MAE and RMSE values in the study stations. This study provides simple information and will help policymakers or resource managers in the preparation of droughts mitigation strategies. However, it becomes more challenging due to uncertainties, heterogeneity, linked datasets, methodologies, scenarios, models with a lot of algorithmic parameters, search space, and optimization process. Thus, future study should concentrate on reducing these uncertainties and enhancing optimization performance. Additionally, because just two stations were chosen for this investigation, it is possible to evaluate the applicability of the suggested SMO-SVM model at many sites with variable agro-climatic conditions under various sceneros in order to reach a more generalized conclusion.

Data Availability and Materials

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

Abdo HG (2020) Evolving a total-evaluation map of flash flood hazard for hydro-prioritization based on geohydromorphometric parameters and GIS–RS manner in Al-Hussain river basin, Tartous, Syria. Nat Hazards 104:681–703. https://doi.org/10.1007/s11069-020-04186-3

Achirul Nanda M, Boro Seminar K, Nandika D, Maddu A (2018) A comparison study of kernel functions in the support vector machine and its application for termite detection. Information 9:5. https://doi.org/10.3390/info9010005

Ahmed K, Shahid S, Chung E-S et al (2019) Climate change uncertainties in seasonal drought severity-area-frequency curves: Case of arid region of Pakistan. J Hydrol 570:473–485. https://doi.org/10.1016/j.jhydrol.2019.01.019

Aiyelokun O, Ojelabi A, Agbede O (2020) Performance evaluation of machine learning models in predicting dry and wet climatic phases. J Soft Comput Civil Eng 4:29–48. https://doi.org/10.22115/scce.2020.213319.1154

Ali M, Deo RC, Maraseni T, Downs NJ (2019a) Improving SPI-derived drought forecasts incorporating synoptic-scale climate indices in multi-phase multivariate empirical mode decomposition model hybridized with simulated annealing and kernel ridge regression algorithms. J Hydrol 576:164–184. https://doi.org/10.1016/j.jhydrol.2019.06.032

Ali R, Kuriqi A, Abubaker S, Kisi O (2019) Long-term trends and seasonality detection of the observed flow in Yangtze River using Mann-Kendall and Sen’s innovative trend method. Water 11:1855. https://doi.org/10.3390/w11091855

Banadkooki FB, Singh V, Ehteram M (2021) Multi-timescale drought prediction using new hybrid artificial neural network models. Nat Hazards. https://doi.org/10.1007/s11069-021-04550-x

Barker LJ, Hannaford J, Chiverton A, Svensson C (2016) From meteorological to hydrological drought using standardised indicators. Hydrol Earth Syst Sci 20:2483–2505. https://doi.org/10.5194/hess-20-2483-2016

Belayneh A, Adamowski J, Khalil B, Ozga-Zielinski B (2014) Long-term SPI drought forecasting in the Awash River Basin in Ethiopia using wavelet neural network and wavelet support vector regression models. J Hydrol 508:418–429. https://doi.org/10.1016/j.jhydrol.2013.10.052

Bonaccorso B, Cancelliere A, Rossi G (2015) Probabilistic forecasting of drought class transitions in Sicily (Italy) using standardized precipitation index and north atlantic oscillation index. J Hydrol (Amsterdam) 526:136–150. https://doi.org/10.1016/j.jhydrol.2015.01.070

Borji M, Malekian A, Salajegheh A, Ghadimi M (2016) Multi-time-scale analysis of hydrological drought forecasting using support vector regression (SVR) and artificial neural networks (ANN). Arab J Geosci 9:725. https://doi.org/10.1007/s12517-016-2750-x

Boser BE, Guyon IM, Vapnik VN (1992) A training algorithm for optimal margin classifiers. In Proceedings of the 5th annual workshop on Computational learning theory. Association for Computing Machinery, New York, NY, USA, pp 144–152

Buttafuoco G, Caloiero T, Coscarelli R (2015) Analyses of drought events in Calabria (Southern Italy) using standardized precipitation index. Water Res Manag 29:557–573

Cortes C, Vapnik V (1995) Support-vector networks. Mach Learn 20:273–297. https://doi.org/10.1007/BF00994018

Deo RC, Kisi O, Singh VP (2017) Drought forecasting in eastern Australia using multivariate adaptive regression spline, least square support vector machine and M5Tree model. Atmos Res 184:149–175. https://doi.org/10.1016/j.atmosres.2016.10.004

Deo RC, Şahin M (2015) Application of the extreme learning machine algorithm for the prediction of monthly Effective Drought Index in eastern Australia. Atmos Res 153:512–525. https://doi.org/10.1016/j.atmosres.2014.10.016

Deo RC, Salcedo-Sanz S, Carro-Calvo L, Saavedra-Moreno B (2018) Chapter 10 - Drought Prediction With Standardized Precipitation and Evapotranspiration Index and Support Vector Regression Models. In: Samui P, Kim D, Ghosh C (eds) Integr Disaster Sci Manag. Elsevier, pp 151–174

Dutta D, Kundu A, Patel NR (2013) Predicting agricultural drought in eastern Rajasthan of India using NDVI and standardized precipitation index. Geocarto Int 28:192–209. https://doi.org/10.1080/10106049.2012.679975

Elbeltagi A, Di Nunno F, Kushwaha NL et al (2022a) River flow rate prediction in the Des Moines watershed (Iowa, USA): a machine learning approach. Stoch Environ Res Risk Assess. https://doi.org/10.1007/s00477-022-02228-9

Elbeltagi A, Kumar M, Kushwaha NL et al (2022b) Drought indicator analysis and forecasting using data driven models: case study in Jaisalmer. Stoch Environ Res Risk Assess, India. https://doi.org/10.1007/s00477-022-02277-0

Elbeltagi A, Raza A, Hu Y et al (2022c) Data intelligence and hybrid metaheuristic algorithms-based estimation of reference evapotranspiration. Appl Water Sci 12:152. https://doi.org/10.1007/s13201-022-01667-7

Gocić M, Motamedi S, Shamshirband S et al (2015) Potential of adaptive neuro-fuzzy inference system for evaluation of drought indices. Stoch Environ Res Risk Assess 29:1993–2002. https://doi.org/10.1007/s00477-015-1056-y

Hateffard F, Mohammed S, Alsafadi K et al (2021) CMIP5 climate projections and RUSLE-based soil erosion assessment in the central part of Iran. Sci Rep 11:7273. https://doi.org/10.1038/s41598-021-86618-z

Ihinegbu C, Ogunwumi T (2021) Multi-criteria modelling of drought: a study of Brandenburg Federal State. Model Earth Syst Environ, Germany. https://doi.org/10.1007/s40808-021-01197-2

Ionita M, Scholz P, Chelcea S (2016) Assessment of droughts in Romania using the Standardized Precipitation Index. Nat Hazards 81:1483–1498. https://doi.org/10.1007/s11069-015-2141-8

Komasi M, Sharghi S, Safavi HR (2018) Wavelet and cuckoo search-support vector machine conjugation for drought forecasting using Standardized Precipitation Index (case study: Urmia Lake, Iran). J Hydroinf 20:975–988. https://doi.org/10.2166/hydro.2018.115

Kushwaha NL, Elbeltagi A, Mehan S et al (2022a) Comparative study on morphometric analysis and RUSLE-based approaches for micro-watershed prioritization using remote sensing and GIS. Arab J Geosci 15:564. https://doi.org/10.1007/s12517-022-09837-2

Kushwaha NL, Rajput J, Elbeltagi A et al (2021) Data intelligence model and meta-heuristic algorithms-based pan evaporation modelling in two different agro-climatic zones: a case study from Northern India. Atmosphere 12:1654. https://doi.org/10.3390/atmos12121654

Kushwaha NL, Rajput J, Sena DR et al (2022b) Evaluation of data-driven hybrid machine learning algorithms for modelling daily reference evapotranspiration. Atmos Ocean 62:1–22. https://doi.org/10.1080/07055900.2022.2087589

Kushwaha NL, Rajput J, Shirsath PB et al (2022c) Seasonal climate forecasts (SCFs) based risk management strategies: A case study of rainfed rice cultivation in India. J Agrometeorol 24:10–17. https://doi.org/10.54386/jam.v24i1.775

Liu C, Yang C, Yang Q, Wang J (2021) Spatiotemporal drought analysis by the standardized precipitation index (SPI) and standardized precipitation evapotranspiration index (SPEI) in Sichuan Province. China Sci Rep 11:1280. https://doi.org/10.1038/s41598-020-80527-3

Malik A, Kumar A, Rai P, Kuriqi A (2021) Prediction of Multi-Scalar Standardized Precipitation Index by Using Artificial Intelligence and Regression Models. Climate 9:28. https://doi.org/10.3390/cli9020028

Masroor M, Rehman S, Sajjad H et al (2021) Assessing the impact of drought conditions on groundwater potential in Godavari Middle Sub-Basin, India using analytical hierarchy process and random forest machine learning algorithm. Groundw Sustain Dev 13:100554. https://doi.org/10.1016/j.gsd.2021.100554

McKee T, Doeskin N, Kleist J (1995) Drought monitoring with multiple time scales. American Meteorological Society, Dallas, Texas, pp 233–236

Mehr AD, Kahya E, Özger M (2014) A gene–wavelet model for long lead time drought forecasting. J Hydrol Complete:691–699. https://doi.org/10.1016/j.jhydrol.2014.06.012

Mokhtar A, Jalali M, He H et al (2021) Estimation of SPEI Meteorological Drought Using Machine Learning Algorithms. IEEE Access 9:65503–65523. https://doi.org/10.1109/ACCESS.2021.3074305

Nguyen LB, Li QF, Ngoc TA, Hiramatsu K (2015) Adaptive Neuro-Fuzzy Inference System for drought forecasting in the Cai River basin in Vietnam. J Fac Agric Kyushu Univ 60:405–415

Özger M, Başakın EE, Ekmekcioğlu Ö, Hacısüleyman V (2020) Comparison of wavelet and empirical mode decomposition hybrid models in drought prediction. Comput Electron Agric 179:105851. https://doi.org/10.1016/j.compag.2020.105851

Pande Chaitanya B, Nadhir Al-Ansari NL, Kushwaha Aman Srivastava, Noor Rabeea, Kumar Manish, Moharir Kanak N, Elbeltagi Ahmed (2022) Forecasting of SPI and Meteorological Drought Based on the Artificial Neural Network and M5P Model Tree. Land 11:2040. https://doi.org/10.3390/land11112040

Palmer (1965) Meteorological drought. U.S. Research Paper No. 45. US Weather Bureau,Washington, DC., Washington, DC

Palmer WC (1968) Keeping track of crop moisture conditions, nationwide: The new crop moisture index. Weatherwise 21:156–161. https://doi.org/10.1080/00431672.1968.9932814

Park S, Im J, Jang E, Rhee J (2016) Drought assessment and monitoring through blending of multi-sensor indices using machine learning approaches for different climate regions. Agric for Meteorol 216:157–169. https://doi.org/10.1016/j.agrformet.2015.10.011

Rahmati O, Falah F, Dayal KS et al (2020) Machine learning approaches for spatial modeling of agricultural droughts in the south-east region of Queensland Australia. Sci Total Environ 699:134230. https://doi.org/10.1016/j.scitotenv.2019.134230

Rhee J, Im J (2017) Meteorological drought forecasting for ungauged areas based on machine learning: Using long-range climate forecast and remote sensing data. Agric Meteorol 237–238:105–122. https://doi.org/10.1016/j.agrformet.2017.02.011

Sakaa B, Elbeltagi A, Boudibi S, Chaffaï H, Islam ARM, Kulimushi LC, Choudhari P, Hani A, Brouziyne Y, Wong YJ (2022) Water quality index modeling using random forest and improved SMO algorithm for support vector machine in Saf-Saf river basin. Environ Sci Pollut Res 29:48491–48508

Saada N, Abu-Romman A (2017) Multi-site Modeling and Simulation of the Standardized Precipitation Index (SPI) in Jordan. J Hydrol Reg Stud 14:83–91. https://doi.org/10.1016/j.ejrh.2017.11.002

Shashua A (2009) Introduction to Machine Learning: Class Notes 67577. arXiv:09043664 [cs]

Shukla S, Wood AW (2008) Use of a standardized runoff index for characterizing hydrologic drought. Geophys Res Lett 35. https://doi.org/10.1029/2007GL032487

Sihag P, Jain P, Kumar M (2018) Modelling of impact of water quality on recharging rate of storm water filter system using various kernel function based regression. Model Earth Syst Environ 4:61–68. https://doi.org/10.1007/s40808-017-0410-0

Sreekesh S, Kaur N, Sreerama Naik SR (2019) Agricultural drought and soil moisture analysis using satellite image-based indices. In: The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences. Copernicus GmbH, New Delhi, India, pp 507–514

Suess S, Van der Linden S, Okujeni A et al (2015) Using class probabilities to map gradual transitions in shrub vegetation from simulated EnMAP data. Remote Sens 7:10668–10688. https://doi.org/10.3390/rs70810668

Tan ML, Juneng L, Tangang FT, Chan NW, Ngai ST (2019) Future hydro-meteorological drought of the Johor River Basin, Malaysia, based on CORDEX-SEA projections. Hydrol Sci J 64:921–933

Udmale P, Ichikawa Y, Manandhar S et al (2014) Farmers׳ perception of drought impacts, local adaptation and administrative mitigation measures in Maharashtra State, India. Int J Disaster Risk Reduct 10:250–269. https://doi.org/10.1016/j.ijdrr.2014.09.011

Üstün B, Melssen WJ, Buydens LMC (2006) Facilitating the application of Support Vector Regression by using a universal Pearson VII function based kernel. Chemom Intell Lab Syst 81:29–40. https://doi.org/10.1016/j.chemolab.2005.09.003

World Bank (2003) Financing rapid onset natural disaster losses in india : a risk management approach. World Bank, Washington, DC

Yaseen ZM, Ali M, Sharafati A et al (2021) Forecasting standardized precipitation index using data intelligence models: regional investigation of Bangladesh. Sci Rep 11:3435. https://doi.org/10.1038/s41598-021-82977-9

Zhang G, Ge H (2013) Support vector machine with a Pearson VII function kernel for discriminating halophilic and non-halophilic proteins. Comput Biol Chem 46:16–22. https://doi.org/10.1016/j.compbiolchem.2013.05.001

Zhang Y, Wu L (2012) Classification of fruits using computer vision and a multiclass support vector machine. Sensors 12:12489–12505. https://doi.org/10.3390/s120912489

Funding

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Author information

Authors and Affiliations

Contributions

Chaitanya B. Pande: Original draft writing, conceptualization, formal analysis, Supervision, Data curation, Processing of data, Development of ML models, Writing-review and editing, Investigation. Ahmed Elbeltagi: Methodology, Development of ML models, Validation and formal analysis and writing- review and editing. N. L. Kushwaha: Writing-review and editing, Development of ML models, Visualization. Israel R. Orimoloye: Writing review and editing. Abebe Debele Tolche: Writing review and editing. Hazem Ghassan Abdo: Writing review and editing. Rohitash Kumar: Writing review and editing.

Corresponding authors

Ethics declarations

Conflicts of Interest

The authors declare no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Pande, C.B., Kushwaha, N.L., Orimoloye, I.R. et al. Comparative Assessment of Improved SVM Method under Different Kernel Functions for Predicting Multi-scale Drought Index. Water Resour Manage 37, 1367–1399 (2023). https://doi.org/10.1007/s11269-023-03440-0

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11269-023-03440-0