Abstract

In image processing, sparse coding has been known to be relevant to both variational and Bayesian approaches. The regularization parameter in variational image restoration is intrinsically connected with the shape parameter of sparse coefficients’ distribution in Bayesian methods. How to set those parameters in a principled yet spatially adaptive fashion turns out to be a challenging problem especially for the class of nonlocal image models. In this work, we propose a structured sparse coding framework to address this issue—more specifically, a nonlocal extension of Gaussian scale mixture (GSM) model is developed using simultaneous sparse coding (SSC) and its applications into image restoration are explored. It is shown that the variances of sparse coefficients (the field of scalar multipliers of Gaussians)—if treated as a latent variable—can be jointly estimated along with the unknown sparse coefficients via the method of alternating optimization. When applied to image restoration, our experimental results have shown that the proposed SSC–GSM technique can both preserve the sharpness of edges and suppress undesirable artifacts. Thanks to its capability of achieving a better spatial adaptation, SSC–GSM based image restoration often delivers reconstructed images with higher subjective/objective qualities than other competing approaches.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

1.1 Background and Motivation

Sparse representation of signals/images has been widely studied by signal and image processing communities in the past decades. Historically, sparsity dated back to the idea of variable selection studied by statisticians in late 1970s (Hocking 1976) and coring operator invented by RCA researchers in early 1980s (Carlson et al. 1985). The birth of wavelet (Daubechies 1988) (a.k.a. filter bank theory (Vetterli 1986) or multi-resolution analysis (Mallat 1989) in late 1980s rapidly sparkled the interest in sparse representation, which has found successful applications into image coding (Shapiro 1993; Said and Pearlman 1996; Taubman and Marcellin 2001) and denoising (Donoho and Johnstone 1994; Mihcak and Ramchandran 1999; Chang et al. 2000). Under the framework of sparse coding, a lot of research have been centered at two related issues: basis functions (or dictionary) and statistical modeling of sparse coefficients. Exemplar studies of the former are the construction of directional multiresolution representation (e.g., contourlet (Do and Vetterli 2005)) and over-complete dictionary learning from training data (e.g., K-SVD (Aharon et al. 2012; Elad and Aharon 2012), multiscale dictionary learning (Mairal et al. 2008), online dictionary learning (Mairal et al. 2009a) and the non-parametric Bayesian dictionary learning (Zhou et al. 2009)); the latter includes the use of Gaussian mixture models (Zoran and Weiss 2011; Yu et al. 2012), variational Bayesian models (Ji et al. 2008; Wipf et al. 2011), universal models (Ramirez and Sapiro 2012), and centralized Laplacian model (Dong et al. 2013b) for sparse coefficients.

More recently, a class of nonlocal image restoration techniques (Buades et al. 2005; Dabov et al. 2007; Mairal et al. 2009b; Katkovnik et al. 2010; Dong et al. 2011, 2013a, b) have attracted increasingly more attention. The key motivation behind lies in the observation that many important image structures in natural images including edges and textures can be characterized by the abundance of self-repeating patterns. Such observation has led to the formulation of simultaneous sparse coding (SSC) (Mairal et al. 2009b). Our own recent works (Dong et al. 2011, 2013a) have continued to demonstrate the potential of exploiting SSC in image restoration. However, a fundamental question remains open: how to achieve (local) spatial adaptation within the framework of nonlocal image restoration? This question is related to the issue of regularization parameters in a variational setting or shape parameters in a Bayesian one; but the issue becomes even more thorny when it is tangled with nonlocal regularization/sparsity. To the best of our knowledge, how to tune those parameters in a principled manner remains an open problem (e.g, please refer to (Ramirez and Sapiro 2012) and its references for a survey of recent advances).

In this work, we propose a new image model named SSC–GSM that connects Gaussian scale mixture (GSM) with SSC. Our idea is to model each sparse coefficient as a Gaussian distribution with a positive scaling variable and impose a sparse distribution prior (i.e., the Jeffrey prior (Box and Tiao 2011) used in this work) over the positive scaling variables. We show that the maximum a posterior (MAP) estimation of both sparse coefficients and scaling variables can be efficiently calculated by the method of alternating minimization. By characterizing the set of sparse coefficients of similar patches with the same prior distribution (i.e., the same non-zero means and positive scaling variables), we can effectively exploit both local and nonlocal dependencies among the sparse coefficients, which have been shown important for image restoration applications (Dabov et al. 2007; Mairal et al. 2009b; Dong et al. 2011). Our experimental results have shown that SSC–GSM based image restoration can deliver images whose subjective and objective qualities are often higher than other competing methods. Visual quality improvements are attributed to better preservation of edge sharpness and suppression of undesirable artifacts in the restored images.

1.2 Relationship to Other Competing Approaches

The connection between sparse coding and Bayesian inference has been previously studied in sparse Bayesian learning (Tipping 2001; Wipf and Rao 2004, 2007) and more recently in Bayesian compressive sensing (Ji et al. 2008), latent variable Bayesian models for promoting sparsity (Wipf et al. 2011). Despite offering a generic theoretical foundation as well as promising results, the Bayesian inference techniques along this line of research often involve potentially expensive sampling (e.g., approximated solutions for some choice of prior are achieved in Wipf et al. (2011)). By contrast, our SSC–GSM approach is conceptually much simpler and admits analytical solutions involving iterative shrinkage/filtering operators only. The other works closely related to the proposed SSC–GSM are group sparse coding with a Laplacian scale mixture (LSM) (Garrigues and Olshausen 2010)and field-of-GSM (Lyu and Simoncelli 2009). In (Garrigues and Olshausen 2010), a LSM model with Gamma distribution imposed over the scaling variables was used to model the sparse coefficients. Approximated estimates of the scale variables were obtained using the Expectation-Maximization (EM) algorithm. Note that the scale variables derived in LSM (Garrigues and Olshausen 2010) is very similar to the weights derived in the reweighted \(l_1\)-norm minimization (Candes et al. 2008). In contrast to those approaches, a GSM model with nonzero means and a noninformative sparse prior imposed over scaling variables are used to model the sparse coefficients here. Instead of using the EM algorithm for an approximated solution, our SSC–GSM offers an efficient inference of both scaling variables and sparse coefficients via alternating optimization method. In Lyu and Simoncelli (2009), a field of FSM (FoGSM) model was constructed using the product of two independent homogeneous Gaussian Markov random fields (hGMRFs) to exploit the dependencies among adjacent blocks. Despite similar motivations to exploit the dependencies between the scaling variables, the techniques used in Lyu and Simoncelli (2009) is significantly different and requires a lot more computations than our SSC–GSM formulation.

The proposed work is related to non-parametric Bayesian dictionary learning (Zhou et al. 2012) or solving inverse problems with piecewise linear estimators (Yu et al. 2012) but ours is motivated by the connection between nonlocal SSC strategy and local parametric GSM model. When compared with previous works on image denoising (e.g., K-SVD denoising (Elad and Aharon 2012), spatially adaptive singular-value thresholding (Dong et al. 2013a) and Expected Patch Log Likelihood (EPLL) (Zoran and Weiss 2011)), SSC–GSM targets at a more general framework of combining adaptive sparse inference with dictionary learning. SSC–GSM based image deblurring has also been experimentally shown superior to existing patch-based methods (e.g., Iterative Decoupled Deblurring BM3D (IDD-BM3D) (Danielyan et al. 2012) and Nonlocal centralized sparse representation (NCSR) (Dong et al. 2013b)) and the gain in terms of ISNR is as much as \(1.5dB\) over IDD-BM3D (Danielyan et al. 2012) for some test images (e.g., \(butterfly\) image - please refer to Fig. 5).

The reminder of this paper is organized as follows. In Sect. 2, we formulate the sparse coding problem with GSM model and generalize it into the new SSC–GSM model. In Sect. 3, we elaborate on the details of how to solve SSC–GSM by alternative minimization and emphasize the analytical solutions for both subproblems. In Sect. 4, we study the application of SSC–GSM into image restoration and discuss efficient implementation of SSC–GSM based image restoration algorithms. In Sect. 5, we report our experimental results in image denoising, image deblurring and image super-resolution as supporting evidence for the effectiveness of SSC–GSM model. In Sect. 6, we make some conclusions about the relationship of sparse coding to image restoration as well as perspectives about the future directions.

2 Simultaneous Sparse Coding via Gaussian Scale Mixture Modeling

2.1 Sparse Coding with Gaussian Scale Mixture Model

The basic idea behind sparse coding is to represent a signal \(\varvec{x}\in {\mathbb {R}}^{n}\) (\(n\) is the size of image patches) as the linear combination of basis vectors (dictionary elements) \({\mathbf {D}}\varvec{\alpha }\) where \({\mathbf {D}}\in {\mathbb {R}}^{n\times K}, n\le K\) is the dictionary and the coefficients \(\varvec{\alpha }\in {\mathbb {R}}^{K}\) satisfies some sparsity constraint. In view of the challenge with \(l_0\)-optimization, it has been suggested that the original nonconvex optimization is replaced by its \(l_1\)-counterpart:

which is convex and easier to solve. Solving the \(l_{1}\)-norm minimization problem corresponds to the MAP inference of \(\varvec{\alpha }\) with an identically independent distributed (i.i.d) Laplacian prior \(P(\alpha _i)=\frac{1}{2\theta _i}e^{-\frac{|\alpha _i|}{\theta _i}}\), wherein \(\theta _i\) denotes the standard derivation of \(\alpha _i\). It is easy to verify that the regularization parameter should be set as \(\lambda _i=2\sigma _n^2/\theta _i\) when the i.i.d Laplacian prior is used, where \(\sigma _n^2\) denotes the variance of approximation errors. In practice, the variances \(\theta _i\)’s of each \(\alpha _i\) are unknown and may not be easy to accurately estimated from the observation \(\varvec{x}\) considering that real signal/image are non-stationary and may be degraded by noise and blur.

In this paper we propose to model sparse coefficients \(\varvec{\alpha }\) with a GSM (Andrews and Mallows 1974) model. The GSM model decomposes coefficient vector \(\varvec{\alpha }\) into the point-wise product of a Gaussian vector \(\varvec{\beta }\) and a hidden scalar multiplier \(\varvec{\theta }\) -i.e., \(\alpha _i=\theta _i \beta _i\), where \(\theta _i\) is the positive scaling variable with probability \(P(\theta _i)\). Conditioned on \(\theta _i\), a coefficient \(\alpha _i\) is Gaussian with the standard derivation of \(\theta _i\). Assuming that \(\theta _i\) are i.i.d and independent of \(\beta _i\), the GSM prior of \(\varvec{\alpha }\) can be expressed as

As a family of probabilistic distributions, the GSM model can contain many kurtotic distributions (e.g., the Laplacian, Generalized Gaussian, and student’s t-distribution) given an appropriate \(P(\theta _{i})\).

Note that for most of choices of \(P(\theta _i)\) there is no analytical expression of \(P(\alpha _i)\) and thus it is difficult to compute the MAP estimates of \(\alpha _i\). However, such difficulty can be overcome by joint estimation of \((\alpha _i, \theta _i)\). For a given observation \(\varvec{x}={\mathbf {D}}\varvec{\alpha }+\varvec{n}\), where \(\varvec{n}\sim N(0,\sigma _{n}^{2})\) denotes the additive Gaussian noise, we can formulate the following MAP estimator

where \(P(\varvec{x}|\varvec{\alpha })\) is the likelihood term characterized by Gaussian function with variance \(\sigma _n^2\). The prior term \(P(\varvec{\alpha }|\varvec{\theta })\) can be expressed as

Instead of assuming the mean \(\mu _i=0\), we propose to use a biased-mean \(\mu _i\) for \(\alpha _i\) here inspired by our previous work NCSR (Dong et al. 2013b) (its estimation will be elaborated later).

The adoption of GSM model allows us to generalize the sparsity from statistical modeling of sparse coefficients \(\varvec{\alpha }\) to the specification of sparse prior \(P(\varvec{\theta })\). It has been suggested in the literature that noninformative prior (Box and Tiao 2011) \(P(\theta _i) \approx \frac{1}{\theta _i}\)—a.k.a. Jeffrey’s prior—is often the favorable choice. Therefore, we have also adopted this option in this work, which translates Eq. (3) into

where we have used \(P(\varvec{\theta })=\sum _i P(\theta _i)\). Noting that Jeffrey’s prior is unstable as \(\theta _i\rightarrow 0\); so we replace \(\log \theta _i\) by \(\log (\theta _i+\epsilon )\) where \(\epsilon \) is a small positive number for numerical stability and rewrite \(\sum _i \log (\theta _i+\epsilon )\) into \(\log (\varvec{\theta }+\epsilon )\) for notational simplicity. The above equation can then be further translated into the following sparse coding problem

Note that the matrix form of original GSM model is \(\varvec{\alpha }=\varLambda \varvec{\beta }\) and \(\varvec{\mu }=\varLambda \varvec{\gamma }\) where \(\varLambda =diag(\theta _i)\in {\mathbb {R}}^{K \times K}\) is a diagonal matrix characterizing the variance field for a chosen image patch. Accordingly, the sparse coding problem in Eq. (6) can be translated from \((\varvec{\alpha },\varvec{\mu })\) domain to \((\varvec{\beta },\varvec{\gamma })\) domain as follows

In other words, the sparse coding formulation of GSM model boils down to the joint estimation of \(\varvec{\beta }\) and \(\varvec{\theta }\). But unlike (Portilla et al. 2003) that treats the multiplier as a hidden variable and cancel it out through integration (i.e., the derivation of Bayes Least-Square estimate), we explicitly use the field of Gaussian scalar multiplier to characterize the variability and dependencies among local variances. Such sparse coding formulation of GSM model is appealing because it allows us to further exploit the power of GSM by connecting it with structured sparsity as we will detail next.

2.2 Exploiting Structured Sparsity for the Estimationof the Field of Scalar multipliers

A key observation behind our approach is that for a collection of similar patches, their corresponding sparse coefficients \(\varvec{\alpha }\)’s should be characterized by the same prior i.e., the probability density function with the same \(\varvec{\theta }\) and \(\varvec{\mu }\). Therefore, if one considers the SSC of GSM models for a collection of \(m\) similar patches, the structured/group sparsity based extension of Eq. (7) can be written as

where \({\mathbf {X}}=[\varvec{x}_1,...,\varvec{x}_m]\) denotes the collection of \(m\) similar patchesFootnote 1, \({\mathbf {A}}=\varLambda {\mathbf {B}}\) is the group representation of GSM model for sparse coefficients and their corresponding first-order and second-order statistics are characterized by \(\varGamma =[\varvec{\gamma }_{1},...,\varvec{\gamma }_{m}]\in {\mathbb {R}}^{K\times m}\) and \({\mathbf {B}}=[\varvec{\beta }_{1},...,\varvec{\beta }_{m}]\in {\mathbb {R}}^{K\times m}\) respectively, wherein \(\varvec{\gamma }_j=\varvec{\gamma }, j=1,2,\ldots ,m\). Given a collection of \(m\) similar patches, we have adopted the nonlocal means approach (Buades et al. 2005) for estimating \(\varvec{\mu }\)

where \(w_j\sim \exp (-\Vert \varvec{x}-\varvec{x}_j\Vert _2^2/h))\) is the weighting coefficient based on patch similarity. It follows from \(\varvec{\mu }=\varLambda \varvec{\gamma }\) that

A practical issue of Eq. (10) is that the original patches \(\varvec{x}_j\) and \(\varvec{x}\), as well as the sparse codes \(\varvec{\alpha }_j\) and \(\varvec{\alpha }\) are not available, and thus we cannot directly compute \(\varvec{\gamma }\) using the Eq. (10). To avoid such difficulty, we can treat \(\varvec{\gamma }\) as another optimization variable and jointly estimate it with the sparse coefficients as

where the weights \(\varvec{w}=[w_1,\ldots ,w_m]^T\) are pre-computed using the initial estimate of the image. Using the alternative directional multiplier method (ADMM) (Boyd et al. 2011), Eq. (11) can be approximately solved. However, the computational complexity for solving the sub-problem of \(\varvec{\gamma }\) is high. Alternatively, we can overcome such difficulty by iteratively estimating \(\varvec{\gamma }\) from the current estimates of the sparse coefficients without any sacrifice of the performance. Let \(\varvec{\beta }_j=\hat{\varvec{\beta }}_j+\varvec{e}_j\), wherein \(\varvec{e}_j\) denotes the estimation error of \(\varvec{\beta }_j\) and is assumed to be Gaussian and zero-mean. Then, Eq. (10) can be re-expressed as

where \(\varvec{n}_w\) denotes the estimation error of \(\varvec{\gamma }\). As \(\varvec{e}_j\) is assumed to be zero-mean Gaussian, \(\varvec{n}_w\) would be small. Thus, \(\varvec{\gamma }\) can be readily obtained from the estimates of representation coefficients \(\varvec{\beta }_j\). In practice, we recursively compute \(\varvec{\gamma }\) using the previous estimates of \(\varvec{\beta }_j\) after each iteration.

We call such new formulation in Eq. (8) Simultaneous Sparse Coding for Gaussian Scalar Mixture (SSC–GSM) and propose to develop computationally efficient solution to this problem in the next section. Note that here the formulation of SSC–GSM in Eq. (8) is for a given dictionary \({\mathbf {D}}\). However, the dictionary \({\mathbf {D}}\) can also be optimized for a fixed pair of \(({\mathbf {B}}, \varvec{\theta })\) such that both dictionary learning and statistical modeling of sparse coefficients can be unified within the framework of Eq. (8). Figure 1 shows the denoising results by the two proposed methods that use the model of Eqs. (7) and (8), respectively. Both the two proposed methods use the same dictionary \({\mathbf {D}}\) and \(\varvec{\gamma }\). From Fig. 1 we can see that the proposed method without nonlocal extension outperforms the KSVD method (Elad and Aharon 2012). With the nonlocal extension, the denoising performance of the proposed method is significantly improved.

Denoising performance comparison between the variants of the proposed method. (a) Noisy image (\(\sigma _n=20\)); (b) KSVD (Elad and Aharon 2012) (PSNR = 29.90 dB); (c) the proposed method (without nonlocal extension) uses the model of Eq. (7) (PSNR = 30.18 dB); (d) the proposed SSC–GSM method (PSNR = 30.84 dB)

3 Solving Simultaneous Sparse Coding via Alternating Minimization

In this section, we will show how to solve the optimization problem in Eq. (8) by alternatively updating the estimates of \({\mathbf {B}}\) and \(\varvec{\theta }\). The key observation lies in that the two subproblems - minimization of \({\mathbf {B}}\) for a fixed \(\varvec{\theta }\) and minimization of \(\varvec{\theta }\) for a fixed \({\mathbf {B}}\) - both can be efficiently solved. Specifically, both subproblems admits closed-form solutions when the dictionary is orthogonal.

3.1 Solving \(\varvec{\theta }\) for a Fixed \({\mathbf {B}}\)

For a fixed \({\mathbf {B}}\), the first subproblem simply becomes

which can be rewritten as

where the long vector \(\tilde{\varvec{x}}\!\in \! {\mathbb {R}}^{nm}\) denotes the vectorization of the matrix \({\mathbf {X}}\), the matrix \(\tilde{{\mathbf {D}}}\!=\![\tilde{\varvec{d}}_1, \tilde{\varvec{d}}_2, \ldots , \tilde{\varvec{d}}_K]\!\in \! {\mathbb {R}}^{mn\times K}\) whose each column \(\tilde{\varvec{d}}_j\) denotes the vectorization of the rank-one matrix \(\varvec{d}_i\varvec{\beta }^i\), and \(\varvec{\beta }^i\in {\mathbb {R}}^{m}\) denotes the \(i\)-th row of matrix \({\mathbf {B}}\). For optimizing the nonconvex \(\log \) penalty in Eq. (14), the principled difference of convex functions (DC) programming approach can be used for a local minimum (Gasso et al. 2009). It has also been shown in Candes et al. (2008) that the \(\log \) penalty can be linearly approximated and thus a local minimum of the nonconvex objective function can be obtained by iteratively solving a weighted \(\ell _1\)-penalized optimization problem.

However, the optimization of Eq. (13) can be much simplified when the dictionary \({\mathbf {D}}\) is orthogonal (e.g., DCT or PCA basis). In the case of orthogonal dictionary, Eq. (13) can be rewritten as

where we have used \({\mathbf {X}}={\mathbf {D}}{\mathbf {A}}\). For expression convenience, we can rewrite Eq. (15) as

where \(a_i=\Vert \varvec{\beta }^i\Vert _2^2\), \(b_i=-2\varvec{\alpha }^i(\varvec{\beta }^i)^T\) and \(c=4\sigma _n^2\). Hence, Eq. (16) can be decomposed into a sequence of scalar minimization problem, i.e.,

which can be solved by taking \(\frac{df(\theta _i)}{d\theta _i}=0\), where \(f(\theta _i)\) denotes the right hand side of Eq. (17). We derive

By solving \(g(\theta _i)=0\), we obtain the following two stationary points of \(f(\theta _i)\), i.e.,

when \(b_i^2/(16a_i^2)-c/(2a_i)\ge 0\). Then, the global minimizer of \(f(\theta _i)\) can be obtained by comparing \(f(0)\), \(f(\theta _{i,1})\) and \(f(\theta _{i,2})\).

When \(b_i^2/(16a_i^2)-c/(2a_i)<0\), there does not exist any stationary points in the range of \([0, \infty )\). As \(\epsilon \) is a small positive constant, \(g(0)=b_i+c/\epsilon \) is always positive. Thus, \(f(0)\) is the global minimizer for this case. In summary, the solution to Eq. (17) can be written as

where

3.2 Solving \({\mathbf {B}}\) for a Fixed \(\varvec{\theta }\)

The second subproblem is in fact even easier to solve. It takes the following form

Since both terms are \(l_2\), the closed-form solution to Eq. (22) is essentially the classical Wiener filtering

where \(\hat{{\mathbf {D}}}={\mathbf {D}}\varvec{\varLambda }\). Note that when \({\mathbf {D}}\) is orthogonal, Eq. (23) can be further simplified into

where \(\varvec{\varLambda }^{T} \varvec{\varLambda }+\sigma _{n}^{2}{\mathbf {I}}\) is a diagonal matrix and therefore its inverse can be easily computed.

By alternatively solving both subproblems of Eqs. (13) and (22) for the estimates of \(\varvec{\varLambda }\) and \({\mathbf {B}}\), the image data matrix \({\mathbf {X}}\) can then be reconstructed as

where \(\hat{\varvec{\varLambda }}\) and \(\hat{{\mathbf {B}}}\) denotes the final estimates of \(\varvec{\varLambda }\) and \({\mathbf {B}}\).

4 Application of Bayesian Structured Sparse Coding into Image Restoration

In the previous sections, we have seen how to solve SSC–GSM problem for a single image data matrix \({\mathbf {X}}\) (a collection of image patches similar to a chosen exemplar). In this section, we generalize such formulation to whole-image reconstruction and study the applications of SSC–GSM into image restoration including image denoising, image deblurring and image superresolution. The standard image degradation model is used here: \(\varvec{y}={\mathbf {H}}\varvec{x}+\varvec{w}\) where \(\varvec{x}\in {\mathbb {R}}^N,\varvec{y}\in {\mathbb {R}}^M\) denotes the original and degraded images respectively, \({\mathbf {H}}\in {\mathbb {R}}^{N\times M}\) is the degradation matrix and \(\varvec{w}\) is additive white Gaussian noise observing \(N(0,\sigma _n^2)\). The whole-image reconstruction problem can be formulated as

where \(\tilde{{\mathbf {R}}}_l\varvec{x}\doteq [{\mathbf {R}}_{l_1}\varvec{x}, {\mathbf {R}}_{l_2}\varvec{x}, \ldots , {\mathbf {R}}_{l_m}\varvec{x}]\in {\mathbb {R}}^{n\times m}\) denotes the data matrix formed by a group of image patches similar to the \(l\)-th exemplar patch \(\varvec{x}_l\) (including \(\varvec{x}_l\) itself), \({\mathbf {R}}_l\in {\mathbb {R}}^{n\times N}\) denotes a matrix extracting the \(l\)-th patch \(\varvec{x}_l\) from \(\varvec{x}\), and \(L\) is the total number of exemplars extracted from the reconstructed image \(\varvec{x}\). For a given exemplar patch \(\varvec{x}_l\), we search similar patches by performing \(k\)-nearest-neighbor search within a large local window (e.g., \(40\times 40\)). As the original image is not available, we use the current estimate of original image for patch matching, i.e.,

where \(T\) denotes the pre-selected threshold and \(S\) denotes the collection of positions of those similar patches. Alternatively, we can form the sample set \(S\) by selecting the patches that are within the first \(m\) (\(m=40\) in our implementation) closest to \(\hat{\varvec{x}}_l\). Invoking the principle of alternative optimization again, we propose to solve the whole-image reconstruction problem in Eq. (26) by alternating the solutions to the following two subproblems.

4.1 Solving \(\varvec{x}\) for a Fixed \(\{{\mathbf {B}}_l\},\{\varvec{\theta }_l\}\)

Let \(\hat{{\mathbf {X}}}_l={\mathbf {D}}\varvec{\varLambda }_l{\mathbf {B}}_l\). When \(\{{\mathbf {B}}_l\}\) and \(\{\varvec{\theta }_l\}\) are fixed, so is \(\{\hat{{\mathbf {X}}}_l\}\). Therefore, Eq. (26) reduces to the following \(l_2\)-optimization problem

which admits the following closed-form solution

where \(\tilde{{\mathbf {R}}}_l^T\tilde{{\mathbf {R}}}_l \doteq \sum _{j=1}^{m}{\mathbf {R}}_j^T{\mathbf {R}}_j\), \(\tilde{{\mathbf {R}}}_l^T\hat{{\mathbf {X}}}_l \doteq \sum _{j=1}^{m}{\mathbf {R}}_j^T\hat{\varvec{x}}_{l_j}\) and \(\hat{\varvec{x}}_{l_j}\) denotes the \(j\)-th column of matrix \(\hat{{\mathbf {X}}}_l\). Note that for image denoising application where \({\mathbf {H}}={\mathbf {I}}\) - the matrix to be inversed in Eq. (29)—is diagonal, and its inverse can be computed easily. Similar to the K-SVD approach, Eq. (29) can be computed by weighted averaging each reconstructed patches sets \(\tilde{{\mathbf {X}}}_l\). For image deblurring and super-resolution applications, Eq. (29) can be computed by using a conjugate gradient (CG) algorithm.

4.2 Solving \(\{{\mathbf {B}}_l\},\{\varvec{\theta }_l\}\) for a Fixed \(\varvec{x}\)

When \(\varvec{x}\) is fixed, the first term in Eq. (26) goes away and the subproblem boils down to a sequence of patch-level SSC–GSM problems formulated for each exemplar - i.e.,

where we use \({\mathbf {X}}_l=\tilde{{\mathbf {R}}}_l\varvec{x}\). This is exactly the problem we have studied in the previous section.

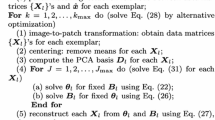

One important issue of the SSC–GSM-based image restoration is the selection of the dictionary. To adapt to the local image structures, instead of learning an over-complete dictionary for each dataset \({\mathbf {X}}_l\) as in Mairal et al. (2009b), we learn the principle component analysis (PCA) based dictionary for each dataset here (similar to NCSR (Dong et al. 2013b)). The use of the orthogonal dictionary much simplifies the Bayesian inference of SSC–GSM. Putting things together, a complete image restoration based on SSC–GSM can be summarized as follows.

In Algorithm 1 we update \({\mathbf {D}}_l\) in every \(k_0\) to save computational complexity. We also found that Algorithm 1 empirically converges even when the inner loop executes only one iteration (i.e., \(J=1\)). We note that the above algorithm can lead to a variety of implementations depending the choice of degradation matrix \({\mathbf {H}}\). When \({\mathbf {H}}\) is an identity matrix, Algorithm 1 is an image denoising algorithm using iterative regularization technique (Xu and Osher 2007). When \({\mathbf {H}}\) is a blur matrix or reduced blur matrix, Eq. (26) becomes the standard formulation of non-blind image deblurring or image super-resolution problem. The capability of capturing rapidly-changing statistics in natural images - e.g., through the use of GSM - can make patch-based nonlocal image models even more powerful.

5 Experimental Results

In this section, we report our experimental results of applying SSC–GSM based image restoration into image denoising, image deblurring and super-resolution. The experimental setup of this work is similar to that in our previous work on NCSR (Dong et al. 2013b). The basic parameter setting of SSC–GSM is as follows: patch size—\(6\times 6\), number of similar blocks— \(K=44\); \(k_{max}=14, k_0=1\) for image denoising, and \(k_{max}=450, k_0=40\) for image deblurring and super-resolution. The regularization parameter \(\eta \) is empirically set. Its values are shown in Table 1. To evaluate the quality of restored images, both PSNR and SSIM (Wang et al. 2004) metrics are used. However, due to limited page space, we can only show part of the experimental results in this paper. More detailed comparisons and complete experimental results are available at the following website: http://see.xidian.edu.cn/faculty/wsdong/SSC_GSM.htm.

5.1 Image Denoising

We have compared SSC–GSM based image denoising method against three current state-of-the-art methods including BM3D Image Denoising with Shape-Adaptive PCA (BM3D-SAPCA) (Katkovnik et al. 2010) (it is an enhanced version of BM3D denoising (Dabov et al. 2007) in which local spatial adaptation is achieved by shape-adaptive PCA), learned simultaneous sparse coding (LSSC) (Mairal et al. 2009b) and nonlocally centralized sparse representation (NCSR) denoising (Dong et al. 2013b). As can be seen from Table 2, the proposed SSC–GSM has achieved highly competitive denoising performance to other leading algorithms. For the collection of 12 test images, BM3D-SAPCA and SSC–GSM are mostly the best two performing methods - on the average, SSC–GSM falls behind BM3D-SAPCA by less than \(0.2dB\) for three out of six noise levels but deliver at least comparable for the other three. We note that the complexity of BM3D-SAPCA is much higher than that of the original BM3D; by contrast, our pure Matlab implementation of SSC–GSM algorithm (without any C-coded optimization) still runs reasonably fast. It takes around 20 s to denoise a \(256\times 256\) image on a PC with an Intel i7-2600 processor at 3.4GHz.

Figures. 2 and 3 include the visual comparison of denoising results for two typical images (\(lena\) and \(house\)) at moderate (\(\sigma _w=20\)) and heavy (\(\sigma _w=100\)) noise levels respectively. It can be observed from Fig. 2 that BM3D-SAPCA and SSC–GSM seem to deliver the best visual quality at the moderate noise level; by contrast, restored images by LSSC and NCSR both suffer from noticeable artifacts especially around the smooth areas close to the hat. When the noise contamination is severe, the superiority of SSC–GSM to other competing approaches is easier to justify—as can be seen from Fig. 3, SSC–GSM achieves the most visually pleasant restoration of the house image especially when one inspects the zoomed portions of roof regions closely.

Denoising performance comparison on the Lena image with moderate noise corruption. (a) Original image; (b) Noisy image (\(\sigma _{n}=20\)); denoised images by (c) BM3D-SAPCA (Katkovnik et al. 2010) (PSNR = 33.20 dB, SSIM = 0.8803); (d) LSSC (Mairal et al. 2009b) (PSNR = 32.88 dB, SSIM = 0.8742); (e) NCSR (Dong et al. 2013b) (PSNR = 32.92 dB, SSIM = 0.8760); (f) Proposed SSC–GSM (PSNR = 33.08, SSIM = 0.8787)

Denoising performance comparison on the House image with strong noise corruption. (a) Original image; (b) Noisy image (\(\sigma _n=100\)); denoised images by (c) BM3D-SAPCA (Katkovnik et al. 2010) (PSNR = 35.20 dB, SSIM = 0.6767); (d) LSSC (Mairal et al. 2009b) (PSNR = 25.63 dB, SSIM = 0.7389); (e) NCSR (Dong et al. 2013b) (PSNR = 25.65 dB, SSIM = 0.7434); (f) Proposed SSC–GSM (PSNR = 26.70, SSIM = 0.7430)

5.2 Image Deblurring

We have also compared SSC–GSM based image deblurring and three other competing approaches in the literature: constrained total variation image deblurring (denoted by FISTA), Iterative Decoupled Deblurring BM3D (IDD-BM3D) (Danielyan et al. 2012) and nonlocally centralized sparse representation (NCSR) denoising (Dong et al. 2013b). Note that the IDD-BM3D and NCSR are two recently developed state-of-the-art non-blind image deblurring approaches. In our comparative study, two commonly-used blur kernal i.e., \(9\times 9\) uniform and 2D Gaussian with standard deviation of 1.6; blurred images are further corrupted by additive white Gaussian noise with variance of \(\sigma _n=\sqrt{2}\). Table 3 includes the PSNR/SSIM comparison results for a collection of 11 images among four competing methods. It can be observed that SSC–GSM clearly outperforms all other three for 10 out of 11 images (the only exception is the \(house\) image for which IDD-BM3D slightly outperforms SSC–GSM by \(0.13dB\)). The gains are mostly impressive for \(butterfly\) and \(barbara\) images which contain abundant strong edges or textures. One possible explanation is that SSC–GSM is capable of striking a better tradeoff between exploiting local and nonlocal dependencies within those images.

Figures 4, 5 and 6 show the visual comparison of deblurring results for three test images: \(starfish\), \(butterfly\) and \(barbara\) respectively. For \(starfish\), it can be observed that IDD-BM3D and NCSR achieve deblurred images with similar quality (both noticeably better than FISTA); restored image by SSC–GSM is arguably the most preferred when compared against the original one (even though the PSNR gain is impressive). For \(butterfly\) and \(barbara\), visual quality improvements achieved by SSC–GSM are readily observable—SSC–GSM is capable of both preserve the sharpness of edges and suppress undesirable artifacts. Such experimental findings clearly suggest that the SSC–GSM model is a stronger prior for the class of photographic images containing strong edges/textures.

Deblurring performance comparison on the Starfish image. (a) Original image; (b) Noisy and blurred image (\(9\times 9\) uniform blur, \(\sigma _n=\sqrt{2}\)); deblurred images by (c) FISTA (Beck and Teboulle 2009) (PSNR = 27.75 dB, SSIM=0.8200); (d) IDD-BM3D (Danielyan et al. 2012) (PSNR = 29.48 dB, SSIM=0.8640); (e) NCSR (Dong et al. 2013b) (PSNR = 30.28 dB, SSIM = 0.8807); (f) Proposed SSC–GSM (PSNR = 30.58 dB, SSIM = 0.8862)

Deblurring performance comparison on the Butterfly image. (a) Original image; (b) Noisy and blurred image (\(9\times 9\) uniform blur, \(\sigma _n=\sqrt{2}\)); deblurred images by (c) FISTA (Beck and Teboulle 2009) (PSNR = 28.37 dB, SSIM=0.9058); (d) IDD-BM3D (Danielyan et al. 2012) (PSNR = 29.21 dB, SSIM=0.9216); (e) NCSR (Dong et al. 2013b) (PSNR = 29.68 dB, SSIM = 0.9273); (f) Proposed SSC–GSM (PSNR = 30.45 dB, SSIM = 0.9377)

Deblurring performance comparison on the Barbara image. (a) Original image; (b) Noisy and blurred image (Gaussian blur, \(\sigma _n=\sqrt{2}\)); deblurred images by (c) FISTA (Beck and Teboulle 2009) (PSNR = 25.03 dB, SSIM = 0.7377); (d) IDD-BM3D (Danielyan et al. 2012) (PSNR = 27.19 dB, SSIM = 0.8231); (e) NCSR (Dong et al. 2013b) (PSNR = 27.91 dB, SSIM = 0.8304); (f) Proposed SSC–GSM (PSNR = 28.42 dB, SSIM = 0.8462)

5.3 Image Superresolution

In our study on image super-resolution, simulated LR images are acquired from first applying a \(7\times 7\) uniform blur to the HR image, then down-sampling the blurred image by a factor of 3 along each dimension, and finally adding white Gaussian noise with \(\sigma _{n}^{2}=25\) to the LR images. For color images, we work with the luminance channel only; simple bicubic interpolation method is applied to the upsampling of chrominance channels. Table 4 includes the PSNR/SSIM comparison for a set of 9 test images among four competing approaches. It can be seen that SSC–GSM outperforms others in most situations. Visual quality comparison as shown in Figs. 7 and 8 also justifies the superiority of SSC–GSM to other SR techniques.

Image super-resolution performance comparison on the Plant image (scaling factor 3, \(\sigma _n=0\)). (a) Original image; (b) Low-resolution image; reconstructed images by (c) TV (Marquina and Osher 2008) (PSNR = 31.34 dB, SSIM = 0.8797); (d) Sparsity-based (Yang et al. 2010) (PSNR = 31.55 dB, SSIM = 0.8964); (e) NCSR (Dong et al. 2013b) (PSNR = 34.00 dB, SSIM = 0.9369); (f) Proposed SSC–GSM (PSNR = 34.33 dB, SSIM = 0.9236)

Image super-resolution performance comparison on the Hat image (scaling factor 3, \(\sigma _n=5\)). (a) Original image; (b) Low-resolution image; reconstructed images by (c) TV (Marquina and Osher 2008) (PSNR = 28.13 dB, SSIM = 0.7701); (d) Sparsity-based (Yang et al. 2010) (PSNR = 28.31 dB, SSIM = 0.7212); (e) NCSR (Dong et al. 2013b) (PSNR = 29.94 dB, SSIM = 0.8238); (f) Proposed SSC–GSM (PSNR = 30.21 dB, SSIM = 0.8354)

5.4 Running Time

The proposed SSC–GSM algorithm was implemented under Matlab. The running time of the proposed method with comparison to other competing methods is reported in Table 5. The LSSC method is implemented in other platform and thus we don’t report its running time. For image denoising, the proposed SSC–GSM method is about 6–9 times faster than the NCSR and BM3D-SAPCA methods. For image deblurring and superresolution, the proposed SSC–GSM method is much slower than other competing methods, as it requires more than four hundreds iterations. Since the patch grouping and the SSC for each exemplar patch can be implemented in parallel, the proposed SSC–GSM method can be much speeded up by using parallel computation techniques (e.g., GPU). Another way to accelerate the proposed method is to improve the convergence speed of Algorithm 1, which we remain it as future work.

6 Conclusions

In this paper, we proposed a new image model named SSC–GSM that connects SSC with GSM and explore its applications into image restoration. The proposed SSC–GSM model attempts to characterize both the biased-mean (like in NCSR) and spatially-varying variance (like in GSM) of sparse coefficients. It is shown that the formulated SSC–GSM problem, thanks to the power of alternating direction method of multipliers - can be decomposed into two subproblems both of which admit closed-form solutions when orthogonal basis is used. When applied to image restoration, SSC–GSM leads to computationally efficient algorithms involving iterative shrinkage/filtering only.

Extensive experimental results have shown that SSC–GSM can both preserve the sharpness of edges and suppress undesirable artifacts more effectively than other competing approaches. This work clearly shows the importance of spatial adaptation regardless the underlying image model is local or nonlocal; in fact, local variations and nonlocal invariance are two sides of the same coin - one has to take both of them into account during the art of image modeling.

In addition to image restoration, SSC–GSM can also be further studied along the line of dictionary learning. In our current implementation, we use PCA basis for its facilitating the derivation of analytical solutions. For non-unitary dictionary, we can solve the SSC–GSM problem by reducing it to iterative reweighted \(l_1\)-minimization problem (Candes et al. 2008). It is also possible to incorporate dictionary \({\mathbf {D}}\) into the optimization problem formulated in Eq. (5); and from this perspective, we can view SSC–GSM as a generalization of K-SVD algorithm. Joint optimization of dictionary and sparse coefficients is a more difficult problem and deserves more study. Finally, it is interesting to explore the relationship of SSC–GSM to the ideas in Bayesian nonparametrics (Polson and Scott 2010; Zhou et al. 2012) as well as the idea of integrating over hidden variacles like BLS-GSM (Portilla et al. 2003).

Notes

Throughout this paper, we will use subscript/superscript to denote column/row vectors of a matrix respectively.

References

Aharon, M., Elad, M., & Bruckstein, A. (2012). The K-SVD: An algorithm for designing of overcomplete dictionaries for sparse representations. IEEE Transactions on Signal Processing, 54(11), 4311–4322.

Andrews, D. F., & Mallows, C. L. (1974). Scale mixtures of normal distributions. Journal of the Royal Statistical Society. Series B (Methodological), 36(1), 99–102.

Beck, A., & Teboulle, M. (2009). Fast gradient-based algorithms for constrained total variation image denoising and deblurring problems. IEEE Transactions on Image Processing, 18(11), 2419–2434.

Box, G. E., & Tiao, G. C. (2011). Bayesian inference in statistical analysis (Vol. 40). New York: Wiley.

Boyd, S., Parikh, N., Chu, E., Peleato, B., & Eckstein, J. (2011). Distributed optimization and statistical learning via the alternating direction method of multipliers. Foundations and Trends in Machine Learning, 3(1), 1–122.

Buades, A., Coll, B., & Morel, J.-M. (2005). A non-local algorithm for image denoising. CVPR, 2, 60–65.

Candes, E., Wakin, M., & Boyd, S. (2008). Enhancing sparsity by reweighted \(l_1\) minimization. Journal of Fourier Analysis and Applications, 14(5), 877–905.

Carlson, C., Adelson, E., & Anderson, C. (Jun 1985). System for coring an image-representing signal. US Patent 4,523,230.

Chang, S. G., Yu, B., & Vetterli, M. (2000). Adaptive wavelet thresholding for image denoising and compression. IEEE Transactions on Image Processing, 9(9), 1532–1546.

Dabov, K., Foi, A., Katkovnik, V., & Egiazarian, K. (2007). Image denoising by sparse 3-d transform-domain collaborative filtering. IEEE Transactions on Image Processing, 16(8), 2080–2095.

Danielyan, A., Katkovnik, V., & Egiazarian, K. (2012). Bm3d frames and variational image deblurring. IEEE Transactions on Image Processing, 21(4), 1715–1728.

Daubechies, I. (1988). Orthonormal bases of compactly supported bases. Communications On Pure and Applied Mathematics, 41, 909–996.

Do, M. N., & Vetterli, M. (2005). The contourlet transform: An efficient directional multiresolution image representation. IEEE Transactions on Image Processing, 14(12), 2091–2106.

Dong, W., Li, X., Zhang, L., & Shi, G. (2011). Sparsity-based image denoising via dictionary learning and structural clustering. In: IEEE Conference on Computer Vision and Pattern Recognition.

Dong, W., Shi, G., & Li, X. (2013a). Nonlocal image restoration with bilateral variance estimation: a low-rank approach. IEEE Transactions on Image Processing, 22(2), 700–711.

Dong, W., Zhang, L., Shi, G., & Li, X. (2013b). Nonlocally centralized sparse representation for image restoration. IEEE Transactions on Image Processing, 22(4), 1620–1630.

Donoho, D., & Johnstone, I. (1994). Ideal spatial adaptation by wavelet shrinkage. Biometrika, 81, 425–455.

Elad, M., & Aharon, M. (2012). Image denoising via sparse and redundant representations over learned dictionaries. IEEE Transactions on Image Processing, 21(9), 3850–3864.

Garrigues, P., & Olshausen, B. A. (2010). Group sparse coding with a laplacian scale mixture prior. In: Advances in neural information processing systems, (pp. 676–684).

Gasso, G., Rakotomamonjy, A., & Canu, S. (2009). Recovering sparse signals with a certain family of nonconvex penalties and DC programming. IEEE Transactions on Signal Processing, 57(12), 4686–4698.

Hocking, R. R. (1976). A biometrics invited paper: The analysis and selection of variables in linear regression. Biometrics, 32(1), 1–49.

Ji, S., Xue, Y., & Carin, L. (2008). Bayesian compressive sensing. IEEE Transactions on Signal Processing, 56(6), 2346–2356.

Katkovnik, V., Foi, A., Egiazarian, K., & Astola, J. (2010). From local kernel to nonlocal multiple-model image denoising. International Journal of Computer Vision, 86(1), 1–32.

Lyu, S., & Simoncelli, E. (2009). Modeling multiscale subbands of photographic images with fields of gaussian scale mixtures. IEEE Transactions on Pattern Analysis and Machine Intelligence, 31(4), 693–706.

Mairal, J., Bach, F., Ponce, J., & Sapiro, G. (2009a). Online dictionary learning for sparse coding. In: 2009 IEEE 26th International Conference on Machine Learning, (pp. 689–696).

Mairal, J., Bach, F., Ponce, J., Sapiro, G., & Zisserman, A. (2009b). Non-local sparse models for image restoration. In: 2009 IEEE 12th International Conference on Computer Vision, (pp. 2272–2279).

Mairal, J., Sapiro, G., & Elad, M. (2008). Learning multiscale sparse representation for image and video restoration. SIAM Multiscale Modeling and Simulation, 7(1), 214–241.

Mallat, S. (1989). Multiresolution approximations and wavelet orthonormal bases of \(l^{2}({\varvec {r}})\). Transactions of the American Mathematical Society, 315, 69–87.

Marquina, A., & Osher, S. J. (2008). Image super-resolution by tv-regularization and bregman iteration. Journal of Scientific Computing, 37(3), 367–382.

Mihcak, I. K. M.K., & Ramchandran, K. (1999). Local statistical modeling of wavelet image coefficients and its application to denoising. In: IEEE International Conference on Acoust. Speech Signal Processing, (pp. 3253–3256).

Polson, N. G., & Scott, J. G. (2010). Shrink globally, act locally: sparse bayesian regularization and prediction. Bayesian Statistics, 9, 501–538.

Portilla, J., Strela, V., Wainwright, M., & Simoncelli, E. (2003). Image denoising using scale mixtures of gaussians in the wavelet domain. IEEE Transactions on Image Processing, 12, 1338–1351.

Ramirez, I., & Sapiro, G. (2012). Universal regularizers for robust sparse coding and modeling. IEEE Transactions on Image Processing, 21(9), 3850–3864.

Said, A., & Pearlman, W. A. (1996). A new fast and efficient image codec based on set partitioning in hierarchical trees. IEEE Transactions on Circuits and Systems for Video Technology, 6, 243–250.

Shapiro, J. M. (1993). Embedded image coding using zerotrees of wavelet coefficients. EEE Transactions on Acoustics, Speech and Signal Processing, 41(12), 3445–3462.

Taubman, D., & Marcellin, M. (2001). JPEG2000: Image compression fundamentals, standards, and practice. Norwell: Kluwer.

Tipping, M. (2001). Sparse bayesian learning and the relevance vector machine. The Journal of Machine Learning Research, 1, 211–244.

Vetterli, M. (1986). Filter banks allowing perfect reconstruction. Signal Processing, 10(3), 219–244.

Wang, Z., Bovik, A. C., Sheikh, H. R., & Simoncelli, E. P. (2004). Image quality assessment: from error visibility to structural similarity. IEEE Transactions on Image Processing, 13(4), 600–612.

Wipf, D. P., & Rao, B. D. (2004). Sparse bayesian learning for basis selection. IEEE Transactions on Signal Processing, 52(8), 2153–2164.

Wipf, D. P., & Rao, B. D. (2007). An empirical Bayesian strategy for solving the simultaneous sparse approximation problem. IEEE Transactions on Signal Processing, 55(7), 3704–3716.

Wipf, D. P., Rao, B. D., & Nagarajan, S. (2011). Latent variable bayesian models for promoting sparsity. IEEE Transactions on Information Theory, 57(9), 6236–6255.

Xu, J., & Osher, S. (2007). Iterative regularization and nonlinear inverse scale space applied to wavelet-based denoising. IEEE Transactions on Image Processing, 16(2), 534–544.

Yang, J., Wright, J., Huang, T., & Ma, Y. (2010). Image super-resolution via sparse representation. IEEE Transactions on Image Processing, 19(11), 2861–2873.

Yu, G., Sapiro, G., & Mallat, S. (2012). Solving inverse problems with piecewise linear estimators: from gaussian mixture models to structured sparsity. IEEE Transactions on Image Processing, 21(5), 2481–2499.

Zhou, M., Chen, H., Paisley, J., Ren, L., Sapiro, G., & Carin, L. (2009). Non-parametric bayesian dictionary learning for sparse image representation. In: Advances in neural information processing systems, (pp. 2295–2303).

Zhou, M., Chen, H., Paisley, J., Ren, L., Li, L., Xing, Z., et al. (2012). Nonparametric bayesian dictionary learning for analysis of noisy and incomplete images. IEEE Transactions on Image Processing, 21(1), 130–144.

Zoran, D., & Weiss, Y. (2011). From learning models of natural image patches to whole image restoration. In: Proceedings of ICCV.

Acknowledgments

The authors would like to thank Zhouchen Lin of Peking University for helpful discussion. They would also like to thank the three anonymous reviewers for their valuable comments and constructive suggestions that have much improved the presentation of this paper. This work was supported in part by the Major State Basic Research Development Program of China (973 Program) under Grant 2013CB329402, in part by the Natural Science Foundation (NSF) of China under Grant 61471281, Grant 61227004 and Grant 61390512, in part by the Program for New Scientific and Technological Star of Shaanxi Province under Grant 2014KJXX-46, in part by the Fundamental Research Funds of the Central Universities of China under Grant BDY081424 and Grant K5051399020, and in part by NSF under Award ECCS-0968730.

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by Julien Mairal, Francis Bach, Michael Elad.

Rights and permissions

About this article

Cite this article

Dong, W., Shi, G., Ma, Y. et al. Image Restoration via Simultaneous Sparse Coding: Where Structured Sparsity Meets Gaussian Scale Mixture. Int J Comput Vis 114, 217–232 (2015). https://doi.org/10.1007/s11263-015-0808-y

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11263-015-0808-y