Abstract

In the current work, we examined learners’ comprehension when engaged with elaborative processing strategies. In Experiment 1, we randomly assigned students to one of five elaborative processing conditions and addressed differences in learners’ lower- and higher-order learning outcomes and in their ability to employ elaborative strategies. Findings indicated no significant differences among conditions on learning outcomes. However, learners who were better able to employ elaborative processing strategies performed better on outcome measures. Experiment 2 extended this research and addressed whether there would be differences across elaborative processing conditions in learners’ comprehension at delayed testing. We also examined the role of motivation in performance and strategy use. Findings indicated no differences on the outcome measures at delayed testing; however, there were significant differences in learners’ performance on an integration outcome at immediate testing. In addition, significant positive correlations emerged among several outcome measures, strategy use, and mastery orientation. Future research should further consider instructional scaffolds that promote learners’ strategic processing, as well as critical individual difference variables as they affect elaborative processing.

Introduction

Learners often lack text comprehension strategies that facilitate their construction of complete knowledge representations of expository text. This is particularly problematic when the text presents many new concepts as well as relationships among concepts (e.g., Graesser et al. 2002), which is a common format for many texts college students read for their courses. Individual learners can experience comprehension difficulties when they lack relevant prior knowledge or the ability to draw inferences across sentences (e.g., Robinson 1998). However, even when learners have some prior knowledge about a topic, they often fail to use that existing knowledge to make connections between new content in texts and what they already know (Bransford et al. 1982).

Over the past several decades, researchers have moved from a behavioral approach of studying learners as passive recipients of knowledge to an approach of studying learners as active participants in their own learning experiences (Bell and Kozlowski 2008; Salas and Cannon-Bowers 2001). Such an approach to instruction and learning seeks to help learners develop strategies and processes that support their ability to engage in higher-order thinking, problem-solving, and transfer. Learners’ use of more complex learning strategies during reading, such as elaboration (e.g., Boudreau et al. 1999), questioning the text (e.g., NAGB 2008), and generating novel examples (Hamilton 1989, 1997), represents the kind of active learning process that should aid in the development of the strong mental models (Kintsch 1988, 1998) necessary for deep processing of text. Information processed at a deep level is better remembered than information processed at a shallow level (Craik and Lockhart 1972; Craik and Tulving 1975). According to Craik and Lockhart’s (1972) levels-of-processing theory, elaboration of material is important to deep learning. Generating ideas is one effective way to elaborate (Smith and Kosslyn 2007).

Research on elaboration and integration strategies is supported by generative learning principles (e.g., Grabowski 2004; Jonassen 1994; Wittrock 1990, 1991) and suggests that learners enhance understanding when they construct meaning and integrate new meaning with what they already know (McNamara 2009). Consistent with this perspective, text comprehension requires that readers actively generate relations both among the parts of the text and between the text and their own knowledge and experience. Readers, therefore, create models that organize information in ways that fit their own experiences and, by so doing, generate an effective representation (e.g., Grabowski 2004; Graesser et al. 2002; Kintsch 1988, 1998; McNamara 2009). Thus, readers who have prior knowledge in the content area, or life experiences they can call upon to help understand the text materials, will process the new information more effectively and efficiently than readers who lack prior content knowledge or experiences related to the text.

Numerous previously researched learner strategies as well as instructional strategies rely on elaborative processing as a foundation for new, meaningful knowledge construction, such as self-explanation (e.g., Ainsworth and Burcham 2007; Ainsworth and Th Loizou 2003; Chi et al. 1994), drawing (e.g., Van Meter et al. 2006), notetaking (Kiewra et al. 1991), and elaborative interrogation (e.g., Callender and McDaniel 2007; Dornisch and Sperling 2008; Hamilton 2004; Ozgungor and Guthrie 2004). In this work, we expand the existing knowledge base and directly compare the effects of several elaborative processing strategies on students’ expository text comprehension.

The existing knowledge base, supported by numerous foundational studies in the area of elaboration, indicated that providing precise elaborations to base sentences substantially increased factual learning (Bransford et al. 1982; Stein and Bransford 1979; Stein et al. 1984). Building upon this work, researchers hypothesized that providing precise elaborations can also help make text more memorable for students (Bransford et al. 1982) and can facilitate learning (Stein and Bransford 1979). Further, studies conducted by Pressley and others (e.g., Pressley et al. 1987, 1988) showed that elaboration resulted in greater learning gains, both when elaborations were provided to participants and when participants themselves elaborated on the text. In the current research, we compare the merits of providing learners with elaborations, in the form of examples within the text, with those of several elaborative learning strategies in which participants generate their own ideas.

While we compare these mechanisms for elaboration, we recognize that they may promote slightly different processing. For example, Hamilton (1989, 1997) suggested that elaboration might increase the richness or distinctiveness with which information is coded into memory. He presented generating novel examples, a condition in the current work, as one effective way to elaborate on text and also suggested that generating new examples increases the richness of memory, whereas elaborative interrogation, also studied in the current work, increases the distinctiveness of memory. In short, there are a number of known effective elaboration strategies. One well-accepted, often-researched elaborative processing strategy is elaborative interrogation (EI). EI is a higher-order questioning strategy that is generally implemented in one of two ways. In the first type of implementation, learners answer provided ‘why’ questions (e.g., Why would that person do that? Why would that fact be true?). In the second, learners generate their own elaborative questions (Boudreau et al. 1999; Dornisch and Sperling 2004, 2006, 2008). The premise underlying these studies is that answering or posing EI questions improves understanding as learners connect new information with prior knowledge, making the to-be-learned information more meaningful and memorable (Martin and Pressley 1991; Ozgungor and Guthrie 2004).

Although most researchers intuitively believe EI is effective, and existing EI research generally supports benefits of the strategy for target sentences and short paragraphs, findings from the limited studies that have examined EI with longer texts are mixed. That is, some studies found EI to benefit learning outcomes with longer text (e.g., Boudreau et al. 1999; Seiffert 1994), whereas others concluded that alternative instructional manipulations or forms of strategic processing are just as helpful to learners (Willoughby et al. 1994). Further, some researchers report little benefit of EI in authentic learning situations when college learners read longer texts (e.g., Callender and McDaniel 2007; Dornisch and Sperling 2004, 2006, 2008).

In this line of research, EI proved no more or less effective than alternative learning strategies, such as (1) judgment of the quality of provided elaborations (Wood et al. 1994a, b), (2) visual elaboration strategies (Pressley et al. 1988; Woloshyn et al. 1990), (3) self-reference (Woloshyn et al. 1990), (4) spontaneous study and self-study (Wood and Hewitt 1993), and (5) generation of elaborative interrogation questions with or without attentional supports (Boudreau et al. 1999). These studies, therefore, gave reason to question the efficacy of the EI strategy when compared to other processing strategies. In the current study, we address some of the limitations of existing EI research as we examine the relative benefit of several variations on elaborative interrogation.

Previous EI studies have primarily targeted isolated sentences (e.g., Pressley et al. 1988; Wood et al. 1994a, b; Willoughby et al. 2003), sequential sentences (e.g., Willoughby et al. 1993), and structured paragraphs (e.g., Seiffert 1993). Unfortunately, such materials are inconsistent with those instructional materials most learners encounter. A small number of studies have addressed the benefits of EI in learning from longer texts (e.g., Boudreau et al. 1999; Callender and McDaniel 2007; Dornisch and Sperling 2004, 2006; Hamilton 1997; Ozgungor and Guthrie 2004). The current study employs a longer text. Additionally, participants in many previous EI and other elaborative learning strategy investigations studied materials unrelated to any task required for their coursework. Given this limitation, it is unclear how such elaborative learning strategies, particularly EI, function in typical, or authentic, instructional environments. To better assess the utility of elaborative learning strategies, in this study we used a long, course-related, expository text within the context of learning course content.

One proposed benefit of elaborative learning strategies is that they facilitate student understanding because learners relate their prior knowledge to the content that they are learning, thereby processing the information at a deeper, more meaningful level. Specifically with respect to EI, much of the previous work has neither related EI to complex learning (Ozgungor and Guthrie 2004) nor employed transfer-appropriate outcomes that measure higher-order learning. For example, previous EI investigations have assessed cued recall (e.g., Pressley et al. 1987, 1988; Woloshyn et al. 1990, 1992; Wood et al. 1990), associative matching (e.g., Martin and Pressley 1991; Woloshyn et al. 1990), free recall (e.g., Dornisch and Sperling 2004; Martin and Pressley 1991; Woloshyn et al. 1990; Woloshyn and Stockley 1995), true or false recognition (e.g., Greene et al. 1996; Woloshyn et al. 1994; Woloshyn and Stockley 1995), and multiple-choice recognition (e.g., Dornisch and Sperling 2004, 2006). Even in studies that employ slightly longer texts, learner outcomes have primarily focused on definitions of target concepts, specific examples used in the text, and main ideas of paragraphs (Boudreau et al. 1999; Hamilton 1997; Seiffert 1993). Exceptions include two studies that examined problem-solving items (Dornisch and Sperling 2006; Hamilton 1997), a study that examined EI’s efficacy in learning both factual and inferential information (McDaniel and Donnelly 1996), and Ozgungor and Guthrie’s (2004) study that addressed not only recall, but also inference and coherence.

From both a research and a practice perspective, the general lack of assessment of higher-order processing in previous EI studies is a serious limitation. Therefore, in this study, we extended the research on EI and examined the benefits of these strategy prompts for both lower- and higher-order learner processing. We included a matching test, an open-ended factual integration test, and a four-alternative recognition test that addressed questions at varying levels of Bloom’s (1956) taxonomy. Further, we included a complex problem-solving transfer item.

Our first experiment addressed elaborative learning strategies as they apply to college-aged readers learning from typical course materials and extended the research on elaborative learning strategies, particularly EI, in several ways. First, we used variations on EI as well as another elaborative learning strategy in which learners generate novel examples related to the text. We also reached back to the work of Bransford and others (Bransford et al. 1982; Stein and Bransford 1979; Stein et al. 1984) and provided elaborations, in the form of provided examples within the text, for learners. Second, we used a fact-dense, course-related, expository text. Third, we examined the benefits of the strategy prompts on both lower- and higher-order outcome measures.

Further, with respect to previous EI studies, given that the use of EI should facilitate student processing and that it has not consistently been shown to do so, we questioned whether students in EI studies were truly engaging in the use of the strategy. We also wondered about the extent to which learners using other elaborative strategies, such as those generating novel examples related to the text, would be truly engaged in the use of the strategy. We recognized that assigning students to specific strategy conditions does not guarantee that students will engage in those strategies. Further, if students do engage in the strategies, it does not ensure that they will do so well. In this work, we wanted to be assured that learners were actually employing the various elaborative strategies. We also wanted to assess the degree to which students were successfully using the strategies. We expected that when students either did not comply with the directions of their strategy conditions or were unable to successfully engage in directed strategy use, the utility of elaborative processes as aids to learning from text would be compromised. Therefore, we hypothesized that regardless of strategy condition, participants who had difficulty responding to prompts designed to elicit elaborative processing would not perform as well as those who did not have such difficulty.

In summary, elaboration is known to be effective in facilitating knowledge construction. The elaborative strategies employed in this study should therefore assist readers’ text comprehension. The purpose of this study was to address the relative benefits of a variety of elaboration processing strategies for text comprehension.

In Experiment 1, we investigated two questions. First, we considered whether there might be differences in lower- and higher-order processing outcomes across the elaborative processing strategies. We expected differences among strategy conditions for higher-level learning outcomes (e.g., higher-order recognition questions and problem-solving transfer) but expected no differences among elaborative processing strategies for lower-order items (e.g., matching items and lower-order recognition questions).

In this work, we expected that students would be better able to answer a provided elaborative prompt than to generate either examples or elaborative questions. When comparing the generation conditions, we expected that students would perform better at generating examples than at generating elaborative questions. However, we felt that if students were successful at generating questions and responses, better learning outcomes would be realized for the conditions that placed the greatest cognitive demands on learners during generation.

Our second research question examined students’ compliance with the elaborative processing strategy prompts. We expected that when students had difficulty engaging in elaborative strategies at study, the usefulness of the prompts employed to promote elaboration would be compromised.

Experiment 1

Experiment 1 examined the benefits of promoting elaborative processing through the use of elaborative strategy prompts placed within instructional materials. As our research questions addressed differences among elaborative processing strategies, all of the design manipulations and strategy prompts used in this experiment required learners to use elaborative strategies when reading the text. In the first condition, participants read a text about standardized testing that we modified by including elaborations in the form of additional examples. This condition is similar to the many studies in which learners were provided elaborations within to-be-learned information. In the remaining conditions, participants either (a) answered provided elaborative interrogation questions, (b) generated elaborative interrogation questions, (c) generated and answered elaborative interrogation questions, or (d) generated novel examples related to text content.

Method

Participants and design

One hundred sixty-one undergraduate students enrolled in two sections of an educational psychology course taught by the same instructor participated in the study outside of class for extra course credit. The sample included 32 males and 129 females: one first-year student, 136 sophomores, 14 juniors, 9 seniors, and one graduate student. The vast majority of the participants majored in education (e.g., elementary education, secondary education, special education, agricultural education), although some students reported majors in communication disorders, psychology, human development and family studies, nutrition, and rehabilitation services.

In addition to outcome measures, self-reported demographic information included GPA (M = 3.39, SD = .40) and the number of statistics courses taken in high school (no courses, n = 123; 1 course, n = 33; 2 courses, n = 5) and at a university (no courses, n = 96; 1 course, n = 60; 2 courses, n = 4; 3 courses, n = 1). We also asked students whether they had taken, or were currently enrolled in, an educational psychology statistics class (never taken, n = 136; previously taken, n = 14; currently enrolled, n = 11) and whether they had read the specific chapter from the text addressed in the instructional materials (no, n = 145; yes, n = 16). A survey assessing computer use asked participants (a) to rate their comfort using computers compared to others (worse, n = 16; same, n = 111; better, n = 34), (b) to indicate whether they had taken a university course supplemented by Internet materials (no, n = 77; yes, n = 94) or email (no, n = 25; yes, n = 136), and (c) to describe how often and for what purposes they use computers.

To determine whether we should consider any demographic data in our analyses, we conducted one-way ANOVAs. With respect to academic background and prior knowledge, we found no differences among conditions on GPA (F [4, 156] = .88, p = .47), Math SAT scores (F [4, 156] = .06, p = .99), high school statistics courses (F [4, 156] = 1.08, p = .37), whether students were currently enrolled in or had taken a previous statistics course in the educational psychology program (F [4, 156] = 1.17, p = .36), or whether they had read the text chapter related to this material (F [4, 156] = .91, p = .46). We found differences in the number of courses students had taken at the university level (F [4, 156] = .88, p = .47), although a Tukey HSD follow-up showed no significant differences between any two specific groups. Likewise, with respect to computer knowledge, we found no differences among conditions in reported computer comfort and skill (F [4, 156] = 1.30, p = .27), previous experience with Internet use (F [4, 156] = .56, p = .70), or email use (F [4, 156] = .74, p = .57). Thus, we determined that it was not necessary to consider these demographic data in the analyses among conditions.
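Each demographic check above is a one-way ANOVA across the five conditions. As a minimal sketch, using only Python's standard library and toy scores rather than the study's data (the function name `one_way_anova` is ours, for illustration), the code below shows how F and its degrees of freedom are formed. With the cell sizes reported under Materials (32, 27, 34, 33, and 35; N = 161), the degrees of freedom come out to [4, 156], matching the values reported.

```python
from statistics import fmean


def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of groups of scores."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = fmean(x for g in groups for x in g)
    # Between-groups sum of squares: group size times squared deviation of group mean
    ss_between = sum(len(g) * (fmean(g) - grand_mean) ** 2 for g in groups)
    # Within-groups sum of squares: deviations of scores from their own group mean
    ss_within = sum((x - fmean(g)) ** 2 for g in groups for x in g)
    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Toy data only: three small groups with clearly different means
print(one_way_anova([[1, 2, 3], [4, 5, 6], [7, 8, 9]]))  # (27.0, 2, 6)

# Degrees of freedom implied by the Experiment 1 cell sizes (no raw data needed)
cell_sizes = {"PEX": 32, "PEI": 27, "GEI": 34, "GAEI": 33, "GEX": 35}
df_between = len(cell_sizes) - 1
df_within = sum(cell_sizes.values()) - len(cell_sizes)
print(df_between, df_within)  # 4 156
```

The p values themselves require the F distribution, which a statistics package supplies; the sketch only shows where the statistic and its degrees of freedom come from.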

Students were randomly assigned to one of five experimental conditions: (1) the condition in which students were provided further examples within the text (PEX), (2) the condition in which elaborative interrogations were provided to the students (PEI), (3) the condition in which students generated elaborative interrogations (GEI), (4) the condition in which students generated elaborative interrogations and then provided an answer for their questions (GAEI), and (5) the condition in which students generated an example (GEX). Table 1 lists the condition labels, condition names, and brief descriptions for reference.

Procedures

A researcher notified students of the experiment during their regularly scheduled class session and distributed informed consent forms. Students were given the URL for the experimental materials on a small slip of paper and were directed to go to the website and to complete the study independently without discussing it with their peers. Participants accessed the materials outside of class time in one study session within 3 days of recruitment. The site was programmed so that students logged on and entered a unique four-digit code, completed demographic questions, and were then randomly assigned to one of the study conditions. After exposure to the experimental materials, participants completed matching, multiple-choice, and open-ended factual recall dependent measures. In addition, students responded to a problem-solving transfer item.

The study was designed to require students to answer all question prompts. If a question was omitted, the materials prompted learners to complete it. Only after all questions were answered did the program allow learners to continue to the next phase of the experiment. Further, the materials were programmed so that, after reading the text and answering the questions, participants could not re-access the instructional materials. All responses were written to ASCII-delimited text files for ease of import into a statistical software program.

Materials

A base text of 3,057 words, or about 10 text pages, on the topic of standardized testing and the normal distribution was presented to the learners. The experimental environment was designed to be commensurate with reading materials assigned for outside-of-class study in typical college courses. The instructional materials were relevant to the course content but had yet to be covered in class. Across the response conditions (PEI, GEI, GAEI, and GEX), all thirteen textboxes were placed in the same relative positions so that participants would target similar text information (see Fig. 1) when responding to the strategy prompts. The prompts were placed based on the length of the text, approximately two paragraphs apart. All study conditions received the base text, modified as follows. Participants in the provided elaborative interrogation condition (PEI) read the text and answered elaborative “why” questions during study (n = 27). An example PEI question is: Why might a teacher be more interested in the range than the variance of her class? Participants in the generated elaborative interrogation condition (GEI) read the text and generated thirteen elaborative “why” questions during study (n = 34). Participants in the generated and answered elaborative interrogation condition (GAEI) read the text, generated elaborative “why” questions, and provided answers to each of the questions they generated (n = 33). Participants in the generated examples condition (GEX) read the text and generated novel examples related to information presented in the text (n = 35). For the provided examples condition (PEX), the researchers modified the base text by adding researcher-generated examples within the text, thereby increasing the word count for this condition to 3,378. There were 32 participants in the PEX condition. All of the conditions were designed to promote learners’ elaborative strategic processing.

While early studies related to EI and other elaborative processing used extensive training on the strategy, much of the recent research on elaborative interrogation using longer texts either has not used the same type of extensive training or has not reported training procedures (Callender and McDaniel 2007; Dornisch and Sperling 2004, 2006; Hamilton 1997; Ozgungor and Guthrie 2004). Although we did not provide specific or extensive training, for each of the conditions in which participants generated a response, we provided a sample paragraph with sample responses as a model of the type of response expected from participants. This approach is consistent with these studies and with other previous research on strategy prompts (Ramsay et al. 2009; Spires and Donley 1998).

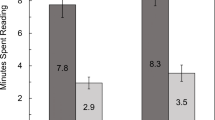

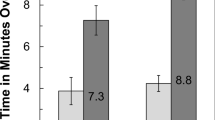

Learning outcomes were examined with several dependent measures. First, a matching test including seventeen factual definition items was administered. Although there were only thirteen strategy prompts, those prompts targeted passages in which multiple concepts were studied; thus, the seventeen factual items on this measure targeted each of the specific concepts presented within the text. Second, a nineteen-item, four-alternative, multiple-choice recognition test containing a combination of knowledge/comprehension items (n = 7), application items (n = 4), and higher-order (analysis, synthesis, evaluation) items (n = 8) was administered. An example knowledge/comprehension stem was “Standardized tests were initially intended to…”, an example application-level stem was “Frances wants to present the average mathematics score attained by the participants in her study. She needs to report a measure of …”, and an example higher-order stem was “David received a raw score of 56. Given a mean of 50, a median of 50, and a standard deviation of 4, what is David’s z-score?” We used fewer lower-level items on this instrument for two reasons. First, we assessed participants’ factual knowledge with the matching instrument. Second, we expected fewer differences among the conditions on lower-order questions, because our interest was in whether these specific elaborative processes aid higher-order thinking. Participants also answered a thirteen-item integration test, which assessed learners’ ability to integrate text materials: to answer these questions, students had to draw on information from several sentences or, in some cases, several paragraphs. One researcher scored these responses using a four-point rubric, judging each response as no response (e.g., a nonsensical or “I don’t know” response) (0), incorrect (1), partial (2), or fully correct (3). A second researcher rated randomly selected cases across both experiments presented in this study. Inter-rater reliability across the experiments was r = .94.
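The higher-order example stem turns on the standard-score formula z = (raw score − mean) / SD. A minimal sketch (the function name `z_score` is ours, for illustration) works the item; the median given in the stem plays no role in the computation.

```python
def z_score(raw, mean, sd):
    """Standard score: distance of a raw score from the mean in SD units."""
    if sd <= 0:
        raise ValueError("standard deviation must be positive")
    return (raw - mean) / sd


# David's item: raw score 56, mean 50, SD 4 (the median of 50 is not needed)
print(z_score(56, 50, 4))  # 1.5
```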

As a final outcome measure, participants answered an open-ended, problem-solving transfer item. This transfer item provided standard scores for two hypothetical students and required participants to explain and interpret the test scores (see Fig. 2). One person scored the problem-solving transfer responses across the two experiments described here. As with the integration responses, reliability was taken across the samples by a second scorer who randomly selected cases (greater than 20%) across the two experiments. There was 100% agreement on the scores for these cases. Table 2 illustrates the six-point scoring protocol used to score the problem-solving transfer item.

All participants’ responses to the condition prompts were scored as an efficacy check. For each of PEI, GEI, GAEI, and GEX, reliability was assessed across the samples by a second scorer who randomly selected 15% of the cases from each condition across the experiments. Reliability coefficients were as follows: for PEI, r = .84; for GEI, r = .83; for GAEI, r = .82; and for GEX, r = .84. The scorers used four-point scoring protocols to score each of the responses to the PEI, GEI, GAEI, and GEX. Table 3 illustrates the scoring protocol for these responses. Given the different strategy prompts, the resulting responses were not directly comparable across conditions.
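The reliability coefficients in this section are reported as r. Assuming they are Pearson correlations between two scorers' ratings of the same cases (the text does not name the coefficient), the computation can be sketched as follows. The ratings are invented for illustration on a 0 to 3 scale, and `pearson_r` is a hypothetical helper, not the authors' code.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of ratings."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 cases")
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    # Covariance numerator and sums of squares around each scorer's mean
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("r is undefined when a scorer gives every case the same score")
    return cov / sqrt(ss_x * ss_y)


# Hypothetical 0-3 scores from two scorers on the same eight responses
scorer_1 = [3, 2, 0, 1, 3, 2, 2, 1]
scorer_2 = [3, 2, 1, 1, 3, 2, 1, 1]
print(round(pearson_r(scorer_1, scorer_2), 2))
```

Note that r indexes consistency rather than exact agreement: a scorer who is systematically one point more lenient still yields r = 1, which is one reason percent agreement, as reported for the transfer item, is sometimes reported instead of or alongside r.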

Results

Benefits of elaborative strategies

Our first research question focused on the benefits of the various elaborative strategy prompts on lower- and higher-order learner processing. We expected that different levels of elaboration would facilitate deeper learner processing but would have little effect on lower-order items. To determine whether there were benefits of the various elaborative strategy prompts on both lower- and higher-order processing, we conducted a MANOVA. Wilks’ Lambda indicated that there were no differences among conditions on the four dependent measures (F [16, 468.06] = 1.31, p = .19). Specifically, no differences among the conditions were apparent for matching (F [4, 156] = 1.75, p = .14), recognition (F [4, 156] = 1.44, p = .22), open-ended factual questions (F [4, 156] = .78, p = .54), or problem-solving transfer (F [4, 156] = .75, p = .56). To further examine the recognition dependent variable, a second MANOVA was utilized to investigate whether condition made a difference in each of the subscales. Wilks’ Lambda indicated that there were no differences among conditions on the three subscales of the recognition dependent measure (F [12, 407.74] = .81, p = .64). Specifically, no differences among the conditions were apparent for knowledge/comprehension questions (F [4, 156] = .66, p = .62), the application questions (F [4, 156] = .71, p = .59), or the ASE (analysis, synthesis, and evaluation) questions (F [4, 156] = 1.77, p = .14). Table 4 presents the means and standard deviations for performance by condition on the four dependent measures, as well as for the sub-scores on the multiple choice measure in Experiment 1.
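The fractional denominator degrees of freedom reported for the Wilks’ Lambda tests are characteristic of Rao’s F approximation. The sketch below is our reconstruction, not the authors’ code, since the statistical package used is not reported. With p = 4 dependent measures, k = 5 conditions, and N = 161, it returns df = (16, 468.06), and with the three recognition subscales it returns (12, 407.74), matching the values above. Lambda itself is not reported, so only the degrees of freedom can be checked.

```python
from math import sqrt


def rao_df(p, k, n):
    """Degrees of freedom for Rao's F approximation to Wilks' Lambda.

    p: number of dependent variables, k: number of groups,
    n: total sample size (one-way between-subjects design).
    """
    df_h = k - 1  # hypothesis (between-groups) df
    df_e = n - k  # error (within-groups) df
    w = df_e + df_h - (p + df_h + 1) / 2
    denom = p ** 2 + df_h ** 2 - 5
    # Conventional choice of t = 1 when the denominator is not positive
    t = sqrt((p ** 2 * df_h ** 2 - 4) / denom) if denom > 0 else 1.0
    df1 = p * df_h
    df2 = w * t - (df1 - 2) / 2
    return df1, df2, t


def rao_f(wilks_lambda, p, k, n):
    """Approximate F statistic and its df for a given Wilks' Lambda."""
    df1, df2, t = rao_df(p, k, n)
    root = wilks_lambda ** (1 / t)
    return (1 - root) / root * (df2 / df1), df1, df2


# Four outcome measures, five conditions, N = 161
df1, df2, _ = rao_df(p=4, k=5, n=161)
print(df1, round(df2, 2))  # 16 468.06

# Three recognition subscales, five conditions, N = 161
df1_sub, df2_sub, _ = rao_df(p=3, k=5, n=161)
print(df1_sub, round(df2_sub, 2))  # 12 407.74
```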

To examine overall learning, we computed a composite outcome score and conducted an ANOVA. There were no significant differences among conditions on the total composite outcome (F [4, 156] = 1.00, p = .41). A total of 80 points was possible. Participants’ overall performance on the composite outcome was middling (M = 47.69, SD = 11.85).
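The text does not break the 80 possible points down by measure. One allocation consistent with the instruments described under Materials, assumed here rather than taken from the study, is one point per matching and recognition item (17 + 19), the 0 to 3 rubric across the thirteen integration items (39), and a 0 to 5 range for the six-point transfer protocol (5), which sums to 80. The hypothetical helper below totals a participant’s scores as an unweighted sum under that assumption and expresses the reported mean as a share of the maximum.

```python
# Hypothetical point allocation (assumed; the text reports only the 80-point total)
MAX_POINTS = {
    "matching": 17,     # 17 items, assumed 1 point each
    "recognition": 19,  # 19 multiple-choice items, assumed 1 point each
    "integration": 39,  # 13 items scored 0-3 on the rubric
    "transfer": 5,      # six-point protocol assumed to run 0-5
}


def composite(scores):
    """Unweighted sum of one participant's scores on the four outcome measures."""
    if set(scores) != set(MAX_POINTS):
        raise ValueError("scores must cover exactly the four outcome measures")
    for measure, value in scores.items():
        if not 0 <= value <= MAX_POINTS[measure]:
            raise ValueError(f"{measure} score {value} is out of range")
    return sum(scores.values())


total_possible = sum(MAX_POINTS.values())
print(total_possible)  # 80

# A hypothetical participant
print(composite({"matching": 12, "recognition": 11, "integration": 22, "transfer": 3}))  # 48

# Reported Experiment 1 mean composite as a percentage of the maximum
print(round(47.69 / total_possible * 100, 1))  # 59.6
```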

Ability to use elaborative strategies

Our second research question focused on how the utility of the various elaboration strategies would be affected when students did not comply with, or were unable to respond effectively to, strategy condition prompts. We expected that if students had difficulty responding to study prompts, the prompts’ usefulness would be compromised. Because the strategies required slightly different processing and students’ responses were scored on slightly different scales, we addressed this question by computing, within each study condition, correlations between participants’ prompt-response scores and the four dependent measures rather than by conducting a MANOVA. Results of these analyses indicated that for the PEI condition, participants who performed well answering the strategy prompts tended to perform better on the matching test (r = .56, p < .01), the recognition test (r = .64, p < .01), the open-ended factual integration test (r = .77, p < .01), and the problem-solving test (r = .44, p < .05). The same pattern, however, did not hold for any of the other conditions. In fact, the only additional significant correlation emerged in the generate and answer elaborative interrogation condition (GAEI), where participants who performed well answering study prompts tended to perform better on the problem-solving test (r = .38, p < .05).
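As a rough consistency check on the reported significance levels, a correlation can be converted to a t statistic on n − 2 degrees of freedom, t = r√(n − 2) / √(1 − r²). The sketch below assumes two-tailed tests and that each correlation used the full cell size reported under Materials (PEI, n = 27; GAEI, n = 33); neither assumption is stated in the text. Critical values are taken from standard t tables.

```python
from math import sqrt


def r_to_t(r, n):
    """t statistic for H0: rho = 0, on n - 2 degrees of freedom."""
    return r * sqrt(n - 2) / sqrt(1 - r ** 2)


# Two-tailed critical values of t from standard tables, keyed by (df, alpha)
T_CRIT = {(25, 0.05): 2.060, (25, 0.01): 2.787, (31, 0.05): 2.040}

# (label, reported r, assumed n = cell size, reported alpha)
checks = [
    ("PEI, matching", 0.56, 27, 0.01),
    ("PEI, problem solving", 0.44, 27, 0.05),
    ("GAEI, problem solving", 0.38, 33, 0.05),
]

for label, r, n, alpha in checks:
    t = r_to_t(r, n)
    crit = T_CRIT[(n - 2, alpha)]
    print(f"{label}: t({n - 2}) = {t:.2f}, critical = {crit}, significant = {t > crit}")
```

Under these assumptions the three t values are about 3.38, 2.45, and 2.29, each above its critical value, which is consistent with the reported p thresholds.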

Discussion

In Experiment 1, we addressed the benefits of several elaboration strategies for learning from long, dense, course-related expository texts. We found no differences at immediate testing among the five study conditions on any of the outcome measures. While several prior studies support a benefit of EI used as a study prompt (i.e., when the question was provided to the participants) over other elaborative strategies (Pressley et al. 1987, 1988), the findings from this study mirror those of other investigations showing no benefit for elaborative interrogation over other elaborative strategies at immediate testing (e.g., Woloshyn et al. 1990, 1994a, b; Wood and Hewitt 1993). It is possible, however, that the benefits of elaboration strategies are more salient at delayed testing than at immediate testing, when the text is more readily accessible. As Robinson (1998) noted, researchers too often neglect to investigate the durability of learning. Thus, in our second experiment we asked whether participants engaged in different elaborative strategies would perform differently at delayed testing.

The results for the PEI condition in Experiment 1 supported our expectation that when students had difficulty responding to the elaborative strategy prompts, the usefulness of the strategies would be compromised. However, this pattern was not consistent across the prompted elaborative strategy conditions. Further analyses indicated that in Experiment 1 participants varied in their ability to respond to their study condition questions. Participants in the PEI condition performed better within their condition (M = 30.27, SD = 5.13) than did those in GEI (M = 18.03, SD = 5.09), GAEI (M = 17.47, SD = 7.62), and GEX (M = 19.00, SD = 8.37), suggesting, perhaps not surprisingly, that students struggled much more in the generative conditions than in answering provided elaborative strategy prompts. Overall, the struggles of students in the generation conditions raise concerns about the extent to which students can use these strategies independently. Although learners are required throughout their schooling to generate inferences and elaborations (e.g., NAGB 2009) and are tested on their ability to do so, our findings are consistent with existing research indicating that students may simply lack these strategies regardless of whether scaffolds are included with their texts (Azevedo and Hadwin 2005; Davis and Linn 2000; Demetriadis et al. 2008; Gerjets et al. 2007; NAGB 2009). It is possible that, regardless of whether elaborative and generative strategies are used in schooling, students continue to lack the procedural knowledge necessary to use them effectively (Hilden and Pressley 2007). In the absence of strategy instruction designed specifically for the strategies used here, participants were likely unable to draw on prior experience in generating elaborations for text.
Future research should explore what type of instruction would be required for deep learning to occur using these strategies (Ramsay et al. 2009).

Experiment 2

Several studies have measured the durability of EI’s effectiveness; however, those studies have concentrated primarily on recognition measures such as matching tasks (Kahl and Woloshyn 1994; Willoughby et al. 1993), selection of true statements (Woloshyn et al. 1994; Woloshyn and Stockley 1995), and confidence ratings for correct answers (Woloshyn and Stockley 1995). Further, the texts used in all prior EI durability investigations presented to-be-learned sentences, each paired with a corresponding elaborative interrogation prompt. This contrasts with longer expository texts, which offer fewer elaboration opportunities. Results from these prior studies indicated that the positive effects of elaborative interrogation were durable. Adult learners in an EI condition studying facts about animals, for example, maintained an advantage over a reading control condition on a matching test after a 1-month delay (Willoughby et al. 1993). Woloshyn and colleagues (1994) investigated EI’s durability in several delayed recognition sessions (14-day, 75-day, and 180-day) and found the learning gains to be durable in all sessions. Other studies confirmed these long-term effects (Kahl and Woloshyn 1994; Woloshyn and Stockley 1995). The present study extends research on elaborative interrogation by examining the benefits of these strategy prompts at a 1-week delay when learners engaged with long, course-related texts. Additionally, our delayed outcome measures assessed not only lower-order recognition (i.e., the matching test and lower-order items on our four-alternative recognition test) but also higher-order learner processing (i.e., higher-order recognition items and a problem-solving transfer item). Based on the results of Experiment 1, we expected no differences among study conditions on the immediate dependent measures of recognition and open-ended factual questions.
However, we anticipated that there would be differences among conditions on delayed dependent measures of recognition, matching, and problem-solving transfer items.

Our second research question in Experiment 2 addressed students’ compliance with the strategy conditions. We again expected that participants who had difficulty responding to the study condition prompts would not perform as well as those who did not, at both immediate and delayed testing.

Previous research has indicated that motivation plays an important role in learners’ success. More specifically, goal orientation theories address how learners’ mastery or performance goals affect their persistence in completing academic tasks (Ames 1992; Dweck 1986, 2002). Research on these theories suggests that mastery-oriented learners tend to engage in challenging tasks, have more intrinsic motivation for learning, and are interested in gaining deeper understanding, whereas performance-oriented learners focus on outperforming others and sometimes engage in competitive and counterproductive learning behaviors.

While a mastery orientation seems optimal, some research has indicated a benefit for performance orientation as well (Ames and Archer 1988; Bouffard et al. 1995). Our third research question in Experiment 2 examined the role of motivation in performance outcomes and strategy compliance. We expected that learners with a high mastery approach orientation would be more likely to perform well on the study strategies and to learn the material better, whereas participants with high performance avoidance would be less likely to learn it well.

Methods

Participants

The participants in Experiment 2 were 267 undergraduate students enrolled in two sections of the same educational psychology course, in a different semester from Experiment 1. Students participated outside of class for extra course credit. The sample included 49 males and 218 females, comprising 49 first-year students, 155 sophomores, 35 juniors, 25 seniors, and 3 post-degree education certificate students. Unfortunately, but not unexpectedly, attrition occurred before the delayed session, and a total of 170 participants were retained. Although the course was taught by a different instructor than in Experiment 1, both instructors covered topics related to the experimental study topic in their classes. The study was conducted before the material was covered in class.

Procedures

Procedures for immediate testing were identical to those in Experiment 1, except that participants completed only two dependent measures: an open-ended recall test and a four-alternative, multiple-choice recognition test. In Experiment 2, participants also re-entered the instructional environment after a 1-week delay to complete a matching test, a second, parallel four-alternative multiple-choice recognition test with no items identical to those used at immediate testing, and a problem-solving transfer item.

Materials

The same instructional materials used in Experiment 1 were used in Experiment 2. At immediate testing in Experiment 2, there were 56 participants in the provided examples (PEX) condition, 48 in the provided elaborative interrogation (PEI) condition, 68 in the generated elaborative interrogation (GEI) condition, 36 in the generated and answered elaborative interrogation (GAEI) condition, and 59 in the generated examples (GEX) condition. Because of attrition, at delayed testing there were 40 participants in the PEX condition, 28 in the PEI condition, 46 in the GEI condition, 18 in the GAEI condition, and 38 in the GEX condition. As in Experiment 1, several dependent measures were used to assess student learning, and in Experiment 2 a second recognition test served as an additional dependent measure at delay. At immediate testing, participants answered the same thirteen-item, open-ended integration test used in Experiment 1, which was intended to measure learners’ ability to organize text material. Responses to these organization questions were scored with the same four-point protocol used in Experiment 1. As previously reported, one person scored all of the integration data across the two studies, and reliability was established by a second scorer who scored randomly selected cases from both experiments (r = .94). Also at immediate testing, we used the identical four-alternative, multiple-choice test from Experiment 1. See Experiment 1 for examples of questions in each condition.

At the 1-week delay, participants re-entered the materials and completed (1) a matching test of seventeen factual, definitional items, (2) a second twenty-item, four-alternative multiple-choice test, entirely different from the one used at immediate testing but containing the same mix of knowledge/comprehension items (n = 5), application items (n = 7), and higher-order (analysis, synthesis, evaluation) items (n = 8), and (3) an open-ended problem-solving transfer item identical to the one used at immediate testing in Experiment 1. See Fig. 2 for a description of the “interpreting standardized test scores” transfer task. One person scored the problem-solving transfer responses using a six-point rubric. As with the integration responses, reliability was established by a second scorer who randomly selected cases across experiments; there was 100% agreement between the scorers.
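On the most natural reading, “100% agreement” on the six-point rubric means the two scorers gave identical scores to every double-scored response. The sketch below, with hypothetical scores and an illustrative function name, computes exact percent agreement and shows why it is a stricter criterion than a correlation such as the r = .94 reported for the integration measure: two scorers who differ by a constant one point would correlate perfectly yet agree on no case.

```python
def percent_agreement(scorer_a, scorer_b):
    """Percentage of cases (0-100) on which two scorers gave the identical rubric score."""
    if len(scorer_a) != len(scorer_b) or not scorer_a:
        raise ValueError("need two non-empty score lists of equal length")
    matches = sum(a == b for a, b in zip(scorer_a, scorer_b))
    return 100.0 * matches / len(scorer_a)


# Hypothetical six-point rubric scores for the same five responses
first = [6, 4, 3, 5, 2]
print(percent_agreement(first, [6, 4, 3, 5, 2]))  # 100.0 (identical scores)
print(percent_agreement(first, [s - 1 for s in first]))  # 0.0 (constant one-point offset)
```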

As in Experiment 1, participants’ answers and generated questions were scored. To keep scoring consistent across the two studies, a single scorer scored all of the responses within each of the PEI, GEI, GAEI, and GEX conditions, although different scorers were assigned to different conditions. Reliability for participants’ answers and generated questions was established by a second scorer who randomly selected about 15% of the cases from each condition. Reliability coefficients were r = .84 for PEI, r = .83 for GEI, r = .82 for GAEI, and r = .84 for GEX. The scorers used four-point protocols to score each of the responses in the PEI, GEI, GAEI, and GEX conditions. Table 3 illustrates the scoring protocol for these responses.
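Drawing about 15% of the cases from each condition is a stratified random sample. A minimal sketch follows; the function name, the seed argument, and the rounding rule (nearest whole case, at least one from any non-empty condition) are illustrative assumptions, and the condition sizes in the example are the Experiment 2 immediate-testing counts reported under Materials, with made-up case IDs.

```python
import random


def reliability_subsample(case_ids_by_condition, fraction=0.15, seed=None):
    """Draw about `fraction` of the cases in each condition for a second scorer.

    case_ids_by_condition: dict mapping a condition label to a list of case IDs.
    Returns a dict with the same keys, each holding a sorted list of sampled IDs.
    """
    rng = random.Random(seed)  # seeded generator so the draw can be reproduced
    sample = {}
    for condition, ids in case_ids_by_condition.items():
        # Round to the nearest whole case, but take at least one from any non-empty condition
        k = min(len(ids), max(1, round(fraction * len(ids))))
        sample[condition] = sorted(rng.sample(ids, k))
    return sample


# Condition sizes at immediate testing in Experiment 2 (case IDs are hypothetical)
sizes = {"PEI": 48, "GEI": 68, "GAEI": 36, "GEX": 59}
cases = {c: [f"{c}-{i:03d}" for i in range(n)] for c, n in sizes.items()}
drawn = reliability_subsample(cases, seed=1)
print({c: len(ids) for c, ids in drawn.items()})  # {'PEI': 7, 'GEI': 10, 'GAEI': 5, 'GEX': 9}
```

Sampling within each condition, rather than from the pooled cases, guarantees that every condition's scorer is checked, including small groups such as GAEI.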

As in Experiment 1, self-reported demographic information included GPA (M = 3.35, SD = .46) and the number of statistics courses taken in high school (no courses, n = 189; 1 course, n = 74; 2 courses, n = 4) and at a university (no courses, n = 177; 1 course, n = 78; 2 courses, n = 12; 3 courses, n = 0). We also asked students whether they were concurrently enrolled in an educational psychology statistics class (never taken, n = 220; previously taken, n = 11; currently enrolled, n = 36) and whether they had read the specific chapter from the text addressed in the instructional materials (no, n = 247; yes, n = 20). A survey of computer use asked participants (a) to rate their comfort using computers compared with others (worse, n = 28; same, n = 188; better, n = 51), (b) to indicate whether they had taken a university course supplemented by Internet materials (no, n = 108; yes, n = 159) or by email (no, n = 46; yes, n = 221), and (c) to describe how often and for what purposes they use computers.

To determine whether any demographic variables should be included in our analyses, we conducted one-way ANOVAs. With respect to academic background and prior knowledge, we found no differences among conditions on Math SAT scores (F [4, 262] = 1.17, p = .33), high school statistics courses (F [4, 262] = .75, p = .56), number of college statistics courses taken (F [4, 262] = .56, p = .69), whether students were currently enrolled in or had previously taken a statistics course in the educational psychology program (F [4, 262] = .69, p = .60), or whether they had read the text chapter related to this material (F [4, 262] = .60, p = .68). Likewise, with respect to computer knowledge, we found no differences among conditions in reported computer comfort and skill (F [4, 262] = .62, p = .65), previous experience with Internet use (F [4, 262] = 1.23, p = .30), or email use (F [4, 262] = 1.23, p = .60). Although GPA differed among conditions (F [4, 262] = 4.44, p = .002), we determined that these small differences alone did not warrant including GPA in the analyses among conditions.
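With five conditions and 267 participants, a one-way ANOVA has k − 1 = 4 between-groups and N − k = 262 within-groups degrees of freedom, which is why each demographic check is reported as F [4, 262]. The sketch below computes F and those df from raw group scores; the function name and placeholder scores are illustrative, and the p-value (the upper-tail probability of the F distribution with these df, available for example through `scipy.stats.f.sf`) is not computed here.

```python
def one_way_anova(groups):
    """One-way between-groups ANOVA.

    groups: list of score lists, one per condition.
    Returns (F, df_between, df_within) with df_between = k - 1 and df_within = N - k.
    """
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    means = [sum(g) / len(g) for g in groups]
    grand_mean = sum(sum(g) for g in groups) / n_total
    # Between-groups sum of squares: spread of condition means around the grand mean
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Within-groups sum of squares: spread of scores around their own condition mean
    ss_within = sum(sum((x - m) ** 2 for x in g) for g, m in zip(groups, means))
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Five conditions sized as in Experiment 2 (scores are placeholders)
groups = [list(range(n)) for n in (56, 48, 68, 36, 59)]
print(one_way_anova(groups)[1:])  # (4, 262)
```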

Finally, to assess motivation, we administered the twelve-item self-report measure of achievement motivation developed by Elliot and McGregor (2001). This instrument has been used in dozens of studies with college-age learners. Students responded to the motivation questions at the same time they completed the demographic survey, before they began working with the instructional materials. The measure includes items intended to assess each of four goal orientations. Exploratory factor analysis of this measure indicated that the factors were independent and that, together, they accounted for a large portion of sample variance (Elliot and McGregor 2001, Study 1). Cronbach’s alpha reliability coefficients ranged from .82 to .92 for the four orientations (Elliot and McGregor 2001, Study 3). Confirmatory factor analysis provided strong support for the structure of the instrument (Elliot and McGregor 2001, Study 2). The measure has also been used by other researchers to assess motivation (see Lieberman and Remedios 2007). In the present work, the reliability estimate for performance approach (r = .87) was similar to those reported by Elliot and McGregor, but estimates were slightly lower for mastery approach (r = .82) and lower for the avoidance scales (r = .69 for performance avoidance and r = .74 for mastery avoidance). The lower reliability of the avoidance scales is likely due to contextual variables, as some students likely deemed the task more extraneous to their classroom learning than did others. Further, mastery avoidance scales are often reported to be less robust than the other scales (e.g., Day et al. 2003).
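Elliot and McGregor report internal consistency as Cronbach’s alpha. Assuming the coefficients reported for the current sample are of the same kind, each one applies α = k/(k − 1) · (1 − Σσᵢ²/σ²_total) to the items of one orientation (presumably three items each, since twelve items cover four orientations). The sketch below implements that formula; the function name and the toy ratings are illustrative only.

```python
def cronbach_alpha(item_scores):
    """Cronbach's alpha for a respondents-by-items score matrix (list of rows)."""
    k = len(item_scores[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")

    def sample_var(values):
        # Unbiased sample variance (n - 1 denominator)
        m = sum(values) / len(values)
        return sum((v - m) ** 2 for v in values) / (len(values) - 1)

    item_vars = [sample_var([row[j] for row in item_scores]) for j in range(k)]
    total_var = sample_var([sum(row) for row in item_scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical ratings by three respondents on a three-item subscale
print(round(cronbach_alpha([[1, 2, 2], [2, 3, 3], [3, 5, 4]]), 3))  # 0.973
```

Alpha rises when items covary strongly relative to their individual variances, so a subscale whose items some respondents answer inconsistently, as suggested above for the avoidance scales, yields a lower value.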

Results

We examined three research questions in Experiment 2. First, we asked whether the benefits of levels of elaborative strategy prompts, though perhaps not significant at immediate testing, would be more salient at delayed testing. Second, we asked whether the results of Experiment 1 regarding students’ ability to respond to the strategy prompts would replicate in Experiment 2, at both immediate and delayed testing. Third, we considered whether students’ underlying motivation played a role in their ability or willingness to engage in strategy use, and whether performance on outcome measures was explained by motivation. We conducted several sets of analyses to answer these questions.

Benefits of elaborative strategies

Because researchers have noted that the results of instructional strategy research are often limited to immediate testing (e.g., Robinson 1998), we addressed the benefits of elaborative strategy prompts both at immediate and delayed testing. Based on results of Experiment 1, we anticipated no differences on either lower- or higher-order items at immediate testing. We considered the possibility that at immediate testing, because of its accessibility, the material may be more easily recalled by all students. However, at delayed testing, the material may be more difficult to recall. Consequently, we thought it possible that strategy use may play a particularly pronounced role in delayed testing. Therefore, we predicted that differences among the elaboration conditions would be more salient at delay.

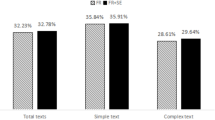

To determine whether there were differences in performance on the dependent measures, we conducted several MANOVAs. We first tested for differences on the two outcome measures used at immediate testing, the integration test and the recognition test. Wilks’ Lambda indicated differences among conditions (F [8, 522] = 2.82, p < .01). Specifically, conditions differed on the integration test (F [4, 262] = 3.85, p < .01) but not on the recognition test (F [4, 262] = 1.09, p = .36). Tukey post hoc analyses on the integration test revealed a significant difference between GEI and PEX: participants in the GEI condition scored significantly lower than those in the PEX condition. To further examine the immediate recognition dependent variable, a second MANOVA tested whether condition made a difference on any of the subscales. Wilks’ Lambda indicated no differences among conditions on the three subscales of the recognition measure (F [12, 688.19] = 1.36, p = .18). Specifically, no differences among the conditions were apparent for knowledge/comprehension questions (F [4, 262] = 1.20, p = .31), application questions (F [4, 262] = .99, p = .41), or ASE questions (F [4, 262] = 1.49, p = .21).
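A Tukey post hoc analysis compares every pair of conditions (ten pairs for five conditions) using the pooled within-condition variance from the ANOVA. The sketch below computes the studentized-range statistic q for each pair in its Tukey-Kramer form, which allows the unequal group sizes seen here; each q would then be compared with the critical studentized-range value for k groups and N − k error df. The function name and the toy scores are illustrative, and this is not the authors' analysis code; recent SciPy releases also provide `scipy.stats.tukey_hsd` for the complete test with p-values.

```python
import math
from itertools import combinations


def tukey_kramer_q(groups):
    """Studentized-range statistic q for every pair of conditions (Tukey-Kramer form).

    groups: dict mapping a condition label to its list of scores.
    Returns {(label_a, label_b): q}.
    """
    k = len(groups)
    n_total = sum(len(g) for g in groups.values())
    means = {c: sum(g) / len(g) for c, g in groups.items()}
    # Pooled within-condition variance (the ANOVA mean square error)
    ss_within = sum(sum((x - means[c]) ** 2 for x in g) for c, g in groups.items())
    mse = ss_within / (n_total - k)
    q_values = {}
    for a, b in combinations(groups, 2):
        # Tukey-Kramer standard error accommodates unequal group sizes
        se = math.sqrt(mse / 2 * (1 / len(groups[a]) + 1 / len(groups[b])))
        q_values[(a, b)] = abs(means[a] - means[b]) / se
    return q_values


# Hypothetical integration scores for two conditions
q = tukey_kramer_q({"PEX": [4, 5, 6], "GEI": [1, 2, 3]})
print(round(q[("PEX", "GEI")], 3))  # 5.196
```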

Next, we conducted a MANOVA to determine whether there were differences in performance on any of the delayed dependent measures. Wilks’ Lambda indicated no differences among conditions on the delayed outcome measures (F [12, 431.55] = 1.49, p = .12). Specifically, there were no differences among conditions on the matching test (F [4, 165] = 1.18, p = .32), delayed recognition (F [4, 165] = .90, p = .47), or problem-solving transfer (F [4, 165] = 1.88, p = .18). Again, a second MANOVA tested whether condition made a difference on any of the subscales of the delayed recognition test. Wilks’ Lambda revealed no differences among the conditions on the three subscales of the delayed recognition measure (F [12, 431.55] = .81, p = .64). Specifically, no differences among the conditions were apparent for knowledge/comprehension (F [4, 165] = 1.70, p = .15), application (F [4, 165] = .84, p = .50), or ASE (F [4, 165] = .15, p = .56). Tables 5 and 6 present the means and standard deviations for performance by condition on immediate and delayed outcomes, as well as for the sub-scores on the multiple-choice recognition measures.

Ability to use elaborative strategies

As in Experiment 1, our second research question asked how the utility of the various elaboration strategies would be affected when students did not comply with, or were unable to respond to, study condition questions. We expected that if students had difficulty responding to study questions, the usefulness of the questions would be compromised at both immediate and delayed testing. Mean scores indicated that participants in the provided elaborative interrogation (PEI) condition scored higher than the other groups (M = 29.97, SD = 6.35). Participants who generated examples (GEX) also appeared to do better than the remaining two groups (M = 24.51, SD = 9.57), although their scores were more variable. Means for the other two conditions, generating questions only (GEI; M = 18.57, SD = 6.42) and both generating and answering questions (GAEI; M = 16.19, SD = 4.24), indicated that participants were less successful in these conditions. However, because the scoring scales differed across strategies, we addressed our research question by computing correlations between performance on the study condition prompts and each of the dependent measures within each condition.

Results of these analyses indicated that for the PEI condition, participants who performed well answering strategy condition prompts in the study tended to do better on both of the immediate outcome measures: open-ended, factual recall test (r = .31, p < .05) and recognition (r = .43, p < .01). Additionally, participants who performed well answering study condition prompts also performed well on two of the delayed outcome measures: matching (r = .48, p < .01) and problem-solving (r = .06, p < .05). The correlation between study condition performance and delayed recognition was not significant.

The same patterns, however, were not indicated for the other conditions. Participants in the GAEI condition who generated and answered EI prompts well tended to do better than those who did not on the delayed recognition outcome (r = .41, p < .05) and in the GEX condition, participants who did well generating examples tended to do better than those who did not on the integration measure (r = .52, p < .01).

Motivation

As an initial analysis of the role of motivation, we conducted a MANOVA. Wilks’ Lambda revealed no differences in motivation by condition (F [12, 688.19] = .77, p = .68). Specifically, there were no differences among conditions on orientations of performance (F [4, 262] = 1.32, p = .26), mastery (F [4, 262] = .77, p = .55), approach (F [4, 262] = .61, p = .66), or avoidance (F [4, 262] = 1.36, p = .25). Having ruled out differences among conditions in motivational orientation, we next computed bivariate correlations to test our original hypotheses. As expected, we found a small but significant positive correlation between mastery approach orientation and use of the study strategy (r = .17, p < .05), indicating that participants with this orientation were more likely to adhere to, or be able to use, the study strategies. Neither the performance orientations nor mastery avoidance orientation was significantly correlated with use of the study strategy.

Next, we computed bivariate correlations to determine whether outcome performance was related to motivation. Results indicated significant correlations between motivation and outcome measures in the generally expected directions. Performance avoidance orientation was negatively correlated with performance on the immediate integration (r = −.23, p < .01) and recognition (r = −.26, p < .01) tests, as well as on the delayed matching (r = −.27, p < .01) and recognition (r = −.36, p < .01) tests. Mastery approach orientation was positively correlated with performance on the immediate integration (r = .20, p < .01) and recognition (r = .23, p < .01) tests, as well as on the delayed recognition test (r = .20, p < .01). Neither performance approach orientation nor mastery avoidance orientation was significantly correlated with any of these tests, and neither was significantly related to performance on the problem-solving transfer task.

Discussion

In Experiment 2 we examined several questions related to lower- and higher-order learning from a lengthy, dense, course-related text. First, we questioned whether the benefits of levels of elaborative strategy prompts, though perhaps not significant at immediate testing, would be more salient at delayed testing. Second, we questioned whether the results we found in Experiment 1 with regard to students’ ability to respond to the strategy prompts would be found in Experiment 2, for both immediate and delayed testing. Third, and finally, we considered whether students’ motivational orientation was a factor in their ability or willingness to engage in strategy use and if performance on outcome measures might be explained in part by motivation.

Levels of elaboration and utility of strategies

Strategy instruction

With respect to our first question, no significant differences among the elaboration conditions were found on most outcome measures, with the exception of an advantage for provided examples (PEX) over generated elaborative interrogation (GEI) on the open-ended integration test. There are several possible explanations for these findings. First, as Seifert (1993) indicated, the EI strategy and related elaboration strategies may be less effective when ideas are embedded in longer prose passages, which might limit the utility of elaborative interrogation as a learning strategy. Yet findings related to our second research question indicated that when students who used a provided EI strategy performed well on study condition questions, they also performed well on several of the outcome measures. This suggests that when EI questions are provided to students and they are able to answer them effectively, the strategy aids them in responding to both lower- and higher-order outcome measures, at both immediate and delayed testing, even with the longer passages used in the current experiment. Interestingly, these findings did not generalize to conditions in which students generated either EI questions or examples related to text content. Seifert (1993) suggested that the effectiveness of the EI strategy may be reduced in longer texts because ideas that are already contextualized within a text may be inherently more memorable. This might explain the results of this study: when students are asked to generate their own questions while reading a longer text, they may feel that the pool of possible questions is limited because the text is already elaborated. This burden may have been lifted for students in the PEI condition because they had only to answer, rather than generate, questions. Further, learners likely have much more experience answering questions than generating them.

Supporting this position, mean strategy condition scores in Experiment 2 again indicated that students were better able to respond to provided elaboration questions than to generate any type of response. Such findings suggest that learners may not be able to use elaboration strategies effectively without prior experience with the strategy. Although most learners have some experience answering elaborative questions while studying, the results of Experiment 1 indicate that even when questions are provided, learners often have difficulty elaborating on the text, likely relying on information they can find within the text to answer the study questions. In short, without training, even college learners are not effective independent elaborators. These findings are supported, in part, by recent research indicating that students struggle to generate main ideas and elaborations when reading extended history text (Ramsay et al. 2009).

Prior research has shown that students are unlikely to use sophisticated strategies, such as elaboration strategies, when they encounter new and difficult texts, reverting instead to less sophisticated strategies (Willoughby et al. 2003). This is particularly relevant for college learners in the context of a meaningful learning experience, that is, one with relevance or ‘stakes’ outside the experimental setting. Under these conditions, learners may elect to use, and may be more successful using, their own known and practiced strategies rather than a new, unfamiliar, untested elaboration strategy. It remains unclear, therefore, how well the EI strategy works for students who are able to use it while reading more typical texts in typical instructional environments.

Elaboration strategies are known to be potent when effectively used by learners (Willoughby et al. 2003). With the exception of some of the learners in the PEI condition, in neither Experiment 1 nor 2 did learners effectively use elaborative processing strategies. That is, across conditions, learners were relatively unable to generate elaborations. Explicit strategy instruction or knowledge about the effectiveness of using elaboration strategies might encourage learners to monitor their own learning and become more self-regulated (Willoughby et al. 2003).

In previous research in which learners were asked to generate, rather than answer, EI questions while reading longer texts, investigators suggested that the difficulty experienced by the “unsupported elaborative interrogation group” might be attributed to unfamiliarity with the strategy (Boudreau et al. 1999). Future investigations should examine whether further modifying the to-be-learned text, by providing better quality instructions along with information about the benefits of the prompted elaborative processing strategy, might facilitate effective use of the strategy. Additional research should address how much practice learners need with EI and other elaborative strategies before they can use them effectively in authentic learning situations. The findings from the current study indicated that providing students with EI questions as text supplements facilitates performance on a variety of outcome measures, but only when students perform the task well. Such findings suggest that other elaborative strategies might also be more effective if students were better able to perform the cognitive processing these tasks require. Findings also indicated that students experienced difficulties responding to the strategy prompts, particularly in the GEI and GAEI conditions. If engagement in elaborative strategies does facilitate learning and performance on higher-order outcomes, further research must investigate what types of strategy instruction are necessary to ensure that learners are prepared to use the strategies most beneficial to their higher-order processing.

Prior knowledge

A second explanation for the findings in the current work may rest with participants’ level of prior knowledge. Among many others, Robinson and Schraw (1994), for example, indicated that inferential text processing can be difficult for learners who lack prior knowledge. This difficulty may be compounded when students also attempt to engage with unfamiliar strategies, since the two activities together place greater demands on cognitive processes than would be the case if either the text content or the strategy were familiar. Thus, the learners in the current research may not have possessed the prior knowledge needed to learn from this unfamiliar text while using unfamiliar elaboration strategies.

This possibility is strengthened by findings from previous studies on the elaborative interrogation strategy (Kuhara-Kojima and Hatano 1991; Willoughby et al. 1993), which suggest that prior knowledge may be critical to the effectiveness of EI (Willoughby et al. 2003) since learners can more readily call upon background knowledge (Wood and Hewitt 1993) to answer questions posed while they study. Studies have revealed some conflicting evidence on the benefits of EI when learners encounter materials for which they have low prior knowledge. However, prior research showing that EI can facilitate learning even when prior knowledge is low has focused primarily on learners studying sentences (Symons and Greene 1993; Willoughby and Wood 1994; Woloshyn et al. 1992). Thus, in general, while EI has proven to be most effective when learners’ repertoires include a rich knowledge base (Willoughby et al. 1994, 1999, 2000, 2003), in situations where prior knowledge is low (Willoughby and Wood 1994; Willoughby et al. 2003) or when studying unfamiliar domains (Symons and Greene 1993; Woloshyn et al. 1992), EI has facilitated performance minimally at best. Results from the current investigations support findings from previous studies indicating that only learners with relevant knowledge benefit from using the EI strategy (Willoughby et al. 1993, 1994; Wood et al. 1990).

If, as prior research suggests, learners lacking background knowledge about text topics have difficulty elaborating beyond the information provided when using EI (Willoughby et al. 1997), it would follow that learners using alternative elaboration strategies would face similar difficulties. Thus, it may not be surprising that differences among the study conditions in Experiments 1 and 2 were found on only one of the outcome measures. One previous study found that students are likely to activate prior knowledge when learning from text passages about familiar content (Seiffert 1993). It is entirely possible, however, that when learners encounter longer texts for which they have little or no prior knowledge, such as those in the current study, the text provides insufficient cues to activate prior knowledge, which limits their ability to connect new information with what they already know. Thus, learners may be unable to use elaboration strategies effectively without prior knowledge of the text material. Future research should investigate the utility of elaboration strategies when learners have differing levels of prior knowledge of course-related text materials.

University professors widely recognize the role of prior knowledge in understanding course materials. They understand that learners may have difficulty reading texts dense with new facts and concepts when they have little prior knowledge of the topics. Thus, they often suggest that students read course-related materials after the topics have been covered in class. It might therefore be useful to examine the effectiveness of various levels of elaboration strategies when learners engage with longer, authentic texts tied specifically to course content after that content has been initially taught. Using elaborative strategies while reading texts after instruction might help learners address misunderstandings developed during the original presentation of course materials. Previous researchers have reported that EI is more effective than traditional classroom interaction in replacing errant knowledge with new knowledge (Woloshyn et al. 1992). Learners using EI strategies might be more likely to activate prior knowledge in order to explain a fact, and may therefore be more likely to correct errant knowledge during study. Future research should investigate whether errant knowledge developed during a lecture is more likely to be corrected when learners use elaboration strategies than when they use less sophisticated strategies.

Text organization

In addition to learners’ familiarity with study strategies and their prior knowledge, the findings of the current work, coupled with prior research findings, suggest that the type of text learners are reading must also be considered. For example, a recent study (Willoughby et al. 2003) investigated whether the organization of study materials played a role in the effectiveness of the EI strategy. As with much of the prior research on EI, that study focused on EI’s utility for learning from sentences rather than from longer texts. Nevertheless, its results indicated that the effectiveness of EI may depend on the organizational structure of the materials presented. The researchers gave some students the opportunity to organize the study materials; when given a choice, learners organized sentences about animals according to behavior rather than by animal (Willoughby et al. 2003). As the investigators pointed out, learning was more effective with this learner-chosen organization than with the organizational structures provided to learners in prior studies, indicating that organizational structure plays a role in the utility of the EI strategy.

Students are accustomed to learning from expository texts dense with new facts in their college coursework. Thus, it is reasonable to assume that the text used in the research reported here was organized in a way that would be familiar to students. However, elaboration strategies may work better with texts organized differently from the dense expository text we used. For instance, the elaboration strategies examined here might be more effective with texts whose overall structure is descriptive, cause–effect, or problem–solution.

As students continue to struggle with text comprehension, researchers and educators must continue to identify strategies that facilitate learning from different types of expository, and perhaps narrative, texts. Future research should use within-subject designs in which learners have the opportunity to use target strategies with texts organized in multiple ways.

Motivation

In addition to investigating learning strategies, the current work examined learners’ motivation and the role that motivation might play in learning. As expected, our findings suggest that motivation was a factor in participants’ performance on the outcome measures. First, the significant positive correlation between mastery approach orientation and study strategy use suggests that learners with this orientation were better able to use the strategies during study, or more inclined to adhere to the study instructions, than learners with a mastery avoidance orientation or either type of performance orientation. Further, the significant positive correlations between mastery approach orientation and outcomes, together with the significant negative correlations between performance avoidance orientation and outcomes, supported our expectation that learners with different motivation orientations would perform differently on the outcomes. Interestingly, no motivation orientation was associated with performance on the problem-solving transfer task. However, the overall magnitude of the effect of motivation in this study was relatively small, which suggests that additional research should examine other factors, such as instructional support or learners’ ability to use the strategies, more directly than motivational variables.

Conclusions and implications

The purpose of these two experimental studies was to extend the current research on EI. Specifically, we were interested in examining the relative benefit of elaborative interrogation, compared with other elaborative, or generative, learning strategies, for both lower- and higher-order learner processing. Based on generative learning theory (Jonassen 1994; Wittrock 1990, 1991), results from prior EI research, and the results of this research, we expect that elaborative strategies should facilitate students’ learning from text. Although the findings of this study do not fully support that expectation, they indicate that learners who use a provided elaborative interrogation strategy effectively may perform better on outcome measures than those who do not, at both immediate and delayed testing. This finding is important because it suggests that elaborative interrogation should work, but that to be successful it requires scaffolding through strategy instruction, practice, or further instruction about how elaborative interrogation facilitates learning.

Findings from this study indicated that learners differed in how well they performed the tasks during study. Specifically, participants were unable to generate their own questions or examples effectively; they were much more able to answer questions posed to them during study. It is important to note that the conditions did not involve the same types of activities, and that generating good questions is both more difficult and less familiar than answering questions posed directly. Regardless of our findings with respect to learners’ ability to use these strategies, we still expect that any elaborative process students use should facilitate learning, provided that they can use it effectively. We believe that if instruction on the use of elaborative strategies is scaffolded, students should be better able to use the strategies. This is an important consideration, as it would be more useful for learners to have a repertoire of effective elaboration strategies that they might use spontaneously than simply to respond to questions posed to them while reading texts. This is especially important because many texts students encounter in college coursework are unlikely to contain questions as text supplements. Students who use elaborative strategies supported by generative learning principles would be more likely to become sophisticated readers.

References

Ainsworth, S., & Burcham, S. (2007). The impact of text coherence on learning by self-explanation. Learning and Instruction, 17(3), 286–303.

Ainsworth, S., & Loizou, A. T. (2003). The effects of self-explaining when learning with text or diagrams. Cognitive Science, 27(4), 669–681.

Ames, C. (1992). Classroom goals, structures, and student motivation. Journal of Educational Psychology, 84(3), 261–271.

Ames, C., & Archer, J. (1988). Achievement goals in the classroom: Students’ learning strategies and motivation processes. Journal of Educational Psychology, 80, 260–267.

Azevedo, R., & Hadwin, A. F. (2005). Scaffolding self-regulated learning and metacognition—implications for the design of computer-based scaffolds. Instructional Science, 33(5–6), 367–379.

Bell, B. S., & Kozlowski, S. W. J. (2008). Active learning: Effects of core training design elements on self-regulatory processes, learning, and adaptability. Journal of Applied Psychology, 93(2), 296–316.

Bloom, B. S. (1956). Taxonomy of educational objectives: The classification of educational goals (1st ed.). New York: Longmans, Green.

Boudreau, R. L., Wood, E., Willoughby, T., & Specht, J. (1999). Evaluating the efficacy of elaborative strategies for remembering expository text. Alberta Journal of Educational Research, 45(2), 170–183.

Bouffard, T., Boisvert, J., Vezeau, C., & Larouche, C. (1995). The impact of goal orientation on self-regulation and performance among college students. British Journal of Educational Psychology, 65(3), 317–329.

Bransford, J. D., Stein, B. S., Vye, N. J., Franks, J. J., Auble, P. M., Mezynski, K. J., et al. (1982). Differences in approaches to learning: An overview. Journal of Experimental Psychology: General, 111, 390–398.

Callender, A. A., & McDaniel, M. A. (2007). The benefits of embedded question adjuncts for low and high structure builders. Journal of Educational Psychology, 99(2), 339–348.

Chi, M. T. H., de Leeuw, N., Chiu, M. H., & LaVancher, C. (1994). Eliciting self-explanations improves understanding. Cognitive Science, 18, 439–477.

Craik, F. I. M., & Lockhart, R. S. (1972). Levels of processing: A framework for memory research. Journal of Verbal Learning and Verbal Behavior, 11(6), 671–684.

Craik, F. I. M., & Tulving, E. (1975). Depth of processing and retention of words in episodic memory. Journal of Experimental Psychology: General, 104(3), 268–294.

Davis, E. A., & Linn, M. C. (2000). Scaffolding students’ knowledge integration: Prompts for reflection in KIE. International Journal of Science Education, 22(8), 819–837.

Day, E. A., Radosevich, D. J., & Chasteen, C. S. (2003). Construct- and criterion-related validity of four commonly used goal orientation instruments. Contemporary Educational Psychology, 28(4), 434–464.

Demetriadis, S. N., Papadopoulos, P. M., Stamelos, I. G., & Fischer, F. (2008). The effect of scaffolding students’ context-generating cognitive activity in technology-enhanced case-based learning. Computers & Education, 51(2), 939–954.

Dornisch, M., & Sperling, R. (2004). Elaborative questions in web-based text materials. International Journal of Instructional Media, 31(1), 49.

Dornisch, M., & Sperling, R. (2006). Facilitated learning from technology-enhanced text: Effects of prompted elaborative interrogation. The Journal of Educational Research, 99(3), 156–165.

Dornisch, M., & Sperling, R. (2008). Elaborative interrogation and adjuncts to technology-enhanced text: An examination of ecological validity. International Journal of Instructional Media, 35(3), 317.

Dweck, C. S. (1986). Motivational processes affecting learning. American Psychologist, 41(10), 1040–1048.

Dweck, C. S. (2002). The development of ability conceptions. In A. Wigfield & J. S. Eccles (Eds.), Development of achievement motivation (pp. 57–88). New York: Academic Press.

Elliot, A. J., & McGregor, H. A. (2001). A 2 × 2 achievement goal framework. Journal of Personality and Social Psychology, 80(3), 501–519.

Gerjets, P., Scheiter, K., & Schuh, J. (2007). Information comparisons in example-based hypermedia environments: Supporting learners with processing prompts and an interactive comparison tool. Educational Technology Research and Development, 56(1), 73–92.

Grabowski, B. L. (2004). Generative learning contributions to the design of instruction and learning. In D. Jonassen (Ed.), Handbook of research on educational communications and technology (2nd ed., pp. 719–743). Mahwah, NJ: Erlbaum.

Graesser, A. C., Leon, J. A., & Otero, J. C. (2002a). Introduction to the psychology of science text comprehension. In J. Otero, J. A. Leon, & A. C. Graesser (Eds.), The psychology of science text comprehension (pp. 1–15). Mahwah, NJ: Erlbaum.

Graesser, A. C., Person, N., & Hu, X. (2002b). Improving comprehension through discourse processes. New Directions for Teaching and Learning, 89, 33–44.

Greene, C., Symons, S., & Richards, C. (1996). Elaborative interrogation effects for children with learning disabilities: Isolated facts versus connected prose. Contemporary Educational Psychology, 21, 19–42.

Hamilton, R. (1989). The effects of learner-generated elaborations on concept learning from prose. Journal of Experimental Education, 57, 205–217.

Hamilton, R. J. (1997). Effects of three types of elaboration on learning concepts from text. Contemporary Educational Psychology, 22, 299–318.

Hamilton, R. J. (2004). Material appropriate processing and elaboration: The impact of balanced and complementary types of processing on learning concepts from text. British Journal of Educational Psychology, 74, 221–237.

Hilden, K. R., & Pressley, M. (2007). Self-regulation through transactional strategies instruction. Reading & Writing Quarterly, 23(1), 51–75.

Jonassen, D. H. (1994). Thinking technology: Toward a constructivist design model. Educational Technology, 34(4), 34–37.