Abstract

Although prior research has shown that experts tend to overestimate or underestimate what laypersons actually know, little is known about the specific consequences of biased estimations for communication. To investigate the impact of biased estimations of a layperson’s knowledge on the effectiveness of experts’ explanations, we conducted a web-based dialog experiment with 45 pairs of experts and laypersons. We manipulated the experts’ mental model of the layperson by presenting them either valid information about the layperson’s knowledge or information that was biased towards overestimation or underestimation. Results showed that the experts adopted the biased estimations and adapted their explanations accordingly. Consequently, the laypersons’ learning from the experts’ explanations was impaired when the experts overestimated or underestimated the layperson’s knowledge. In addition, laypersons whose knowledge was overestimated more often generated questions that reflected comprehension problems. Laypersons whose knowledge was underestimated asked mainly for additional information previously not addressed in the explanations. The results suggest that underestimating a learner during the instructional dialog is as detrimental to learning as is the overestimation of a learner’s knowledge. Thus, the provision of effective explanations presupposes an accurate mental model of the learner’s knowledge prerequisites.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

It is a widely held belief that experts should avoid disciplinary jargon in communication with laypersons and give explanations intelligible to all. The KISS principle (keep it simple, stupid) from software and engineering design (e.g., Macintosh and Gentry 1999) is often cited to substantiate this claim: In order to communicate expert knowledge to laypersons successfully, the message should be kept as simple as possible. In addition, there are also scientific approaches assuming a linear relationship between general indicators of text comprehensibility, such as clarity or conciseness (e.g., see Bromme et al. 2005a), and communicational success.

Although most people would probably spontaneously agree with the previous statements, we doubt the validity of a general “one-size-fits-all” solution that takes not into account individual differences. The reasons for our misgivings will be laid out in the following introduction to a dialog experiment in which computer experts communicated with laypersons about fundamentals of Internet technology. The findings of the study confirmed our doubts: The most successful explanations with regard to laypersons’ understanding were not those designed in the simplest manner. Rather, it was important that the explanations were specifically adapted to the layperson’s individual background knowledge: “Too simple” explanations that resulted from underestimations of the laypersons’ knowledge were as detrimental as “too difficult” explanations that resulted from overestimations of the laypersons’ knowledge. Thus, starting the instructional dialog with an underestimation of the learner’s knowledge in mind (cf. Bromme et al. 2001) would not be a recommendable heuristic for an expert or tutor to follow.

Experts’ assumptions of what laypersons know

Communication with laypersons has become an integral part of the professional competence of many experts, such as in doctor–patient interaction, computer consultation, or business management (e.g., Bromme et al. 2005b; Candlin and Candlin 2002; Nückles and Bromme 2002). Up to now, however, comparatively little attention has been paid to how experts share their knowledge with people who have less expertise (Cramton 2001; Hinds and Pfeffer 2003). In the traditional expert-novice paradigm that highlights the differences between experts and novices in the organization and application of knowledge (Chi et al. 1981; Simon and Chase 1973), experts are merely construed as isolated problem solvers (Bromme et al. 1999). Their knowledge base and skills under study are confined to the problem domain, that is, to a certain field of expertise, such as medicine, geography, or physics (e.g., Anderson and Leinhardt 2002; Rikers et al. 2002). Hence, the requirements for experts to effectively convey their specialist knowledge to others are not investigated.

Especially the task of communicating with laypersons may be cognitively demanding for experts. In order to communicate effectively, they need to take into account a layperson’s differing perspective (Schober 1998). Thus, experts should have quite a precise idea of what a specific layperson does and does not know (Nickerson 1999). Most theories of language use assume that a partner model, that is, a model of the communication partner, is indeed an essential prerequisite for successful communication (Clark 1992; Clark 1996; Fussell and Krauss 1992; Horton and Gerrig 2002). In deriving assumptions about the communication partner, communicators can tailor their contributions to the knowledge they believe the partner to possess in order to facilitate understanding and comprehension (Clark and Murphy 1982). Especially in communication between experts and laypersons, such a mental model of the communication partner is of particular importance because typically the topical knowledge between experts and laypersons greatly differs (Brennan and Clark 1996; Isaacs and Clark 1987; Nückles et al. 2005; Nückles and Stürz 2006; Schober 1998; Schober and Brennan 2003). Hence, in contrast to routine communication, it would be extremely difficult for laypersons to understand the information conveyed by experts if experts did not appreciate and bridge the differences between their own specialist and the laypersons’ less sophisticated knowledge (cf. Pickering and Garrod 2004).

Though what actually happens when experts arrive at erroneous assumptions about the layperson’s knowledge and give explanations that are not adapted to the layperson’s individual understanding? Previous research has paid little attention to the particular effects on communication resulting from experts’ false beliefs of laypersons’ knowledge (Hinds and Pfeffer 2003). This is surprising given the growing body of literature that demonstrates that experts both tend to overestimate and underestimate what laypersons know in their field of expertise. In Nickerson’s theory (1999), the construction of a mental model of another person’s knowledge is conceptualized as an anchoring-and-adjustment process (Tversky and Kahneman 1974), where one’s model of one’s own knowledge serves as a default model of what another random person knows (similar to the so-called false-consensus effect proposed by Ross et al. 1977). Accordingly, when experts use their own knowledge as the basis for constructing an initial model of a layperson’s knowledge, they might be particularly prone to overestimate a layperson’s understanding. For example, in a study by Hinds (1999), experts were asked to predict the time needed by novices to perform an unfamiliar complex task. Results showed that experts systematically overestimated how quickly novices would be able to complete the task. Hinds concluded that the ready-availability and inter-connectedness of the experts’ knowledge (Chi et al. 1988) interfered with the task of taking into account the limited knowledge of laypersons. She coined this phenomenon the curse of expertise. A related body of research in educational psychology considers the accuracy of tutors’ monitoring of students’ understanding (Chi et al. 2004; Siler and VanLehn 2003). Tutors are comparable to domain experts in that they are often very knowledgeable in a particular domain but have not necessarily pedagogical expertise, that is, formal training in the skills of tutoring (Cohen et al. 1982). For example, Chi et al. (2004) asked tutors to assess students’ understanding of biological issues during tutoring. They found that tutors inflated their judgment toward assuming that students had a more complete understanding than they actually had. Hence, tutors overestimated the students’ knowledge because they monitored students’ understanding from their own perspective instead of from the students’ perspective. However, not only experts or tutors with high content knowledge, but even teachers who possess pedagogical knowledge, that is, knowledge about methods for assessing students’ understanding (Borko and Putnam 1996), may be caught by the curse of expertise. For example, Nathan and Petrosino (2003) investigated the influence of teachers’ domain knowledge in mathematics on their ability to predict students’ difficulties with mathematical problems. Regardless of their educational experience, teachers with high domain knowledge generally overestimated the students’ knowledge required to solve symbol-based mathematical tasks (see also Nathan and Koedinger 2000).

Although it seems intuitive that experts—in line with the empirical findings reported previously—are more likely to overestimate what laypersons know, one may also find situations in which experts tend to underestimate the laypersons’ knowledge. In particular, when experts are aware of their status as an expert, they may perceive the exclusiveness of their knowledge as a feature that distinguishes them from the community of laypersons (Fussell and Krauss 1992). Hence, when they are triggered to take into account all information about their own knowledge that can be considered as special or unlikely to be representative of the knowledge of people in general (Nickerson 1999), experts might be prone to overestimate the exclusiveness of their own knowledge and therefore underestimate what laypersons in fact do know. Bromme et al. (2001) tested this assumption and compared the estimates of experts and laypersons concerning the commonality of specialist terms from the computer domain among laypersons. They found that experts, in contrast to laypersons, generally produced more cautious estimates, particularly when the experts’ knowledge was experimentally induced as being exclusive. In addition, when specialist terms were considered to be known by the majority of laypersons, experts—as compared with laypersons—clearly underestimated laypersons’ knowledgeability of these concepts.

Overall, the reported studies provide evidence that experts have a propensity to make overestimations or underestimations of the laypersons’ knowledge. However, the particular consequences of an inaccurate mental model of the laypersons’ understanding for communication and learning have not yet been studied systematically. Nonetheless, some studies found that experts might have difficulties providing explanations to laypersons at an appropriate level. For example, in an experiment by Hinds et al. (2001), experts’ explanations addressed to a lay audience were more advanced and less concrete than those provided by persons with less expertise. As a result, laypersons had considerably more problems in understanding the instructions given by experts. Contrary, Alty and Coombs (1981) who conducted a detailed conversation analysis of face-to-face advisory dialogs collected at diverse computer support services found that experts often included redundant information in their explanations or paraphrased the same content several times without noticing that it was already understood by the layperson (see also Erickson and Schultz 1982). These findings could imply that experts’ deficits in giving appropriate explanations might be attributed to an inaccurate mental model of the layperson’s understanding. In a strict sense, however, the results do not allow for conclusions with respect to the relevance of an accurate partner model. There might be factors other than a biased mental model of the communication partner that might prevent experts from providing intelligible and informative explanations (Schober and Brennan 2003). For example, experts might deliberately express themselves in an incomprehensible manner to demonstrate the exclusiveness of their knowledge (Stehr and Ericson 1992), or their rhetorical skills are insufficient for translating their writing plans into a well-designed text (Bromme et al. 1999). In addition, the discourse tasks might be particularly difficult for experts (Brown and Dell 1987), or they may have to produce explanations under time pressure (Horton and Keysar 1996), thus making fewer attentional resources available to devote to taking into account the partner’s specific needs (Krauss and Fussell 1996).

In order to study the specific effects of a partner model on communication, it is important to ensure that this partner model is indeed the determining factor in message production. For example, in their experiments, Fussell and Krauss (1992) developed a two-stage version of the referential communication paradigm to measure speakers’ assumptions about their recipient independently of the messages produced. In a first stage, speakers were asked to estimate the likelihood that their communication partners would know the names of political celebrities. In a second stage, the speakers’ task was to describe these celebrities in such a way that the communication partner was able to identify them unambiguously. Fussell and Krauss found that the amount of descriptive information speakers provided along with the names of the celebrities was directly correlated with the perceived likelihood that the communication partners would know the names of these celebrities (for similar results, see Lau et al. 2001). Although the findings provide valuable insights into the effects of speakers’ mental models of their communication partner on message production, the particular impact of false assumptions, that is, overestimations and underestimations of the communication partner’s understanding, was not investigated. Moreover, Fussell and Krauss’s experiments focused exclusively on acts of reference (i.e., describing and identifying single objects during communication) but did not take into account more natural communication tasks, as will be investigated in the present study.

Learning from instructional texts

In order to shed light on how experts’ overestimations and underestimations might affect laypersons’ understanding in communication, findings of text revision studies provide an empirical background. These studies deal with the question of how to make instructional texts more understandable with respect to a learner’s specific knowledge level (e.g., Britton and Gülgöz 1991; McNamara et al. 1996). As learning from texts is hindered when the information in the text cannot be linked to the readers’ prior knowledge (Alexander et al. 1994; Kintsch 1994), it seems sensible to optimize learning by making a text as easy to read and comprehend as possible. In line with this assumption, Britton and Gülgöz reduced the need for readers to make inferences when reading a text by supplying background information that was previously left unstated in the text. As a result, the revised text facilitated the readers’ comprehension substantially (for similar results, see Beck et al. 1991; Vidal-Abarca and Sanjose 1998). However, research has also shown that this traditional approach does not always lead to optimal learning. For example, McNamara et al. (1996) and Voss and Silfies (1996) found that texts can be too easy for students with a relatively high degree of prior knowledge. When such students read a text in two different versions, the text version that was more difficult to comprehend (i.e., less coherent) resulted in better learning outcomes. This finding suggests that the higher level of text difficulty induced the students to more actively process the textual information by making more and deeper connections of the text concepts with related concepts in their long-term memory. In contrast, due to the higher redundancy between the students’ prior knowledge and the information provided by the easy texts, these texts were not challenging enough, thus resulting only in passive processing and lower comprehension. Similarly, Wolfe et al. (1998) conducted a study in which students with different levels of medical knowledge were asked to read different texts about the human heart that varied in the level of difficulty. They found that the match between the background knowledge of the students and the difficulty of text information was crucial for learning. Students whose prior knowledge did not overlap enough with the textual information showed only a poor understanding of the medical information. However, students’ learning whose knowledge overlapped too much with the contents was equally impaired. Hence, only those texts were optimal for learning that provided the students with the opportunity to link the text content with their prior knowledge but still contained enough new information to expand their already existing knowledge.

Against the background of these findings, it can be assumed that experts’ explanations that are not accurately adapted to the laypersons’ current level of knowledge have detrimental effects on learning. In order to provide sufficient information to a layperson to facilitate the comprehension of the content being presented, experts should design their explanations in line with their assumptions about what the layperson knows (Bromme et al. 2005a; Clark and Murphy 1982; Fussell and Krauss 1992; Nickerson 1999; Nückles et al. 2005; Schober and Brennan 2003). Accordingly, when experts have false assumptions about the laypersons’ knowledge, this should result in suboptimal explanations that do not fully match with the laypersons’ actual informational needs. Consistent with the findings of the text revision studies reported previously, experts’ explanations that are too easy might prevent laypersons from deepening their understanding, whereas explanations that are too difficult can be expected to lead to comprehension breakdowns. In contrast to the text revision studies, however, the present study investigated learning from textual information in an interactive communication setting. The laypersons, therefore, had the opportunity to ask the experts questions when they experienced difficulties in processing the experts’ explanations. For example, they could ask experts for clarification or for further information in order to improve their understanding. Thus, by taking an active role in communication through question asking, laypersons could seek to reduce possible comprehension difficulties (cf. Graesser and McMahen 1993; Otero and Graesser 2001).

Research questions and predictions

The current study aimed to focus on the impact of experts’ false assumptions about a layperson’s knowledge on communication. We conducted a dialog experiment in which computer experts provided laypersons with written explanations on the fundamentals of Internet technology in a computer-mediated context. In computer-mediated communication, prior assumptions about the communication partner play a particularly important role because the opportunities to give feedback are more restricted than in face-to-face communication (Clark and Brennan 1991). Due to the reduced amount of information that communicators can use to form a detailed mental model of their partner, they must rely more heavily on their prior assumptions about the other’s knowledge (Fussell and Krauss 1992). In the case of false assumptions, these assumptions have lower chances of being recognized and corrected when feedback is more restricted (Nickerson 1999). Thus, computer-mediated communication is very suitable for investigating the impact of false assumptions about an interlocutor’s knowledge on communication and learning. The increased anonymity associated with the medium and the reduced situational cues make it relatively easy to experimentally manipulate the validity of the interlocutors’ assumptions about their communication partner’s knowledge. Therefore, it was possible to induce systematic overestimations and underestimations on the part of the experts.

In order to realize false assumptions about their partner’s knowledge that were biased towards an overestimation or an underestimation, respectively, we provided experts with explicit information about the layperson’s individual background knowledge prior to communication. Experts were instructed to use this information in order to tailor their explanations to the layperson’s specific communicational needs. In one experimental condition, experts were presented with valid information about the layperson’s actual knowledge level. In the other two experimental conditions, the provided information about the laypersons’ knowledge was distorted: The information either overestimated or underestimated the layperson’s knowledge relative to the layperson’s actual knowledge level. In all three experimental conditions, the laypersons actually had a moderate level of prior knowledge in the computer and Internet domain. This was necessary in order to induce systematic overestimations and underestimations. Using this experimental paradigm, we tested the effects of false assumptions about the layperson’s knowledge on experts’ explanations and laypersons’ understanding and question asking.

Technical language hypothesis

If the experts used the information about the laypersons’ knowledge to construct a mental model of the layperson, this should influence the way they conveyed the technical information to the laypersons. In previous experiments, we demonstrated that experts indeed adapted their explanations in accordance with displayed information about a layperson’s knowledge level (Nückles et al. 2005, 2006). Depending on the layperson’s level of knowledge, the experts varied the type and amount of the technical information in their explanations. For example, when the layperson had a low knowledge level in the computer domain, the experts used rather basic statements that required no or only little foundation in the computer and Internet domain to understand. By contrast, when the layperson had a higher knowledge level, the experts used more advanced statements in their explanations of technical concepts and problems (cf. Hinds et al. 2001, for similar findings).

Analogous to these findings, we predicted that experts would use the most advanced statements when they received information about the layperson’s knowledge biased towards an overestimation. In this case, experts could assume the laypersons’ knowledge to be relatively high, which should reduce their need to transfer their knowledge to the layperson in more basic terms. In contrast, when experts were presented information that was biased towards an underestimation, they could assume the layperson’s knowledge to be relatively low. In this case, it was expected that the experts used the most basic statements in the interest of being as clear as possible. As experts with valid (i.e., unbiased) information about the layperson’s knowledge provided explanations to laypersons with a relatively moderate knowledge in the computer and Internet domain, they should use fewer advanced statements than experts who overestimated the laypersons’ actual knowledge and fewer basic statements than experts who underestimated the laypersons’ actual knowledge. Hence, we predicted a linear trend with the most advanced and the fewest basic statements in the explanations of experts who were presented overestimations and with the fewest advanced and most basic statements in the explanations of experts who were presented underestimations of a layperson’s knowledge. When experts were provided with valid knowledge information, the number of advanced and basic statements in their explanations should lie in-between. The technical language hypothesis served as a manipulation check in order to reveal whether or not the experts adopted the experimentally provided information about the layperson’s knowledge and customized their explanations accordingly.

Knowledge gain hypothesis

Providing experts with valid information about a layperson’s knowledge should result in explanations that were—more or less—well adapted to the laypersons’ real knowledge level (Nückles et al. 2005, 2006; Nückles and Stürz 2006). Consequently, such adapted explanations should facilitate a layperson’s knowledge acquisition. Conversely, providing experts with biased knowledge information should lead to suboptimal explanations that were only poorly adjusted to the laypersons’ real informational needs. Accordingly, learning should be impaired, regardless of whether the laypersons’ knowledge was overestimated or underestimated (cf. Wolfe et al. 1998). Laypersons who were overestimated and therefore mainly presented with advanced technical information should learn less because their actual knowledge state would be too distant from the information provided by the experts. In contrast, laypersons who were underestimated should acquire less knowledge because the basic information that experts presented in their explanations should overlap too much with the laypersons’ already existing knowledge base. Hence, it was predicted that both overestimated and underestimated laypersons should benefit less from the experts’ explanations than laypersons who received explanations from experts with valid information.

Question asking hypothesis

Both overestimated and underestimated laypersons should experience a greater discrepancy between the information provided by the experts and their own communicational needs (Graesser and McMahen 1993). As laypersons were expected to receive explanations that only insufficiently matched with their actual informational needs, this should provoke them to write back to the expert and ask for clarifications or further information more often. Valid information about the layperson’s knowledge level should, in contrast, help experts to better adapt their explanations to the layperson’s knowledge. Consequently, the layperson should be more contented and therefore return fewer questions to the expert. Accordingly, it was predicted that laypersons would ask substantially more questions when experts had biased information available as compared with laypersons whose experts had valid knowledge information.

Comprehension-question hypothesis

Depending on whether experts had valid or biased information about the layperson's knowledge available, laypersons should ask different types of questions. Experts who were provided with information that was biased towards an overestimation of the layperson’s knowledge should produce explanations that were too difficult to understand. As a result, laypersons should more often have problems encoding unknown words (i.e., the surface code, Otero and Graesser 2001) or adequately representing the semantic structure (i.e., the textbase level, Kintsch 1994) of the experts’ explanations in order to achieve a deeper understanding. Accordingly, it was predicted that laypersons whose prior knowledge level was overestimated would ask more comprehension questions that were specifically related to the words and statements generated by the experts, as compared with laypersons who communicated with experts who had valid information or information biased towards underestimation.

Information-seeking question hypothesis

Providing experts with underestimations of a layperson’s knowledge should result in rather simple explanations which were not sufficiently informative for the laypersons. The laypersons might have minor problems comprehending the content of these explanations. On the other hand, they would offer the laypersons only little opportunity to deepen and extend their understanding, that is, to enrich their mental model of the computer issues (Kintsch 1994) with new information. Hence, laypersons whose knowledge was supposed to be underestimated by the experts should ask for additional information not previously stated in the explanations more often, as compared with laypersons who received explanations from experts with valid information or information biased towards overestimation.

Method

Participants

Forty-five computer experts and 45 laypersons participated in the experiment. Computer experts were recruited among advanced students of computer science. They were paid 12 EURO for their participation. Their average age was 22.50 years (SD = 2.07). The 45 participants serving as laypersons were recruited among students of psychology and of the humanities. They received 15 EURO as compensation for their participation. The somewhat larger amount of money was justified by the extended knowledge tests the laypersons were administered in addition to the communication phase of the experiment. The laypersons’ mean age was 23.60 years (SD = 5.65).

It was ensured that the participants serving as laypersons in the experiment had only a moderately low level of prior knowledge in the computer and Internet domain. This was necessary in order to establish systematic overestimations and underestimations of laypersons’ knowledge. Moreover, the pre-selection of laypersons allowed us to control for potential effects of prior knowledge on question asking. Research has shown that the amount and the quality of the questions people ask might depend on the knowledge they have in a certain domain (e.g., Otero and Graesser 2001). In order to determine the laypersons’ prior knowledge in the computer and Internet domain, a standardized knowledge test was administered. The test was based on a multiple-choice test by Richter et al. (2000) that was specifically constructed to differentiate among people who are laypersons in this domain (cf. Nückles et al. 2005, 2006). Thus, even someone with high scores on this knowledge test would still have substantially less knowledge than a computer expert. The test consisted of 24 multiple choice items, with 12 items representing the computer knowledge scale and 12 items representing the Internet knowledge scale. Only those laypersons participated in the experiment who correctly solved at least 5 but no more than 8 items on each scale. This range of solved items on each scale was defined as a layperson’s medium knowledge level. Students whose number of correctly solved items was outside this range were not eligible to participate. There were no significant differences in laypersons’ computer and Internet knowledge between the experimental conditions, F(2, 42) = 1.76, ns (computer knowledge); F(2, 42) = 1.72, ns (Internet knowledge).

In order to further validate our assignment of participants to the group of experts and laypersons, respectively, we asked all participants to self-assess their experience in using the Internet prior to the experiment. In particular, we asked the participants how long they had been interested in the Internet, how many hours they spend per week working on the Internet, and we asked them to provide a direct rating of their Internet expertise on a 5-point scale, ranging from 1 (= very inexperienced) to 5 (= very experienced). Table 1 displays the mean values and standard deviations of the three measures separately listed for experts and laypersons together with the univariate F-statistics. The comparisons show that our a priori classification of students of computer science on the one hand and of students of psychology and of the humanities on the other hand into experts and laypersons, respectively, was clearly confirmed by the rating data. On average, the computer experts indicated about twice as many years Internet experience, spending about eight times more hours per week working with the Internet, and they rated their overall Internet expertise as clearly higher than the students classified as laypersons did.

Design

Computer experts and laypersons were combined into pairs that were randomly assigned to the experimental conditions. A one-factorial between-subject design was used with validity of knowledge information as the independent variable comprising three different conditions: (a) communication in which experts were provided with valid information about the layperson’s knowledge (in the following labeled valid data condition), (b) communication in which experts were provided with information that was biased towards an overestimation of the laypersons’ knowledge (overestimation condition), and (c) communication in which experts were provided with information that was biased towards an underestimation of the laypersons’ knowledge (underestimation condition). Dependent variables encompassed the technical language of the experts’ explanations as well as laypersons’ knowledge gain and question asking. The technical language of the experts’ explanations referred to the extent to which the explanations required an understanding of the computer and Internet domain—as measured by the proportions of basic and advanced statements—and the number of technical terms that experts used in their explanations. The layperson’s knowledge gain referred to the increase in knowledge through the dialog with the computer expert. Question asking was operationalized by the number of follow-up questions laypersons returned in response to the expert’s initial explanations. The follow-up questions were categorized either as comprehension questions or information-seeking questions.

Materials

The assessment tool

In order to provide experts with information about the layperson’s knowledge, we used an assessment tool that was tried and tested in previous experiments (Nückles et al. 2005, 2006; Nückles and Stürz 2006). The assessment tool presented computer experts both with ratings of the laypersons’ general computer knowledge and their Internet knowledge (see Fig. 1). For each rating, the laypersons’ individual knowledge was displayed on a 6-point scale in the assessment tool, ranging from a very low to a very high knowledge level. The values presented in the assessment tool were determined via the computer and Internet knowledge test mentioned before (see “Participants” section before).

In the valid data condition, the number of items that a layperson solved correctly in the general computer knowledge subtest and in the Internet knowledge subtest was translated into values on the scales in the assessment tool. This was done by dividing the raw score a layperson achieved in each subtest by 2 and indicating the resulting score on the corresponding scale in the assessment tool. For example, if a layperson solved 5 out of the 12 items of the Internet knowledge subtest, this was indicated as a rather low Internet knowledge level. As the prior knowledge level of all participants varied from 5 to 8 correct test items, all participants were classified as rather low or rather high in the valid data condition.

In the biased estimation conditions, overestimations and underestimations were produced by adding or subtracting, respectively, two points on each scale from the laypersons’ actual knowledge level. For example, if a layperson actually had a rather low knowledge level on the computer knowledge scale, this was indicated as a very low knowledge level in the underestimation condition. In the overestimation condition, such a person was marked as having a very high knowledge level.

Inquiries asked by the laypersons

In order to initiate the dialog with the experts in the communication phase of the experiment, laypersons received six prepared inquiries that they directed one after another to the experts. The inquiries demanded explanations of relevant Internet topics and problems. They were chosen from a pool of 20 inquiries that were constructed and pre-tested in preliminary analyses. Three inquiries required the computer expert to explain a technical concept. The other three were more complex. They asked the expert to instruct the layperson how to solve a problem and, additionally, to provide an explanation why the problem occurred in order to help the layperson understand the nature of the problem. The wording of the inquiries was standardized to make the initiation phase of the expert-layperson dialogs comparable across participants and, above all, across experimental groups. Each inquiry was accompanied by one or two additional sentences that provided some background context for the embedded question and thus helped the expert to understand the broader intention of the inquiry. Table 2 shows the six inquiries that were used in the experiment.

Pre-test and post-test on laypersons’ knowledge about the inquiries

Laypersons’ knowledge about the inquiries discussed in the communication phase was assessed using a description task. Laypersons were asked to try to answer each of the six inquiries before and after the communication phase. For each of the six inquiries, we constructed a reference answer with the help of a computer expert. Each reference answer was typically comprised of three, or, in some cases, four main ideas that made up a complete answer in all. In order to keep the scoring task manageable for the raters, only whole scores were assigned. For each answer, up to 3 points could be achieved (0 = no or wrong answer, 1 = partly correct answer, 2 = roughly correct answer, 3 = completely correct answer). For example, if a layperson was unable to provide an answer or the ideas mentioned were incorrect, this answer was assigned a value of 0. A value of 1 was assigned if at least one main idea specified in the reference answer was correctly described by the layperson. Agreement among the raters was determined by the intra-class coefficient. On average, a coefficient of .92 for the individual ratings resulted, indicating very good interrater agreement. All of the points a layperson achieved were summed up across the answers to the six inquiries. Hence, the maximum score to be obtained was 18 points. Generally, laypersons had no substantial knowledge about the inquiries prior to the communication. On average, they only obtained 0.97 out of 18 points (SD = 1.59). There were no significant differences between the experimental conditions, F(2, 42) = 1.29, ns.

Procedure

The pairs of experts and laypersons participated in individual sessions in the experiment. An experimental session including the pre-test phase, communication phase, and post-test phase lasted about two and a half hours.

In the pre-test phase, the laypersons were administered the computer and Internet knowledge test (see “Participants” section before). Additionally, their prior knowledge with regard to the six inquiries to be discussed in the communication phase was determined. The laypersons were encouraged to try to answer each of the inquiries, if possible. We were careful to give the laypersons no reason to assume that the computer experts would have any information about their test results in the communication phase of the experiment. This was important because otherwise the students’ self-perceptions of their test performance might probably have influenced their communication behavior during the advisory exchange with the computer expert. Therefore, at the beginning of the pre-test phase, the students were informed that they were participating in a study on students’ knowledge about computers and the Internet. After completion of the knowledge test, the experimenters analyzed the layperson’s answers to the knowledge tests in a separate room, where they subsequently entered the results into the assessment tool form (see “The assessment tool” section above). Thus, the students serving as laypersons had no reason to suspect that the experts would be informed about their test results. At the beginning of the communication phase, the laypersons were merely told that, in the following, they would have the opportunity to ask an expert about relevant Internet topics and that they would have to demonstrate the acquired knowledge afterwards.

In the communication phase, the computer expert and the layperson sat in different rooms and communicated through a text-based interface, which could be accessed by means of the browser. On average, the communication phase lasted about one and a half hours. The layperson’s task was to sequentially direct all six inquiries (cf. Table 2) to the expert by typing the prepared wording of the inquiry into the text form of the interface. The sequence of the inquiries was randomized individually for each pair of expert and layperson. The expert was asked to answer each inquiry as well as possible. The laypersons were free to write back and ask as many follow-up questions as needed or desired. When both communication partners felt that an inquiry had been answered satisfactorily, they could continue on to the next inquiry. In all three experimental conditions, the assessment tool with information about the laypersons’ knowledge was incorporated into the interface and visible in the upper part of the experts’ screen (see Fig. 1). The experts were informed that the layperson’s knowledge had been determined in advance and that they should try to bear in mind the information when answering the layperson’s inquiries. In the lower left part of the screen, the layperson’s inquiry was presented to the expert and on the right side there was a separate text box for the expert to type in their answer. Communication was asynchronous as in electronic mail. A written message was not automatically visible on the partner’s screen, but was announced by an alert window. In order to view the message, the participant had to press the ‘OK’-button in this window.

In the post-test phase, the laypersons were again asked to write down their answers to each of the six inquiries. In this way, it was possible to compute the individual increase in knowledge for each layperson. After completion of the post-test, the layperson and the expert were debriefed and compensated for their participation.

Analyses and coding

Technical language of the experts’ explanations

For the content analysis of the experts’ explanations, a coding scheme was developed that differentiated between basic and advanced statements that experts used in their answers to the laypersons’ questions. For this purpose, the experts’ written answers were segmented into single statements as the coding units. In order to facilitate segmentation, the experts’ explanations were first coded for different categories that described or explained mutually exclusive aspects of the technical concepts mentioned in the experts’ answers (for details, see Nückles et al. 2006). These included (a) definitions of technical concepts (e.g., categorization, instantiation), (b) processes and events related to technical concepts (e.g., functions, purpose), and (c) characteristics of technical concepts (e.g., commonality, difference). The categories were developed following the knowledge representation types for science texts suggested by Graesser et al. (2002). In the next step, each of these categories was coded as either basic or advanced. Basic categories were those that required no or only little foundation in the computer and Internet domain to understand, whereas advanced categories required such a foundation (for a similar classification, see Hinds et al. 2001). In the following, for the three different types of explanatory statements, a description and an example of a basic and an advanced statement are given:

-

(1)

Definitions of technical concepts. In this dimension, the denotative meaning of a concept was explained, for example, by relating the concept to a superordinate concept.

-

(a)

Basic statements: “HTML is a language in which web pages are written.”

-

(b)

Advanced statements: “FTP is a TCP/IP-based protocol for transferring files between remote computer systems.”

-

(a)

-

(2)

Processes and events related to technical concepts. In this category, statements were coded that explained technical concepts in relation to the technical processes and events that are accomplished by these concepts. Accordingly, statements that, for example, describe the technical functionality of a concept were categorized on this dimension.

-

(a)

Basic statements: “By means of HTML you design web pages and other information that is viewable in the Internet Explorer.”

-

(b)

Advanced statements: “The proxy server acts as an intermediary between a Web client and a Web server.”

-

(a)

-

(3)

Characteristics of technical concepts. On this dimension, attributes that specifically characterized a concept were mentioned or explained. In order to make the attributes of a concept clearer, these statements made use, for example, of comparisons to highlight commonalities and differences between technical concepts.

-

(a)

Basic statements: “SSH is similar to other programs that protect every piece of information sent over the Internet.”

-

(b)

Advanced statements: “The structure of HTML has similarities with LaTeX.”

-

(a)

A trained research assistant split the sentences of each expert answer into smaller units on the basis of the three types of explanatory statements described before. Then she judged whether these statements were basic or advanced. A second trained rater who was blind to the codings of the research assistant coded 10% of the explanations. Interrater agreement as determined by Cohen’s Kappa was good (κ = .82).

In addition to the classification scheme, we also coded the experts’ explanations for technical terms. This linguistic measure has been shown to be a sensitive indicator for the experts’ audience design in communication with laypersons (cf. Bromme et al. 2005a). Technical terms were used by experts, for example, as part of a definition (e.g., ‘HTML is a programming language’), to instantiate a superordinate category (e.g., ‘a common browser software is Mozilla’), or to introduce subordinate concepts (e.g., ‘movies are part of Flash animations’). For the analysis, a list was made containing all the technical terms produced by the experts. Only those expressions were coded as technical terms that were listed in the computer glossary of the book ‘Computerlexikon’ (Schulze 2003). However, computer terms listed in the glossary which have already become everyday terms (e.g., mouse, computer screen) were not coded as technical terms. To identify the technical terms experts used in their answers to the laypersons’ questions, two judges coded all explanations. They were blind to the experimental conditions. A total of 264 technical terms resulted. Interrater agreement was excellent (κ = .99, cf. Fleiss 1981).

Laypersons’ questions

The dialogs between experts and laypersons were recorded, and all the follow-up questions that the laypersons asked in response to an expert’s explanation were analyzed. The questions were assigned to one of the following two question categories:

-

(1)

Comprehension questions. The category was scored when the question addressed comprehension problems specifically related to particular words or statements produced by the experts. Thus, this type of questions was generated when a layperson was uncertain about the meaning of a particular word in the expert’s answer (e.g., “What does LaTex mean?”; word-triggered questions, Otero and Graesser 2001), or when a layperson had problems creating a referential representation for a statement in the expert’s answer (statement-triggered questions, Otero and Graesser). For example, an expert explained the technical concept ‘Secure Shell’ and used the terms ‘command line’ and ‘UNIX’ for illustration. The layperson asked in response to the expert’s explanation: “What does it mean to execute a command line in UNIX?” Hence, these questions sought information in order to solve problems by building an adequate textbase or situation model from the expert’s explanations (cf. Otero and Graesser).

-

(2)

Information-seeking questions. This category refers to questions that required experts to provide laypersons with additional or new information about technical concepts that was not previously stated in the experts’ explanations. Following the question content categories developed by Graesser et al. (1992), these types of questions were generated when laypersons, for example, asked for specific features of a technical concept (e.g., “What are the difficulties of using a proxy server?”), for the frequency of an attribute of a technical concept (e.g., “How fast is FTP transfer?”), for similarities and differences between technical concepts (e.g., “What are the advantages of FTP as compared to HTTP?”), or for consequences associated with a technical concept (e.g., “What happens if I don’t deactivate Active X?”). Thus, information-seeking questions—in contrast to comprehension questions—did not reflect comprehension problems related to words or statements used in the experts’ explanations. Instead, they sought to add new or more elaborate information to the layperson’s already existing situation model.

Two trained raters counted and coded all laypersons’ follow-up questions independently. They were blind to the experimental conditions. In cases of disagreement between the two judges, the final coding was determined through discussion. Interrater agreement was very good (Cohen’s κ = .91, cf. Fleiss 1981).

Results

In this study, an alpha level of .05 was used for all statistical tests.

Technical language of the experts’ explanations

First, we analyzed whether or not experts used the information about the layperson’s knowledge that was experimentally provided by means of the assessment tool. Note that it would only be reasonable to claim that experts in fact overestimated or underestimated the laypersons’ real knowledge or, on the contrary, correctly estimated their communication partner’s knowledge when they adopted the information about the layperson as it was displayed in the assessment tool. Hence, if experts used the provided information in order to construct a mental model of the laypersons’ understanding, they should also design their explanations on the basis of their partner model. To test this assumption, we examined the technical language of the experts’ explanations of the six inquiries. More specifically, we differentiated within each explanatory statement type (i.e., definitions, processes and events, and characteristics) between basic and advanced statements in the experts’ explanations. To this end, the basic and advanced statements were counted and summed up across all experts’ answers separately for the each of the three explanatory statements. Following the technical language hypothesis, experts in the overestimation condition should use the most advanced and the fewest basic statements. In contrast, we expected experts in the underestimation condition to produce the fewest advanced and the most basic statements in their answers to the laypersons’ questions. The number of basic and advanced statements the experts used in the valid data condition, however, should lie in-between. This predicted linear trend was represented by the following contrast: valid data condition: 0, overestimation condition: 1, underestimation condition: −1. We performed a planned multivariate analysis with basic and advanced statements for each type of explanatory statement as the dependent variables and experimental condition as the independent measure. The results confirmed the technical language hypothesis, p(6, 37) = 20.64, p < .001, η² = .77 (strong effect). Planned contrasts for each of the dependent variables with the same contrast weights as reported before showed that this prediction was fulfilled with regard to each type of explanatory statement. As Table 3 illustrates, when experts received information about the layperson’s knowledge that was biased towards an overestimation, their explanations contained the most advanced and the fewest basic statements. In contrast, experts generated the fewest advanced and the most basic statements in their explanations when they were supposed to assume the laypersons’ knowledge to be lower than it actually was. This was true for each type of explanatory statement.

To rule out the possibility that the reported differences in the number of basic and advanced statements between the experimental conditions could be attributed to the fact that experts might have differed in the number of words they overall produced to convey the technical information, we computed an ANOVA with number of words as the dependent variable. There were, however, no significant differences between the experimental conditions, F(2, 42) = 0.18, ns. In all three experimental conditions, experts generated, on average, a similar amount of words per explanation (valid data condition: M = 113.36 words, SD = 59.29; overestimation condition: M = 112.63 words, SD = 36.46; underestimation condition: M = 110.47 words, SD = 29.05). Obviously, experts did not customize their explanations with respect to the amount of information in general but mainly varied the proportions of basic and advanced statements in their explanations depending on whether they assumed the layperson’s knowledge to be relatively low or relatively high.

In addition, we also counted and summed up the technical terms that experts used in their explanations. The number of technical terms across the experts’ answers to the laypersons’ inquiries was subjected to a contrast analysis with the same contrast weights as reported before. The results provided further evidence in support of the technical language hypothesis, F(1, 42) = 6.55, p < .02, η² = .14 (strong effect). In line with the content analysis conducted previously, experts in the overestimation condition used the most technical terms (M = 19.87, SD = 13.19), whereas the explanations of the experts in the underestimation condition contained only half as many technical terms (M = 11.13, SD = 6.01). The number of technical terms in the experimental condition with valid knowledge information lied in-between (M = 15.6, SD = 7.21).

Overall the results clearly demonstrate that experts used the experimentally provided information about the laypersons to construct a mental model of the laypersons’ knowledge. On the basis of this partner model, they varied the extent to which their explanations required only a little or, in contrast, an advanced understanding of the computer and Internet domain. Hence, we demonstrated that the experts in the corresponding experimental conditions in fact overestimated or underestimated the layperson’s real knowledge state.

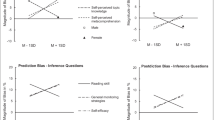

Laypersons’ knowledge gain

In order to investigate the question of how the experts’ explanations influenced the laypersons’ understanding, we analyzed the laypersons’ knowledge that they acquired from the experts’ explanations during communication. Because the test on laypersons’ knowledge about the inquiries was administered both during the pre-test and the post-test, the pre-test to post-test gains were calculated. Following the knowledge gain hypothesis, in those conditions where the experts were presented biased information about the laypersons’ knowledge and therefore produced only suboptimal explanations, the laypersons should acquire less knowledge than the laypersons in the valid data condition. This prediction was represented by the following contrast: valid data condition: 1, overestimation condition: −.5, underestimation condition: −.5. The results of the contrast analysis supported this prediction, F(1, 42) = 4.83, p = .03, η² = .10 (medium effect). Table 4 shows the mean values of the laypersons’ increase in knowledge. As predicted, the largest knowledge gain occurred in the valid data condition, whereas in the conditions in which the laypersons’ knowledge was overestimated or underestimated their knowledge acquisition was impaired to a similar extent.

Laypersons’ questions

Apart from laypersons’ learning, we also examined to what extent experts’ explanations affected laypersons’ question-asking behavior. For this purpose, we counted the total number of follow-up questions a layperson asked in response to an expert’s explanation. Following the question asking hypothesis, laypersons should generate more questions when they received explanations from experts who had biased information about the layperson’s knowledge level available as compared to laypersons whose accurate knowledge level was presented to the expert. This prediction was represented by the following contrast: valid data condition: 1, overestimation condition: −.5, underestimation condition: −.5. The results confirmed the question asking hypothesis, F(1, 42) = 5.86, p = .02, η² = .12 (strong effect). As Table 4 shows, laypersons asked significantly more questions when experts over- or underestimated their knowledge, as compared with the incidence of laypersons’ questions in the valid data condition. The laypersons in the biased estimation conditions returned at least twice as many questions to their expert advisors than the laypersons in the valid data condition.

Types of questions asked by the laypersons

In order to examine differential effects on laypersons’ question asking as a function of whether experts provided mainly basic or advanced statements in their explanations, we distinguished between comprehension questions and information-seeking questions. This analysis should reveal whether laypersons, depending on whether their knowledge was overestimated or underestimated, self-regulated their difficulties with the experts’ explanations by asking different types of questions.

Following the comprehension question hypothesis, we predicted that laypersons whose knowledge was overestimated should ask more comprehension questions than laypersons in the valid data condition or underestimation condition. This prediction was represented by the following contrast: valid data condition: −.5, overestimation condition: 1, underestimation condition: −.5. The results of the contrast analysis clearly supported this prediction, F(1, 42) = 9.77, p = .003, η² = .19 (strong effect). Laypersons in the overestimation condition generated about three times as many comprehension questions as laypersons in the other experimental conditions (see Table 4).

Following the information-seeking question hypothesis, laypersons who were provided with explanations that required only little or no knowledge at all for understanding should ask more frequently for information not previously stated in the experts’ explanations than laypersons in the valid data condition or overestimation condition. Accordingly, a planned contrast with the following weights was computed: valid data condition: −.5, overestimation condition: −.5, underestimation condition: 1. This contrast, however, just reached the 10%-level of statistical significance, F(1, 42) = 3.20, p = .08, η² = .07 (medium effect). Table 4 shows that descriptively the incidence of information-seeking questions was actually highest in the underestimation condition. However, despite their comprehension problems, laypersons whose knowledge was overestimated also asked the experts for further information.

Discussion

The study described in this article gave a systematic picture of how false assumptions about the communication partner can influence communication between experts and laypersons. When experts were experimentally provided with false information about the layperson’s knowledge, they used this information to adjust their explanations accordingly. Experts who received information about the laypersons biased towards overestimation produced explanations that contained mainly advanced information on the fundamentals of Internet technology, including a considerable number of technical terms. In contrast, experts who were made to believe that their partner’s knowledge was lower than it actually was primarily conveyed basic technical information to the layperson, thereby using technical terms comparatively seldom. Depending on the technical style of the experts’ explanations, we found substantial differences in laypersons’ understanding and question asking. When experts had valid information about the laypersons’ knowledge available, their explanations facilitated laypersons’ knowledge acquisition and lessened their need to ask questions. In contrast, laypersons profited less from the instructional explanations when experts were provided with biased knowledge information. In both experimental conditions, laypersons’ knowledge acquisition was impaired to a similar extent, and they directed significantly more questions to the experts. Hence, the accuracy of a mental model of a layperson’s knowledge was important for communication between experts and laypersons to be effective and efficient (Nickerson 1999).

The findings also provided evidence that the laypersons differed in the types of questions they asked in response to the experts’ explanations. When laypersons’ knowledge was overestimated, the advanced technical information provided by the experts raised the incidence of comprehension questions. Conversely, laypersons in the underestimation condition who were primarily provided with basic information about the Internet and computer domain asked very few comprehension questions, indicating that the explanations they received from the experts caused very little trouble in understanding. At the same time, however, those laypersons asked the most information-seeking questions, although this effect just reached the 10%-level of statistical significance. Presumably, the experts’ explanations were less than optimally informative for the laypersons in this condition, which increased their need to enrich and extend their understanding of the topic by asking for additional information. Nonetheless, with regard to laypersons’ information-seeking, our empirical results are less clear. For contrary to our expectations, there were also a comparatively high number of information-seeking questions in the overestimation condition. Although the laypersons in the overestimation condition received slightly too complex and demanding explanations, these explanations might have stimulated the layperson’s curiosity. This conjecture would explain the higher number of information-seeking questions as compared with the valid data condition.

Overall the findings of the present study demonstrated the negative consequences for laypersons’ knowledge acquisition when experts’ explanations only insufficiently matched with the layperson’s actual informational needs. Explanations that required a relatively high level of knowledge in the computer and Internet domain to be understood obviously overwhelmed the laypersons and, therefore, resulted in lower learning. In a similar vein, explanations that required no or only little understanding did not benefit the laypersons’ knowledge acquisition. Evidently, such suboptimal explanations provided laypersons in both cases with ineffective learning opportunities that did not increase their knowledge to a greater extent. This is consistent with results of text revision studies that demonstrate the detrimental effects on learning when instructional texts are not optimally adjusted to a reader’s knowledge: Texts too difficult might hamper learning because the textual information is too distant from the reader’s knowledge to integrate it into a coherent and well-integrated mental representation (e.g., Britton and Gülgöz 1991). Conversely, texts too easy may also run the risk of preventing readers from learning because—due to the greater overlap with the readers’ knowledge—they might tempt them to process the information more superficially (e.g., McNamara et al. 1996). The results of the present study extend the findings of the text revision studies to computer-based communication settings where learners (i.e., the laypersons) acquire knowledge through reading their communication partner’s (i.e., the expert’s) written explanations. Hence, the negative effects of instructional texts that are only poorly adjusted to the learner’s prior knowledge not only occur in individual learning situations, as investigated in the traditional text revision studies, but can be also found in interactive instructional settings. In addition to the text revision studies, however, our findings also show that such negative effects do persist even when learners can take an active role in self-regulating their understanding through question asking. Although laypersons in both conditions with biased knowledge information obviously experienced communication problems and intensified their question asking to compensate for these problems, they were not fully able to overcome their difficulties. They acquired less knowledge from the experts’ explanations than laypersons in the valid data condition.

Different factors could explain why—despite the higher incidence of follow-up questions they directed to the experts—the laypersons learned less from their explanations than laypersons who were advised by experts with valid knowledge information. Given the overall low frequency of questions asked by laypersons, one might assume that laypersons only insufficiently engaged in question asking to compensate for their problems with the experts’ answers. Remember that the laypersons were free to ask follow-up questions but were not instructed to do so. Under these self-regulated conditions, laypersons might have put generally little effort in asking questions. This is in line with the findings of Graesser and McMahen (1993) who compared question asking under two experimental conditions. Participants were either directly instructed to ask an experimenter questions or they had the liberty to do so. Results showed that, compared with the number of task-induced questions, the likelihood of question asking under the self-induced condition was extremely low. Also, one may speculate that the cognitive discrepancy experienced between the laypersons’ own informational needs and the information provided in the experts’ explanations was not the only factor that played an important role in laypersons’ question asking. Graesser and McMahen have proposed a three-component model of question asking in which the detection of a cognitive discrepancy is only a necessary but not a sufficient condition for question generation. For a question to be asked, the potential question asker must, in addition to the detection of a discrepancy (anomaly detection), articulate and refer to the identified discrepancy in words (verbal coding) and take the initiative to express the question in a social setting (social editing). Accordingly, even when one seeks to pose a question, there can be numerous reasons not to do so. For example, the social context is not viewed as a helpful context, or it is doubtable that the answerer has the competence to respond to the question appropriately. Hence, through the social editing process, the potential question asker assesses the costs and benefits of asking a question in a particular social setting. If the costs associated with asking a question exceed its benefits, then the question is less likely to be asked. According to this model, it might be assumed that laypersons in both conditions with false knowledge information regarded the experts generally as being not very well able to provide them with information that satisfied their individual needs. Therefore, laypersons’ view of the experts’ communicative competence might have been a barrier to asking more questions which could have clarified comprehension problems or allowed them to receive additional information that would have enriched their understanding. To investigate the role of such non-cognitive variables in more detail, further studies are necessary that systematically separate the effects of social editing from laypersons’ actual needs to ask questions.

Nevertheless, it has to be acknowledged that laypersons communicated with experts in a computer-mediated setting. In computer-mediated contexts, the costs for producing a message are much higher than in face-to-face communication because every message has to be typed on a computer keyboard which takes more effort and time than speaking (Clark and Brennan 1991). For this reason, the decision to actively contribute to communication depends even more heavily on the urgency and relevance of the message in relation to the costs associated with its communication (Reid et al. 1996). Therefore, communicators in a computer-mediated context usually generate fewer communicative contributions, such as answers or questions, than in face-to-face communication (e.g., Lebie et al. 1996).

Apart from that, the present findings demonstrate how experts adapted their explanations to the laypersons’ knowledge background—at least to the knowledge they assumed the laypersons to possess—in order to design messages that were optimal for their communication partner (Clark and Murphy 1982). This result is consistent with the findings of Bromme et al. (2005a) who showed that medical experts mentioned advanced information more often when they expected to write a message to a medical colleague, whereas their explanations contained more basic information when their expected recipient was a layperson. Similarly, Nückles et al. (2005, 2006) found that experts who were provided with information about the laypersons’ knowledge level were capable of producing explanations that met the laypersons’ individual communicational needs. Together with the current study, these results provide evidence that experts are able to take into account a layperson’s knowledge by adjusting the extent to which the layperson requires knowledge to understand the information being provided (so-called specific-partner adjustments, see Schober and Brennan 2003). The results extend the findings of psycholinguistic studies that usually document that partner adaptation occurs in referential communication (for an overview, see Krauss and Fussell 1996). The tasks used in these studies, however, strongly constrain the content and style of communication because the type of linguistic references is typically restricted to concrete referents like famous persons of public life (Fussell and Krauss 1992) or nonsense figures (e.g., tangram figures, see Schober and Clark 1989). Thus, the communication under study in this research tradition does not aim at improving understanding in terms of acquiring knowledge but mainly consists in solving lexical or referential ambiguities that might impede the natural flow of communication (cf. Bromme et al. 2005a; Krauss and Fussell 1996). Our study, however, demonstrates how understanding and learning in a relatively naturalistic communication setting using a more complex task can indeed be affected by the adaptations that communicators make with respect to their partner’s communicational needs. This result supports and substantiates the validity of models of language use that regard an accurate mental model of the communication partner as an essential component in the process of message production (Clark 1992, 1996; Clark and Murphy 1982; Nickerson 1999).

Although we demonstrated the negative effects of false assumptions on communication in a computer-mediated context, we believe that our findings are not necessarily confined to situations in which the interactive dynamics of communication are restricted by the constraints of the communication medium. Instead, even in face-to-face settings where understanding can be indicated more immediately and in a multitude of ways (Clark and Brennan 1991), an inaccurate mental model of the communication partner might create an obstacle to effective interaction. For example, in human tutoring, there is evidence that tutor-generated explanations often do not support students’ learning (Chi et al. 2001; VanLehn et al. 2003). One might speculate that students’ problems in learning from tutorial explanations also result from tutors’ misjudgments of what a student actually does know (Chi et al. 2004; Siler and VanLehn 2003). As outlined in the beginning, tutors often do not have formal training in tutoring skills and thus might lack the ability to adapt to a learner’s needs in a flexible manner. On the other hand, people with extensive didactic expertise should be less prone to overestimate or underestimate a learner’s prior knowledge (although see Nathan and Petrosino 2003). Thus, it would be interesting to investigate whether tutors with little didactic expertise differ from tutors with extensive didactic expertise in their ability to adjust their instructional explanations to a learner’s knowledge (Glass et al. 1999).

Study limitations

A potential limitation of our study is that we did not examine the experts’ own assumptions about the laypersons’ knowledge and their effects on communication. Instead, we provided experts with pre-fabricated information about the layperson’s pretended knowledge in order to systematically analyze the impact of overestimations and underestimations on the design of the experts’ explanations and the laypersons’ understanding. From a psycholinguistic point of view (Clark 1996), it can be countered that it might be a difference whether experts establish their own estimations of a layperson’s knowledge or are provided with this information. Our results, however, indicate that the experts did not question the information about the laypersons that was presented to them via the assessment tool. Rather, the experts evidently used the information in the assessment tool to construct a mental model of their communication partner’s knowledge. This assumption is supported by the result that the experts considerably differed in the technical language of their explanations depending on whether they were provided with valid information about the layperson’s knowledge or with information that was biased towards an overestimation or an underestimation. The assumption is further corroborated by the findings of a previous study (Nückles et al. 2006) in which experts had to think aloud in order to examine how they processed the knowledge information presented in the assessment tool. The study demonstrated that experts indeed adopted the information about the laypersons in order to specify their mental model of their communication partner. On the basis of this mental model, they then adjusted their explanations accordingly. Hence, from these results it can be concluded that in the communication setting of the present experiment experts obviously integrated the information about the layperson into their estimations of their communication partner’s knowledge. That is, the information was in fact part of their estimations.

A related caveat concerns the question of whether experts who were provided with false information about the layperson’s knowledge became suspicious during the course of communication and recognized that the knowledge that was presented in the assessment tool did not reflect the laypersons’ real understanding. In order to prevent experts from distrusting the information about their communication partner, the experimental induction of a biased mental model of the laypersons’ knowledge was rather discreet. Remember, for example, that if a layperson’s real computer knowledge level was rather low, this was presented in the underestimation condition as a very low knowledge level. Nevertheless, the laypersons in the experiment were free to pose questions when experiencing difficulties with the experts’ explanations. Therefore, it might be possible that experts in the overestimation and underestimation condition did not merely use the information that was displayed in the assessment tool to develop a mental model of the layperson’s understanding. Instead, they might have tried to infer the layperson’s real knowledge state from the questions that the laypersons asked during the course of communication. If experts indeed used the questions of the laypersons to recalibrate their mental model of their communication partner, they might have adapted their explanations to the layperson’s real knowledge state during communication. We found, however, no evidence supporting this assumption. For example, experts’ explanations given at the beginning of the discourse did not differ from their explanations given at the end of the discourse with regard to their technical style. Obviously, experts did not take into account layperson’s question asking in order to update their mental model of their partner’s knowledge. However, it has to be conceded that the laypersons asked very few questions overall. Therefore, experts only seldom had the opportunity to get a comprehensive picture of the laypersons’ real understanding. More research is needed on how experts—and people in general—infer what their communication partner really does and does not know about the topic of conversation (cf. Nickerson 1999; Person et al. 1994).

What is the most crucial finding that can be taken from this close examination of communication between experts and laypersons? Obviously, it is not a fruitful strategy to provide learners with explanations designed at the simplest level. Even for laypersons, there are explanations that can be too simple and might therefore have similar detrimental effects as explanations that are too difficult to understand. Hence, the effectiveness of explanations largely depends on how well communicators—including experts, teachers, and tutors—are able to adjust the level of information to the learner’s individual understanding.

References

Alexander, P. A., Kulikowich, J. M., & Schulze, S. K. (1994). How subject-matter knowledge affects recall and interest. American Educational Research Journal, 31, 313–337.

Alty, J. L., & Coombs, M. J. (1981). Communicating with university computer users: A case study. In M. J. Coombs & J. L. Alty (Eds.), Computing skills and the user interface (pp. 7–71). London: Academic Press.

Anderson, K. C., & Leinhardt, G. (2002). Maps as representations: Expert novice comparison of projection understanding. Cognition and Instruction, 20, 283–321.

Beck, I. L., McKeown, M. G., Sinatra, G. M., & Loxterman, J. A. (1991). Revising social studies text from a textprocessing perspective: Evidence of improved comprehensibility. Reading Research Quarterly, 26, 251–276.

Borko, H., & Putnam, R. (1996). Learning to teach. In D. Berliner & R. Calfee (Eds.), Handbook of educational psychology (pp. 673–708). New York: Macmillan.

Brennan, S. E., & Clark, H. H. (1996). Conceptual pacts and lexical choice in conversation. Journal of Experimental Psychology: Learning, Memory, and Cognition, 22, 1482–1493.

Britton, B. K., & Gülgöz, S. (1991). Using Kintsch’s computational model to improve instructional text: Effects of repairing inference calls on recall and cognitive structures. Journal of Educational Psychology, 83, 329–345.