Abstract

Since the first version of IEEE 802.11, the standard committee has included a set of transmission rates aiming to accommodate the wide variety of requirements of end-user devices and channel operating conditions. Traditionally, the sender increases the data transmission rate upon receiving several consecutive acknowledgement packets and decreases it in the absence of acknowledgement packets. This error-control procedure assumes that the channel operating conditions are the main source of transmission errors and losses. However, under medium or high load conditions, transmission impairments are mainly due to channel access conflicts, i.e., collisions. Under these load conditions, reducing the data transmission rate based exclusively on the absence of feedback not only proves ineffective, but actually degrades the overall network performance. In this paper, we describe a novel rate adaptation mechanism capable of mitigating the effect of collisions using the information embedded in the received packets. Simulation results show that our proposal limits the use of the data transmission rate adaptation mechanism, which in turn results in a significant increase of the aggregated throughput.


1 Introduction

Nowadays, research on wireless networks is one of the most active areas in the telecommunications arena. The fast development of devices such as tablets and smartphones has spurred the demand for wireless technologies. Despite the numerous existing wireless technologies, the IEEE 802.11 standard [8] is by far the most widely used, facilitating interconnectivity at the local area range and covering a wide range of device types. This is mainly due to its low cost, its ease of deployment and, especially, the possibility for end users to move freely within the area covered by the wireless LANs (WLANs). Another key factor is the ongoing work of the 802.11 group to improve the original standard. The 802.11 group has incorporated many enhancements to the standard, in both the media access control (MAC) and physical (PHY) layers.

Since the first version of the IEEE 802.11 standard [9], the standard committee has been defining a set of transmission rates to be used (originally 1 and 2 Mbps). The first amendment of the PHY layer was defined in IEEE 802.11b [11]. This amendment included four transmission rates (1, 2, 5.5 and 11 Mbps) in the 2.4 GHz band. In the meantime, the 802.11a PHY layer was developed [10], whose main novelty consisted in the use of orthogonal frequency division multiplexing (OFDM) to achieve transmission rates up to 54 Mbps in the 5 GHz band. However, the inclusion of such features has some drawbacks, such as incompatibility with the original functionalities of the IEEE 802.11 standard. Furthermore, the use of the 5 GHz band increases the power demands and reduces the coverage area. In 2003, the IEEE committee approved 802.11g [13], a new extension of the PHY layer. Like 802.11b, 802.11g uses the 2.4 GHz band, which guarantees interoperability with the original 802.11 while ensuring a level of robustness similar to that of 802.11b. In addition, it achieves the same rates as the 802.11a extension by using OFDM. In this way, 802.11g combines the advantages of both the IEEE 802.11b and IEEE 802.11a amendments. In [16], the authors show the benefits of the 802.11g PHY layer over the 802.11a or 802.11b layers. In particular, they show that 802.11g better meets the quality of service (QoS) requirements of voice and VoIP applications.

In the original IEEE 802.11 standard, the network administrator had to decide on the data rate to be used: 1 or 2 Mbps. A significant improvement was the definition of the dynamic rate shifting (DRS) mechanism in the 802.11b amendment [11]. Under the DRS mechanism, the transmission rate can be dynamically adapted instead of being fixed to a given data rate. Although 802.11 has the ability to change its transmission rate, the decision of when to change it falls on a rate adaptation algorithm, whose definition is not included in the IEEE 802.11 standard.

The IEEE standard does not specify the actual mechanisms to be implemented as part of the rate adaptation algorithm. That is to say, it is up to the wireless card manufacturers to specify the details of the rate adaptation algorithm. Nevertheless, the selection of an appropriate transmission rate is not a trivial task. Since the information in wireless networks is transmitted through the air, the information flow may be easily corrupted. The wireless channel is a changing environment: walls, buildings, weather conditions and even people may cause transmission degradation. Thus, an efficient rate adaptation algorithm is essential to successfully transmit the data packets.

The algorithms responsible for selecting the transmission rate have to deal not only with the channel conditions, but also with other network events such as collisions. Although 802.11 includes the carrier sense multiple access with collision avoidance (CSMA/CA) mechanism to avoid packet collisions, it is unable to eliminate them, and the number of collisions involving data packets increases with the number of stations [24]. Among the MAC layer enhancements, one of the most necessary was the IEEE 802.11e amendment [12]. IEEE 802.11e defines mechanisms for wireless LANs that aim to provide QoS support to time-sensitive applications, such as voice and video communications. In various works [4, 19, 25, 26] the authors have evaluated the performance of 802.11e by varying the EDCA parameters. The results of these studies have shown an increase in the number of collisions with respect to the original IEEE 802.11 standard. This increase in collisions greatly limits the potential benefits that the IEEE 802.11e amendment promised to bring to services requiring QoS guarantees.

One of the best known and most popular rate adaptation algorithms is the auto rate fallback (ARF) mechanism [14]. ARF is an ACK-based algorithm that increases or decreases the transmission rate depending on the presence or absence of acknowledgement packets (ACKs), respectively. In other words, the data rate is reduced in the presence of packet losses. ARF uses two thresholds to decide if the transmission rate should be increased or decreased. The main problem of ACK-based algorithms is the difficulty of differentiating channel losses from collision losses. Ideally, the rate adaptation algorithm should only modify the transmission rate when the delivery errors are caused by a change in the channel conditions. Unfortunately, ARF does not have the means to identify the main source of errors: channel impairments and/or channel access conflicts. This explains the poor performance of such algorithms in scenarios characterized by error-free channels but moderate to high load conditions. Moreover, when IEEE 802.11e is operated under heavy load, ARF is even unable to provide better results than the original standard. To address this issue, recent algorithms integrate network load estimation mechanisms allowing them to adapt the data transmission rate on the basis of the estimated load. The main aim of such proposals is to identify whether the rate adaptation algorithm should be invoked or not.

In this paper, we propose a new rate adaptation algorithm, called dynamic ARF (Dyn-ARF). Like the ARF mechanism, it is based on the use of thresholds, but these are dynamically changed taking into account the network load conditions. We calculate their values using the information of each MAC service data unit (MSDU) packet arriving at the station, such as the source address and the retry bit.

The remainder of this paper is organized as follows. Sect. 2 provides some background on the IEEE 802.11 standard, and Sect. 3 reviews the issues and proposed solutions for rate adaptation. The proposed rate adaptation mechanism is described in Sect. 4. Section 5 presents our simulation results and, finally, Sect. 6 provides the conclusions of the work.

2 IEEE 802.11 standard

The IEEE 802.11 WLANs are one of the fastest growing network technologies in the wireless communications field. The IEEE 802.11 MAC protocol provides a physical-layer multi-rate capability [9]. The original IEEE 802.11 protocol supports a single base rate, typically 2 Mbps. With the multi-rate enhancement, the data transmission can take place at various rates according to the channel conditions. Data rates higher than the base rate are possible when the signal-to-noise ratio (SNR) is sufficiently high. Within the IEEE 802.11b standard [11] the set of possible data rates is 1, 2, 5.5 and 11 Mbps, whereas for the IEEE 802.11a standard [10] the set of possible data rates includes 6, 9, 12, 18, 24, 36, 48 and 54 Mbps. All of the 802.11a/b/g rates are summarized, including their forward error correction rates, in Table 1.

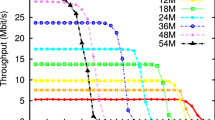

The amount of information received over a link is a function of the number of bits the receiver can decode correctly. Figure 1 plots the theoretical bit error rate (BER) against SNR for several modulations, assuming a channel with only additive white Gaussian noise (AWGN), based on equations from [5]. Throughput over a link, in correct bits received per second, is the product of the symbol rate, the bits per symbol, and the probability that a bit is received correctly (\(1 - BER\)). Figure 2 shows throughput versus SNR for each modulation for a symbol rate of 1 mega-symbol per second. This figure reveals the need to adapt the transmission rate to channel conditions.
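The throughput relation above can be illustrated with a short sketch. It is not taken from the paper: it assumes coherent BPSK (one bit per symbol) over an AWGN channel, uses the textbook expression \(BER = \frac{1}{2}\,\mathrm{erfc}(\sqrt{E_b/N_0})\), and treats the SNR value as \(E_b/N_0\) in dB. The function names are illustrative only.

```python
import math


def bpsk_ber(snr_db: float) -> float:
    """Theoretical BER of coherent BPSK over AWGN (snr_db taken as Eb/N0 in dB)."""
    ebn0 = 10 ** (snr_db / 10)          # dB -> linear ratio
    return 0.5 * math.erfc(math.sqrt(ebn0))


def throughput_bps(symbol_rate: float, bits_per_symbol: int, ber: float) -> float:
    """Correct bits per second = symbol rate x bits per symbol x (1 - BER)."""
    return symbol_rate * bits_per_symbol * (1.0 - ber)


if __name__ == "__main__":
    # 1 mega-symbol per second, as in the setting described for Fig. 2.
    for snr_db in (0, 4, 8):
        ber = bpsk_ber(snr_db)
        print(f"SNR={snr_db} dB  BER={ber:.3e}  "
              f"throughput={throughput_bps(1e6, 1, ber):.0f} bit/s")
```

Denser modulations carry more bits per symbol but need a higher SNR to keep the BER low, which is the trade-off a rate adaptation algorithm has to track.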

2.1 Distributed coordination function

The IEEE 802.11 MAC sub-layer [8] defines two medium access coordination functions, the distributed coordination function (DCF) and the optional point coordination function (PCF). DCF is the basic access function for IEEE 802.11 and is based on the CSMA/CA algorithm together with a contention backoff algorithm. PCF uses a centralized polling method requiring a node to play the role of point coordinator (PC). The PC cyclically polls the stations, giving them the opportunity to transmit. In the following, we restrict our description to the DCF mechanism because PCF is optional and only very few APs and adapters implement it.

When DCF is being used, a station should determine the state of the channel before transmitting. A station may start to transmit after having determined that the channel is idle during an interval of time longer than the distributed interframe space (DIFS). Otherwise, if the channel is busy, once the transmission in course finishes and in order to avoid a potential collision with other active (waiting) stations, the station will wait a random interval of time (the backoff time, BT) before attempting to transmit:

\[ BT = rand() \times CW \times aSlotTime \]

where rand() is a random function with uniform distribution on the range (0,1); CW is the contention window (with \(CW_{min} \le CW \le CW_{max}\)); and aSlotTime is a parameter that depends on the physical layer used. CW is computed starting from \(CW_{min}\). If the backoff procedure is invoked because of a collision event or a corrupt transmission, the CW value is updated as \( \mathrm{CW}=2\times (\mathrm{CW}+1)-1\). If, after repeated updates, CW reaches the threshold value \(CW_{max}\), the contention window is maintained unchanged until a successful transmission occurs. After a successful transmission, CW is reset to \(CW_{min}\).
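A minimal sketch of the contention window update and the backoff draw follows. The numeric values of `CW_MIN`, `CW_MAX` and `A_SLOT_TIME_US` are assumptions chosen for illustration (they depend on the PHY), and the backoff time is computed as the product of rand(), CW and aSlotTime, which is our reading of the definitions in the text. Note that the standard itself draws an integer number of slots.

```python
import random

# Assumed, PHY-dependent values used only for illustration.
CW_MIN = 15
CW_MAX = 1023
A_SLOT_TIME_US = 9  # slot time in microseconds


def next_cw(cw: int, success: bool) -> int:
    """Reset CW after a success; otherwise CW = 2*(CW+1)-1, capped at CW_MAX."""
    if success:
        return CW_MIN
    return min(2 * (cw + 1) - 1, CW_MAX)


def backoff_time_us(cw: int, rng=random) -> float:
    """Backoff time as rand() * CW * aSlotTime, with rand() uniform on [0, 1)."""
    return rng.random() * cw * A_SLOT_TIME_US


if __name__ == "__main__":
    cw = CW_MIN
    for attempt in range(8):            # eight consecutive failures
        print(attempt, cw, round(backoff_time_us(cw), 1))
        cw = next_cw(cw, success=False)
```

Starting from 15, consecutive failures yield the sequence 15, 31, 63, 127, 255, 511, 1023, after which the window stays at its maximum until a success resets it.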

As long as no activity is detected in the channel, a backoff counter, initially set to the backoff time, is decremented on an aSlotTime by aSlotTime basis. Whenever activity is detected, the backoff counter is frozen, and it is reactivated once the channel has remained idle during an interval of time longer than DIFS. The station will be able to begin transmission as soon as the backoff counter reaches zero (Fig. 3). In case of an unsuccessful transmission, for example due to the presence of noise on the channel, the station can attempt the packet transmission once again. In this case, the station will exponentially increase its CW. The number of transmission attempts per packet is limited. In order for the source station to know whether a transmission has been successful, the destination station should respond with an ACK within an interval of time equal to the short interframe space (\(SIFS < DIFS\)) from the time instant the data frame has been received.

The packet acknowledgment mechanism may be used to adapt the transmission rate. Several flow adaptation schemes increase or decrease the channel transmission rate based on the number of ACKs successfully received or on the number of ACK packet losses, respectively. However, in an IEEE 802.11 WLAN, the sender is unable to identify whether the loss of an ACK is due to a channel access conflict (collision) or to the channel conditions. Therefore, most schemes tend to reduce the channel transmission rate even in the case of a collision. In that case, such an action has a negative impact on the network operation: at a lower transmission rate each frame occupies the channel for longer, which results in a higher network load and, in turn, in an increase in the number of collisions.

To address the well-known hidden node problem, the IEEE 802.11 DCF basic access method supports the Request-to-Send/Clear-to-Send (RTS/CTS) access control mode. When the RTS/CTS access mode is used, the sender station reserves the wireless channel after a successful exchange of RTS/CTS frames. In this case, the sender initially emits an RTS packet. Upon correctly receiving the RTS, the destination node responds with a CTS packet. As soon as the sender receives the CTS, it initiates the transmission of the DATA packet. If the destination node receives the DATA packet correctly, it sends back an ACK to the sender node. During the exchange of RTS/CTS, all the nodes within the coverage range of the sender and/or the receiver should refrain from transmitting for a period of time specified in the duration field of the RTS and CTS packets. The duration fields in RTS and CTS should be set long enough for the source and destination nodes to complete their communication within the specified period. The deferral periods are managed by a data structure called the network allocation vector (NAV). If the source node does not get a response to its RTS or DATA packet, it enters an exponential backoff mode. According to the 802.11 standard [8], the decision to use the RTS frame transmission is made solely at the transmitter side. That is, the RTS frame is used when the size of the pending data frame is equal to or larger than the RTS threshold value. However, in most commercial IEEE 802.11-compliant devices operating in infrastructure-based WLANs with access points (APs), the RTS threshold is set to the largest value specified by the standard, i.e., 2,347 octets, which basically disables the RTS/CTS exchange. Accordingly, the RTS and CTS frames are rarely used in real IEEE 802.11 WLAN setups. The RTS/CTS access mode can also be used to differentiate between packets that have been corrupted and packets that have been involved in a collision. Whenever the RTS packet gets corrupted, it is very likely that it has been involved in a collision, since these packets are very small and are usually sent at the basic transmission rate. In contrast, the arrival of a corrupted data packet is mainly due to impairments introduced by the channel conditions. In this latter case, since the two communicating nodes have reserved the channel by means of the RTS/CTS exchange, no other station should transmit a packet during the NAV duration.
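The two rules described in this paragraph, the transmitter-side RTS threshold test and the use of RTS/CTS to attribute a loss to a collision or to the channel, can be sketched as follows. The function names and the string labels are hypothetical; only the 2,347-octet threshold and the decision logic come from the text.

```python
RTS_THRESHOLD_MAX = 2347  # octets; largest value mentioned in the text


def uses_rts(frame_size: int, rts_threshold: int = RTS_THRESHOLD_MAX) -> bool:
    """RTS is used when the pending frame is at least as large as the threshold."""
    return frame_size >= rts_threshold


def classify_loss(rts_failed: bool, data_failed: bool) -> str:
    """Attribute a failure when RTS/CTS is in use (heuristic from the text).

    A lost RTS most likely collided (short frame, basic rate); a lost DATA
    frame after a successful RTS/CTS exchange points to channel impairments.
    """
    if rts_failed:
        return "collision"
    if data_failed:
        return "channel"
    return "none"


if __name__ == "__main__":
    print(uses_rts(1024))                      # default threshold: no RTS
    print(uses_rts(1024, rts_threshold=500))   # lower threshold: RTS used
    print(classify_loss(rts_failed=False, data_failed=True))
```

With the default threshold, a 1,024-byte frame never triggers RTS/CTS, which mirrors the observation that the exchange is effectively disabled in most deployments.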

Even though DCF is a simple and effective mechanism, it can neither support QoS nor guarantee to meet the requirements of multimedia applications. That is to say, DCF does not include differentiation mechanisms enabling it to guarantee bandwidth, packet delay, packet loss rate and/or jitter bounds for high priority stations or multimedia flows. DCF has been basically designed for providing a best-effort service.

2.2 Enhanced distributed channel access

The IEEE 802.11e standard [12] aims to specify the mechanisms enabling the provisioning of QoS guarantees in IEEE 802.11 WLANs. In the IEEE 802.11e standard, a distinction is made between those stations not requiring QoS support, known as nQSTA, and those requiring it, QSTA. In order to support both IntServ and DiffServ QoS approaches in an IEEE 802.11 WLAN, a third coordination function is added: the hybrid coordination function (HCF). The use of this new coordination function is mandatory for the QSTAs. HCF incorporates two new access mechanisms: the contention-based enhanced distributed channel access (EDCA), and the HCF controlled channel access (HCCA).

One main feature of HCF is the definition of four access category (AC) queues and eight traffic stream (TS) queues at the MAC layer. When a frame arrives at the MAC layer, it is tagged with a traffic priority identifier (TID) according to its QoS requirements, which can take values from 0 to 15. The frames with TID values from 0 to 7 are mapped into the four AC queues using the EDCA access rules. The frames with TID values from 8 to 15 are mapped into the eight TS queues using the HCF controlled channel access rules. The TS queues provide a strict parameterized QoS control while the AC queues enable the provisioning of multiple priorities. Another main feature of the HCF is the concept of transmission opportunity (TXOP), which defines the transmission holding time for each station. As with PCF in the original standard, the centralized function (HCCA) is not implemented in commercial cards.

EDCA incorporates various contention-based prioritized QoS support mechanisms. In EDCA, two main methods are introduced to support service differentiation. The first one is to use different IFS values for different ACs. The second method consists in allocating different CW sizes to the different ACs. Each AC forms an independent EDCA entity with its own queue and its own access mechanism based on a DCF-like mechanism with its own arbitration inter-frame space defined by \(AIFS[AC] = SIFS + AIFSN[AC] \times aSlotTime\) and its own CW[AC] (\(CW_{min} \le CW \le CW_{max}\)) (see Fig. 4), where AIFSN[AC] is the AIFS number. If an internal collision arises among the queues within the same QSTA, the one having higher priority obtains the right to transmit. The queue getting the right to access the channel obtains a transmission opportunity (TXOP). The winning queue can then transmit during a time interval whose length is given by TXOPLimit.
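As a worked example of the AIFS formula, the sketch below evaluates \(AIFS[AC] = SIFS + AIFSN[AC] \times aSlotTime\) for the four access categories. The timing constants (a 10 µs SIFS and a 9 µs slot) and the AIFSN values are assumptions chosen for illustration; they are commonly quoted defaults, not values taken from this paper's Table 2.

```python
# Assumed timing parameters, in microseconds (illustrative only).
SIFS_US = 10
A_SLOT_TIME_US = 9

# Assumed AIFSN per access category (commonly quoted EDCA defaults).
AIFSN = {"AC_VO": 2, "AC_VI": 2, "AC_BE": 3, "AC_BK": 7}


def aifs_us(ac: str) -> int:
    """AIFS[AC] = SIFS + AIFSN[AC] * aSlotTime."""
    return SIFS_US + AIFSN[ac] * A_SLOT_TIME_US


if __name__ == "__main__":
    for ac in AIFSN:
        print(ac, aifs_us(ac), "us")
```

Under these assumptions voice and video wait 28 µs, best effort 37 µs and background 73 µs, so higher-priority categories start their backoff countdown earlier after every busy period.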

A closer look at EDCA and DCF uncovers an important issue: EDCA differs slightly from DCF in the way the backoff counter is managed. In EDCA, the backoff counter is also decremented at every idle slot time and frozen during channel activity periods, but it resumes one slot time before the AIFS expiration. This means that when the AIFS timer elapses, the backoff counter will already have been decremented by one unit. Moreover, since a single MAC operation per slot is permitted (backoff decrement or packet transmission [12]), when the counter decrements to 0, the station cannot transmit immediately, but has to wait for a further backoff slot if the medium is idle, or a further AIFS expiration if the medium is busy. This apparently minor difference has important consequences for the performance of EDCA access categories, especially when they compete with legacy DCF stations. Because of this, the performance of EDCA with AIFSN = 3 is similar to the performance of DCF with AIFSN = 2.

EDCA has been designed to support QoS for multimedia applications while maintaining compatibility with DCF. This compatibility with DCF limits the possible values of AIFSN, \(CW_{min}\) and \(CW_{max}\). Thus the IEEE recommended the use of the values shown in Table 2. From the table, it is clear that EDCA recommends the use of a very small window size to prioritize the access of real-time applications (voice and video). As shown in several works reported in the literature [4, 19, 25, 26], this fact greatly increases the probability of collision when the network load increases. Under these scenarios, the flow adaptation schemes based on the reception of the ACK packet perform worse, because the growing collision rate quickly drives their transmission rate down.

3 Related work

The traditional rate adaptation mechanisms in WLANs can be based on the ACK reception or on the signal to noise ratio (SNR) value. The first ACK-based algorithm reported in the literature is ARF [14]. As already stated, ARF is the most popular data transmission rate adaptation algorithm used in IEEE 802.11 WLANs. This algorithm adapts the transmission rate based on the presence or absence of ACKs. ARF uses two fixed thresholds, referred to from now on as \(C\) and \(W\). The first one denotes the number of consecutive ACKs that a station must receive before increasing the transmission data rate. According to the ARF specifications, \(C\) should be set to 10. The second one, \(W\), is fixed to 2 and refers to the number of consecutive failed transmission attempts triggering the decrease of the data transmission rate. ARF further specifies that if the transmission right after a rate increase fails, the transmission rate should be automatically decreased.
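The behaviour described above can be sketched as a small state machine. This is only an illustration of the counters as summarized in this section (\(C = 10\), \(W = 2\), immediate fallback when the first transmission after an increase fails); the class name `Arf` and its interface are hypothetical, and details of the original ARF proposal not mentioned here are omitted.

```python
class Arf:
    """Counter-based sketch of ARF as summarized in the text."""

    def __init__(self, rates, c=10, w=2):
        self.rates = list(rates)   # available rates, lowest first
        self.c, self.w = c, w      # success and failure thresholds
        self.idx = 0               # index of the current rate
        self.successes = 0         # consecutive ACKs received
        self.failures = 0          # consecutive missing ACKs
        self.probing = False       # True for the first frame after an increase

    @property
    def rate(self):
        return self.rates[self.idx]

    def on_result(self, acked: bool) -> None:
        if acked:
            self.probing = False
            self.failures = 0
            self.successes += 1
            if self.successes >= self.c and self.idx < len(self.rates) - 1:
                self.idx += 1          # C consecutive ACKs: try a higher rate
                self.successes = 0
                self.probing = True
        else:
            self.successes = 0
            self.failures += 1
            if self.probing or self.failures >= self.w:
                self.idx = max(self.idx - 1, 0)   # fall back one rate
                self.failures = 0
            self.probing = False


if __name__ == "__main__":
    arf = Arf([1, 2, 5.5, 11])
    for _ in range(10):
        arf.on_result(True)
    print(arf.rate)        # rate after ten consecutive ACKs
    arf.on_result(False)   # the probing frame fails
    print(arf.rate)
```

Note that the sketch lowers the rate on any missing ACK pattern, whether it was caused by the channel or by a collision, which is exactly the limitation discussed in the remainder of this section.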

Despite being quite popular, ARF does not perform well when the channel behavior changes quickly or when it is quite stable. To solve the latter problem, Lacage et al. propose the adaptive ARF (AARF) mechanism [18]. In AARF, the authors have chosen to adapt the \(C\) threshold by using a binary exponential backoff (BEB) scheme. When the transmission of the probing packet (after increasing the transmission rate) fails, AARF switches immediately back to the previous lower rate (as in ARF), but it also doubles the number of consecutive successful transmissions (with a maximum bound set to 50) required to switch to a higher rate. This threshold is reset to its initial value of 10 when the rate is decreased because of two consecutive failed transmissions. The effect of this adaptation mechanism is to increase the period between successive failed attempts to use a higher rate. Fewer failed transmissions and retransmissions improve the overall throughput.
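The AARF threshold update can be sketched in a few lines. The event labels `"probe_failed"` and `"two_failures"` are hypothetical names for the two situations described above; the constants 10 and 50 are the ones given in the text.

```python
C_INIT = 10   # initial success threshold
C_MAX = 50    # upper bound on the success threshold


def aarf_next_threshold(c: int, event: str) -> int:
    """Return the success threshold after a rate-decrease event.

    'probe_failed'  : the first frame after a rate increase was lost,
                      so the threshold is doubled (bounded by C_MAX).
    'two_failures'  : the rate was lowered after two consecutive losses,
                      so the threshold is reset to C_INIT.
    """
    if event == "probe_failed":
        return min(2 * c, C_MAX)
    if event == "two_failures":
        return C_INIT
    return c


if __name__ == "__main__":
    c = C_INIT
    for _ in range(4):
        c = aarf_next_threshold(c, "probe_failed")
        print(c)
```

Repeated probe failures take the threshold through 20, 40 and then 50, where it saturates, so a station on a stable channel probes the higher rate less and less often.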

The main drawback of the ARF and AARF mechanisms is that they operate under the assumption that packet losses are due to channel errors. However, the wireless channel is a shared medium and channel access conflicts are also a major source of packet losses. Consequently, the ARF and AARF mechanisms decrease the transmission rate without discriminating between losses due to channel impairments and losses due to packet collisions. This issue has been addressed by works recently reported in the literature [6, 7, 15, 27]. Kim et al. proposed the collision-aware rate adaptation (CARA) algorithm [15]. In this mechanism, when a packet is not acknowledged, an RTS/CTS exchange is used before its retransmission to avoid collisions. If after this exchange the packet is successfully transmitted, CARA does not decrease the transmission rate. Otherwise, it decreases the channel data rate. Nevertheless, the RTS/CTS mechanism introduces overhead, which has prevented its use in WLANs.

The second approach to adapting the transmission rate is based on the SNR value perceived by the receivers. The receiver-based auto rate (RBAR) [7] and the closed-loop adaptive rate allocation (CLARA) [6] are good examples of SNR-based algorithms. In RBAR [7] an RTS/CTS handshake must be invoked before each data delivery. After the reception of the RTS, the receiver responds with channel state information and the target SNR for optimal mode selection. This information is sent back to the sender by embedding it into the header of the CTS frame. The rate selection mechanism of RBAR is a major improvement over ARF since it supports receiver feedback during initial channel setup. However, its use comes at a price: the MAC headers of the RTS and CTS packets are no longer compatible with the 802.11 specifications. Due to the changes in the MAC headers, RBAR can no longer interwork with 802.11-compliant devices. To solve this limitation, Hoffmann et al. proposed the CLARA algorithm [6]. In CLARA, the MAC headers of RTS and CTS are not modified. CLARA adapts the transmission rate using the SNR of the CTS, assuming a symmetric link. However, the main drawback of this scheme is that it does not take into account that IEEE 802.11 links are in general asymmetric [17, 22]. Moreover, CLARA continues to experience a compatibility issue: not all commercial network cards are able to sense the SNR level of the received packet.

Due to the limitations of the traditional rate adaptation algorithms, other recent works reported in the literature rely on estimating the network load. Such schemes change the data transmission rate only if there is enough evidence that packet losses are due to the channel operating conditions. The snoopy rate adaptation (SRA) [3] estimates the collision probability using the channel activity MAC layer information available in the device driver. In particular, the channel utilization and the number of active senders in a time window are the factors used to determine it. This collision probability is used to differentiate between channel losses and collision losses. However, the algorithm is very complex and the authors only report results for a network of up to 8 stations.

The wireless congestion optimized fallback (WOOF) [1] algorithm characterizes the congestion in wireless networks and incorporates this information into the design of a congestion-aware rate adaptation scheme. The WOOF algorithm comprises two steps. First, WOOF identifies the level of congestion in real time. This step consists in passively measuring the channel busy time, i.e., the fraction of the monitoring time during which the medium is busy. Second, WOOF employs the channel busy time congestion metric to adapt the transmission rate. The use of a congestion metric enables the rate adaptation algorithm to differentiate between packet losses due to congestion and those due to poor link quality. However, WOOF exhibits the same limitations as SRA. Moreover, the stability of WOOF remains to be demonstrated.

Wong et al. proposed the robust rate adaptation algorithm (RRAA) [27]. RRAA uses the packet loss rate to decide if the transmission rate should be modified. With the aim of properly identifying the source of errors, RRAA includes the use of the RTS/CTS collision avoidance mechanism. Its use of RTS/CTS is very similar to that of the CARA algorithm [15], and it shares the same drawback: it increases the overhead.

Finally, Cardoso et al. propose yet another rate algorithm (YARA) [2], which infers the collision probability in order to choose the transmission rate minimizing the collision probability. To infer the collision probability, YARA uses different time measurements to decide if the next transmission will use a higher transmission rate. Table 3 summarizes the main features of the various aforementioned rate adaptation algorithms.

4 Proposed mechanism

As explained before, the ARF mechanism does not perform well when the number of active stations increases, because erroneous packets due to collisions are treated as channel errors and the transmission rate is decreased. With the aim of being able to discriminate the source of packet losses, we propose a novel rate adaptation mechanism sensitive to the network load, named Dyn-ARF. Like the ARF mechanism, our algorithm uses two thresholds to decide whether the transmission rate should be changed. The novelty of Dyn-ARF is that the \(C\) and \(W\) values, referred to below as \(C_{dyn}\) and \(W_{dyn}\), are dynamically calculated based on the network load conditions. In order to estimate the network conditions we take into account the traffic pattern during one second, i.e., \(C_{dyn}\) and \(W_{dyn}\) are recalculated every second.

The traffic pattern is characterized using the parameters listed in Table 4. \(P\) represents the total number of packets detected by the station. Let the number of stations transmitting during the last second be denoted as \(N_{eff}\), and let the total number of packets that are retransmissions be denoted as \(R\). \(N_{eff}\) and \(R\) can be easily obtained by reading the header parameter of the MSDU packets. Specifically, the source address and the retry bit fields have to be checked.
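The three counters can be gathered with a few lines of code. The sketch below assumes that the frames overheard during the last one-second window are available as simple records holding the source address and the retry bit; the `Frame` type and the function name are hypothetical and are not part of the paper.

```python
from typing import Iterable, NamedTuple, Tuple


class Frame(NamedTuple):
    """Hypothetical record for an overheard frame."""
    source: str    # source MAC address
    retry: bool    # value of the retry bit


def traffic_pattern(frames: Iterable[Frame]) -> Tuple[int, int, int]:
    """Return (P, N_eff, R) for the frames seen in one observation window."""
    frames = list(frames)
    p = len(frames)                              # total packets detected
    n_eff = len({f.source for f in frames})      # distinct transmitting stations
    r = sum(1 for f in frames if f.retry)        # packets flagged as retransmissions
    return p, n_eff, r


if __name__ == "__main__":
    window = [
        Frame("00:11:22:33:44:01", False),
        Frame("00:11:22:33:44:02", True),
        Frame("00:11:22:33:44:01", True),
        Frame("00:11:22:33:44:03", False),
    ]
    print(traffic_pattern(window))
```

Because only header fields are inspected, the counters include frames that are not addressed to the observing station.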

As shown in [24], the number of collisions increases as the number of active stations does. Under such conditions, there is a higher probability of consecutive packet collisions. Consequently, it is more likely that the ARF reduces the transmission rate due to packet collisions. In order to reduce the number of times that this event occurs, we propose to set the value of \(W_{dyn}\) as a function of the number of active stations. To better respond to the varying channel conditions, we limit the value of \(W_{dyn}\) to 5. In this way, the station gets the chance to transmit the data packet using a lower transmission rate at least two times before being discarded (the default retransmission limit in the IEEE 802.11 standard is seven attempts).

The \(W_{dyn}\) value is dynamically evaluated as follows. Initially, \(W_{dyn}\) is set to two, which is the value used by the ARF algorithm. Thereafter, at regular intervals of 1 s, \(W_{dyn}\) is recalculated taking into account the number of stations from which the target station has received a packet (regardless of whether it was addressed to it or not). To respect the minimum and maximum values for \(W_{dyn}\) stated above, a correction factor, called \(n\), is used to modify the \(N_{eff}\) value according to the established limits. This correction factor, whose value should be between 0 and 1, represents the contribution of each active station to the increase of the \(W_{dyn}\) parameter. We have assumed that all active stations contribute in the same way to the \(W_{dyn}\) value. Thus, the value for \(W_{dyn}\) is the largest integer less than or equal to \(W + N_{eff} \times n\), i.e., it is determined by:

\[ W_{dyn} = \left\lfloor W + N_{eff} \times n \right\rfloor \]
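A minimal sketch of this computation follows. It takes the floor of \(W + N_{eff} \times n\) as stated in the text and, as an assumption about how the stated limit is enforced, clamps the result to the maximum of 5 mentioned earlier. The default \(n = 1/6\) is the value reported in the evaluation section; exact fractions are used to avoid floating-point rounding at integer boundaries.

```python
import math
from fractions import Fraction


def w_dyn(n_eff: int, n: Fraction = Fraction(1, 6), w: int = 2, w_max: int = 5) -> int:
    """Failure threshold: floor(W + N_eff * n), clamped to w_max (assumed clamp)."""
    return min(math.floor(w + n_eff * n), w_max)


if __name__ == "__main__":
    for stations in (0, 5, 6, 12, 18, 30):
        print(stations, w_dyn(stations))
```

With these values the threshold grows by one for every six active stations and reaches its limit at 18 stations.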

We use a different approach to decide the number of consecutive ACK packets necessary before increasing the transmission rate, i.e., the value of \(C_{dyn}\). While \(W_{dyn}\) is exclusively affected by \(N_{eff}\), the \(C_{dyn}\) value also takes into account the ratio of retransmitted packets, which is computed as \(R_{ratio} = R/P\). To simplify the algorithm we have assumed that almost all retransmitted packets are due to collisions, i.e., \(R_{ratio}\) approximates the collision probability. This assumption is reasonable since all stations implement the rate adaptation algorithm and hence there should be a very small number of losses due to channel conditions. Nevertheless, we have designed the mechanism with flexibility using a threshold, called \(T\). This threshold represents the error rate due to channel impairments.

Using \(R_{ratio}\) we are able to determine the probability of \(m\) consecutive packet collisions, which is given by \((R_{ratio})^{m}\). The value of \(m\) is limited by the threshold \(T\) according to \((R_{ratio})^{m} \le T\), i.e., the probability of \(m\) consecutive collisions is limited by the probability of a channel error, which is defined by \(T\). Thus the value of \(m\) is the smallest integer that is greater than or equal to \(\log(T) / \log(R_{ratio})\) and is defined as

\[ m = \left\lceil \frac{\log(T)}{\log(R_{ratio})} \right\rceil \]
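The bound on \(m\) can be checked numerically with the sketch below. It computes the smallest integer \(m\) with \((R_{ratio})^{m} \le T\) as the ceiling of \(\log(T)/\log(R_{ratio})\). The default \(T = 0.1\) is the value reported in the evaluation section; rejecting \(R_{ratio}\) values outside the open interval (0, 1) is our own assumption, since the text does not say how those edge cases are handled.

```python
import math


def consecutive_collision_bound(r_ratio: float, t: float = 0.1) -> int:
    """Smallest integer m such that r_ratio**m <= t."""
    if not 0.0 < r_ratio < 1.0:
        # Assumed handling: the logarithm ratio is undefined at 0 and 1.
        raise ValueError("r_ratio must lie strictly between 0 and 1")
    return math.ceil(math.log(t) / math.log(r_ratio))


if __name__ == "__main__":
    for r_ratio in (0.05, 0.3, 0.5):
        m = consecutive_collision_bound(r_ratio)
        print(r_ratio, m, r_ratio ** m)
```

For example, with half of the observed packets being retransmissions, four consecutive collisions have probability 0.0625, the first value below the 0.1 threshold.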

Once having set the numerical value of \(m\), the mechanism follows an approach similar to the one used to calculate \(W_{dyn}\). To determine the value of \(C_{dyn}\) we modify the default value \(C\) as follows:

Simply stated, Eqs. 1 and 2 provide us with the information needed to better decide whether the transmission rate should be changed. These two parameters are updated taking into account the network load. As will be shown in our results, changing the values of \(W_{dyn}\) and \(C_{dyn}\) proves to be quite effective.

5 Performance evaluation

In this section, we analyse the effectiveness of our proposed scheme. Throughout our study, we have used the OPNET Modeler tool 16.1 [21], which already includes an IEEE 802.11 model. We compare our new proposal with the traditional ARF algorithm. Through an extensive simulation campaign, we have evaluated the performance of the system in terms of four metrics: aggregated throughput, transmission rate, delay and retransmission attempts.

5.1 Scenarios

In our simulations, we model an infrastructure IEEE 802.11g WLAN consisting of an AP and a varying number of wireless stations (STAs). We consider two different scenarios. First, we evaluate a basic service set (BSS) where the wireless channel is assumed ideal, i.e., collisions are the only source of packet errors and losses. Second, we test the same BSS taking into account channel errors.

In both scenarios, we vary the network load, expressed in terms of the number of STAs. The number of STAs is initially set to 2 and then increased in steps of 2 up to a maximum of 30 STAs in the first scenario and 24 in the second one. In all our scenarios, we use uplink flows. The packet size is set to 1,024 bytes (including the RTP/UDP/IP headers). Packet arrivals follow a Poisson process with an average rate of 50 packets/s at every station, which generates about 400 kbps per station.
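The per-station load figure can be checked with a short Python sketch, which also shows one common way to draw Poisson arrivals (exponential inter-arrival times). The function names are hypothetical, and the arrival generator is only an illustration, not the traffic source used in OPNET.

```python
import random


def offered_load_bps(packet_bytes: int, packets_per_s: int) -> int:
    """Offered load in bit/s for fixed-size packets at a given mean rate."""
    return packet_bytes * 8 * packets_per_s


def poisson_arrival_times(rate_per_s: float, duration_s: float,
                          rng: random.Random) -> list:
    """Arrival instants of a Poisson process on [0, duration_s)."""
    times = []
    t = 0.0
    while True:
        # Inter-arrival times of a Poisson process are exponential.
        t += rng.expovariate(rate_per_s)
        if t >= duration_s:
            return times
        times.append(t)


if __name__ == "__main__":
    per_station = offered_load_bps(1024, 50)
    print(per_station)        # 409600 bit/s, i.e. about 400 kbps
    print(30 * per_station)   # 12288000 bit/s offered by 30 STAs
```

At the largest configuration (30 STAs), the aggregate offered load is therefore about 12.3 Mbps.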

In order to evaluate the performance under channel errors, the AP is located at the centre of the BSS and the STAs are mobile terminals randomly placed in the BSS and moving at a constant speed of 3.6 km/h. We also place one STA close to the AP in order to verify whether this station reduces its transmission rate. It should not, since the channel quality between the AP and this station is quite good (see Fig. 5). To test our mechanism, we have set the values of \(n\) and \(T\) to 1/6 and 0.1, respectively (see Notes). In the second scenario, which includes channel errors, the cell size is varied. We use three network sizes expressed in terms of coverage area.

The OPNET Modeler only includes the BER versus SNR curves for each modulation used by IEEE 802.11g and does not differentiate between coding rates. Thus, we have used the BER vs. SNR curves included in the contributed model of [28]. As suggested in [28], we have used the complete set of IEEE 802.11g transmission rates except 9 Mbps.

In order to model the wireless channel, we have used the Ricean model to characterize the propagation of the signal throughout the medium [23]. This model is often described in terms of a parameter k, which is defined as the ratio between the power in the direct path and the power in the other, scattered paths. In this work, we have set the parameter k to 10 to simulate an outdoor environment.
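To make the role of \(k\) concrete, the sketch below draws complex channel gains from a textbook Ricean model with unit mean power. It is not the simulation method of [23] nor the OPNET implementation; the function names are hypothetical, and \(k = 10\) is interpreted here as a linear ratio, which is an assumption.

```python
import math
import random


def ricean_gain(k: float, rng: random.Random) -> complex:
    """One complex channel gain with Ricean factor k and unit mean power."""
    los = math.sqrt(k / (k + 1.0))                 # direct-path amplitude
    sigma = math.sqrt(1.0 / (2.0 * (k + 1.0)))     # per-component scatter std
    return complex(los + rng.gauss(0.0, sigma), rng.gauss(0.0, sigma))


def mean_power(k: float, samples: int, rng: random.Random) -> float:
    """Empirical mean of |h|^2 over a number of independent draws."""
    total = sum(abs(ricean_gain(k, rng)) ** 2 for _ in range(samples))
    return total / samples


if __name__ == "__main__":
    rng = random.Random(0)
    print(mean_power(10.0, 20000, rng))  # close to 1 by construction
```

The direct path carries a fraction \(k/(k+1)\) of the power and the scattered paths carry \(1/(k+1)\), so a larger \(k\) means shallower fades; \(k = 0\) reduces to Rayleigh fading.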

Throughout our study, we have simulated four minutes of operation for each scenario. Our measurements start after a warm-up period of about 3 s, allowing us to collect the statistics under steady-state conditions. Each point in our plots is the average over 30 simulation runs, and the error bars indicate the 95% confidence interval.
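The paper does not state how the confidence intervals were computed. One common choice for 30 independent runs is a Student-t interval, sketched below in Python; the function name is hypothetical and the critical value 2.045 (two-sided 95%, 29 degrees of freedom) is hard-coded for that sample size only.

```python
import math
import statistics

# Two-sided 95% Student-t critical value for 29 degrees of freedom.
T_975_DF29 = 2.045


def mean_and_ci95(samples):
    """Return (mean, half-width of the 95% interval) for exactly 30 runs."""
    if len(samples) != 30:
        raise ValueError("the hard-coded critical value assumes 30 samples")
    mean = statistics.mean(samples)
    half_width = T_975_DF29 * statistics.stdev(samples) / math.sqrt(len(samples))
    return mean, half_width


if __name__ == "__main__":
    runs = [9.0, 11.0] * 15   # made-up per-run values, for illustration only
    print(mean_and_ci95(runs))
```

The error bar for a plotted point would then span the mean minus and plus the returned half-width.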

5.2 Metrics

In our study, we have been interested in assessing the performance in terms of the following metrics: aggregated throughput, transmission rate, delay and retransmission attempts. In the following, we provide the definitions of all the metrics being considered.

1. Aggregated throughput: the total throughput received by the AP from all the STAs.

2. Transmission rate: the average transmission rate of the mobile STAs. We also show the transmission rate of the STA placed close to the AP for the scenarios with channel errors.

3. Delay: the time that a packet waits in the STAs before being sent.

4. Retransmission attempts: the average number of packet retransmissions.
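As an illustration of how these four metrics could be derived from per-packet logs, the sketch below aggregates a list of delivered-packet records. The record layout (`PacketRecord` and its fields) and the function `summarize` are hypothetical and are not part of the OPNET model.

```python
from dataclasses import dataclass


@dataclass
class PacketRecord:
    """One packet delivered to the AP (hypothetical log format)."""
    size_bytes: int
    queue_delay_s: float    # time spent waiting in the STA before being sent
    tx_rate_mbps: float     # PHY rate used for the successful attempt
    retransmissions: int    # attempts beyond the first one


def summarize(records, duration_s: float) -> dict:
    """Compute the four metrics over one measurement window."""
    if not records or duration_s <= 0:
        raise ValueError("need at least one record and a positive duration")
    count = len(records)
    return {
        "aggregated_throughput_bps":
            sum(r.size_bytes for r in records) * 8 / duration_s,
        "mean_tx_rate_mbps": sum(r.tx_rate_mbps for r in records) / count,
        "mean_delay_s": sum(r.queue_delay_s for r in records) / count,
        "mean_retransmissions":
            sum(r.retransmissions for r in records) / count,
    }


if __name__ == "__main__":
    log = [PacketRecord(1024, 0.25, 54.0, 0),
           PacketRecord(1024, 0.75, 48.0, 2)]
    print(summarize(log, duration_s=2.0))
```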

5.3 Results

The evaluation under ideal channel conditions is shown in Fig. 6. The results show how the ARF mechanism fails to adapt the rate properly in the presence of packet collisions. The decrease of the aggregated throughput indicates that the ARF mechanism does not perform well (see Fig. 6a). ARF treats packets involved in collisions as corrupted by the channel conditions and hence decreases the transmission rate. As a result, each packet transmission takes longer. That is to say, the use of ARF results in the saturation of the network due to the slower transmission rate. This in turn increases the delay (see Fig. 6c) and the retransmission rate (see Fig. 6d). On the other hand, our proposed algorithm is able to deliver all traffic correctly regardless of the network load. Unlike the ARF mechanism, our proposal is able to retransmit those packets without decreasing the transmission rate (see Fig. 6b).

Figure 7 shows the performance evaluation of a network with channel errors and a coverage area of 50 m \(\times \) 50 m. Figure 7a shows the aggregated throughput for the ARF mechanism and Dyn-ARF. The simulation results show that ARF penalizes the network performance even in a small network, where the stations are relatively close to the AP and only a few channel errors occur. Packet collisions amplify the impact of these few channel errors, further degrading the network performance. As the network load increases, two consecutive failed deliveries become more likely. Thus, the ARF mechanism will decrease the transmission rate even in the absence of errors due to the channel. Figure 7b shows the average packet delay. The ARF mechanism exhibits a rather rapid increase in the packet delay. The reason is that the transmission rate is decreased due to channel errors and collisions (see Fig. 7c). On the other hand, Dyn-ARF mitigates the influence of the collisions, maintaining a higher transmission rate thanks to the dynamic values \(W_{dyn}\) and \(C_{dyn}\). Thus, a reduced delay is possible.

When the ARF mechanism is used, it is more difficult to successfully deliver ten consecutive data packets and, consequently, to increase the transmission rate. Figure 7d illustrates this: the station placed close to the AP also decreases its transmission rate. On the contrary, when Dyn-ARF is used, the transmission rate is maintained at its maximum level. This indicates that the new scheme is able to effectively adapt the thresholds of consecutive ACKs and unacknowledged packets to deal with the collisions. Consequently, the throughput is maintained at the maximum level even as the number of STAs increases (see Fig. 7a).
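The threshold behaviour discussed here can be sketched as a small counter-based controller in Python. This is a simplified illustration, not the authors' implementation: it omits ARF's timer and probe-packet handling, the class and method names are hypothetical, and the rate table assumes the 802.11g OFDM rates without 9 Mbps.

```python
from dataclasses import dataclass
from typing import Tuple

# Assumed rate table (Mbps): 802.11g OFDM rates except 9 Mbps.
OFDM_RATES_MBPS = (6, 12, 18, 24, 36, 48, 54)


@dataclass
class ThresholdRateController:
    w: int = 2      # consecutive missing ACKs before stepping the rate down
    c: int = 10     # consecutive ACKs before stepping the rate up
    rates: Tuple[int, ...] = OFDM_RATES_MBPS
    index: int = len(OFDM_RATES_MBPS) - 1   # start at the highest rate
    failures: int = 0
    successes: int = 0

    @property
    def rate(self) -> int:
        return self.rates[self.index]

    def on_ack(self) -> None:
        self.failures = 0
        self.successes += 1
        if self.successes >= self.c:
            self.successes = 0
            self.index = min(self.index + 1, len(self.rates) - 1)

    def on_missing_ack(self) -> None:
        self.successes = 0
        self.failures += 1
        if self.failures >= self.w:
            self.failures = 0
            self.index = max(self.index - 1, 0)


if __name__ == "__main__":
    fixed = ThresholdRateController()        # ARF-like defaults
    relaxed = ThresholdRateController(w=7)   # illustrative larger threshold
    for ctrl in (fixed, relaxed):
        ctrl.on_missing_ack()
        ctrl.on_missing_ack()
    print(fixed.rate, relaxed.rate)
```

With the default thresholds, two back-to-back collisions are enough to drop from 54 to 48 Mbps, whereas a controller with a larger failure threshold keeps its rate; a load-aware scheme would update `w` and `c` periodically instead of fixing them.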

When the network coverage area is increased to 100 m \(\times \) 100 m (see Fig. 8), channel errors appear more frequently and the STAs have to use a more robust transmission rate to transmit the data packets. Thus, the performance decreases with respect to the previous case (see Fig. 7). Nevertheless, thanks to the adaptation of the thresholds, our mechanism achieves an acceptable transmission rate, which makes the throughput clearly higher than the one obtained by the ARF mechanism (see Fig. 8c, d). In the case of a network of 150 m \(\times \) 150 m (see Fig. 9), Dyn-ARF also outperforms the ARF mechanism. In this case, the network coverage area is quite large. Thus, the transmission rate is decreased to overcome the channel errors (see Fig. 9c). Nevertheless, thanks to the collision estimation of Dyn-ARF, our scheme is able to achieve a transmission rate that better fits the actual channel conditions and network load. Figure 9d shows that the station close to the AP transmits the data packets using the maximum transmission rate when our mechanism is used. Notice that this STA has good channel quality and its packets are correctly delivered. On the other hand, the ARF mechanism reduces its transmission rate drastically as the number of STAs increases, although the channel quality is good enough to use the maximum transmission rate.

Finally, Fig. 10 shows the number of retransmissions per packet for all the coverage areas. When there are few STAs sending data, the quick reduction of the transmission rate allows ARF to deliver the packets using fewer transmission attempts than Dyn-ARF. Nevertheless, as discussed before, ARF does not properly set the transmission rate according to the channel conditions in the presence of packet collisions. This causes premature saturation, which degrades the network performance (see Figs. 7, 8, 9). Consequently, the number of retransmissions grows faster when ARF is used. This effect is shown in Fig. 10b and c, where our proposal obtains a smaller retransmission rate than ARF in almost all cases. Even in the cases where Dyn-ARF behaves worse in terms of retransmissions, it transmits those packets using a higher transmission rate than the ARF mechanism (see Figs. 7, 8, 9).

In short, the proposed algorithm to dynamically adapt the \(C_{dyn}\) and \(W_{dyn}\) values has proved effective. The aggregated throughput is considerably higher than with the ARF mechanism. The transmission rate is maintained for the station close to the AP, while it is adapted in the case of the mobile STAs to match the channel conditions.

6 Conclusion and future work

We have proposed a new rate adaptation algorithm that dynamically adapts the default values of the ARF mechanism. Our algorithm is able to estimate the network load to avoid reducing the transmission rate when erroneous packets are due to collisions instead of resulting from the underlying channel conditions. The adaptation has proved effective under ideal channel conditions, where the transmission rate is not decreased due to packet collisions, as occurs when the ARF mechanism is used. In the presence of channel impairments, our algorithm increases the aggregated throughput while the number of retransmissions is maintained or decreased with respect to the ones reported by other mechanisms. This means that our algorithm outperforms other mechanisms by properly setting the channel transmission rates.

We use the available information in the received MSDU packets to dynamically calculate the number of consecutive unsuccessful transmission attempts and successful deliveries used to decide if the transmission rate should be modified. Our algorithm is fully compatible with the IEEE 802.11 standards. We estimate the effective number of stations in the network and the percentage of retransmitted packets. These values are used to determine the threshold values.

To better estimate the percentage of collisions in a heavily loaded network covering a large area, we plan to conduct an analysis under saturation conditions. Having shown that our mechanism outperforms the ARF mechanism, we will undertake the optimization of the two correction factors used to estimate the collision probability. In this way, we should be able to estimate the contribution of each active station to the network load in order to generalize our study to the more realistic case in which the stations exhibit different traffic rates. We will also look for better ways of estimating the percentage of erroneous packets due to the channel conditions. Thus, our future work includes an evaluation of these factors. A better estimation will allow us to identify collisions even in error-prone or very large networks.

Notes

These values have been chosen after testing several values in different simulation tests. Further studies will make it possible to calculate these values dynamically, as is done for \(C_{dyn}\) and \(W_{dyn}\).

References

Acharya, P. A. K., Sharma, A., Belding, E. M., Almeroth, K. C., & Papagiannaki, K. (2008). Congestion-aware rate adaptation in wireless networks: A measurement-driven approach. In IEEE communications society conference on sensor, mesh and ad hoc communications and networks (SECON), (pp. 1–9).

Cardoso, K., & de Rezende, J. (2012). Increasing throughput in dense 802.11 networks by automatic rate adaptation improvement. Wireless Networks, 8(1), 95–112.

Ha, J., Lee, K., Kim, H., & Kang, I. (2008). A snooping rate adaptation algorithm for IEEE 802.11 WLANs. In International symposium on wireless pervasive computing (ISWPC), (pp. 606–609).

Hamidian, A., & Körner, U. (2008). Extending EDCA with distributed resource reservation for QoS guarantees. Telecommunication Systems, 39(3–4), 187–194.

Heiskala, J., & Terry, J. (2001). OFDM wireless LANs: A theoretical and practical guide. Indianapolis: SAMS.

Hoffmann, C., Manshaei, M. H., & Turletti, T. (2005). CLARA: Closed-loop adaptive rate allocation for IEEE wireless LANs. In Proceedings of the international conference on wireless networks, communications and mobile computing (WIRELESSCOM’05), (1, pp. 668–673).

Holland, G., Vaidya, N. H., & Bahl, P. (2001). A rate-adaptive MAC protocol for multi-hop wireless networks. In Proceedings of ACM MOBICOM’01, (pp. 236–251). Rome, Italy.

IEEE standard for wireless LAN medium access control (MAC) and physical layer (PHY) specifications. March 2012, Std 802.11-2012.

IEEE 802.11 WG. IEEE standard for wireless LAN medium access control (MAC) and physical layer (PHY) specifications. Std 802.11-1999.

IEEE 802.11a WG. IEEE 802.11a: Wireless LAN medium access control (MAC) and physical layer (PHY) specifications, high speed physical layer in the 5 GHz band (1999).

IEEE 802.11b WG. IEEE 802.11b: Wireless LAN medium access control (MAC) and physical layer (PHY) specifications, higher speed PHY extension in the 2.4 GHz band (1999).

IEEE 802.11e WG. IEEE 802.11e: Wireless LAN medium access control (MAC) and physical layer (PHY) specifications: Amendment 8: Medium access control (MAC) quality of service enhancements (2005).

IEEE 802.11g WG. IEEE 802.11g: Wireless LAN medium access control (MAC) and physical layer (PHY) specifications, further higher data rate extension in the 2.4 GHz band (2003).

Kamerman, A., & Monteban, L. (1997). WaveLAN(C)-II: A high-performance wireless LAN for the unlicensed band. Bell Labs Technical Journal, 2(3), 118–133.

Kim, J., Kim, S., Choi, S., & Qiao, D. (2006). CARA: Collision-aware rate adaptation for IEEE 802.11 WLANs. In 25th IEEE international conference on computer communications. Proceedings (INFOCOM 2006), (pp. 1–11).

Krzysztof, G., Aleksander, K., Jozef, W., & Krzysztof, N. (2011). Testbed analysis of video and VoIP transmission performance in IEEE 802.11 b/g/n networks. Telecommunication Systems, 48(3–4), 247–260.

Kurth, M., Zubow, A., & Redlich, J. P. (2006). Multi-channel link-level measurements in 802.11 mesh networks. In International conference on wireless communications and mobile computing (IWCMC), (pp. 937–944).

Lacage, M., Manshaei, M.H., & Turletti, T. (2004). IEEE 802.11 rate adaptation: A practical approach. In Proceedings of the ACM international symposium on modeling, analysis, and simulation of wireless and mobile systems (MSWiM’04), (pp 126–134).

Martorell, G., Riera-Palou, F., & Femenias, G. (2013). Modeling fast link adaptation-based 802.11n distributed coordination function. Telecommunication Systems, 56, 215. doi:10.1007/s11235-013-9831-x.

Ni, Q. (2005). Performance analysis and enhancements for IEEE 802.11e wireless networks. IEEE Network, 19(4), 21–26.

Opnet Technologies Inc. OPNET modeler 16.5 (c)1987–2012. http://www.opnet.com

del Prado Pavon, J., & Choi, S. (2003). Link adaptation strategy for IEEE 802.11 WLAN via received signal strength measurement. In IEEE international conference on communications (ICC), (2, pp. 1108–1113).

Punnoose, R. J., Nikitin, P. V., & Stancil, D. (2000). Efficient simulation of ricean fading within a packet simulator. In 52nd vehicular technology conference, IEEE-VTS Fall VTC 2000. 2, (pp. 764–767).

Santos, M. A., Villalón, J., Ramirez-Mireles, F., Orozco-Barbosa, L., & Delicado, J. (2011). A novel multicast collision prevention mechanism for IEEE 802.11. IEEE Communications Letters, 15(11), 1190–1192.

Villalón, J., Cuenca, P., & Orozco-Barbosa, L. (2007). On the capabilities of IEEE 802.11e for multimedia communications over heterogeneous 802.11/802.11e WLANs. Telecommunication Systems, 36(1–3), 27–38.

Villalón, J., Micó, F., Cuenca, P., & Orozco-Barbosa, L. (2010). Multiservice unicast/multicast communications over IEEE 802.11e networks. Telecommunication Systems, 43(1–2), 59–72.

Wong, S. H. Y., Yang, H., Lu, S., & Bharghavan, V. (2006). Robust rate adaptation for 802.11 wireless networks. In Proceedings of MobiCom 2006, (pp. 146–157). Los Angeles, California, USA.

Xi, W., Munro, A., & Barton, M. (2008). Link adaptation algorithm for the IEEE 802.11n MIMO system. In NETWORKING 2008 ad hoc and sensor networks. In Wireless networks, next generation internet, (4982, pp. 780–791).

Acknowledgments

This work was supported by the Spanish MICINN, Consolider Programme and Plan E funds, as well as European Commission FEDER funds, under Grants CSD2006-00046, TIN2009-14475-C04-03 and TIN2012-38341-C04-04.

About this article

Cite this article

Santos, M.A., Villalón, J.M. & Orozco-Barbosa, L. Dyn-ARF: a rate adaptation mechanism sensitive to the network load over 802.11 WLANs. Telecommun Syst 61, 5–19 (2016). https://doi.org/10.1007/s11235-014-9949-5

DOI: https://doi.org/10.1007/s11235-014-9949-5