Every day one comes across somebody who says that of course his view may not be the right one. Of course his view must be the right one, or it is not his view.

- G. K. Chesterton, Orthodoxy

Abstract

I argue that believing that p implies having a credence of 1 in p. This is true because believing that p involves representing p as being the case; representing p as being the case involves not allowing for the possibility of not-p; and having a credence greater than 0 in not-p involves regarding not-p as a possibility.


1 Introduction

People have full beliefs, and they have credences or degrees of belief. Full beliefs—a.k.a. beliefs—are all-or-nothing. One either believes in God or one doesn’t, in which case one is an atheist or an agnostic. But credences come in degrees. We regard various possibilities as more or less likely. Say I believe a proposition p. What follows, if anything, about what my credence in p is? In this paper I’ll argue:

- (Certainty) If a subject S believes a proposition p, then S’s credence in p is 1.

(Certainty) is an unpopular thesis amongst contemporary epistemologists, although there are at least some who defend it.Footnote 1, Footnote 2

I’ll proceed as follows. In the next section (Sect. 2) I’ll discuss some initial evidence that (Certainty) is true. In Sect. 3 I offer a couple of explanations as to why (Certainty) should be true, thus providing new reasons to accept it. In Sect. 4 I respond to three objections to (Certainty).

But before I get started, let me state crucial assumptions I’ll be making about what our beliefs are. I’ll be assuming that one expresses one’s beliefs through sincere unqualified assertions (Frege 1918). One asserts p without qualification when one simply asserts p—not ‘p is very likely’Footnote 3 or ‘p is a serious possibility’ or whatever—one just asserts p without qualifying one’s assertion in any way: one says simply ‘p’. Sometimes we assert sentences we don’t believe, of course. We lie. We pretend. We assert sentences that make presuppositions we don’t believe, speaking as if we do believe them for the purposes of the conversation (Stalnaker 1978). But there are especially serious contexts, such as giving testimony in a courtroom, where a strong form of sincerity is required, sincerity that requires you only to speak what you believe, and where you must believe all the presuppositions of your assertions. This is the kind of sincerity I mean when I speak of a sincere assertion. It’s the notion of sincerity at play in disquotational principles of belief such as the following (taken from Kripke 1979):

- If a normal English speaker, on reflection, sincerely assents to ‘p’, then she believes that p [assuming ‘p’ contains no context-dependent terms].

I accept the above disquotation principle and I think that we can get a good grip on what we believe by thinking about propositions we sincerely assent to on reflection without qualification. Sincerely assenting to a sentence or proposition is also connected with being willing to assume it in reasoning. If on reflection I assume p without qualification when (silently) reasoning by myself, then (all else equal) I believe p. In short, we can get a grip on what we believe by thinking about what on reflection we assent to, where that can be cashed out in terms of what we are able to sincerely assert and what on reflection we assume in reasoning, without qualification.

I find the above claims about belief to be very plausible, and will be assuming they’re true henceforth. For any readers who doubt that the ability to sincerely assert or assume something in reasoning is a sufficient condition for belief, let us agree to disagree! Such readers will at least admit that there’s a subset of our beliefs we can sincerely assert and assume in reasoning. Let such readers pretend this paper is only about our beliefs in that subset.

2 Some evidence for (Certainty)

Say I regard p as probably true in the sense that my credence in p is greater than 0.5. I might express my credence in p by thinking or asserting the sentence ‘Probably p’.Footnote 4 I might think or assert such sentences as an aid to reasoning. For instance, I might reason as follows: ‘p is probably true. Since p or q follows from p, that means that probably p or q’.Footnote 5 My main point here is to introduce a way of using the expression ‘Probably p’: it can be used by a subject to express the fact that her credence in p is greater than 0.5. The subject can use the judgment that ‘Probably p’ to see what other things she’s committed to in virtue of having this credence. Let’s examine how the doxastic commitments expressed in such probably-judgments combine with the commitments involved in other judgments.Footnote 6

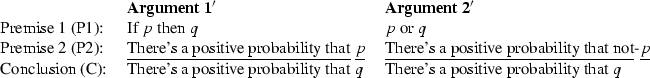

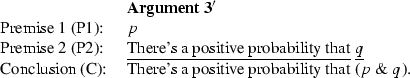

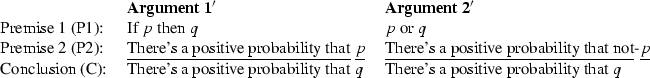

Consider the following three arguments. Throughout this paper assume that the use of ‘probably’ in these three arguments is the one just mentioned, in which it’s used to express the speaker’s having a credence greater than 0.5 in a proposition, and also assume that p and q aren’t logically equivalent.Footnote 7

Let’s say someone ‘accepts’ an instance of one of the premises or conclusions in one of these arguments iff [for the first premise (P1)] s/he believes the premise or [for (P2) or (C)] s/he has the credence the premise/conclusion speaks of (that is, s/he has a credence greater than 0.5 in the relevant proposition). I think it’s extremely plausible that for each of the three argument schemas, anyone who accepts its premises is committed to accepting its conclusion.Footnote 8 Let’s say someone ‘rejects’ the conclusion (C) of one of Arguments 1–3 iff s/he has a credence in q [for Arguments 1 and 2] or in (p & q) [for Argument 3] that is less than or equal to 0.5. I’m claiming that the following is very plausible:

- (Commitment) In accepting Arguments 1–3’s premises, one is thereby committed to accepting their conclusions, on pain of inconsistency.

- In other words, the following set of attitudes is jointly inconsistent: acceptance of (P1) in one of Arguments 1–3, acceptance of (P2) in the same Argument, and rejection of (C) in the same Argument.

(Commitment) is articulated in terms of ‘acceptance’, which is a term of art in this paper. Nevertheless, if my prior claims are granted that we can use probabilistic language to talk about our credences and that we express our beliefs through assertions, then (Commitment) presents a very natural idea. Say I assert both premises of one of the three arguments, and in doing so I accurately express a real belief and a real credence that I in fact have. What (Commitment) says is that in that case, the psychological states my assertions in fact accurately portray me as having commit me to also having the psychological state I would represent myself as having by asserting the argument’s conclusion.Footnote 9

The plausibility of (Commitment) is revealed when we consider some instances of the argument schemas 1–3. Let’s start with Argument 1. Imagine Watson says or thinks that if Mr. Smith wasn’t the murderer, then it was Mr. Jones [Argument 1, (P1), or ‘(P1)\(_1\)’]. He also says or thinks that Mr. Smith probably wasn’t the murderer [(P2)\(_1\)]. It seems he’s thereby committed to Mr. Jones probably being the murderer [(C)\(_1\)]. Imagine that Watson actually asserted the two premises but then denied being thereby committed to the conclusion [‘I deny that it was probably Jones; nevertheless, if it wasn’t Smith it was Jones, and it probably wasn’t Smith’]. We would find what he said exceedingly strange. For surely, we’d respond, if Watson thinks those premises are true, he must conclude that it probably was Jones. He’s being inconsistent.Footnote 10

Similarly for Arguments 2 and 3. Say Watson says/thinks that the murderer was either Smith or Jones [(P1)\(_2\)], and then says/thinks that it probably wasn’t Smith [(P2)\(_2\)]. It seems he’s thereby committed to its probably being Jones [(C)\(_2\)]. Were Watson to deny commitment to this conclusion, while affirming the premises [‘It’s not the case that it was probably Jones. Nevertheless, it was either Smith or Jones and probably wasn’t Smith’], we would find what Watson said odd, to say the least. Finally, consider an instance of Argument 3. Imagine someone says/thinks that the Democrats will have control of the Senate in 2016 [(P1)\(_3\)] while also saying/thinking that the Democrats will probably have control of the House in 2016 [(P2)\(_3\)]. It seems that that person is thereby committed to thinking that probably, the Democrats will have control of both the Senate and the House in 2016 [(C)\(_3\)]. Were someone to deny the conclusion while asserting both premises [‘The Democrats will have control of the Senate, and they’ll probably have control of the House. But I deny that they’ll probably have control of both the House and the Senate’], it would sound to us like this person was contradicting himself.

(Commitment) is very plausible. Let’s say it’s true. I demonstrate in this paper’s Appendix that (Commitment) is true iff in believing/accepting Arguments 1–3’s first premise (P1), the subject has a credence of 1 in that premise.Footnote 11
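The connection between (Commitment) and a credence of 1 in (P1) can be illustrated numerically. The following is only a minimal sketch, not the Appendix’s proof. It assumes that the three schemas are: Argument 1, ‘If p, then q; probably p; so probably q’; Argument 2, ‘p or q; probably not-p; so probably q’; Argument 3, ‘p; probably q; so probably (p & q)’ (a reconstruction from the worked examples, since the schemas themselves appear only as figures). It also assumes, purely for illustration, that believing (P1) is modeled as having a credence in (P1) at or above some threshold. The names `credence`, `violates_commitment`, `grid_distributions`, and `some_violation` are hypothetical.

```python
from fractions import Fraction as F
from itertools import product

# The four possible worlds, given as (p, q) truth values.
WORLDS = list(product([True, False], repeat=2))

# Assumed schemas: (P1), the proposition inside (P2), the proposition inside (C).
ARGUMENTS = {
    1: (lambda p, q: (not p) or q, lambda p, q: p, lambda p, q: q),
    2: (lambda p, q: p or q, lambda p, q: not p, lambda p, q: q),
    3: (lambda p, q: p, lambda p, q: q, lambda p, q: p and q),
}

def credence(dist, prop):
    """Total weight of the worlds at which prop is true."""
    return sum(weight for world, weight in dist.items() if prop(*world))

def violates_commitment(dist, number, belief_threshold):
    """True if dist believes (P1), accepts (P2), and rejects (C)."""
    p1, p2, c = ARGUMENTS[number]
    believes_p1 = credence(dist, p1) >= belief_threshold  # modeling assumption
    accepts_p2 = credence(dist, p2) > F(1, 2)
    rejects_c = credence(dist, c) <= F(1, 2)
    return believes_p1 and accepts_p2 and rejects_c

def grid_distributions(steps=20):
    """Every credence distribution over WORLDS with weights in multiples of 1/steps."""
    for a, b, c in product(range(steps + 1), repeat=3):
        d = steps - a - b - c
        if d >= 0:
            weights = (F(a, steps), F(b, steps), F(c, steps), F(d, steps))
            yield dict(zip(WORLDS, weights))

def some_violation(number, belief_threshold):
    """Search the grid for a distribution that violates (Commitment)."""
    return any(
        violates_commitment(dist, number, belief_threshold)
        for dist in grid_distributions()
    )

for number in (1, 2, 3):
    print(number, some_violation(number, F(9, 10)), some_violation(number, F(1)))
```

On this grid, each argument has a violating distribution when a credence of 0.9 in (P1) suffices for belief, and none when belief requires a credence of 1. The grid search is only suggestive; the general result is the one the Appendix is said to establish.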

Say we thought (Certainty) was true. Then we would find nothing surprising about the fact that (Commitment) holds iff believing (P1) involves having a credence of 1 in it. But this fact is strange if (Certainty) is false.

One who denied (Certainty) but accepted (Commitment) might claim the following:

- (†) For some reason, when one accepts (P1) and (P2) in Arguments 1–3, believing (P1) requires having a credence of 1 in (P1). However, it’s not generally true that believing a proposition involves having a credence of 1 in it.

(†) is problematic. Why does a subject’s believing (P1) require having a credence of 1 in (P1) when s/he also accepts (P2), if belief doesn’t generally require having a credence of 1? I don’t know how the denier of (Certainty) will be able to answer this question in a plausible way. There are additional problems. My previous examples of accepting instances of the premises of Arguments 1–3 involved ordinary, mundane beliefs. I ask the reader to come up with his or her own examples of everyday, mundane beliefs people have in propositions in the logical forms specified by (P1) in Arguments 1–3. Now consider an agent who has these ordinary beliefs, and also accepts (P2) in the same Argument. I predict that it will seem plausible to you that in the cases you consider, the imaginary agent is committed to the Arguments’ conclusions in virtue of accepting (P1) and (P2). The agent is committed to the Arguments’ conclusions iff s/he has a credence of 1 in their (P1) (as I show in the Appendix). The exercise you just went through (combined with the Appendix) suggests that as a general rule our credence in our beliefs is 1. This runs contrary to the claim of (†), according to which believing something doesn’t generally involve having a credence of 1 in it. Furthermore, the many deniers of (Certainty) tend to think of having a credence of 1 as an extreme attitude that we have to almost no contingent propositions, even though we do believe many things. Thus (Certainty)’s enemies typically won’t be content to merely deny (Certainty), but will also want to deny that it’s at all common to have a credence of 1 in propositions we believe. However, the combination of the exercise we just went through and the Appendix’s result seems to show that it’s actually common to have a credence of 1 in our beliefs, and that we have a credence of 1 in our mundane, ordinary beliefs.Footnote 12

Given the problems accepting (Commitment) poses for them, those who deny (Certainty) may opt to reject (Commitment). Such philosophers could claim that there is a pragmatic explanation as to why asserting one of the Arguments’ premises seems to commit one to its conclusion. (I discussed one idea along these lines back in footnote 9.) I cannot myself think of how our intuitions about Arguments 1–3 can be explained in a way that doesn’t imply (Commitment). Thus, while our intuitions about Arguments 1–3 don’t force us to accept (Certainty), they at least provide some evidence for it.

One argument for (Certainty) is that accepting it allows us to endorse the following thesis in the face of the Lottery Paradox:

- (Closure) If one believes p and believes q, then one believes p & q.

Imagine that a credence of \(\tau \) is sufficient for belief, where \(\tau \) is as high as we like, but less than 1. Now imagine a fair lottery with a sufficient number of tickets so that one’s credence that any individual ticket in the lottery will lose is greater than \(\tau \). One will believe of each individual ticket that it will lose, and therefore, if (Closure) is true, one may find oneself believing the conjunction that ticket 1 will lose & ticket 2 will lose & \(\ldots \) , where the conjunction contains all the tickets. Since this conclusion is absurd, we must either deny (Closure) or deny that having a credence of \(\tau \) is sufficient for belief. An obvious way to avoid the conclusion without denying (Closure) is to accept (Certainty).
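The arithmetic behind the paradox can be made concrete. The sketch below assumes a fair lottery with exactly one winning ticket (an assumption added here for illustration), and the function names `smallest_lottery` and `lottery_credences` are hypothetical. For a given threshold it finds the smallest lottery in which every ‘ticket i will lose’ proposition clears the threshold, and it records that the conjunction of all of them has credence 0.

```python
from fractions import Fraction as F

def smallest_lottery(tau):
    """Smallest number of tickets n with 1 - 1/n > tau, for 0 <= tau < 1."""
    n = 2
    while 1 - F(1, n) <= tau:
        n += 1
    return n

def lottery_credences(tau):
    """Credences in a fair lottery with exactly one winner (assumed setup)."""
    n = smallest_lottery(tau)
    each_ticket_loses = 1 - F(1, n)  # same for every individual ticket
    all_tickets_lose = F(0)          # some ticket must win
    return n, each_ticket_loses, all_tickets_lose

tau = F(99, 100)
n, each, conjunction = lottery_credences(tau)
print(n, each, conjunction)
```

With a threshold of 0.99, a lottery of 101 tickets already suffices: each ‘ticket i will lose’ has credence 100/101, while the conjunction of all 101 such claims has credence 0. Raising the threshold toward 1 only raises the number of tickets required; it never removes the problem.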

Regarding the Lottery Paradox, accepting (Certainty) does enable us to say what I think is the right thing to say about it, namely that prior to the announcement of the winner, one doesn’t believe of any lottery ticket that it will lose. As I stated in the Introduction, I think we can get a grip on what our beliefs are by thinking about what we would be willing to assert. Others have noted that typically we are not willing to assert that a lottery ticket will lose,Footnote 13 a fact that at least provides prima facie evidence that we don’t believe it. However, not everyone finds it as plausible as I do that we don’t believe that individual lottery tickets won’t win,Footnote 14 and there are several theories of belief which deny (Certainty) while simultaneously maintaining that one doesn’t believe lottery propositions.Footnote 15 But while these theories do enable us to say that we don’t believe lottery propositions and that (Closure) holds, all without having to endorse (Certainty), they fail to provide a way to accept (Commitment) while denying (Certainty). Furthermore, any theory that reconciled (Commitment) with not-(Certainty) would have to accept (†), and I’ve already argued that (†) is problematic.

In summary, it’s plausible that (Commitment) is true. This fact provides evidence for (Certainty). But why should (Certainty) be true? Why should believing p require having a credence of 1 in p? There are a couple of straightforward explanations, which I’ll present in the next section. These explanations provide new reasons to think that (Certainty) is true.

3 Why (Certainty) is true

I think there are two facts, one about belief and one about credence, which together entail (Certainty). The first fact, which I’ll explain and defend in Sects. 3.1 and 3.4, is that belief is doxastic necessity: if one believes that p, one’s perspective or doxastic state doesn’t leave open the possibility that not-p. The second fact, which I’ll defend in Sect. 3.2, is that one has a positive credence only in propositions that are doxastically possible for one. In Sect. 3.4 I tie the thesis that belief is doxastic necessity to the thesis that beliefs are representations, and offer a different but complementary explanation of why (Certainty) is true.

3.1 Belief as doxastic necessity

Let’s start by defining doxastic possibility: a proposition p is doxastically possible for a subject S iff S’s doxastic state leaves it open that p is true—i.e., iff S’s point of view or perspective is consistent with p. Take the proposition that Spain will win the next World Cup. I have some credence in this proposition, and more in its negation. My overall doxastic state leaves it open that the proposition is true, and also that its negation is true. Hopefully the notion of doxastic possibility is clear enough for us to work with.

Note that if epistemic possibility is taken to be something like what is true for all one knows, then doxastic and epistemic possibility are not the same. Nevertheless, doxastic possibility is a perfectly good notion of possibility. As such, speakers should be able to talk about what is doxastically possible for them—to express the fact that they regard certain things as open possibilities. Presumably we would use modal auxiliaries like ‘might’, ‘possible’, and the rest to do so. For instance, in order to express the fact that I regard it as an open possibility that Spain will win the next World Cup, and also regard it as an open possibility that they won’t—these two propositions are both doxastic possibilities for me—I could say, ‘Spain might win the next World Cup, and they also might not’.

Consider assertions of sentences of the following form, where the ‘possibly’ is used to express doxastic possibility:

- (Moore) p but possibly not-p.

Assertions of the form of (Moore) have the following property: in asserting the right conjunct (‘possibly not-p’), it sounds like the speaker has retracted her assertion of the left conjunct (‘p’). That’s a comment about hearing someone else assert (Moore). It’s also true that one cannot sincerely assert or think to oneself a sentence of this form: insofar as one thinks the second conjunct, one doesn’t truly think the first. We can explain these properties of (Moore) by positing the following thesis:

- Belief is doxastic necessity (BIDN): If S believes that p, then p is doxastically necessary for S.

Here’s the explanation. Plausibly, a sincere assertion is an outward expression of a belief. Say S asserts (Moore). In asserting its left conjunct, she expresses her belief that p. If we assume (BIDN) is true, it follows that not-p is doxastically impossible for S, which is contradicted by her expressing the fact that not-p is doxastically possible for her. Thus we can explain why asserting (Moore)’s right conjunct seems like a retraction of the assertion of its left conjunct, and also why one cannot sincerely assert or think a sentence of the form of (Moore), if we assume both (BIDN) and that assertion is an outward expression of belief. I think the fact that (BIDN) enables an explanation of these properties of (Moore) provides evidence that (BIDN) is true.

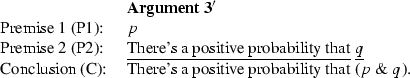

Additional evidence for (BIDN) is provided by its ability to explain the following. Consider the following arguments, where the ‘possibly P’ is used to express or assert that P is doxastically possible for the speaker:

Previously I proposed that (Commitment) was true: for Arguments 1–3, anyone who accepts their premises while rejecting their conclusions is doxastically inconsistent. Let’s broaden our notions of acceptance and rejection so that they extend to Arguments 4–6. Someone ‘accepts’ a premise or conclusion of Arguments 4–6 iff [for (P1)] s/he believes the premise or [for (P2) or (C)] the relevant proposition is doxastically possible for him/her. Someone ‘rejects’ (C) iff the relevant proposition is not doxastically possible for him/her. I think the analogue of (Commitment) is true for Arguments 4–6:Footnote 16

- (Commitment \(^\Diamond \)) Where the ‘possibly’ in Arguments 4–6 is used to express the fact that the relevant proposition is doxastically possible for one: In accepting Arguments 4–6’s premises, one is thereby committed to accepting their conclusions, on pain of inconsistency.

- In other words, the following set of attitudes is jointly inconsistent: acceptance of (P1) in one of Arguments 4–6, acceptance of (P2) in the same Argument, and rejection of (C) in the same Argument.

The plausibility of (Commitment \(^\Diamond \)) is revealed when we consider instances of the arguments. Take Argument 4. I think to myself that if Mustard had access to the key to Jones’ study, then he was Jones’ murderer. Next I think that he might have had access to the key in the sense that I can’t rule out that possibility (it’s a doxastic possibility for me). It seems I should conclude that Mustard might be the murderer; apparently I can’t rule that out either. Let’s try Argument 5. I think to myself that my wife is either at home or at our daughter’s school picking her up. Then I think that she might not be at home. I’m committed to the thought that she might be picking our daughter up from school. Finally, Argument 6. I believe that Mustard had a violent temper. Then it occurs to me that he might have had a motive to kill Jones. I should conclude that it might be that he had both a violent temper and a motive to kill Jones.

(Commitment \(^\Diamond \)) is plausible. Let’s assume it’s true. What would explain its truth? None of Arguments 4–6 are valid in normal modal logics (e.g., the modal systems K, B, S4, or S5). But if one prefixes a necessity operator to the arguments’ first premise (P1), with wide scope over the whole premise, the arguments all become valid. Thus, if (P1) becomes doxastically necessary for one in believing it, then we can explain why (Commitment \(^\Diamond \)) is true. In other words, (BIDN) enables us to explain (Commitment \(^\Diamond \)). Perhaps there are other ways to explain (Commitment \(^\Diamond \)), but (BIDN) provides a very natural and straightforward explanation of it. I think this provides us with a good reason to think (BIDN) is true.
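The modal claim can be checked by brute force on small Kripke models. This is a sketch under stated assumptions: Arguments 4–6 are taken to be ‘If p, then q; possibly p; so possibly q’, ‘p or q; possibly not-p; so possibly q’, and ‘p; possibly q; so possibly (p & q)’ (reconstructed from the worked examples above, since the schemas appear only as a figure), and the search covers only two-world models of K. The helper names `models`, `possibly`, `necessarily`, and `has_counterexample` are hypothetical.

```python
from itertools import product

WORLDS = (0, 1)
PAIRS = [(u, v) for u in WORLDS for v in WORLDS]

# Assumed schemas: (P1), the proposition inside (P2), the proposition inside (C).
ARGUMENTS = {
    4: (lambda a: (not a["p"]) or a["q"], lambda a: a["p"], lambda a: a["q"]),
    5: (lambda a: a["p"] or a["q"], lambda a: not a["p"], lambda a: a["q"]),
    6: (lambda a: a["p"], lambda a: a["q"], lambda a: a["p"] and a["q"]),
}

def models():
    """Yield every two-world Kripke model as (accessibility relation, valuation)."""
    for bits in product([False, True], repeat=len(PAIRS)):
        rel = {pair for pair, keep in zip(PAIRS, bits) if keep}
        for vals in product([False, True], repeat=2 * len(WORLDS)):
            val = {w: {"p": vals[2 * w], "q": vals[2 * w + 1]} for w in WORLDS}
            yield rel, val

def possibly(rel, w, pred):
    """Diamond: pred holds at some world accessible from w."""
    return any(pred(v) for (u, v) in rel if u == w)

def necessarily(rel, w, pred):
    """Box: pred holds at every world accessible from w."""
    return all(pred(v) for (u, v) in rel if u == w)

def has_counterexample(number, boxed):
    """Search for a world where the premises hold and the conclusion fails."""
    p1, p2, c = ARGUMENTS[number]
    for rel, val in models():
        for w in WORLDS:
            if boxed:
                first = necessarily(rel, w, lambda v: p1(val[v]))
            else:
                first = p1(val[w])
            second = possibly(rel, w, lambda v: p2(val[v]))
            conclusion = possibly(rel, w, lambda v: c(val[v]))
            if first and second and not conclusion:
                return True
    return False

for number in (4, 5, 6):
    print(number, has_counterexample(number, boxed=False),
          has_counterexample(number, boxed=True))
```

The search finds a counterexample to each unboxed argument and none once (P1) is placed under a necessity operator. A finite search cannot by itself establish validity, but the second result agrees with the standard fact that the boxed forms are valid in K.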

I think (BIDN) is true. In this subsection, I’ve given two reasons for thinking so. First, (BIDN) enables us to explain why one cannot sincerely assert or think to oneself sentences of the form of (Moore) (where the ‘possibly’ expresses doxastic possibility), and also why, when one utters (Moore), in uttering the right conjunct it will sound like one retracted one’s assertion of the left conjunct. Second, (BIDN) also provides a natural and plausible explanation of (Commitment \(^\Diamond \)).Footnote 17

3.2 Probability, credence, and possibility

According to contemporary physics, there’s a non-0 physical probability that the egg I just dropped on the floor will suddenly jump back up into my hand and be whole again, as if I never had dropped it. Although such an event has an extremely small probability, its probability is greater than 0.Footnote 18 It follows that, according to the physical theories in question, the splattered egg might jump back into my hand and reconstitute itself. If there’s a small but non-0 physical probability that the broken egg will spontaneously reconstitute itself, then it’s physically possible that the egg will do that.

The egg example illustrates the following fact: if something has a chance of being true, no matter how small a chance, then it’s possibly true. The physics example illustrates the general point for physical possibility and probability: whatever has a physical chance of happening is physically possible. But the same thing is true for other kinds of probability and possibility. Although I am extremely confident that I won’t win the next five lotteries in which I own a ticket, if I think there’s a non-0 probability that I will, then strictly speaking I regard my winning all five as a possibility. Generally:

- Credence doxastic possibility link (CDPL): If S has a positive credence in p, then p is doxastically possible for S.

The thought behind (CDPL) is that if I have some credence in p, then I regard p as a possibility. I hope that this thought and thus (CDPL) will strike the reader as obvious. Just in case it doesn’t, I will offer a couple of considerations in its favor. First, (CDPL) provides a natural interpretation of how credences are often modeled. Contemporary probability theorists and formal epistemologists typically have probabilities distributed over an algebra over a set of possible worlds. Say we model credences or subjective probabilities in that way. What kind of possibilities should we take the sets of possible worlds the probabilities are distributed over to represent? Given that credences are a feature of the subject’s doxastic state, the most natural answer seems to be that they’re doxastic possibilities. If we interpret the model in this way, we’re assuming that credences are distributed over doxastic possibilities. In other words, we’re assuming (CDPL).

Here’s another consideration in favor of (CDPL). We’ve seen that (Commitment) is plausible: commitment to the premises of Arguments 1–3 commits one to their conclusions, on pain of inconsistency. And the same goes for Arguments 4–6 (Commitment \(^\Diamond \)). If we look at Arguments 1–3 and 4–6, we notice that they’re very similar. The only difference is that where we find the word ‘probably’ in 1–3, we find ‘possibly’ in 4–6. The fact that 1–3 and 4–6 are so similar makes one think that there should be a uniform explanation of (Commitment) and (Commitment \(^\Diamond \)). This leads to a second reason to accept (CDPL): accepting (CDPL) enables us to give a uniform explanation of (Commitment) and (Commitment \(^\Diamond \)), as I will now explain.

Note that (P1) is the same premise in Arguments 4–6 as it is in Arguments 1–3. In the Appendix I show that in order to explain (Commitment), we need to posit that in believing Arguments 1–3’s first premise (P1) (= Arguments 1–6’s (P1)), one has a credence of 1 in (P1). Then in Sect. 3.1 we saw that in order to explain (Commitment \(^\Diamond \)), we must posit that in believing (P1), (P1) is doxastically necessary for one. It’s a consequence of (CDPL) that if p is doxastically necessary for S, then S has a credence of 1 in p.Footnote 19 Thus, if we accept (CDPL), the thing required to explain (Commitment \(^\Diamond \)) will also explain (Commitment). Therefore, if we accept (CDPL), we’re able to give a unified explanation of both (Commitment) and (Commitment \(^\Diamond \)). (Commitment) and (Commitment \(^\Diamond \)) both follow from the conjunction of (CDPL) and (BIDN).

(CDPL) just seems obvious to me. I hope my reader agrees. In case there was some doubt, I have offered two additional reasons to accept it. It allows for a natural interpretation of how credences are normally modeled by contemporary probability theorists and formal epistemologists, and accepting (CDPL) enables us to give a unified explanation of (Commitment) and (Commitment \(^\Diamond \)). Further, some things I will say in Sect. 3.4 lend more support to (CDPL), especially what I say about why an assignment of a high probability to p isn’t simply incorrect if not-p is true.

3.3 Putting (BIDN) and (CDPL) together

In Sect. 3.1 I argued for (BIDN): If S believes that p, then p is doxastically necessary for S. In Sect. 3.2 I argued for (CDPL): If S has a positive credence in p, then p is doxastically possible for S. At the end of Sect. 3.2 I explained how it’s a consequence of (CDPL) that if p is doxastically necessary for S, then S’s credence in p is 1. Therefore, it’s a consequence of the conjunction of (BIDN) and (CDPL) that if S believes p, then S’s credence in p is 1. In other words, (BIDN) and (CDPL) jointly entail (Certainty).
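The step from (CDPL) to the claim that doxastic necessity implies a credence of 1 can be spelled out as follows. This is a sketch that assumes S’s credences obey the standard probability axioms (in particular, that the credences in p and in not-p sum to 1) and that p is doxastically necessary for S just in case not-p is not doxastically possible for S. The symbols \(\mathrm{Cr}\), \(\Box _S\), and \(\Diamond _S\) are notation introduced here for S’s credence function, doxastic necessity, and doxastic possibility.

\[
\begin{aligned}
&1.\ \Box _S\, p &&\text{assumption: } p \text{ is doxastically necessary for } S\\
&2.\ \lnot \Diamond _S\, \lnot p &&\text{from 1, by the assumed duality}\\
&3.\ \mathrm{Cr}(\lnot p) > 0 \rightarrow \Diamond _S\, \lnot p &&\text{(CDPL) applied to } \lnot p\\
&4.\ \mathrm{Cr}(\lnot p) = 0 &&\text{from 2 and 3, since } \mathrm{Cr}(\lnot p) \ge 0\\
&5.\ \mathrm{Cr}(p) = 1 - \mathrm{Cr}(\lnot p) = 1 &&\text{from 4, by additivity}
\end{aligned}
\]

(BIDN) supplies line 1 whenever S believes p, so the two theses together deliver (Certainty).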

In Sect. 2 I gave some evidence for (Certainty). My goal in this section was to give an explanation as to why (Certainty) should be true, and in the process further motivate it. (Certainty) is true because (BIDN) and (CDPL) are.

3.4 Beliefs as representations

I just finished giving one explanation of why (Certainty) is true. In this section I’ll give another complementary—and I believe deeper—explanation: the real reason (Certainty) is true has to do with the fact that beliefs are representations of the way things are.

Consider a map—say a map of Europe. This map will represent the physical and political geography of Europe as being a certain way. Assuming it’s accurate, it will represent Amsterdam as being north of Rome, south of Oslo, and east of Paris. In representing Amsterdam as being related to Rome, Oslo, and Paris in these ways, the map won’t leave open the possibility that, say, Amsterdam is really to the west of Paris. The map will leave some possibilities open though. If it’s just a map of Europe, it won’t depict Melbourne’s location, and thus will leave open the possibility that Melbourne is north of Amsterdam, south of Amsterdam, etc. These possibilities are all left open by the map because the map doesn’t represent the relationship between Melbourne and Amsterdam.

The above example illustrates that if a map represents it as being the case that p, it doesn’t leave open the possibility that not-p. Note the similarity of this claim to (BIDN) above. This isn’t just true of maps. I propose that this is true of all assertoric representations (assertoric representations are representations that are put forward as true or with assertoric force, in the sense of Frege (1918)). If a history book says that George Washington was the first President of the United States, the book doesn’t leave open the possibility that it was really Thomas Jefferson (assuming Washington \(\ne \) Jefferson). Likewise, if a television documentary says that Jimi Hendrix was the greatest rock guitarist, it doesn’t leave open the possibility that it was really Eric Clapton (assuming Hendrix \(\ne \) Clapton). It may leave open the possibility that Hendrix and Clapton were brothers or Martians if it doesn’t say anything inconsistent with these things being the case, but in saying Hendrix was the greatest, it doesn’t leave open the possibility that Clapton was. I propose that it is essential to representations that if a representation assertorically represents the world as being a certain way, it doesn’t leave open the possibility that the world is otherwise. This is true even though the content of an assertoric representation that p is consistent with not-p being possible. The fact that p is true is compatible with its being the case that a representation R leaves open the possibility that not-p. However, the fact that representation R assertorically represents p as true is incompatible with its being the case that R leaves open the possibility that not-p. It’s p’s being assertorically represented by R that rules out the possibility that not-p relative to R. That assertoric representations rule out counterpossibilities to their contents is, I propose, a deep and important fact about assertoric representations.Footnote 20 I also propose that (BIDN) is true of beliefs in virtue of the fact that beliefs, like assertions in a book or in a documentary, are assertoric representations of the way the world is.

Another significant thing to note about representations is that they can be mistaken (inaccurate or false) and can be correct (accurate or true). If the map represents London as being north of Manchester, it’s simply wrong. If it represents Manchester as being north of London, it’s simply correct about that. If a book, television documentary, or some other representation of the past says that Jefferson was the first U.S. President, it’s thereby mistaken. But if it says it was Washington, it’s correct. In the same way, our beliefs, being representations of the way things are, can be simply correct or incorrect. If one believes p and p is true, the belief is thereby correct, and if p is false, the belief is thereby incorrect.Footnote 21 This is a notable fact about our beliefs and other representations, a fact that doesn’t hold for probabilities. If I’m very (but non-maximally) confident that Miami will beat New York, I’m not simply wrong when New York beats Miami. My confidence might or might not have been misplaced, but it’s not simply wrong. Say I assign p a probability as high as you like but less than 1. As Jeremy Fantl and Matthew McGrath point out, if it turns out that not-p, and you say I was wrong about p, I could reasonably reply “Look, I took no stand on whether p was true or not; I just assigned it a high probability; I assigned its negation a probability too” (Fantl and McGrath 2009, p. 141; quoted by Clarke 2013, p. 6).Footnote 22, Footnote 23

Why am I not exactly wrong if I’m merely very (but non-maximally) confident that p when not-p is true? Why isn’t my high confidence mistaken, when a belief or assertion that p would be? I think it’s because in merely being very confident that p, I haven’t thereby ruled out the possibility that not-p.Footnote 24 Say I assign a very high probability to p, say 0.999, and tell you so. We discover that not-p is definitely true. In assigning p a probability of 0.999, I was thereby allowing that there was a 0.001 probability that not-p and thus acknowledging not-p as a real (though very unlikely) possibility.Footnote 25 This possibility that I was allowing for turned out to obtain. But because my probability assignment kept open the possibility that not-p, the fact that not-p doesn’t make my assignment false or incorrect.

Summarizing, beliefs and all other assertoric representations rule out possibilities, whereas non-maximal probability assignments do not. A representation that p is the case rules out the possibility that not-p. It’s because of this that it’s simply inaccurate if not-p is really true. On the other hand, an assignment of a very high but non-maximal probability to p leaves open the possibility that not-p, and for this reason it’s not simply false or inaccurate if not-p is really true. (BIDN) and (CDPL) are true in virtue of the nature of representations and probability assignments, and because a belief is a representation. A belief that p assertorically represents p as being the case, and thus rules out the possibility that not-p. However, in order to have a credence in p that is less than 1, one cannot rule out the possibility that not-p. Therefore, believing that p is incompatible with having a credence in p that’s less than 1.

4 Objections to (Certainty)

So far I’ve provided reasons for thinking that (Certainty) is true. In this section I will respond to three objections to (Certainty). The first objection contends that I don’t have the betting behavior with respect to my beliefs that (Certainty) predicts, and the second contends that if (Certainty) were true, then we would have fewer beliefs than we actually have. Those two objections belong together, and will both be dealt with in Sect. 4.1. The third objection alleges that if (Certainty) were true, then it would never be rational to give up a belief in response to evidence. But sometimes this is the rational response to acquiring new evidence. Therefore, (Certainty) must be false. I’ll argue that all three objections are at least inconclusive.

4.1 Objections 1 and 2: Betting behaviors and too few beliefs

Traditionally, credences have been cashed out in terms of betting behavior, or in terms of what bets one would regard as fair. I have a credence of n in p only if I would regard as fair a bet on p that pays me $(1 − n) if p and costs me $n if not-p. Accordingly, I have a credence of 1 in p only if I would regard as fair a bet that pays me nothing if p and costs me any amount you like if not-p! But surely, the first objection points out, we have many beliefs that p such that we would not regard as fair a bet that paid us nothing if p and where we have to pay a great deal if not-p. I agree, we do have beliefs like that. The problem with this objection lies in its assumption that we should cash out our credences in terms of what bets we would regard as fair.Footnote 26
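
To see why the betting analysis yields this prediction, here is a short worked calculation. It is only a sketch, and it rests on two assumptions that go beyond what is said above: that a bet is "fair" by my lights just in case its expected payoff, computed with my own credences, is zero, and that bets may be rescaled by an arbitrary stake \(S > 0\) (the symbol \(S\) is introduced here purely for illustration). With credence \(n\) in p and credence \(1 - n\) in not-p, the expected payoff of the scaled bet is

\[
\mathrm{EV} \;=\; n \cdot S(1 - n) \;-\; (1 - n) \cdot S n \;=\; 0 .
\]

Setting \(n = 1\), the prize \(S(1 - n)\) is \(0\) and the potential loss \(S n\) is \(S\), yet the expected payoff remains

\[
\mathrm{EV} \;=\; 1 \cdot 0 \;-\; 0 \cdot S \;=\; 0 \quad \text{for every } S > 0 .
\]

So on these assumptions, someone with a credence of 1 in p should regard the bet as fair however large the amount at risk, which is exactly the prediction the first objection finds implausible.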

There are good reasons for thinking that sometimes betting behavior (or what bets I would regard as fair) isn’t even a good measure of credences (Bradley and Leitgeb 2006; Eriksson and Hájek 2007). One problem is that being presented with a bet may change one’s credences.Footnote 27 With regard to (Certainty), maybe I do have a credence of 1 in p (in virtue of believing p), but if you talk to me about a bet on p at extreme odds, that will cause me to rethink how confident I should be in p, and as a result my credence in p will drop to less than 1. For instance, being presented with such a bet may lead me to think, for the first time, of the fact that there are unlikely not-p possibilities that I really can’t rule out. If this would happen frequently in cases where I have a credence of 1, then betting behavior (or what bets I would regard as fair) will be systematically unreliable in measuring my credence in p when it’s 1.

So my response to the first objection is that it’s at least possible that being presented with a bet on p at extreme odds might change my credence in p from 1 to less than 1. Therefore, it’s not obvious that the fact that if I were presented with such a bet on p I wouldn’t regard it as fair and wouldn’t take it shows that my credence now, in the absence of being presented with such a bet, is less than 1. Indeed, as Clarke (2013, pp. 11–12) points out, one is less inclined to assert p upon being offered a bet on p at extreme odds. Assuming that one’s beliefs are something like the propositions one is prepared to sincerely assert, this fact provides some evidence that the extreme bet makes one lose the belief.

The second objection contends that if (Certainty) is true, then we have almost no beliefs.Footnote 28 This objection assumes that we almost never have a credence of 1 in contingent propositions. In particular, most of the propositions we take ourselves to believe we don’t have a credence of 1 in, and thus according to (Certainty), we don’t actually believe those propositions.

What of the assumption that we almost never have a credence of 1 in contingent propositions? Many contemporary philosophers agree with it, but is there any reason to think it’s actually true? In this paper I’ve taken it for granted that (*) if one is willing to sincerely assert or assume ‘p’ in reasoning without qualification then (all else equal) one believes p (assuming ‘p’ contains no context-dependent terms). I have argued for (Certainty), i.e., if one believes that p, then one has a credence of 1 in p. (*) and (Certainty) jointly entail that (**) if one is willing to sincerely assert or assume ‘p’ in reasoning without qualification, then one has a credence of 1 in p. If (**) is true, then contrary to popular belief, we actually have a credence of 1 in many propositions, namely all the propositions we’re willing to sincerely assert or assume in reasoning without qualification. So my response to the second objection is that unless my assumption of (*) is false, (Certainty) does not imply that we have very few beliefs. On the contrary, it implies that we have a credence of 1 in many more propositions than philosophers realize.

While the arguments of this paper provide reasons to think we have a credence of 1 in many contingent propositions, most philosophers think we don’t. Are there any reasons to think the philosophers’ view is correct? I can think of four ways of arguing for the view that we have a credence of 1 in very few contingencies or that we believe many more propositions than we have a credence of 1 in. First, our betting behavior suggests that we don’t have a credence of 1 in very many contingencies. I’ve already given my response to that. Our betting behavior may be a systematically unreliable guide to what our credences are when they’re 1. The second reason is that it doesn’t feel to us like we’re certain of all our beliefs. When we assert the propositions we believe, we sometimes have feelings of doubt, or at least we don’t have a feeling of certainty about them. In other words, phenomenology suggests that we don’t have a credence of 1 in all our beliefs. However, I’m with Ramsey (1964) in thinking that phenomenology is not a good guide to what our credences are, and that our behavior is a better guide. A medical doctor, after carefully thinking about what the right procedure to cure Mike’s ailments is, concludes that Mike’s left arm must be amputated. If she’s wrong, she will be discredited as a doctor and poor Mike will lose his limb when he didn’t have to. Consequently, the doctor is terrified of making a mistake and is overwhelmed with feelings of doubt. However, she has thought about the matter thoroughly, and goes forward with the amputation even though these feelings never go away. We can imagine that her credence that she is doing the right thing is very close to 1. The fact that she’s willing to go ahead with the amputation shows us what her real credence is, and her feelings of doubt do not. Actions often speak louder than feelings. 
Regarding our beliefs, the fact that we’re willing to assert propositions without qualification shows that we do have a credence of 1 in them, in spite of the fact that it may not feel like that’s what our credence is.Footnote 29

A third way to argue that we have a credence of 1 in fewer propositions than we believe is by considering specific examples. For instance, there’s the example with which I started Sect. 3.2. I’ve just dropped an egg on the floor, and it broke. According to quantum mechanics, there’s a non-0 probability that it will suddenly reconstitute itself and jump back up into my hands. Nevertheless, the objector contends, I believe that it will stay broken on the floor. Since I believe there’s a chance it will reconstitute itself, my credence that it will stay broken is less than 1, but I still believe that it will stay broken. Therefore, (Certainty) is false.

Just as I previously claimed that being presented with a bet at extreme odds may change one’s credence from 1 to less than 1, I claim that being forced to consider a possibility such as the extremely unlikely possibility that the egg will reassemble itself may change one’s credence from 1 to less than 1. Bob is willing to assert ‘The broken egg will stay there on the floor’ sincerely and without qualification, and so he has a credence of 1 that that’s what will happen. Then if you force him to acknowledge that there is a chance, after all, that it won’t stay on the floor, his credence may become slightly less than 1, and then he’ll lose the belief. That doesn’t mean he didn’t have the belief and a credence of 1 prior to acknowledging the chance. All I have to deny is that the following can happen: Bob is willing to sincerely and without qualification assert or assume in reasoning that the egg will stay on the floor while at the same time, in the very same situation, he regards it as possible that it won’t, due to his having a small positive credence that it won’t. It’s not obvious that that can happen. Perhaps, on the contrary, insofar as Bob comes to acknowledge the possibility that it won’t stay on the floor, he has thereby retracted any belief or assertion that it will.Footnote 30

Fourth, we may argue that we believe many more propositions than we have a credence of 1 in based on the premise that we are more confident in some of our beliefs than in others. For instance, I believe that (H) I am human and I believe that (C) smoking causes lung cancer. But I have a higher credence in H than in C. I have many other beliefs which I have a lower credence in than I have in H. Therefore, I have many beliefs that I don’t have a credence of 1 in, contrary to what (Certainty) claims.Footnote 31

I’ve already endorsed the view, developed in detail by Clarke (2013), that what one believes depends in part on which possibilities one is aware of.Footnote 32 It is easier to believe that p the fewer not-p possibilities one acknowledges, since believing p requires having a credence of 0 in every not-p possibility. The objection we’re considering makes two claims:

-

A: I am more confident in H (I’m human) than in C (smoking causes lung cancer).

-

B: I believe both H and C.

I acknowledge that A is true in some situations and B is true in others. However, I deny that there are any situations in which A and B are true simultaneously. What’s it like when A is true? I’m comparing H and C, and it occurs to me that C is more doubtful. C was established by empirical studies, and I consider the fact that such studies always have a small possibility of error. Or maybe I think that there’s always some small chance that massive, systematic errors were made in the analyses of the studies linking smoking and cancer, or there’s a small chance of a conspiracy of scientists against tobacco companies, or whatever. But there are no such error possibilities about my belief that H. If my present state of mind is one where I acknowledge such not-C possibilities, then while A is true, on my view (and Clarke’s) I don’t believe C and thus B is false. There are, however, many other situations where I am less reflective and am not acknowledging the not-C possibilities mentioned previously. In such situations, B is true, but A is not. It is not obvious that there are any situations where A and B are true simultaneously, which enables us to resist the objection to (Certainty).

4.2 Objection 3: (Certainty) entails that beliefs are indefeasible

The third objection to (Certainty) claims that (Certainty) entails that beliefs are indefeasible in the sense that giving up a belief will never be a rational response to evidence. Let’s assume that credences must conform to the probability calculus, and that when S acquires evidence E, she should update her credence in p to her prior conditional credence of p on E (i.e., she should update by conditionalization). It’s a law of probability that if \(P(p) = 1\), then for any E such that \(P(E) > 0\), \(P(p \mid E) = 1\). (Certainty) says that when S believes p, her credence in p is 1. Therefore, if S believes p, her credence in p conditional on any E (with \(P(E) > 0\)) will be 1. Therefore, no matter what new evidence she acquires, she’ll be rationally required to maintain a credence of 1 in p when she updates on that evidence (at least for any evidence she had a prior positive credence in).Footnote 33
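
For readers who want the step spelled out, the law of probability the objection relies on can be derived as follows. This is a sketch that assumes only the standard ratio definition of conditional probability together with the additivity and monotonicity of \(P\); none of it goes beyond the probability calculus the objection already presupposes. Suppose \(P(p) = 1\) and \(P(E) > 0\). Then \(P(\lnot p) = 0\), and since \(\lnot p \wedge E\) entails \(\lnot p\), we have \(P(\lnot p \wedge E) \le P(\lnot p) = 0\). Hence

\[
P(p \wedge E) \;=\; P(E) - P(\lnot p \wedge E) \;=\; P(E),
\qquad\text{so}\qquad
P(p \mid E) \;=\; \frac{P(p \wedge E)}{P(E)} \;=\; \frac{P(E)}{P(E)} \;=\; 1 .
\]

The condition \(P(E) > 0\) is needed only so that the ratio is defined, which is why the objection is restricted to evidence in which S had a positive prior credence.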

A weakness of the above objection is that it assumes that the only way to rationally update our doxastic states in response to evidence is conditionalization (or Jeffrey conditionalization). I think we should reject this assumption. For as Weisberg (2009) has argued, there is a good reason to think that we shouldn’t always update our doxastic states by conditionalization.Footnote 34 I’ll briefly recap his argument.

Consider the following two theses:

-

Partial Commutativity (PC): In some cases where an agent has one experience \(e_1\) followed by another \(e_2\), she could have had two experiences qualitatively very similar to \(e_1\) and \(e_2\) but in reverse order (i.e., she could have had an experience very similar to \(e_2\) followed by an experience very similar to \(e_1\)), such that the bearing of those experiences on her credences in some hypotheses should be the same in either case.

-

Holism: For any experience e and any proposition p, there is a “defeater” proposition q, such that your credence in p, upon having the experience e, should depend on your credence in the defeater q.

Consider the following scenario. I look at a jellybean and it looks blue to me. Let that be experience \(e_1\). Then I look at the lights that are illuminating the jellybean and notice that they appear to be blue-tinted. Let that be \(e_2\). We can imagine that experience \(e_1\) would normally justify my being very confident that the jellybean is blue, but that that justification is defeated by my having \(e_2\). After having \(e_2\) I should regard it as fairly likely that the jellybean’s appearing to be blue is caused by the blue-tintedness of the light illuminating it. This case illustrates the plausibility of PC. My final credence in the jellybean’s being blue should be the same whether I have experience \(e_1\) and then have \(e_2\), or instead have \(e_2\) and then \(e_1\). It also illustrates the plausibility of Holism. When one looks at something which appears to be a certain color, that experience (e) normally justifies our being confident that (p) it is that color. However, this depends on our being justifiedly confident that (q) our experience is not illusory in some way. Note that Holism articulates the well-known and plausible claim of Quine (1951) that experience doesn’t justify our beliefs in isolation, but in virtue of our background doxastic states.

Those who think that we should update on evidence by conditionalization are divided into those who think we should classically conditionalize and those who think we should Jeffrey conditionalize.Footnote 35 What Weisberg shows is that we cannot require that credences be updated on evidence by either Jeffrey or classical conditionalization, while at the same time accepting both PC and Holism.Footnote 36 I think Weisberg’s result provides an argument that we shouldn’t always update our doxastic states by conditionalization. The fact that the objection we’re considering to (Certainty) assumes otherwise undermines the persuasiveness of the objection.

5 Conclusion

In this paper I’ve argued for (Certainty): believing p entails having a credence of 1 in p. Although opposition to (Certainty) is widespread and fierce, I hope to have shown that there are good reasons for thinking that it’s true, and that some important objections to it can be resisted.Footnote 37

Notes

In Dodd (2011) I defend an analogous view about knowledge, namely that if S knows p, then the probability of p on S’s evidence is 1.

Throughout this paper, please read my quotation marks as corner quotes where appropriate.

I’m going to assume ‘probably’ means having a probability greater than 0.5. Applied to credence, therefore, it means having a credence greater than 0.5. I actually believe that’s what it means. However, if the reader disagrees, thinking perhaps that 0.5 is too low a probability, that won’t affect any of the arguments in this paper. If one substitutes n for 0.5, where n is the minimum probability required for a proposition to be ‘probably’ true, all the arguments I give can be adapted to fit the change, and will still go through.

I’m assuming that one way speakers can use sentences of the form ‘Probably p’ is to express their credences. In fact, I think speakers do use probabilistic language to do this sometimes. That such sentences are used for this purpose is defended by contemporary philosophers of language (for an overview of how to think like this about ‘probably’ and similar operators, see Yalcin 2012). On the other hand, Holton (2014) disagrees. He thinks that we don’t ever use probabilistic language to express credences, but only to talk about probabilistic facts in the world. Holton also denies that we have any probabilistic attitudes like credences at all, although we do have attitudes about probabilities. His view of probabilistic language is plausible if it’s plausible that we lack credences. While Holton denies that we have probabilistic attitudes, he is happy to acknowledge that we have various degrees of confidence and uncertainty in what we regard as open possibilities. I think Holton would deny that degrees of confidence can be modeled with the probability calculus (thus they’re not probabilistic attitudes), even where such modeling is thought of just as a useful idealization. I suspect it’s here where our disagreement chiefly lies. If we do lack probabilistic attitudes (for instance, because degrees of confidence shouldn’t be thought of probabilistically) as Holton thinks, then we lack credences and then obviously we can’t use probabilistic language to express them. But if, contra Holton, we do have credences (i.e., degrees of confidence that can be modeled with the probability calculus), then it seems we should be able to use probabilistic language to express them. Why wouldn’t we be able to? What would be stopping us? If my assumption is granted that we do have credences, then it is hard to see why we wouldn’t be able to utilize probabilistic language to express them. I’ll be assuming in this paper that we can do this.

Some readers may favor an expressivist (non-truth conditional) account of the semantics of sentences involving the doxastic use of ‘probably’ that I’m focusing on. Such readers will no doubt be attracted to expressivist accounts of epistemic modals generally. They may object to my talk of a judgment that ‘Probably p’, and maybe also object to speaking of an assertion of ‘Probably p’. Here and in what follows, where I talk about judging or asserting ‘Probably p’, feel free to substitute a different, expressivist-friendly locution for using such sentences (e.g., using the sentence on a particular occasion to express the speaker’s attitude). In spite of my use of words like ‘judging’ and ‘asserting’, in fact my arguments in this paper don’t presuppose that expressivist views about epistemic modals are false (or that they’re true).

Argument 1 was discussed by Yalcin (2008).

For those who want to analyze these arguments in terms of dynamic logic, where the order of the premises can affect an argument’s validity, this claim is only true if (P1) and (P2) occur in the order in which I present them (i.e., with (P1) first). I’m grateful to Hans Kamp and Johan van Benthem for drawing my attention to this point. I wish to remain neutral on the question of whether these arguments should be analyzed dynamically.

One might worry that because ‘acceptance’ is a term of art, the fact that we find Arguments 1–3 compelling shouldn’t lead us to conclude that (Commitment) is true. For instance, perhaps we find the arguments compelling only because we take the assertion of their first premise to express the speaker’s knowledge, rather than mere belief. The idea is that we think the speaker is committed to one of the arguments’ conclusions only insofar as s/he knows its first premise, and we don’t think s/he’s committed to its conclusion when s/he merely believes the premise. I have a couple of responses. First, consider a case where the speaker asserts the premises of one of Arguments 1–3, but we (unlike the speaker) know that she doesn’t know the first premise, though she sincerely believes it. In this case, I still have an equally strong intuition that she is committed to accepting the argument’s conclusion. This intuition cannot be accounted for by the principle that knowledge of the argument’s premises, but not mere belief, commits one to its conclusion. Second, the arguments’ second premises and conclusions are used to express the speaker’s credences. Whatever it is about knowing the arguments’ first premise that helps effect a commitment to their conclusions, it must be what knowledge implies about the speaker’s doxastic state. After all, it seems it would have to be the doxastic state the speaker is in in virtue of knowing the argument’s first premise that combines with the doxastic state described in the second premise to commit the speaker to the argument’s conclusion. What doxastic state is there, which is implied by knowing something, and which would do the relevant work here? Whatever doxastic state it is, it has to be one that involves having a certain credence: as we shall see, a speaker is committed to the arguments’ conclusions in virtue of being committed to their second premises just in case s/he has a credence of 1 in their first premises. 
So what doxastic state is there, which is implied by knowing the arguments’ first premises, which when combined with the speaker’s commitment to premises 2 commits her to the arguments’ conclusions, and which commits her to their conclusions in virtue of requiring her to have a credence of 1? The most obvious answer to this question is belief. Note that on most analyses of knowledge, the only doxastic state that knowing implies is belief. An exception is Williamson (2000), who thinks that knowledge itself is a mental state. So someone who, like Williamson, thought of knowledge as a mental state, could reject (Commitment), claiming that the reason we find Arguments 1–3 compelling is that we imagine the speaker to know the first premise, and not merely believe it, and unlike belief knowledge does require having a credence of 1. I have two replies to this response. First, even on a Williamsonian view, I don’t see the motivation for claiming that knowledge but not belief requires having a credence of 1. Second, the view that knowledge requires having a credence of 1 is itself a striking thesis.

As a referee pointed out, the example that McGee (1985) presented as a counterexample to modus ponens is a potential counterexample to (Commitment) applied to Argument 1. The following seems possible: I believe if a Republican wins the election, then (if it’s not Reagan, it will be Anderson), and also I accept that probably a Republican will win. Yet at the same time I don’t accept that probably (if a Republican wins the election, it will be Anderson). Point taken. I am very unsure about what to say about McGee’s original example (like this one, but with the probability operators missing) as a counterexample to modus ponens, and I’m equally unsure about what to say about this putative counterexample to (Commitment) applied to Argument 1.

As I explain in the Appendix, due to uncertainty about how to think about the probability of an indicative conditional, I can’t demonstrate that this holds for Argument 1. I provide evidence that it holds for Argument 1, and I demonstrate that it holds for Arguments 2 and 3.

Arguments 1–3 are examples of a general phenomenon. I leave it as an exercise for the reader to show that for the following three arguments, the analogue of (Commitment) is plausible, and that like Arguments 1–3 this is the case iff believing their first premises (P1) means having a credence of 1 in (P1).

For instance, DeRose (1996), Hawthorne (2004), and Williamson (2000) amongst others have noted this fact. However, Hawthorne (2004) also notes some cases in which we are willing to assert ‘lottery propositions.’ The writers just mentioned all take the typical unassertability of lottery propositions to motivate a knowledge norm of assertion. However, their unassertability can also be explained by a belief norm combined with (Certainty). I think this explanation is better than the explanation in terms of the knowledge norm because it generalizes to explain (Commitment), while the knowledge norm explanation doesn’t, unless we add to that explanation the view that knowledge involves having a credence of 1. But it’s not clear why knowledge should involve having a credence of 1 unless (Certainty) is true. (Since knowledge implies belief, we can explain (Commitment) in terms of the knowledge norm if we accept (Certainty). But accepting (Certainty) enables us to explain it in terms of a mere belief norm too.) Note that accepting my explanation of the unassertability of lottery propositions doesn’t prevent us from accepting the knowledge norm of assertion.

For instance, when he originally stated the Paradox, Kyburg (1961) just took it to be obvious that we do believe that individual tickets won’t win.

As with Arguments 1–3, if we analyze these arguments using dynamic logic, this claim is true only if the arguments occur in the order I presented them in (with (P1) occurring first).

The explanations offered in this subsection will make some readers think of Stalnaker’s (1978, 1998) influential theory of the interaction of assertion and context. According to Stalnaker, a conversational context C is represented as a set of presuppositions which the speaker and audience share, and these presuppositions leave open a set of possible worlds: the set of worlds compatible with the presuppositions. These are the live possibilities for the conversation. When a sentence is asserted in C that expresses the proposition p and the conversational participants accept the assertion, they update the context by removing all the not-p possibilities from the set of live possibilities. (They will update by eliminating other possibilities too, like the ones ruled out by conversational implicatures.) Note that after a proposition p is accepted by the conversational participants, all the open possibilities in C will be p-possibilities. In this sense, every accepted proposition is necessary. Stalnaker’s theory can explain why asserting (Moore)’s right conjunct seems like a retraction of the assertion of its left conjunct, and why in accepting the premises of Arguments 4–6 conversational participants will thereby be committed to the Arguments’ conclusions.

If we think of assertion as the outward expression of belief, then it’s natural to extend what Stalnaker says about modeling the acceptance of a proposition by participants in a conversation to a way of modeling the acceptance of a proposition by an individual thinker. (This is an extension and not a part of Stalnaker’s theory. Stalnaker denies that in order for conversational participants to accept an assertion for the purposes of conversation, they have to believe it.) In short, the modeling technique may be applied to beliefs. When S comes to believe p, we update the set of possibilities S regards as open (S’s doxastic possibilities) by deleting all the not-p-possibilities from this set. When S comes to believe that p, S no longer regards any not-p-possibilities as open. In this sense, every proposition believed by S is necessary for S, and thus (BIDN) is true.

Finally, we can see Arguments 4–6 (and Arguments 1–3) as examples of what Stalnaker (1975) called “reasonable inferences.” An inference from P to Q is a reasonable inference iff accepting (in the Stalnakerian sense just outlined) a set of premises P eliminates every not-Q possibility, for any conversational context. As Stalnaker explains, the inference from P to Q might be a reasonable inference even though P doesn’t entail Q. (Stalnaker argued that p or q doesn’t entail if not-p then q, while it’s a reasonable inference from p or q to if not-p then q).

The example is from Strevens (1999).

For according to (CDPL), credences are distributed only over doxastic possibilities. So if p is doxastically necessary, S has all of her credence in p and no credence in not-p. Assuming that S’s credences all add up to 1 (as is required by the probability calculus), her credence in p will be 1.

I take it that we have a pre-theoretical grasp of what a representation is, and what assertoric force is. We understand pre-theoretically the difference between representations that are put forward as true, and those that are not: we understand the difference between an assertion and a supposition, and the difference between a news report that purports to tell us about real events (assertoric force), and mere make believe or fiction (no real assertoric force). My claim is that, for our pre-theoretical concepts of representation and assertoric force, an assertoric representation of p doesn’t leave open the possibility that not-p. That a representation that is put forward as true rules out all counterpossibilities I take to be a significant fact about assertoric representations.

Fantl and McGrath (2009) call this the truth standard for belief. They make the same point that I’m about to make, namely that the truth standard doesn’t hold for high but non-maximal credence.

Of course there are scoring rules for probabilities (e.g., the logarithmic and Brier rules), and they’re even sometimes called “accuracy measures”. Such scoring rules provide a way of measuring how well a probabilistic model or assignment fares, but they don’t score the model/assignment as simply accurate or inaccurate. They don’t do that, and a probability assignment or model isn’t simply accurate or inaccurate, true or false. Note that any assignment of probabilities can always receive a higher logarithmic or Brier score except for the assignment that assigns a probability of 1 to all truths and a probability of 0 to all falsehoods. But maps, assertions, beliefs, etc.—representations of the way the world is—can be simply accurate or inaccurate, correct or mistaken. This is a difference between probabilities and representations.

Take the Lockean view of belief to be the view that a state of belief is identical to a state of having a credence above a threshold \(\tau \) (\(\tau < 1\)). According to the Lockean view, there are states of believing p that are identical to states of having a credence greater than \(\tau \) but less than 1 in p. The fact that beliefs can be simply mistaken or correct but credences less than 1 can’t be gives us a simple Leibniz’s Law argument against the Lockean view. Let S be a state of belief that is also a state of having a credence between \(\tau \) and 1. Since S is a belief, it has the property of being correct or being wrong. Since S is a state of having a non-maximal credence, it must lack that property. Contradiction.

This is also Fantl and McGrath’s explanation.

Note the assumption of (CDPL) here.

The response to this objection I’m about to give is also given by Clarke (2013, p. 9ff).

I don’t bet. I’m very confident that I won’t accept any bets this year. Does that mean I’ll accept a bet (or regard a bet as fair) on the proposition that I won’t accept any bets this year? Obviously not!

I’m grateful to an anonymous referee for requesting that I deal with this as a separate objection.

The doctor has a high credence that Mike needs his limb amputated. Is she also confident that this is what he needs? In some sense, yes, given that her credence is high. But perhaps it’s also true that she’s full of doubt, given that she has very strong feelings of doubt. Perhaps the words ‘confident’, ‘doubt’, ‘certain’, ‘uncertain’, etc., are all ambiguous. There’s a sense of ‘confidence’ where it’s simply credence, but there’s another sense of ‘confidence’ that has more to do with inner feelings. I’m inclined to think that ‘confidence’, ‘doubt’, etc. are ambiguous in this way. If so, then (Certainty) doesn’t rule out the compatibility of belief with a kind of doubt.

There is an extensive discussion in the literature on assertion, knowledge attributions, contextualism, and subject sensitive invariantism, about whether a speaker can sincerely and felicitously assert ‘The broken egg will stay there on the floor’ in a context in which the possibility of the egg reconstituting itself has been acknowledged to be real. That discussion validates what I just claimed: it may be that one can’t make the assertion while simultaneously acknowledging the counterpossibility. It’s far from obvious that one can.

I’m grateful to an anonymous referee for pressing this objection.

Clarke’s view also involves accepting (Certainty). Leitgeb (2014) has developed a model of belief that doesn’t imply (Certainty), but which also claims that what one believes depends on which possibilities one is aware of. Like Clarke and Leitgeb, I accept a version of subject-sensitive invariantism about belief.

Note that the above argument’s conclusion is not that (Certainty) implies that beliefs are simply indefeasible. At best it only shows that it follows from (Certainty) that beliefs cannot be defeated by evidence in which the subject had a positive prior credence. From the point of view of (Certainty), this would only include evidence compatible with the subject’s prior beliefs. However, I’m going to proceed by taking it for granted that a belief’s justification can be defeated by evidence that doesn’t contradict any of the subject’s prior beliefs.

In addition to Weisberg’s argument, Arntzenius (2003) gives some cases where it seems one shouldn’t update one’s credences by conditionalization.

We classically conditionalize on evidence e by setting our new credences \(P_e(p)\) to the values of our prior conditional credences P(p|e). In Jeffrey conditionalization, one’s credences over a partition \(e_i\) of the probability space change from \(P_{old}(e_i)\) to \(P_{new}(e_i)\), and then one sets one’s new credence in each proposition p to \(\sum _i P_{old}(p|e_i)P_{new}(e_i)\). Classical conditionalization is a limiting case of Jeffrey conditionalization, wherein \(P_{new}(e_i) = 1\) for one cell \(e_i\) of the partition (the evidence e) and 0 for every other cell.
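The two update rules can be compared in a minimal sketch. The function name `jeffrey_update`, the two-cell partition, and the prior values are all illustrative assumptions chosen to keep the arithmetic easy to follow.

```python
from fractions import Fraction as F


def jeffrey_update(joint, new_cell_probs):
    """Return the new credence in p after a Jeffrey update.

    joint maps (cell, p_is_true) to a prior probability; new_cell_probs
    maps each partition cell to its new probability P_new(e_i).
    """
    new_credence = F(0)
    for cell, new_weight in new_cell_probs.items():
        old_cell = joint[(cell, True)] + joint[(cell, False)]
        if old_cell == 0:
            # P_old(p | e_i) is undefined, so the cell cannot gain weight.
            if new_weight != 0:
                raise ValueError("cannot shift credence to a zero-prior cell")
            continue
        new_credence += joint[(cell, True)] / old_cell * new_weight
    return new_credence


# Hypothetical prior: P_old(e1) = 2/5 with P_old(p|e1) = 3/4,
# and P_old(e2) = 3/5 with P_old(p|e2) = 1/3.
joint = {
    ("e1", True): F(3, 10), ("e1", False): F(1, 10),
    ("e2", True): F(1, 5), ("e2", False): F(2, 5),
}

# Jeffrey update: credence in e1 rises to 4/5 without reaching 1.
jeffrey = jeffrey_update(joint, {"e1": F(4, 5), "e2": F(1, 5)})

# Limiting case: all credence moves to e1, which matches P_old(p|e1).
classical = jeffrey_update(joint, {"e1": F(1), "e2": F(0)})

print(jeffrey, classical)
```

Exact fractions are used so that the limiting case can be compared with the prior conditional credence without rounding error.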

See Weisberg (2009). As presentation of his argument for this conclusion would take up too much space, I refer the reader to his paper.

I am grateful for the feedback I received on this paper from audiences at the Universities of Aberdeen, St Andrews, Copenhagen, Nancy, Edinburgh, Stirling, Texas-San Antonio, Konstanz, and at Queen’s University Belfast. I especially would like to thank Mike Almeida, Johan van Benthem, Tony Brueckner, Mikkel Gerken, Hans Kamp, Aidan McGlynn, Peter Milne, Dilip Ninan, Crispin Wright, and Elia Zardini. I was able to develop the ideas in this paper while a postdoctoral fellow of the AHRC-funded Basic Knowledge Project (2007–2012), led by Crispin Wright. I am grateful to Professor Wright, the other members of the Project, and to the members of the Arché Research Centre (St Andrews) and the Northern Institute of Philosophy (Aberdeen) for their collegiality and support.

For a nice summary of many of the objections to the material conditional analysis of indicative conditionals, see Abbott (2005).

References

Abbott, B. (2005). Some remarks on indicative conditionals. In K. Watanabe & R. B. Young (Eds.), Proceedings from semantics and linguistic theory (SALT). Ithaca, NY: CLC Publications.

Adams, E. (1965). The logic of conditionals. Inquiry, 8, 166–197.

Arlo-Costa, H. (2010). Review of F. Huber and C. Schmidt-Petri (eds.), Degrees of Belief. Notre Dame Philosophical Reviews, 2010.

Arntzenius, F. (2003). Some problems for conditionalization and reflection. The Journal of Philosophy, 100(7), 356–370.

Bradley, D., & Leitgeb, H. (2006). When betting odds and credences come apart: More worries for Dutch book arguments. Analysis, 66, 119–127.

Clarke, R. (2013). Belief is credence one (in context). Philosophers’ Imprint, 13(11), 1–18.

de Finetti, B. (1990). Theory of probability. New York: Wiley.

DeRose, K. (1996). Knowledge, assertion and lotteries. Australasian Journal of Philosophy, 74(4), 568–580.

Dodd, D. (2011). Against fallibilism. Australasian Journal of Philosophy, 89, 665–685.

Douven, I. (2002). A new solution to the paradoxes of rational acceptability. British Journal for the Philosophy of Science, 53(3), 391–410.

Edgington, D. (1995). On conditionals. Mind, 104, 235–329.

Eriksson, L., & Hájek, A. (2007). What are degrees of belief? Studia Logica, 86, 183–213.

Fantl, J., & McGrath, M. (2009). Knowledge in an uncertain world. Oxford: Oxford University Press.

Frege, G. (1918). Der Gedanke: Eine logische Untersuchung. Beiträge zur Philosophie des deutschen Idealismus, 1, 58–77.

Hawthorne, J. (2004). Knowledge and Lotteries. Oxford: Oxford University Press.

Holton, R. (2014). Intention as a model for belief. In M. Vargas & G. Yaffe (Eds.), Rational and social agency: Essays on the philosophy of Michael Bratman. Oxford: Oxford University Press.

Jackson, F. (1979). On assertion and indicative conditionals. Philosophical Review, 88(4), 565–589.

Jeffrey, R. (1964). If. Journal of Philosophy, 61, 702–703.

Kripke, S. (1979). A puzzle about belief. In N. Salmon & S. Soames (Eds.), Propositions and attitudes (pp. 102–149). Oxford: Oxford University Press.

Kyburg, H. E. (1961). Probability and the logic of rational belief. Middleton, CT: Wesleyan University Press.

Leitgeb, H. (2014). The stability theory of belief. Philosophical Review, 123(2), 173–204.

Levi, I. (1991). The fixation of belief and its undoing: Changing beliefs through inquiry. Cambridge: Cambridge University Press.

Levi, I. (2004). Mild contraction: Evaluating loss of information due to loss of belief. Oxford: Oxford University Press.

Lewis, D. (1976). Probabilities of conditionals and conditional probabilities. Philosophical Review, 85, 297–315.

McGee, V. (1985). A counterexample to modus ponens. Journal of Philosophy, 82, 462–471.

Pollock, J. (1995). Cognitive carpentry: A blueprint for how to build a person. Cambridge: MIT Press.

Quine, W. (1951). Two dogmas of empiricism. Philosophical Review, 60, 20–43.

Ramsey, F. P. (1964). Truth and probability. In H. E. Kyburg & H. E. Smokler (Eds.), Studies in subjective probability (pp. 61–92). New York: Wiley.

Rieger, A. (2006). A simple theory of conditionals. Analysis, 66(3), 233–240.

Ryan, S. (1996). The epistemic virtues of consistency. Synthese, 109, 121–141.

Stalnaker, R. (1970). Probability and conditionals. Philosophy of Science, 37(1), 64–80.

Stalnaker, R. (1975). Indicative conditionals. Philosophia, 5, 269–286.

Stalnaker, R. (1978). Assertion. In P. Cole (Ed.), Syntax and semantics 9. New York: Academic Press.

Stalnaker, R. (1998). On the representation of context. Journal of Logic, Language and Information, 7(1), 3–19.

Strevens, M. (1999). Objective probability as a guide to the world. Philosophical Studies, 95, 243–275.

Weisberg, J. (2009). Commutativity or holism? A dilemma for conditionalizers. The British Journal for the Philosophy of Science, 60, 793–812.

Williamson, T. (2000). Knowledge and its limits. Oxford: Oxford University Press.

Yalcin, S. (November 2008). Probability operators. Unpublished lecture at the Arché Research Centre: University of St. Andrews.

Yalcin, S. (2012). Bayesian expressivism. Proceedings of the Aristotelian Society, 112(2), 123–160.

Appendix 1: Arguments 1–3

For Arguments 1–3, assume that p and q aren’t logically equivalent, and that ‘probably’ is used to express the fact that the speaker has a credence greater than 0.5 in the relevant proposition.

Recall my notion of ‘acceptance’: one accepts (P1) in Arguments 1–3 iff one believes it, and one accepts (P2) and (C) iff one has a credence greater than 0.5 in the relevant proposition. In Sect. 2 I claimed that

- (Commitment) In accepting Arguments 1–3’s premises, one is thereby committed to accepting their conclusions, on pain of inconsistency.

In other words, the following set of attitudes is jointly inconsistent: acceptance of (P1) in one of Arguments 1–3, acceptance of (P2) in the same Argument, and rejection of (C) in the same Argument.

In this appendix, I show with respect to Arguments 2 and 3 that (Commitment) is true iff believing their (P1) involves having a credence of 1 in (P1) (Appendices 1.1 and 1.2). Because of a lack of consensus about how to think about the probability of indicative conditionals, I won’t be able to show the same thing for Argument 1. In Appendix 1.3 I show that it holds if the probability of an indicative conditional \(p \rightarrow q\) is the probability of q conditional on p. For those who deny that the probability of a conditional is a conditional probability, I provide some evidence that (Commitment) holds only if believing (P1) involves having a credence of 1 in it.

In the rest of this appendix, let’s call the premise (P1), (P2), and the conclusion (C) of Argument x ‘(P1)\(_x\)’, ‘(P2)\(_x\)’, and ‘(C)\(_x\)’. Thus, for instance, premise (P2) of Argument 2 will be referred to as ‘(P2)\(_2\)’ and the conclusion of Argument 1 will be called ‘(C)\(_1\)’.

1.1 Appendix 1.1: (Commitment) holds for Argument 2 iff accepting (P1)\(_2\) means having a credence of 1 in (P1)\(_2\).

First suppose that accepting (P1)\(_2\) means having a credence of 1 in (P1)\(_2\): \(P(p \vee q) = 1\). Then \( P(\lnot p \& \lnot q) = 0\). Since the acceptance of (P2)\(_2\) (i.e., \(P(\lnot p) > 0.5\)) is equivalent to \( P(\lnot p \& q) + P(\lnot p \& \lnot q) > 0.5\), it follows that \( P(\lnot p \& q) > 0.5\), and thus that \(P(q) > 0.5\), which means that (C)\(_2\) is accepted. Therefore, if (P1)\(_2\) and (P2)\(_2\) are both accepted, and if accepting (P1)\(_2\) means having a credence of 1 in (P1)\(_2\), it follows that (C)\(_2\) is accepted. (Commitment) holds for Argument 2 if accepting (P1)\(_2\) means having a credence of 1 in (P1)\(_2\).
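The derivation just given can be sanity-checked numerically. The sketch below is not a proof: it enumerates a finite grid of distributions with \( P(\lnot p \& \lnot q) = 0\) and confirms that none of them accepts (P2)\(_2\) while failing to accept (C)\(_2\). The function name and the grid resolution are illustrative assumptions.

```python
from fractions import Fraction as F


def commitment_holds_on_grid(steps=20):
    """Search grid distributions with P(not-p & not-q) = 0 for a counterexample.

    Returns True when no distribution on the grid accepts (P2) of
    Argument 2 while failing to accept its conclusion.
    """
    half = F(1, 2)
    for i in range(steps + 1):
        for j in range(steps + 1 - i):
            p_and_q = F(i, steps)
            p_and_not_q = F(j, steps)
            # The remaining credence goes to (not-p & q), since P(p or q) = 1.
            not_p_and_q = 1 - p_and_q - p_and_not_q
            accepts_p2 = not_p_and_q > half  # P(not-p) > 0.5
            accepts_c = p_and_q + not_p_and_q > half  # P(q) > 0.5
            if accepts_p2 and not accepts_c:
                return False
    return True


print(commitment_holds_on_grid())
```

Raising `steps` makes the grid finer at the cost of a longer search. The algebraic argument in the text is what establishes the general claim.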

Suppose instead that although (P1)\(_2\) is accepted, \(P(p \vee q) < 1\). Given this supposition, it’s consistent with the fact that \(P(\lnot p) > 0.5\) [(P2)\(_2\) is accepted], that \(P(q) < 0.5\) [(C)\(_2\) isn’t accepted]. I think the reader will be able to see this through the following example. Say \(P(p \vee q)\) = 0.99. As the reader can verify, (P2)\(_2\) will be accepted while (C)\(_2\) won’t be for the following probability distribution:

- \( P(\lnot p \& \lnot q) = 0.01\) [equivalent to our supposition that \(P(p \vee q)\) = 0.99]
- \( P(\lnot p \& q) = 0.499\) [\(P(\lnot p) = 0.01 + 0.499 = 0.509 > 0.5\)]
- \( P(p \& \lnot q) = 0.4909\)
- \( P(p \& q) = 0.0001\) [\(P(q) = 0.499 + 0.0001 = 0.4991 < 0.5\)]
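Readers who prefer to check the arithmetic mechanically can use a sketch along the following lines. The four credences are copied from the distribution above; the dictionary layout and the helper `prob` are illustrative assumptions.

```python
from fractions import Fraction as F

# Credences over the four (p, q) truth-value combinations, copied from the
# distribution above. Exact fractions avoid floating-point rounding.
dist = {
    (False, False): F("0.01"),
    (False, True): F("0.499"),
    (True, False): F("0.4909"),
    (True, True): F("0.0001"),
}


def prob(event):
    """Sum the credence of every combination in which `event` holds."""
    return sum(w for (p, q), w in dist.items() if event(p, q))


p_or_q = prob(lambda p, q: p or q)  # credence in (P1) of Argument 2
not_p = prob(lambda p, q: not p)    # (P2) is accepted iff this exceeds 0.5
just_q = prob(lambda p, q: q)       # (C) is accepted iff this exceeds 0.5

half = F(1, 2)
print(p_or_q, not_p > half, just_q > half)
```

The credences sum to 1, the credence in \(p \vee q\) is 0.99, (P2)\(_2\) is accepted, and (C)\(_2\) is not.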

(Commitment) holds for Argument 2 only if accepting/believing (P1)\(_2\) involves having a credence of 1 in (P1)\(_2\). Combine this fact with what was demonstrated in the first paragraph and we have: (Commitment) for Argument 2 is true iff accepting/believing (P1)\(_2\) requires having a credence of 1 in (P1)\(_2\).

1.2 Appendix 1.2: (Commitment) holds for Argument 3 iff accepting (P1)\(_3\) means having a credence of 1 in (P1)\(_3\).

Recall that (P1)\(_3\) is p, (P2)\(_3\) is \(P(q) > 0.5\) [‘Probably q’] and (C)\(_3\) is \( P(p \& q) > 0.5\) [‘Probably (p & q)’].

Say \(P(p) = 1\) and \(P(q) > 0.5\). Then \( P(q) = P(p \& q) > 0.5\). Therefore, if accepting/believing p (i.e., (P1)\(_3\)) involves having a credence of 1 in p, then (Commitment) holds for Argument 3.

On the other hand, if accepting/believing (P1)\(_3\) doesn’t require having a credence of 1 in (P1)\(_3\), (Commitment) doesn’t hold for Argument 3. I think the reader will see that this is so by considering the following probability distribution.

- \( P(\lnot p \& \lnot q) = 0.002\)
- \( P(\lnot p \& q) = 0.008\)
- \( P(p \& \lnot q) = 0.495\)
- \( P(p \& q) = 0.495\)

In this example, \(P(p) = 0.495 + 0.495 = 0.99\), \(P(q) = 0.495 + 0.008 = 0.503 > 0.5\) (so (P2)\(_3\) is accepted), but since \( P(p \& q) < 0.5\), (C)\(_3\) isn’t accepted.
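The same kind of mechanical check works for Argument 3. The credences below are copied from the distribution above; the helper `total` and the variable names are illustrative assumptions.

```python
from fractions import Fraction as F

# Credences over the four (p, q) truth-value combinations, copied from the
# distribution above.
credence = {
    (False, False): F("0.002"),
    (False, True): F("0.008"),
    (True, False): F("0.495"),
    (True, True): F("0.495"),
}


def total(condition):
    """Sum the credence of every combination satisfying `condition`."""
    return sum(w for (p, q), w in credence.items() if condition(p, q))


prob_p = total(lambda p, q: p)              # credence in (P1) of Argument 3
prob_q = total(lambda p, q: q)              # (P2) is accepted iff this exceeds 0.5
prob_p_and_q = total(lambda p, q: p and q)  # (C) is accepted iff this exceeds 0.5

half = F(1, 2)
p2_accepted = prob_q > half
c_accepted = prob_p_and_q > half
print(prob_p, p2_accepted, c_accepted)
```

With a credence of 0.99 in p, the second premise is accepted while the conclusion is not, which is the failure of (Commitment) described in the text.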

(Commitment) holds for Argument 3 if accepting/believing p involves having a credence of 1 in p, and it holds only if accepting/believing p involves having a credence of 1 in p.

1.3 Appendix 1.3: Argument 1

Recall that (P1)\(_1\) is if p then q [\(p \rightarrow q\)], (P2)\(_1\) is \(P(p) > 0.5\) [‘Probably p’], and (C)\(_1\) is \(P(q) > 0.5\) [‘Probably q’].

For Arguments 2 and 3 I argued directly that (Commitment) was true for them iff in accepting/believing each argument’s (P1), one has a credence of 1 in (P1). The problem with arguing in the analogous way for Argument 1 is that in doing so I would have to make an assumption about what the probability of (P1)\(_1\) is. But (P1)\(_1\) is an indicative conditional, and it’s controversial what the probability of an indicative conditional is.