Abstract

A hybrid algorithm based on the sparrow search algorithm (SSA) and whale optimization algorithm (WOA) is proposed to address numerical and engineering optimization problems. The hybrid algorithm has enhanced global search ability through the WOA's improved spiral update mechanism, so that it does not easily fall into the local optimum. Further, using the guard mechanism of SSA introduced by the Levy flight, it has a strong ability to escape from the local optimum. The performance of the improved sparrow search whale optimization algorithm (ISSWOA) was investigated using 23 benchmark functions (classified into standard unimodal, multimodal, and fixed-dimension multimodal benchmark functions) and compared with similar algorithms. The experimental results indicated that ISSWOA was significantly superior to other algorithms on most benchmark functions. To evaluate the performance of ISSWOA in complex engineering problems, seven engineering design problems and a large electrical engineering problem were solved using ISSWOA. Compared with other algorithms, the results showed that ISSWOA had high potential for practical engineering problems.

1 Introduction

Optimization problems are common in real-world applications, especially in physics, chemistry, and biology [1]. Typically, a fast and effective method is required to find the optimal solution to an optimization problem. Metaheuristic algorithms are among the most effective methods for this purpose.

Various metaheuristic algorithms exist. These can be classified into evolution-based algorithms (which imitate the law of the survival of the fittest), swarm-based algorithms (which simulate the cooperation and communication between animals), human-based algorithms (which imitate various human social behaviors), and physics-based algorithms (which imitate physical or chemical laws). An example of each type of algorithm is shown in Table 1.

Each metaheuristic algorithm has unique characteristics, and no algorithm outperforms others on all criteria. Different algorithms can be combined into a hybrid algorithm to leverage their characteristics, overcoming individual shortcomings and improving performance. Hybrid algorithms have been used in many studies. For example, Kumar et al. [22] proposed a multi-objective hybrid heat transfer search and passing vehicle search optimizer (MOHHTS–PVS) in which heat transfer search (HTS) acts as the main engine and passing vehicle search (PVS) is added as an auxiliary stage to enhance performance when applied to large engineering design problems. Yildiz et al. [23] proposed the hybrid Taguchi salp swarm algorithm-Nelder–Mead (HTSSA-NM) and the hybrid artificial hummingbird algorithm and simulated annealing (HAHA-SA). HTSSA-NM used Nelder–Mead (NM) to improve the local search ability of the hybrid Taguchi salp swarm algorithm (HTSSA) in order to optimize the structure and shape of an automobile brake pedal. HAHA-SA used simulated annealing (SA) to improve the performance of the artificial hummingbird algorithm (AHA) in solving constrained mechanical engineering problems. Li et al. [24] proposed particle swarm optimization-simulated annealing (PSO-SA) to perform seismic inversion in anisotropic media. PSO-SA combined SA with particle swarm optimization (PSO) and used the SA temperature to control whether aggregated particles jump out of local optima, thereby improving the local search ability of PSO. Mafarja et al. [25] proposed the whale optimization algorithm and simulated annealing (WOASA) to solve the problem of feature selection. WOASA embedded SA in the whale optimization algorithm (WOA) to enhance exploitation by searching the most promising regions located by WOA. Laskar et al. [26] proposed hybrid whale-particle swarm optimization (HWPSO), which combined PSO and WOA, to solve electronic design optimization problems. HWPSO introduced “forced WOA” and “capping”; the former improves local optima avoidance in the exploration phase of PSO, and the latter accelerates the convergence to the global optimum. Han et al. [27] proposed the moth search-fireworks algorithm (MSFWA), which combined the moth search algorithm (MS) and fireworks algorithm (FA) to solve engineering design problems. MSFWA introduced the explosion and mutation operators from FA into MS to strengthen the exploitation capability of MS. Shehab et al. [28] proposed the cuckoo search and bat algorithm (CSBA), which combined the cuckoo search algorithm (CSA) and bat algorithm (BA) to solve numerical optimization problems. CSBA exploited the advantages of BA to improve the convergence ability of CSA.

SSA and WOA are swarm-based metaheuristic algorithms that imitate the predatory behavior of sparrows and whales, respectively. SSA [29] includes a step to help the algorithm escape local optima, which many other algorithms do not have; however, the effect is not obvious, and its global search ability is weak. WOA [30] has a strong local search ability owing to its spiral update mechanism but can easily fall into the local optimum because of its weak global search ability. The purpose of this research was to create a hybrid algorithm that could harness the strengths of each component to overcome these limitations. Accordingly, we improved WOA's spiral update mechanism to enhance its global search capability and used the Levy flight mechanism to improve SSA's ability to escape from the local optimum. Then, we combined the two improved algorithms into a hybrid algorithm that has strong global and local search capabilities and the ability to escape from the local optimum.

To evaluate the performance of ISSWOA, the hybrid algorithm was tested on the standard unimodal benchmark functions, standard multimodal benchmark functions, and standard fixed-dimensional multimodal benchmark functions. In addition, ISSWOA was applied to seven engineering design problems and an electrical engineering problem. The results showed that ISSWOA had strong optimization ability and was a competitive algorithm for solving practical problems.

The remainder of this paper is organized as follows. Sections 2 and 3 describe SSA and WOA, respectively. Section 4 details the proposed ISSWOA. The application of the proposed algorithm to 23 benchmark functions and engineering problems is reported in Sects. 5 and 6, respectively. Finally, Sect. 7 summarizes the paper and outlines future research directions.

2 SSA

SSA [31] is a metaheuristic optimization algorithm based on the sparrows’ foraging and antipredation behaviors and describes a discoverer–follower model with an awareness mechanism. In SSA, sparrows with high fitness in the population are regarded as producers, while others are considered as scroungers, and a proportion of individuals in the population are selected as guards for detection of threats.

2.1 Producers

After initializing the sparrow population, all sparrows are ranked by their fitness, and some of the individuals with better fitness are selected as producers. The producers’ iterative equation is given by

where t is the current iteration, \(\overrightarrow {{X_{i} }} \left( t \right)\) is the current location of the ith sparrow, Maxiter is the maximum number of iterations, α is a random number in (0,1], R is a random number in [0,1], ST in [0.5,1] is a safety threshold, Q is a random number drawn from a normal distribution, and L is a 1 × D matrix of ones.
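The producer update can be sketched in a few lines of Python. This is only an illustration under an assumption: it uses the commonly published SSA producer rule, in which the position is multiplied by exp(−i/(α·Maxiter)) when R < ST and shifted by Q·L otherwise. The function name `update_producer` and its arguments are hypothetical.

```python
import math
import random

def update_producer(x, i, max_iter, st, rng=random):
    """One producer move for the sparrow with 1-based rank i (assumed standard SSA rule)."""
    r = rng.random()                      # alarm value R in [0, 1)
    if r < st:
        # No danger detected: shrink the position by exp(-i / (alpha * Maxiter)).
        alpha = 1.0 - rng.random()        # alpha in (0, 1]
        scale = math.exp(-i / (alpha * max_iter))
        return [xj * scale for xj in x]
    # Danger detected: shift every coordinate by the same normal draw (Q * L).
    q = rng.gauss(0.0, 1.0)
    return [xj + q for xj in x]
```

Under this assumed rule, the safe branch always moves a producer toward the origin, while the alarm branch translates it by a common offset in every dimension.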

2.2 Scrounger

After the producers update their positions, the other individuals are selected as scroungers, and their position update equation is given by

where \(\overrightarrow {{X_{{{\text{worst}}}} }} \left( t \right)\) is the worst position globally, n is the number of sparrows, \(\overrightarrow {{X_{{\text{P}}} }} \left( t \right)\) is the best position of producers, and A is a 1 × D matrix with each element randomly assigned the value of 1 or − 1.
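A minimal sketch of the scrounger update follows, assuming the commonly published SSA rule: scroungers ranked in the worse half (i > n/2) jump to Q·exp((X_worst − X_i)/i²), and the others move around the best producer by |X_i − X_P|·A⁺·L with A⁺ = Aᵀ(AAᵀ)⁻¹. The function name and argument names are hypothetical.

```python
import math
import random

def update_scrounger(x, i, n, x_best_producer, x_worst, rng=random):
    """One scrounger move for the sparrow with 1-based rank i (assumed standard SSA rule)."""
    d = len(x)
    if i > n / 2:
        # Poorly ranked scroungers fly elsewhere; large gaps can overflow math.exp.
        q = rng.gauss(0.0, 1.0)
        return [q * math.exp((w - xj) / i**2) for xj, w in zip(x, x_worst)]
    # A is a row of +/-1 entries, so A+ = A^T (A A^T)^-1 reduces to A^T / d.
    a = [rng.choice((-1.0, 1.0)) for _ in range(d)]
    step = sum(abs(xj - pj) * aj for xj, pj, aj in zip(x, x_best_producer, a)) / d
    # The scalar step is broadcast over all dimensions (multiplication by L).
    return [pj + step for pj in x_best_producer]
```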

2.3 Guard

After the positions of producers and scroungers are updated, a proportion of sparrows are selected from the population as guards, and their update equation is given by

where \(\overrightarrow {{X_{{{\text{best}}}} }} \left( t \right)\) is the current optimal position, β is a random number that controls the step size and obeys a Gaussian distribution, fi is the fitness of the ith sparrow, fg is the optimal fitness, K is a random number in (− 1,1), and fw is the worst fitness.
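The guard update can be illustrated as follows, again assuming the commonly published SSA rule: a guard whose fitness is worse than the best (fi > fg) moves to X_best + β·|X_i − X_best|, and a guard already at the best fitness moves by K·|X_i − X_worst|/((fi − fw) + ε). The small constant `eps` that prevents division by zero is an assumption and is not defined in the text above; the function name is hypothetical.

```python
import random

def update_guard(x, f_i, x_best, f_g, x_worst, f_w, eps=1e-50, rng=random):
    """One guard move under the assumed standard SSA rule (minimization)."""
    if f_i > f_g:
        # Guard at the edge of the group: move toward the current best position.
        beta = rng.gauss(0.0, 1.0)
        return [b + beta * abs(xj - b) for xj, b in zip(x, x_best)]
    # Guard with the best fitness (f_i == f_g): step relative to the worst position.
    k = rng.uniform(-1.0, 1.0)
    return [xj + k * abs(xj - w) / ((f_i - f_w) + eps) for xj, w in zip(x, x_worst)]
```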

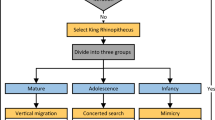

The pseudocode and flowchart of SSA are shown in Figs. 1 and 2, respectively.

3 WOA

WOA [32] is a metaheuristic optimization algorithm that mimics the hunting behavior of humpback whales. Humpback whales hunt using a bubble-net strategy. In WOA, this strategy is mathematically modeled as detailed below.

3.1 Encircling prey

For encircling prey, the whale herd moves iteratively toward the current optimal position according to the following iterative equation:

where \(\overrightarrow {{A{ }}}\) and \(\overrightarrow {{C{ }}}\) are vector coefficients, \(\overrightarrow {{X^{*} }} \left( t \right)\) is the current optimal position, \(\overrightarrow {{X{ }}} \left( t \right)\) is the current position of the whale, t is the current iteration, and \(\overrightarrow {{A{ }}}\) and \(\overrightarrow {{C{ }}}\) are expressed as

where \(\overrightarrow {{r{ }}}\) is a random vector with values in [0,1] and \(\overrightarrow {{a{ }}}\) decreases from 2 to 0 over the iterations as follows:

with Maxiter being the maximum number of iterations.
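The encircling step can be sketched as below. The code assumes the standard WOA formulas, which agree with the symbols defined above: a = 2 − 2t/Maxiter, A = 2a·r − a, C = 2r, D = |C·X* − X|, and X(t+1) = X* − A·D. The function names are hypothetical.

```python
import random

def woa_coefficients(t, max_iter, dim, rng=random):
    """Return a and the coefficient vectors A and C for iteration t."""
    a = 2.0 - 2.0 * t / max_iter                          # linear decrease from 2 to 0
    A = [2.0 * a * rng.random() - a for _ in range(dim)]  # each entry lies in [-a, a]
    C = [2.0 * rng.random() for _ in range(dim)]          # each entry lies in [0, 2)
    return a, A, C

def encircle(x, x_star, A, C):
    """Move a whale relative to the current best position x_star."""
    return [s - Aj * abs(Cj * s - xj) for xj, s, Aj, Cj in zip(x, x_star, A, C)]
```

At the last iteration a is 0, so A vanishes and the whale lands exactly on the current best position.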

3.2 Bubble-net hunting

The bubble-net strategy of whales adopts one of two behaviors: encircling the prey or spiral position updating.

Encircling the prey is achieved by decreasing \(\overrightarrow {a }\) linearly from 2 to 0 over the iterative process, which gradually reduces the fluctuation range of \(\overrightarrow {A }\). Because \(\overrightarrow {r }\) takes random values in [0,1], \(\overrightarrow {A }\) takes random values in \(\left[ { - a,a} \right]\). Hence, whales can appear anywhere at random between their current position and the current best position. For spiral position updating, a spiral equation between the position of the current whale and that of the prey is created to model the helix-shaped movement of whales:

where b is a constant with value 1 to define the helix shape and l is a random number in [− 1,1].

Whales randomly choose one hunting behavior with equal probability as follows:

where p is a random number in [0,1].
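The two bubble-net behaviors and the equal-probability choice between them can be sketched as follows. The spiral form D'·e^{bl}·cos(2πl) + X*, with D' = |X* − X|, is the standard WOA expression and is an assumption here; the function names are hypothetical.

```python
import math
import random

def spiral_update(x, x_star, b=1.0, rng=random):
    """Helix-shaped move around the current best position x_star."""
    l = rng.uniform(-1.0, 1.0)
    factor = math.exp(b * l) * math.cos(2.0 * math.pi * l)
    return [abs(s - xj) * factor + s for xj, s in zip(x, x_star)]

def bubble_net_step(x, x_star, A, C, rng=random):
    """Pick encircling or spiral updating with probability 0.5 each."""
    p = rng.random()
    if p < 0.5:
        # Shrinking encirclement around x_star.
        return [s - Aj * abs(Cj * s - xj) for xj, s, Aj, Cj in zip(x, x_star, A, C)]
    return spiral_update(x, x_star, rng=rng)
```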

3.3 Search for prey

To locate prey, whales move toward random positions rather than toward the optimal position. The condition for whales to perform this behavior is \(\left| {\overrightarrow {{A{ }}} } \right| > 1\), and the behavior is described by

where \(\overrightarrow {{X_{{{\text{rand}}}} }} \left( t \right)\) is the position of a randomly chosen whale.
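A short sketch of the exploration move is given below, assuming the standard WOA form D = |C·X_rand − X| and X(t+1) = X_rand − A·D. The function name is hypothetical.

```python
import random

def search_for_prey(x, population, A, C, rng=random):
    """Move relative to a randomly chosen whale instead of the best one."""
    x_rand = rng.choice(population)
    return [r - Aj * abs(Cj * r - xj) for xj, r, Aj, Cj in zip(x, x_rand, A, C)]
```

The only difference from encircling is the reference point, which is why this move is reserved for |A| > 1, where the step is large enough to carry a whale away from the reference.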

The pseudocode and flowchart of WOA are shown in Figs. 3 and 4, respectively.

4 Proposed ISSWOA

4.1 Improved SSA

Levy flight [33] uses Levy-distributed random numbers to control the step size of individuals as they move randomly. Fig. 5 compares 100 random numbers generated by K, which controls the step of the guard in the original SSA, with 100 random numbers generated by Levy flight. Unlike those generated by K, the Levy random numbers are not all in [− 1,1]; a small fraction fall outside this range. When Levy random numbers control the step size, individuals take small steps with high probability and large steps with low probability. This strengthens the ability of SSA to escape from the local optimum while preserving the local search ability of the algorithm. Thus, Eq. (3) is rewritten as

where β is 1.5, and u and v are random numbers drawn from normal distributions with zero mean and variances \(\sigma_{u}^{2}\) and \(\sigma_{v}^{2}\), respectively.
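A Levy step with β = 1.5 can be generated as in the sketch below. It assumes Mantegna's method, in which the step is u/|v|^{1/β}, σ_v = 1, and σ_u = [Γ(1+β)·sin(πβ/2) / (Γ((1+β)/2)·β·2^{(β−1)/2})]^{1/β}. These specific expressions are an assumption, and the function names are hypothetical.

```python
import math
import random

def levy_sigma_u(beta=1.5):
    """Standard deviation of u in Mantegna's method (sigma_v is taken as 1)."""
    num = math.gamma(1.0 + beta) * math.sin(math.pi * beta / 2.0)
    den = math.gamma((1.0 + beta) / 2.0) * beta * 2.0 ** ((beta - 1.0) / 2.0)
    return (num / den) ** (1.0 / beta)

def levy_step(beta=1.5, rng=random):
    """Draw one Levy-distributed step size."""
    u = rng.gauss(0.0, levy_sigma_u(beta))
    v = rng.gauss(0.0, 1.0)
    while v == 0.0:                      # avoid division by zero
        v = rng.gauss(0.0, 1.0)
    return u / abs(v) ** (1.0 / beta)
```

For β = 1.5, `levy_sigma_u` evaluates to roughly 0.697. Most draws from `levy_step` fall inside [−1, 1], with occasional much larger values, which matches the small-step and large-step behavior described above.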

4.2 Improved WOA

Spiral updating in WOA is a search method centered at the current optimal position. When the whales are concentrated at a later optimization stage, spiral updating improves the local search ability of WOA. However, the global search capability of WOA is weak. To address this weakness, we define the following spiral updating equation:

where \(\overrightarrow {{X_{{{\text{mean}}}} }} \left( t \right)\) is the average position of the current population, \(\overrightarrow {{X_{i + 1} }} \left( t \right)\) is the position of the next whale to be updated, R is a random number in [0.5,2], rand is a random number in [0,1], and c is a random number in [5, 10].

The new spiral updating [34] is centered at the current whale and carries out a spiral search outward to determine the existence of a better surrounding position. This update is adopted before the algorithm completes two-thirds of all the iterations. Then, Eq. (9) is adopted. Hence, the convergence accuracy is ensured by updating the position following Eq. (9) at a later stage, and the global search ability is improved by updating the position according to Eq. (15).

4.3 ISSWOA

The proposed ISSWOA combines our improved versions of WOA and SSA. Combining the two algorithms makes full use of their advantages while overcoming the shortcomings of the original algorithms, so that the optimal solution can be found faster and more effectively.

The pseudocode and flowchart of the proposed ISSWOA are shown in Figs. 6 and 7, respectively. ISSWOA first gives each individual a random position within the search space to create an initial population. Then, it orders the initial population by fitness and chooses a proportion of the individuals with better fitness as producers and the remaining individuals as scroungers. After the producer updates its position, the scrounger randomly chooses to update its position according to Eq. (2) or the whale’s encircling behavior. After the producer’s position is updated, the population uses spiral updating to find a better position near itself or the optimal solution. Finally, some individuals are selected as guards, and their positions are updated according to Eq. (14). The iterative process is repeated until the optimization criteria are met.

When the population updates its position by spiral updating, the position is updated by Eq. (15) if the current iteration has not yet reached two-thirds of the maximum number of iterations. Otherwise, the position is updated by Eq. (9). If the new position is better than the previous one, the position is updated; otherwise, the previous position is maintained.
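The switching rule and the greedy acceptance described here can be sketched as follows. The sketch assumes a minimization problem and follows Sect. 4.2 in using the outward spiral during the first two-thirds of the iterations and the original WOA spiral afterwards. The function names and the labels "outward" and "classic" are hypothetical.

```python
def choose_spiral(t, max_iter):
    """Select the spiral rule for iteration t (integer arithmetic avoids rounding)."""
    return "outward" if 3 * t < 2 * max_iter else "classic"

def greedy_accept(x_old, x_new, fitness):
    """Keep the candidate only if it improves the fitness (minimization assumed)."""
    return x_new if fitness(x_new) < fitness(x_old) else x_old
```

Greedy acceptance means a spiral move can never worsen an individual, so the outward search adds exploration without discarding good positions.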

The improved spiral updating of WOA strengthens the global search ability of the individuals. Combined with the alert mechanism of SSA, it makes ISSWOA less likely to fall into a local optimum and improves the convergence accuracy.

5 Performance evaluation of ISSWOA on benchmark functions

We evaluated results for 100 independent runs of ISSWOA on different benchmark functions and compared it with similar algorithms to verify its superiority.

5.1 Algorithms for comparison

In this research, we compared ISSWOA with the original WOA, SSA, improved SSA (ISSA), and improved WOA (IWOA) to verify the improvement in the algorithm. Then we compared ISSWOA with snake optimization (SO), the pelican optimization algorithm (POA), the grasshopper optimization algorithm (GOA), improved gray wolf optimization (I-GWO) [35], and the hybrid particle swarm optimization and butterfly optimization algorithm (HPSOBOA) [36] to verify the optimization capability. GOA, DOA, SO, and POA are relatively novel metaheuristic algorithms; I-GWO and HPSOBOA are improved versions of basic metaheuristic algorithms.

5.2 Parameter settings

The most obvious parameters that affected the performance of ISSWOA were the number of leaders and number of guards. Initially, 60 positions were randomly generated for the population, and Eq. (1) with R < ST was used to iterate the population 100 times. From the results (Fig. 8), it can be seen that the position of the leader will approach the origin as the number of iterations increases. Consequently, if the number of leaders is set too high, and the optimal solution is far from the origin, the algorithm will have poor performance.
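The drift toward the origin can be reproduced with a small experiment. The sketch assumes that the R < ST branch of Eq. (1) multiplies each position by exp(−i/(α·Maxiter)), as in the commonly published SSA rule, and it uses 60 random two-dimensional positions and 100 iterations. The function names, the search range, and the seed are hypothetical.

```python
import math
import random

def shrink_population(pop, max_iter, rng):
    """Apply the assumed safe-branch producer rule to every individual max_iter times."""
    for _ in range(max_iter):
        for rank, x in enumerate(pop, start=1):
            alpha = 1.0 - rng.random()                    # alpha in (0, 1]
            scale = math.exp(-rank / (alpha * max_iter))  # always below 1
            for j in range(len(x)):
                x[j] *= scale
    return pop

def norm(x):
    """Euclidean distance from the origin."""
    return math.sqrt(sum(v * v for v in x))

rng = random.Random(0)
population = [[rng.uniform(-10.0, 10.0) for _ in range(2)] for _ in range(60)]
before = [norm(x) for x in population]
after = [norm(x) for x in shrink_population(population, 100, rng)]
```

Because α ≤ 1, each step multiplies a position by at most exp(−i/Maxiter). After Maxiter steps every distance from the origin is therefore at most e⁻¹ (about 0.37) of its initial value, whatever the objective function is.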

More guards are not necessarily better. In our tests, too many guards worsened the ISSWOA results on functions such as F6, F7, and F8, whereas too few guards worsened the results on functions such as F12 and F13.

From the analysis and early experimental testing, a population size of 100 with 40 leaders and 30 guards gave the most balanced performance across most benchmark functions. The population size of the comparison algorithms was also set to 100, and their other parameter settings were the same as those in the original literature.

5.3 Benchmark functions

In this study, ISSWOA was applied to standard unimodal benchmark functions, multimodal benchmark functions, and fixed-dimensional multimodal benchmark functions [37] for testing and comparison with other algorithms. The functional formulas of benchmark functions are shown in Tables 2, 3, and 4, and their 3D views are shown in Figs. 9, 10, and 11.

5.4 Analysis of ISSWOA for standard unimodal benchmark functions

Table 5 shows the test results of the algorithm on the standard unimodal benchmark functions. It can be seen from the result that IWOA was better than WOA, especially in finding the optimal values of F1, F3, and F5. Because the improvement in the original WOA spiral mechanism significantly enhanced its global search ability, it could quickly find a good approximate position in the early stage and converge more fully by the end. For the ISSA, Levy flight mechanism mainly improved the exploration ability of the algorithm to help it escape local optima; it played only a limited role in the standard unimodal benchmark functions; therefore, the gap between ISSA and SSA was relatively small. Compared with the other algorithms, ISSWOA found the global optimal value for F1, F2, F3, and F4. The optimal values for F5, F6, and F7 found by ISSWOA were superior to those found by other algorithms; in particular, the optimal value for F5 was superior owing to the improved spiral update mechanism of IWOA and discoverer mechanism of ISSA. The above results showed that ISSWOA had good performance on standard unimodal benchmark functions.

Figures 12 and 13 display the iteration curves and box plots for ISSWOA and the other algorithms on standard unimodal benchmark functions, respectively. The figures show that ISSWOA converged faster than the other algorithms on all seven standard unimodal benchmark functions, and its box plots show that the best results of its 100 runs were tightly clustered, indicating that ISSWOA had good robustness.

5.5 Analysis of ISSWOA for standard multimodal benchmark functions

Table 6 shows the test results of ISSWOA and other algorithms on multimodal benchmark functions. Compared with SSA, ISSA demonstrated a greater ability to escape local optima under the influence of Levy flight mechanism, as reflected in the mean of F8 and the mean and optimal value of F12. For IWOA, the improved spiral update mechanism conferred a good global search capability; therefore, the optimal values of F12 and F13 found by IWOA were approximately \(10^{-3}\) times the optimal values found by WOA. ISSWOA inherited the advantages of IWOA and ISSA, had good performance, and was superior to the other algorithms, especially on F12 and F13.

Figures 14 and 15 display the iteration curves and box diagrams of ISSWOA and the other algorithms on standard multimodal benchmark functions. It can be seen from Fig. 14 that ISSWOA still performed better than the other algorithms in terms of convergence speed for the standard multimodal benchmark functions. From Fig. 15, except for F8, the box plot of ISSWOA resembled a straight line, indicating that ISSWOA had good robustness on the standard multimodal benchmark functions.

5.6 Analysis of ISSWOA for standard fixed-dimensional multimodal benchmark functions

Table 7 shows the test results of ISSWOA and other algorithms on standard fixed-dimensional multimodal benchmark functions. Compared with SSA, except for F16 and F17, ISSA escaped the local optimum and found the global optimum under the influence of Levy flight mechanism. For F17, the mean value of ISSA was better, indicating that ISSA had stronger robustness. Under the influence of the improved spiral update mechanism, IWOA found more global optimal solutions than WOA, but because IWOA could not escape from the local optimum, its optimization stability was insufficient. Under the influence of Levy flight mechanism, ISSWOA's optimization performance was more stable compared with other algorithms. ISSWOA performed the best except for F1; however, ISSWOA still found its global optimal solution, and its performance is better than some other algorithms.

It can be seen from Fig. 16 that the convergence speed of ISSWOA on standard fixed-dimensional multimodal benchmark functions was the best except for F17, F18, and F21. Fig. 17 shows that, although the ISSWOA box plots were not as tight as those for the standard unimodal and multimodal benchmark functions, the results of its 100 runs were the most concentrated among the compared algorithms, and thus its overall performance was better.

5.7 Analysis on the optimization efficiency of ISSWOA

The efficiency of finding the optimal solution is also an important indicator of algorithm performance. This information is represented in Tables 8 and 9, which record average time required for 300 iterations and the number of iterations required to find the optimal solution for each benchmark function, respectively. It can be seen from Table 8 that, although ISSWOA was composed of ISSA and IWOA, its running time was short, and on some benchmark functions, its running time was even less than that of its constituent algorithms. Its running time is similar to that of I-GWO and HPSOBOA, which are both improved algorithms. From the number of iterations required for each algorithm to find its optimal solution over 100 trials, shown in Table 9, ISSWOA required fewer iterations to find the global optimal solution on most benchmark functions, compared with some other algorithms. Considering the number of iterations and time required, ISSWOA is a competitive proposal in optimization efficiency.

5.8 Wilcoxon rank sum test

A Wilcoxon rank sum test at the 5% significance level was used to investigate the differences between ISSWOA and other algorithms. The results are shown in Table 10. There were significant differences between ISSWOA and the other algorithms in the test results of most benchmark functions. Combined with Tables 5, 6, and 7, it can be seen that ISSWOA had significant advantages over other algorithms in most cases. Therefore, ISSWOA displayed strong performance in the optimization of three types of standard benchmark functions.
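For readers who wish to repeat such a comparison, the rank sum test can be sketched with the Python standard library. The sketch uses the large-sample normal approximation without tie or continuity corrections, which is an assumption; the text does not state which implementation was used. The function name is hypothetical.

```python
import math

def rank_sum_test(a, b):
    """Two-sided Wilcoxon rank sum test; returns (z, p) via the normal approximation."""
    pooled = sorted([(v, 0) for v in a] + [(v, 1) for v in b])
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        avg = (i + j) / 2.0 + 1.0          # average 1-based rank shared by ties
        for k in range(i, j + 1):
            ranks[k] = avg
        i = j + 1
    n1, n2 = len(a), len(b)
    w = sum(r for r, (_, group) in zip(ranks, pooled) if group == 0)
    mean = n1 * (n1 + n2 + 1) / 2.0
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)
    z = (w - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided p-value
    return z, p
```

Two algorithms would be judged significantly different on a benchmark function when the returned p-value is below 0.05.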

6 Application of ISSWOA to engineering problems

Engineering problems are complex, nonlinear optimization problems. Consequently, the performance of optimization algorithms is best evaluated by applying them to practical problems rather than by theoretical analysis alone. For the simulations, we set the number of iterations as 500 and the population size as 100. The optimization results for 30 independent runs of ISSWOA and similar algorithms were compared, with the number of discoverers set as 40 and the number of guards as 30. The comparison algorithms are listed in Table 11.

6.1 Treatment of constraints

For engineering problems with constraints, the penalty function [61] is used to amplify the fitness of solutions according to the number of violated constraints. When the solution does not meet the constraint conditions, the equation for fitness is

where F is the fitness value after the penalty is applied, f is the unpenalized fitness value, lam is a constant greater than 1, gi is the ith constraint value, and Qi is a binary variable equal to 1 when the ith constraint is violated and 0 otherwise.
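A penalty of this kind can be sketched as below. The quadratic form F = f + lam·Σ Qi·gi² and the convention that a constraint is satisfied when gi ≤ 0 are assumptions made for illustration; the exact expression used in this study may differ. The function name and the default value of `lam` are hypothetical.

```python
def penalized_fitness(f_value, constraints, lam=1e6):
    """Add lam * g_i^2 for every violated constraint (g_i > 0) to the raw fitness."""
    penalty = 0.0
    for g in constraints:
        q = 1 if g > 0 else 0          # Q_i: 1 when the constraint is violated
        penalty += q * g**2
    return f_value + lam * penalty
```

With a large `lam`, any infeasible solution is ranked behind feasible ones of similar raw fitness in a minimization problem, so the search is pushed back toward the feasible region.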

6.2 Tension/compression spring design problem

As illustrated in Fig. 18, the tension/compression spring design problem [62] in mechanical engineering can be used to evaluate optimization algorithms for various parameters. The problem consists of minimizing the mass under certain constraints, including four constraints and three design variables. The design variables are average diameter d of the spring coil (x2), diameter w of the spring wire (x1), and number L of effective spring coils (x3). Tables 12 and 13 compare the optimal solutions and constraints obtained by different algorithms. The statistical optimization results of the algorithms are given in Table 14. The problem is formulated as follows:

subject to

The variable ranges are \(0.05 \le x_{1} \le 2,0.25 \le x_{2} \le 1.3\), and \(2.0 \le x_{3} \le 15.0\).
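The spring problem can be written as code for checking candidate designs. The sketch assumes the widely used formulation of this benchmark, with x1 the wire diameter, x2 the mean coil diameter, and x3 the number of active coils; the numerical coefficients come from that formulation and are an assumption here. The function names are hypothetical.

```python
def spring_mass(x):
    """Objective: mass of the spring, to be minimized."""
    x1, x2, x3 = x
    return (x3 + 2.0) * x2 * x1**2

def spring_constraints(x):
    """Return [g1, g2, g3, g4]; a design is feasible when every value is <= 0."""
    x1, x2, x3 = x
    g1 = 1.0 - (x2**3 * x3) / (71785.0 * x1**4)
    g2 = ((4.0 * x2**2 - x1 * x2) / (12566.0 * (x2 * x1**3 - x1**4))
          + 1.0 / (5108.0 * x1**2) - 1.0)
    g3 = 1.0 - 140.45 * x1 / (x2**2 * x3)
    g4 = (x1 + x2) / 1.5 - 1.0
    return [g1, g2, g3, g4]
```

For example, the design x = (0.051689, 0.356718, 11.288966) gives a mass of about 0.01267, with g1 and g2 almost exactly zero and g3 and g4 clearly negative.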

6.3 Pressure vessel design problem

The pressure vessel design problem [63] illustrated in Fig. 19 is a typical hybrid optimization problem for minimizing the total cost while meeting production needs. This problem has four constraints and four design variables. The variables are shell thickness Ts (x1), head thickness Th (x2), radius R (x3), and container section length L (x4). Tables 15 and 16 compare the optimal solutions and constraints obtained by different algorithms. The statistical optimization results of the algorithms are given in Table 17. The problem is formulated as follows:

subject to

The variable ranges are \(0 \le x_{1} \le 99,0 \le x_{2} \le 99,10 \le x_{3} \le 200\), and \(10 \le x_{4} \le 200\).

6.4 Speed reducer design problem

Figure 20 illustrates the speed reducer design problem [64]. It is an important and complex multi-constraint optimization problem in mechanical systems and consists of 7 variables and 11 constraints. The variables are tooth surface width B (x1), gear modulus M (x2), number Z of teeth in pinions (x3), length l1 of the first shaft between bearings (x4), length l2 of the second shaft between bearings (x5), diameter d1 of the first shaft (x6), and diameter d2 of the second shaft (x7). Tables 18 and 19 compare the optimal solutions and constraints obtained by different algorithms. The statistical optimization results of the algorithms are given in Table 20. The problem is formulated as follows:

subject to

The variable ranges are \(2.6 \le x_{1} \le 3.6,0.7 \le x_{2} \le 0.8,17 \le x_{3} \le 28,7.3 \le x_{4} \le 8.3,7.3 \le x_{5} \le 8.3,2.9 \le x_{6} \le 3.9\), and \(5 \le x_{7} \le 5.5\).

6.5 Rolling element-bearing design problem

As illustrated in Fig. 21, the rolling element-bearing design problem consists of maximizing the dynamic bearing capacity of rolling bearings [65]. There are 10 decision variables in this problem: pitch diameter (Dm), ball diameter (Db), number of balls (Z), inner raceway curvature coefficient (fi), outer raceway curvature coefficient (fo), KDmin, KDmax, ε, e, and ζ. In addition, this problem has nine constraints, making it a complex multi-constraint engineering design problem. Tables 21 and 22 compare the optimal solutions and constraints obtained by different algorithms, while the statistical optimization results of the algorithms are given in Table 23. The problem is formulated as follows:

subject to

where

The variable ranges are

6.6 Car side impact design problem

The objective of the car side impact design problem [54] is to find the most appropriate combination of variables to minimize the weight of the car door. The design variables are as follows: thickness of B-Pillar inner (x1), thickness of B-Pillar reinforcement (x2), thickness of floor side inner (x3), thickness of cross members (x4), thickness of door beam (x5), thickness of door beltline reinforcement (x6), thickness of roof rail (x7), materials of B-Pillar inner (x8), materials of floor side inner (x9), barrier height (x10), and hitting position (x11). Tables 24 and 25 compare the optimal solutions and constraints obtained by different algorithms, while the statistical optimization results of the algorithms are given in Table 26. The problem is formulated as follows:

subject to

The variable ranges are \(0.5\leqslant x_{1} \sim x_{7}\leqslant1.5,x_{8} ,x_{9} \in \left[ {0.192,0.345} \right], - 30\leqslant x_{10} ,x_{11}\leqslant30\).

6.7 Gear train design problem

This gear train design problem [52] is shown in Fig. 22. It is an unconstrained design problem. It is necessary to find the best gear number combination. There are four gears in this problem, with number of teeth MA(x1), MB(x2), MC(x3), and MD(x4). Table 27 compares the optimal solutions obtained by different algorithms, while the statistical optimization results of the algorithms are given in Table 28. The equation for the gear train design problem is shown below

The variable ranges are \(12\leqslant x_{1} ,x_{2} ,x_{3} ,x_{4}\leqslant 60\).

6.8 Three-bar truss design problem

The structure of the three-bar truss [52] is shown in Fig. 23. To minimize the volume of the three-bar truss and meet the stress constraints on each side of the truss member, the most appropriate cross-section combination of the truss member is found. Tables 29 and 30 show the comparison among the optimal solutions and constraints obtained by different algorithms, and Table 31 gives the statistical optimization results of the algorithms. The equation is as follows:

\(\begin{aligned} g_{1} \left( x \right) & = \frac{{\sqrt 2 x_{1} + x_{2} }}{{\sqrt 2 x_{1}^{2} + 2x_{1} x_{2} }}P - \sigma\leqslant0 \\ g_{2} \left( x \right) & = \frac{{x_{2} }}{{\sqrt 2 x_{1}^{2} + 2x_{1} x_{2} }}P - \sigma\leqslant0 \\ g_{3} \left( x \right) & = \frac{1}{{\sqrt 2 x_{2} + x_{1} }}P - \sigma\leqslant0 \\ \end{aligned}\)

where \(l = 100\,{\text{cm}},P = 2\frac{{{\text{kN}}}}{{{\text{cm}}^{2} }},\sigma = 2\frac{{{\text{kN}}}}{{{\text{cm}}^{2} }}\).

The variable ranges are \(0\leqslant x_{1} ,x_{2}\leqslant1\).
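The three constraints above translate directly into code. The objective in the sketch, f(x) = (2√2·x1 + x2)·l, is the form commonly used for this benchmark and is an assumption here. The function and constant names are hypothetical.

```python
import math

L_CM = 100.0   # bar length l in cm
P = 2.0        # load in kN/cm^2
SIGMA = 2.0    # allowable stress in kN/cm^2

def truss_volume(x):
    """Assumed objective: volume of the three-bar truss, to be minimized."""
    x1, x2 = x
    return (2.0 * math.sqrt(2.0) * x1 + x2) * L_CM

def truss_constraints(x):
    """Return [g1, g2, g3] as given above; feasible when every value is <= 0."""
    x1, x2 = x
    root2 = math.sqrt(2.0)
    denom = root2 * x1**2 + 2.0 * x1 * x2
    g1 = (root2 * x1 + x2) / denom * P - SIGMA
    g2 = x2 / denom * P - SIGMA
    g3 = 1.0 / (root2 * x2 + x1) * P - SIGMA
    return [g1, g2, g3]
```

For example, x = (0.78867, 0.40825) gives a volume of about 263.9 with g1 essentially active (close to zero), while g2 and g3 are clearly negative.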

6.9 Economic load dispatch

Economic load dispatch (ELD) [59] is an important engineering problem in power delivery systems. The goal of this problem is to find the best power distribution of available thermal units, to minimize the fuel cost while meeting the load. The calculation formula of the fuel cost is as follows:

where ai, bi, and ci are the fuel-cost coefficients of the ith unit, and di and ei are the fuel-cost coefficients of the ith unit with valve-point effects.
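A fuel-cost function with valve-point effects can be sketched as follows. The sketch assumes the form commonly used for this problem, F = Σ [ai·Pi² + bi·Pi + ci + |di·sin(ei·(Pi,min − Pi))|], including the assignment of ai to the quadratic term. The unit data in the usage note are made up for illustration and are not the coefficients of Sinha et al. [66]; the function name and dictionary keys are hypothetical.

```python
import math

def fuel_cost(power, units):
    """Total fuel cost of all units for the given power outputs (assumed valve-point form)."""
    total = 0.0
    for p, u in zip(power, units):
        smooth = u["a"] * p**2 + u["b"] * p + u["c"]                  # quadratic cost curve
        ripple = abs(u["d"] * math.sin(u["e"] * (u["p_min"] - p)))   # valve-point term
        total += smooth + ripple
    return total
```

With a hypothetical unit `{"a": 0.01, "b": 2.0, "c": 10.0, "d": 100.0, "e": 0.05, "p_min": 50.0}` running at its lower limit of 50 MW, the valve-point term vanishes and only the quadratic part contributes. The absolute sine term is what makes the cost surface non-smooth and multimodal.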

In this research, the number of units was set as 40, and the load was 10,500 MW. Loss of thermal units was considered negligible. The upper and lower power limits of each unit and the values of the coefficients in Eq. (24) are given in Sinha et al. [66]. The best result obtained by ISSWOA is shown in Table 32. The comparison with other algorithm results is shown in Table 33.

It can be seen from the test results of the eight engineering problems that ISSWOA performed best on the rolling element-bearing design problem and the ELD problem, clearly outperforming other algorithms in terms of stability and optimal results. For the other six engineering problems, ISSWOA also performed adequately, which showed that ISSWOA is viable for solving practical engineering problems.

7 Conclusion

This study proposed a hybrid ISSWOA that combines the improved SSA with Levy flight strategy and the improved WOA with a novel spiral updating strategy. The performance of ISSWOA was investigated on standard unimodal, multimodal, and fixed-dimensional multimodal benchmark functions. The purpose was to determine its capabilities on three primary criteria: avoidance of local optima, exploration ability, and exploitation ability. The results showed that ISSWOA performed significantly better than other metaheuristic algorithms on most benchmark functions.

Since metaheuristic algorithms are suited to complex engineering problems, this paper applied ISSWOA to seven kinds of engineering design problems and a large electrical engineering problem (i.e., the ELD problem). The results showed that ISSWOA achieved good performance, especially in the element-bearing design problem and ELD problem, and was effective compared to other algorithms in other engineering problems.

In this research, the parameter configuration of ISSWOA was balanced to suit different problems. However, particular parameter configurations are better suited to particular problems; therefore, the next research objective is to adapt the parameter configuration to the problem at hand. In addition, binary and multi-objective versions of ISSWOA can be developed to solve a wider variety of problems.

Data availability

All data generated or analyzed during this study are included in this article.

References

Zhang Y, Mo Y (2022) Chaotic adaptive sailfish optimizer with genetic characteristics for global optimization. J Supercomput 78:10950–10996. https://doi.org/10.1007/s11227-021-04255-9

Tang KS, Man KF, Kwong S et al (1996) Genetic algorithms and their applications. IEEE Signal Proc Mag 13:22–37. https://doi.org/10.1109/79.543973

Das S, Suganthan PN (2010) Differential evolution: a survey of the state-of-the-art. IEEE Trans Evol Comput 15:4–31. https://doi.org/10.1109/TEVC.2010.2059031

Lee KY, Yang FF (1998) Optimal reactive power planning using evolutionary algorithms: a comparative study for evolutionary programming, evolutionary strategy, genetic algorithm, and linear programming. IEEE Trans Power Syst 13:101–108. https://doi.org/10.1109/59.651620

Espejo PG, Ventura S, Herrera F (2009) A survey on the application of genetic programming to classification. IEEE Trans Syst Man Cybernet C 40:121–144. https://doi.org/10.1109/TSMCC.2009.2033566

Zhong J, Feng L, Ong YS (2017) Gene expression programming: a survey. IEEE Comput Intell Mag 12:54–72

Prajapati A (2022) A customized PSO model for large-scale many-objective software package restructuring problem. Arab J Sci Eng 47:10147–10162. https://doi.org/10.1007/s13369-021-06523-5

Mirjalili S, Mirjalili SM, Lewis A (2014) Grey wolf optimizer. Adv Eng Softw 69:46–61. https://doi.org/10.1016/j.advengsoft.2013.12.007

Hashim FA, Hussien AG (2022) Snake optimizer: a novel meta-heuristic optimization algorithm. Knowl Based Syst 242:108320. https://doi.org/10.1016/j.knosys.2022.108320

Salgotra R, Singh U, Saha S (2019) On some improved versions of whale optimization algorithm. Arab J Sci Eng 44:9653–9691. https://doi.org/10.1007/s13369-019-04016-0

Peraza-Vázquez H, Peña-Delgado AF, Echavarría-Castillo G et al (2021) A bio-inspired method for engineering design optimization inspired by dingoes hunting strategies. Math Probl Eng. https://doi.org/10.1155/2021/9107547

Zhang Z, He R, Yang K (2022) A bioinspired path planning approach for mobile robots based on improved sparrow search algorithm. Adv Manuf 10:114–130. https://doi.org/10.1007/s40436-021-00366-x

Gürses D, Mehta P, Sait SM et al (2022) African vultures optimization algorithm for optimization of shell and tube heat exchangers. Mater Test 64:1234–1241. https://doi.org/10.1515/mt-2022-0050

Barbarosoglu G, Ozgur D (1999) A tabu search algorithm for the vehicle routing problem. Comput Oper Res 26:255–270. https://doi.org/10.1016/S0305-0548(98)00047-1

Dai C, Chen W, Zhu Y et al (2009) Seeker optimization algorithm for optimal reactive power dispatch. IEEE Trans Power Syst 24:1218–1231. https://doi.org/10.1109/TPWRS.2009.2021226

Ramezani F, Lotfi S (2013) Social-based algorithm (SBA). Appl Soft Comput 13:2837–2856. https://doi.org/10.1016/j.asoc.2012.05.018

Ghorbani N, Babaei E (2014) Exchange market algorithm. Appl Soft Comput 19:177–187. https://doi.org/10.1016/j.asoc.2014.02.006

Vincent FY, Jewpanya P, Redi AANP et al (2021) Adaptive neighborhood simulated annealing for the heterogeneous fleet vehicle routing problem with multiple cross-docks. Comput Oper Res 129:105205. https://doi.org/10.1016/j.cor.2020.105205

Pashaei E, Aydin N (2017) Binary black hole algorithm for feature selection and classification on biological data. Appl Soft Comput 56:94–106. https://doi.org/10.1016/j.asoc.2017.03.002

Eskandar H, Sadollah A, Bahreininejad A et al (2012) Water cycle algorithm—a novel metaheuristic optimization method for solving constrained engineering optimization problems. Comput Struct 110:151–166. https://doi.org/10.1016/j.compstruc.2012.07.010

Kaveh A, Khayatazad M (2012) A new meta-heuristic method: ray optimization. Comput Struct 112:283–294. https://doi.org/10.1016/j.compstruc.2012.09.003

Kumar S, Tejani GG, Pholdee N et al (2021) Hybrid heat transfer search and passing vehicle search optimizer for multi-objective structural optimization. Knowl Based Syst 212:106556. https://doi.org/10.1016/j.knosys.2020.106556

Yildiz AR, Mehta P (2022) Manta ray foraging optimization algorithm and hybrid Taguchi salp swarm-Nelder–Mead algorithm for the structural design of engineering components. Mater Test 64:706–713. https://doi.org/10.1515/mt-2022-0012

Li Q, Wang W (2021) AVO inversion in orthotropic media based on SA-PSO. IEEE Trans Geosci Remote 99:1–10. https://doi.org/10.1109/TGRS.2021.3053044

Mafarja MM, Mirjalili S (2017) Hybrid whale optimization algorithm with simulated annealing for feature selection. Neurocomputing 260:302–312. https://doi.org/10.1016/j.neucom.2017.04.053

Laskar NM, Guha K, Chatterjee I et al (2019) HWPSO: a new hybrid whale-particle swarm optimization algorithm and its application in electronic design optimization problems. Appl Intell 49:265–291. https://doi.org/10.1007/s10489-018-1247-6

Han X, Yue L, Dong Y et al (2020) Efficient hybrid algorithm based on moth search and fireworks algorithm for solving numerical and constrained engineering optimization problems. J Supercomput 76:9404–9429. https://doi.org/10.1007/s11227-020-03212-2

Shehab M, Khader AT, Laouchedi M et al (2019) Hybridizing cuckoo search algorithm with bat algorithm for global numerical optimization. J Supercomput 75:2395–2422. https://doi.org/10.1007/s11227-018-2625-x

Li X, Gu J, Sun X et al (2022) Parameter identification of robot manipulators with unknown payloads using an improved chaotic sparrow search algorithm. Appl Intell. https://doi.org/10.1007/s10489-021-02972-5

Chakraborty S, Sharma S, Saha AK et al (2021) SHADE–WOA: a metaheuristic algorithm for global optimization. Appl Soft Comput 113:107866. https://doi.org/10.1016/j.asoc.2021.107866

Xue J, Shen B (2020) A novel swarm intelligence optimization approach: sparrow search algorithm. Syst Sci Control Eng 8:22–34. https://doi.org/10.1080/21642583.2019.1708830

Mirjalili S, Lewis A (2016) The whale optimization algorithm. Adv Eng Softw 95:51–67. https://doi.org/10.1016/j.advengsoft.2016.01.008

Iacca G, dos Santos JVC, de Melo VV (2021) An improved Jaya optimization algorithm with Lévy flight. Expert Syst Appl 165:113902. https://doi.org/10.1016/j.eswa.2020.113902

Alsattar HA, Zaidan AA, Zaidan BB (2020) Novel meta-heuristic bald eagle search optimisation algorithm. Artif Intell Rev 53:2237–2264. https://doi.org/10.1007/s10462-019-09732-5

Nadimi-Shahraki MH, Taghian S, Mirjalili S (2021) An improved grey wolf optimizer for solving engineering problems. Expert Syst Appl 166:113917. https://doi.org/10.1016/j.eswa.2020.113917

Zhang M, Long D, Qin T et al (2020) A chaotic hybrid butterfly optimization algorithm with particle swarm optimization for high-dimensional optimization problems. Symmetry 12:1800. https://doi.org/10.3390/sym12111800

Khalilpourazari S, Khalilpourazary S (2019) An efficient hybrid algorithm based on water cycle and moth-flame optimization algorithms for solving numerical and constrained engineering optimization problems. Soft Comput 23:1699–1722. https://doi.org/10.1007/s00500-017-2894-y

Tang A, Zhou H, Han T et al (2021) A chaos sparrow search algorithm with logarithmic spiral and adaptive step for engineering problems. CMES Comput Model Eng 130:331–364. https://doi.org/10.32604/cmes.2021.017310

Mirjalili S (2015) The ant lion optimizer. Adv Eng Softw 83:80–98. https://doi.org/10.1016/j.advengsoft.2015.01.010

Dhiman G, Kumar V (2017) Spotted hyena optimizer: a novel bio-inspired based metaheuristic technique for engineering applications. Adv Eng Softw 114:48–70. https://doi.org/10.1016/j.advengsoft.2017.05.014

Krishna AB, Saxena S, Kamboj VK (2021) A novel statistical approach to numerical and multidisciplinary design optimization problems using pattern search inspired Harris hawks optimizer. Neural Comput Appl 33:7031–7072. https://doi.org/10.1007/s00521-020-05475-5

Kamboj VK, Nandi A, Bhadoria A et al (2020) An intensify Harris Hawks optimizer for numerical and engineering optimization problems. Appl Soft Comput 89:106018. https://doi.org/10.1016/j.asoc.2019.106018

Ferreira MP, Rocha ML, Neto AJS et al (2018) A constrained ITGO heuristic applied to engineering optimization. Expert Syst Appl 110:106–124. https://doi.org/10.1016/j.eswa.2018.05.027

Zhang Z, Ding S, Jia W (2019) A hybrid optimization algorithm based on cuckoo search and differential evolution for solving constrained engineering problems. Eng Appl Artif Intel 85:254–268. https://doi.org/10.1016/j.engappai.2019.06.017

Zahara E, Kao YT (2009) Hybrid Nelder–Mead simplex search and particle swarm optimization for constrained engineering design problems. Expert Syst Appl 36:3880–3886. https://doi.org/10.1016/j.eswa.2008.02.039

Guo W, Chen M, Wang L et al (2016) Backtracking biogeography-based optimization for numerical optimization and mechanical design problems. Appl Intell 44:894–903. https://doi.org/10.1007/s10489-015-0732-4

Baykasoğlu A, Ozsoydan FB (2015) Adaptive firefly algorithm with chaos for mechanical design optimization problems. Appl Soft Comput 36:152–164. https://doi.org/10.1016/j.asoc.2015.06.056

Savsani P, Savsani V (2016) Passing vehicle search (PVS): a novel metaheuristic algorithm. Appl Math Model 40:3951–3978. https://doi.org/10.1016/j.apm.2015.10.040

Hashim FA, Houssein EH, Mabrouk MS et al (2019) Henry gas solubility optimization: a novel physics-based algorithm. Future Gener Comput Syst 101:646–667. https://doi.org/10.1016/j.future.2019.07.015

Abd EM, Oliva D, Xiong S (2017) An improved opposition-based sine cosine algorithm for global optimization. Expert Syst Appl 90:484–500. https://doi.org/10.1016/j.eswa.2017.07.043

Singh N, Singh SB, Houssein EH (2020) Hybridizing salp swarm algorithm with particle swarm optimization algorithm for recent optimization functions. Evol Intell 1:1–34. https://doi.org/10.1007/s12065-020-00486-6

Sadollah A, Bahreininejad A, Eskandar H et al (2013) Mine blast algorithm: a new population based algorithm for solving constrained engineering optimization problems. Appl Soft Comput 13:2592–2612. https://doi.org/10.1016/j.asoc.2012.11.026

Braik MS (2021) Chameleon swarm algorithm: a bio-inspired optimizer for solving engineering design problems. Expert Syst Appl 174:114685. https://doi.org/10.1016/j.eswa.2021.114685

Zhang C, Lin Q, Gao L et al (2015) Backtracking search algorithm with three constraint handling methods for constrained optimization problems. Expert Syst Appl 42:7831–7845. https://doi.org/10.1016/j.eswa.2015.05.050

Yang XS, Gandomi AH (2012) Bat algorithm: a novel approach for global engineering optimization. Eng Comput. https://doi.org/10.1108/02644401211235834

Singh N, Singh SB (2017) A novel hybrid GWO-SCA approach for optimization problems. Eng Sci Technol 20:1586–1601. https://doi.org/10.1016/j.jestch.2017.11.001

Mirjalili S (2015) Moth-flame optimization algorithm: a novel nature-inspired heuristic paradigm. Knowl Based Syst 89:228–249. https://doi.org/10.1016/j.knosys.2015.07.006

Li Y, Lin X, Liu J (2021) An improved gray wolf optimization algorithm to solve engineering problems. Sustainability 13:3208. https://doi.org/10.3390/su13063208

Chopra N, Ansari MM (2022) Golden jackal optimization: a novel nature-inspired optimizer for engineering applications. Expert Syst Appl 198:116924. https://doi.org/10.1016/j.eswa.2022.116924

Ling SH, Iu HHC, Chan KY et al (2008) Hybrid particle swarm optimization with wavelet mutation and its industrial applications. IEEE Trans Syst Man Cybernet B 38:743–763. https://doi.org/10.1109/TSMCB.2008.921005

Bayzidi H, Talatahari S, Saraee M et al (2021) Social network search for solving engineering optimization problems. Comput Intel Neurosci. https://doi.org/10.1155/2021/8548639

Chen H, Wang M, Zhao X (2020) A multi-strategy enhanced sine cosine algorithm for global optimization and constrained practical engineering problems. Appl Math Comput 369:124872. https://doi.org/10.1016/j.amc.2019.124872

Chauhan S, Vashishtha G, Kumar A (2022) A symbiosis of arithmetic optimizer with slime mould algorithm for improving global optimization and conventional design problem. J Supercomput 78:6234–6274. https://doi.org/10.1007/s11227-021-04105-8

Migallón H, Jimeno-Morenilla A, Rico H et al (2021) Multi-level parallel chaotic Jaya optimization algorithms for solving constrained engineering design problems. J Supercomput 77:12280–12319. https://doi.org/10.1007/s11227-021-03737-0

Emami H (2022) Stock exchange trading optimization algorithm: a human-inspired method for global optimization. J Supercomput 78:2125–2174. https://doi.org/10.1007/s11227-021-03943-w

Sinha N, Chakrabarti R, Chattopadhyay PK (2003) Evolutionary programming techniques for economic load dispatch. IEEE Trans Evolut Comput 7:83–94. https://doi.org/10.1109/TEVC.2002.806788

Funding

This work was supported by the National Natural Science Foundation of China Program under Grant 62073198.

Author information

Contributions

JZ and XC proposed the innovation and designed the experiment in this study. JZ, MZ, and JL performed the simulation experiments, analyzed the experimental results, and wrote the manuscript. JL corrected the manuscript.

Ethics declarations

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Ethical approval

This study does not cause harm to any person or animal.

About this article

Cite this article

Zhang, J., Cheng, X., Zhao, M. et al. ISSWOA: hybrid algorithm for function optimization and engineering problems. J Supercomput 79, 8789–8842 (2023). https://doi.org/10.1007/s11227-022-04996-1

DOI: https://doi.org/10.1007/s11227-022-04996-1