Abstract

Dimension reduction techniques are very important, as high-dimensional data are ubiquitous in many real-world applications, especially in this era of big data. In this paper, we propose a novel supervised dimensionality reduction method, called appropriate points choosing based DAG-DNE (Apps-DAG-DNE). In Apps-DAG-DNE, we choose appropriate points to construct adjacency graphs, for example, it chooses nearest neighbors to construct inter-class graph, which can build a margin between samples if they belong to the different classes, and chooses farthest points to construct intra-class graph, which can establish relationships between remote samples if and only if they belong to the same class. Thus, Apps-DAG-DNE could find a good representation for original data. To investigate the performance of Apps-DAG-DNE, we compare it with the state-of-the-art dimensionality reduction methods on Caltech-Leaves and Yale datasets. Extensive experimental demonstrates that the proposed Apps-DAG-DNE outperforms other dimensionality reduction methods and achieves state-of-the-art performance for image classification.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

In the era of Big Data, an increasing amount of image data are being generated on the Internet through daily social communication. Most real data are high-dimensional, such as image data. High-dimensional data significantly increases the time and space requirements, and also brings the curse of dimensionality problem. So it is necessary to develop efficient dimensionality reduction methods that can scale to massive amounts of high-dimensional data. Dimensionality reduction techniques have attracted considerable interest in machine learning [1, 2] which not only reduce the computational complexity and avoid the curse of dimensionality problem, but also improve the classification performance in the subspace. Here, we focus on subspace learning, which pursuits the low-dimensional representation of the original high-dimensional data.

Dimensionality reduction methods are usually divided into unsupervised methods and supervised methods according to the availability of class label information. Popular unsupervised dimensionality reduction methods include principle component analysis (PCA) [3], locally linear embedding (LLE) [4]. It is generally known that PCA obtains the projection matrix by maximizing the total scatter of training samples. LLE reconstructs a given point by its neighbors to represent the local geometry structure and then seeks a low-dimensional embedding. However, LLE cannot perform mapping for an unseen data, which is called the out-of-sample problem. To cover the drawback of LLE, many new methods have been proposed, such as locality preserving projection (LPP) [5], neighborhood preserving embedding (NPE) [6]. Both LPP and NPE find an embedding to preserve local structure and can be simply extended to unseen samples. However, all above methods cannot work well in classification tasks since they do not utilize the class label information.

In order to solve the problem of unsupervised method, many supervised methods are proposed, such as linear discriminant analysis (LDA) [7, 8], discriminant neighborhood embedding (DNE) [9], marginal Fisher analysis (MFA) [10, 11], locality-based discriminant neighborhood embedding (LDNE) [12], discriminant neighborhood structure embedding (DNSE) [13], double adjacency graphs-based discriminant neighborhood embedding (DAG-DNE) [14] and others [15,16,17,18,19,20,21,22]. LDA can find the projection matrix by maximizing the ratio between the inter-class scatter and the intra-class scatter. However, LDA fails to explore the manifold structure of the given data when projecting them into a lower-dimensional subspace. MFA, as an extension of LDA, by constructing inter-class and intra-class adjacency graphs to preserve the local information, is able to efficiently solve the drawbacks of LDA. DNE constructs an adjacency graph to distinguish between homogeneous points and heterogeneous points to keep the local structure. However, DNE fails to preserve the detailed position relationship between the samples and their neighbors. Thus, the performance of DNE in the low-dimensional subspace is not good enough for classification tasks. LDNE optimizes the difference between the inter-class distance and the intra-class distance under constructing the adjacency graph being different from DNE by endowing different weights. DAG-DNE constructs two adjacency graphs, aiming to keep the neighbors compact if they belonging to the same class, while neighbors belonging to different classes are separable in the subspace. In DAG-DNE, it chooses neighbors to construct inter-class and intra-class graphs in the same way, which finds the nearest neighbors. Thus, it can build a margin between different neighbors if and only if they belong to the different classes when building inter-class graph, and preserve the structure of nearest neighbors if they belong to the same class when constructing intra-class graph. However, during the classification task, we should focus on samples that are separable in the high-dimensional space, and the representations of these two points in the subspace are close to each other if they belong to the same class rather than preserving the local structure.

To achieve this goal, this paper presents a new supervised subspace learning method, called appropriate points choosing DAG-DNE (Apps-DAG-DNE). In Apps-DAG-DNE, it chooses points to construct inter-class and intra-class graphs in different ways, that is, it chooses the nearest neighbors to construct inter-class graph, which can build a margin between different neighbors if and only if they belong to the different classes, and chooses the farthest points to construct intra-class graph, which can establish relationship between two farthest points if they belong to the same class. Thus, Apps-DAG-DNE could establish relationship among two remote samples and find a good projection matrix for them.

The reminder of this paper is organized as follows. Section 2 introduces the related works on DNE, LDNE and DAG-DNE. In Sect. 3, we propose the new method Apps-DAG-DNE. Section 4 reports simulation experimental results, and Sect. 5 concludes this paper.

2 Related works

In this section, we briefly review DNE [9] and its variant LDNE [12] and DAG-DNE [14]. Given a set of samples \(\{({\mathbf{x}}_i,y_i)\}_i^N\), where \({\mathbf{x}}_i \in {\mathbb {R}}^d\), \(y_i \in \{1,2,\ldots ,c\}\) is the class label of \({\mathbf{x}_\mathbf{i}}\), d is the dimensionality of samples, N is the number of training samples, and c is the number of classes. We try to learn a linear transformation mapping which can project the original data from the high \(d\text {-dimensional}\) space into a low \(r\text {-dimensional}\) subspace (\(r\ll d\)) in which the samples are denoted as \(\{({\mathbf{v}}_i,y_i)\}_i^N\). Specifically, the linear transformation can be defined as

where \({\mathbf{P}} \in {\mathbb {R}}^{d \times r}\) is the projection matrix.

2.1 Discriminant neighborhood embedding

DNE is a supervised subspace learning method, which aims to project the samples from high-dimensional space into a low-dimensional space, and makes the gaps between different samples as wide as possible if they belong to the different classes and as close as possible if they belong to the same class. For a sample \({\mathbf{x}}_i\), \({N}_K^w({\mathbf{x}}_i)\) and \({N}_K^b({\mathbf{x}}_i)\) denote its K homogeneous and heterogeneous neighbors, respectively. The DNE method has the following steps:

(1) Construct the adjacency graph by using K-nearest neighbors. The adjacency weight matrix \({\mathbf{F}}\) is defined as:

(2) Feature mapping: Optimize the following objective function:

with simple algebra, the minimization problem can be rewritten as

where \({\mathbf{L}} = {\mathbf{D}} - {\mathbf{W}}\) and \({{\mathbf{D}}_{ii}} = \sum \nolimits _j {{{\mathbf{W}}_{ij}}}\). The projection matrix \({\mathbf{P}}\) can be optimized by computing the minimum eigenvalue solution to the generalized eigenvalue problem as follows:

where \({\mathbf{P}}\) is composed of the optimal r projection vectors corresponding to the r smallest eigenvalues.

2.2 Locality-based discriminant neighborhood embedding

Different from DNE, LDNE uses a heat kernel function instead of 1 or 0 to adopt the adjacency graph and aims to find an optimal projection matrix by maximizing the difference between the inter-class scatter and the intra-class scatter. Similar to DNE, the LDNE method has the following steps:

(1) Construct the adjacency graph by using K-nearest neighbors. The adjacency weight matrix \({\mathbf{S}}\) is defined as:

(2) Feature mapping: Optimize the following objective function:

where \({\mathbf{H}}={\mathbf{D}}-{\mathbf{S}}\), and \(\mathbf{D}\) is a diagonal matrix with \(\mathbf{D }_{ii} = \sum \nolimits _j {\mathbf{S }}_{ij}\). The optimization problem (7) can also be cast into a generalized eigen-decomposition problem:

The optimal projection \(\mathbf{P}\) consists of r eigenvectors corresponding to the r largest eigenvalues.

2.3 Double adjacency graphs-based discriminant neighborhood embedding

DAG-DNE is a useful linear manifold learning method for dimensionality reduction and preserves the local geometric reconstruction relationship of data, which tries to make the gaps among submanifolds for different classes as wide as possible and for same class as compact as possible in the subspace, simultaneously. First, DAG-DNE is to construct two adjacency graphs, let \({\mathbf{F}}^b\) and \({\mathbf{F}}^w\) be the inter-class and intra-class adjacency matrices, respectively.

The inter-class adjacency matrix \({\mathbf{F}}^b\) is defined as

The intra-class adjacency matrix \({\mathbf{F}}^w\) is defined as

DAG-DNE seeks to find a projection \(\mathbf{P}\) by solving the following objective function

where \({\mathbf{G}}={\mathbf{D}}^b-{\mathbf{F}}^b -{\mathbf{D}}^w+{\mathbf{F}}^w\), and \({\mathbf{D}}^b\) and \({\mathbf{D}}^w\) are diagonal matrices with \(\mathbf{D }_{ii}^b = \sum \nolimits _j {\mathbf{F }_{ij}^b}\) and \(\mathbf{D }_{ii}^w = \sum \nolimits _j {\mathbf{F }_{ij}^w}\). The projection matrix \(\mathbf{P}\) can be found by solving the generalized eigenvalue problem as follows:

The optimal projection \(\mathbf{P}\) consists of r eigenvectors corresponding to the r largest eigenvalues.

3 Proposed methods

In this section, we develop a novel supervised subspace learning method called appropriate points choosing DAG-DNE (Apps-DAG-DNE). As described above, those methods all choose the nearest points to construct inter-class graph which can formulate the marginal between samples if and only if they belong to the different classes, and choose the nearest points to construct intra-class graph which can preserve the local geometric structure between samples if and only if they belong to the same class. However, during the classification task, we should focus on samples that are separable in the high-dimensional space, but the representations of these two points in the subspace should be close to each other if they belong to the same class rather than preserving the local structure.

3.1 Motivation

To clearly illustrate the problem, Fig. 1 gives two ways of choosing points to construct adjacency graphs. In the figure, there are two ways to find nearest neighbors to construct adjacency graphs: (1) a1, which is the traditional way to choose neighbors, and (2) b1, which is the ideal way to choose neighbors. Then, we also have two results: (1) a2, which is the result of constructing the adjacency graph using the traditional way to find points, and (2) b2, which is the result of constructing the adjacency graph using the ideal way to find points. Our purpose is to make the representations of these two points as close as possible in the new space if they belong to the same class, whether or not they are close in the high-dimensional space. However, traditional way has two shortcomings, on one hand, the traditional way chooses the nearest points and preserves the local geometric structure by establishing the relationships of them. Thus, if the two same class samples are remote, they cannot establish any relationships, and they will not be close in the subspace either. During the classification task, we should give priority to the samples in the same class that are remote, as the classification ability of the method will be severely deteriorated if they are ignored. On the other hand, the inter-class scatter is larger than intra-class, traditionally. Thus, the effect of intra-class scatter is very small in the objective function of DAG-DNE, and this will deteriorate the classification ability of DAG-DNE. The fundamental challenge is determining how to choose appropriate points to establish relationships in order to achieve the same result as the ideal way.

Procedure of graph-based subspace learning. (a1) traditional way, (a2) the result of choosing the nearest neighbors to construct adjacency graph. (b1) ideal way, (b2) the result of choosing appropriate points to construct adjacency graph. Traditional way makes the samples close if and only if they are close in the high-dimensional space, and the ideal result is that the samples should be close in the subspace if they belong to the same class whether or not they are separable in the high-dimensional space

3.2 Basic idea of Apps-DAG-DNE

Let \(\left\{ {({{\mathbf{x}}_i},{y_i})} \right\} _{i = 1}^N\) be a set of N samples in the multi-class classification task, where \({{\mathbf{x}}_i} \in {\mathbb {R}}^{d}\) and \({y_i} \in \{ 1,2,...,c\} \). Our task is to find a subspace, which let these samples, belonging to the same class, as close as possible in the subspace even though they are separable in the high-dimensional space. Therefore, we can not only reduce the complexity, but also improve the classification performance.

For a sample \({\mathbf{x}}_i\), \({AN}_K^w(i)\) indicates the index set of its K homogeneous points. It is worth noting that, K homogeneous points are its farthest points rather than its nearest neighbors. \({AN}_K^b(i)\) indicates the index set of its K heterogeneous neighbors. In order to describe the relationships between points, similar to DAG-DNE, we build two adjacency graphs: the inter-class separability graph \({{\mathbf{F}}^b}\) and the intra-class compactness graph \({{\mathbf{F}}^w}\).

The inter-class separability matrix \({{\mathbf{F}}^b}\) is defined as:

The intra-class compactness matrix \({{\mathbf{F}}^w}\) is defined as:

In the procedure of finding a projection matrix, we utilize the idea that maximizing the inter-class scatter and minimizing the intra-class scatter simultaneously. First, we define inter-class scatter and intra-class scatter as follows: the inter-class scatter is

where \({\mathbf{D}}^b\) is a diagonal matrix and its entries are column sum of \({\mathbf{F}}_{}^b\), i.e., \({\mathbf{D}}_{ii}^b = \sum \nolimits _j {{\mathbf{F}}_{ij}^b} \).

The intra-class scatter is

where \({\mathbf{D}}_{}^w\) is a diagonal matrix and its entries are column sum of \({\mathbf{F}}_{}^w\), i.e., \({\mathbf{D}}_{ii}^w = \sum \nolimits _j {{\mathbf{F}}_{ij}^w}\).

Then, we need to maximize the margin between inter-class scatter and intra-class compactness, i.e.,

Indeed, the margin \(T({\mathbf{P}})\) measures the total differences among the distances from \({{\mathbf{x}}_i}\) to the inter-class neighbors and intra-class points in the projected space.

To gain more insight, we rewrite \(T({\mathbf{P}})\) in the form of trace by following some simple algebraic steps:

where \({\mathbf{Q}}={\mathbf{D}}^b-{\mathbf{F}}^b -{\mathbf{D}}^w+{\mathbf{F}}^w\), with equation (18), it can be modified to

Given the constraint \({{\mathbf{P}}^\mathrm{T}}{\mathbf{P}} = {\mathbf{I}}\), the columns of \({\mathbf{P}}\) are orthogonal. The orthogonal projection matrix is better able to enhance the discriminant ability [14, 23, 24].

In order to solve formulation (19), we give the following theorems.

Theorem 1

If \({\mathbf{D}}^b, {\mathbf{D}}^w, {\mathbf{F}}^b, {\mathbf{F}}^w\) are all real symmetric matrices, then \({\mathbf{XQ}} {\mathbf{X}}^\mathrm{T}\) is a real symmetric matrix.

Proof

Since \({\mathbf{D}}^b, {\mathbf{D}}^w, {\mathbf{F}}^b, {\mathbf{F}}^w\) are real symmetric matrices, so \(({\mathbf{D}}^b)^\mathrm{T}={\mathbf{D}}^b\), \(({\mathbf{D}}^w)^\mathrm{T}={\mathbf{D}}^w\), \(({\mathbf{F}}^b)^\mathrm{T}={\mathbf{F}}^b\), \(({\mathbf{F}}^w)^\mathrm{T}={\mathbf{F}}^w\), hence,

Thus, the matrix \({\mathbf{XQ}} {\mathbf{X}}^\mathrm{T}\) is a symmetric matrix.

This completes the proof. \(\square \)

Theorem 2

Since \({\mathbf{XQ}} {\mathbf{X}}^\mathrm{T}\) is a real symmetric matrix, the optimization problem (19) is equivalent to the eigen-decomposition problem of matrix \({\mathbf{XQ}} {\mathbf{X}}^\mathrm{T}\). Assume that \(\lambda _{1}\ge \cdots \ge \lambda _{i} \ge \cdots \lambda _{r}\ge 0 \ge \cdots \ge \lambda _{d}\) are the eigenvalues of \({\mathbf{XQ}} {\mathbf{X}}^\mathrm{T}\), and \({\mathbf{P}}\) is the corresponding eigenvector of the eigenvalue \(\lambda _r\). The optimal projection matrix \({\mathbf{P}}\) is only composed of eigenvectors corresponding to the top r largest positive eigenvalues, or

Proof

Since \({\mathbf{XQ}} {\mathbf{X}}^\mathrm{T}\) is a real symmetric matrix, assume that \(\lambda _{i} ( 1\le i\le N)\). The eigenvalues of \({\mathbf{XQ}} {\mathbf{X}}^\mathrm{T}\) and \({\mathbf{P}}_i\) are the corresponding eigenvectors of the eigenvalues \(\lambda _i\). According to the properties of the matrix, we yield

Thus,

Thus, the objective function (19) can be rewritten as

Since matrix \({\mathbf{XQ}} {\mathbf{X}}^\mathrm{T}\) is non-positive definite, the eigenvalues of \({\mathbf{XQ}} {\mathbf{X}}^\mathrm{T}\) can be positive, negative or zero. In order to maximize \(\text {tr}\{{\mathbf{P}}{\mathbf{XQ}} {\mathbf{X}}^\mathrm{T}{\mathbf{P}}\}\), we should choose all positive eigenvalues, or \(\sum \nolimits _{i = 1}^r {{\lambda _i}}\). Thus, when \(\text {tr}\{{\mathbf{XQ}} {\mathbf{X}}^\mathrm{T}\}\) achieves its maximal value \(\sum \nolimits _{i = 1}^r {{\lambda _i}}\), the optimal solution to (19) must be

This completes the proof. \(\square \)

From Theorem 1, we know that \({\mathbf{XQ}} {\mathbf{X}}^\mathrm{T}\) is a symmetric matrix, and switches to a simple eigenvalue and eigenvector problem with respect to symmetric matrix \({\mathbf{XQ}} {\mathbf{X}}^\mathrm{T}\). From Theorem 2, we know that the optimal project matrix is only composed of eigenvectors corresponding to the top r largest positive eigenvalues. Hence, the transformation matrix \(\mathbf{P}\) is constituted by the r eigenvectors of \({\mathbf{XQ}} {\mathbf{X}}^\mathrm{T}\) corresponding to its first r positive eigenvalues. So the embedding of new test sample \({\mathbf{x}}_{new} \in {\mathbb {R}}^{d}\) is accomplished by \({\mathbf{y}}_{new}={\mathbf{P}}^\mathrm{T} {\mathbf{x}}_{new}\), where \({\mathbf{y}}_{new} \in {\mathbb {R}}^{r}(r\ll d)\).

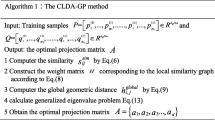

The details of the Apps-DAG-DNE are given in Algorithm 1.

4 Performance evaluation

4.1 Experiment setup

To evaluate the effectiveness of the proposed method, we have extensively validated our Apps-DAG-DNE method on two widely used datasets, i.e., Yale and Caltech-Leaves datasets, and the results are compared with NPE, DNE, LDNE and DAG-DNE. All of these methods are adopted to find the low-dimensional representations, which require the nearest neighbor parameter K for constructing adjacency graphs. For simplicity, the nearest neighbor (NN) classifier is used for classifying test images in the projected spaces.

All experiments are performed on the personal computer with a 2.30 GHz Intel(R) Core (TM) i5-6200 CPU and 8 G bytes of memory. This computer runs on windows 7, with MATLAB 2013a compiler installed.

4.2 Datasets

In order to study the performance and generality of different methods, we perform experiments on two image datasets:

-

(1)

The Caltech-Leaves dataset [25] consists of 186 images of 20 species of Leaves against cluttered different backgrounds. Each image was resized to \(32 \times 32\) pixels in the experiment. Figure 2 shows some image samples in Caltech-Leaves dataset.

-

(2)

The Yale face dataset [26] contains 165 images of 15 people. Each person has 11 images. Each image was manually cropped and resized to \(32 \times 32\) pixels. Figure 3 shows some image samples in Yale dataset.

4.3 Comparison methods

Here, we further compare the proposed Apps-DAG-DNE method with the following methods.

-

(1)

NPE [6]. NPE is an unsupervised method, which finds an embedding to preserve local information and can be extended to unseen samples.

-

(2)

DNE [9]. DNE is a supervised method, which keeps local structure by constructing an adjacency graph, and could find optimal projection directions by using spectrum analysis.

-

(3)

LDNE [12]. LDNE is also a supervised method, which optimizes the difference between the inter-class distance and the intra-class distance under constructing the adjacency graph by endowing different weights.

-

(4)

DAG-DNE [14]. DAG-DNE is also a supervised method, which finds the best projection matrix by constructing double adjacency graphs.

4.4 Performance metric

In the classification of high-dimensional data, many researchers (e.g., He [27], Zhang [28]) have used the classification accuracy to verify the performance of the methods. The performance of all methods is measured by classification accuracy which is calculated by

where CTest denotes the number of correctly classified test samples and Test denotes the number of all test samples.

4.5 Experimental results

4.5.1 Results of Caltech-Leaves recognition

In the experiment, we investigated the effect of the number of neighbors K and the ratio between the number of training and testing data to classification performance. First, we set \(K=3\) and evaluated the effect of ratio between the number of training and testing data that 60% and 90% of the training samples were randomly selected, and the remaining samples were used to test. Second, we chose 80% of samples as training samples and evaluated the effect of the number of neighbors. Without prior knowledge, K was set to be 1, 3, 5 and 7. PCA was utilized to reduce dimensionality from 1024 to 100, which could reduce the computational complexity and diminish the majority of noises. We regulated the number of projection vectors from 1 to 80 at an interval of 6, and the results were averaged over the 15 trials. Figure 4 shows the accuracy of five methods vs. dimensionality of subspace with different K, and Fig. 5 shows the accuracy of five methods vs. dimensionality of subspace with different training samples. From Figs. 4 and 5, we found that: (1) the performance of each method improved rapidly, and then almost became stable. (2) The DNE, LDNE, DAG-DNE and Apps-DAG-DNE performed better than NPE because NPE is an unsupervised method. More importantly, Apps-DAG-DNE performed better than DNE, LDNE and DAG-DNE across a wide range of dimensionality on the Caltech-Leaves dataset. (3) When the training sample size is large enough to sufficiently characterize the data distribution, such as the case for the 90% training samples on Caltech-Leaves dataset, all methods we discussed in this work can achieve good performance. Based on choosing appropriate points, our Apps-DAG-DNE delivered higher accuracy than other techniques, primarily due to advantages of choosing appropriate points to some extent.

Furthermore, Table 1 reports the best average classification accuracy on test sets of all methods under different K, and in Table 2, we summarize the statistics according to Fig. 5, including the mean accuracy, the best record and the optimal image subspace dimensions (i.e., Dim), where the optimal subspace corresponded to the highest recognition accuracy for each method in each setting. We made the following similar observations: (1) in spite of the variation of K, Apps-DAG-DNE had the highest classification accuracy among these methods. (2) Apps-DAG-DNE did not have the lowest number of dimensions of the subspace when achieving the best performance, e.g., 90% training samples, NPE: 28, DNE: 24, LDNE: 24, DAG-DNE: 24, Apps-DAG-DNE: 50. It is worth noting that when the number of dimensions of the subspace of Apps-DAG-DNE is 24, it yielded better performance than NPE, DNE, LDNE and DAG-DNE.

4.5.2 Results of Yale recognition

In this simulation, we focused on the effect of the dimensionality of subspace under different choices of nearest neighbor parameters K. Similar to the experiment on Caltech-Leaves, we set the numbers of nearest neighbors to be 1, 3, 5 and 7. We randomly selected 70% training samples from each class, where the remaining images were used for testing. For simplicity, PCA was utilized to reduce the number of dimensions from 1024 to 100. We repeated 15 trials and reported the average results. For each setting, the number of projection vectors was regulated from 1 to 80 at an interval of 6 for each fixed K value. Figure 6 shows the average accuracy values of the five methods versus the dimensionality of the subspace with different values of K on Yale dataset. From Fig. 6, we found that: (1) Apps-DAG-DNE performed better than NPE, DNE, LDNE, DAG-DNE across a wide range of dimensionality on the Yale dataset. (2) In spite of the variation of K, Apps-DAG-DNE had the highest classification accuracy among these methods. The major reason for this might have been that Apps-DAG-DNE chooses the appropriate points.

Furthermore, Table 3 reports the best average classification accuracy on test sets of all five methods under different K. We can see that Apps-DAG-DNE has the highest accuracy among these methods. By using Apps-DAG-DNE to learn subspace, we can not only reduce the computational complexity but also improve the classification performance.

5 Conclusion

In this paper, we proposed an appropriate points choosing method based on double adjacency graphs-based discriminant neighborhood embedding, called Apps-DAG-DNE, which chooses different points with traditional way to construct adjacency graphs. It chooses nearest neighbors to construct inter-class adjacency graph, which can build a margin between samples if they belong to the different classes, and chooses farthest points to construct intra-class graph, which can establish relationships between remote samples if and only if they belong to the same class. Therefore, the low-dimensional representations produced by the proposed method are close, even if they are separable in the original high-dimensional space. The experimental results show that Apps-DAG-DNE can be very effective for data classification. As for future research, we plan to introduce the knowledge of deep learning for discovering more discriminative subspace.

References

Turk MA, Pentland AP (1991) Face recognition using eigenfaces. In: Proceedings of the Conference on Computer Vision and Pattern Recognition. IEEE, pp 586–591

He XF, Yan SC, Hu YX, Niyogi P (2001) Face recognition using laplacianfaces. IEEE Trans Pattern Anal Mach Intell 27(3):328–340

Jolliffe I (2002) Principal component analysis, 2nd edn. Springer, Berlin

Roweis ST, Saul LK (2000) Nonlinear dimensionality reduction by locally linear embedding. Science 290(5500):2323–2326

He XF, Niyogi P (2003) Locality preserving projections. Adv Neural Inf Process Syst 16:153–160

He XF, Cai D, Yan SC, Zhang HJ (2005) Neighborhood preserving embedding. In: Proceedings of the Tenth International Conference on Computer Vision, vol 2. IEEE, pp 1208–1213

Martinez AM, Kak AC (2001) PCA versus LDA. IEEE Trans Pattern Anal Mach Intell 23(2):228–233

Yu H, yang J (2001) A direct LDA algorithm for high-dimensional data with application to face recognition. Pattern Recognit 34(10):2067–2070

Zhang W, Xue XY, Guo YF (2006) Discriminant neighborhood embedding for classification. Pattern Recognit 39(11):2240–2243

Yan SC, Xu D, Zhang BY, Zhang HJ (2005) Graph embedding: a general framework for dimensionality reduction. In: Proceedings of the Conference on Computer Vision and Pattern Recognition, vol 2. IEEE, pp 830–837

Yan SC, Xu D, Zhang BY, Zhang HJ, Yang Q, Lin S (2007) Graph embedding and extensions: a general framework for dimensionality reduction. IEEE Trans Pattern Anal Mach Intell 29(1):20–51

Gou JP, Zhang Y (2012) Locality-based discriminant neighborhood embedding. Comput J 56(9):1063–1082

Miao S, Wang J, Cao Q, Chen F, Wang Y (2016) Discriminant structure embedding for image recognition. Neurocomputing 174:850–857

Ding CT, Zhang L (2015) Double adjacency graphs-based discriminant neighborhood embedding. Pattern Recognit 48(5):1734–1742

Srivastava N, Rao S (2016) Learning-based text classifiers using the Mahalanobis distance for correlated datasets. Int J Big Data Intell 3(1):18–27

Lin WW, Pang XW, Wan BS, Li HF (2016) MR-LDA: an efficient topic model for classification of short text in big social data. Int J Grid High Perform Comput 8(4):100–113

Chen M, Li YH, Zhang ZF, Hsu CH, Wang SG (2016) Real-time and large scale duplicate image detection method based on multi-feature fusion. J Real-Time Image Process 13(3):557–570

Bao X, Zhang L, Wang BJ, Yang JW (2014) A supervised neighborhood preserving embedding for face recognition. In: Proceedings of the International Joint Conference on Neural Networks. IEEE, pp 278–284

Yu WW, Teng XL, Liu CQ (2006) Face recognition using discriminant locality preserving projections. Image Vis Comput 24(3):239–248

You GB, Zheng NN, Du SY, Wu Y (2007) Neighborhood discriminant projection for face recognition. Pattern Recognit Lett 28(10):1156–1163

Yang LP, Gong WG, Gu XH, Li WH, Liang YX (2008) Null space discriminant locality preserving projections for face recognition. Neurocomputing 71(16):3644–3649

Lu YQ, Lu C, Qi M, Wang SY (2010) A supervised locality preserving projections based local matching algorithm for face recognition. In: Advances in Computer Science and Information Technology, pp 28–37

Kokiopoulou E, Sadd Y (2007) Orthogonal neighborhood preserving projections: a projection-based dimensionality reduction technique. IEEE Trans Pattern Anal Mach Intell 29(12):2143–2156

Wang JH, Xu Y, Zhang D, You J (2010) An efficient method for computing orthogonal discriminant vectors. Neurocomputing 73(10):2168–2176

Caltech-Leaves dataset. http://www.vision.caltech.edu/html-files/archive.html. Accessed 30 Dec 2016

Yale dataset. http://vision.ucsd.edu/content/yale-face-database. Accessed 30 Dec 2016

Cai D, He XF, Han JW (2007) Spectral regression: a unified approach for sparse subspace learning. In: Proceedings of the Seventh International Conference on Data Mining. IEEE, pp 73–82

Zhang Z, Li FZ, Zhao MB, Zhang L, Yan SC (2016) Joint low-rank and sparse principal feature coding for enhanced robust representation and visual classification. IEEE Trans Image Process 25(6):2429–2443

Acknowledgements

This work was supported by the National Science Foundation of China (61571066 and 61472047).

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Ding, C., Wang, S. Appropriate points choosing for subspace learning over image classification. J Supercomput 75, 688–703 (2019). https://doi.org/10.1007/s11227-018-2687-9

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11227-018-2687-9