Abstract

High-efficiency video coding (HEVC) is a successor to the H.264/AVC standard as the newest video-coding standard using a quad-tree structure with the three block types of a coding unit (CU), a prediction unit (PU), and a transform unit (TU). This has become popular to apply to smart surveillance systems, because very high-quality image is needed to analyze and extract more precise features. On standard, the HEVC encoder uses all possible depth levels for determination of the lowest rate-distortion (RD) cost block. The HEVC encoder is more complex than the H.264/AVC standard. An efficient CU determination algorithm is proposed using spatial and temporal information in which 13 neighboring coding tree units (CTUs) are defined. Four CTUs are temporally located in the current CTU and the other nine neighboring CTUs are spatially situated in the current CTU. Based on the analysis of conditional probability values for SKIP and Merge modes, an optimal threshold value was determined for judging SKIP or Merge mode according to the CTU condition and an adaptive weighting factor. When SKIP or Merge modes were detected early, other mode searches were omitted. The proposed algorithm achieved approximately 35 % time saving with random-access configuration and 29 % time reduction with low-delay configuration while maintaining comparable rate-distortion performance, compared with HM 12.0 reference software.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

The Internet of Things (IoT) was Born between 2008 and 2009, when the number of things connected to the Internet exceeded the number of people connected. By 2020, several tens of billions of devices are predicted to be connected. It is envisioned that the physical things/devices will be outfitted with different kinds of sensors and actuators and connected to the Internet via heterogeneous access networks enabled by technologies such as embedded sensing and actuating, radiofrequency identification (RFID), wireless sensor networks, real-time, and semantic Web services [1]. IoT is actually a network of networks with many unique characteristics.

Based on IoT environment, the smart surveillance technology becomes a critical component in security infrastructures and the system architecture. To accomplish scalable smart video surveillance, an inference framework in visual sensor networks is necessary, one in which autonomous scene analysis is performed via distributed and collaborative processing among camera nodes without necessity for a high-performance server [2]. Also, the main goal of smart surveillance systems is to analyze the scene focusing on the detection and recognition of suspicious activities performed by humans in the scene, so that the security personnel can pay closer attention to these preselected activities. To accomplish that, several problems have to be solved first, for instance background subtraction, person detection, tracking and re-identification, face recognition, and action recognition. Even though each of these problems have been researched in the past decades, they are hardly considered in a sequence. But each one is usually solved individually [3, 4]. As rapid development of computing hardware, more high-quality networked videos such as full high definition (full HD) and ultrahigh definition (UHD) have become popular to analyze the scene and extract the contextual information more accurately. However, it is inevitable to design a fast scheme of video-encoding system to support real-time high-quality surveillance system [5].

High efficient video coding (HEVC) is the latest international video-coding standard issued by the Joint collaborative team on video coding (JCT-VC) [6], which is a partnership between the ITU-T Video Coding Experts Group (VCEG) and the ISO/IEC Moving Picture Experts Group (MPEG), two prominent international organizations that specify video-coding standards [7]. Increasing demands for high-quality full HD, UHD, and higher-resolution video necessitate bit-rate savings for broadcasting and video streaming. HEVC aims to achieve a 50 % bit-rate reduction, compared with the previous H.264/AVC video standard, while maintaining quality.

HEVC is based on a coding unit (CU), a prediction unit (PU), and a transform unit (TU). The CU is a basic coding unit analogous to the concept of macroblocks in H.264/AVC. However, a coding tree unit (CTU) is the largest CU size that can be partitioned into four sub-CUs of sizes from 64 \(\times \) 64 to 8 \(\times \) 8. An example of the CU partitioning structure is shown in Fig. 1. This flexible quad-tree structure leads to improved rate-distortion performance and also HEVC features for advanced content adaptability. The PU is the basic unit of inter/intra-prediction containing large blocks composed of smaller blocks of different symmetric shapes, such as square, rectangular, and asymmetric. The TU is the basic unit of transformation defined independently from the PU, but the size is limited to the CU, to which the TU belongs. Separation of the block structure into three different concepts allows optimization according to role, which results in improved coding efficiency [8]. However, these advanced tools cause an extremely high computational complexity. Therefore, a decrease in the computational complexity is desired.

Much effort has been exerted to reduce the complexity. Pai-Tse et al. [9] proposed a fast zero block detection algorithm based on SAD values using inter-prediction results. Features of the proposed algorithm were applied to different HEVC transform sizes. A 48 % of time saving for QP \(= 32\) was achieved. Zhaoqing Pan et al. [10] proposed a fast CTU depth decision algorithm using the best quad-tree depth of spatial and temporal neighboring CTUs, relative to the current CTU, for an early quad-tree depth 0 decision. Correlations between the PU mode and the best CTU depth selection were also used for a depth 3 skipped decision. A 38 % of time reduction for all QPs was achieved under common testing conditions.

Hassan Kibeya et al. [11] proposed a fast CU decision algorithm for the block structure encoding process. Based on early detection of zero quantized blocks, the number of CU partitions to be searched was reduced. Therefore, a significant reduction in encoder complexity was achieved and the proposed algorithm had almost no loss of bit-rate or PSNR, compared with HM 10.0 reference software. Also there are several schemes for HEVC [12] streaming issues that may involve this new state-of-the-art codec compared to MPEG-2 streaming issues [13] and H.264/AVC streaming issues [14], as well as wireless video delivery issues [15–18].

In this study, an early SKIP and Merge mode detection algorithm is proposed using neighboring block and depth information. In Sect. 2, related researches are presented. Statistical analysis and the proposed method are presented in Sect. 3. Experimental results and discussion are shown in Sect. 4. Conclusions are presented in Sect. 5.

2 Related works

The fast encoding technique of HEVC is essential to support real-time applications. Many approaches have been reported for fast HEVC encoder design. In this section, related work is presented. In this context, there are four approaches that are generally used to achieve a fast algorithm in HEVC.

2.1 Prediction-based approach

Efficient prediction techniques are effective to achieve a fast algorithm in HEVC. A spatial and temporal correlation-based method has been proposed [19] that depends upon a correlation index of a current CTU with its spatial and temporal neighbors. The CTU is classified into the three categories of High Similarity, Medium Similarity, and Low Similarity. A CTU in the High Similarity category will have a small depth search range, a medium range in the Medium Similarity category, while a Low Similarity CTU will experience the entire depth range from 0 to 3. The algorithm provides an approximate 25 % time saving with a 0.16 % bit-rate increment.

Another method [20] adaptively determines the CU depth range using a tree block property in which small depth levels are always selected at tree blocks in homogeneous regions, and large depth levels are selected at tree blocks with active motion or rich texture, so that motion estimation can be skipped. In [21], an early CU termination algorithm has been proposed, which is commonly known as ECU. As ECU mechanism, no further processing of sub-tree is required when the current CU selects the SKIP mode as the best mode. An early skip detection (ESD) was reported by Yang et al. [22]. By checking the differential motion vector (DMV) and a coded block flag (CBF) after searching the best INTER 2N \(\times \) 2N mode, it decides whether more searches are needed. After selecting the best INTER 2N \(\times \) 2N mode which has the minimum RD cost, this algorithm checks the DMV and CBF. If DMV and CBF of the best INTER 2N \(\times \) 2N mode are equal to (0, 0) and zero, respectively, the best mode of the current CU is determined as SKIP mode early.

Generally, bi-prediction is effective when a video contains scene changes, camera panning, zoom-in/out, and fast scenes. The RD costs of the forward and backward prediction increase when bi-prediction is the best prediction mode [23]. A bi-prediction skipping method that can efficiently reduce the computational complexity of bi-prediction is presented with the assumption that if bi-prediction is selected as the best prediction mode, the RD costs of blocks included in both the forward and backward lists can be larger than the average RD cost of previous blocks, which are coded based on forward and backward prediction.

2.2 Probabilistic approach

A modeling-based CU selection method has been proposed [24] using the statistical observation that all CUs with pyramid variance of the absolute difference (PVAD) tend to be encoded at the same depth. The CU selection process is modeled as a Markov random field (MRF) inference problem optimized using a graph cut algorithm. Termination of CU splitting is determined based on an RD cost-based maximum a posteriori (MAP) approach. A proposed algorithm achieved a 53 % time savings with a bit-rate increment of 1.32 % [24] with a proposed extension [25] in which instead of using PVAD, only VAD was used because VAD is proportional to the RD cost similar to [24]. A binary classification modeled CU selection method has also been proposed [26]. An off-line support vector machine (SVM) model optimized using weights for training samples was used to classify a CU as either split or unsplit. Weights were generated as an RD-cost difference based on misclassification. SVM was applied for feature vectors to make the CU splitting termination decision. A 44.7 % reduction in complexity was achieved with 1.35 % bit-rate increment in the RA configuration, and a 41.9 % time savings with a 1.66 % bit-rate increment in the LD configuration.

Bayes’ rule-based early CU splitting and a pruning decision method have been proposed [27]. In early split testing, Bayes’ rule based on a low complexity RD cost can be used to determine whether a current CU should be split. For no splitting, a CU early pruning test is performed. A pruning decision is made based on the full RD cost of the CU. Features for both tests are periodically updated online. The method achieved an approximate 50 % encoding time reduction with only a 0.6 % increment in bit-rate.

A pyramid motion divergence (PMD)-based fast inter-CU selection method has been proposed [28] based on the statistical property that CUs with similar PMD values have the same splitting mode. Optical flow is used to find MVs so that PMD can be estimated. Optical flow estimation causes a 9 % computational burden. This method achieved an approximate 41 % time savings with an approximate 1.9 % bit-rate increment.

Based on statistical analysis, the three approaches known as SKIP mode decision (SMD), CU skip estimation (CUSE), and Early CU Termination (ECUT) have been proposed [29]. SMD is used to determine if the remaining modes, except for the SKIP mode, are performed or not. CUSE and ECUT are used to determine if large and small CU sizes are coded, respectively. Thresholds for SMD, CUSE, and ECUT are designed based on Bayes’ rule with a complexity factor. An update process is performed for estimation of statistical parameters for SMD, CUSE, and ECUT considering the characteristic RD cost. Experimental results demonstrated that the proposed CU size decision algorithm significantly reduced the computational complexity by 69 %, on average, with a 2.99 % BDBR increase.

2.3 Advanced RD-cost calculation approach

An advanced RD-cost calculation method concentrating on rate distortion optimization (RDO) was proposed [30]. Top SKIP and Early Termination were used to reduce the number of RD-cost estimations. For Top SKIP, the starting depth and larger depths of \(64\times 64\) and \(32\times 32\) are skipped if high correlation exists between the minimum depth of the current CTB and the collocated CTB in the previous frame. Early Termination is a process that is complementary to Top SKIP in which the CU splitting process is terminated if the best RD cost for the current CU is lower than a threshold value that is adaptively calculated using both spatial and temporal features. This method reduced the encoding time by up to 45 % with a negligible quality loss within 0.1dB.

Apart from fast mode decision algorithms, research has focused on improving the rate-distortion calculation technique. In this context, a mixed Laplacian-based RD-cost calculation scheme has been proposed [31] in which inter-predicted residuals exhibited different statistical characteristics for CU blocks in different depth levels. Based on the mixed Laplacian distribution, experimental results showed that the proposed rate and distortion models were capable of better estimation of actual rates and distortions than a single Laplacian distribution.

3 Proposed algorithm

3.1 Problem and motivation

Some fast algorithms can be divided into the three parts of CU splitting termination, fast prediction unit (PU) methods, and fast transform schemes. CU splitting termination has a quad-tree structure and recursively performs the \(64\times 64\) size to the \(8\times 8\) size. If the best CU can be determined, only essential CUs are split [32] with the best mode information in the upper level. This approach has a low bit ratio, because detection conditions are intuitive. However, if the condition boundary is widened, the quality loss is increased greatly. Also, it is difficult to select the best mode because the characteristic of the best mode is easily changeable. Therefore, unique characteristics are required for a particular mode. An encoder performs a mode decision process using the most common prediction mode after a size decision for the CU in the process of CU splitting. If the best mode can be predicted in advance, the search time for the mode decision process can be reduced. ECU is used for this approach. However, these types of algorithms are incompatible with other algorithms due to omission of the existing routine in advance.

The TU process recursively partitions the structure of a given prediction block into transform blocks that are represented by the residual quad-tree (RQT). An RD-cost evaluation is performed multiple times within each quad-tree structure as 1 time for a \(32\times 32\) TU, 4 times for a \(16\times 16\) TU, and 16 times for an \(8\times 8\) TU. Early Termination allows only performing the TU size that has been selected as the best size to effectively reduce time consumption. However, this approach requires many RD-cost computations. Therefore, it is suitable for use in a supporting role rather than performing a single algorithm. These problems due to use of a quad-tree structure as the most common prediction mode and transformation in RQT can be solved by avoiding unnecessary processes and the number of executions can be reduced significantly. However, there is room for improvement using spatial and temporal information for detection of SKIP and Merge modes to achieve speedup of the encoding system.

Most real-world video sequences contain a substantial amount of background and many motionless objects with high temporal correlations between successive frames and spatial correlations among adjacent pixels. Generally, these kinds of motionless regions are encoded as SKIP or Merge mode. If a CB is encoded as SKIP or Merge mode, then only the SKIP flag and corresponding Merge index are transmitted to the decoder side, which ensures that SKIP and Merge mode requires a minimum number of bits. Typical video contents include large homogenous regions and many motionless objects. These characteristics are described in Fig. 2.

When a CB is encoded as SKIP mode in HEVC, two conditions should be satisfied. One is that the motion vector difference between the current 2N\(\times \)2N PU and the neighboring PU is zero, and the another is that residuals are all quantized to zero. The CTU structure and mode decision result of a video frame from the Cactus sequence is shown in Fig. 3 where green areas represent intra-mode and red represents inter-mode. Blue and gray regions describe PU and TU splits, respectively. Yellow areas indicate blocks that are encoded as SKIP mode. Most of the stationary regions of the video frame are encoded as SKIP mode Fig. 2.

Large portions of regions that are encoded as SKIP mode exist. To distinguish these regions as SKIP or Merge mode, an efficient algorithm is needed prior to the beginning of the RD-cost calculation process. Further statistical analysis was performed using different sequences and QP values to determine CUs as SKIP. In depth level 0, the portion of SKIP as the best mode was 81.37 % on average. With a depth level of 1, 66.46 % SKIP mode was determined, on average (in Table 1).

When the depth level was deeper, the amount of SKIP mode decreased. When QP value was high, the portion of SKIP mode increased. Although the BQTerrace and BasketballPass sequences have more complicated motion activities than other sequences, they provide a sufficient amount of SKIP mode. Also, the characteristic that stationary blocks tend to be positioned around each other was observed. The development of the proposed algorithm was motivated by this observation and analysis.

3.2 An early SKIP and Merge mode detection method

In natural pictures, neighboring blocks usually exhibit similar textures. Optimal inter-prediction of a current block may be strongly correlated with adjacent blocks. Homogenous blocks, which are encoded as SKIP or Merge mode, especially tend to be located adjacent to each other (Table 1).

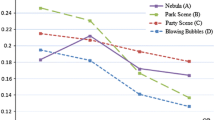

Based on this consideration, the conditional probability P(O|NC) was analyzed, where neighboring CTUs (NC) represented the event that a current CTU was encoded as depth 0 and SKIP mode, CBF, and Merge mode flag were true with NC = A, B, C, D, E, F, G, H, I, J, K, L, M corresponding to left, above-left, above, above-right, collocated-current, collocated-left, collocated-above-left, collocated-above, collocated-above-right, collocated-right, collocated below-right, collocated-below, and collocated-below-left as shown in Fig. 4. Also, O indicated the event that the current CTU was encoded as depth 0 and flags were true. Thirteen defined blocks as CTUs from A to M were used in Fig. 4. B and C class sequences were used based on the degree of motion. The conditional probabilities of adjacent CTUs were skipped when the current CTU was skipped (Tables 2, 3, 4).

The temporal CTUs A, B, C, and D had probability values from 76.0 to 77.1 %, and the spatial CTUs E, F, G, H, I, J, K, L, and M had probability values from 69.6 to 73.2 %, on average, in the class B Cactus sequence (Table 2). Also, in class B BQTerrace sequence, temporal CTUs had probability values from 78.5 to 80.1 % and spatial CTUs had probability values from 58.6 to 62.8 %. Also, each of these probabilities tended to follow the above trends from Tables 2, 3, and 4.

From experimental results, when reference CTUs were encoded as SKIP mode more than as Merge mode in depth level 0, the current CTU had a high probability to be encoded as SKIP or Merge mode, especially when the spatial CTUs A, B, C, and D, which are spatially located next to current CTU, were used. Also, the temporal CTUs E, F, G, H, I, J, K, L, and M were temporally located next to the current CTU. Based on these observations, the two groups of spatial CTUs and temporal CTUs were defined. Then, weighting factors were determined for each group and an equation was defined as:

where ETC indicates Early Termination. When the equation is satisfied, other inter-prediction processes are omitted. \(C_{n}\) represents the availability of neighboring CTUs. When CTU has SKIP flag as true or CBF is 0 or Merge flag is true, \(C_{n}\) is set to 1. Otherwise, \(C_{n}\) is 0. \(\lambda _{n}\) is a weighing factor based on a conditional probability. For spatial CTUs, \(\lambda _{n}\) is set to 1.0 and for temporal CTUs it is set to 0.75. The equation is only one form, but the three thresholds of \(T_{\mathrm{SKIP}}\), \(T_\mathrm{{CBF}}\), and \(T_\mathrm{{Merge}}\) can be obtained with individual flags: \(T_\mathrm{{SKIP}}\) using the SKIP flag, \(T_\mathrm{{CBF}}\) using the CBF flag, and \(T_\mathrm{{Merge}}\) using the Merge flag. Therefore, each has a separate threshold value. To obtain the optimal value of \(\alpha \), additional analysis was performed (Table 5). The optimal threshold value of \(\alpha \) was determined to be 4.

3.3 Overall procedure

The overall procedure and flow of the proposed algorithm are shown in Fig. 5. First, the inter-prediction stage starts after the depth of the current block is checked. When the depth is 0, we go to the next step; otherwise, original regular routines are processed. Next, 3 Early Termination Conditions (ETCs) are calculated. ETC\(_\mathrm{{SKIP}}\) is intended for SKIP mode based on the SKIP flag. ETC\(_\mathrm{{CBF}}\) is also used for SKIP; however, the coded block flag (CBF) is used to check the KIP mode. Lastly, ETC\(_\mathrm{{Merge}}\) is intended for Merge mode based on the Merge flag. ETCs are determined based on Eq. 1. Then, ETC\(_\mathrm{{SKIP}}\) is compared with \(\alpha \).

Experimentally, the optimal \(\alpha \) value was determined to be 4. When ETC\(_\mathrm{{SKIP}}\) is greater than or equal to \(\alpha \), we move to the final stage. If not, original regular routines are processed. The final stage is determination of whether the ETC is the lowest value among neighboring CTUs that have SKIP flag as true. When the ETC is lowest, the best mode for the current CTU is determined as SKIP mode, and then we move to encode the next CTU. However, if not, regular routines are processed. ETC\(_\mathrm{{CBF}}\) and ETC\(_\mathrm{{Merge}}\) use the same procedure except for the use of individual flags. Calculation of ETCs requires ETC\(_\mathrm{{SKIP}}\) to use the SKIP flag, ETC\(_\mathrm{{CBF}}\) to use CBF, and ETC\(_\mathrm{{Merge}}\) to use the Merge flag for detection of the Merge mode.

4 Simulation result and evaluation

4.1 Test conditions

The proposed algorithm was tested and evaluated on HM reference software, version 12.0. All experiments were conducted using an Intel Core (TM) i7-3770 @ 3.4 GHz with 16GB of RAM. The test conditions were

-

For each test sequence, 50 frames were encoded.

-

The group of picture (GOP) size was set to 8.

-

A value of 64 was used for the search range.

-

All experiments were performed for the four quantization parameters (QP) of 22, 27, 32, and 37.

-

Both random access (RA-Main) and low delay (LB-Main) profiles were considered.

-

Previously defined configurations [34] were used.

-

The fast encoder setting (FEN), early CU setting (ECU), and CBF fast mode setting (CFM), adaptive motion search range (ASR), and Early SKIP detection setting (ESD) were OFF.

-

Test sequences which different resolutions and belonging to different classes were used (Table 6).

-

Class A was not used for the LB-Main profile [34].

4.2 Performance evaluation

The proposed algorithm was evaluated using HM reference software version 12.0. The four parameters were (1) time required to encode a video sequence, (2) the number of bits in the encoded bit-stream, (3) the corresponding peak signal-to-noise-ratio (PSNR) value, and (4) BDBR. Bit-rate, PSNR, TS, and BDBR values were calculated for comparison of the proposed algorithm with HM 12 as:

where \(\Delta \)Bit-rate indicates the total bit-rate change (percentage), \(\Delta \mathrm{{PSNR}}_{Y}\) indicates the \(\mathrm{{PSNR}}_{Y}\) changes, and \(\Delta T\) is the time-saving factor (percentage). For bit-rate, a positive value indicated an increase in the number of bits and a negative value indicated a decrease. For \(\Delta \mathrm{{PSNR}}_{Y}\), an improvement was a positive value and a negative value indicated degradation. A negative value for the time-saving factor indicated that the algorithm consumed less time than the original reference software.

The experimental results are shown in Tables 7 and 8 for RA-main and LB-main profiles, respectively. The proposed algorithm provided, on average, 35.41 and 36.88% encoding time reductions \((\Delta T\)) for RA-Main and the LB-Main profiles, respectively, with a similar perceptual video quality. Bjontegaard Delta (BD) rate values are shown in the BDBR value, including both BD-PSNR and BD-Bit-rate [33] and all tests were carried out under common test conditions and configurations [34].

For the RA-Main configuration, the Traffic, ParkScene, Cactus, BasketballDrive, BQTerrace, BasketballPass, and BQSquare sequences exhibited good time savings with a trivial quality loss. Even with a stationary background with a high degree of motion in all of these sequences, the proposed algorithm achieved good performance. The BQMall Sequence with a rich texture, camera movement, and many objects exhibited good time savings, but with a higher degree of quality loss. The PeopleOnStree and RaceHorses sequences exhibited time saving with a trivial quality loss. The PeopleOnStreet sequence contains many objects with irregular movement. The background in the RaceHorses sequence is erratic and the sequence contains camera movement. Little SKIP mode was used in this sequence.

For the LD-Main configuration, the results were similar, but quality loss was larger. Thus, the proposed scheme is appropriate for use with the RA-Main configuration. The proposed algorithm achieved an 18.06–49.52 % of time reduction with a 0.6–1.3 % of BDBR for the RA-Main configuration with class A sequences. For class B sequences, a 28.92–45.38 % of time reduction with a 0.3–1.2 % of BDBR increment under the RA-Main profile was achieved, and a 22.48–42.04 % time savings with a 0.6–3.4 % of BDBR increment under LD-Main configuration was achieved. For class C sequences, a 26.86–30.90 % of encoding time saving with a 0.1–0.5 % BDBR increment under the RA-Main configuration was achieved, and a 15.64–26.34 % of time reduction with 0.7–4.6 % of BDBR increment under the LD-Main configuration was achieved. In class D sequences, a 16.10–43.57 % of time reduction with a 0.4–0.6 % of BDBR increment under the RA-Main configuration was achieved. In the LD-Main configuration, 12.01–36.83 % of time-saving factor with a 0.1–0.5 % of BDBR increment was achieved.

Tables 9 and 10 show the performance comparison with the existing methods, respectively. The proposed algorithm achieved more time reduction than other well-known algorithms with considerable loss. For ECU, unnecessary recursive routines were used. However, the proposed algorithm detected many SKIP and Merge modes at a depth level of 0. Thus, the proposed algorithm reduced the number of unnecessary recursive routines. It means that the proposed algorithm can reduce unnecessary routines. For ESD [22], the reported method processed all prediction stages in the beginning step. However, the proposed algorithm decided a proper CU node before the prediction stage started. Thus, the algorithm resulted in more speedup than for ESD [22].

When compared with Sampaio’s method [35], 12 % of average time-saving factor was achieved with similar BDBR under the RA-Main configuration. For Yang’s method [36], a similar performance was observed (about 12 % of time-saving factor compared to Yang’s method). ECU [21] seems good in terms of BDBR performance.

In case of the LD-Main configuration, the proposed algorithm achieved about 30 % of average time-saving factor while keeping 2.2 % of BDBR performance. For BQMall sequence (Class C), the suggested algorithm gave a little large BDBR loss. In other sequences, we can see that the proposed scheme achieves a reliable performance. In case of Sampaio’s and Yang’s algorithms, 23.79 and 17.93 % of time reductions were observed in the average encoding time, respectively. Compared to Sampaio’s method [35], the proposed algorithm yielded over 16 % of time reduction. For Yang’s algorithm [36], up to 24 % of time-saving factor was achieved. From the results, we are able to deduce that the proposed algorithm is very effective in the HEVC encoding system.

5 Conclusions

An efficient CU determination algorithm was proposed using neighboring block information for the smart surveillance system. The proposed algorithm was based on the characteristics of natural video sequences using conditional probability values between a current block and adjacent blocks. The SKIP flag and CBF were used to detect the SKIP mode. The Merge flag was examined to identify the Merge mode. When adjacent CTUs were selected as SKIP or Merge mode, further processes were omitted.

One of the most important challenges for fast algorithm development in HEVC is a trade-off between video quality and encoding time reduction. An encoding time reduction of more than 35 % in random-access configuration and a 29 % encoding time saving in low-delay configuration with a negligible video quality loss and bit-rate increment were achieved. The proposed scheme can support to make real-time HEVC video system in the networked smart surveillance system. For further work, we should consider a fast prediction unit (PU) decision scheme to obtain more spedup gain, including a selection of transform unit (TU) size, adaptively.

References

Gubbi J, Buyyab R, Marusic S, Palaniswami M (2013) Internet of Things (IoT): a vision, architectural elements, and future directions. Future Gen Comput Syst 29:1645–1660

Shu C-F, Hampapur A, Lu M, Brown L, Connell J, Senior A, Tian Y (2005) IBM smart surveillance system (S3): a open and extensible framework for event based surveillance. In: IEEE Conference on Advanced Video and Signal Based Surveillance, pp 318–323

Cho Y, Lim SO, Yang HS (2010) Collaborative occupancy reasoning in visual sensor network for scalable smart video surveillance. IEEE Trans Consum Electron 56(3):1997–2003

Nazare AC, dos Santos CE, Ferreira R, Robson Schwartz W (2014) Smart surveillance framework: a versatile tool for video analysis. In: IEEE Winter Conference on Applications of Computer Vision (WACV), pp 753–760

Castro-Munoz G, Martinez-Carballido J (2015) Real time human action recognition using full and ultra high definition video. In: International Conference on Computational Science and Computational Intelligence (CSCI), pp 509–514

Bross B, Han W-J, Sullivan GJ, Ohm J-R, Wiegand T (July 2012) High efficiency video coding (HEVC) text specification draft 8, ITU-T/ISO/IEC Joint Collaborative Team on Video Coding (JCT-VC) document JCTVC-J1003

Sullivan GJ et al (2012) Overview of the high efficiency video coding (HEVC) standard. IEEE Trans Circuits Syst Video Technol 22(12):1649–1668

Il-Koo K et al (2012) Block partitioning structure in the HEVC standard. IEEE Trans Circuits Syst Video Technol 22(12):1697–1706

Chiang P-T, Chang TS (2013) Fast zero block detection and early CU termination for HEVC video coding. In: IEEE International Symposium on Circuits and Systems (ISCAS), pp 1640–1643

Pan Z, Kwong S, Zhang Y, Lei J (2014) Fast coding tree unit depth decision for high efficiency video coding. In: IEEE International Conference on Image Processing (ICIP), pp 3214–3218

Kibeya H, Belghith F, Ben Ayed MA, Masmoudi N (2014) A fast CU partitionning algorithm based on early detection of zero block quantified transform coefficients for HEVC standard. In: IEEE International Conference on Image Processing, Applications and Systems (IPAS), pp 1–5

Schierl T, Hannuksela MM, Wang Y-K, Wenger S (2012) System layer integration of high efficiency video coding (HEVC). IEEE Trans Circuits Syst Video Technol 22(12):1871–1884

Psannis KE, Hadjinicolaou M, Krikelis A (2006) MPEG-2 streaming of full interactive content. IEEE Trans Circuits Syst Video Technol 16(2):280–285

Wenger S (2003) H.264/AVC over IP. IEEE Trans Circuits Syst 13(7):645–656

Stockhamme T, Hannuksela MM, Wiegand T (2003) H.264/AVC in wireless environments. IEEE Trans Circuits Syst Video Technol 13(7):657–673

Psannis K, Ishibashi Y (2008) Efficient flexible macroblock ordering technique. IEICE Trans Commun E91–B(08):2692–2701

Psannis KE (2015) HEVC in wireless environments. J Real Time Image Process. doi:10.1007/s11554-015-0514-6 (online published)

Psannis K, Ishibashi Y (2006) Impact of video coding on delay and jitter in 3G wireless video multicast services. EURASIP J Wirel Commun Netw 2006, Article ID 24616, 1–7

Zhang Y, Wang H, Li Z (2013) Fast coding unit depth decision algorithm for inter-frame coding in HEVC. In: Proceedings of Data Compression Conference, pp 53–62

Shen L, Liu Z, Zhang X, Zhao W, Zhang Z (2013) An effective cu size decision method for HEVC encoders. IEEE Trans Multimed 15(2):465–470

Choi K, Park S-H, Jang ES (2011) Coding tree prunning based CU early termination. Document JCTVC-F092, JCT-VC

Yang J, Kim J, Won K, Lee H, Jeon B (2011) Early SKIP Detection for HEVC, document JCTVC-G543. JCV-VC, Geneva Switzerland

Kim J, Jeong S, Cho K, Choi JS (2012) An efficient bi-prediction algorithm for HEVC. In: International Conference on Consumer Electronics (ICCE), Las Vegas

Xiong J, Li H, Meng F, Zeng B, Zhu S, Wu Q (2014) Fast and efficient inter CU decision for high efficiency video coding In: Proceedings of IEEE International Conference Image Processing, pp 3715–3719

Xiong J, Li H, Zhu S, Wu Q, Zeng B (2014) MRF-based fast HEVC inter CU decision with the variance of absolute differences. IEEE Trans Multimed 16(8):2141–2153

Shen X, Yu L (2013) CU splitting early termination based on weighted SVM. EURASIP J Image Video Process 2013(1):1–11

Cho S, Kim M (2013) Fast CU splitting and pruning for suboptimal CU partitioning in HEVC intra coding. IEEE Trans Circuits Syst Video Technol 23(9):1555–1564

Xiong J, Li H, Wu Q, Meng F (2014) A fast HEVC inter CU selection method based on pyramid motion divergence. IEEE Trans Multimed 16(2):559–564

Lee J, Kim S, Lim K, Lee S (2015) A fast CU size decision algorithm for HEVC. IEEE Trans Circuits Syst Video Technol 25(3):411–421

Cassa M, Naccari M, Pereira F (2012) Fast rate distortion optimization for the emerging HEVC standard. In: Proceedings of Picture Coding Symposium (PCS), pp 493–496

Lee B, Kim M (2011) Modeling rates and distortions based on a mixture of laplacian distributions for inter-predicted residues in quadtree coding of HEVC. IEEE Signal Process Lett 18(10):571–574

Pan Z, Kwong S, Zhang Y, Lei J (2014) Fast coding tree unit depth decision for high efficiency video coding. In: IEEE International Conference of Image Processing (ICIP), pp 3214–3218

Li X, Wien M, Ohm JR (2010) Rate-complexity-distortion evaluation for hybrid video coding. In: IEEE International Conference Multimedia and Expo, Singapore, July 19–23

Bossen F (2012) Common test conditions and software reference configurations. Document JCTVC-I1100, JCT-VC, Geneva, Switzerland

Sampaio F, Bampi S, Grellert M, Agostini L, Mattos J (2012) Motion vectors merging: low complexity prediction unit decision heuristic for the inter-prediction of HEVC encoders. In: International Conference on Multimedia and Expo (ICME), pp 657–662

Yang S, Lee H, Shim HJ, Jeon B (2013) Fast inter mode decision process for HEVE encoder. In: The IEEE 11th Image, Video, and Multidimensional Signal Processing (IVMSP) Workshop, pp 1–4

Acknowledgments

This Research was supported by the Sookmyung Women’s University Research Grants (1-1603-2006).

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Kim, BG. Fast coding unit (CU) determination algorithm for high-efficiency video coding (HEVC) in smart surveillance application. J Supercomput 73, 1063–1084 (2017). https://doi.org/10.1007/s11227-016-1730-y

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11227-016-1730-y