Abstract

We present a new way to find clusters in large vectors of time series by using a measure of similarity between two time series, the generalized cross correlation. This measure compares the determinant of the correlation matrix until some lag k of the bivariate vector with those of the two univariate time series. A matrix of similarities among the series based on this measure is used as input of a clustering algorithm. The procedure is automatic, can be applied to large data sets and it is useful to find groups in dynamic factor models. The cluster method is illustrated with some Monte Carlo experiments and a real data example.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Most procedures for clustering time series look at the similarity of the elements of a set of times series and build a measure of distance between two series by using their univariate features. Piccolo (1990) proposed a distance measure for classifying ARMA models using the autoregressive representation of the process. Their properties are further studied in Corduas and Piccolo (2008). Xiong and Yeung (2004) applied a model-based approach using mixtures of autoregressive moving average (ARMA) models and the EM algorithm to estimate the parameters. Scotto et al. (2011) and D’Urso et al. (2017) use the extreme value behaviour for clustering environmental time series. Liao (2005) surveys the time series clustering procedures available from the perspective of machine learning. From the Bayesian approach, Fruhwirth-Schnatter and Kaufmann (2008) build groups by using finite-mixture models estimated by Bayesian Markov Chain Monte Carlo simulation methods. Pamminger and Fruhwirth-Schnatter (2010) developed a model based approach for categorical time series. Also, in the model based approach, Alonso et al. (2006) and Vilar-Fernández et al. (2010) cluster time series by using the forecast densities. Specific frequency-domain methods for discrimination and clustering analysis of time series were proposed by Maharaj (2002) and by Caiado et al. (2006) or in the fuzzy framework by Maharaj and D’Urso (2011). Pértega and Vilar (2010) compared several parametric and nonparametric approaches. Zhang et al. (2011) introduced a two step method in which one-nearest neighbor network is built based on the similarity of time series with the triangle distance, and second the nodes with high degrees are used to cluster. Zhang (2013), Sadahiro and Kobayashi (2014) and Aghabozorgi and Wah (2014) analized methods for high-dimensional time series. Recent surveys of the field can be found in Aghabozorgi et al. (2015) and Caiado et al. (2015). The R library TSclust implements many of the previous mentioned clustering procedures (see Montero and Vilar 2014). These methods are useful when we have independent time series and the objective is to cluster them by similarity of their univariate models, in a parametric framework, or by similarity of their periodograms or autocorrelation functions.

For a set of independent realizations of vectors of stationary time series Kakizawa et al. (1998) developed measures of disparity between these vectors using the Kullback–Leibler and Chernoff information measures and proposed a spectral approximation to define quasi-distances between the time series. These measures are then used in a hierarchical, or k-means partitioned, cluster algorithm.

In this article we propose a procedure to cluster time series by their linear dependency. Our approach is general and nonparametric, as it does not assume any model for the series, and it is based on the cross-correlation coefficients between them. Golay et al. (2005) and Douzal-Chouakria and Nagabhushan (2007) have proposed using variants of the instantaneous cross-correlation, but their approach take into account the sign of the cross-correlation. Clustering by dependency has recently been analyzed by Ando and Bai (2016, 2017) assuming that the vector of time series is generated by a Dynamic Factor Model where some factors affect different groups of series. They propose an iterative estimation algorithm to find the clusters based on factor model estimation.

The contributions of this article are as follows. We first justify in Sect. 2 that clustering by similar linear dependency, taking into account the cross correlations, will produce different results than clustering for similar univariate structure, using the autocorrelations. The main contribution of this article appears in Sect. 3, that defines a general measure of linear association between two time series, the generalized cross correlation (GCC), which has useful properties for measuring their linear dependency. In particular, it is shown in Sect. 4 that is equivalent to using a dynamic version of the mutual information between two series. Section 5 derives an objective criterion for the choice of k, the number of lags to be included in building the GCC, proposes a consistent estimate of this measure and explains its use for clustering time series. Section 6 illustrates in a Monte Carlo study that the proposed procedure has a good performance on cross-dependency clustering compared to both some univariate clustering procedures and to the dependency cluster methods proposed by Douzal-Chouakria and Nagabhushan (2007) and Ando and Bai (2016). Finally, we apply our procedure to series of electricity prices in Sect. 7. Some brief conclusions are presented in Sect. 8.

2 Univariate versus bivariate clustering

Suppose two stationary linear processes, \(y_{t}\) and \(x_{t}\), which follow a vector ARMA\((p_{b}\), \(q_{b})\) model

where \(a_{1t}\) and \(a_{1t}\) are white noise processes with covariance matrix \(\Sigma _{a}\) and the polynomials \(\phi _{ij}(B)\) and \(\theta _{ij}(B)\) are of the form \(A_{ii}(B)=1-a_{1ii}B-\cdots -a_{rii}B^{r}\) or \(A_{ij}(B)=-a_{1ij} B-\cdots -a_{rij}B^{r}\). The univariate models for the time series are

and

where \(\alpha (B)=(\phi _{11}(B)\phi _{22}(B)-\phi _{12}(B)\phi _{21}(B))\) is a polynomial of maximum order \(2p_{b}\) and \(\beta _{j}(B)\) for \(j=1,\ldots ,4\) are polynomials of maximum order \(p_{b}+q_{b}\). As the sum of two MA processes that do not have lag cross correlation is another MA process, the univariate models will be ARMA\((p_{u},q_{u})\) with \(p_{u}\le 2p_{b}\), and MA order, \(q_{u}\le p_{b}+q_{b}\) (see Granger and Morris 1976). These models will be, in general, different in both series. For instance, suppose a \(\textit{VAR}(1)\) with \(\phi _{11}(B)=1,\phi _{12}(B)=0\), \(\phi _{21}(B)=-aB\), \(\phi _{22}(B)=1-\phi B\). Then \(x_{t}\) is white noise, whereas \(y_{t}\) follows an AR(1), if \(\Sigma _{a}\) is diagonal, or it follows an ARMA(1,1) if the noises have contemporaneous correlation.

Thus, using a cluster method based on similar univariate properties of the series may classify two series strongly related in different groups, and put together independent series that follow similar models. These procedure will not be useful if we want to find cluster of related series, as it is usually the objective in many applications. A possible strategy for clustering time series could be to use a two step method. First, the series are clustered by their dependency, and, second, these more homogeneous clusters can be broken down into new clusters of similar univariate structure. In this article we will concentrate in this first stage, the second can be carried out by the procedures discussed in the Introduction.

3 The generalized cross correlation measure between two time series

3.1 Definition of the generalized cross correlation measure

We want to define a general measure of linear dependency among two stationary time series, \(x_{t}\) and \(y_{t}\). Suppose that, without loss of generality, the series are standardized so that \(E(x_{t})=E(y_{t})=0\) and \(E(x_{t} ^{2})=E(y_{t}^{2})=1.\) Call \(\rho _{xx}(h)=E(x_{t-h}x_{t})=\)\(\rho _{x}(h)\) and \(\rho _{xy}(h)=E(x_{t-h}y_{t})=\rho _{yx}(-h)\). We assume that the two series are not deterministic and given any finite vector \(a=(a_{1},\ldots ,a_{k})\) and series \(X_{t,k}=(x_{t},\ldots ,x_{t-k})\), P(\(a^{\prime }X_{t,k}=0)=0.\)

The measure of dependency we search for, \(C(x_{t},y_{t})\), should verify the following properties: (1) \(0\le C(x_{t}\), \(y_{t})\le 1\); (2) \(C(x_{t} ,y_{t})=1\) if and only if there is an exact linear relationship between the two series; (3) \(C(x_{t},y_{t})=0\) if, and only if, all the cross correlation coefficients between the two time series are zero; (4) If both series are white noise and \(\rho _{xy}(h)=0\) for \(h\ne 0\), then \(C(x_{t},y_{t})=\rho _{xy}^{2}(0)\).

We can summarize the linear dependency at lag h by the matrix

and, in the same way, the linear dependency for lags between 0 and k can be summarized by putting together the \((k+1)\) square matrices of dimension two that describe the dependency at each lag, as

The matrix \(\mathbf {R}_{k}\) is symmetric non negative definite, and it corresponds to the covariance matrix of the vector stationary process \((x_{t},y_{t},x_{t-1},y_{t-1},\ldots ,x_{t-k}\), \(y_{t-k})^{\prime }\). We can arrange the components of the vector as \(Z_{t,2(k+1)}=(Y_{t,k}^{\prime },X_{t,k}^{\prime })^{\prime }\), with covariance matrix

where \(\mathbf {R}_{xx,k}\) is the \((k+1)\) squared and positive definite covariance matrix of the standardized vector of series \(X_{t,k}=(x_{t} ,x_{t-1},\ldots ,x_{t-k})^{\prime }\), \(\mathbf {R}_{yy,k}\) corresponds to \(Y_{t,k}=(y_{t},y_{t-1},\ldots ,y_{t-k})^{\prime }\) and \(\mathbf {C}_{xy,k}\) include the cross correlations between both vectors of series. Note that \(\left| \mathbf {R}_{yx,k}\right| =\left| \mathbf {R}_{k}\right| \). This matrix verifies that (1) \(0\le \left| \mathbf {R}_{yx,k}\right| \le 1\), with equality to one holding when \(\mathbf {R}_{yx,k}\) is diagonal and the two series are both serially uncorrelated and not linearly related; (2) \(\left| \mathbf {R}_{yx,k}\right| =0\) when there exists a linear combination \(a^{\prime }Z_{t}=0\) so that the series are exactly linearly related.

We will call total correlation between the two time series, \(x_{t}\) and \(y_{t}\), to

which is a measure of the distance of the two series from a bivariate white noise process, that has \(\textit{TC}=0\). A similar measure applied to a single time series was proposed as a portmanteau test by Peña and Rodríguez (2002), and it was extended as a multivariate portmanteau test by Mahdi and McLeod (2012). A related statistic based on the determinant of the cross correlation matrix of the residuals of a VARMA model has been proposed as an independence test by Robbins and Fisher (2015). However, \(\left| \mathbf {R}_{yx,k}\right| \) is not a good measure of the strength of the linear relationship between the two series, because it depends on both the cross correlations and the autocorrelations of both series. First, a large value of this determinant does not imply that the series have a weak relationship or are not linearly related. As

if \(\mathbf {C}_{xy,k}=0\), then \(|\mathbf {R}_{yx,k}|=\left| \mathbf {R} _{xx,k}\right| \left| \mathbf {R}_{yy,k}\right| \) which can be very small when the series have strong autocorrelations. For instance, \(\left| \mathbf {R}_{xx,1}\right| =1-\rho _{x}^{2}\) will be very small if the first autocorrelation coefficient is close to one. Second, although \(\left| \mathbf {R}_{yx,k}\right| =0\) implies an exact relationship between the two series the opposite is not true: a small value of this determinant does not imply a strong relationship between the series. For instance, if \(\left| \mathbf {R}_{xx,k}\right| \) is very small, because there is strong autocorrelation, then, by (7), \(|\mathbf {R}_{yx,k}|\) will also be small.

These properties of \(\left| \mathbf {R}_{yx,k}\right| \) suggests the following alternative similarity measure

that we named generalized cross correlation measure, \(\textit{GCC}(x_{t},y_{t})\) between two time series. The measure \(\textit{GCC}\) verifies: (1) \(\textit{GCC}(x_{t} ,y_{t})=\textit{GCC}(y_{t},x_{t})\) and the measure is symmetric; (2) \(0\le \textit{GCC}(x_{t},y_{t})\le 1\) for Fischer’s inequality (see Lütkepohl 1996; 3) \(\textit{GCC}(x_{t},y_{t})=1\) if and only if there is a perfect linear dependency among the series and (4) \(\textit{GCC}(x_{t},y_{t})=0\) if and only if all the cross correlation coefficients are zero.

To prove (3), as \(\textit{GCC}(x_{t},y_{t})=1\) implies \(\left| \mathbf {R} _{yx,k}\right| =0\), because the denominator is bounded, and then there is at least a row (column) which is a linear combinations of the others rows (columns). To prove (4), write \(\textit{GCC}(x_{t},y_{t})\) as

By the Hadamard’s inequality, the right hand side determinant is smaller or equal to 1 and equality is achieved if and only the matrix is diagonal, that is, if and only if \(\mathbf {C}_{xy,k}=\mathbf {0}_{xy,k}\).

Notice that for \(k=0\) the \(\textit{GCC}(x_{t},y_{t})\) is just the squared correlation coefficient between the two variables. Also, for any k, when both series are white noise and \(\rho _{xy}(h)\ne 0\) for some \(h\ne 0\) and \(\rho _{xy}(j)=0\) for all \(j\ne h\), then \(\textit{GCC}(x_{t},y_{t})=\rho _{xy}^{2}(h)\). In general, for \(k>0\), we will show in the next section that the \(\textit{GCC}(x_{t},y_{t})\) represents the increase in accuracy in prediction of the bivariate model with respect to the univariate models and it can be interpreted as an average squared correlation coefficient when we explain the residuals of an autoregressive fitting of one variable by the values of the other.

3.2 Interpretation of the generalized cross correlation measure

In order to understand better this measure note that we can write (see Peña and Rodríguez 2002)

where \(\mathbf {R}_{yx,k,-1}\) is the correlation matrix of the vector \(Z_{t,2k+1}=(y_{t-1},\ldots ,y_{t-k}\), \(X_{t,k}^{\prime })^{\prime }\), in which we have dropped the first component in \(Z_{t,2(k+1)}\), and \(R_{1,2k+1}^{2}\)\((y_{t}/y_{t-1}, \ldots , y_{t-k},x_{t},\ldots ,x_{t-k})\) is the square of the multiple correlation coefficient in the linear fit of the first component of \(Z_{t,2(k+1)}\) using as regressors the remaining \(2k+1\) variables. In other words, the notation \((y_{t}/y_{t-1},\ldots ,y_{t-k},x_{t},\ldots \), \(x_{t-k})\) denote the regression \(\widehat{y}_{t}=\sum _{j=1}^{k}c_{j}y_{t-j}+\sum _{j=0}^{k}b_{j}x_{t-j}\). By recursive use of this expression, we have

where \(R_{z}^{2}(i,2(k+1)-i)\) is the multiple correlation coefficient in the regression of the ith component of the vector \(\mathbf {Z}_{t,2(k+1)}\) on the remaining \(2(k+1)-i\) variables, and \(R_{2(k+1),0}^{2}=0\). Note that: (1) for \(1\le i\le k+1\), then \(R_{z}^{2}(i,2(k+1)-i)\) is the multiple correlation coefficient in the regression \((y_{t}/y_{t-1},\ldots ,y_{t-k+i-1} ,x_{t+i-1},\ldots ,\)\(x_{t-k+i-1})\) and (2) for \(k+2\le i\le 2(k+1)\) corresponds to the regression \((x_{t}/x_{t-1},\ldots \), \(x_{t-2(k+1)+i})\). Define \(R_{x}^{2}(i,(k+1)-i)\) and \(R_{y}^{2}(i,(k+1)-i)\) in the same way for \(i=1,\ldots .k+1\) and note that for \(k+2\le i\le 2(k+1)\) then \(R_{z} ^{2}(i,2(k+1)-i)=R_{x}^{2}(j,(k+1)-j)\) for \(j=i-(k+1)\). We have, for each individual series

Thus, \(\textit{GCC}(x_{t},y_{t})\) can be written by (13) and (14) as

and this coefficient is the ratio between geometric mean of the residual variability in the regressions \((y_{t}/y_{t-1},\ldots ,y_{t-k+i-1} ,x_{t+i-1},\ldots ,x_{t+i-k-1})\) and \((y_{t}/y_{t-1}\), \(\ldots , y_{t-k+i-1})\). For a given regression we call ESS the explained sum of squares variation, \(\textit{USS}\) the unexplained sum of squares variation and \(\textit{TSS}=\textit{ESS}+\)\(\textit{USS}\) the total sum of squares. Then

where \(R_{e_{i/X_{t}}}^{2}\) is the squared correlation coefficient when (1) we first fit by Least squares the \(\textit{AR}(k-i+1)\) autoregressive \(y_{t-i+1}=\phi _{1}y_{t-i}+\ldots +\phi _{k-i+1}y_{t-k}\) and compute the residuals, \(e_{t-i+1}^{(i)}=y_{t-i+1}-\widehat{\phi }_{1}y_{t-i}-\ldots \widehat{\phi }_{k-i+1}y_{t-k}\); (2) we regress these residuals \(e_{t}^{(i)}\)on the vector \(X_{t+i-1,k}\). Note that in these last regressions we are using ith lead values of \(x_{t}\), the contemporaneous observations, and \(k-i\) lags of \(x_{t} \). Therefore, we can write

An alternative interpretation of \(\textit{GCC}(x_{t},y_{t})\) can be obtained by using \(|\mathbf {R}_{k}|\) instead of \(|\mathbf {R}_{yx,k}|\) in the definition of (9) and applying the same ideas. It is easy to see that we compare now the ratios of \(2(k+1)\) regressions. Half of them are of the form \((x_{t}/x_{t-1},\ldots ,x_{t-k+i-1},y_{t},\ldots \), \(y_{t+i-k-1})\) versus \((x_{t}/x_{t-1},\ldots ,x_{t-k+i-1})\), where we always use the same number of lags for both variables and we always include in the regression the contemporaneous value of the other variable. The other half are of the form \((y_{t}/y_{t-1},\ldots ,y_{t-k+i-1},x_{t-1},\ldots ,x_{t+i-k-1})\) versus \((y_{t}/y_{t-1},\ldots ,y_{t-k+i-1})\), and are similar to the previous ones but now the contemporaneous value of the regressors variable is not included.

The name generalized cross correlation has been used before in the signal processing literature to describe an algorithm of maximum likelihood estimation of the time delay between two signals (Knapp and Carter 1976). For this reason we have used the name generalized cross correlation measure to differentiate it from the algorithm with the same name but with different objective. The chosen name relates it to the generalized variance (Anderson 1984), based on the determinant of the covariance matrix. This measure is also related to the effective dependence between two random variables (Peña and Rodríguez 2003). The likelihood ratio test to check that the vector of standardized normal variables \(\mathbf {X}_{k}^{\prime }\) and \(\mathbf {Y} _{k}^{\prime }\) are independent assuming multivariate normality is \(T\log (|\widehat{\mathbf {R}}_{xx,k}||\widehat{\mathbf {R}}_{yy,k} |/|\widehat{\mathbf {R}}_{yx,k}|)\) (see, for instance, Anderson 1984), where \(\widehat{\mathbf {R}}_{yx,k}\) is the estimated obtained by using the sample autocorrelation and cross correlation. This is a sufficient statistic for checking for independence under normality. Thus, the generalized cross correlation \(\textit{GCC}\) includes all the relevant information under normality about the linear dependency among the series.

4 The generalized cross correlation as dynamic mutual information

Given two continuous random variables, x and y, the mutual information, or mean information in one about the other is a measure of their joint dependency (see Kullback 1968) given by

where f(z) is the density function of z. For univariate normal random variables it is easy to see that

where \(\rho _{xy}\) is the correlation coefficient between them. This definition can be extended in a direct way to vectors of random variables (X, Y) of dimensions p and q and we will use the form

and if \(Y=y\) is a univariate variable and we assume joint multivariate normality for all we can generalize (18) to

where \(R_{y/X}\) is the multiple correlation coefficient in the regression of y on X.

Suppose now that we take \(X_{t}=(x_{t},\ldots ,x_{t-k})\) and \(Y_{t} =(y_{t},\ldots ,y_{t-k})\), where \(y_{t}\) and \(x_{t}\) are stationary time series. Then, we define the dynamic mutual information between the two series as

where \(I_{D}(X_{t},Y_{t})\) has the usual properties of I(X, Y). Assuming multivariate normality for the joint distribution \(f(X_{t},Y_{t})\), and by (20), we can integrate each term in this equation by

where \(R_{e_{h}/X_{t}}^{2}\) is the squared correlation coefficient in the regression of the variable \(e_{t,h}=y_{t-h}-E(y_{t-h}/y_{t-h-1},\ldots ,y_{t-k})\) on \(X_{t}\). By recursive application of this result we conclude that

Therefore in the Gaussian case the generalized cross correlation is a monotonic transformation of the dynamic mutual information between the two series.

5 Clustering time series with the generalized cross correlations

Given a sample of N stationary time series, in order to apply a cluster procedure based on the GCC we need to: (i) decide about the value of k, the number of lags to be used; (ii) estimate the GCC from the data and build a dissimilarity matrix of the series; (iii) define how to use this matrix to cluster the series. We will analyze these three problems below.

5.1 Selecting the number of lags k

In some problems we have prior information about the relevant lags to be used. For instance, suppose we have daily time series and we expect weakly and yearly seasonality. Then, assuming that the transformation \(n_{t}=\nabla _{7}\nabla _{365}x_{t}\), where \(\nabla _{a}x_{t}=x_{t}-x_{t-a}\), leads to a set of stationary time series, we expect non zero autocorrelations at the first lag and also at lags 7th and 365th, as well as in a window h around each of these lags for the interaction between the regular and seasonal part around the seasonal autocorrelation coefficients. That is, we may include in k a set of lag values as \((1,\ldots ,h)\), \((7-h,\ldots ,7+h)\), \((365-h,\ldots ,365+h)\) for some small value of h.

However, in many applications we do not have prior information about the number of relevant lags k to be used in computing the GCC. Then, we may think in this value as a parameter that indicates the largest lag that contains additional information about both the cross correlations and the autocorrelations of the two series. For a univariate series that follows an \(\textit{ARMA}(p,q)\) model this lag is the number of autocorrelations coefficients needed to obtain consistent estimates of the parameters of the model, that is \(p+q\). Given the values of these autocorrelations the rest are non informative, and we will call the univariate memory of a linear \(\textit{ARMA}(p,q)\) time series to \(k_{u}=p+q\).

For a set of N stationary linear time series that follow univariate ARMA\((p_{i},q_{i})\) models, we define the univariate memory of the system as

In the same way, the bivariate memory of a pair of time series is the largest lag of autocorrelations and cross-correlations coefficients with additional information about the process. It coincides with the largest lag of the cross correlations matrices needed to obtained consistent estimates of the parameters of the bivariate \(\textit{VARMA}(p_{b},q_{b})\) model, \(k_{b}=(p_{b}+q_{b})\). As it has been shown in Sect. 2, the univariate models will be ARMA\((p_{u}\le 2p_{b},q_{u}\le (p_{b}+q_{b}))\), where the exact order depend on the cancellation of roots between the AR and MA parts. Let us call c the maximum number of roots cancelled in the univariate models, and assume that \(p_{b}\ge q_{b}\) so that \(c\le p_{b}\). Then, the univariate models have maximum order \(p_{u}=2p_{b}-c,q_{u}=(p_{b}+q_{b})-c\), and the univariate memory of the system formed by the two series will be \(3p_{b}+q_{b}-2c\ge p_{b}+q_{b}\). Thus, the memory of a bivariate system where \(p_{b}\ge q_{b}\) cannot be larger than the memory of the univariate series.

As in most real time series \(p_{u}\ge q_{u}\), which implies \(p_{b}\ge q_{b} \), we expect that the maximum univariate memory of the time series will be an upper bound of the bivariate memory. We define the bivariate memory of the system as the maximum of the bivariate memory in all possible pairs, and

where \(H=N(N-1)/2\) is the number of pairs in the system.

This analysis suggests a feasible way to obtain an upper bound, \(k^{u}\ge \)k, for the bivariate memory k of the system of N time series. We can fit \(\textit{AR}(p)\) processes to all the univariate time series, select the order by the BIC criterion, and take \(k^{u}=\max _{1\le i\le N}(p_{i}).\)

A lower bound for the bivariate system memory, \(k_{u}\le k,\) can be computed by assuming that the set of time series has been generated by a Dynamic Factor Model, \(\mathbf {Z}_{t}=\mathbf {Pf}_{t}+\mathbf {n}_{t}\), where \(\mathbf {Z}_{t}\) is a multivariate vector of dimension N, the matrix \(\mathbf {P}\) is \(N\times r\) and \(\mathbf {f}_{t}\) is a vector of factors of dimension r and \(\mathbf {n}_{t}\) has some idiosyncratic autocorrelation structure. Note that this is not an important restriction as large sets of time series can always be represented by generalized dynamic factor models (see Hallin and Lippi 2013). Assuming a contemporaneous relationship between the series and the factors, it is well known that they can be estimated by the common eigenvectors of the lag covariance matrices of \(\mathbf {Z}_{t}\), (see, for instance, Peña and Box 1987) and the number of factors can be obtained by the test proposed by Lam and Yao (2012). Thus, we can compute the factors, fit an \(\textit{AR}(p)\) model to each of the factor series selecting the order \(p_{i}\) by the BIC criterion and take \(k_{l}=\max (p_{i})\). Then \(k_{l}\) is expected to be a lower bound for the true value. First, note that as \(z_{it}=\chi _{it} +n_{it}\), where \(\chi _{it}=\sum \nolimits _{j=1}^{r} p_{ij}f_{jt}\) is the common part, if \(\chi _{it}\) follows an ARMA\((a_{i},b_{i})\) model, the observed series \(z_{it}\) are expected to be ARMA of higher order, depending on the model followed by \(n_{it}\). Although some near cancellation of roots are always expected in these cases in many of the series, the maximum order for the observed series \(z_{it}\) is expected to be larger than the maximum order for the common parts, and, therefore, the estimation of the order using only the models for the factors will be a lower bound for the true system order.

If these two estimates lead to the same value of \(k=k^{u}=k_{l}\), we stop. Otherwise, we check values of k in the interval \((k_{l},k^{u})\) assuming that the two series \(\mathbf {z}_{t}=(x_{t},y_{t})^{\prime }\) are related by a vector VAR(p) model, where \(k_{l}\le p\le k^{u}\). We use VAR models to avoid the problem of cancellation of operators in the bivariate systems that makes the estimation slower and more complicated. We fit models with orders between the bounds and select the order by the BIC criterion. Calling \(k_{xy}=p\) the memory order of the pair of time series, the bivariate memory system is

However, this approach requires to fit \(H=N(N-1)/2\) bivariate VAR models for each order p. As a VAR(k) model \(\varvec{\Phi }_{k}\mathbf {(B)z} _{t}\mathbf {=a}_{t}\) with \(\varvec{\Sigma }_{a}=\mathbf {P}{\varvec{\Lambda }} \mathbf {P}^{^{\prime } }\) where \(\mathbf {P}^{^{\prime }}\mathbf {P}=\mathbf {I}\) and \(\varvec{\Lambda }\) is diagonal can be written in the structural form \(\mathbf {P}^{^{\prime } }\varvec{\Phi }_{k}\mathbf {(B)z}_{t}\mathbf {=\epsilon }_{t}\), with \(\varvec{\Sigma }_{\epsilon }={\varvec{\Lambda }},\) a faster approach to estimate this model is by fitting the regressions

for \(k_{l}\le k\le k^{u}\), and choose the value \(k^{*}\) that minimizes the BIC criterion. This procedure provides consistent estimates of the parameters (see section 10.1 of Hamilton 1994) and, therefore, of the value of k. This equation could also be estimated by Lasso (Tibshirani 1996) searching for a sparse solution, but this will increase the computational cost without clear advantages. Note that for each pair two values of k are obtained for the two dependent variables in the regression and the largest is a consistent estimate of the memory order for this pair. If the set of series is very large, we can take samples of pairs of series to estimate the bivariate memory of the system.

5.2 Estimating the generalized cross correlation

The generalized cross correlation for each pair of time series will be estimated by the sample correlation matrices. The almost sure consistency of these matrices holds for second–order stationary and ergodic processes under mild conditions (see, for instance, Theorem 6 in Chapter IV of Hannan 1970). Since \(\widehat{GCC}\) is a continuous function of \(\widehat{\varvec{R}} _{\varvec{\mathcal {X}}(i)}\), \(\widehat{\varvec{R}}_{\varvec{\mathcal {X}}(j)}\) and \(\widehat{\varvec{R}}_{\varvec{\mathcal {X}}(i,j)}\), a Slutsky’s theorem argument implies the consistency of this estimator (see, for instance, Theorems 18.8 and 18.10 of Davidson 1994).

The estimators \(\widehat{GCC}(X_{i},X_{j})\), will be obtained for all pairs (i, j) with \(i\ne j\) to construct the following \(N\times N\) dissimilarity matrix

where the elements of this matrix are defined as \(1-\widehat{GCC}(X_{i} ,X_{j})\) in order to have small dissimilarity when the series are highly dependent and high values of dissimilarity when the series are independent.

5.3 Selecting a cluster procedure

The dissimilarity matrix (25) can be used in any cluster procedure which requires this kind of input. If the number of series is not very large we can apply hierarchical clustering with single linkage (also known as nearest neighbor clustering) since it allows us to find some interesting dependence structures. For instance, the chained structure, that is, a structure where series \(X_{i}\) is related to series \(X_{i+1}\) but it is independent to series \(X_{i+2}\) for \(i\ge 1\). Figure 1a illustrates this situation, that is, the series 1–10 have a chained dependence structure. For simplicity, lets assume that we have three series having chained dependence structure. Once the closest pair of series is selected, then the remaining series will be close to one of the series in the pair but far away from the other series. However, the distance (dissimilarity) calculated by single linkage between the remaining series and the pair is small and this series will be close to the pair of series. Of course, other linkage schemes can be used depending on the dependence structure more likely for the data or we may try different approaches and select the one that provides the most interesting solution. In some applications, we may want to detect only highly cross-correlated groups of time series and, in that case, complete linkage could be the appropriate choice.

When the number of series is large we can apply multidimensional scaling to the \(N\times N\) matrix (25) and obtain a new \(N\times p\) matrix where p is the number of principal coordinates selected as variables, and usually p is much smaller than N. Then we can input these matrix in a cluster algorithm based on this sort of input.

6 Some Monte Carlo experiments on clustering time series

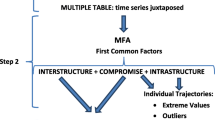

In this section, we develop several Monte Carlo experiments to evaluate the performance of the proposed similarity measures. In Sect. 6.1 we show the results for scenarios where the series are cross-dependent, and in Sect. 6.2 we present the results for scenarios where the series follow factorial models as in Ando and Bai (2016).

In the comparison we have also introduced as a reference three univariate measures based on autocorrelations as the QAC (Quantile autocovariances, Lafuente-Rego and Vilar 2015), the distance between sample autocorrelation coefficients, SAC, and the distance between partial autocorrelation coefficients, PAC. It should be notice that these methods provide a hard partition of the set of time series, but soft (fuzzy) versions are available at D’Urso and Maharaj (2009) and Vilar et al. (2018). They are compared with two bivariate measures \(\textit{TC}(x_{t},y_{t})\) defined in (6), \(\textit{GCC}(x_{t},y_{t})\) given in (9), the Douzal-Chouakria and Nagabhushan (2007) dissimilarity measure (denoted by DCN) and the Ando and Bai (2016) clustering procedure (denoted by ABC). The series are clustered for each measure by a hierarchical clustering algorithm with the single, complete and average linkages.

6.1 Cross-dependent scenarios

In this section, we consider groups of dependent series and the main goal is to cluster them by their cross-dependence. We consider a set of fifteen time series generated by the model \(x_{i,t}=\phi _{i}x_{i,t-1}+\epsilon _{i,t}\) where for \(i=1,2,\ldots ,5\) we have \(\phi _{i}=0.9\), and for \(i=6,7,\ldots ,15\), we have \(\phi _{i}=0.2\). Thus, from the univariate structure we have two clear groups. We introduce the cross dependency through the innovations \(\epsilon _{i,t}\), which are Gaussian white noise variables but with a dependence structure \(\rho (i,j)=E(\epsilon _{i,t},\epsilon _{j,t})\) which depends on the Scenario. Five scenarios are defined by indicating the non null cross-correlations. All the other possible cross correlations are assumed to be zero.

-

1.

Scenario S.1: \(\rho (i,i+1)=.5\) for \(i=1,\ldots ,9.\)

-

2.

Scenario S.2: \(\rho (i,i+1)=.5\) for \(i=1,\ldots ,9\), and \(\rho (i,i+1)=.5\) for \(i=11,\ldots ,14\).

-

3.

Scenario S.3: \(\rho (i,j)=.9\) for \(i=1,\ldots ,9\) and \(j=i+1,\ldots ,10.\)

-

4.

Scenario S.4: \(\rho (i,j)=.9\) for \(i=1,\ldots ,9\) and \(j=i+1,\ldots ,10\), and \(\rho (i,i+1)=0.5\) for \(i=11,\ldots ,14\).

-

5.

Scenario S.5: \(\rho (i,j)=.9\) for \(i=1,\ldots ,9\) and \(j=i+1,\ldots ,10\), and for \(i=11,\ldots ,14\) and \(j=i+1,\ldots ,15\).

Figure 1 illustrates these five scenarios, where the series are represented as points in an ellipse. A line connecting two points means that there exits non null cross correlation between these two series. For instance, in scenario S.1, represented in Fig. 1a, the first ten series are cross correlated whereas the last five are independent. The figure shows that scenarios S.2, S.4 and S.5 have two groups of dependent time series, whereas scenarios S.1 and S.3 have one group of dependent time series and five independent time series. A clustering for dependency should lead then to a two-cluster solution for scenarios S.2, S.4 and S.5 and a six-clusters solution for scenarios S.1 and S.3.

For the comparison of the clustering results, we will consider three measures: (1) the adjusted Rand index, ARI, which is based on counting pairs (see Hubert and Arabie 1985; 2) \(\mathcal {F}\)-measure which is based on sets overlaps (see Larsen and Aone 1999) and (3) the variation of information, VI, which is based on mutual information (see Meilă 2007). The results obtained with the three measures are similar and we will report here those obtained by using the ARI measure. The results obtained with the \(\mathcal {F}\)-measure and VI are available upon request to the authors. These measures assume known the “true clusters”. It should be notice that there is no generally accepted definition of what the “true clusters” are and this depends on the requirements of the situation, see, e.g., Hennig (2015). In our simulation experiments, we consider the groups of series that have some linear dependency as “true clusters”, that is, the series that have non null cross correlation.

In Tables 1 and 2, we report the means of the ARI measure from 1000 replicates for these five scenarios when \(T=100\) and 200, respectively. Seven clustering measures are compared: The first three, QAC, SAC and PAC only use univariate information whereas the last four, DCN, TC, GCC and ABC use the bivariate dependency between the series. The number of lags, k, for the methods SAC, PAC, TC and GCC was selected using the procedure described in Sect. 5. In Tables 3 and 4, we report the frequencies of the selected k as well as the percentage of times where \(k^{u}=k_{l}\). In method QAC, we use the tuning parameters suggested in Lafuente-Rego and Vilar (2015). In method DCN, we use the tuning parameter suggested in Montero and Vilar 2014). For method ABC, we assume that each group can be modelled by an unifactorial model. Of course, these scenarios are strongly challenging for Ando and Bai approach since the number of series is small and the procedure relies on a k-means algorithm. In particular, k-means cannot find six clusters in scenarios S.1 and S.3, and provides solutions with empty clusters.

The results of the three univariate methods are very similar across scenarios and linkage, as expected. Also, they do not improve with the sample size. This is an expectable result since the univariate methods are not designed for cross-dependency clustering.

The selected number of lags, k, was 1 or 2 in around 90% of the replicates. The percentage of times where \(k^{u}=k_{l}\) was in the range 59.80%–73.90%, which produces a low computational cost due the estimation of regressions (24).

The multivariate measures, \(\textit{TC}\) and \(\textit{GCC}\), have similar performance at the scenarios S.3 and S.5 where there one or two strongly related groups of series. But, the measure TC fails to find the clusters at scenarios S.1, S.2 and S.4 where there is a chained dependence structure. Figure 2 shows an example of the dendrograms obtained using measures TC and GCC for the first scenario. The groups or the independent series at scenario S.1 are clearly distinguishable in the dendrogram based on GCC. But, we observe that TC is not able to distinguish between the groups of series 6–10 and the group of series 11–15 at scenario S.1. Similar graphs are obtained for the other scenarios where there is a chained dependence, S.2 and S.4. The measure DCN obtains better results than TC at scenarios S.1, S.2 and S.4 but it is improved by GCC. It should be noticed that DCN takes into account the sign of the cross-correlation, that is, it consider that two positive correlated time series are closer than two negative correlated time series. This feature does not have impact in scenarios S.1–S.5 since all non-null cross-correlation are positive but it will determine the behavior of DCN in the factor model scenarios at Sect. 6.2.

Also, we observe that complete and average linkages fail to find the clusters in scenarios S.1 and S.2. The complete linkage also fails in scenario S.4. This situation is due to the chain structure of some of the clusters in scenarios S.1, S.2 and S.4. For instance, in the scenario S.1, once the first pair of series is considered as a cluster in the hierarchy then the remaining series will be far away from, at least, one series in this pair. Thus, the farthest neighbour will be far away from this pair. The same argument applies to average linkage although we observe that average linkage is better than complete. In the case of partitioning around medoids procedures, PAM-TC and PAM-GCC, we observe a similar behaviour to average linkage. It should be noticed that there is not a clear medoid in a chained dependence structure.

The proposed measure, the generalized cross-correlation, GCC, with single linkage identifies the related series as well as the independent series and as expected, it improves with the sample size. At scenario S.5, the ABC have a reasonable result since the strong dependence can be assimilated to a factor model. However, it is outperformed by \(\textit{GCC}\).

6.2 Factor model scenarios

In this section, we consider three scenarios proposed by Ando and Bai (2016). These scenarios use a dynamic factor model (DFM) with grouped factor structure as data generating process. They consider three groups, each one with three group-specific factors, \(r_{1}=r_{2}=r_{3}=3,\) and the same number of elements \(N_{1}=N_{2}=N_{3}\). In their simulation study, N was 300 and 600, and T was 100 and 200. The DFM can be expressed as

where \(G=\{g_{1},g_{2},g_{3}\}\) denotes the group membership, \(N_{j}\) is the number of elements of group j, \(j=\{1,2,3\}\), \(\varvec{x}_{it}\) is a \(p\times 1\) vector of observable variables and \(\varvec{f}_{g_{i},t}\) is an \(r_{j}\times 1\) vector of unobservable group-specific factors. The \(r_{j}\) dimensional factor of group j is a vector of Gaussian random variables having mean equal to j and unit variances, and the factors loading follows a N(0, j). The vector of observed variables, \(\varvec{x}_{i}\), has dimension \(80\times 1\) but only the first three variables are relevant since the parameter \(\varvec{\beta }\) was set as \(\varvec{\beta }=(1,2,3,0,0,\ldots ,0)^{\prime }\).

In model (26), the \(p\times 1\) vector of coefficients, \(\varvec{\beta }\), is assumed constant across group. Although Ando and Bai (2016) also consider group dependent coefficients the case of constant \(\varvec{\beta }\) is a more challenging case when we are interested in finding groups. In this paper, we concentrate our attention on this case.

The three scenarios assume that time series are obtained by model (26), but differ in the properties of the errors, \(\varepsilon _{i,t}:\)

-

1.

Scenario F.1: The errors terms, \(\varepsilon _{i,t}\), are assumed standard Gaussian.

-

2.

Scenario F.2: The errors are assumed heteroscedastic and have some cross-sectional dependence. In particular, \(\varepsilon _{i,t}=0.9\varepsilon _{i,t}^{1}+\delta _{t}\varepsilon _{i,t}^{2}\), where \(\delta _{t}=1\) if t is odd and zero otherwise, and the vectors \(\varvec{\varepsilon }^{1}=(\varepsilon _{1,t}^{1},\varepsilon _{2,t}^{1} ,\ldots ,\varepsilon _{N,t}^{1})^{\prime }\) and \(\varvec{\varepsilon }^{2} =(\varepsilon _{1,t}^{2},\varepsilon _{2,t}^{2},\ldots ,\varepsilon _{N,t} ^{2})^{\prime }\) follows a multivariate Gaussian distribution with mean \(\varvec{0}\) and covariance matrix \(\varvec{\Sigma }=(\sigma _{i,j})\) with \(\sigma _{i,j}=0.3^{|i-j|}\).

-

3.

Scenario F.3: The errors have serial and cross-sectional dependence. In particular, \(\varepsilon _{i,t}=0.2\varepsilon _{i,t-1}+e_{i,j}\) where the vector \(\varvec{e}_{t}=(e_{1,t},e_{2,t},\ldots ,e_{N,t})^{\prime }\) follows a multivariate Gaussian distribution with mean \(\varvec{0}\) and covariance matrix \(\varvec{\Sigma }=(\sigma _{i,j})\) with \(\sigma _{i,j} =0.3^{|i-j|}\).

Tables 5 and 6 report the means of the ARI measure from 1000 replicates for these three scenarios when \(N=300\) and \(T=100\) and 200 and Tables 7 and 8 for \(N=600\) and \(T=100\) and 200, respectively. Additionally, the Tables 9, 10, 11 and 12 provides information on selected k as well as the percentage of times where \(k^{u}=k_{l}\). In these scenarios, the selected number of lags, k, concentrates in the range 1–3 when \(T = 100\) and in the range 0–2 when \(T = 200\). The percentage of times where \(k^{u}=k_{l}\) was very low, which produces a moderate-hight computational cost due the estimation of regressions (24). It should be notice that we are estimating, at least, 89,700 regressions when \(N = 300\) (359,400 regressions when \(N = 600\)). The k selection step takes around 20.5 s when \(N = 300\) (82.2 s when \(N = 600\)) for each replicate using a personal computer with an Intel(R) Core(TM) i7 CPU 920 2.67GHz processor.

The three univariate measures have a poor performance on dependency clustering, as expected, since all series have the same autocorrelation structures. Also, as expected, their behavior do not improve with the sample size. The DCN measure also have a poor performance on dependency clustering. Here, we should to realize that DCN procedure considers two time series that are negatively correlated away. This is the case, for instance, when the factor loadings, \(\varvec{\lambda }_{g_{i},i}\), in model (26) have different sign than the factor loading, \(\varvec{\lambda }_{g_{i'},i'}\) with \(g_{i} = g_{i'}\), that is, the ith and \(i'\)th series belong to the same group but DCN concludes that they are far away. As in the case of univariate measure, it should be noticed that DCN is not designed for cross-dependency clustering.

The proposed measure GCC (with single and average linkages) has an almost perfect classification when \(T=200\). The GCC-single, GCC-average and PAM-GCC outperform ABC. It is surprising that they work better than ABC, as scenarios F.1–F.3 satisfy the required conditions for the ABC and the method use an iterative sophisticated estimation procedure. This result suggests that if we modify the clustering procedure built in the ABC procedure, that is, instead of clustering by a k-means algorithm applied to the estimated loadings we use the GCC measure the procedure will improve. To confirm this intuition, we perform an additional exercise where we run the full procedure proposed by Ando and Bai (2016) by now the initial clustering solution was obtained by the GCC-single, as proposed in this paper. We denote this procedure by ABC-GCC and the results are given in Tables 5–8. It is clear the improvement obtained by the new measure.

Note that both GCC and ABC improve their performance when T increases, and ABC also improve when N increases since the estimation method used in ABC benefits from a large number of series. GCC is slightly affected by the increase of N but this effect disappears when T increases. Essentially, GCC have comparable results with respect to ABC without using the information about the data generating model. The best results are obtained with ABC-GCC which combines a better starting point provided by the GCC and the use of a model-driven approach.

7 Clustering electricity prices

In this section, we consider a set of time series of hourly day-ahead prices for the New England electric market from January 2004 to December 2016. The data set is available at www.iso-ne.com. The New England region is divided in eight load zones: Connecticut (CT), Maine (ME), New Hampshire (NH), Rhode Island (RI), Vermont (VT), Northeastern Massachusetts and Boston (NEMA), Southeastern Massachusetts (SEMA) and Western/Central Massachusetts (WCMA). Each of the time series corresponds to the price of electricity in one of the eight regions at one of the 24 hours at a given weekday. For example, the first series corresponds to the price of electricity of the hour 1:00, on Thursday (since 01/01/2004 was Thursday), in the first region. Thus, we have 24x7x8=1344 time series of length 678 weeks. Notice that we have 365 (or 366) days each year for 13 years, making a total of 4749 days which are approximately 678 weeks. As an example, in Fig. 3, we represent the series of prices corresponding to hour 12th of Thursdays for the eight load zones and the aggregated load zone. We observed a common pattern in the evolution of these series.

7.1 Analysis of the aggregated zone series

In this section, we will study the 24x7=168 series corresponding to the aggregated zone. The analysis of this small data set will provide us some insights with respect to the larger one with 1344 series corresponding to the eight load zones, that will analyzed next. First, we take a “regular” difference of the series:

where \(P_{d,h}(w)\) denotes the price at weekday d, hour h, and week w with \(d=1,2,\ldots ,7\), \(h=1,2,\ldots ,24\), and \(w=2,3,\ldots ,678\). Notice that a “regular” difference in these series corresponds to a weekly seasonal difference in daily time series. It is well known that electricity price series have a strong weakly seasonality (see García-Martos and Conejo 2013) and this transformation will produce stationary time series. Some authors have argued that the electricity prices series exhibit a long memory behaviour (see, for instance, Koopman et al. 2007) but, to simplify the example, we do not explore this possibility and assume that the series \(X_{d,h}\) are stationary.

A hierarchical clustering procedure (single linkage) using the GCC measure to the 168 series, \(X_{d,h}\), gives the dendrogram presented in Fig. 5. In this case, the selected number of lags was \(k = 9\) (\(k_l = 7\), \(k^u = 9\) and the maximum considered lag was 35). This figure shows clear groups associated by the same weekday. This is to be expected, since the 24 hourly prices of a given day are simultaneously fixed in the daily market, producing a high cross-dependency. On the other hand, the univariate clustering and DCN procedures fails to detect this structure and make groups which are not related to the weekday. The adjusted Rand index for a seven clusters solution using QAC, SAC, PAC, DCN and GCC were 0.000, 0.002, 0.000, 0.225 and 0.819, respectively.

The number of clusters are selected by the Silhouette statistics (Rousseeuw 1987) and the GAP procedure (Tibshirani et al. 2001) and both lead to 13 clusters (see Fig. 4). It should be noted that the Silhouette statistic is more conclusive than the GAP statistic, since the latter increases again after 19 clusters. As mentioned in Tibshirani et al. (2001), this could suggest 13 well-separated clusters and more less-separated ones. These clusters result from the fact that the procedure first divides by day of the week (weekday) and second each day is split into sleeping and awake hours: (i) sleeping hours, 01th–06th (or 01th–07th on weekend), and (ii) awake hours, 07th–24th (08th–24th on weekend). It should be noticed that a series in a cluster is not necessarily independent to the series in another cluster. For instance, the series corresponding to hour 01th is not independent of series of hour 24th but since they are in different clusters, we can infer that the dependence between the series of hour 01th with, at least, one series in the set of hours 02th–06th is higher than the dependence between the series of hours 01th and 24th.

In order to gain insight on the obtained clusters, we will represent the set of time series as points in an ellipsoid graph where the nodes represent individual series and an edge between two nodes indicates strong cross-correlations as measured by a large value of the GCC measure (see Fig. 6). That is, an edge between nodes i and j appears if \(\textit{GCC}(X_{i},X_{j})\) is bigger than a given threshold. The threshold used to obtain Fig. 6 is based on the dendrogram in Fig. 5. In particular, we select the cutoff threshold, 0.162, such that the series are divided into two groups. In Fig. 6, we observed that the “sleeping hour” cluster exhibits an strong dependency, that is, all hours appear to be connected. Also, for the “awake hours” cluster we observed strong dependency among the series of hours from 10th to 22th but the dependencies decrease at the the hours at the frontiers of this cluster, that is the series corresponding to 07th–09th (08th–09th during weekend) and 23th–24th, that is, these series appear to have a chained dependence structure. Moreover, we observe that these dependencies change across the different days. This graph provides complementary information to the hierarchical structure of the dendrogram since it visualizes the dependencies between the series in the group.

Although the simulation experiments suggest that single linkage is preferable in presence of chained dependence structure, we also consider the complete and the average linkage. The adjusted Rand index for a seven clusters solution using single linkage versus complete and average linkage were 0.6495 and 0.8574, respectively. These values reveal a good level of concordance among the obtained clusters solutions.

7.2 Analysis of the eight load zones series

In this section, we will consider the 1344 time series corresponding to the eight load zones, that is, we have 168 series for each load zone. Figure 3 shows some examples of these series. As in the previous section, first, we take a regular difference of the series:

where \(P_{d,h,z}(w)\) denotes the price at weekday d, hour h, and week w at load zone z with \(d=1,2,\ldots ,7\), \(h=1,2,\ldots ,24\), \(w=2,3,\ldots ,678\) and \(z=1,2,\ldots ,8\). Secondly, we apply a hierarchical clustering procedure (single linkage) by using the GCC measure to the 1344 regular differentiated series, \(X_{d,h,z}\), obtaining the dendrogram presented in Fig. 7. We find a similar pattern of clusters than in the aggregated load zone.

In this data set, the Silhouette statistics and the GAP procedure provide different but plausible number of clusters, fourteen and seven, respectively. (see Fig. 8). Again, GAP statistics have additional increases suggesting more than seven well-separated clusters. The seven groups corresponds to the five working days, the weekend and an additional division of the Monday’s series. The fourteen groups in addition to the weekday takes into account two groups of hours in each day: (i) sleeping hours, 01th–06th (or 01th–07th on weekend), and (ii) the awake hours, 07th–24th (08th–24th on weekend). There is a cluster conformed by a single series (Monday, 10am, Maine). Figure 9 shows the series of prices corresponding to hour 10th of Mondays for the eight load zones and it is clear that the different pattern in Maine’s series is due to the presence of a few very large outliers.

Additionally, we have checked that the clusters found by the hierarchical clustering procedure are similar to the ones obtained by a k-means algorithm applied to the main principal coordinates using multidimensional scaling on matrix (25). 280 principal coordinates are selected that account for more than 90% of the variability. The adjusted Rand index for a seven and fourteen clusters solutions of hierarchical clustering (single linkage) and k-means procedures were 0.734 and 0.795, respectively. Figure 10 shows the biplot of the series on the two main principal coordinates (they account for 51% of the variability). Again, the groups associated to the same weekday are clearly identifiable.

As a conclusion, we observed that both clustering procedures using GCC measure reveal some interesting features of this large set of time series.

8 Conclusions

We have presented a novel procedure for clustering time series that takes into account their cross dependency. The procedure is based on pairwise measures involving the determinant of the cross correlation matrices from lag zero to lag k. A Monte Carlo study has shown the good performance of the cluster methods with respect to some alternatives.

The proposed procedure can be used both in exploratory analysis of a large set of time series that will help the modelling and, also, as a tool for improving the forecast of large sets of time series as studied with model based cluster by Wang et al. (2013). It has been shown that the proposed cluster methods can be very useful in building dynamic factor models with cluster structure, as studied by Ando and Bai (2016, 2017).

The results in this article can be extended in several directions. First, as it is well known and it has been shown in the electricity prices, cluster procedures can be sensitive to outliers and the proposed procedure can be improved by: (1) using robust estimation of the cross-correlation coefficients or; (2) applying an outlier cleaning method to the set of time series before the clustering is carried out. Second, for very large data sets other clustering algorithms can be applied, as those based on projections. Third, the method can be extended for some types of non linearity in time series. In the case of conditionally heteroscedastic time series the extension seems to be straightforward by using the cross correlation between squared residuals, but other more general types of nonlinearity could be considered. These problems will be the subject of further research.

References

Aghabozorgi, S., Wah, T.Y.: Clustering of large time series data sets. Intell. Data Anal. 18, 793–817 (2014)

Aghabozorgi, S., Shirkhorshidi, A.S., Wah, T.Y.: Time-series clustering—a decade review. Inf. Syst. 53, 16–38 (2015)

Alonso, A.M., Berrendero, J.R., Hernández, A., Justel, A.: Time series clustering based on forecast densities. Comput. Stat. Data Anal. 51, 762–766 (2006)

Anderson, T.W.: An Introduction to Multivariate Statistical Analysis, 2nd edn. Wiley, New York (1984)

Ando, T., Bai, J.: Panel data models with grouped factor structure under unknown group membership. J. Appl. Econom. 31, 163–191 (2016)

Ando, T., Bai, J.: Clustering huge number of financial time series: a panel data approach with high-dimensional predictor and factor structures. J. Am. Stat. Assoc. 112, 1182–1198 (2017)

Caiado, J., Crato, N., Peña, D.: A periodogram-based metric for time series classification. Comput. Stat. Data Anal. 50, 2668–2684 (2006)

Caiado, J., Maharaj, E.A., D’Urso, P.: Time Series Clustering. Handbook of Cluster Analysis. Chapman and Hall/CRC, Boca Raton (2015)

Corduas, M., Piccolo, D.: Time series clustering and classification by the autoregressive metric. Comput. Stat. Data Anal. 52, 1860–1872 (2008)

Davidson, J.: Stochastic Limit Theory. An Introduction for Econometricians. Oxford University Press, London (1994)

Douzal-Chouakria, A., Nagabhushan, P.N.: Adaptive dis- similarity index for measuring time series proximity. Adv. Data Anal. Classif. 1, 5–21 (2007)

D’Urso, P., Maharaj, E.A.: Autocorrelation-based fuzzy clustering of time series. Fuzzy Sets Syst. 160, 3565–3589 (2009)

D’Urso, P., Maharaj, E.A., Alonso, A.M.: Fuzzy clustering of time series using extremes. Fuzzy Sets Syst. 318, 56–79 (2017)

Fruhwirth-Schnatter, S., Kaufmann, S.: Model-based clustering of multiple time series. J. Bus. Econ. Stat. 26, 78–89 (2008)

García-Martos, C., Conejo, A.J.: Price forecasting techniques in power system. In: Webster, J. (ed.) Wiley Encyclopedia of Electrical and Electronics Engineering. Wiley, New York (2013)

Golay, X., Kollias, S., Stoll, G., Meier, D., Valavanis, A., Boesiger, P.: A new correlation-based fuzzy logic clustering algorithm for FMRI. Magn. Reson. Med. 40, 249–260 (2005)

Granger, C.W., Morris, M.J.: Time series modelling and interpretation. J. R. Stat. Soc. A 139, 246–257 (1976)

Hallin, M., Lippi, M.: Factor models in high-dimensional time series—a time-domain approach. Stoch. Process. Appl. 123, 2678–2695 (2013)

Hamilton, J.D.: Time Series Analysis. Princeton University Press, New Jersey (1994)

Hannan, E.J.: Multiple Time Series. Wiley, New York (1970)

Hennig, C.: Clustering strategy and method selection. In: Hennig, C., Meila, M., Murtagh, F., Rocci, R. (eds.) Handbook of Cluster Analysis, pp. 703–730. Chapman and Hall/CRC, Boca Raton (2015)

Hubert, L., Arabie, P.: Comparing partitions. J. Classif. 2, 193–218 (1985)

Kakizawa, Y., Shumway, R.H., Taniguchi, M.: Discrimination and clustering for multivariate time series. J. Am. Stat. Assoc. 93, 328–340 (1998)

Knapp, C., Carter, G.: The generalized correlation method for estimation of time delay. IEEE Trans. Acoust. Speech 24, 320–327 (1976)

Koopman, S.J., Ooms, M., Carnero, M.A.: Periodic seasonal Reg-ARFIMA-GARCH models for daily electricity spot prices. J. Am. Stat. Assoc. 102, 16–27 (2007)

Kullback, S.: Information Theory and Statistics. Dover, New York (1968)

Lafuente-Rego, B., Vilar, J.A.: Clustering of time series using quantile autocovariances. Adv. Data Anal. Classif. 10, 391–415 (2015)

Lam, C., Yao, Q.: Factor modeling for high-dimensional time series: inference for the number of factors. Ann. Stat. 40, 694–726 (2012)

Larsen, B., Aone, C.: Fast and effective text mining using linear time document clustering. In: Proceedings of the Conference on Knowledge Discovery and Data Mining, pp. 16–22 (1999)

Liao, T.W.: Clustering of time series data—a survey. Pattern Recogn. 38, 1857–1874 (2005)

Lütkepohl, H.: Handbook of Matrices. Wiley, New York (1996)

Maharaj, E.A.: Comparison of non-stationary time series in the frequency domain. Comput. Stat. Data Anal 40, 131–141 (2002)

Maharaj, E.A., D’Urso, P.: Fuzzy clustering of time series in the frequency domain. Inf. Sci. 181, 1187–1211 (2011)

Mahdi, E., McLeod, I.A.: Improved multivariate portmanteau test. J. Time Ser. Anal. 33, 211–222 (2012)

Meilă, M.: Comparing clusterings—an information based distance. J. Multivar. Anal. 98, 873–895 (2007)

Montero, P., Vilar, J.: TSclust: an R package for time series clustering. J. Stat. Softw. 62, 1–43 (2014)

Pamminger, C., Fruhwirth-Schnatter, S.: Model-based clustering of categorical time series. Bayesian Anal. 2, 345–368 (2010)

Peña, D., Box, G.E.P.: Identifying a simplifying structure in time series. J. Am. Stat. Assoc. 82, 836–843 (1987)

Peña, D., Rodríguez, J.: A powerful portmanteau test of lack of test for time series. J. Am. Stat. Assoc. 97, 601–610 (2002)

Peña, D., Rodríguez, J.: Descriptive measures of multivariate scatter and linear dependence. J. Multivar. Anal. 85, 361–374 (2003)

Pértega, S., Vilar, J.A.: Comparing several parametric and nonparametric approaches to time series clustering: a simulation study. J. Classif. 27, 333–362 (2010)

Piccolo, D.: A distance measure for classifying ARMA models. J. Time Ser. Anal. 2, 153–163 (1990)

Robbins, M.W., Fisher, T.J.: Cross-correlation matrices for tests of independence and causality between two multivariate time series. J. Bus. Econ. Stat. 33, 459–473 (2015)

Rousseeuw, P.J.: Silhouettes: a graphical aid to the interpretation and validation of cluster analysis. Comput. Appl. Math. 20, 53–65 (1987)

Sadahiro, Y., Kobayashi, T.: Exploratory analysis of time series data: detection of partial similarities, clustering, and visualization. Comput. Environ. Urban 45, 24–33 (2014)

Scotto, M.G., Barbosa, S.M., Alonso, A.M.: Extreme value and cluster analysis of European daily temperature series. J. Appl. Stat. 38, 2793–2804 (2011)

Tibshirani, R.: Regression shrinkage and selection via the lasso. J. R. Stat. Soc. B 58, 267–288 (1996)

Tibshirani, R., Walther, G., Hastie, T.: Estimating the number of clusters in a data set via the gap statistic. J. R. Stat. Soc. B 63, 411–423 (2001)

Vilar-Fernández, J.A., Alonso, A.M., Vilar-Fernández, J.M.: Nonlinear time series clustering based on nonparametric forecast densities. Comput. Stat. Data Anal. 54, 2850–2865 (2010)

Vilar, J.A., Lafuente-Rego, B., D’Urso, P.: Quantile autocovariances: a powerful tool for hard and soft partitional clustering of time series. Fuzzy Sets Syst. 340, 38–72 (2018)

Wang, Y., Tsay, R.S., Ledolter, J., Shrestha, K.M.: Forecasting simultaneously high-dimensional time series: a robust model-based clustering approach. J. Forecast. 32, 673–684 (2013)

Xiong, Y., Yeung, D.: Time series clustering with ARMA mixtures. Pattern Recogn. 37, 1675–1689 (2004)

Zhang, X., Liu, J., Du, Y., Lv, T.: A novel clustering method on time series data. Expert Syst. Appl. 38, 11891–11900 (2011)

Zhang, T.: Clustering high-dimensional time series based on parallelism. J. Am. Stat. Assoc. 108, 577–588 (2013)

Acknowledgements

This research has been supported by Consejo Superior de Investigaciones Científicas (Grant No. ECO2015-66593-P) of MINECO/FEDER/UE. We thanks to professor Tomohiro Ando for making available their code and kindly answer some questions regarding the implementation.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Alonso, A.M., Peña, D. Clustering time series by linear dependency. Stat Comput 29, 655–676 (2019). https://doi.org/10.1007/s11222-018-9830-6

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11222-018-9830-6