Abstract

This study analyses scientific fraud in the European Union by examining retractions due to Falsification, Fabrication and Plagiarism (FFP) where at least one researcher is affiliated to an EU member country. The information on publications retracted due to FFP is based on the records in the Retraction Watch Database up to 31/05/2020, which were also checked in Web of Science. A total of 662 retractions due to FFP were obtained, corresponding to 24.46% of all retractions in the EU. Germany is first in the European ranking for FFP, the Netherlands in the ranking for FF, and Italy in that for Plagiarism. 60.83% of the articles retracted due to FFP are from the Life Science and Biomedicine field. More than 70% of the articles have been published in journals that form part of the JCR and have an impact factor. There is also extensive citation of the retracted documents. Misconduct due to FFP causes a significant loss of resources and reputation, with severe effects for the authors, publishers, and institutions. It is recommended that the EU continue to apply policies and guidelines to harmonise criteria in relation to FFP, as well as to prevent and avoid scientific misconduct.


Introduction

Fraudulent research practices, mainly those caused by ethical misconduct or an intentional distortion of the scientific reality, cause great damage to people, institutions and the development of science itself. These harmful behaviours are a violation of the rules of communication, transparency and integrity of scientific research.

Wager and Kleinert (2012) consider misconduct in its broadest sense to include any practice that may affect the reliability of the research record in terms of findings, conclusions or attribution. For Franzen et al. (2007) “fraud is defined by the intention to deceive as compared to error or carelessness, and is commonly classified into three categories: fabrication, falsification or plagiarism (FFP)”. This is also addressed by the European Code of Conduct for Research Integrity (ALLEA, 2018), The Office of Research Integrity (ORI) (2020) and the Organization for Economic Co-operation and Development (OECD)-Global Science Forum (OECD, Global Science Forum, 2008), which define research misconduct within the parameters of FFP and interpret these concepts as:

- fabrication: inventing results and recording them as if they were real;
- falsification: manipulating materials, equipment or research processes, or changing, omitting, or deleting data or results without justification;
- plagiarism: using other people’s work or ideas without giving proper credit to the original source, thus violating the original author's rights over their intellectual results.

The guidelines of the International Committee of Medical Journal Editors (ICMJE) (2019) also consider scientific misconduct to comprise the fabrication and falsification of data, including image manipulation, and plagiarism. These three aspects of the violation of scientific ethics, which make up FFP, are seen by many authors as the most serious categories associated with scientific fraud (Barde et al., 2020; Bhutta & Crane, 2014; Dubois et al., 2013; Hiney, 2015; Resnik, 2019), as they damage the credibility of the research process and are adverse practices that should be considered unacceptable (ALLEA, 2018).

Many articles and editorials have addressed the issue of misconduct in scientific publications, noting an increase in the number of retractions over the years. This is mainly a result of scientific fraud, especially in the field of Biomedicine and its specialities (Campos-Varela & Ruano-Ravina, 2019; Fang et al., 2012; Budd et al., 2011; Chambers et al., 2019; Rapani et al., 2020; Mena et al., 2019; Samp et al., 2012).

Studies based on surveys also show that the number of publications involving misconduct and fraud has reached disturbing levels (Godecharle et al., 2018; Martinson et al., 2005; Pupovac & Fanelli, 2015; Tavare, 2012). In a widely cited meta-analysis of surveys on misconduct in research, it was revealed that almost 2% of the scientists interviewed admitted to having fabricated, falsified or modified research data at least once, and over 14% of the scientists said that they were aware of colleagues who had falsified data (Fanelli, 2009).

However, it is important to clarify that scientific misconduct excludes honest scientific errors, interpretations or disagreements (which are a natural part of the research process) and covers only deliberate acts intended to mislead others (Dooley & Kerch, 2000; Steen, 2011). As indicated by Resnik and Stewart (2012), it is necessary to differentiate between misconduct or fraudulent actions and honest errors, given that the former are serious accusations that have potentially dire consequences for the careers of many researchers and may even lead to the loss of funds or employment and legal action. For this reason, such accusations should be reserved for very severe and dangerous cases or ethical violations.

According to an international study, 22 countries out of the top 40 that finance research and development in the world (55%) have a national policy that includes misconduct (Resnik et al., 2015). This shows that misconduct, and specifically FFP, is of major international concern. As stated by Dal-Ré et al. (2020), FFP has been used to define research fraud for more than 25 years, although other types of misconduct have also been added to ethical codes and national laws.

The main reasons for misconduct in research relate to organisational, structural, sociological and psychological variables. The most frequent are:

- Factors linked to the pressure to publish (“publish or perish”): these occur in highly competitive academic environments, leading to professional pressure and scientific bias, together with other environmental factors associated with the work environment and exposure to stressful situations (Barde et al., 2020; Davis et al., 2007; George, 2016; Gupta, 2013; Mumford et al., 2007; Parlangeli et al., 2020; Roland, 2007; Zhang & Grieneisen, 2013).
- Factors associated with personality traits, caused by excessive personal ambition, a desire for fame and promotion, fear of failure, megalomania, narcissistic tendencies, cynicism, lack of confidence and self-esteem or simply acting immorally due to having a somewhat deceptive nature or being a “bad apple” (Huistra & Paul, 2021; Rahman & Ankier, 2020; George, 2016; Tijdink et al., 2016; Dubois et al., 2013; Gupta, 2013; Sovacool, 2008; Davis et al., 2007; Mumford et al., 2006).
- Factors related to the strong competition for funding, where there are scarce resources and many interested parties (Anderson et al., 2007).
- Factors linked to poor research practices, where variables related to inadequate supervision and a lack of experience may have an influence (Dubois et al., 2013; Kalichman, 2020; Wright et al., 2008).
- Factors derived from an absence or lack of harmonisation in policies, guidelines and codes of integrity or conduct that affect countries, institutions or publishers, which work to prevent or monitor misconduct and also as a deterrent for possible offenders or protection for complainants (Fanelli et al., 2015; Resnik et al., 2015; Bosch, 2014; Godecharle et al., 2014; Kornfeld, 2012; Bosch et al., 2012).
- Finally, factors resulting from cultural causes, where it is considered that sociocultural background may influence misconduct (Davis, 2003; Li & Cornelis, 2021).

Retractions have become the main publication format by which scientific misconduct is made visible and they are providing a new empirical basis for misconduct research (Hesselmann et al., 2017). Their objective is not to punish the authors who behave improperly, but to correct the scientific literature and guarantee its integrity. It is therefore a correction mechanism and an alert for readers. These alerts usually consist of brief warnings that frequently convey little, and in many cases imprecise, information about the specific nature of the possible changes made, the causes and the implications (Fanelli et al., 2018). Retractions must also be handled with great care; withdrawing a published article may not be easy and is one of the most serious sanctions for researchers, which may cause irreparable damage to their reputation and academic career (Wager & Williams, 2011).

Some research projects have analysed retractions on an international scale, providing information on the incidence of misconduct by country (Amos, 2014; Fanelli et al., 2015; Grieneisen & Zhang, 2012; He, 2013; Ribeiro & Vasconcelos, 2018; Steen, 2011; Zhang & Grieneisen, 2013; Zhang et al., 2020), but none of these investigate the case of the European Union in a disaggregated way, studying the 27 countries and their relationship with FFP.

This study analyses scientific fraud due to FFP in the European Union (EU) by examining the retractions in which at least one researcher, author or co-author appears who is affiliated to any of the 27 EU member countries. To interpret this phenomenon, the following have been established as specific objectives:

1. To analyse the position for retractions due to FFP, FF, and Plagiarism, and to establish a ranking among these countries;
2. To analyse the evolution over time of documents retracted due to FFP;
3. To identify the main characteristics related to the authors and collaboration;
4. To determine the main areas of research and categories to which the retracted publications belong;
5. To study the number of articles involving FFP that had been funded;
6. To identify the citation of articles retracted due to FFP; and
7. To examine the type of document, publication and impact factor.

Methodology

This study uses a quantitative methodology. The study was carried out from February to May 2020, using The Retraction Watch Database (RWD), version 1.0.6.0. RWD is the most comprehensive specialised database on retractions. As of 31/05/2020, it included a total of 21,855 retractions. All retractions selected were also examined in the Web of Science (WOS) Core Collection database.

Search grouped by EU country

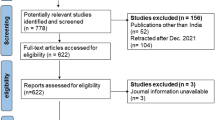

In the first stage, all EU member countries were identified and selected and the FFP-type descriptors relating to the reasons for the retraction were used. This was followed by filtering for the nature of the “Retraction” notification and the results were limited to publication date of the original article up to 01/05/2020, and publication date of the retraction up to 31/05/2020 (Fig. 1). The number of records retrieved was 665.

As RWD limits the display of records in a single search to a maximum of 600, two alternative searches were carried out. Both searches contained the same parameters identified for the general search, but the reasons for retraction were separated; first to search for Falsification/Fabrication (FF), and second for Plagiarism. The search relating to FF retrieved a total of 257 records and the search for plagiarism a total of 416 records. The two searches provided a combined total of 673 records for FFP. From these records, a total of eight were duplicated, as they included descriptors related to FF and Plagiarism (Falsification/Fabrication AND Plagiarism) as the reason for retraction. These records were discarded, leaving an initial set of 665 records. During the review, three records were discarded after observing an error in the classification of the authors' country of affiliation. The total number of records was 662.
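The reconciliation of the two partial searches described above can be sketched in Python. The record identifiers are synthetic placeholders, not RWD identifiers; only the counts (257, 416, eight shared records, three misclassified records) come from the text.

```python
# Synthetic record IDs (assumption): only the set sizes come from the study.
ff_records = set(range(0, 257))            # 257 records retrieved for FF
plagiarism_records = set(range(249, 665))  # 416 records, 8 of them shared with FF

raw_total = len(ff_records) + len(plagiarism_records)  # simple sum of both searches
duplicates = ff_records & plagiarism_records           # records tagged FF AND Plagiarism
combined = ff_records | plagiarism_records             # each record counted once

# Hypothetical IDs standing in for the three records whose country
# of affiliation was found to be wrongly classified.
misclassified = {600, 601, 602}
final_records = combined - misclassified

print(raw_total, len(duplicates), len(combined), len(final_records))
```

Taking the set union rather than subtracting a duplicate count by hand keeps each record counted once even if the overlap between the two searches changes.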

Individual search by EU country

For the individual analysis by country, the RWD was searched 27 times using the same methodology and the same fields, but restricted to each EU member country (Fig. 2).

The total for the EU countries was 738 records, with 297 records corresponding to FF and 441 to Plagiarism. Eight duplicate records and three erroneous records were discarded. Also excluded from the general calculation, but not the individual one, were another 65 records that were duplicated due to collaboration between authors from different EU member countries. The total number of records was 662 (Fig. 3).
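The gap between the per-country total and the general total arises because a record with authors from several EU countries is counted once for each country. A minimal sketch, using hypothetical records that are not taken from the RWD, followed by the bookkeeping with the figures reported above:

```python
from collections import Counter

# Hypothetical records (assumption): each maps a record ID to the EU
# countries of its authors. These are not rows from the RWD.
records = {
    "r1": {"Germany"},
    "r2": {"Germany", "Italy"},          # cross-country collaboration
    "r3": {"Netherlands"},
    "r4": {"Italy", "France", "Spain"},  # cross-country collaboration
}

# Individual calculation: one count per (record, country) pair.
per_country = Counter(c for countries in records.values() for c in countries)
individual_total = sum(per_country.values())

# General calculation: each record counted once.
general_total = len(records)
extra_counts = individual_total - general_total

print(individual_total, general_total, extra_counts)

# The same bookkeeping with the figures reported in the study.
assert 297 + 441 == 738      # FF + Plagiarism across the 27 searches
assert 738 - 8 - 3 == 727    # minus duplicates and erroneous records
assert 727 - 65 == 662       # minus repeats caused by EU collaborations
```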

Localisation, registration, and verification of data in WOS

A total of 570 retractions were located from the DOI of the publication and another 83 through searches in PubMed and Google, or directly through the publisher's website. Only nine retractions could not be located; for these, the information from the RWD registry was taken as reference. Next, the following characteristics related to them were recorded and classified in an Excel file: affiliation country of the author(s), title, reason(s) for retraction (according to FFP descriptors), title of the publication, publisher, number of authors, publication date, retraction date, article type, and DOI.

The 662 retractions selected were verified in the WOS database, retrieving 478 records. From these records, the following additional information was obtained: number of times cited, funding details, research areas and general category or area of knowledge into which the research is classified. The number of citations was also reviewed to check those documents that had been cited after their retraction. The number of funded articles and the number of funding organisations were checked, noting the data related to: funding organisations, country, and number or code of the grant. In terms of the data on the research areas and general category, these were linked to the WOS descriptors. The 184 records from RWD that do not appear in WOS were completed and standardised with the WOS categories. The information on the impact factor of the journals corresponds to the data for 2019 that appear in the InCites Journal Citation Reports database of Clarivate Analytics. All these additional data were also added to the Excel file.

Descriptive statistics are used to present the data as counts and percentages.

Results

Retractions due to FFP in the EU

As of the date of consultation, the RWD database contains a total of 21,855 retractions, and 2,706 are from EU member countries (12.38%). Of the total retractions, 3,755 are retractions for reasons associated with FFP without duplicates, and 662 correspond to retractions due to FFP involving EU member countries (17.63%). Of the 2,706 retractions involving the EU, the 662 retractions due to FFP correspond to 24.46% of the total.
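The three percentages above follow directly from the reported counts. A short Python check, in which the helper name `share` is an illustrative choice and not part of the study's tooling:

```python
def share(part: int, whole: int) -> float:
    """Return part/whole as a percentage rounded to two decimals."""
    return round(100 * part / whole, 2)

total_rwd = 21_855   # all retractions in RWD at the date of consultation
eu_total = 2_706     # retractions involving EU member countries
ffp_total = 3_755    # FFP retractions worldwide, without duplicates
eu_ffp = 662         # FFP retractions involving EU member countries

print(share(eu_total, total_rwd))  # EU share of all retractions
print(share(eu_ffp, ffp_total))    # EU share of all FFP retractions
print(share(eu_ffp, eu_total))     # FFP share of EU retractions
```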

In terms of the individual data by country, three rankings have been established according to the reasons for the retraction; due to FFP, due to FF and due to plagiarism.

Ranking due to FFP

Considering the individual calculation of retractions due to FFP involving EU member countries, the result is 727 valid records once the eight duplicate and three erroneous records have been discarded. Table 1 shows the cumulative data due to FFP, with Germany being the country with the highest number of retractions due to FFP, followed by Italy, the Netherlands, France, and Spain. Figure 4 shows the FFP situation in the EU as a map.

Ranking due to FF

In a general search for FF, the total number of retractions was 1,439, of which 297 (20.64%) correspond to EU countries. This ranking shows the Netherlands in first position, followed by Germany, France, Italy and Sweden (Table 2). It also shows the data corresponding to the different reasons for retraction due to FF: due to data (74.63%), image (17.99%) or results (7.37%). It must be taken into account that we can find several descriptors related to different elements of FF in a single record.

Ranking due to plagiarism

Regarding plagiarism, the total number of retractions in RWD is 2,364, of which 438 (18.53%) correspond to the EU, discarding the three localised errors in classification by country. Italy is shown to be in first position, followed by France, Romania, Spain and Germany (Table 3). The different elements leading to retraction due to plagiarism are: article (41.09%), text (26.73%), euphemisms for plagiarism (22.77%), data (5.12%) and image (4.29%). As in the case of FF, it must be considered that it is possible to find several descriptors related to different elements of plagiarism in a single record.

Evolution over time of the retractions

Figure 5 shows two complete decades of retractions (1999–2019), with three variables relating to cumulative data due to FFP, FF and Plagiarism. Retracted documents have been studied from 1977 to May 2020. The number of retractions due to FFP grew considerably between 2007 and 2012. A downward trend can be observed since 2012. 2012 should be noted as the year with the maximum cumulative value for FFP.

In the case of FF, the trend is similar to that for FFP, with an increase between 2007 and 2012 and a decrease from that year to 2019. The maximum value is also obtained in 2012, with more than double the retractions for this reason than in any other year. With regard to Plagiarism, 2006 sees the start of an upward trend that lasts until practically 2016, when it reached its maximum value. Plagiarism has always exceeded FF, except in the years 2003, 2012 and 2013.

The average time between the publication of an article and its retraction due to FFP is 44.26 months. In the case of FF this time period increases to approximately 66 months, and in the case of Plagiarism it falls to 35 months. The longest period of time elapsed between the publication date of an article (1 October 1985) and its retraction (26 February 2018) was 11,836 days (more than 32 years) and this was due to a case of FF. There are 262 documents (39.58%) that were retracted one year or less after publication, and 400 (60.42%) that were retracted more than one year after publication.
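The longest interval can be checked with Python's standard `datetime` module, reading the publication date as 1 October 1985 and the retraction date as 26 February 2018. This month/day/year reading is an assumption, adopted because it is the only one under which the retraction date is valid.

```python
from datetime import date

# Month/day/year reading of the two dates (assumption, see lead-in).
published = date(1985, 10, 1)
retracted = date(2018, 2, 26)

elapsed = retracted - published           # a datetime.timedelta
print(elapsed.days)                       # 11836
print(round(elapsed.days / 365.25, 1))    # approximate length in years
```

The result matches the 11,836 days reported, which supports that reading of the dates.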

Characteristics related to the authors and collaboration

Publications retracted and signed by a single author amount to 124 (18.73%), for two authors this is 135 (20.39%), for three it is a total of 83 (12.54%) and for more than three authors we have 320 (48.34%). The 662 records are signed by a total of 2,933 authors, between authors affiliated to the EU and authors from other collaborating countries. Of these authors, a total of 303 (10.33%) are repeat offenders who have more than one retraction. Specifically, there are 206 (67.99%) with 2 retractions, 45 (14.85%) with 3, 14 (4.62%) with 4, and 38 (12.54%) with more than 5. In Table 4 we can see the ranking of repeat offenders (authors and co-authors) with multiple retractions due to FFP. The table also includes the total number of retractions. Many of these retractions are currently under investigation and could become FFP-classified retractions in the future.

There are co-authorship relationships between: Stapel and Ruys; Jendryczko and Drozdz; Herrmann, Brach, Mertelsmann and Gruss; Memon, Hicks and Larsen; Bolt, Hempelmann and Dapper; Bezouska and Kren.

A total of 175 records (26.44%) include collaboration between authors from the EU and other countries, 91 (52%) of which are records relating to FF and 84 (48%) to Plagiarism. The country with the highest number of collaborations involving FFP is the United States with 80 (45.71%) of the 175 records, followed by China with 18 (10.29%) and the United Kingdom with 11 (6.29%). With regard to collaborations involving FF, the United States leads the results again with a total of 65 records (71.43%) out of the 91, and it is followed by the United Kingdom and Switzerland with eight each (8.79%). In the case of Plagiarism, China leads the way with 16 records (19.05%) out of the total of 84, followed by the United States with 15 (17.86%) and Pakistan with 7 (8.33%).

Retractions by research area and category

Regarding research areas, 60.83% of retracted articles are part of the Life Science and Biomedicine (LSB) Area, 15.43% are from Social Sciences (SS) and 13.65% from Technology (T). To a lesser extent, 5.79% are found in articles on Physical Sciences (PS) and 4.30% in those on Arts and Humanities (AH) (Fig. 6).

The records have been classified into 107 categories that form part of the above five research areas. The first ten categories in order of importance are: Biochemistry & Molecular Biology (6%, LSB), Psychology (5.64%, SS), Cell Biology (5.27%, LSB), Cardiovascular System & Cardiology (3.27%, LSB), Surgery (3.27%, LSB), Oncology (3%, LSB), Neurosciences & Neurology (2.91%, LSB), Science & Technology - Other Topics (2.91%, T), Engineering (2.55%, T) and General & Internal Medicine (2.45%, LSB). The leading category in Physical Sciences is Chemistry, and this is in position 13 of this ranking (2.09%, PS). In Arts and Humanities, Literature comes first, and this is in position 34 (1%, AH).

Funded documents

Of the 662 records, 478 were in WOS, and 124 of these were funded (25.94%). If the country of origin of the funding body is taken into account, the main funder is the United States with 27 records (21.77%), followed by Italy with 12 records (9.68%), Germany, Spain, and China with 8 (6.45% each), the European Union itself with 7 (5.65%), Poland and Sweden with 5 (4.03% each), France, Czechia, Ireland, Portugal and the United Kingdom with 4 (3.23% each), Japan with 3 (2.42%), and the others with 21 records (16.94%).

In terms of funding bodies, a total of 316 organisations have provided funding (governmental bodies, universities, foundations, laboratories, associations, etc.). It is worth highlighting the following: US Department of Health & Human Services, National Institutes of Health (NIH) whose funding is associated with 19 records (15.32%), the European Union through funds and projects with 7 records (5.65%), the Italian Government, mainly through the Ministry of Education, Universities and Research (MIUR), with 7 records (5.65%), the German Research Foundation with 6 records (4.84%), the Spanish Government, mainly through the Ministry of Economic and Business Affairs (MINECO), with 6 records (4.84%), the Portuguese Foundation for Science and Technology with 5 records (4.03%), and The Czech Science Foundation (also known as the Grant Agency of the Czech Republic) with 5 records (4.03%). If we look at the breakdown of funding, we have FF with 80 records (64.52%) which is almost double the number for Plagiarism, which has 44 (35.48%).

Citation of the retracted articles

The number of records that have been cited is 418 (63.14%). Of these, 210 have been retracted due to FF and 208 due to Plagiarism. Of the 418 records cited, 350 (83.73%) were cited after retraction. A total of 172 of these correspond to FF (49.14%), and 178 to Plagiarism (50.86%). The records with the highest number of citations after their retraction were those retracted due to Plagiarism, with 1 particular record, published in 2005, having more than 150 citations. There are 3 records with over 100 citations, published in 2002, 2012, and 2015. There are 16 records (12 due to Plagiarism and 4 due to FF) with over 50 citations, and the remaining 330 have fewer than 50 citations.

Type of document, publication, and impact factor

In terms of the type of document: 460 (69.49%) are research articles, 109 (16.47%) are review articles, 42 (6.34%) are clinical studies, 14 (2.11%) are book chapters/reference works, 14 (2.11%) are case reports, 11 (1.66%) are conference papers, 4 (0.60%) are editorials, 2 (0.30%) are meta-analyses and the remaining 6 (0.91%) correspond to other unclassified types.

The 662 records are related to a total of 448 publications, 337 (75.22%) of which are included in the Journal Citation Reports (JCR) and have an impact factor. Table 5 shows the titles of the journals with the highest number of articles retracted due to FFP and their impact factor. The number of records included in journals in the JCR is 483 (72.96%), compared to 179 (27.04%) that are not.

Discussion

Retractions due to FFP in the EU account for only 662 of the 21,855 retractions recorded in RWD. Many authors agree that retractions are a very limited phenomenon, when compared to other types of publication (Amos, 2014; Wager & Williams, 2011). According to Fanelli (2014), “retractions represent less than 0.02% of annual publications”. Brainard and You (2018) add that “about four of every 10,000 papers are retracted”. They therefore seem to be a rarity, but their severity and significant impact mean that they cannot be ignored.

Fang et al. (2012) point out that retractions show distinctive time and geographic patterns that may reveal underlying causes. There is some similarity between these data and those presented in our study up to 2012. The annual average number of retractions due to FFP in the decade from 2009 to 2019 was approximately 289 and in the EU, it was 49, with 19 due to FF and 30 due to Plagiarism. Since 2006, the number of retractions due to FFP has grown, reaching more than ten times its 2006 level by 2012. The most productive countries in the EU also present the highest number of retractions due to FFP (Germany, Italy, the Netherlands, France).

Many of these European retractions seem to follow a common pattern associated with the identification of repeat offenders. So, in each country there are one or more people adopting immoral behaviour (“every flock has its black sheep”) who at times cause the statistics to sky-rocket. When one of these cases is brought to light, it seems to slowly have a knock-on effect on all of the research by that author, and consequently the number of retractions from that country increases. Several studies have identified that the increase in the retraction rate is due to the impact of repeat offenders (Shuai et al., 2017; Steen et al., 2013; Zhang & Grieneisen, 2013), authors who produce multiple retractions and are known as “prolific retractors” (Fanelli et al., 2015).

This is the case, for example, with Diederik Stapel with 53 retractions due to FF, occupying fifth position on The Retraction Watch Leaderboard (2020), with clear suspicions of fraud due to data fabrication. This author, with a promising career and dean of the Faculty where he worked, published in the prestigious journal Science. However, his deception was exposed, leading to extensive media coverage, for example with reports in The New York Times (Bhattacharjee, 2013). His actions led to his dismissal and the scandal had even greater consequences, seriously damaging the image of research in his field of knowledge, in Social Psychology and in Social Sciences in general (Stroebe et al., 2012). Another Dutch case is that of the anthropologist Mart Bax, who is suspected of having invented not only data, but also complete articles. Holland’s leading position in the European ranking due to FF seems to be mainly determined by the 61 retractions (72.62%) associated with these authors.

It is also worth mentioning the case of the German Joachim Boldt with over 103 retractions in the field of intensive medicine and anaesthesia, although only 10 are currently classified as FF. This author holds a disgraceful European record for retractions and the second position worldwide (The Retraction Watch Leaderboard, 2020), with works published mainly in the 1990s. These started to be retracted in December 2010 (Hemmings & Shafer, 2020) and they are still being investigated and retracted (more than 18 in 2020). Many of his studies had supported the alleged efficacy of intravenous solutions containing hydroxyethyl starch or hetastarch to stabilise blood pressure in patients after surgery or trauma, misrepresenting the risks and putting patients at risk (Marcus, 2018). His actions, in addition to having serious implications for clinical practice, led to his dismissal (Wise, 2013) and the German authorities also considered filing criminal charges (Marcus, 2018).

We must face the fact that “a fraudulent article looks much the same as a nonfraudulent one” (Trikalinos et al., 2008). FF frauds are hidden among real methodologies, with apparently truthful data and results, and disguised as honest. They are costly to replicate, in both time and money, and have undergone an assessment process and peer review, generally by prestigious journals. They are frauds where the data is frequently modified to suit the objectives, hypotheses or results. It is therefore very difficult to detect them. Kornfeld and Titus (2016) warn that 10 to 12 people are convicted of fraud by the ORI each year, which are deceptively low figures.

Fraud due to FF can have different consequences and may lead to a loss of employment or qualifications, fines or even the launching of criminal investigations. Stroebe et al. (2012) outline four types of effects of scientific fraud: a) that may damage the reputation and professional career of its authors and co-authors, b) in the case of clinical research, that may endanger people, c) that may delay scientific progress and waste very valuable resources, and finally, d) that may damage the image of science as a whole and undermine trust in it.

As a result of all these circumstances, retracting an article requires well-founded suspicions that may arise during the peer review or be raised by readers who are experts on the subject. When this occurs, publishers must exercise great caution, especially in cases of FFP, as they must request clarification, collect evidence, request institutional investigations and respond, lastly and when necessary, by issuing a retraction or correction. Publishers are therefore obliged to report and warn about possible suspicions. However, they are often faced with the dilemma of not wanting to hinder possible ongoing cases or become involved in litigation. Moreover, a retraction does not always come from the publisher; occasionally it is the authors, collaborators, institutions or ethics committees themselves who launch an investigation into any suspicions or recommend withdrawal. In any case, retracting is not easy and openly declaring that it is for a fraudulent reason is very delicate and complex.

In the case of plagiarism, the Polish Andrzej Jendryczko is the most prolific researcher with a total of 17 retractions, between 1985 and 1996, and whose case was brought to light thanks to new technologies and Medline searches (Marshall, 1998). Plagiarism is, a priori, the easiest form of fraud to identify. Its effects, as indicated by Fanelli (2009), do not distort scientific knowledge, although they have significant consequences on the careers of the people involved and the institutions implicated. This fraudulent behaviour is much less sophisticated as it involves copying complete articles or texts with exact phrases.

The considerable number of retractions that are presented with disguised and euphemistic ways to suggest plagiarism should not be underestimated. For example: “significant textual, unattributed or excessive overlap”, “reused and unauthorized use of material”, “several citations were lost”, “shows extensive similarities with earlier publications” or “borrowing large portions of text”. However, a considerable improvement in retraction notices has been observed in recent years, where some publishers clearly identify plagiarism, providing detailed information and citing original sources to correct matters and compensate the wronged authors.

The new technologies and the social and academic networks seem to be playing a very important role in identifying fraud. In the case of plagiarism, they are providing resources aimed at achieving early detection. It is also worth highlighting the role of specialised publications such as Retraction Watch and PubPeer, which are key to monitoring, dissemination and transparency in many of these cases. And, of course, the regulatory bodies such as the US Office of Research Integrity (ORI) and the institutional ethics committees, which widely contribute to alleviating and reporting scientific fraud. Specifically in Europe, it is worth highlighting the work of the European Research Council (ERC), a body reporting to the European Commission and whose Scientific Council has the Standing Committee on Conflict of Interest, Scientific Misconduct and Ethical Issues (CoIME), which is responsible for drawing up guidelines regarding fraud, conflicts of interest and other related ethical issues. The CoIME works with the ERC Executive Agency (ERCEA) on the approach, notification and maintenance of records of suspected cases of scientific misconduct (European Research Council, 2018; European Research Council, 2012). It is also worth highlighting the important contribution of ALLEA, the European Federation of Academies of Sciences and Humanities, founded in 1994, which currently brings together 59 academies from over 40 European countries and which has the ALLEA Permanent Working Group on Science and Ethics (PWGSE) (ALLEA, 2018).

Retracted articles continue to be cited, sometimes for decades afterwards, at relatively high rates (Fang & Casadevall, 2011; Hagberg, 2020). The continuous citation of works retracted due to fraud causes a problem and a significant risk to the integrity of new and future research (Bar-Ilan & Halevi, 2018), as erroneous information or false data are propagated over time, which can have serious implications. This has occurred with an article retracted in 2008 that received citations continuously for eleven years after its retraction. In it, false data were reported regarding a nutritional treatment whose supposed benefit has circulated widely among researchers and clinical staff, increasing the risk of incorrect treatment for patients (Schneider et al., 2020).

In our study, it has been possible to observe a high percentage of articles cited after their retraction, mostly in Biomedicine. It is thought that the repeated citation of retracted works tends to occur by accident or through carelessness (Schneider et al., 2020) and may be due to various circumstances: lack of clarity about the reasons for the retraction (Theis-Mahon & Bakker, 2020), citation of articles in printed journals, without awareness of the subsequent retraction (Couzin & Unger, 2006) or indirect citation without checking the status of the original sources (Budd et al., 2016).

We have also seen that more than 70% of the articles are in JCR journals and have an impact factor, which leads to a greater impact and influence on the scientific community and, therefore, many more possibilities of replicating false data and information. Noyori and Richmond (2013) consider that the incentives to publish in high impact journals, in terms of professional development and obtaining research grants, may be an important stimulus driving fraudulent authors. Likewise, Fang and Casadevall (2011) suggest the existence of a correlation between retractions and publication in journals with a high impact factor. This may be due to the greater recognition and reward on offer, which in turn encourages some authors to misbehave when presenting their work. They also add that the high demands of these journals in their review and quality processes may lead some authors to make the poor decision to manipulate data in order to meet the high expectations of these publications (Fang & Casadevall, 2011).

The risk in many cases comes from people who believe that personal or scientific success comes not from merit and hard work, but from questionable practices and short cuts (Chapman & Lindner, 2016). In any case, the decision to take short cuts in order to gain a benefit, in addition to being a breach of ethical standards, can be very risky, as the visibility of these publications means that the papers are more exposed.

The fact that research is mainly funded with public money and that faulty science threatens public welfare cannot be ignored either (Pickett & Roche, 2018). In a study by Stern et al. (2014) of 290 articles selected from the ORI database and retracted due to misconduct between 1992 and 2012, each retracted article funded by the U.S. National Institutes of Health (NIH) was calculated to account for an average direct cost of around US$392,582. If we were to use this average direct cost as a reference for the 662 articles retracted due to FFP in the EU, the total would stand at US$259,889,284.
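The extrapolation above is a simple multiplication, and it can be reproduced with the short sketch below. The two input figures come from the text; the function and variable names are illustrative assumptions, and the result should be read as a rough reference value, since it assumes that the NIH average applies unchanged to every EU retraction.

```python
# Input figures as reported in the text.
AVG_DIRECT_COST_USD = 392_582  # average direct cost per retracted NIH-funded article (Stern et al., 2014)
EU_FFP_RETRACTIONS = 662       # retractions due to FFP in the EU (this study)


def extrapolated_cost(n_articles: int, avg_cost_usd: int) -> int:
    """Return the total direct cost if every article cost the average amount.

    Hypothetical helper: it assumes a uniform cost per article, which is a
    simplification made only for the purpose of a reference figure.
    """
    return n_articles * avg_cost_usd


total_usd = extrapolated_cost(EU_FFP_RETRACTIONS, AVG_DIRECT_COST_USD)
print(f"US${total_usd:,}")  # prints "US$259,889,284"
```

Keeping the two inputs as named constants makes it easy to repeat the estimate with a different average cost or a different number of retractions.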

Another study carried out by Gammon and Franzini (2013) on 17 cases of misconduct reported by the ORI, using a model based on a sequential mixed method to define different elements related to cost, determined that the cost ranges between US$116,160 and US$2,192,620. Both studies confirm that FFP cases may lead to losses of millions of dollars in research grants. In any case, they are amounts that represent a high price to be paid by the bodies that fund projects, whether public or private. It is also worth adding the mistrust that is generated among funders, which may threaten future investments.

Study limitations

It was not possible to obtain information corresponding to citations received and funding for 184 records that did not appear in WOS and this may affect the results of the corresponding section. Nor have we analysed the citations received from other sources, such as Scopus and Google Scholar.

Conclusions

Scientific publications come with a guarantee that is necessary for the dissemination and credibility of science. Their development and publication require respect for some fundamental ethical standards based on accuracy and on the rigour and integrity of scientists.

Retractions are an important communication tool for correcting science and warning about those studies that are no longer valid or reliable. They are therefore a correction mechanism when the existence of honest errors requires this, but they are also a prevention system that alerts us to fraud. Retractions as a system could be improved. They could identify the reasons in a standardised manner and be more explicit and transparent to avoid possible confusion. They could also improve their dissemination mechanism to avoid the ongoing citing of some works.

Retractions due to FFP represent 24.46% of all retractions published in the EU. Germany is first in the European ranking for FFP, Holland is first for FF and Italy is first for Plagiarism. There is a higher number of retractions due to Plagiarism (438) than to FF (297). In the period 2012–2019, we see a downward trend in retractions due to FFP in the EU. The average time between the publication of an article and its retraction due to FFP is 44.26 months.

The existence of repeat offenders is a significant factor, but there are still many non-repeat offenders also involved in FFP cases. Fraudulent information causes a significant loss of funding and reputation, with severe “adverse effects” for authors, publishers, and institutions. Fraudulent authors are a blot on the copybook of science, on the reputation of many institutions and on research in many countries.

In terms of research areas, 60.83% of articles retracted due to FFP fall within the Life Science and Biomedicine area. A total of 124 records declare that they have been funded. There is extensive citation of the retracted documents and up to 83.73% have been cited since their retraction. 72.96% of the documents have been published in JCR journals and have an impact factor.

Common policies and guidelines should be applied in the EU that serve to harmonise criteria, as well as to prevent and avoid scientific fraud. There should be policies that warn about the severity and consequences of acts of misconduct, but that also have an informative and educational purpose. Teaching ethical aspects from the earliest stages of training for scientists may be key to alleviating this scourge. Another option is to apply more severe penalties of greater legal scope to those who violate ethical standards, embezzle funds and even endanger patients.

New lines of research are being considered, in the context of the European Union, related to environmental factors in academic and research settings linked to the possible effects or reasons that could incite fraud or questionable research practices (QRPs).

References

ALLEA (2018). The European Code of Conduct for Research Integrity. https://ec.europa.eu/research/participants/data/ref/h2020/other/hi/h2020-ethics_code-of-conduct_en.pdf.

Amos, K. A. (2014). The ethics of scholarly publishing: Exploring differences in plagiarism and duplicate publication across nations. Journal of the Medical Library Association, 102(2), 87–91.

Anderson, M. S., Ronning, E. A., De Vries, R., & Martinson, B. C. (2007). The perverse effects of competition on scientists' work and relationships. Science and Engineering Ethics, 13(4), 437–461.

Barde, F., Peiffer-Smadja, N., & de La Blanchardiere, A. (2020). Scientific misconduct: A major threat for medical research. La Revue de Médecine Interne, 41, 330–334.

Bar-Ilan, J., & Halevi, G. (2018). Temporal characteristics of retracted articles. Scientometrics, 116(3), 1771–1783.

Bhattacharjee, Y. (2013). The Mind of a Con Man. The New York Times. http://archive.nytimes.com/www.nytimes.com/2013/04/28/magazine/diederik-stapels-audacious-academic-fraud.html.

Bhutta, Z., & Crane, J. (2014). Should research fraud be a crime? BMJ. https://doi.org/10.1136/bmj.g4532.

Bosch, X. (2014). Improving biomedical journals' ethical policies: The case of research misconduct. Journal of Medical Ethics, 40(9), 644–646.

Bosch, X., Hernández, C., Pericas, J. M., Doti, P., & Marusic, A. (2012). Misconduct policies in high-impact biomedical journals. PLoS ONE, 7(12), e51928.

Brainard, J., & You, J. (2018). What a massive database of retracted papers reveals about science publishing's “death penalty.” Science, 362(6413), 391–393.

Budd, J. M., Coble, Z., & Abritis, A. (2016). An investigation of retracted articles in the biomedical literature. Proceedings of the Association for Information Science and Technology, 53, 1–9.

Budd, J. M., Coble, Z., & Anderson, K. (2011). Retracted Publications in Biomedicine: Cause for Concern. ACRL 2011 Conference Proceedings, 390–395.

Campos-Varela, I., & Ruano-Ravina, A. (2019). Misconduct as the main cause for retraction. A descriptive study of retracted publications and their authors. Gaceta Sanitaria, 33(4), 356–360.

Chambers, L. M., Michener, C. M., & Falcone, T. (2019). Plagiarism and data falsification are the most common reasons for retracted publications in obstetrics and gynaecology. BJOG: An International Journal of Obstetrics and Gynaecology, 126(9), 1134–1140.

Chapman, D. W., & Lindner, S. (2016). Degrees of integrity: The threat of corruption in higher education. Studies in Higher Education, 41(2), 247–268.

Couzin, J., & Unger, K. (2006). Scientific misconduct. Cleaning up the paper trail. Science, 312(5770), 38–43. https://doi.org/10.1126/science.312.5770.38.

Dal-Ré, R., Bouter, L. M., Cuijpers, P., Gluud, C., & Holm, S. (2020). Should research misconduct be criminalized? Research Ethics, 16(1–2), 1–12.

Davis, M. S. (2003). The role of culture in research misconduct. Accountability in Research: Policies and Quality Assurance, 10(3), 189–201.

Davis, M. S., Riske-Morris, M., & Diaz, S. R. (2007). Causal factors implicated in research misconduct: Evidence from ORI case files. Science and Engineering Ethics, 13(4), 395–414.

Dooley, J. J., & Kerch, H. M. (2000). Evolving research misconduct policies and their significance for physical scientists. Science and Engineering Ethics, 6(1), 109–121.

Dubois, J. M., Anderson, E. E., Chibnall, J., Carroll, K., Gibb, T., Ogbuka, C., & Rubbelke, T. (2013). Understanding research misconduct: A comparative analysis of 120 cases of professional wrongdoing. Accountability in Research: Policies and Quality Assurance, 20(5–6), 320–338.

European Research Council (2012). ERC Scientific Misconduct Strategy. https://erc.europa.eu/sites/default/files/document/file/ERC_Scientific_misconduct_strategy.pdf.

European Research Council (2018). Scientific misconduct cases in 2018. https://erc.europa.eu/sites/default/files/document/file/Scientific_Misconduct_cases_2018.pdf.

Fanelli, D. (2009). How many scientists fabricate and falsify research? A systematic review and meta-analysis of survey data. PLoS ONE, 4(5), e5738.

Fanelli, D. (2014). Rise in retractions is a signal of integrity. Nature, 509(7498), 33. https://doi.org/10.1038/509033a.

Fanelli, D., Costas, R., & Larivière, V. (2015). Misconduct policies, academic culture and career stage, not gender or pressures to publish, affect scientific integrity. PLoS ONE, 10(6), e0127556.

Fanelli, D., Ioannidis, J. P. A., & Goodman, S. (2018). Improving the integrity of published science: An expanded taxonomy of retractions and corrections. European Journal of Clinical Investigation, 48(4), e12898.

Fang, F. C., & Casadevall, A. (2011). Retracted science and the retraction index. Infection and Immunity, 79(10), 3855–3859.

Fang, F. C., Steen, R. G., & Casadevall, A. (2012). Misconduct accounts for the majority of retracted scientific publications. Proceedings of the National Academy of Sciences, 109(42), 17028–17033.

Franzen, M., Rödder, S., & Weingart, P. (2007). Fraud: causes and culprits as perceived by science and the media. EMBO Reports, 8(1), 3–7.

Gammon, E., & Franzini, L. (2013). Research misconduct oversight: Defining case costs. Journal of Health Care Finance, 40(2), 75–99.

George, S. L. (2016). Research misconduct and data fraud in clinical trials: prevalence and causal factors. International Journal of Clinical Oncology, 21, 15–21. https://doi.org/10.1007/s10147-015-0887-3.

Godecharle, S., Fieuws, S., Nemery, B., & Dierickx, K. (2018). Scientists still behaving badly? A survey within industry and universities. Science and Engineering Ethics, 24(6), 1697–1717.

Godecharle, S., Nemery, B., & Dierickx, K. (2014). Heterogeneity in European research integrity guidance: Relying on values or norms? Journal of Empirical Research on Human Research Ethics, 9(3), 79–90.

Grieneisen, M. L., & Zhang, M. (2012). A comprehensive survey of retracted articles from the scholarly literature. PLoS ONE, 7(10), e44118.

Gupta, A. (2013). Fraud and misconduct in clinical research: A concern. Perspectives in Clinical Research, 4(2), 144–147.

Hagberg, J. M. (2020). The unfortunately long life of some retracted biomedical research publications. Journal of Applied Physiology, 128(5), 1381–1391.

He, T. (2013). Retraction of global scientific publications from 2001 to 2010. Scientometrics, 96(2), 555–561.

Hemmings, H., Jr., & Shafer, S. L. (2020). Further retractions of articles by Joachim Boldt. British Journal of Anaesthesia, 125(3), 409–411.

Hesselmann, F., Graf, V., Schmidt, M., & Reinhart, M. (2017). The visibility of scientific misconduct: A review of the literature on retracted journal articles. Current Sociology Review, 65(6), 814–845.

Hiney, M. (2015). Research integrity: What it means, why it is important and how we might protect it. Mountain View (California): Science Europe. https://www.scienceeurope.org/media/dnwbwaux/briefing_paper_research_integrity_web.pdf.

Huistra, P., & Paul, H. (2021). Systemic explanations of scientific misconduct: Provoked by spectacular cases of norm violation? Journal of Academic Ethics. https://doi.org/10.1007/s10805-020-09389-8.

International Committee of Medical Journal Editors (ICMJE) (2019). Recommendations for the Conduct, Reporting, Editing, and Publication of Scholarly Work in Medical Journals. http://www.icmje.org/icmje-recommendations.pdf.

Kalichman, M. (2020). Survey study of research integrity officers' perceptions of research practices associated with instances of research misconduct. Research Integrity and Peer Review, 5(1), 17.

Kornfeld, D. S. (2012). Perspective: research misconduct: The search for a remedy. Academic Medicine, 87(7), 877–882.

Kornfeld, D. S., & Titus, S. L. (2016). Stop ignoring misconduct. Nature, 537(7618), 29–30.

Li, D., & Cornelis, G. (2021). Differing perceptions concerning research misconduct between China and Flanders: A qualitative study. Accountability in Research: Policies and Quality Assurance, 28(2), 63–94.

Marcus, A. (2018). A scientist's fraudulent studies put patients at risk. Science, 362(6413), 394.

Marshall, E. (1998). The internet: A powerful tool for plagiarism sleuths. Science, 279(5350), 474.

Martinson, B. C., Anderson, M. S., & De Vries, R. (2005). Scientists behaving badly. Nature, 435(7043), 737–738.

Mena, J. D., Ndoye, M., Cohen, A. J., Kamal, P., & Breyer, B. N. (2019). The landscape of urological retractions: The prevalence of reported research misconduct. BJU International, 124(1), 174–179.

Mumford, M. D., Devenport, L. D., Brown, R. P., Connelly, S., Murphy, S. T., Hill, J. H., & Antes, A. L. (2006). Validation of ethical decision maker measures: Evidence for a new set of measures. Ethics & Behavior, 16(4), 319–345.

Mumford, M. D., Murphy, S. T., Connelly, S., Hill, J. H., Antes, A. L., Brown, R. P., & Devenport, L. D. (2007). Environmental influences on ethical decision making: Climate and environmental predictors of research integrity. Ethics & Behavior, 17(4), 337–366.

Noyori, R., & Richmond, J. P. (2013). Ethical conduct in chemical research publishing. Advanced Synthesis & Catalysis, 355(1), 3–9.

OECD, Global Science Forum (2008). Best Practices for Ensuring Scientific Integrity and Preventing Misconduct. http://www.oecd.org/science/inno/40188303.pdf.

Parlangeli, O., Guidi, S., Marchigiani, E., Bracci, M., & Liston, P. M. (2020). Perceptions of work-related stress and ethical misconduct amongst non-tenured researchers in Italy. Science and Engineering Ethics, 26(1), 159–181.

Pickett, J. T., & Roche, S. P. (2018). Questionable, objectionable or criminal? Public opinion on data fraud and selective reporting in science. Science and Engineering Ethics, 24(1), 151–171.

Pupovac, V., & Fanelli, D. (2015). Scientists admitting to plagiarism: A meta-analysis of surveys. Science and Engineering Ethics, 21(5), 1331–1352.

Rahman, H., & Anker, S. (2020). Dishonesty and research misconduct within the medical profession. BMC Medical Ethics, 21(1), 22.

Rapani, A., Lombardi, T., Berton, F., Del Lupo, V., Di Lenarda, R., & Stacchi, C. (2020). Retracted publications and their citation in dental literature: A systematic review. Clinical and Experimental Dental Research, 6(4), 383–390.

Resnik, D. B. (2019). Is it time to revise the definition of research misconduct? Accountability in Research: Policies and Quality Assurance, 26(2), 123–137.

Resnik, D. B., Rasmussen, L. M., & Kissling, G. E. (2015). An international study of research misconduct policies. Accountability in Research: Policies and Quality Assurance, 22(5), 249–266.

Resnik, D. B., & Stewart, C. N. (2012). Misconduct versus honest error and scientific disagreement. Accountability in Research: Policies and Quality Assurance, 19(1), 56–63.

Ribeiro, M. D., & Vasconcelos, S. M. R. (2018). Retractions covered by Retraction Watch in the 2013–2015 period: prevalence for the most productive countries. Scientometrics, 114, 719–734.

Roland, M. C. (2007). Publish and perish. Hedging and fraud in scientific discourse. EMBO Reports, 8(5), 424–428.

Samp, J. C., Schumock, G. T., & Pickard, A. S. (2012). Retracted publications in the drug literature. Pharmacotherapy, 32(7), 586–596.

Schneider, J., Ye, D., Hill, A. M., & Whitehorn, A. S. (2020). Continued post-retraction citation of a fraudulent clinical trial report, 11 years after it was retracted for falsifying data. Scientometrics, 125, 2877–2913.

Shuai, X., Rollins, J., Moulinier, I., Custis, T., Edmunds, M., & Schilder, F. (2017). A multidimensional investigation of the effects of publication retraction on scholarly impact. Journal of the Association for Information Science and Technology, 68, 2225–2236.

Sovacool, B. K. (2008). Exploring scientific misconduct: Isolated individuals, impure institutions, or an inevitable idiom of modern science? Bioethical Inquiry, 5, 271–282.

Steen, R. G. (2011). Retractions in the scientific literature: Is the incidence of research fraud increasing? Journal of Medical Ethics, 37(4), 249–253.

Steen, R. G., Casadevall, A., & Fang, F. C. (2013). Why has the number of scientific retractions increased? PLoS ONE, 8(7), e68397.

Stern, A. M., Casadevall, A., Steen, R. G., & Fang, F. C. (2014). Financial costs and personal consequences of research misconduct resulting in retracted publications. eLife, 3, e02956. https://doi.org/10.7554/eLife.02956.

Stroebe, W., Postmes, T., & Spears, R. (2012). Scientific misconduct and the myth of self-correction in Science. Perspectives on Psychological Science, 7(6), 670–688.

Tavare, A. (2012). Scientific misconduct is worryingly prevalent in the UK, shows BMJ survey. BMJ, 344, e377. https://doi.org/10.1136/bmj.e377.

The Office of Research Integrity (ORI) (2020). Definition of Research Misconduct. https://ori.hhs.gov/definition-misconduct.

The Retraction Watch Leaderboard (2020). https://retractionwatch.com/the-retraction-watch-leaderboard/.

Theis-Mahon, N. R., & Bakker, C. J. (2020). The continued citation of retracted publications in dentistry. Journal of the Medical Library Association, 108(3), 389–397.

Tijdink, J. K., Bouter, L. M., Veldkamp, C. L. S., van de Ven, P. M., Wicherts, J. M., & Smulders, Y. M. (2016). Personality traits are associated with research misbehavior in Dutch scientists: A cross-sectional study. PLoS ONE, 11(9), e0163251.

Trikalinos, N. A., Evangelou, E., & Ioannidis, J. P. A. (2008). Falsified papers in high-impact journals were slow to retract and indistinguishable from nonfraudulent papers. Journal of Clinical Epidemiology, 61(5), 464–470.

Wager, E., & Kleinert, S. (2012). Cooperation between research institutions and journals on research integrity cases: Guidance from the committee on publication ethics (COPE). Maturitas, 72, 165–169.

Wager, E., & Williams, P. (2011). Why and how do journals retract articles? An analysis of Medline retractions 1988–2008. Journal of Medical Ethics, 37(9), 567–570.

Wise, J. (2013). Boldt: the great pretender. BMJ, 346, 16–18. https://doi.org/10.1136/bmj.f1738.

Wright, D. E., Titus, S. L., & Cornelison, J. B. (2008). Mentoring and research misconduct: An analysis of research mentoring in closed ORI cases. Science and Engineering Ethics, 14(3), 323–336.

Zhang, Q., Abraham, J., & Fu, H. Z. (2020). Collaboration and its influence on retraction based on retracted publications during 1978–2017. Scientometrics, 125, 213–232.

Zhang, M., & Grieneisen, M. L. (2013). The impact of misconduct on the published medical and non-medical literature, and the news media. Scientometrics, 96, 573–587.

Funding

No funding was received.

Ethics declarations

Conflict of interest

The authors declare that there is no conflict of interest.

Cite this article

Marco-Cuenca, G., Salvador-Oliván, J.A. & Arquero-Avilés, R. Fraud in scientific publications in the European Union. An analysis through their retractions. Scientometrics 126, 5143–5164 (2021). https://doi.org/10.1007/s11192-021-03977-0

DOI: https://doi.org/10.1007/s11192-021-03977-0