Abstract

The paper presents a large-scale study (covering 26,998,764 items) of the development in the number of references over time (1996–2019) in three document types (articles; reviews; notes) from seven fields (Arts and Humanities; Social Sciences; Computer Science; Mathematics; Engineering; Medicine; Physics and Astronomy). Using interval ratios instead of average numbers, the paper makes it possible to follow the development and to locate the main causes of growth in the number of references over time. The results show significant differences between fields and document types. The number of references in journal articles and reviews is growing in all fields (except for reviews in Arts and Humanities, which remain stable over time), but at a different pace; the number of references in notes is growing in some fields (again at a different pace) and is stable in others. The observed growth is primarily caused by a drop in the share of short reference lists and a corresponding increase in the share of slightly longer and medium size reference lists. Long and very long reference lists remain much more stable in shares over time and therefore do not contribute much to the observed growth. The results underline the importance of normalizing citation data and of taking citation inflation into account when conducting citation analyses spanning different fields, document types, and longer time periods.

Introduction

The development in the number of references in scientific publications over time has been studied at least since 1965, when Derek J. de Solla Price reported that journal papers contain 15 references on average. More specifically, he found that about 10% of all papers contained no references whereas 5% contained more than 45 references. According to de Solla Price (1965), 85% of all papers contained 25 or fewer references. Reporting such interval ratios is an unusual way of describing the number of references. Many studies have followed in the footsteps of de Solla Price, but have only provided the average or median number of references, and thus not interval ratios. All of these studies show a clear tendency: the number of references in scientific publications is growing.

About 15 years after the publication of the study by Derek J. de Solla Price, Eugene Garfield noticed a substantial growth in the number of references per item in 37 core biochemical journals (Garfield 1979, 1980). Garfield examined the growth rate by developing an R/S journal indicator, where R is the number of references contained in all publications of a journal during a specified year and S is the number of source articles it published that year. Furthermore, by revisiting earlier studies, Garfield confirmed the increasing R/S in biochemistry, mathematics and botany. The growth rates differed, but the tendency towards longer reference lists was clear (Garfield 1979, 1980). Garfield suggested five possible explanations for the increase in the number of references. Firstly, fragmented publishing implies more referencing, as each study is split into several publications. Secondly, the growth of the literature would also imply an increase, although this increase should level out, or else all publications would become reviews. Thirdly, citation consciousness may incite authors to cite more. Fourthly, improved current awareness systems help authors stay aware of recently published studies. Finally, available databases help authors find older studies as well, and their retrospective search capability is thus also strengthened (Garfield 1979).

More recent studies have also shown that the number of references has increased. The average number of references increased substantially over a 38-year period in the journal “Polymer Testing” (Jaunich, 2018). Similar results are found in 8 engineering journals (Ucar et al. 2014), although growth rates differ over time. Ucar et al. found a strong growth trend in the number of references in engineering papers. Krampen (2010) found increasing numbers of references using a sample of 45 English and 45 German articles on developmental psychology, psychological diagnosis and assessment, and social psychology. Similarly, by examining one volume from each decade, Lipetz (1999) documented that papers without references disappeared in The Journal of the American Society for Information Science (JASIST) during 50 years of publication, and that the average number of references per paper had grown exponentially. Also studying JASIST, Tsay (2008) found that the average number of references cited per paper increased 2 to 3 times over a period of about 25 years. Sánchez-Gil et al. (2018) reported an increasing average number of references in all scientific areas and categories, except in some Arts and Humanities categories, although there is considerable variation across fields as well as within fields. However, the analysis by Sánchez-Gil et al. does not normalize for document types, and as reviews generally contain considerably more references, normalization is necessary. Without normalization, the findings could be caused by an increase in specific publication types.

A number of studies have shown that the number of references differs across fields. Hyland (2004) found that the average number of references per publication ranges from 24.8 in magnetic physics to 104.0 in sociology. Sánchez-Gil et al. (2018) found considerable differences across five knowledge areas, ranging from an average of 24.79 references per publication in health sciences to 36.55 in life sciences. Yang et al. (2006) reported differences in the average number of references in five andrology journals.

Meadows (1974) argued that the increase in the number of references will level out and reach a maximum. The number of references in a paper may also decrease over time due to journal limitations, as reported by Anger (1999), who describes a maximum limit of four references in a health sciences journal for brief communications, case reports and letters to the editor. However, in a study of limited scope, Ucar et al. (2014) found a doubling of the median number of references from 1972 to 2000 and an acceleration in growth after the year 2000. The acceleration is explained predominantly by greater access to information following the spread of the Internet, and they consequently argue that “there is still no sign of the so called saturation phase” (Ucar et al. 2014: 1863).

Pan et al. (2018) argue that the growth in scientific production as well as in the number of references per paper has widespread implications for scientific communities in general and more specifically how academic knowledge is connected, accessed and evaluated. Normalization is one of the areas where an increasing number of references plays an important role. Bibliometric studies often include the use of field-normalized indicators to account for the varying publication and citation cultures across fields (Waltman 2016). However, numerous approaches for normalized indicators exist (see Bornmann and Marx 2018). One way is by so-called “citing-side”, “a priori”, “ex ante” or “source” normalization (Zitt 2013). This approach is based on references in the citing documents (for recent examples see, for instance Frandsen et al. (2019), Mingers and Meyer (2017), Fang (2015)). Consequently, any changing patterns in the number of references in specific document types as well as within fields are important for citing-side normalization of impact indicators.

In summary, studies have repeatedly reported a growing number of references in research publications from most fields. A number of reasons for this growth have been suggested, but consensus is yet to emerge. To get a better understanding of the growth rate, we return to the praxis of reporting interval ratios. This will help to identify the causes of the growth: Is the growth caused mainly by authors writing very long papers with many references or is it primarily caused by more gradual shifts from shorter reference lists to medium size reference lists, etc.? Also: is the growth rate the same in different fields, for different document types, and over time? Finally, what are the consequences for the normalization of impact indicators? The aim of this study is to answer these questions.

We therefore report interval ratios for seven fields (Arts and Humanities; Social Sciences; Computer Science; Mathematics; Engineering; Medicine; Physics and Astronomy) and three types of journal papers (journal articles; notes; reviews) for a period of 24 years (1996–2019), and discuss how the findings may affect citing-side normalization. Bornmann and Marx (2018) appeal for more studies analyzing the validity of the various field-normalized indicators. This study adds to the knowledge base within the field by examining potentially changing patterns in the number of references.

Methods

Data were retrieved from Scopus at the end of March 2020. The publication window was set to 1996–2019 and limited to three document types (articles, notes and reviews; see Note 1) and seven subject areas (Arts and Humanities, Computer Science, Engineering, Mathematics, Medicine, Physics and Astronomy, Social Sciences; see Note 2). The selection of document types and subject areas was guided by an aspiration to cover variations of both, and the selection thus covers a total of 26,931,419 items.

Retrieved items were organized by number of references using twelve intervals: 0–9; 10–19; 20–29; 30–39; 40–49; 50–59; 60–69; 70–79; 80–89; 90–99; 100–199; 200–299 (see "Appendices 1–21"). Interval ratios for each document type were calculated year by year as percentages. For example, in Arts and Humanities (2019) we retrieved 15,367 journal articles with a reference list containing 0 to 19 references. This amounts to 18.6 percent of the total number of retrieved journal articles that year (82,406). Table 1 illustrates the calculation of interval ratios for Arts and Humanities journal articles published in 2019.
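The calculation can be sketched in a few lines of Python. This is a minimal illustration rather than the procedure actually used for the study: the counts are the 2019 row of Appendix 1, while the function name `interval_ratios` and the ASCII interval labels are assumptions introduced here.

```python
# Appendix 1, Arts and Humanities journal articles, 2019 (items per interval).
# Interval labels use plain hyphens; they are illustrative dictionary keys.
counts_2019 = {
    "0-9": 4612, "10-19": 10755, "20-29": 12877, "30-39": 13051,
    "40-49": 11563, "50-59": 9268, "60-69": 6566, "70-79": 4596,
    "80-89": 2996, "90-99": 1917, "100-199": 4043, "200-299": 162,
}


def interval_ratios(counts):
    """Return each interval's share of all retrieved items, in percent."""
    total = sum(counts.values())
    return {interval: 100 * n / total for interval, n in counts.items()}


ratios = interval_ratios(counts_2019)

# Reproduce the worked example: articles with 0 to 19 references.
total_2019 = sum(counts_2019.values())
short_lists = counts_2019["0-9"] + counts_2019["10-19"]
share_short = 100 * short_lists / total_2019

print(total_2019, short_lists, round(share_short, 1))  # 82406 15367 18.6
```

The total is taken over the twelve intervals only, which matches the way the percentages are defined in this section.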

Note that the percentages are calculated using reference lists in the range of 0–299 as total. There are, of course, a (small) number of journal articles with longer reference lists. Thus, the actual percentages are probably a little bit lower.
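The direction of this bias can be made explicit with a short relation. The symbols are introduced here for illustration and are not part of the original notation: $n_i$ is the number of items in interval $i$, $T$ is the number of items with 0–299 references, and $m$ is the (small, unreported) number of items with 300 or more references.

```latex
\[
p_i^{\text{reported}} = \frac{n_i}{T}, \qquad
p_i^{\text{actual}} = \frac{n_i}{T + m}, \qquad
\frac{p_i^{\text{actual}}}{p_i^{\text{reported}}} = \frac{T}{T + m} \le 1 .
\]
```

Under these assumptions every reported share within a given year and document type is scaled down by the same factor, so the relative sizes of the intervals in that year are unaffected.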

The organization of the data in intervals makes it possible to collapse retrieved reference intervals and perform analyses on larger interval ratios. Articles can serve as an example: publications with fewer than 60 references account for more than 92% of the publications, and some of the ratio intervals were therefore collapsed to provide a clearer picture in the figures. Thus, analyses of the growth rate of references in journal articles were performed using six interval ratios (0–19; 20–39; 40–59; 60–79; 80–99; 100–199). Not all ratio intervals are included in all figures, in order to give an overview of the dominant tendencies for each document type. Reviews tend to have longer reference lists, and few reviews have short reference lists. Consequently, analyses of reviews were performed using four interval ratios (0–49; 50–99; 100–299). The number of references tends to be smaller in notes, and we therefore concentrate on the first four intervals. Other interval ratios are easily constructed from data provided in the appendices.
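Collapsing intervals amounts to summing the counts of neighbouring intervals before dividing by the total. The sketch below applies the six article intervals named above to the 2019 row of Appendix 1; the names `ARTICLE_GROUPS` and `collapse_counts` are hypothetical, and the 200–299 interval is kept in the total but not in any group, following the list in the text.

```python
# Appendix 1, Arts and Humanities journal articles, 2019 (items per interval).
counts_2019 = {
    "0-9": 4612, "10-19": 10755, "20-29": 12877, "30-39": 13051,
    "40-49": 11563, "50-59": 9268, "60-69": 6566, "70-79": 4596,
    "80-89": 2996, "90-99": 1917, "100-199": 4043, "200-299": 162,
}

# Six collapsed intervals for journal articles (hypothetical mapping name).
ARTICLE_GROUPS = {
    "0-19": ["0-9", "10-19"],
    "20-39": ["20-29", "30-39"],
    "40-59": ["40-49", "50-59"],
    "60-79": ["60-69", "70-79"],
    "80-99": ["80-89", "90-99"],
    "100-199": ["100-199"],
}


def collapse_counts(counts, groups):
    """Sum the counts of the original intervals that form each larger interval."""
    return {name: sum(counts[k] for k in members) for name, members in groups.items()}


collapsed = collapse_counts(counts_2019, ARTICLE_GROUPS)
total = sum(counts_2019.values())  # total over all twelve intervals (0-299)
collapsed_ratios = {name: 100 * n / total for name, n in collapsed.items()}

for name, share in collapsed_ratios.items():
    print(f"{name}: {collapsed[name]} items, {share:.1f}%")
```

Other groupings, such as the review intervals, can be obtained by swapping in a different mapping.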

Results are presented in graphs showing the development over time for the period 1996–2019 in absolute numbers and ratios.

Results

First, we consider the development over time using absolute numbers and a general increase in the number of publications is evident. The number of publications in our data set has more than tripled from 1996 to 2019. Serving as an example, Fig. 1 presents an overview of the development in the number of references in journal articles. The number of articles with at least 50 references is more than 10 times higher in 2019 than 1996. Articles with 20 to 49 references have at least tripled in 2019 compared to 1996. Finally, articles with 0–19 references have only increased slightly or even decreased. Consequently, due to the general increase in the number of publications indexed in Scopus we are analyzing the development over time using ratios.

Now we turn to ratio intervals. The results of the investigation show significant differences between fields and document types.

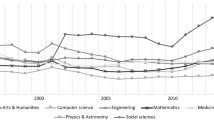

We find that the number of references in journal articles is growing relatively in all fields, but not at the same pace (see Figs. 2, 3 and 4). Journal articles with short reference lists (0–19 references) dominated in the beginning of the investigated period—especially in the fields of Computer Science, Engineering, Mathematics, and Physics and Astronomy. Here the short reference list was the most frequent kind until 2010 (Computer Science), 2013 (Engineering and Mathematics), and 2014 (Physics and Astronomy) when it was replaced at the first spot by journal articles with a bit longer reference lists (20–39 references). A similar replacement is found in Medicine, Social Sciences, and Arts and Humanities. Yet, in these fields the shift took place at an earlier point (Medicine: 2000; Social Sciences: 2002; Arts and Humanities: 2005). Medium length reference lists (40–59 references) were rarer guests in the first part of the investigated period, but show a steady increase in all fields over time—overtaking the second spot in Arts and Humanities (2015), Computer Science (2018), and Engineering (2019), and even ending up sharing first spot in Social Sciences (2019).

Journal articles with longer reference lists are relatively much fewer and are found to be more constant in shares over time, except for journal articles with 60–79 references in Social Sciences, which are found to almost double their share over time.

These findings verify that the number of references in journal articles has been growing over time. They also reveal that the main cause of the growth in all fields is a drop in the share of articles with short reference lists (0–19 references) and an increase in the share of articles with slightly longer and medium size reference lists (20–39 references and 40–59 references, and even 60–79 references, especially in Social Sciences). Journal articles with long reference lists (80–99) and very long reference lists (100–199) have shares that are found to be quite constant over time. There are marked differences in growth rates between fields. Arts and Humanities and Social Sciences display much steadier tendencies than the five other fields.

Turning to the development in the number of references in review articles, we find that this is quite diverse (see Fig. 3) during the period 1996–2019. Review articles with shorter reference lists (0–49 references) dominate in all fields, but in Computer Science, Engineering, Mathematics, Medicine, and Physics and Astronomy their shares decreased heavily over time. Social Sciences display a moderate drop in shorter reference lists over time, and in Arts and Humanities the share of shorter reference lists is practically constant over time. In Medicine, the share of shorter reference lists went from almost 70 percent in 1996 to around 45 percent in 2019. In Computer Science (90% to 20%), Engineering (85% to 30%), Mathematics (85% to 50%), and Physics and Astronomy (75% to 30%) the drop was even more significant. The share of shorter reference lists only dropped by around 15 percentage points in Social Sciences, from around 75 percent in 1996 to around 60 percent in 2019.
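Because these figures are shares, a drop can be read either in percentage points or relative to the starting share. The following sketch uses the approximate start and end values quoted above (the text gives them as "almost" or "around", so they are rounded readings, not exact data); the helper name `share_drop` is introduced here for illustration.

```python
def share_drop(start_pct, end_pct):
    """Return (drop in percentage points, drop relative to the starting share in percent)."""
    points = start_pct - end_pct
    relative = 100 * points / start_pct
    return points, relative


# Approximate shares of reviews with 0-49 references in 1996 and 2019,
# as quoted in the text; treat these as rounded readings.
approx_shares = {
    "Medicine": (70, 45),
    "Computer Science": (90, 20),
    "Engineering": (85, 30),
    "Mathematics": (85, 50),
    "Physics and Astronomy": (75, 30),
    "Social Sciences": (75, 60),
}

for field, (start, end) in approx_shares.items():
    points, relative = share_drop(start, end)
    print(f"{field}: {points} points, {relative:.0f}% of the 1996 share")
```

For Social Sciences, for instance, the drop from about 75 to about 60 percent is 15 percentage points, or one fifth of the 1996 share.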

The shares of medium size reference lists (50–99 references) and long reference lists (100–199) gradually increased over time in all fields (except Arts and Humanities). As we are dealing with shares, the growth is of course most significant in the same fields where a significant drop in shorter reference lists was observed. Yet, the two types of reference lists are not growing at the same pace across fields. Long reference lists are now the most frequent kind in Computer Science, Engineering, and Physics and Astronomy, but still take third spot in the other fields.

Consequently, the primary cause of the observed growth in number of references in reviews over time was a drop in shorter reference lists and an increase in medium size and long reference lists.

Compared to the two other document types (articles and reviews), the number of references in notes is found to be more constant over time. Most notes contain a limited number of references. Figure 4 therefore only shows results for notes with very short reference lists (0–9 references), short reference lists (10–19 references), and medium size reference lists (20–29 references and 30–39 references).

Only a few notes were published within the field of Mathematics during the first part of the investigated period, which causes the observed variability in the reported shares. When ignoring this variability, it is evident that in four of the seven fields (Arts and Humanities, Mathematics, Medicine, and Physics and Astronomy), notes display characteristics of a stable document type with a quite constant number of references. The same is not the case for the three other fields, where the share of very short reference lists (0–9) has dropped significantly over time. In these fields it is especially notes with short reference lists (10–19) that have experienced growth over time.

Thus, the results show some differences between fields, but again the data reveal that in fields where the number of references in notes has grown, the growth is primarily caused by a drop in the share of the shorter reference lists and a growth in the share of slightly longer reference lists. The share of the longest reference lists seems to be quite stable.

Discussion and conclusion

The results of the investigation show significant differences between fields and document types. The number of references in journal articles and reviews is growing in all fields (except for the reviews in Arts and Humanities that remain stable over time), but at a different pace; the number of references in notes is growing in some fields (again at a different pace) whereas it remains stable in others.

By focusing on interval ratios, this study reveals that the main cause of the observed growth in the number of references is a drop in the share of short reference lists and a corresponding increase in the share of slightly longer and medium size reference lists. The shares of long and very long reference lists remain much more stable over time.

Before discussing the implications of the study, the limitations need to be considered, specifically the unit of analysis and the quality of data. First, the analyses are performed on seven subject areas as indexed in Scopus. Differences in the number of references across fields as well as subfields are documented (Sánchez-Gil et al. 2018), and a more fine-grained analysis could thus potentially have shown even greater differences within fields. However, for the purpose of this paper, field differences remain clear using the current data set. Secondly, data quality is of great importance for any bibliometric study, and Scopus (as well as Web of Science) suffers from various documented inaccuracies (Krauskopf 2019; Van Eck and Waltman 2017). Consequently, we expect some inaccuracies in the obtained data. However, as we expect these inaccuracies to be evenly distributed across the data set, bias is not expected.

Finding that the number of references in articles and reviews is increasing across all fields (except for Arts and Humanities reviews) lends support to Ucar et al. (2014), who state that a saturation phase is not in sight. Collectively, the seven fields display a substantial decrease in publications with very few references, but the pace of the decline varies across fields. This supports the need for field-specific studies of document types (Sánchez-Gil et al. 2018). Yet, the stable number of references in notes in four of the seven fields suggests that the development in the number of references is also closely linked to the document type. This may be explained by certain journal limitations (Anger 1999) or by certain document types having reached a maximum as suggested by Meadows (1974). A third explanation offered in several studies is that the increased number of references is correlated with an increase in paper length. Abt and Garfield (2002) analyzed 41 journals from physical, life, and social sciences and found a linear relationship between the average number of references and the normalized paper lengths. Papers in review journals have on average twice the number of references as research papers of the same length. Similar results were reported by Costas et al. (2012), who analyzed the use of bibliographic references by individual scientists in three different research areas and found that within each area the number of references increased with paper length. However, Hyland (2004) found great disciplinary differences in the number of references even when correcting for the number of words (the number of references per 1000 words ranged from 7.3 in Mechanical Engineering to 15.5 in Molecular Biology), and Vosner et al. (2016) found that the average number of references is increasing whereas the number of pages per publication is decreasing, although it has remained stable in recent years. Thus, the third explanation might be somewhat questionable.

Normalization is a key principle in citation analysis, and a number of normalization procedures have been suggested. The many field-normalized indicators measure citation impact comparably, but they are not equivalent, and the choice of field-normalized indicator can lead to different results in a research evaluation (Bornmann et al. 2019). One of these field-normalizing approaches is citing-side normalization, which normalizes impact indicators by correcting for the effect of reference list length (Waltman 2016). Zhou and Leydesdorff (2011: 362) describe the procedure as follows: “Each of the […] citing documents contains a number of references (k) and is accordingly attributed to the cited document with a fractional weight 1/k.” The number of references in the citing paper is used to normalize a specific citation. In some cases, the average number of references in the same journal as the citing document is used as the weighting factor (Bornmann et al. 2019). It is important to keep in mind that the number of references used to normalize the citations only includes references that fall within a certain reference window and that point to a publication in a journal covered by the database used for the analysis, i.e. so-called active references (Zitt and Small 2008). Several indicators exist that are based on source normalization approaches. Waltman and van Eck (2012) examine three mean source normalized citation score indicators and find that they are all strongly correlated.
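The fractional weighting described by Zhou and Leydesdorff can be illustrated with a minimal sketch. It assumes only the 1/k rule quoted above; the function name `fractional_citation_score` and all reference counts are hypothetical, and the sketch does not implement any of the specific published indicators.

```python
def fractional_citation_score(citing_reference_counts):
    """Sum the weights 1/k over the citing documents.

    Each entry is k, the number of (active) references in one citing document.
    """
    if any(k <= 0 for k in citing_reference_counts):
        raise ValueError("every citing document needs at least one active reference")
    return sum(1 / k for k in citing_reference_counts)


# Hypothetical example: one cited paper receives three citations.
# In the first scenario the citing documents have shorter reference lists;
# in the second, each citing document's reference list is twice as long.
shorter_lists = [10, 20, 50]
longer_lists = [20, 40, 100]

score_shorter = fractional_citation_score(shorter_lists)
score_longer = fractional_citation_score(longer_lists)

print(f"{score_shorter:.3f} vs {score_longer:.3f}")  # 0.170 vs 0.085
```

The same three citations carry half the weight when every citing reference list is twice as long, which is why shifts in the distribution of reference list lengths matter for citing-side normalization.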

Zitt (2010) acknowledges that there are limitations to citing-side normalization and explains that a pool of documents with few references may produce anomalies. Mingers and Kaymaz (2019) encountered problems in cases with extremely high as well as extremely low numbers of active citations when computing normalized book citations in Google Scholar. Where no active citations were available, the target book could not be normalized. Furthermore, Zitt (2010) argues that journals with constraints on the number of references can also produce irregularities, although the principle of aggregation reduces this type of problem. Waltman and van Eck (2012, p. 714) conclude that “[w]hen taking a source normalization approach, it is especially important to exclude journals with very small numbers of active references”.

Consequently, researchers working with citing-side normalization recognize the importance of the number of references. However, so far little work has been done to address the consequences of the general increase in the average number of references. In a recent study, Petersen et al. (2019) estimate that the increase in reference lists accounts for one-third of the growth rate for the total number of references produced by the scientific literature published in any given year. While they acknowledge the importance of normalizing citation data, they argue that the problem of citation inflation may be even more fundamental:

“The problem is rather simple—when citations are produced in distinct historical periods their ‘nominal values’ are inconsistent and thus cannot simply be added together” (Petersen et al. 2019: 1855).

The authors provide convincing examples showing that the cutoff for the top 1% of articles in the journal Science published in the year 2000 corresponds to 200 citations, whereas the top 1% cutoff for publications from 1965 was just under 100 citations. Similarly, they calculate that the cutoff for the top 10% of social science publications from 1965 is around 10 citations, whereas in 2000 the threshold had risen to more than 40 citations. Furthermore, Bornmann and Mutz (2015, p. 2221) argue that the growth may be caused by “a mixture of internal and external (sociological, historical, psychological) practices that continuously have altered the ways of viewing science”. These examples illustrate why neither field-normalization nor a fixed citation window can overcome the temporal bias induced by citation inflation. Petersen et al. (2019) therefore develop a six-step procedure for obtaining data that may be used for citation deflation. This procedure involves defining a target subject and obtaining deflator time series, but does not address the importance of document types.

Our results underline the importance of normalizing citation data and of taking citation inflation into account when conducting citation analyses spanning longer time periods. However, our results not only show differences in the number of references between fields and over time, but also that a third parameter plays a role. Thus, the number of references is not only field and time dependent, but also document type-specific. To obtain even more fine-grained citation deflator data, future developers may therefore also take our results into account and develop procedures incorporating the document type of citing documents into their equations.

Availability of data and material

See appendices.

Notes

1. Searched as DOCUMENT TYPE and further limited to SOURCE TYPE: Journal.

2. As a journal may be indexed with more than one subject area code, subject areas slightly overlap.

References

Abt, H. A., & Garfield, E. (2002). Is the relationship between numbers of references and paper lengths the same for all sciences? Journal of the American Society for Information Science and Technology, 53(13), 1106–1112. https://doi.org/10.1002/asi.10151.

Anger, J. (1999). Should there be a recommended limit to the number of references in a scientific article? Sao Paulo Medical Journal, 117(1), 42–43. https://doi.org/10.1590/S1516-31801999000100010.

Bornmann, L., & Marx, W. (2018). Critical rationalism and the search for standard (field-normalized) indicators in bibliometrics. Journal of Informetrics, 12(3), 598–604.

Bornmann, L., & Mutz, R. (2015). Growth rates of modern science: A bibliometric analysis based on the number of publications and cited references. Journal of the Association for Information Science and Technology, 66(11), 2215–2222.

Bornmann, L., Tekles, A., & Leydesdorff, L. (2019). The convergent validity of several (field-normalized) bibliometric indicators: How well does I3 perform for impact measurement? In Paper presented at the 17th International Conference on Scientometrics and Informetrics, ISSI 2019.

Costas, R., van Leeuwen, T. N., & Bordons, M. (2012). Referencing patterns of individual researchers: Do top scientists rely on more extensive information sources? Journal of the American Society for Information Science and Technology, 63(12), 2433–2450. https://doi.org/10.1002/asi.22662.

Fang, H. (2015). A theoretical model of scientific impact based on citations. Malaysian Journal of Library & Information Science, 20(3), 1–13.

Frandsen, T. F., Jacobsen, R. H., Nicolaisen, J., & Ousager, J. (2019). Pressure to publish: A bibliometric study of PhD-students (1993–2009). Information Research, 24(4), http://informationr.net/ir/24-4/colis/colis1925.html.

Garfield, E. (1979). Trends in biochemical literature. Trends in Biochemical Sciences, 4(12), N290.

Garfield, E. (1980). Number of biochemical articles is growing, but why also the number of references per article. Current Contents, 11, 5–16.

Hyland, K. (2004). Disciplinary discourses: social interactions in academic writing. Harlow: Pearson Education Ltd.

Jaunich, M. (2018). Editorial: The average number of references per article published in Polymer Testing over the years. Polymer Testing, 68, A1–A2. https://doi.org/10.1016/j.polymertesting.2018.05.034.

Krampen, G. (2010). Acceleration of citing behavior after the millennium? Exemplary bibliometric reference analyses for psychology journals. Scientometrics, 83(2), 507–513. https://doi.org/10.1007/s11192-009-0093-z.

Krauskopf, E. (2019). Missing documents in Scopus: the case of the journal Enfermeria Nefrologica. Scientometrics, 119(1), 543–547. https://doi.org/10.1007/s11192-019-03040-z.

Lipetz, B. A. (1999). Aspects of JASIS authorship through five decades. Journal of the American Society for Information Science, 50(11), 994–1003. https://doi.org/10.1002/(SICI)1097-4571(1999)50:11%3C994::AID-ASI5%3E3.0.CO;2-U.

Meadows, A. J. (1974). Communication in science. London: Butterworth.

Mingers, J., & Meyer, M. (2017). Normalizing Google Scholar data for use in research evaluation. Scientometrics, 112(2), 1111–1121.

Mingers, J., & Kaymaz, E. (2019). Normalizing book citations in Google Scholar: A hybrid cited-side citing-side method. Journal of Data and Information Science, 4(2), 19–35.

Pan, R. K., Petersen, A. M., Pammolli, F., & Fortunato, S. (2018). The memory of science: Inflation, myopia, and the knowledge network. Journal of Informetrics, 12(3), 656–678. https://doi.org/10.1016/j.joi.2018.06.005.

Petersen, A. M., Pan, R. K., Pammolli, F., & Fortunato, S. (2019). Methods to account for citation inflation in research evaluation. Research Policy, 48, 1855–1865.

Sánchez-Gil, S., Gorraiz, J., & Melero-Fuentes, D. (2018). Reference density trends in the major disciplines. Journal of Informetrics, 12(1), 42–58. https://doi.org/10.1016/j.joi.2017.11.003.

Solla Price, D. J. (1965). Networks of scientific papers. Science, 149(3683), 510–515.

Tsay, M. Y. (2008). Journal bibliometric analysis: A case study on the JASIST. Malaysian Journal of Library & Information Science, 13(2), 121–139.

Ucar, I., Lopez-Fernandino, F., Rodriguez-Ulibarri, P., Sesma-Sanchez, L., Urrea-Mico, V., & Sevilla, J. (2014). Growth in the number of references in engineering journal papers during the 1972–2013 period. Scientometrics, 98(3), 1855–1864. https://doi.org/10.1007/s11192-013-1113-6.

Van Eck, N. J., & Waltman, L. (2017). Accuracy of citation data in Web of Science and Scopus. In Paper presented at the 16th International Conference on Scientometrics and Informetrics, ISSI 2017.

Vosner, H. B., Kokol, P., Bobek, S., Zeleznik, D., & Zavrsnik, J. (2016). A bibliometric retrospective of the Journal Computers in Human Behavior (1991–2015). Computers in Human Behavior, 65, 46–58. https://doi.org/10.1016/j.chb.2016.08.026.

Waltman, L. (2016). A review of the literature on citation impact indicators. Journal of Informetrics, 10(2), 365–391.

Waltman, L., & van Eck, N. J. (2012). Source normalized indicators of citation impact: an overview of different approaches and an empirical comparison. Scientometrics, 96(3), 699–716. https://doi.org/10.1007/s11192-012-0913-4.

Yang, H., Pan, B. C., & Chen, J. (2006). Citation analysis of five journals in andrology. Archives of Andrology, 52(6), 433–440. https://doi.org/10.1080/01485010600840764.

Zhou, P., & Leydesdorff, L. (2011). Fractional counting of citations in research evaluation: A cross- and interdisciplinary assessment of the Tsinghua University in Beijing. Journal of Informetrics, 5(3), 360–368.

Zitt, M. (2010). Citing-side normalization of journal impact: A robust variant of the Audience Factor. Journal of Informetrics, 4(3), 392–406.

Zitt, M. (2013). Variability of citation behavior between scientific fields and the normalization problem: The “citing-side” normalization in context. Collnet Journal of Scientometrics and Information Management, 7(1), 55–67.

Zitt, M., & Small, H. (2008). Modifying the journal impact factor by fractional citation weighting: The audience factor. Journal of the American Society for Information Science and Technology, 59(11), 1856–1860.

Funding

Not applicable.

Ethics declarations

Conflict of interest

None.

Code availability

Not applicable.

Appendices

Appendix 1: Arts and humanities; journal articles; number of references

Year | 0–9 | 10–19 | 20–29 | 30–39 | 40–49 | 50–59 | 60–69 | 70–79 | 80–89 | 90–99 | 100–199 | 200–299 | Total |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

2019 | 4612 | 10,755 | 12,877 | 13,051 | 11,563 | 9268 | 6566 | 4596 | 2996 | 1917 | 4043 | 162 | 82,406 |

2018 | 5455 | 10,672 | 11,967 | 11,710 | 10,269 | 7926 | 5492 | 3663 | 2530 | 1695 | 3421 | 155 | 74,955 |

2017 | 5169 | 9647 | 11,100 | 11,106 | 9548 | 7389 | 5188 | 3487 | 2336 | 1487 | 3258 | 165 | 69,880 |

2016 | 4889 | 9210 | 10,536 | 10,240 | 8777 | 6813 | 4754 | 3301 | 2221 | 1604 | 3455 | 218 | 66,018 |

2015 | 5438 | 9966 | 11,094 | 10,171 | 8497 | 6523 | 4562 | 3188 | 2144 | 1464 | 3563 | 273 | 66,883 |

2014 | 6392 | 10,971 | 11,810 | 10,971 | 9048 | 6805 | 4574 | 3208 | 2243 | 1564 | 4077 | 362 | 72,025 |

2013 | 7417 | 11,159 | 11,729 | 10,269 | 8458 | 6305 | 4345 | 2936 | 2068 | 1503 | 3974 | 410 | 70,573 |

2012 | 6237 | 9491 | 10,095 | 9012 | 7323 | 5277 | 3747 | 2573 | 1822 | 1166 | 3304 | 311 | 60,358 |

2011 | 5522 | 8301 | 8761 | 7805 | 6500 | 4736 | 3375 | 2271 | 1555 | 1106 | 2990 | 298 | 53,220 |

2010 | 3896 | 6250 | 6702 | 6157 | 5218 | 3899 | 2669 | 1887 | 1283 | 868 | 2494 | 290 | 41,613 |

2009 | 4246 | 6172 | 6603 | 5927 | 4824 | 3476 | 2412 | 1660 | 1124 | 865 | 2308 | 219 | 39,836 |

2008 | 4927 | 6564 | 6808 | 6201 | 4830 | 3308 | 2411 | 1608 | 1083 | 801 | 2281 | 284 | 41,106 |

2007 | 4651 | 6305 | 6641 | 5675 | 4451 | 3240 | 2279 | 1492 | 996 | 719 | 2082 | 230 | 38,761 |

2006 | 4550 | 5934 | 5805 | 5181 | 3766 | 2852 | 1898 | 1299 | 887 | 660 | 1808 | 222 | 34,862 |

2005 | 4397 | 5630 | 5478 | 4648 | 3563 | 2399 | 1606 | 1061 | 748 | 540 | 1444 | 194 | 31,708 |

2004 | 4108 | 5326 | 5255 | 4258 | 3223 | 2246 | 1459 | 949 | 679 | 470 | 1409 | 171 | 29,553 |

2003 | 3887 | 4987 | 4958 | 4094 | 3053 | 2113 | 1449 | 992 | 679 | 459 | 1404 | 141 | 28,216 |

2002 | 4265 | 5288 | 5110 | 4158 | 3076 | 2029 | 1326 | 910 | 675 | 483 | 1272 | 158 | 28,750 |

2001 | 2842 | 3677 | 3625 | 3147 | 2382 | 1641 | 1100 | 718 | 496 | 378 | 1089 | 129 | 21,224 |

2000 | 2704 | 3771 | 3516 | 2821 | 2102 | 1546 | 985 | 725 | 505 | 397 | 1195 | 135 | 20,402 |

1999 | 2659 | 3716 | 3462 | 2743 | 2036 | 1438 | 986 | 662 | 504 | 343 | 1083 | 127 | 19,759 |

1998 | 2747 | 3855 | 3365 | 2725 | 2044 | 1415 | 897 | 639 | 443 | 294 | 1040 | 170 | 19,634 |

1997 | 2785 | 3689 | 3441 | 2600 | 1843 | 1263 | 799 | 591 | 436 | 299 | 939 | 133 | 18,818 |

1996 | 2932 | 3726 | 3355 | 2533 | 1756 | 1248 | 779 | 548 | 384 | 286 | 941 | 105 | 18,593 |
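The counts in Appendix 1 can be turned into per-interval shares, which is how this sketch reads the paper's "interval ratios" (an assumption: the share of all items in a given year that fall into each reference-count interval). The example below is a minimal illustration using only the 1996 and 2019 rows copied from the table above; the names `INTERVALS`, `COUNTS`, and `interval_shares` are hypothetical and not taken from the paper.

```python
# Interval labels as printed in the appendix header.
INTERVALS = [
    "0–9", "10–19", "20–29", "30–39", "40–49", "50–59",
    "60–69", "70–79", "80–89", "90–99", "100–199", "200–299",
]

# Counts copied from Appendix 1 (Arts and Humanities; journal articles).
COUNTS = {
    1996: [2932, 3726, 3355, 2533, 1756, 1248, 779, 548, 384, 286, 941, 105],
    2019: [4612, 10755, 12877, 13051, 11563, 9268, 6566, 4596, 2996, 1917, 4043, 162],
}


def interval_shares(counts):
    """Return each interval's share of the year's total item count."""
    total = sum(counts)
    return [c / total for c in counts]


# Assumed reading of "interval ratio": share of items per interval.
shares = {year: interval_shares(row) for year, row in COUNTS.items()}

# Print the 1996 share, the 2019 share, and the change between them.
for label, s96, s19 in zip(INTERVALS, shares[1996], shares[2019]):
    print(f"{label:>8}  {s96:6.1%}  {s19:6.1%}  {s19 - s96:+6.1%}")
```

Comparing shares rather than raw counts removes the effect of the growing number of items per year, so a change in a column reflects a shift in the distribution of reference-list lengths and not simply a larger database.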

Appendix 2: Arts and humanities; reviews; number of references

Year | 0–9 | 10–19 | 20–29 | 30–39 | 40–49 | 50–59 | 60–69 | 70–79 | 80–89 | 90–99 | 100–199 | 200–299 | Total |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

2019 | 1660 | 2465 | 2437 | 2082 | 1608 | 1217 | 912 | 659 | 462 | 381 | 936 | 95 | 14,914 |

2018 | 2795 | 3713 | 3649 | 2885 | 2340 | 1610 | 1190 | 812 | 574 | 445 | 1141 | 101 | 21,255 |

2017 | 2782 | 4041 | 3926 | 3153 | 2491 | 1853 | 1229 | 922 | 695 | 521 | 1490 | 159 | 23,262 |

2016 | 3007 | 3802 | 3695 | 3093 | 2309 | 1741 | 1253 | 966 | 688 | 503 | 1655 | 195 | 22,907 |

2015 | 2268 | 3184 | 3167 | 2735 | 2129 | 1629 | 1245 | 933 | 646 | 506 | 1824 | 184 | 20,450 |

2014 | 1917 | 2364 | 2305 | 1909 | 1535 | 1182 | 888 | 708 | 510 | 397 | 1440 | 171 | 15,326 |

2013 | 1606 | 1840 | 1664 | 1408 | 1053 | 828 | 582 | 472 | 347 | 311 | 981 | 154 | 11,246 |

2012 | 1848 | 2119 | 2119 | 1814 | 1420 | 1079 | 806 | 609 | 467 | 328 | 1053 | 139 | 13,801 |

2011 | 2078 | 3012 | 2956 | 2374 | 1819 | 1287 | 958 | 616 | 472 | 339 | 1090 | 110 | 17,111 |

2010 | 2274 | 3406 | 3509 | 2787 | 2095 | 1513 | 1068 | 782 | 585 | 443 | 1318 | 122 | 19,902 |

2009 | 2012 | 2860 | 2870 | 2318 | 1773 | 1259 | 933 | 705 | 530 | 402 | 1292 | 134 | 17,088 |

2008 | 1350 | 1610 | 1593 | 1354 | 1012 | 779 | 599 | 414 | 336 | 273 | 866 | 86 | 10,272 |

2007 | 1434 | 1630 | 1470 | 1178 | 937 | 690 | 546 | 409 | 313 | 212 | 737 | 111 | 9667 |

2006 | 1429 | 1809 | 1711 | 1415 | 1087 | 801 | 596 | 487 | 367 | 263 | 893 | 125 | 10,983 |

2005 | 1470 | 1813 | 1700 | 1491 | 1204 | 868 | 639 | 486 | 354 | 278 | 1061 | 166 | 11,530 |

2004 | 1281 | 1635 | 1468 | 1209 | 985 | 750 | 566 | 463 | 336 | 233 | 874 | 124 | 9924 |

2003 | 1270 | 1534 | 1453 | 1260 | 930 | 778 | 533 | 396 | 274 | 222 | 760 | 84 | 9494 |

2002 | 1228 | 1425 | 1225 | 1094 | 807 | 545 | 437 | 329 | 229 | 174 | 573 | 59 | 8125 |

2001 | 526 | 581 | 582 | 464 | 334 | 238 | 184 | 144 | 94 | 85 | 310 | 35 | 3577 |

2000 | 643 | 592 | 514 | 422 | 327 | 246 | 175 | 141 | 103 | 82 | 311 | 47 | 3603 |

1999 | 573 | 651 | 490 | 395 | 278 | 237 | 150 | 122 | 98 | 68 | 294 | 46 | 3402 |

1998 | 492 | 546 | 477 | 350 | 283 | 183 | 145 | 120 | 80 | 89 | 311 | 32 | 3108 |

1997 | 575 | 582 | 452 | 358 | 285 | 194 | 164 | 107 | 96 | 69 | 272 | 35 | 3189 |

1996 | 543 | 535 | 445 | 312 | 235 | 211 | 159 | 117 | 73 | 68 | 225 | 31 | 2954 |
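Readers who want to reuse the appendix tables may find it useful to parse the rows and confirm that the interval counts add up to the printed total. The following is a minimal sketch, assuming rows are pipe-delimited with commas as thousands separators, as they appear here; `parse_row` and `ROWS` are hypothetical names, and the two rows are copied from Appendix 2 above.

```python
def parse_row(line):
    """Split one appendix row into (year, interval_counts, total)."""
    cells = [cell.strip() for cell in line.split("|")]
    # Drop empty cells (e.g. after the trailing pipe) and strip
    # thousands separators such as the comma in "14,914".
    values = [int(cell.replace(",", "")) for cell in cells if cell]
    year, *counts, total = values
    return year, counts, total


# Two rows copied verbatim from Appendix 2 (Arts and Humanities; reviews).
ROWS = [
    "2019 | 1660 | 2465 | 2437 | 2082 | 1608 | 1217 | 912 | 659 | 462 | 381 | 936 | 95 | 14,914 |",
    "1996 | 543 | 535 | 445 | 312 | 235 | 211 | 159 | 117 | 73 | 68 | 225 | 31 | 2954 |",
]

for line in ROWS:
    year, counts, total = parse_row(line)
    status = "ok" if sum(counts) == total else "MISMATCH"
    print(year, sum(counts), total, status)
```

The same function can be applied to any data row in the other appendices, since they share the same column layout; header and separator rows would need to be skipped first.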

Appendix 3: Arts and humanities; notes; number of references

Year | 0–9 | 10–19 | 20–29 | 30–39 | 40–49 | 50–59 | 60–69 | 70–79 | 80–89 | 90–99 | 100–199 | 200–299 | Total |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

2019 | 767 | 452 | 216 | 111 | 64 | 37 | 17 | 20 | 16 | 6 | 8 | 0 | 1714 |

2018 | 930 | 495 | 210 | 108 | 68 | 53 | 25 | 15 | 11 | 7 | 15 | 0 | 1937 |

2017 | 948 | 505 | 173 | 98 | 59 | 31 | 23 | 11 | 14 | 8 | 13 | 3 | 1886 |

2016 | 837 | 415 | 194 | 96 | 42 | 33 | 22 | 21 | 11 | 8 | 42 | 6 | 1727 |

2015 | 768 | 349 | 135 | 64 | 32 | 31 | 16 | 12 | 11 | 5 | 26 | 0 | 1449 |

2014 | 814 | 348 | 181 | 79 | 37 | 25 | 18 | 18 | 8 | 5 | 19 | 3 | 1555 |

2013 | 688 | 304 | 114 | 56 | 41 | 16 | 15 | 5 | 11 | 5 | 10 | 1 | 1266 |

2012 | 680 | 279 | 144 | 68 | 36 | 35 | 18 | 13 | 11 | 12 | 77 | 18 | 1391 |

2011 | 734 | 320 | 159 | 70 | 51 | 45 | 30 | 21 | 10 | 13 | 45 | 9 | 1507 |

2010 | 642 | 333 | 144 | 61 | 47 | 19 | 18 | 13 | 13 | 5 | 26 | 2 | 1323 |

2009 | 515 | 238 | 126 | 57 | 37 | 34 | 22 | 12 | 12 | 6 | 30 | 6 | 1095 |

2008 | 587 | 307 | 133 | 74 | 35 | 22 | 12 | 10 | 5 | 7 | 16 | 7 | 1215 |

2007 | 474 | 274 | 113 | 69 | 43 | 18 | 6 | 4 | 7 | 4 | 16 | 8 | 1036 |

2006 | 487 | 243 | 83 | 51 | 34 | 18 | 9 | 3 | 4 | 4 | 2 | 1 | 939 |

2005 | 403 | 190 | 90 | 48 | 27 | 11 | 8 | 3 | 5 | 1 | 8 | 2 | 796 |

2004 | 404 | 219 | 85 | 66 | 33 | 14 | 9 | 6 | 6 | 3 | 13 | 1 | 859 |

2003 | 342 | 176 | 81 | 47 | 29 | 16 | 14 | 6 | 3 | 3 | 11 | 3 | 731 |

2002 | 343 | 196 | 96 | 50 | 25 | 27 | 10 | 10 | 5 | 4 | 13 | 1 | 780 |

2001 | 194 | 105 | 70 | 26 | 15 | 15 | 7 | 6 | 6 | 5 | 7 | 2 | 458 |

2000 | 241 | 143 | 63 | 26 | 20 | 14 | 13 | 4 | 0 | 1 | 4 | 1 | 530 |

1999 | 193 | 99 | 55 | 33 | 15 | 11 | 2 | 5 | 0 | 2 | 4 | 0 | 419 |

1998 | 196 | 108 | 55 | 40 | 29 | 15 | 5 | 2 | 3 | 4 | 4 | 0 | 461 |

1997 | 171 | 112 | 56 | 32 | 16 | 13 | 8 | 3 | 7 | 1 | 5 | 0 | 424 |

1996 | 169 | 96 | 55 | 26 | 16 | 16 | 4 | 5 | 2 | 0 | 5 | 0 | 394 |

Appendix 4: Computer science; journal articles; number of references

Year | 0–9 | 10–19 | 20–29 | 30–39 | 40–49 | 50–59 | 60–69 | 70–79 | 80–89 | 90–99 | 100–199 | 200–299 | Total |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

2019 | 6351 | 29,386 | 41,731 | 39,688 | 28,658 | 16,598 | 8997 | 4848 | 2766 | 1658 | 3286 | 240 | 184,207 |

2018 | 4831 | 24,640 | 33,894 | 31,019 | 21,523 | 12,552 | 6623 | 3474 | 2010 | 1209 | 2203 | 134 | 144,112 |

2017 | 5860 | 24,857 | 30,917 | 26,247 | 17,555 | 10,108 | 5390 | 2821 | 1577 | 928 | 1809 | 112 | 128,181 |

2016 | 6229 | 24,860 | 28,659 | 23,221 | 14,842 | 8476 | 4496 | 2388 | 1347 | 810 | 1567 | 91 | 116,986 |

2015 | 6311 | 24,197 | 26,177 | 20,607 | 13,150 | 7251 | 3710 | 2068 | 1182 | 688 | 1296 | 80 | 106,717 |

2014 | 6237 | 24,800 | 25,929 | 19,948 | 12,040 | 6379 | 3461 | 1895 | 996 | 638 | 1151 | 56 | 103,530 |

2013 | 7635 | 27,256 | 24,817 | 17,948 | 10,549 | 5505 | 2915 | 1494 | 886 | 504 | 910 | 63 | 100,482 |

2012 | 8060 | 29,811 | 23,820 | 15,748 | 8696 | 4614 | 2309 | 1149 | 690 | 450 | 780 | 46 | 96,173 |

2011 | 7797 | 26,091 | 21,308 | 13,923 | 7564 | 3886 | 2062 | 1063 | 565 | 404 | 638 | 47 | 85,348 |

2010 | 7724 | 23,499 | 19,113 | 12,338 | 6613 | 3395 | 1805 | 962 | 547 | 294 | 624 | 47 | 76,961 |

2009 | 9098 | 23,959 | 18,609 | 11,478 | 5993 | 2946 | 1544 | 782 | 463 | 298 | 523 | 34 | 75,727 |

2008 | 9454 | 21,628 | 16,329 | 9885 | 5171 | 2403 | 1259 | 655 | 365 | 219 | 421 | 36 | 67,825 |

2007 | 9747 | 20,321 | 14,818 | 8587 | 4250 | 2071 | 1044 | 532 | 292 | 182 | 337 | 17 | 62,198 |

2006 | 12,041 | 18,702 | 12,776 | 7185 | 3509 | 1656 | 799 | 426 | 227 | 145 | 247 | 16 | 57,729 |

2005 | 10,641 | 15,412 | 10,336 | 5435 | 2521 | 1244 | 619 | 330 | 205 | 110 | 170 | 8 | 47,031 |

2004 | 7546 | 12,832 | 8619 | 4478 | 1989 | 927 | 432 | 244 | 148 | 67 | 127 | 6 | 37,415 |

2003 | 6110 | 11,698 | 7804 | 3922 | 1756 | 814 | 396 | 213 | 132 | 75 | 135 | 10 | 33,065 |

2002 | 9327 | 13,969 | 8020 | 3850 | 1646 | 800 | 383 | 203 | 126 | 76 | 151 | 9 | 38,560 |

2001 | 11,776 | 15,500 | 8387 | 3731 | 1647 | 768 | 408 | 203 | 128 | 86 | 173 | 17 | 42,824 |

2000 | 8624 | 12,260 | 7203 | 3421 | 1498 | 714 | 364 | 200 | 138 | 64 | 161 | 10 | 34,657 |

1999 | 7717 | 11,360 | 6587 | 2960 | 1386 | 676 | 328 | 193 | 117 | 68 | 147 | 16 | 31,555 |

1998 | 7977 | 11,332 | 6373 | 2915 | 1225 | 625 | 297 | 179 | 87 | 66 | 133 | 14 | 31,223 |

1997 | 7479 | 10,753 | 6108 | 2656 | 1197 | 584 | 278 | 181 | 76 | 49 | 127 | 20 | 29,508 |

1996 | 7872 | 10,921 | 5803 | 2423 | 1035 | 492 | 254 | 147 | 73 | 58 | 92 | 10 | 29,180 |

Appendix 5: Computer science; reviews; number of references

Year | 0–9 | 10–19 | 20–29 | 30–39 | 40–49 | 50–59 | 60–69 | 70–79 | 80–89 | 90–99 | 100–199 | 200–299 | Total |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

2019 | 146 | 266 | 288 | 293 | 339 | 348 | 319 | 379 | 373 | 320 | 2098 | 388 | 5557 |

2018 | 189 | 294 | 285 | 248 | 297 | 300 | 257 | 301 | 285 | 240 | 1503 | 294 | 4493 |

2017 | 216 | 328 | 319 | 280 | 278 | 297 | 259 | 261 | 243 | 225 | 1060 | 187 | 3953 |

2016 | 201 | 256 | 284 | 290 | 221 | 214 | 227 | 194 | 178 | 166 | 748 | 132 | 3111 |

2015 | 189 | 210 | 207 | 202 | 171 | 161 | 179 | 143 | 119 | 106 | 553 | 104 | 2344 |

2014 | 201 | 228 | 186 | 182 | 163 | 166 | 138 | 122 | 107 | 107 | 439 | 48 | 2087 |

2013 | 194 | 213 | 212 | 192 | 172 | 135 | 121 | 112 | 95 | 87 | 508 | 66 | 2107 |

2012 | 280 | 373 | 371 | 320 | 275 | 182 | 163 | 123 | 112 | 103 | 340 | 52 | 2694 |

2011 | 215 | 304 | 274 | 253 | 213 | 151 | 146 | 102 | 76 | 67 | 246 | 30 | 2077 |

2010 | 142 | 163 | 173 | 143 | 111 | 89 | 57 | 51 | 47 | 62 | 156 | 16 | 1210 |

2009 | 170 | 185 | 144 | 114 | 80 | 73 | 65 | 55 | 30 | 34 | 147 | 24 | 1121 |

2008 | 118 | 156 | 121 | 94 | 90 | 68 | 52 | 46 | 23 | 28 | 103 | 9 | 908 |

2007 | 304 | 304 | 155 | 102 | 91 | 74 | 55 | 33 | 33 | 28 | 79 | 14 | 1272 |

2006 | 527 | 559 | 317 | 237 | 138 | 107 | 85 | 48 | 42 | 23 | 104 | 16 | 2203 |

2005 | 724 | 803 | 466 | 336 | 211 | 134 | 72 | 60 | 56 | 36 | 111 | 12 | 3021 |

2004 | 539 | 625 | 411 | 253 | 180 | 105 | 79 | 47 | 43 | 19 | 90 | 9 | 2400 |

2003 | 605 | 531 | 313 | 222 | 158 | 107 | 71 | 35 | 40 | 32 | 74 | 5 | 2193 |

2002 | 231 | 249 | 165 | 119 | 56 | 55 | 37 | 29 | 23 | 11 | 63 | 5 | 1043 |

2001 | 142 | 145 | 95 | 71 | 31 | 20 | 31 | 8 | 14 | 12 | 25 | 4 | 598 |

2000 | 128 | 150 | 84 | 55 | 38 | 30 | 18 | 19 | 11 | 5 | 19 | 2 | 559 |

1999 | 105 | 99 | 65 | 55 | 30 | 16 | 16 | 8 | 9 | 5 | 12 | 1 | 421 |

1998 | 169 | 171 | 94 | 70 | 38 | 19 | 22 | 10 | 9 | 5 | 28 | 2 | 637 |

1997 | 167 | 162 | 99 | 70 | 44 | 19 | 12 | 15 | 10 | 16 | 25 | 4 | 643 |

1996 | 147 | 147 | 107 | 48 | 31 | 21 | 11 | 8 | 12 | 5 | 15 | 2 | 554 |

Appendix 6: Computer science; notes; number of references

Year | 0–9 | 10–19 | 20–29 | 30–39 | 40–49 | 50–59 | 60–69 | 70–79 | 80–89 | 90–99 | 100–199 | 200–299 | Total |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

2019 | 144 | 153 | 64 | 25 | 9 | 8 | 3 | 2 | 1 | 3 | 3 | 0 | 415 |

2018 | 164 | 118 | 50 | 17 | 17 | 17 | 8 | 5 | 3 | 7 | 7 | 0 | 413 |

2017 | 121 | 121 | 49 | 30 | 13 | 13 | 2 | 5 | 5 | 1 | 14 | 0 | 374 |

2016 | 158 | 117 | 44 | 23 | 15 | 18 | 6 | 2 | 3 | 3 | 9 | 2 | 400 |

2015 | 242 | 119 | 43 | 19 | 6 | 2 | 2 | 1 | 2 | 0 | 3 | 0 | 439 |

2014 | 199 | 81 | 26 | 11 | 6 | 7 | 1 | 3 | 2 | 2 | 9 | 0 | 347 |

2013 | 111 | 66 | 19 | 9 | 3 | 0 | 2 | 0 | 1 | 0 | 1 | 0 | 212 |

2012 | 125 | 59 | 18 | 5 | 7 | 2 | 1 | 3 | 0 | 2 | 2 | 2 | 226 |

2011 | 122 | 58 | 15 | 9 | 3 | 3 | 0 | 0 | 2 | 0 | 2 | 0 | 214 |

2010 | 106 | 52 | 9 | 2 | 4 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 174 |

2009 | 118 | 46 | 9 | 1 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 176 |

2008 | 85 | 26 | 6 | 5 | 2 | 3 | 0 | 0 | 0 | 1 | 1 | 0 | 129 |

2007 | 101 | 11 | 9 | 2 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 125 |

2006 | 82 | 25 | 5 | 6 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 120 |

2005 | 58 | 15 | 11 | 2 | 1 | 2 | 0 | 0 | 0 | 0 | 1 | 0 | 90 |

2004 | 50 | 17 | 3 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 73 |

2003 | 73 | 24 | 9 | 3 | 2 | 1 | 2 | 0 | 0 | 0 | 0 | 0 | 114 |

2002 | 66 | 28 | 15 | 6 | 3 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 120 |

2001 | 51 | 33 | 15 | 2 | 1 | 2 | 2 | 1 | 0 | 1 | 1 | 1 | 110 |

2000 | 37 | 14 | 4 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 58 |

1999 | 30 | 12 | 5 | 6 | 2 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 57 |

1998 | 22 | 9 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 32 |

1997 | 22 | 9 | 3 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 36 |

1996 | 23 | 4 | 4 | 1 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 34 |

Appendix 7: Engineering; journal articles; number of references

Year | 0–9 | 10–19 | 20–29 | 30–39 | 40–49 | 50–59 | 60–69 | 70–79 | 80–89 | 90–99 | 100–199 | 200–299 | Total |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

2019 | 15,661 | 73,544 | 99,312 | 88,868 | 60,461 | 34,141 | 17,173 | 8232 | 4074 | 2120 | 3675 | 294 | 407,555 |

2018 | 15,658 | 74,627 | 90,927 | 75,408 | 49,487 | 27,006 | 13,222 | 6194 | 2916 | 1590 | 2836 | 233 | 360,104 |

2017 | 30,052 | 85,899 | 87,986 | 67,627 | 41,848 | 22,001 | 10,479 | 5010 | 2321 | 1299 | 2394 | 201 | 357,117 |

2016 | 21,901 | 78,783 | 82,490 | 60,434 | 34,672 | 17,671 | 8150 | 3815 | 1833 | 1032 | 1962 | 190 | 312,933 |

2015 | 25,407 | 83,590 | 77,081 | 52,727 | 29,774 | 14,732 | 6483 | 3060 | 1623 | 862 | 1790 | 182 | 297,311 |

2014 | 24,301 | 78,653 | 71,093 | 48,295 | 25,948 | 12,258 | 5384 | 2482 | 1241 | 702 | 1468 | 128 | 271,953 |

2013 | 25,810 | 81,077 | 66,882 | 42,222 | 21,874 | 10,022 | 4253 | 1997 | 955 | 604 | 1180 | 113 | 256,989 |

2012 | 27,736 | 77,419 | 58,126 | 34,720 | 17,197 | 7741 | 3358 | 1516 | 747 | 433 | 889 | 52 | 229,934 |

2011 | 30,019 | 77,994 | 53,598 | 30,572 | 14,399 | 6186 | 2753 | 1239 | 594 | 345 | 876 | 96 | 218,671 |

2010 | 33,278 | 77,241 | 48,861 | 26,560 | 12,518 | 5244 | 2369 | 1080 | 615 | 317 | 776 | 97 | 208,956 |

2009 | 37,672 | 76,971 | 46,589 | 24,350 | 10,629 | 4549 | 1905 | 897 | 481 | 271 | 657 | 59 | 205,030 |

2008 | 38,641 | 70,556 | 40,568 | 19,796 | 8662 | 3649 | 1524 | 784 | 422 | 237 | 577 | 55 | 185,471 |

2007 | 39,952 | 65,779 | 36,427 | 16,620 | 6848 | 2798 | 1298 | 629 | 344 | 218 | 527 | 67 | 171,507 |

2006 | 43,391 | 60,522 | 30,606 | 13,925 | 5702 | 2352 | 1048 | 501 | 267 | 154 | 466 | 54 | 158,988 |

2005 | 39,980 | 50,644 | 25,230 | 11,092 | 4272 | 1791 | 808 | 366 | 220 | 150 | 283 | 39 | 134,875 |

2004 | 32,322 | 43,697 | 22,759 | 9727 | 3659 | 1506 | 642 | 354 | 181 | 130 | 286 | 28 | 115,291 |

2003 | 26,978 | 37,487 | 19,816 | 8266 | 3218 | 1329 | 585 | 279 | 171 | 139 | 305 | 35 | 98,608 |

2002 | 31,804 | 40,071 | 19,319 | 7788 | 2997 | 1185 | 609 | 283 | 153 | 115 | 320 | 32 | 104,676 |

2001 | 40,818 | 43,033 | 19,913 | 7492 | 2981 | 1183 | 582 | 264 | 186 | 115 | 385 | 42 | 116,994 |

2000 | 37,440 | 38,742 | 17,611 | 6860 | 2627 | 1199 | 568 | 313 | 194 | 117 | 378 | 49 | 106,098 |

1999 | 36,167 | 37,294 | 16,877 | 6450 | 2638 | 1107 | 502 | 258 | 175 | 109 | 337 | 48 | 101,962 |

1998 | 35,370 | 36,550 | 16,319 | 6225 | 2299 | 1049 | 485 | 285 | 159 | 120 | 291 | 53 | 99,205 |

1997 | 36,159 | 36,557 | 15,658 | 5704 | 2165 | 913 | 396 | 240 | 136 | 83 | 305 | 48 | 98,364 |

1996 | 35,441 | 36,048 | 15,726 | 6127 | 2454 | 1225 | 594 | 360 | 175 | 135 | 362 | 45 | 98,692 |

Appendix 8: Engineering; reviews; number of references

Year | 0–9 | 10–19 | 20–29 | 30–39 | 40–49 | 50–59 | 60–69 | 70–79 | 80–89 | 90–99 | 100–199 | 200–299 | Total |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

2019 | 304 | 556 | 733 | 753 | 692 | 793 | 702 | 687 | 616 | 633 | 3903 | 917 | 11,289 |

2018 | 359 | 649 | 763 | 642 | 642 | 658 | 604 | 578 | 569 | 479 | 3045 | 693 | 9681 |

2017 | 409 | 701 | 778 | 760 | 712 | 677 | 582 | 537 | 512 | 438 | 2471 | 533 | 9110 |

2016 | 464 | 744 | 767 | 629 | 572 | 568 | 524 | 427 | 436 | 374 | 1946 | 350 | 7801 |

2015 | 411 | 604 | 606 | 519 | 421 | 456 | 423 | 339 | 296 | 266 | 1429 | 257 | 6027 |

2014 | 408 | 538 | 593 | 520 | 444 | 404 | 313 | 327 | 282 | 265 | 1279 | 261 | 5634 |

2013 | 410 | 621 | 554 | 501 | 415 | 400 | 335 | 271 | 244 | 207 | 1075 | 190 | 5223 |

2012 | 607 | 925 | 878 | 720 | 565 | 436 | 315 | 307 | 275 | 241 | 957 | 163 | 6389 |

2011 | 505 | 751 | 744 | 607 | 436 | 382 | 292 | 248 | 191 | 192 | 831 | 112 | 5291 |

2010 | 420 | 536 | 463 | 423 | 341 | 288 | 246 | 186 | 175 | 159 | 688 | 101 | 4026 |

2009 | 364 | 488 | 513 | 447 | 280 | 264 | 177 | 140 | 176 | 150 | 569 | 83 | 3651 |

2008 | 366 | 450 | 441 | 483 | 271 | 208 | 184 | 160 | 139 | 100 | 439 | 80 | 3321 |

2007 | 766 | 636 | 501 | 684 | 295 | 239 | 160 | 119 | 125 | 110 | 346 | 57 | 4038 |

2006 | 1270 | 1159 | 701 | 439 | 290 | 208 | 178 | 144 | 88 | 81 | 354 | 54 | 4966 |

2005 | 1708 | 1666 | 957 | 544 | 337 | 246 | 171 | 125 | 121 | 89 | 361 | 62 | 6387 |

2004 | 1274 | 1011 | 654 | 410 | 261 | 212 | 145 | 96 | 93 | 83 | 239 | 51 | 4529 |

2003 | 1090 | 887 | 465 | 307 | 228 | 139 | 110 | 78 | 57 | 52 | 198 | 40 | 3651 |

2002 | 594 | 438 | 274 | 200 | 124 | 111 | 74 | 63 | 54 | 39 | 136 | 32 | 2139 |

2001 | 365 | 334 | 201 | 121 | 89 | 82 | 70 | 49 | 44 | 24 | 120 | 23 | 1522 |

2000 | 296 | 253 | 166 | 112 | 85 | 82 | 51 | 25 | 25 | 19 | 73 | 10 | 1197 |

1999 | 329 | 292 | 154 | 106 | 69 | 45 | 27 | 32 | 27 | 14 | 65 | 9 | 1169 |

1998 | 553 | 472 | 249 | 137 | 79 | 57 | 34 | 30 | 24 | 14 | 70 | 13 | 1732 |

1997 | 506 | 503 | 266 | 134 | 91 | 55 | 47 | 34 | 33 | 27 | 72 | 29 | 1797 |

1996 | 519 | 570 | 293 | 161 | 91 | 57 | 40 | 41 | 34 | 20 | 105 | 17 | 1948 |

Appendix 9: Engineering; notes; number of references

Year | 0–9 | 10–19 | 20–29 | 30–39 | 40–49 | 50–59 | 60–69 | 70–79 | 80–89 | 90–99 | 100–199 | 200–299 | Total |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

2019 | 421 | 223 | 97 | 57 | 24 | 8 | 7 | 3 | 1 | 1 | 5 | 1 | 848 |

2018 | 538 | 215 | 94 | 50 | 25 | 17 | 9 | 4 | 2 | 1 | 1 | 0 | 956 |

2017 | 408 | 203 | 89 | 45 | 24 | 12 | 10 | 7 | 3 | 1 | 5 | 0 | 807 |

2016 | 446 | 168 | 74 | 26 | 16 | 12 | 11 | 2 | 2 | 0 | 1 | 0 | 758 |

2015 | 480 | 180 | 80 | 28 | 21 | 11 | 5 | 3 | 3 | 1 | 3 | 0 | 815 |

2014 | 530 | 170 | 61 | 18 | 14 | 6 | 3 | 1 | 0 | 0 | 2 | 0 | 805 |

2013 | 476 | 143 | 55 | 28 | 7 | 3 | 3 | 2 | 3 | 0 | 1 | 0 | 721 |

2012 | 696 | 145 | 58 | 23 | 8 | 7 | 2 | 3 | 1 | 0 | 2 | 0 | 945 |

2011 | 396 | 105 | 44 | 28 | 1 | 5 | 1 | 2 | 2 | 1 | 4 | 1 | 590 |

2010 | 493 | 108 | 47 | 17 | 8 | 3 | 5 | 0 | 0 | 1 | 0 | 0 | 682 |

2009 | 481 | 125 | 48 | 21 | 4 | 3 | 2 | 1 | 0 | 0 | 2 | 0 | 687 |

2008 | 459 | 97 | 27 | 6 | 2 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 593 |

2007 | 428 | 71 | 20 | 7 | 2 | 4 | 1 | 0 | 0 | 0 | 1 | 1 | 535 |

2006 | 416 | 85 | 21 | 8 | 2 | 1 | 2 | 0 | 0 | 0 | 1 | 0 | 536 |

2005 | 281 | 71 | 14 | 7 | 3 | 2 | 3 | 0 | 1 | 0 | 2 | 0 | 384 |

2004 | 166 | 46 | 21 | 6 | 3 | 0 | 1 | 1 | 0 | 0 | 2 | 0 | 246 |

2003 | 150 | 40 | 15 | 9 | 5 | 1 | 2 | 2 | 0 | 0 | 0 | 0 | 224 |

2002 | 182 | 59 | 16 | 5 | 5 | 1 | 2 | 0 | 0 | 0 | 0 | 0 | 270 |

2001 | 184 | 44 | 12 | 3 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 245 |

2000 | 133 | 21 | 12 | 4 | 2 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 174 |

1999 | 95 | 28 | 9 | 8 | 4 | 3 | 0 | 0 | 1 | 0 | 0 | 0 | 148 |

1998 | 102 | 25 | 3 | 2 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 133 |

1997 | 69 | 31 | 7 | 2 | 1 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 112 |

1996 | 93 | 30 | 3 | 1 | 0 | 1 | 0 | 2 | 0 | 0 | 0 | 0 | 130 |

Appendix 10: Mathematics; journal articles; number of references

Year | 0–9 | 10–19 | 20–29 | 30–39 | 40–49 | 50–59 | 60–69 | 70–79 | 80–89 | 90–99 | 100–199 | 200–299 | Total |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

2019 | 6699 | 29,303 | 36,053 | 29,050 | 18,744 | 10,044 | 5010 | 2460 | 1281 | 707 | 1213 | 76 | 140,640 |

2018 | 6900 | 28,459 | 32,315 | 25,089 | 15,365 | 8053 | 3781 | 1875 | 1023 | 544 | 951 | 62 | 124,417 |

2017 | 8373 | 30,586 | 31,909 | 23,100 | 13,377 | 6610 | 3174 | 1665 | 776 | 410 | 803 | 57 | 120,840 |

2016 | 9399 | 31,543 | 30,693 | 21,180 | 11,726 | 5855 | 2813 | 1376 | 735 | 376 | 817 | 50 | 116,563 |

2015 | 9506 | 31,222 | 29,584 | 19,681 | 10,636 | 5015 | 2380 | 1185 | 704 | 357 | 692 | 44 | 111,006 |

2014 | 10,128 | 32,531 | 30,018 | 19,225 | 9946 | 4583 | 2114 | 1164 | 569 | 334 | 625 | 42 | 111,279 |

2013 | 11,164 | 34,035 | 27,707 | 17,430 | 8684 | 3995 | 1901 | 913 | 483 | 280 | 518 | 36 | 107,146 |

2012 | 11,924 | 34,047 | 26,256 | 15,406 | 7490 | 3412 | 1646 | 753 | 421 | 233 | 435 | 24 | 102,047 |

2011 | 11,739 | 31,742 | 23,661 | 13,445 | 6474 | 3065 | 1375 | 687 | 329 | 222 | 395 | 41 | 93,175 |

2010 | 12,043 | 30,284 | 22,015 | 12,147 | 5776 | 2597 | 1314 | 625 | 337 | 185 | 346 | 43 | 87,712 |

2009 | 13,921 | 31,430 | 22,202 | 11,838 | 5291 | 2404 | 1112 | 544 | 320 | 177 | 355 | 35 | 89,629 |

2008 | 13,756 | 28,895 | 19,548 | 10,004 | 4625 | 1967 | 1000 | 436 | 233 | 139 | 254 | 30 | 80,887 |

2007 | 13,502 | 27,072 | 17,790 | 8787 | 3969 | 1701 | 817 | 390 | 214 | 114 | 255 | 23 | 74,634 |

2006 | 12,930 | 24,360 | 15,399 | 7384 | 3367 | 1464 | 676 | 332 | 174 | 95 | 186 | 16 | 66,383 |

2005 | 12,228 | 21,462 | 13,238 | 6235 | 2663 | 1150 | 494 | 256 | 134 | 82 | 159 | 9 | 58,110 |

2004 | 11,045 | 20,145 | 12,524 | 5846 | 2391 | 1034 | 464 | 227 | 128 | 53 | 117 | 7 | 53,981 |

2003 | 10,051 | 18,541 | 11,565 | 5354 | 2299 | 969 | 429 | 233 | 102 | 60 | 89 | 9 | 49,701 |

2002 | 12,954 | 20,036 | 11,376 | 4974 | 2008 | 839 | 405 | 193 | 113 | 78 | 147 | 13 | 53,136 |

2001 | 15,646 | 21,625 | 11,731 | 5063 | 2041 | 903 | 421 | 218 | 115 | 70 | 151 | 15 | 57,999 |

2000 | 12,629 | 18,250 | 10,115 | 4344 | 1760 | 799 | 347 | 178 | 128 | 57 | 179 | 22 | 48,808 |

1999 | 11,637 | 17,188 | 9390 | 3900 | 1614 | 746 | 344 | 185 | 124 | 65 | 134 | 25 | 45,352 |

1998 | 11,923 | 17,079 | 9010 | 3879 | 1445 | 668 | 275 | 181 | 112 | 68 | 135 | 20 | 44,795 |

1997 | 11,639 | 16,980 | 8888 | 3550 | 1436 | 610 | 279 | 157 | 92 | 51 | 141 | 20 | 43,843 |

1996 | 12,925 | 16,409 | 8324 | 3209 | 1273 | 572 | 302 | 142 | 88 | 46 | 118 | 15 | 43,423 |

Appendix 11: Mathematics; reviews; number of references

Year | 0–9 | 10–19 | 20–29 | 30–39 | 40–49 | 50–59 | 60–69 | 70–79 | 80–89 | 90–99 | 100–199 | 200–299 | Total |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

2019 | 65 | 194 | 241 | 197 | 169 | 168 | 126 | 121 | 81 | 95 | 465 | 82 | 2004 |

2018 | 62 | 157 | 204 | 172 | 149 | 134 | 109 | 120 | 91 | 63 | 396 | 87 | 1744 |

2017 | 76 | 211 | 229 | 220 | 160 | 135 | 127 | 114 | 86 | 81 | 348 | 64 | 1851 |

2016 | 73 | 187 | 217 | 172 | 131 | 95 | 81 | 83 | 69 | 70 | 289 | 46 | 1513 |

2015 | 70 | 157 | 157 | 138 | 108 | 96 | 77 | 59 | 62 | 37 | 218 | 34 | 1213 |

2014 | 63 | 123 | 141 | 141 | 85 | 79 | 73 | 63 | 47 | 54 | 186 | 29 | 1084 |

2013 | 103 | 167 | 170 | 164 | 113 | 80 | 73 | 57 | 53 | 51 | 187 | 32 | 1250 |

2012 | 145 | 301 | 282 | 219 | 177 | 103 | 89 | 62 | 51 | 50 | 180 | 32 | 1691 |

2011 | 123 | 227 | 232 | 169 | 111 | 95 | 78 | 46 | 43 | 48 | 161 | 22 | 1355 |

2010 | 97 | 143 | 127 | 102 | 96 | 75 | 50 | 44 | 36 | 31 | 120 | 11 | 932 |

2009 | 73 | 130 | 114 | 61 | 66 | 47 | 52 | 26 | 29 | 20 | 87 | 16 | 721 |

2008 | 81 | 121 | 74 | 94 | 62 | 42 | 41 | 19 | 19 | 17 | 61 | 14 | 645 |

2007 | 128 | 173 | 124 | 99 | 76 | 45 | 35 | 28 | 21 | 15 | 54 | 7 | 805 |

2006 | 258 | 334 | 292 | 203 | 130 | 96 | 62 | 36 | 25 | 12 | 67 | 11 | 1526 |

2005 | 418 | 598 | 419 | 254 | 154 | 88 | 46 | 30 | 41 | 24 | 79 | 12 | 2163 |

2004 | 200 | 323 | 276 | 178 | 99 | 72 | 46 | 27 | 15 | 21 | 79 | 13 | 1349 |

2003 | 171 | 312 | 252 | 167 | 118 | 66 | 45 | 30 | 19 | 20 | 57 | 5 | 1262 |

2002 | 133 | 144 | 115 | 78 | 33 | 34 | 30 | 19 | 13 | 10 | 37 | 5 | 651 |

2001 | 99 | 128 | 85 | 50 | 33 | 28 | 23 | 13 | 16 | 5 | 32 | 3 | 515 |

2000 | 111 | 124 | 96 | 72 | 32 | 32 | 20 | 13 | 16 | 2 | 24 | 3 | 545 |

1999 | 78 | 90 | 59 | 40 | 32 | 18 | 7 | 7 | 9 | 3 | 16 | 2 | 361 |

1998 | 82 | 92 | 74 | 51 | 19 | 21 | 14 | 8 | 9 | 4 | 19 | 3 | 396 |

1997 | 65 | 94 | 55 | 30 | 25 | 15 | 10 | 13 | 6 | 6 | 18 | 4 | 341 |

1996 | 77 | 94 | 48 | 31 | 17 | 13 | 7 | 10 | 4 | 2 | 15 | 2 | 320 |

Appendix 12: Mathematics; notes; number of references

Year | 0–9 | 10–19 | 20–29 | 30–39 | 40–49 | 50–59 | 60–69 | 70–79 | 80–89 | 90–99 | 100–199 | 200–299 | Total |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

2019 | 209 | 116 | 32 | 15 | 1 | 4 | 0 | 1 | 0 | 0 | 1 | 1 | 380 |

2018 | 188 | 70 | 20 | 10 | 7 | 4 | 1 | 1 | 0 | 1 | 1 | 0 | 303 |

2017 | 188 | 82 | 32 | 10 | 5 | 3 | 2 | 0 | 0 | 1 | 2 | 0 | 325 |

2016 | 186 | 81 | 27 | 6 | 5 | 6 | 1 | 2 | 0 | 1 | 2 | 0 | 317 |

2015 | 146 | 64 | 19 | 9 | 7 | 6 | 3 | 0 | 0 | 0 | 3 | 5 | 262 |

2014 | 188 | 74 | 21 | 14 | 2 | 3 | 2 | 3 | 1 | 0 | 1 | 0 | 309 |

2013 | 105 | 55 | 21 | 5 | 3 | 2 | 1 | 0 | 0 | 0 | 1 | 0 | 193 |

2012 | 137 | 44 | 15 | 7 | 4 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 208 |

2011 | 154 | 72 | 16 | 5 | 1 | 2 | 0 | 0 | 0 | 0 | 1 | 0 | 251 |

2010 | 149 | 38 | 9 | 1 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 199 |

2009 | 172 | 58 | 13 | 6 | 2 | 3 | 1 | 0 | 0 | 0 | 0 | 0 | 255 |

2008 | 182 | 60 | 17 | 10 | 2 | 1 | 0 | 1 | 0 | 1 | 0 | 0 | 274 |

2007 | 142 | 25 | 11 | 4 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 184 |

2006 | 103 | 19 | 10 | 0 | 0 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 135 |

2005 | 47 | 15 | 7 | 1 | 2 | 2 | 0 | 0 | 0 | 0 | 1 | 0 | 75 |

2004 | 29 | 15 | 2 | 2 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 50 |

2003 | 44 | 9 | 4 | 1 | 1 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 61 |

2002 | 58 | 11 | 4 | 1 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 76 |

2001 | 33 | 14 | 5 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 52 |

2000 | 14 | 8 | 1 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 25 |

1999 | 19 | 17 | 11 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 50 |

1998 | 13 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 13 |

1997 | 24 | 4 | 3 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 33 |

1996 | 14 | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 18 |

Appendix 13: Medicine; journal articles; number of references

Year | 0–9 | 10–19 | 20–29 | 30–39 | 40–49 | 50–59 | 60–69 | 70–79 | 80–89 | 90–99 | 100–199 | 200–299 | Total |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

2019 | 32,632 | 87,901 | 123,439 | 114,430 | 74,021 | 41,855 | 21,419 | 11,750 | 6437 | 3803 | 6544 | 491 | 524,722 |

2018 | 32,954 | 87,021 | 116,893 | 104,276 | 67,184 | 36,883 | 18,252 | 9801 | 5368 | 3107 | 5286 | 428 | 487,453 |

2017 | 34,697 | 85,298 | 113,742 | 100,841 | 63,223 | 33,791 | 16,673 | 8727 | 4745 | 2774 | 4941 | 414 | 469,866 |

2016 | 38,234 | 87,710 | 112,135 | 97,267 | 60,841 | 32,213 | 15,654 | 8257 | 4439 | 2709 | 5207 | 529 | 465,195 |

2015 | 40,521 | 88,291 | 109,707 | 94,705 | 58,224 | 31,019 | 14,905 | 7918 | 4477 | 2717 | 5832 | 628 | 458,944 |

2014 | 40,624 | 92,283 | 109,327 | 90,649 | 55,051 | 28,911 | 13,990 | 7436 | 4202 | 2458 | 5405 | 568 | 450,904 |

2013 | 41,289 | 92,255 | 105,857 | 86,256 | 51,673 | 26,786 | 12,698 | 6673 | 3695 | 2327 | 4994 | 498 | 435,001 |

2012 | 41,028 | 89,549 | 100,167 | 79,535 | 47,099 | 23,732 | 11,042 | 5734 | 3162 | 1921 | 4067 | 376 | 407,412 |

2011 | 41,601 | 90,307 | 96,601 | 75,533 | 44,727 | 22,721 | 10,330 | 5445 | 3001 | 1807 | 4022 | 378 | 396,473 |

2010 | 41,978 | 86,978 | 90,744 | 69,004 | 40,512 | 19,867 | 8882 | 4520 | 2406 | 1412 | 2961 | 278 | 369,542 |

2009 | 43,272 | 85,827 | 85,661 | 64,921 | 37,982 | 18,906 | 8133 | 4017 | 2111 | 1182 | 2343 | 185 | 354,540 |

2008 | 40,897 | 82,267 | 81,553 | 60,311 | 35,441 | 17,508 | 7679 | 3725 | 1950 | 1051 | 2111 | 176 | 334,669 |

2007 | 38,463 | 77,769 | 76,739 | 56,234 | 32,953 | 16,307 | 7110 | 3375 | 1668 | 997 | 2001 | 164 | 313,780 |

2006 | 38,758 | 74,920 | 71,311 | 51,643 | 29,843 | 14,724 | 6444 | 3016 | 1557 | 919 | 1661 | 162 | 294,958 |

2005 | 35,551 | 68,556 | 64,807 | 47,329 | 26,537 | 13,392 | 5537 | 2547 | 1263 | 709 | 1397 | 104 | 267,729 |

2004 | 31,012 | 63,491 | 59,944 | 43,424 | 23,971 | 11,523 | 4923 | 2268 | 1100 | 615 | 1158 | 81 | 243,510 |

2003 | 30,023 | 61,475 | 58,837 | 41,177 | 22,346 | 10,441 | 4520 | 2106 | 1034 | 591 | 1096 | 83 | 233,729 |

2002 | 31,171 | 61,935 | 57,791 | 40,551 | 21,984 | 10,607 | 4788 | 2329 | 1233 | 756 | 1782 | 135 | 235,062 |

2001 | 33,478 | 62,567 | 57,354 | 39,741 | 21,599 | 10,464 | 4890 | 2500 | 1326 | 909 | 2066 | 202 | 237,096 |

2000 | 33,631 | 61,739 | 56,582 | 38,551 | 20,859 | 10,015 | 4747 | 2426 | 1347 | 883 | 2129 | 213 | 233,122 |

1999 | 35,250 | 61,986 | 55,680 | 37,215 | 19,770 | 9408 | 4365 | 2112 | 1188 | 753 | 1880 | 181 | 229,788 |

1998 | 37,467 | 63,936 | 54,781 | 34,893 | 18,088 | 8519 | 3940 | 1960 | 1057 | 689 | 1828 | 196 | 227,354 |

1997 | 38,355 | 65,500 | 54,442 | 33,502 | 17,306 | 8077 | 3675 | 1860 | 1071 | 654 | 1781 | 186 | 226,409 |

1996 | 33,562 | 57,324 | 48,654 | 31,209 | 15,609 | 7133 | 3143 | 1530 | 800 | 475 | 1312 | 154 | 200,905 |

Appendix 14: Medicine; reviews; number of references

Year | 0–9 | 10–19 | 20–29 | 30–39 | 40–49 | 50–59 | 60–69 | 70–79 | 80–89 | 90–99 | 100–199 | 200–299 | Total |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

2019 | 2814 | 5925 | 7740 | 8687 | 8580 | 7701 | 6537 | 5439 | 4329 | 3686 | 13,864 | 1918 | 77,220 |

2018 | 3155 | 5843 | 7259 | 8142 | 8072 | 7309 | 6099 | 5089 | 4135 | 3483 | 13,284 | 1804 | 73,674 |

2017 | 3212 | 6233 | 7751 | 8469 | 7879 | 7166 | 5721 | 4923 | 4017 | 3410 | 12,232 | 1609 | 72,622 |

2016 | 3140 | 6262 | 7265 | 7941 | 7592 | 6845 | 5562 | 4494 | 3793 | 3214 | 11,211 | 1294 | 68,613 |

2015 | 2767 | 5415 | 6677 | 6846 | 6683 | 5917 | 4781 | 4162 | 3481 | 2928 | 9917 | 1114 | 60,688 |

2014 | 2512 | 5671 | 7050 | 7091 | 6655 | 5837 | 4940 | 4052 | 3458 | 2848 | 9965 | 1078 | 61,157 |

2013 | 2522 | 6157 | 7511 | 6977 | 6466 | 5633 | 4500 | 3845 | 3155 | 2671 | 9117 | 942 | 59,496 |

2012 | 2890 | 7662 | 9248 | 8327 | 7317 | 6027 | 4712 | 3948 | 3281 | 2778 | 9490 | 985 | 66,665 |

2011 | 2816 | 6711 | 7924 | 6999 | 6192 | 5360 | 4051 | 3526 | 2816 | 2317 | 8268 | 905 | 57,885 |

2010 | 2254 | 5771 | 8040 | 7538 | 6794 | 6000 | 4606 | 3669 | 2878 | 2417 | 8574 | 881 | 59,422 |

2009 | 2817 | 6738 | 8400 | 7939 | 6881 | 6002 | 4583 | 3785 | 2940 | 2389 | 8226 | 945 | 61,645 |

2008 | 2980 | 7192 | 8671 | 8030 | 6820 | 5868 | 4470 | 3584 | 2790 | 2350 | 7881 | 827 | 61,463 |

2007 | 4891 | 9557 | 9135 | 8240 | 6697 | 5524 | 4209 | 3358 | 2606 | 2147 | 7122 | 791 | 64,277 |

2006 | 5711 | 11,783 | 10,644 | 8646 | 6911 | 5654 | 4185 | 3230 | 2613 | 2074 | 6938 | 840 | 69,229 |

2005 | 6317 | 11,997 | 10,940 | 8529 | 6776 | 5383 | 4023 | 3225 | 2422 | 1932 | 6483 | 743 | 68,770 |

2004 | 6234 | 11,820 | 10,898 | 8570 | 6458 | 5227 | 3733 | 3137 | 2357 | 1836 | 6134 | 704 | 67,108 |

2003 | 5180 | 9798 | 8975 | 7408 | 6284 | 5107 | 3744 | 2997 | 2211 | 1685 | 5729 | 718 | 59,836 |

2002 | 3446 | 6744 | 6713 | 5952 | 5028 | 3951 | 3105 | 2362 | 1815 | 1440 | 4617 | 592 | 45,765 |

2001 | 3922 | 6079 | 5622 | 4834 | 4022 | 3275 | 2411 | 1877 | 1408 | 1159 | 3656 | 449 | 38,714 |

2000 | 4834 | 7038 | 5933 | 4903 | 4045 | 3080 | 2155 | 1740 | 1345 | 977 | 3507 | 451 | 40,008 |

1999 | 4529 | 6360 | 5111 | 4277 | 3419 | 2612 | 1955 | 1488 | 1089 | 944 | 2958 | 412 | 35,154 |

1998 | 4159 | 5899 | 4819 | 3926 | 3015 | 2332 | 1791 | 1375 | 1105 | 836 | 2769 | 422 | 32,448 |

1997 | 4240 | 5538 | 4690 | 3851 | 3002 | 2284 | 1795 | 1363 | 1066 | 822 | 2786 | 361 | 31,798 |

1996 | 2793 | 4235 | 3678 | 2923 | 2327 | 1796 | 1413 | 1086 | 798 | 638 | 2315 | 337 | 24,339 |

Appendix 15: Medicine; notes; number of references

Year | 0–9 | 10–19 | 20–29 | 30–39 | 40–49 | 50–59 | 60–69 | 70–79 | 80–89 | 90–99 | 100–199 | 200–299 | Total |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

2019 | 15,589 | 5470 | 1103 | 440 | 217 | 136 | 88 | 52 | 42 | 20 | 68 | 5 | 23,230 |

2018 | 13,758 | 5107 | 1216 | 524 | 249 | 164 | 88 | 59 | 37 | 27 | 43 | 2 | 21,274 |

2017 | 13,642 | 5168 | 1194 | 484 | 271 | 131 | 74 | 64 | 19 | 19 | 37 | 3 | 21,106 |

2016 | 14,394 | 5055 | 1272 | 461 | 224 | 127 | 72 | 38 | 23 | 16 | 38 | 1 | 21,721 |

2015 | 13,275 | 4471 | 1042 | 384 | 190 | 91 | 62 | 33 | 20 | 14 | 41 | 3 | 19,626 |

2014 | 14,118 | 4585 | 1026 | 419 | 215 | 111 | 85 | 37 | 31 | 20 | 50 | 9 | 20,706 |

2013 | 13,613 | 3934 | 1044 | 371 | 162 | 87 | 64 | 43 | 17 | 16 | 33 | 2 | 19,386 |

2012 | 11,730 | 3770 | 1046 | 381 | 171 | 110 | 69 | 35 | 20 | 13 | 39 | 1 | 17,385 |

2011 | 10,784 | 3590 | 996 | 340 | 178 | 83 | 51 | 29 | 19 | 11 | 48 | 3 | 16,132 |

2010 | 8585 | 3444 | 1066 | 405 | 193 | 104 | 54 | 37 | 25 | 18 | 51 | 4 | 13,986 |

2009 | 8379 | 3168 | 986 | 403 | 210 | 88 | 54 | 44 | 29 | 8 | 40 | 2 | 13,411 |

2008 | 7612 | 2779 | 960 | 363 | 173 | 99 | 52 | 54 | 18 | 15 | 37 | 5 | 12,167 |

2007 | 6477 | 2131 | 766 | 277 | 119 | 38 | 41 | 20 | 10 | 9 | 27 | 1 | 9916 |

2006 | 5485 | 1942 | 678 | 267 | 105 | 56 | 29 | 22 | 12 | 3 | 27 | 4 | 8630 |

2005 | 5087 | 1559 | 463 | 196 | 86 | 48 | 24 | 12 | 10 | 5 | 13 | 5 | 7508 |

2004 | 4450 | 1323 | 416 | 178 | 74 | 33 | 24 | 11 | 13 | 5 | 18 | 2 | 6547 |

2003 | 3689 | 1282 | 403 | 160 | 83 | 46 | 25 | 14 | 11 | 4 | 8 | 0 | 5725 |

2002 | 3237 | 1120 | 443 | 169 | 82 | 41 | 15 | 20 | 11 | 5 | 9 | 0 | 5152 |

2001 | 3189 | 1154 | 434 | 166 | 69 | 38 | 21 | 13 | 6 | 8 | 14 | 0 | 5112 |

2000 | 2661 | 999 | 390 | 174 | 83 | 32 | 18 | 15 | 7 | 12 | 19 | 3 | 4413 |

1999 | 2839 | 911 | 367 | 161 | 76 | 47 | 30 | 14 | 12 | 8 | 11 | 1 | 4477 |

1998 | 2109 | 618 | 213 | 108 | 43 | 16 | 11 | 12 | 2 | 2 | 13 | 2 | 3149 |

1997 | 1814 | 551 | 190 | 97 | 43 | 23 | 10 | 8 | 8 | 3 | 6 | 2 | 2755 |

1996 | 444 | 208 | 95 | 58 | 23 | 14 | 5 | 4 | 2 | 3 | 5 | 0 | 861 |

Appendix 16: Physics and astronomy; journal articles; number of references

Year | 0–9 | 10–19 | 20–29 | 30–39 | 40–49 | 50–59 | 60–69 | 70–79 | 80–89 | 90–99 | 100–199 | 200–299 | Total |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

2019 | 6328 | 34,570 | 53,492 | 57,200 | 44,911 | 29,291 | 16,829 | 9350 | 4885 | 2865 | 5151 | 288 | 265,160 |

2018 | 7503 | 37,233 | 53,885 | 53,608 | 40,585 | 25,900 | 14,444 | 8175 | 4221 | 2454 | 4591 | 219 | 252,818 |

2017 | 8526 | 39,703 | 53,708 | 50,834 | 36,684 | 22,092 | 12,422 | 6894 | 3547 | 2093 | 3837 | 203 | 240,543 |

2016 | 10,084 | 43,271 | 54,842 | 48,872 | 32,832 | 19,598 | 10,945 | 5827 | 3280 | 1899 | 3702 | 228 | 235,380 |

2015 | 11,103 | 46,361 | 54,700 | 46,337 | 30,975 | 17,699 | 9637 | 5284 | 2887 | 1706 | 3315 | 216 | 230,220 |

2014 | 12,942 | 49,065 | 55,978 | 45,309 | 28,594 | 15,798 | 8334 | 4567 | 2538 | 1515 | 3005 | 193 | 227,838 |

2013 | 14,402 | 52,573 | 55,977 | 42,722 | 25,745 | 14,043 | 7462 | 3996 | 2182 | 1407 | 2597 | 205 | 223,311 |

2012 | 16,158 | 51,902 | 52,472 | 38,613 | 21,939 | 12,055 | 6234 | 3536 | 1997 | 1224 | 2344 | 119 | 208,593 |

2011 | 18,458 | 54,761 | 51,712 | 35,191 | 19,762 | 10,500 | 5585 | 3068 | 1829 | 1120 | 2099 | 133 | 204,218 |

2010 | 17,489 | 53,811 | 49,036 | 31,792 | 17,587 | 9002 | 4944 | 2794 | 1680 | 966 | 1947 | 151 | 191,199 |

2009 | 21,954 | 58,719 | 49,269 | 30,479 | 15,832 | 8253 | 4489 | 2516 | 1479 | 897 | 1799 | 148 | 195,834 |

2008 | 23,395 | 59,694 | 47,381 | 28,368 | 14,574 | 7196 | 3935 | 2120 | 1239 | 778 | 1460 | 136 | 190,276 |

2007 | 25,080 | 57,861 | 44,245 | 24,950 | 12,379 | 6353 | 3428 | 1904 | 1052 | 670 | 1302 | 118 | 179,342 |

2006 | 24,711 | 57,148 | 42,386 | 23,242 | 11,276 | 5860 | 3046 | 1667 | 988 | 600 | 1187 | 101 | 172,212 |

2005 | 21,142 | 48,548 | 36,969 | 20,472 | 9898 | 5076 | 2674 | 1529 | 816 | 502 | 920 | 95 | 148,641 |

2004 | 17,432 | 44,318 | 34,327 | 19,288 | 9215 | 4886 | 2506 | 1409 | 784 | 464 | 800 | 51 | 135,480 |

2003 | 15,748 | 41,186 | 33,680 | 18,483 | 8816 | 4369 | 2284 | 1303 | 711 | 405 | 716 | 50 | 127,751 |

2002 | 20,577 | 44,620 | 33,880 | 18,639 | 9277 | 4479 | 2423 | 1344 | 759 | 450 | 782 | 71 | 137,301 |

2001 | 26,648 | 48,975 | 33,782 | 17,927 | 8591 | 4335 | 2306 | 1216 | 719 | 397 | 846 | 85 | 145,827 |

2000 | 27,360 | 47,646 | 31,033 | 16,335 | 7849 | 3956 | 2066 | 1079 | 639 | 369 | 848 | 71 | 139,251 |

1999 | 28,297 | 46,957 | 30,021 | 14,918 | 7129 | 3564 | 1847 | 1041 | 604 | 351 | 704 | 90 | 135,523 |

1998 | 26,032 | 47,612 | 29,601 | 14,690 | 6666 | 3336 | 1788 | 901 | 538 | 323 | 664 | 82 | 132,233 |

1997 | 28,134 | 46,665 | 28,173 | 13,548 | 6072 | 2959 | 1459 | 860 | 476 | 284 | 599 | 76 | 129,305 |

1996 | 26,989 | 45,492 | 27,389 | 12,764 | 5772 | 2821 | 1447 | 826 | 436 | 261 | 615 | 69 | 124,881 |

Appendix 17: Physics and astronomy; reviews; number of references

Year | 0–9 | 10–19 | 20–29 | 30–39 | 40–49 | 50–59 | 60–69 | 70–79 | 80–89 | 90–99 | 100–199 | 200–299 | Total |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

2019 | 358 | 287 | 364 | 348 | 387 | 402 | 374 | 346 | 331 | 318 | 2190 | 598 | 6303 |

2018 | 163 | 324 | 342 | 369 | 342 | 330 | 335 | 285 | 292 | 300 | 1767 | 526 | 5375 |

2017 | 154 | 317 | 354 | 337 | 384 | 381 | 342 | 332 | 316 | 281 | 1644 | 434 | 5276 |

2016 | 168 | 346 | 415 | 378 | 365 | 342 | 283 | 297 | 267 | 220 | 1290 | 339 | 4710 |

2015 | 120 | 274 | 307 | 324 | 299 | 299 | 243 | 242 | 195 | 230 | 1109 | 268 | 3910 |

2014 | 186 | 296 | 376 | 339 | 336 | 263 | 257 | 257 | 217 | 195 | 1214 | 272 | 4208 |

2013 | 201 | 302 | 354 | 315 | 309 | 290 | 223 | 218 | 186 | 183 | 951 | 238 | 3770 |

2012 | 299 | 685 | 749 | 565 | 475 | 383 | 312 | 256 | 251 | 214 | 981 | 215 | 5385 |

2011 | 237 | 409 | 455 | 421 | 341 | 278 | 262 | 244 | 151 | 183 | 740 | 155 | 3876 |

2010 | 158 | 261 | 270 | 214 | 227 | 193 | 157 | 138 | 141 | 117 | 598 | 140 | 2614 |

2009 | 118 | 195 | 237 | 185 | 173 | 146 | 122 | 106 | 123 | 113 | 453 | 104 | 2075 |

2008 | 179 | 218 | 201 | 196 | 180 | 129 | 128 | 115 | 95 | 80 | 373 | 103 | 1997 |

2007 | 332 | 391 | 261 | 236 | 169 | 183 | 128 | 107 | 99 | 79 | 384 | 86 | 2455 |

2006 | 575 | 726 | 548 | 423 | 359 | 258 | 176 | 153 | 135 | 71 | 397 | 107 | 3928 |

2005 | 563 | 839 | 620 | 519 | 343 | 232 | 182 | 124 | 111 | 90 | 441 | 104 | 4168 |

2004 | 492 | 788 | 477 | 348 | 268 | 234 | 152 | 132 | 115 | 75 | 416 | 100 | 3597 |

2003 | 429 | 644 | 538 | 438 | 306 | 252 | 159 | 137 | 114 | 79 | 350 | 95 | 3541 |

2002 | 421 | 702 | 366 | 247 | 153 | 144 | 105 | 77 | 71 | 51 | 252 | 92 | 2681 |

2001 | 378 | 642 | 261 | 159 | 128 | 100 | 90 | 65 | 64 | 40 | 225 | 70 | 2222 |

2000 | 197 | 289 | 200 | 185 | 123 | 101 | 61 | 53 | 46 | 48 | 187 | 59 | 1549 |

1999 | 242 | 296 | 226 | 142 | 84 | 56 | 43 | 38 | 35 | 36 | 154 | 61 | 1413 |

1998 | 229 | 373 | 228 | 144 | 90 | 62 | 37 | 39 | 35 | 26 | 145 | 41 | 1449 |

1997 | 163 | 249 | 184 | 118 | 85 | 48 | 55 | 39 | 30 | 29 | 158 | 53 | 1211 |

1996 | 231 | 402 | 240 | 140 | 86 | 64 | 42 | 34 | 33 | 24 | 145 | 42 | 1483 |
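
The raw counts in these appendices can be converted into interval ratios, that is, the share of each reference-count interval in a given year's total, which is what the paper reports instead of averages. The following is a minimal sketch in Python and not the authors' own procedure: the names `INTERVALS`, `COUNTS`, `interval_shares`, and `share_change` are hypothetical, and the two rows are copied from the 1996 and 2019 lines of Appendix 17.

```python
from typing import Dict, List

# Interval labels as they appear in the appendix table headers.
INTERVALS: List[str] = [
    "0–9", "10–19", "20–29", "30–39", "40–49", "50–59",
    "60–69", "70–79", "80–89", "90–99", "100–199", "200–299",
]

# Counts copied from Appendix 17 (Physics and astronomy; reviews).
COUNTS: Dict[int, List[int]] = {
    1996: [231, 402, 240, 140, 86, 64, 42, 34, 33, 24, 145, 42],
    2019: [358, 287, 364, 348, 387, 402, 374, 346, 331, 318, 2190, 598],
}


def interval_shares(counts: List[int]) -> List[float]:
    """Return each interval's share of the row total, in percent."""
    total = sum(counts)
    if total == 0:
        raise ValueError("row total is zero; shares are undefined")
    return [100.0 * c / total for c in counts]


def share_change(earlier: List[int], later: List[int]) -> List[float]:
    """Return the change in share per interval, in percentage points."""
    before = interval_shares(earlier)
    after = interval_shares(later)
    return [b - a for a, b in zip(before, after)]


if __name__ == "__main__":
    deltas = share_change(COUNTS[1996], COUNTS[2019])
    for label, delta in zip(INTERVALS, deltas):
        print(f"{label:>8}: {delta:+.1f} percentage points")
```

Working with shares rather than counts makes years with very different totals comparable, since each row is normalized by its own total before the intervals are compared.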

Appendix 18: Physics and astronomy; notes; number of references

Year | 0–9 | 10–19 | 20–29 | 30–39 | 40–49 | 50–59 | 60–69 | 70–79 | 80–89 | 90–99 | 100–199 | 200–299 | Total |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

2019 | 305 | 236 | 68 | 38 | 17 | 9 | 5 | 5 | 2 | 0 | 5 | 1 | 691 |

2018 | 219 | 206 | 65 | 30 | 19 | 13 | 6 | 0 | 2 | 1 | 2 | 1 | 564 |

2017 | 248 | 163 | 54 | 21 | 25 | 13 | 8 | 2 | 2 | 3 | 3 | 0 | 542 |

2016 | 295 | 213 | 83 | 40 | 20 | 12 | 4 | 3 | 1 | 1 | 3 | 0 | 675 |

2015 | 275 | 181 | 85 | 36 | 21 | 9 | 2 | 3 | 0 | 0 | 3 | 2 | 617 |

2014 | 316 | 202 | 47 | 21 | 3 | 7 | 5 | 3 | 0 | 0 | 1 | 0 | 605 |

2013 | 352 | 185 | 49 | 25 | 6 | 1 | 0 | 1 | 1 | 1 | 2 | 0 | 623 |

2012 | 385 | 150 | 31 | 15 | 5 | 3 | 2 | 1 | 0 | 1 | 0 | 0 | 593 |

2011 | 354 | 159 | 46 | 24 | 0 | 1 | 1 | 1 | 0 | 0 | 0 | 0 | 586 |

2010 | 385 | 106 | 34 | 12 | 5 | 2 | 2 | 2 | 1 | 0 | 0 | 0 | 549 |

2009 | 357 | 119 | 30 | 17 | 3 | 2 | 0 | 1 | 0 | 0 | 0 | 0 | 529 |

2008 | 292 | 95 | 30 | 10 | 2 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 430 |

2007 | 371 | 105 | 21 | 2 | 4 | 1 | 1 | 0 | 1 | 0 | 0 | 0 | 506 |

2006 | 313 | 91 | 21 | 5 | 4 | 2 | 0 | 0 | 1 | 1 | 0 | 0 | 438 |

2005 | 121 | 20 | 7 | 3 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 152 |

2004 | 167 | 42 | 7 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 219 |

2003 | 127 | 48 | 13 | 6 | 3 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 198 |

2002 | 188 | 62 | 13 | 6 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 272 |

2001 | 109 | 35 | 12 | 2 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 159 |

2000 | 129 | 33 | 6 | 2 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 172 |

1999 | 118 | 33 | 13 | 4 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 169 |

1998 | 166 | 29 | 4 | 3 | 1 | 1 | 0 | 0 | 1 | 1 | 0 | 0 | 206 |

1997 | 104 | 27 | 7 | 6 | 2 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 148 |

1996 | 190 | 38 | 8 | 3 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 241 |

Appendix 19: Social sciences; journal articles; number of references

Year | 0–9 | 10–19 | 20–29 | 30–39 | 40–49 | 50–59 | 60–69 | 70–79 | 80–89 | 90–99 | 100–199 | 200–299 | Total |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

2019 | 8234 | 20,421 | 29,481 | 35,820 | 35,636 | 29,924 | 22,051 | 14,910 | 9670 | 6017 | 10,464 | 312 | 222,940 |

2018 | 9359 | 19,268 | 27,436 | 31,558 | 29,970 | 24,178 | 17,147 | 11,585 | 7279 | 4705 | 8012 | 280 | 190,777 |

2017 | 9364 | 19,172 | 25,288 | 29,170 | 26,884 | 21,605 | 15,140 | 10,038 | 6333 | 3908 | 6973 | 329 | 174,204 |

2016 | 9899 | 19,070 | 25,164 | 26,817 | 24,921 | 19,282 | 13,645 | 8993 | 5770 | 3685 | 6814 | 426 | 164,486 |

2015 | 10,397 | 19,548 | 24,942 | 26,019 | 23,473 | 18,147 | 12,519 | 8367 | 5357 | 3282 | 6734 | 455 | 159,240 |

2014 | 10,635 | 19,811 | 24,956 | 25,717 | 22,408 | 17,223 | 11,908 | 7729 | 4981 | 3257 | 6796 | 589 | 156,010 |

2013 | 12,063 | 20,016 | 23,705 | 23,867 | 20,703 | 15,618 | 10,674 | 7046 | 4663 | 3040 | 6793 | 710 | 148,898 |

2012 | 11,543 | 19,538 | 22,337 | 21,775 | 18,239 | 13,362 | 9196 | 6059 | 3911 | 2476 | 5743 | 593 | 134,772 |

2011 | 10,913 | 17,950 | 20,876 | 19,913 | 16,919 | 12,101 | 8457 | 5348 | 3496 | 2322 | 5388 | 712 | 124,395 |

2010 | 9893 | 16,789 | 19,075 | 18,244 | 14,702 | 10,858 | 7234 | 4863 | 3262 | 2111 | 5102 | 737 | 112,870 |

2009 | 10,189 | 16,220 | 18,093 | 16,953 | 13,324 | 9594 | 6371 | 4097 | 2671 | 1827 | 4373 | 650 | 104,362 |

2008 | 9274 | 14,626 | 16,106 | 14,771 | 11,848 | 8313 | 5698 | 3551 | 2362 | 1587 | 3807 | 449 | 92,392 |

2007 | 8208 | 13,230 | 14,550 | 13,066 | 10,240 | 7197 | 4911 | 2984 | 2031 | 1310 | 3210 | 418 | 81,355 |

2006 | 7172 | 11,786 | 12,718 | 11,247 | 8454 | 6144 | 3904 | 2572 | 1684 | 1112 | 2713 | 270 | 69,776 |

2005 | 7224 | 10,511 | 10,937 | 9412 | 7039 | 4860 | 3177 | 2065 | 1356 | 864 | 1757 | 175 | 59,377 |

2004 | 6891 | 10,124 | 10,307 | 8709 | 6488 | 4394 | 2751 | 1791 | 1115 | 725 | 1653 | 147 | 55,095 |

2003 | 6694 | 9755 | 9917 | 8173 | 6110 | 4042 | 2669 | 1634 | 1110 | 700 | 1748 | 143 | 52,695 |