Abstract

Journal Citation Reports (JCR) and its journal ranking in terms of impact factor are highly influential in research evaluation. Comparisons of impact factor are valuable only when journals are of the same subject. However, a particular JCR subject category, Information Science and Library Science (IS–LS), combines two different study fields, namely Management Information Systems (MIS) and Library and Information Science (LIS). The combination of these subjects in a single category has caused the undesirable suppression of LIS journals in annual rankings. This study used papers and citation data from 88 IS–LS journals published between 2005 and 2014 to study subfield differences between MIS and LIS and their impact factor performances over 10 years. The study further examined the subfield differences within LIS, examining the differences and performances of library science, information science, and scientometric research. The results indicate that MIS and LIS are considerably different in terms of publishing and citation characteristics, cited subjects, and author affiliations. Moreover, significant differences were observed among LIS subfields. Furthermore, the results suggested that MIS and LIS pertain to two different research communities. Stakeholders must consider this difference and allow reasonable subfield differentiation and rank adjustment when using JCR for constructive research evaluations.


Introduction

Journal ranking is an influential indicator for evaluating the scholarly impact of journal articles (Lowry et al. 2013). Publication in top-ranked journals can considerably benefit the evaluation of the research performance of scholars, thereby providing them with advantages in promotion, rewards, and funding opportunities (Campanario and Cabos 2014). Journal Citation Reports (JCR), a well-established annual publication, was originally published by the Institute for Scientific Information and is now published by Clarivate Analytics. It is an internationally influential journal ranking system for research evaluation (Eisenberg and Wells 2014). JCR classifies journal subjects into more than 240 categories. A journal can be classified into multiple subject categories and is annually ranked with its peer journals from the same category based on different performance measures including impact factor (IF), which is the most crucial indicator for journal evaluation (García et al. 2012).

According to JCR, its subject categorization is based on objective analyses of citation patterns and citation relations among the considered journals (Boyack et al. 2005). However, studies have disputed the appropriateness of JCR subject categorization (Levitt and Thelwall 2009; Levitt et al. 2011; Bensman and Leydesdorff 2009; Leydesdorff et al. 2010; Leydesdorff 2006). Researchers have expressed concerns regarding inappropriate subject classification in JCR because of the possible negative consequences. Comparisons of journal IF values are meaningful only when the journals belong to the same subject (Jacsó 2012). Problems occur when two or more subjects are collocated within a single subject category. The different academic cultures and citation practices of those subjects may obfuscate the comparisons of journals and reduce the validity of journal rankings.

One particular category, “Information Science and Library Science” (IS–LS), has been subjected to several such disputes (Abrizah et al. 2015; Larivière et al. 2012; Tseng and Tsay 2013; Waltman and van Eck 2012). The IS–LS category comprises two apparently related yet distinct subjects, namely Library and Information Science (LIS) and Management Information Systems (MIS). Although the two study fields are associated with “information,” MIS research primarily focuses on the development and applications of information systems and technologies in business organizations. The intellectual foundation and research concerns of MIS are considerably different from those of LIS. The two subjects correspond with two different research communities (Larivière et al. 2012; Tseng and Tsay 2013). Moreover, LIS involves the integration of library science (LS) and emergent, interdisciplinary information science (IS) (Prebor 2010; Warner 2001). Recently, the transformation of traditional bibliometrics further engendered a growing, distinct field of study termed scientometrics (SM). The IS–LS category can thus be considered to contain two key study areas (MIS and LIS) or four subfields (i.e., MIS, LS, IS, and SM).

Although LIS and MIS are different, the extent of the differences between the two fields in terms of their publishing and citation practices and author bases has not been empirically examined using comprehensive and longitudinal data. Furthermore, the number of IS–LS journals has increased in recent years (Abrizah et al. 2015; Ni et al. 2013a, b). The performance of MIS and LIS journals in annual rankings and the irrational comparisons due to the collocation of the two subjects in one category require examination. In this study, comprehensive, longitudinal analyses were conducted of all journals included in the IS–LS category for 10 years (2005–2014). The analyses focused on the following questions:

1. How different were MIS and LIS in terms of their paper and citation characteristics? Furthermore, how different were the subdivisions of LIS, namely LS, IS, and SM?
2. How different were MIS and LIS in terms of their knowledge foundations, that is, the cited subjects? How different were the three LIS subfields?
3. How different were MIS and LIS in terms of their author bases observed from author affiliations? How different were the three LIS subfields?
4. How did MIS and LIS journals perform in the annual IF-based rankings? How were the performances of the three LIS subfields?

Problems of JCR subject categorization

The JCR Subject Category is a crucial classification system for academia because of its influence in research evaluation. However, approaches used by the system for categorizing academic subjects are not free of criticism. Disputes have occurred in terms of the increasing usage of JCR journal rankings in assessing research performance (Jacsó 2012). For example, the evolving nature of subjects may create problems. The classification of a journal may become inappropriate over time because of changes in journal content or academic disciplinary structures (Leydesdorff 2007). Comparisons of research performance require subject classification to be stable and complete; however, the JCR Subject Category may not necessarily provide a stable and complete classification (Levitt and Thelwall 2009; Levitt et al. 2011).

Self-claimed objectivity by JCR is another problem. Those involved in JCR have claimed that its subject categories were developed based on objective analyses of citation relations among SCI and SSCI journals (Boyack et al. 2005). However, Pudovkin and Garfield (2002) indicated that the current JCR subject categorizations are rooted in subjective, manual classification practices from the 1960s. The current classification of JCR journals is a combination of semiautomatic procedures and manual judgments. The resulting classifications may be less objective and reasonable than claimed.

A concern regarding the appropriateness and applicability of JCR subject categorization relates to the conglomerating of some heterogeneous subjects into one category (Borgman and Rice 1992; Harzing 2013). The IS–LS category has been the most disputed category. For example, Abrizah et al. (2013) used the Library of Congress Classification (LCC) to classify 79 JCR IS–LS journals and indicated that 22 did not belong to subclass Z [Bibliography, Library Science, Information Resources (General)]. Moreover, the study indicated that only 46 of the journals were included in Scopus under the subject of LIS. The IS–LS category thus comprised a significant number of non-LIS journals. The study also highlighted the deficiencies of both JCR and LCC in differentiating the subfields in the IS–LS domain; LCC is old and time-bound and thus is even more inadequate in representing the subfield differences of contemporary IS–LS scholarship.

Abrizah et al. (2015) surveyed 234 authors and editors who had published in IS–LS journals on their preferred categorization of 83 IS–LS journals from the 2011 edition of JCR. Four subject options were provided to the participants: information science, library science, information systems (ISys), and do not know/undecided. On the basis of popularity, the study categorized 39 titles to LS, 23 to IS, and 21 to ISys (equivalent to MIS in this study). Their study showed that MIS authors who published in ISys journals were generally unaware that ISys journals were classified under the IS–LS category. This suggests a gap between JCR subject categorization and subjective perceptions of researchers.

Other researchers have employed different methods to study the IS–LS categorization problem. Subfield distinction based on journal similarities is a popular approach. Journal similarities can be observed from different angles; similarity of the journals’ author base is one of them. Ni et al. (2013a, b) employed venue-author-coupling (VAC), a metric that indicates the similarity of author profiles of two research publications, to study the subfield differences within IS–LS in JCR. Inspired by bibliographic coupling, they compared journal similarity based on the co-presence of authors in two publication venues. Their analysis revealed four distinct subfields, including Management Information Systems, specialized information and library science, library science focused (practice-oriented), and information science focused (research-oriented).

Content similarity is another popular angle to examine journal similarity. Bibliographic coupling, cocitation analysis, and coword analysis are frequently used methods to study content similarity. Sugimoto et al. (2008) conducted a cocitation analysis of 16 MIS and 15 LIS journals. Their analyses revealed that LIS and MIS had different research objectives. They also revealed that only four MIS and three LIS journals were related to each other. Wang and Wolfram (2015) conducted bibliographic coupling and co-citation analyses on papers by 40 high-impact IS–LS journals from the 2011 edition of JCR. The results revealed that MIS and LIS were indeed two distinct clusters. Tseng and Tsay (2013) performed bibliographic coupling and coword analyses on articles in IS–LS journals published between 2000 and 2009. The results indicated that the journals could be categorized into five subfields, namely IS, LS, SM, MIS, and other marginally related topics. The MIS cluster in particular was different from the other subfields. Related studies have confirmed that LIS and MIS are two different research communities, and that they do not have substantial interactions.

Ni et al. (2013a) incorporated both author similarity and content similarity in their study of journal differences and subfield distinction. They examined the IS–LS subfields with the aforementioned VAC metrics, along with three additional measures: shared editorial board membership, journal cocitation analysis, and topic modeling that measured topic similarity based on author–conference–topic correlations. With the composite use of the four facets, they identified three subclusters (i.e., MIS, LS, and IS) in 58 IS–LS journals. The results also indicate that the MIS cluster was systematically distinct from the LS and IS clusters along all of the four facets.

When the two distinct study fields are categorized into one subject category, comparisons of markedly diverse items occur. In particular, if two study fields have different publishing patterns, citation practices, author groups, and other factors that may systematically influence IF values, the collocation of these subjects can be detrimental because one of the subjects may systematically suppress the other (Abrizah et al. 2015).

Moreover, LIS itself is a highly heterogeneous subject, and certain trends are contributing to the widening gap within the study domain. First, the distinction between practitioner and non-practitioner authors constitutes a major factor for the varying research practices in LIS. Finlay et al. (2013) reported a tension between library practitioner and non-practitioner authors based on content analysis of 4827 peer-reviewed articles from twenty LIS journals. Between 1956 and 2011, distinct differences in research subject matters existed between the two groups of authors. Walter and Wilder (2016) also found a rather diverse author population in 31 LIS journals. Analyzing papers published between 2007 and 2012, they found that faculty in LIS departments accounted for only 31% of the journal literature, while librarians constituted 23%. Other authors included faculty from computer science departments and management departments, each accounting for 10%.

The evolving research topics also contributed to the widening gap within LIS. Tuomaala et al. (2014) analyzed the paper topics in LIS journals across 1965, 1985, and 2005 and found that, between 1965 and 1985, there were only moderate changes in the topics, methods, and approaches in LIS research. But after 1985, radical changes took place. Studies on LIS service activities diminished, whereas research in information retrieval, information seeking, and scientific communication grew. Their findings concurred with Milojević et al. (2011) in that library science, scientometrics, and information science are still three main fields under LIS. But the evolving shares of research topics over the years showed a shift from library science to information science and scientometrics. This shift likely affects the relative citation impact of those subfields.

Although studies have offered systematic observations and scientific evidence on the differences between MIS and LIS or within LIS, these observations are limited because of their short time span (e.g., Abrizah et al. 2013; Abrizah et al. 2015; Ni et al. 2013a) or small number of sample journals (e.g., Sugimoto et al. 2008; Wang and Wolfram 2015). Author affiliations have not been previously studied and compared. Furthermore, subfield differences within LIS have not been examined. Therefore, this study aimed to perform a longitudinal, comprehensive analysis based on all JCR IS–LS journals from 2005 to 2014. The analysis systematically compared the papers and reference characteristics, cited subjects, and author affiliations of those subfields to observe subfield differences and systemic suppression in the IS–LS category.

Research methods

In this study, annual lists from JCR were used to identify journals in order to obtain papers and citations to be used. Data collection and processing procedures are as follows:

Identification of source journals and paper records

The source journals are those that had been included in the IS–LS category during 2005–2014. In this study, 92 journal titles were included in this category, and two were purged from the analyses because the Web of Science (WoS) discontinued indexing them after 2006. Another two journals continued with new titles during the study period and were thus merged with the new titles. This study analyzed 88 source journals (“Appendix”). The WoS was searched to identify 96,001 papers labeled as “article” and published by these source journals between 2005 and 2014. These papers included 1,219,196 reference entries and 351,404 references to identifiable journals.

Subject classification of source journals

To determine subfield differences within the IS–LS category, this study first differentiated between MIS and LIS journals. The LIS journals were further subdivided into three subfields: LS, IS, and SM. To avoid the double counting of journals that may obscure subsequent analyses, each journal was only classified into one subfield. Abrizah et al. (2015) constituted the major reference source for our journal classification; the results of their study revealed the IS–LS authors’ subjective perceptions of journal categorization for the IS–LS journals. However, some journals in our sample were not included in their study. Moreover, because we wanted to observe the current three main LIS subfields (Milojević et al. 2011; Tuomaala et al. 2014), adjustment to the classification was necessary. We consulted different journal classification sources to assist our judgment, including the Global Serials Directory in Ulrichsweb, SCImago subject classification, and the US Library of Congress catalog. The definitions of the four journal groups are as follows (see “Appendix” for the classification result):

- MIS (25 journals) includes all journals that were classified by Abrizah et al. (2015) as of “information systems”; journals that were absent in their list but were classified by the Library of Congress as being outside subclass Z or by SCImago as being outside the LIS category are also added to this group. One particular journal, the International Journal of Information Management, was classified by Abrizah et al. (2015) as of “information science”. But it was classified by the Library of Congress as being outside subclass Z, which denotes bibliography, library science, and information resources. We thus relocated it into this group.
- LS (34 journals) includes the journals that had been classified by Abrizah et al. (2015) as of “library science” except five particular journals whose content scope during our studied period had actually departed from librarianship concerns and focused more on broader information science issues (see “Appendix” for the titles); they were thus relocated to the information science group. One particular journal was absent in Abrizah et al. (2015), the Law Library Journal. It was added to this group for its obvious library science orientation.
- IS (26 journals) includes the journals that had been classified by Abrizah et al. (2015) as of “information science” except three particular journals whose content scope was predominantly on scientometrics, plus five journals, as aforementioned, that were classified by Abrizah et al. (2015) as library science journals but actually had focused on the broader information science issues.
- Scientometrics (three journals) includes three journals whose titles and content scope are explicitly based on this particular topic.

Subject classification of cited journal references

To observe differences in intellectual foundations of the subfields, this study classified the references to journals for cited subject comparisons. The subject classification of the references was based on journal titles, where 351,404 journal references together referred to 20,876 journals. However, not all of the referenced journals were included in WoS. Because of the difficulty and time required to verify all journal titles and to classify them, we manually compared the 20,876 journals to the 2015 version of the JCR journal list. Only those referencing the 2015 JCR journals were retained for the subsequent analyses. As a result, only 154,523 references were retained for our study sample. We used a self-developed subject scheme that condenses and simplifies JCR categories to classify the referenced journals (Table 1). To sensitize subfield observations between MIS and LIS, we subdivided the JCR IS–LS category into four subject areas by using the same principles as described in the previous section.
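The retention step described above can be pictured as a simple membership filter. The sketch below is only an illustration under stated assumptions: the study compared titles manually, whereas this code matches titles automatically, and the record layout (`{"journal": ...}`), the function name, and the sample titles are hypothetical. The last two lines use the counts reported in the text.

```python
def retain_jcr_references(references, jcr_titles):
    """Keep only references whose cited journal title appears in the JCR list."""
    # Case-insensitive matching is an assumption; real title matching needs
    # authority control for abbreviations and title changes.
    jcr = {title.casefold() for title in jcr_titles}
    return [ref for ref in references if ref["journal"].casefold() in jcr]

# Hypothetical sample data, for illustration only.
references = [
    {"journal": "Journal A"},
    {"journal": "journal b"},
    {"journal": "Unlisted Newsletter"},
]
jcr_titles = ["Journal A", "Journal B"]
retained = retain_jcr_references(references, jcr_titles)

# Share of journal references retained, from the counts reported in the text.
retained_share = 154_523 / 351_404 * 100
print(f"{retained_share:.1f}% of journal references retained")
```

With the reported counts, a little under half of the journal references enter the cited-subject analysis, which is worth keeping in mind when reading the distributions that follow.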

Classification of the author affiliations

This study determined author affiliations based on address data available in paper records. If a paper had multiple institutional addresses in its record, those institutions were all considered institutional authors of the paper regardless of the actual number of human authors. Authority control was implemented to ascertain that papers generated from the same institution but labeled with different forms of institutional names were combined. The institutions were classified based on Table 1, which contains 18 subject categories. Classification was based on institution names and institutional websites. Unlike the classification of journal references, the classification of author affiliations only differentiated MIS and LIS departments. We did not further distinguish LIS institutions into the smaller subfields of LS, IS, and SM because this was difficult for most institutions. If an institution appeared to focus mostly on information systems and operations for managerial purposes, it was classified as an MIS institution. By contrast, if an institution demonstrated a significant proportion of research in information science issues outside Management Information Systems, it was considered an LIS institution. The current iSchools members, the American Library Association accredited schools, and different libraries and archives are typical LIS institutions. For the classification of non-information research institutions, if one seemed to address more than two subject categories as in Table 1, it was classified into the first subject as suggested by its institution name or by the first subject mentioned on its website.
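The institution-level counting rule can be sketched in a few lines. This is a minimal illustration rather than the procedure actually used in the study: the `AUTHORITY` lookup table, the function names, and the sample addresses are all hypothetical, and real authority control involves far more name variants.

```python
from collections import Counter

# Hypothetical authority file: normalized variant name -> canonical name.
AUTHORITY = {
    "univ a, sch informat": "University A, School of Information",
    "university a school of information": "University A, School of Information",
    "univ b, business sch": "University B, Business School",
}

def canonical(name: str) -> str:
    """Map a raw address string to its canonical institution name."""
    key = " ".join(name.lower().split())
    return AUTHORITY.get(key, name.strip())

def count_institutional_authors(papers):
    """Count each distinct institution once per paper, regardless of how
    many human authors share that address."""
    counts = Counter()
    for addresses in papers:
        # The set removes repeats of one institution within a paper.
        counts.update({canonical(address) for address in addresses})
    return counts

# Hypothetical records: each paper is a list of address strings.
papers = [
    ["Univ A, Sch Informat", "University A School of Information"],
    ["Univ A, Sch Informat", "Univ B, Business Sch"],
]
counts = count_institutional_authors(papers)
```

In this toy input, the first paper lists the same institution under two name forms, so it contributes one institutional author, not two.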

Findings

Characteristics of publishing and citations in four IS–LS subfields

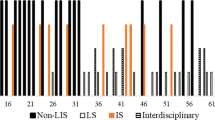

Figure 1 presents the chronological distribution of the number of journals and papers in the four subfields. This figure indicates that LIS, including LS, IS, and SM, constituted the largest proportion of the IS–LS journals and papers. LS and IS had the highest contributions to journals and papers, and their paper production decreased slightly over the studied 10-year period. By contrast, MIS journals published a disproportionately small number of papers every year, which increased slightly. However, one should remember that MIS researchers also publish in journals outside the IS–LS category, for instance, in computer science and management journals. Our observation within the IS–LS category has therefore very likely underestimated the actual MIS paper production, and we suspect that the extent of underestimation far exceeds that for LIS.

Table 2 presents the distribution of papers and references based on the original and adjusted data. The distribution based on the original data is slightly misleading because of a particular LS journal (Library Journal) and three IS journals (i.e., EContent, Online, and Scientist). These publications were professional trade magazines, not research journals: they published numerous non-research articles with few or no references. To focus on research journals, this study eliminated these four publications and observed distributions based on the adjusted data.

On the basis of the adjusted data, the distribution of MIS and LIS journals was approximately 3:7 (29.76% vs. 70.24%), whereas the distribution of MIS and LIS papers was 2:8 (18.40% vs. 81.60%). LIS journals published more papers than MIS journals, indicating that research paper production in LIS was more intense than in MIS. Furthermore, in a comparison of MIS with the two larger LIS subfields (LS and IS), the ratio of journal distribution for MIS, LS, and IS was approximately 3:4:3, whereas the ratio of their paper distribution was approximately 2:4:3.5. MIS had a considerably lower publishing intensity than the other two fields.
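The comparison of journal shares and paper shares can be made explicit with a small calculation. The index below (paper share divided by journal share) is an illustrative construct introduced here, not a measure used in the study; the percentages are the adjusted figures quoted in the paragraph above.

```python
# Shares (percent) from the adjusted data reported in the text.
journal_share = {"MIS": 29.76, "LIS": 70.24}
paper_share = {"MIS": 18.40, "LIS": 81.60}

def intensity(field: str) -> float:
    """Paper share divided by journal share.

    A value of 1.0 would mean a field's papers are proportional to its
    journals; below 1.0 means fewer papers per journal than average.
    """
    return paper_share[field] / journal_share[field]

mis_intensity = intensity("MIS")
lis_intensity = intensity("LIS")
relative = lis_intensity / mis_intensity

print(f"MIS: {mis_intensity:.2f}, LIS: {lis_intensity:.2f}, "
      f"LIS/MIS: {relative:.2f}")
```

Under this index, an average LIS journal published a little under twice as many papers as an average MIS journal over the study period.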

MIS journals and papers employed a different citation practice from that of LIS. The adjusted data indicated that MIS references accounted for 32.11% of the total references. Each MIS paper cited an average of 49.67 references, which is approximately twice that of LIS (49.67 vs. 14.42). Moreover, it is considerably higher than those of the three LIS subfields. The MIS citation practice may have partially contributed to the constantly high IF values of the MIS journals as shown in the following section. By contrast, LS was characterized by higher paper production but lower citation usage than all other subfields, and it consistently had the lowest IF values.

Cited subjects of four IS–LS subfields

Table 3 and Fig. 2 show the distributions of the top five subjects in the four subfields. MIS was different from LIS regardless of whether the latter was considered one category or classified into three subfields. First, MIS cited few LIS journals. The references to LIS journals accounted for only 4.17% of total references, and LIS was not among the five most cited subjects. By contrast, LIS as a complete field or as three subfields referenced the highest proportion of LIS journals. Second, LIS was characterized by subject self-citation, whereas the most cited subject area in MIS was computer science and not MIS. Third, the five most cited subjects in MIS were evenly distributed. By contrast, distribution was highly concentrated for the two most cited subjects in LIS. The first and second most cited subjects (LIS and computer science) accounted for one-third to half of the references in the LIS subfields. These findings indicate that MIS and LIS are considerably different.

In LIS subfields, IS and SM were similar in terms of cited subject distributions, whereas LS was evidently different from the other subfields. First, LS was the most self-cited subject at the aggregate level (LIS as a complete field) and subfield levels (LIS divided into three subfields). Compared to the other LIS subfields, LS cited the highest number of LIS references (37.29%) and LS references (24.06%). Second, LS cited the lowest number of “other” references among the three subfields. These findings indicate that LS scholars represent a more closed community than those of IS and SM in terms of referencing outside knowledge. Third, the five most cited subjects in IS and SM were exactly the same although the order was slightly different. LS was different from IS and SM because it referenced social sciences more than management; however, the difference was small.

Pearson tests showed that distributions of the cited subjects (divided into 18 subjects as in Table 1) in LS and IS were moderately correlated (.469, p < .05). Distributions of IS and SM were highly correlated (.624, p < .01). By contrast, for MIS, distributions were not correlated to those of the other subfields. Chi square tests using the 10 most cited subjects (nine most cited subjects and all others) in the four subfields further showed that the four subfields were significantly different (p < .001; Table 4). Cramer’s V values reflected a strong association between subfield distinction and cited subjects when MIS was compared with other subfields (from .355 to .484). This association indicates that MIS was different from LIS. By contrast, the effect of subject distinction was weaker among the three LIS subfields; however, significant differences were observed in their cited subjects.
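For readers unfamiliar with Cramér's V, the following sketch shows how it is derived from a chi-square statistic on a subfield-by-subject contingency table. It is a plain-Python illustration, not the software used in the study, and the counts in `table` are hypothetical, not taken from Table 4.

```python
import math

def chi_square(table):
    """Pearson chi-square statistic for a contingency table (list of rows).

    Returns the statistic together with the total count n.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    return chi2, n

def cramers_v(table):
    """Cramér's V = sqrt(chi2 / (n * (min(rows, cols) - 1)))."""
    chi2, n = chi_square(table)
    k = min(len(table), len(table[0])) - 1
    return math.sqrt(chi2 / (n * k))

# Hypothetical counts: rows are two subfields, columns are three cited subjects.
table = [
    [50, 30, 20],
    [20, 30, 50],
]
v = cramers_v(table)
print(f"Cramér's V = {v:.3f}")
```

V ranges from 0 (identical distributions across rows) to 1 (complete separation), which is why it is useful alongside a chi-square test: with very large reference counts, even small differences reach significance, while V indicates how strong the association is.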

Author affiliations of four IS–LS subfields

Table 5 and Fig. 3 present distributions of the five most cited author groups in the four subfields. MIS was evidently different from LIS. First, for LIS as a complete subject and as three subfields, related institutions comprised the most cited author group. However, different observations were made for MIS. Second, the first most cited and second most cited author groups suggested that MIS research was highly business- and management-oriented. MIS institutions constituted a relatively small author base for MIS journals. This observation suggests that MIS journals are an extended publication venue for business and management scholars whose research involves information systems and technologies. By contrast, the composition of author groups for the LIS journals evidently exhibited no such characteristics. Economics and business authors constituted an inconsequential proportion. Third, for the MIS journals, the LIS institutions constituted the fourth most cited author group; however, MIS institutions were not within the five most cited author groups for the LIS subfields. This indicates a unidirectional relationship between MIS and LIS. Although some LIS researchers participated in MIS-oriented research, LIS journals were not a primary publication venue for MIS researchers. This result is consistent with that of Abrizah et al. (2015), indicating that most MIS researchers were unfamiliar with the IS–LS category and LIS journals in that category.

In the three LIS subfields, marked differences were observed in their distributions. First, LS was considerably different from IS and SM because it exhibited an extremely high number of authors from LIS institutions (the most cited group; 87.92%). This observation indicates that LS researchers represented a considerably confined research community. By contrast, IS and SM were multidisciplinary. Second, although IS and SM were highly similar in terms of cited subject distributions, their author affiliation distributions exhibited a notable difference. IS had more authors from medical science and communication, whereas SM had more authors from social sciences and science backgrounds.

Pearson tests using all author groups indicated no significant correlation between the subfields. Chi square tests showed significant differences among the four subfields (p < .001; Table 6). All comparisons, excluding IS versus SM, indicated a strong association between subfield distinction and author affiliations. The tests reaffirmed that MIS and LIS were considerably different. Moreover, the three LIS subfields were considerably different from each other in terms of their author sources.

IF performance of the four IS–LS subfields

Table 7 shows the average annual IF values of the four subfields (5-year IF values for 2005–2007 were not available in JCR). Distributions indicate a predetermined hierarchy among those subfields. MIS systematically outperformed LIS in both 2- and 5-year IF. During 2006–2007, the 2-year IF values of MIS were twice those of LIS. Although the differences were reduced from 2008 because of the increased IF values of SM, MIS consistently had higher IF values than LIS. In the 5-year IF distribution, MIS was also higher than LIS. By contrast, LS was systematically suppressed in the IF rankings. For example, in a comparison of LS and IS, the annual 2-year IF values of IS were approximately twice those of LS. This difference is further increased in the 5-year IF. Moreover, a similar pattern was observed between IS and SM in the observed years. SM has dominated the IF rankings within the LIS as an emergent subfield since 2008.
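For reference, the 2-year and 5-year IF values compared here follow the standard JCR definitions, which the text uses without spelling out. The symbols below are notation introduced for this note: $C_y(t)$ is the number of citations received in year $y$ by items a journal published in year $t$, and $N(t)$ is the number of citable items the journal published in year $t$.

$$
\mathrm{IF}_{2}(y) = \frac{C_y(y-1) + C_y(y-2)}{N(y-1) + N(y-2)}, \qquad
\mathrm{IF}_{5}(y) = \frac{\sum_{k=1}^{5} C_y(y-k)}{\sum_{k=1}^{5} N(y-k)}
$$

Because both measures divide citations by the number of citable items, a subfield with few papers and long reference lists can plausibly reach higher values than one with many papers and short reference lists, even within the same JCR category.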

A t-test of the annual averaged IF values demonstrated a significant difference between MIS and LIS journals [test for equality of variances: F = 2.072, sig. = .154; t(86) = −4.089, p < .001]. A one-way analysis of variance (ANOVA) with Tamhane's T2 post hoc test of the four subfields further indicated significant differences between LS and MIS (MD = −1.046***, SE = .2006, p < .001) and between LS and IS (MD = −.4845*, SE = .1702, p < .05). This indicated that LS journals were consistently suppressed in the journal IF ranking.

The quartile distribution of the LIS versus MIS journals further indicates the systematic domination of MIS in IS–LS journal rankings. For each annual ranking, the IS–LS journals were first sorted by 2-year IF value and divided into four quartile groups. Figure 4 presents the Q1–Q4 journal numbers of the MIS journals versus LIS journals. The figure indicates that the number of LIS journals increased from Q1 to Q4 each year. By contrast, the number of MIS journals decreased. For most years, excluding 2011–2013, the number of MIS journals in Q4 was zero. MIS journals always appear far superior in the IS–LS category because of the lower IF values of the LIS journals, although the two are better viewed as separate fields.
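The sort-and-split procedure behind the quartile figure can be sketched as follows. This is an assumption-laden illustration: the rank-based split into four equal-sized groups is one plausible reading of the procedure, and the journals and IF values in `journals` are hypothetical, not data from the study.

```python
from collections import Counter

def quartile_counts(journals):
    """Sort journals by IF (descending), split the ranking into four
    equal-sized groups, and count journals per field in each group.

    journals: list of (field, impact_factor) tuples.
    """
    ranked = sorted(journals, key=lambda j: j[1], reverse=True)
    n = len(ranked)
    counts = {q: Counter() for q in ("Q1", "Q2", "Q3", "Q4")}
    for rank, (field, _) in enumerate(ranked):
        quartile = rank * 4 // n + 1  # rank 0 falls in Q1, the last rank in Q4
        counts[f"Q{quartile}"][field] += 1
    return counts

# Hypothetical one-year ranking, for illustration only.
journals = [
    ("MIS", 3.1), ("MIS", 2.4), ("LIS", 2.0), ("MIS", 1.8),
    ("LIS", 1.2), ("LIS", 0.9), ("LIS", 0.5), ("LIS", 0.3),
]
counts = quartile_counts(journals)
for quartile, by_field in counts.items():
    print(quartile, dict(by_field))
```

Repeating this for each annual ranking would give the per-year Q1 to Q4 counts by field that the quartile figure plots.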

Discussion and conclusion

MIS journals consistently rank highest among other journals in the JCR IS–LS category. This study further revealed that the LS journals consistently ranked the lowest over the study period. However, further investigations should be conducted to determine whether the ostensible superiority of MIS journals and consistent inferiority of LS journals are the result of the respective “real” impact on the same study field or that of different subfields.

The results of this study suggest that MIS and LIS journals differed in terms of papers and citations, cited subjects, and author affiliations. MIS was characterized by low paper production and a high number of references per paper. By contrast, LIS as a complete field, and LS in particular, were characterized by high paper production with a low number of references per paper. The intensive use of citations in MIS papers may have contributed to its higher average IF performance. In cited subjects, MIS and LIS were significantly different in their primary sources of knowledge. Their citation usages differed in terms of quality (referenced subjects) and quantity (percentages of uses). Furthermore, MIS and LIS differed in terms of author affiliations. The author base of MIS journals demonstrated a strong association with business and management fields. By contrast, LIS authorship was dispersed across a range of subject areas. All the comparisons in this study indicate that MIS and LIS are two different research communities, which is consistent with the results of other studies.

The collocation of the two subjects in the single IS–LS category has resulted in the consistent superiority of MIS journals and inferiority of LIS journals. The suppression of LIS journals must be discouraged. Moreover, this suppression may not necessarily benefit MIS scholarship. If the objective of research evaluation and journal ranking is to benchmark the performances of scholarly journals for continuous growth and improvement, the superficially superior rankings produce misleading evaluations of MIS journals. When a journal with average performance is lifted in the rankings by the presence of journals from a different subject field, it loses an opportunity for improvement.

A similar suppression was observed within LIS between LS and the other two subfields. However, the case of LS and its peer subfields is different from that of MIS versus LIS. Although LS journals showed strong and significant differences in their author base, differentiating library scientists from information scientists, individually or institutionally, is now difficult and impractical. The significant yet relatively weaker differences in the cited subjects further indicated that the LIS subfields share common attributes. However, the suppression of LS journals should not be overlooked. LS should be considered a specialization within LIS scholarship with a distinct topical focus on libraries and librarianship. Stakeholders in research evaluation should consider that the particular focus of LS confines its citation impact within its subject boundary. This confinement is a natural constraint and not a symptom of scholarly inferiority.

For the future evaluation of IS scholarship, JCR should separate MIS journals and LIS journals into different subject categories. Until this separation occurs, subfield differentiation and the adjustment of journal rankings should be permitted, even encouraged. In addition, the incorporation of weighted citation metrics, rather than sole reliance on the impact factor, should be encouraged in research evaluations. Some citation indicators that are normalized for comparisons across subject disciplines already exist, such as the Eigenfactor, Article Influence Score, source normalized impact per paper (SNIP), and SCImago Journal Rank (Walters 2017). The introduction of such indicators may provide a better ground for comparing research impact across different subject areas.
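One simple way to picture a subfield adjustment is to express each journal's IF relative to the mean IF of its own subfield. The sketch below is only an illustration of that idea under stated assumptions: it is not the authors' proposal, it is not how the Eigenfactor, Article Influence Score, SNIP, or SCImago Journal Rank are calculated, and the function name `subfield_relative_if` and all values are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def subfield_relative_if(journals):
    """Divide each journal's IF by the mean IF of its subfield.

    journals: list of (name, subfield, impact_factor) tuples.
    Returns a dict mapping journal name to its relative IF.
    """
    by_subfield = defaultdict(list)
    for _, subfield, impact in journals:
        by_subfield[subfield].append(impact)
    # Baseline for each subfield: the mean IF of its journals
    baseline = {s: mean(values) for s, values in by_subfield.items()}
    return {name: impact / baseline[subfield] for name, subfield, impact in journals}


# Hypothetical journals for illustration only (not the study's data)
sample = [("M1", "MIS", 3.0), ("M2", "MIS", 1.0), ("L1", "LS", 0.9), ("L2", "LS", 0.3)]

relative = subfield_relative_if(sample)
for name, value in relative.items():
    print(name, round(value, 2))
```

In this toy sample, M1 and L1 have very different raw IF values, yet each stands at roughly 1.5 times its own subfield mean, so a within-subfield comparison places them on a par.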

Misrepresentation of subjects is not unique to JCR. Errors in subject classification can be observed in various sources of citation metrics including Scopus, CWTS, SCImago, and Eigenfactor (Walters 2017). Stakeholders should consider the differences between natural constraints and actual citation impact. They must also consider the components of appropriate evaluations and the possible biases embedded in subject classification. For other research domains with similar subject collocation problems in JCR, the approach used in this study may help discern subfield differences and systemic subfield suppression in their respective categories.

References

Abrizah, A., Noorhidawati, A., & Zainab, A. N. (2015). LIS journals categorization in the Journal Citation Report: A stated preference study. Scientometrics, 102(2), 1083–1099.

Abrizah, A., Zainab, A. N., Kiran, K., & Raj, R. G. (2013). LIS journals scientific impact and subject categorization: A comparison between Web of Science and Scopus. Scientometrics, 94(2), 721–740.

Bensman, S. J., & Leydesdorff, L. (2009). Definition and identification of journals as bibliographic and subject entities: Librarianship versus ISI Journal Citation Reports methods and their effect on citation measures. Journal of the American Society for Information Science and Technology, 60(6), 1097–1117.

Borgman, C. L., & Rice, R. E. (1992). The convergence of information science and communication: A bibliometric analysis. Journal of the American Society for Information Science, 43(6), 397–411.

Boyack, K. W., Klavans, R., & Börner, K. (2005). Mapping the backbone of science. Scientometrics, 64(3), 351–374.

Campanario, J. M., & Cabos, W. (2014). The effect of additional citations in the stability of Journal Citation Report categories. Scientometrics, 98(2), 1113–1130.

Eisenberg, T., & Wells, M. T. (2014). Ranking law journals and the limits of Journal Citation Reports. Economic Inquiry, 52(4), 1301–1314.

Finlay, S. C., Ni, C., Tsou, A., & Sugimoto, C. R. (2013). Publish or practice? An examination of librarians’ contributions to research. Portal: Libraries and the Academy, 13(4), 403–421.

García, J. A., Rodríguez-Sánchez, R., Fdez-Valdivia, J., Robinson-García, N., & Torres-Salinas, D. (2012). Mapping academic institutions according to their journal publication profile: Spanish universities as a case study. Journal of the American Society for Information Science and Technology, 63(11), 2328–2340.

Harzing, A. W. (2013). Document categories in the ISI Web of Knowledge: Misunderstanding the Social Sciences? Scientometrics, 94(1), 23–34.

Jacsó, P. (2012). The problems with the subject categories schema in the Eigenfactor database from the perspective of ranking journals by their prestige and impact. Online Information Review, 36(5), 758–766.

Larivière, V., Sugimoto, C. R., & Cronin, B. (2012). A bibliometric chronicling of library and information science’s first hundred years. Journal of the American Society for Information Science and Technology, 63(5), 997–1016.

Levitt, J. M., & Thelwall, M. (2009). The most highly cited library and information science articles: Interdisciplinarity, first authors and citation patterns. Scientometrics, 78(1), 45–67.

Levitt, J. M., Thelwall, M., & Oppenheim, C. (2011). Variations between subjects in the extent to which the social sciences have become more interdisciplinary. Journal of the American Society for Information Science and Technology, 62(6), 1118–1129.

Leydesdorff, L. (2006). Can scientific journals be classified in terms of aggregated journal-journal citation relations using the Journal Citation Reports? Journal of the American Society for Information Science and Technology, 57(5), 601–613.

Leydesdorff, L. (2007). Mapping interdisciplinarity at the interfaces between the Science Citation Index and the Social Science Citation Index. Scientometrics, 71(3), 391–405.

Leydesdorff, L., de Moya-Anegón, F., & Guerrero-Bote, V. P. (2010). Journal maps on the basis of Scopus data: A comparison with the Journal Citation Reports of the ISI. Journal of the American Society for Information Science and Technology, 61(2), 352–369.

Lowry, P. B., Moody, G., Gaskin, J., Galletta, D. F., Humphreys, S., Barlow, J. B., et al. (2013). Evaluating journal quality and the Association for Information Systems (AIS) Senior Scholars’ journal basket via bibliometric measures: Do expert journal assessments add value? MIS Quarterly, 37(4), 993–1012.

Milojević, S., Sugimoto, C. R., Yan, E., & Ding, Y. (2011). The cognitive structure of library and information science: Analysis of article title words. Journal of the American Society for Information Science and Technology, 62(10), 1933–1953.

Ni, C., Sugimoto, C. R., & Cronin, B. (2013a). Visualizing and comparing four facets of scholarly communication: Producers, artifacts, concepts, and gatekeepers. Scientometrics, 94(3), 1161–1173.

Ni, C., Sugimoto, C. R., & Jiang, J. (2013b). Venue-author-coupling: A measure for identifying disciplines through author communities. Journal of the American Society for Information Science and Technology, 64(2), 265–279.

Prebor, G. (2010). Analysis of the interdisciplinary nature of library and information science. Journal of Librarianship and Information Science, 42(4), 256–267.

Pudovkin, A. I., & Garfield, E. (2002). Algorithmic procedure for finding semantically related journals. Journal of the American Society for Information Science and Technology, 53(13), 1113–1119.

Sugimoto, C. R., Pratt, J. A., & Hauser, K. (2008). Using field cocitation analysis to assess reciprocal and shared impact of LIS/MIS fields. Journal of the American Society for Information Science and Technology, 59(9), 1441–1453.

Tseng, Y.-H., & Tsay, M.-Y. (2013). Journal clustering of library and information science for subfield delineation using the bibliometric analysis toolkit: CATAR. Scientometrics, 95(2), 503–528.

Tuomaala, O., Järvelin, K., & Vakkari, P. (2014). Evolution of library and information science, 1965–2005: Content analysis of journal articles. Journal of the Association for Information Science and Technology, 65(7), 1446–1462.

Walters, W. H. (2017). Citation-based journal rankings: Key questions, metrics, and data sources. IEEE Access, 5, 22036–22053.

Waltman, L., & van Eck, N. J. (2012). A new methodology for constructing a publication-level classification system of science. Journal of the American Society for Information Science and Technology, 63(12), 2378–2392.

Wang, F., & Wolfram, D. (2015). Assessment of journal similarity based on citing discipline analysis. Journal of the Association for Information Science and Technology, 66(6), 1189–1198.

Warner, J. (2001). W(H)ITHER information science? The Library Quarterly: Information, Community, Policy, 71(2), 243–255.

Acknowledgements

Funding was provided by the Ministry of Science and Technology, Taiwan (TW) (Grant No. MOST 107-3017-F-002-004-) and the Ministry of Education (Grant No. 108L900204).

Cite this article

Huang, Mh., Shaw, WC. & Lin, CS. One category, two communities: subfield differences in “Information Science and Library Science” in Journal Citation Reports. Scientometrics 119, 1059–1079 (2019). https://doi.org/10.1007/s11192-019-03074-3

DOI: https://doi.org/10.1007/s11192-019-03074-3