Abstract

Government funding is a key resource for scientific research and has made a concrete contribution to the world’s science and technology development. Because these funds come from taxpayers, their effectiveness needs to be evaluated. Policymakers generally rely on peer review for such assessments. To address the shortcomings of peer review, this paper proposes a benchmarking evaluation method based on the academic publication output of funded projects, using indicators drawn mainly from scientometrics. First, using the academic publication output extracted from the concluding report that the project manager submits to the government after the fund ends, we designed an analysis framework to search for and define the research field to which the fund belongs. Then, we compared the research fund output with the field output from three perspectives: quantity, quality, and relative influence. Finally, we took one program in the field of quantum physics from the National Program on Key Basic Research Project of China (973 Program) as an example and conducted an empirical analysis to demonstrate the method’s effectiveness. We found that the funded program performed better than the field, with about 11.65% of its research achievements reaching the world’s top 1/1000, but that it lacked remarkable papers and therefore needs further improvement.



Introduction

Recently, the National Bureau of Statistics, the Ministry of Science and Technology, and the Ministry of Finance of the People’s Republic of China jointly issued the “National Statistical Bulletin on Investment in Science and Technology in 2016” (http://www.most.gov.cn/kjbgz/201710/P020171012374292508458.pdf). It showed that China’s research and development (R&D) investment reached 1.56767 trillion yuan in 2016, an increase of 150.69 billion yuan over 2015 and a growth rate of 10.6%. R&D investment accounted for 2.2% of GDP, up 0.05 percentage points from 2015. At the same time, the number and funding levels of research projects financed by China’s science and technology investment have also increased significantly. The National Natural Science Foundation of China (NSFC), for example, funded a total of 18,136 general projects in 2017, with funding of approximately 10.69 billion yuan.

Aside from the NSFC, many other ministries in China provide funding grants, including the Ministry of Science and Technology, the Ministry of Education, the National Development and Reform Commission, and the Ministry of Agriculture. After years of implementation, these bodies now ask whether the funded projects have achieved their original aims. This is the pressing question facing government policymakers and university research managers.

Based on current research, relevant studies can be divided into the following four categories:

1. At the country level, Wang et al. (2011) analyzed the impact of government funding on research output based on 500,807 Science Citation Index (SCI) papers published in 2009 in 10 countries. Xu et al. (2015) explored the funding ratios of 21 major countries/territories in social science based on 813,809 research articles collected from Web of Science (WoS) from 2009 to 2013. Zhang et al. (2010) extracted the social science papers from Science Citation Index Expanded (SCIE) and Social Science Citation Index (SSCI) that were subsidized by science foundations around the world, and compared the papers by subject, institution, and country.

2. Regarding annual statistics, Guo et al. (2011) statistically analyzed, by subject, region, and organization, the papers funded by the NSFC and indexed by the CSTPCD (China Scientific and Technical Papers and Citations Database). Bo and Zeng (2011) explored the general situation of social science research in China through an analysis of the paper output of annual National Social Science Fund projects from 2000 to 2009. Zou and Tang (2011) surveyed the general situation of the input and output of major projects of the National Natural Science Foundation of China. Yu et al. (2013) used scientometric methods to analyze the output and influence of papers indexed by the SCIE and CSTPCD and funded by the NSFC, the National High-tech R&D Program of China (863 Program), the National Program on Key Basic Research Project of China (973 Program), and the National Key Technology Research and Development Program of the Ministry of Science and Technology of China.

3. From the perspective of funded fields, Lewison (1998) examined papers in gastroenterology to determine their funding sources and compared the relative impact of papers funded by different groups with that of unfunded papers. Chen et al. (2013) presented a longitudinal analysis of global nanotechnology development using nanotechnology papers and patents funded by the National Science Foundation (NSF). Huang et al. (2006) combined bibliometric analysis and content visualization tools to identify growth trends, research topic distribution, and the evolution of NSF funding and commercial patenting activities in nanotechnology. Cao et al. (2013) reviewed the role of the NSFC in the development of basic research on Parkinson’s disease in China from 1990 to 2012, looking at the total number of projects, the NSFC funding allocated to Parkinson’s disease, and the papers published. Huang et al. (2016) compared research proposals awarded by the US NSF and the NSFC in the field of big data.

4. Looking at the relationship between funding support and paper quality, Wang and Shapira (2011) used funding acknowledgment analysis to investigate the impact of research sponsorship in nanotechnology, probing the funding patterns of leading countries and agencies, and then compared the impact of funded studies with that of studies without funding. More recently, Wang and Shapira (2015) investigated in detail the relationships between research sponsorship and publication impact; examining citations and journal impact factors, they showed that publications from grant-sponsored research have higher impact, in terms of both journal ranking and citation counts, than research without grant sponsorship. Zhao (2010) studied the characteristics and impact of funded research in library and information science and showed that grant-funded research had substantially higher impact, as measured by citation counts, than unfunded research. Building on previous studies, Rigby (2013) examined whether the number of funding acknowledgments is related to research impact; using OLS regression and two rank tests, Rigby found a statistically significant but weak link between the number of funding acknowledgments and high-impact papers. Gök et al. (2016) investigated the relationships between the citation impact of scientific papers and the funding sources acknowledged as having supported those publications. They also examined several outcomes potentially associated with funding, including first citation, total citations, and the chance of becoming highly cited, and explored the links between citations and types of funding organization as well as combined measures of funding. They found that funding is not related to the first citation but is significantly related to the number of citations and to top-percentile citation impact.
Costas and Leeuwen (2012) presented a general bibliometric analysis of “funding acknowledgments” (FAs) and observed that publications with FAs have higher impact than publications without them. They found differences across countries and disciplines in the share of publications with FAs and in the acknowledgment of peer interactive communication, with China having the highest share of publications acknowledging funding. Boyack and Jordan (2011) introduced grant-to-article linkage data for National Institutes of Health (NIH) grants and performed a high-level analysis of the publication outputs and impacts associated with those grants. Articles acknowledging US Public Health Service (PHS, which includes NIH) funding were cited twice as often as US-authored articles acknowledging no funding source.

In addition, some researchers have analyzed the coverage and limitations of funding data drawn from publication acknowledgments. Grassano et al. (2017) used a novel dataset of manually extracted and coded funding acknowledgments from 7,510 publications representing UK cancer research in 2011. These ‘reference data’ were compared with the funding data provided by the WoS and MEDLINE/PubMed. The WoS funding data showed high recall (about 93%), whereas MEDLINE/PubMed retrieved less than half of the UK cancer publications acknowledging at least one funder. Both databases had high precision (over 90%); that is, very few publications without acknowledgments to funders were identified as having funding data. Nonetheless, the funders acknowledged in UK cancer publications were not correctly listed by MEDLINE/PubMed and WoS in approximately 75% and 32% of cases, respectively. Tang et al. (2017) examined the distribution of FAs by citation index database, language, and acknowledgment type, noted coverage limitations and potential biases in previous analyses, and argued that, despite their great value, bibliometric analyses of FAs should be used with caution.

Based on the current state of research in China and abroad, we identified the following problems in existing studies of research projects: (1) while great attention is paid to country-level comparisons, annual statistics, and the relationship between funding support and paper quality, there is little assessment of the implementation of specific projects; (2) while there is a sharp focus on the analysis of publication output, other project outputs, including patents, the training of talented researchers, and the communication of results, receive less study. As highlighted by Li (2004), an academician of the Chinese Academy of Engineering, “we have paid too much attention to the amount of output, and not enough attention to the quality of the output and the actual impact of the output, including the contribution to national security and national economy, the contribution to the enterprise, the talent output and potential or deep driving and radiating effects. Only by focusing our attention on how to truly raise the output, can we obtain more investment in research and create a virtuous circle.”

Nevertheless, publication output can be an important indicator of funding effectiveness. For this reason, the present paper examines a 973 Program project of the Ministry of Science and Technology and builds an index system based on the quantity, quality, and relative influence of the project’s output.

Process, method and indicators

Process

Our evaluation of research funding was carried out in the following steps:

(1) Data preparation. From the concluding report that the project leader submitted to the fund management organization, we extracted the research publications for further study.

(2) Data download. The publication list provided only limited bibliographic information, including authors, journal, publication year, volume, issue, and page range. For further analysis, we searched for the papers in a database to obtain fuller bibliographic information, including authors’ institutions, authors’ countries, cited references, times cited, and subject classification. We used the Web of Science (WoS) as the data source and retrieved all research publications in the concluding report from it; this set was called Data 1-A. The WoS, now owned by Clarivate Analytics, provides clean bibliographic information and links each paper to the papers citing it through its times-cited count.

We then extracted the cited references of the research publications and searched for them in the WoS; this set was called Data 1-B. Cited documents not indexed by the WoS were not considered, because no database covers every document; we assume that the WoS covers the important journals in the main subjects.

Next, we retrieved from the WoS the papers citing the research publications, called Data 1-C, which are closely related to the research topics of the research publications. Together, these three sets were defined as Data 1.

(3) Data validation. First, we searched for the research publications in the WoS, collected their keywords, and sorted the keywords from most to least frequent.

The keyword set consists of the keywords of the searched publications: KY1 is the most frequent keyword, KY2 the second most frequent, and so on, with frequencies N1 > N2 > N3 > N4 > N5.

Based on the keyword set, we retrieved publications from the WoS as Data 2, adding keywords to the query one at a time in descending order of frequency and checking the precision (P) and recall (R) after each addition.

In general, searching the WoS with all keywords from the research publications gives the highest recall (100%) but the lowest precision, because P is tied to the number of keywords in the query. To balance P and R while keeping R relatively high, we required R to be higher than 80% and chose the query with comparatively high P.
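The iterative keyword procedure can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors’ code: it assumes Data 1 (the union of Data 1-A, 1-B, and 1-C) serves as the reference set, so that P = |Data 1 ∩ Data 2| / |Data 2| and R = |Data 1 ∩ Data 2| / |Data 1|; that keywords are OR-ed into the query in descending frequency; and that `search` is a hypothetical stand-in for a WoS topic search returning paper IDs. The toy IDs and keyword hits are invented.

```python
def precision_recall(retrieved: set, reference: set) -> tuple[float, float]:
    """P = |retrieved & reference| / |retrieved|; R = |retrieved & reference| / |reference|."""
    hits = len(retrieved & reference)
    p = hits / len(retrieved) if retrieved else 0.0
    r = hits / len(reference) if reference else 0.0
    return p, r


def expand_query(keywords_by_freq, search, reference, min_recall=0.8):
    """OR keywords into the query, most frequent first, until R is strictly above min_recall.

    search(keyword) is a hypothetical stand-in for a WoS topic search returning paper IDs.
    """
    retrieved: set = set()
    used = []
    p = r = 0.0
    for kw in keywords_by_freq:
        used.append(kw)
        retrieved |= search(kw)  # OR-ing a keyword adds its hit set (set union)
        p, r = precision_recall(retrieved, reference)
        if r > min_recall:       # "R must be higher than 80%"
            break
    return used, retrieved, p, r


# Toy reference set: Data 1 = publications (1-A) | cited references (1-B) | citing papers (1-C)
data_1a, data_1b, data_1c = {"a", "b"}, {"c", "d"}, {"e"}
data_1 = data_1a | data_1b | data_1c

# Hypothetical keyword -> hit IDs, already in descending keyword frequency
index = {
    "quantum memory": {"a", "b", "x"},
    "photon entanglement": {"c", "d", "y", "z"},
    "key distribution": {"e", "w"},
}
used, data_2, p, r = expand_query(list(index), lambda kw: index.get(kw, set()), data_1)
```

Because OR-ing keywords can only enlarge Data 2, R never decreases as keywords are added while P usually falls, which is the trade-off described above. In the toy run the second keyword brings R to exactly 0.8, which does not pass the strict threshold, so the third keyword is added.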

Method

(1) Benchmarking bibliometrics

Here we adopt a benchmarking approach. According to Wikipedia (2018), benchmarking is the process of comparing one’s business processes and performance metrics to industry bests or best practices from other companies. Many related terms have since been derived, such as competitor benchmarking, effective benchmarking, benchmark assessments, and performance benchmarking.

In this paper, we take the research publications of the funded program as the research object and evaluate them with bibliometric methods, including citation analysis, co-citation analysis, social network analysis, and journal co-citation analysis.

(2) Citation analysis

As early as 1972, Garfield (1972) proposed that citation analysis could serve as a tool for journal evaluation, with journals ranked by the frequency and impact of their citations for science policy studies. In essence, a journal is a collection of publications on relatively similar research themes, and the publications of a funded program are likewise a set of publications on closely related themes. Moed (2009) also regarded citation analysis as one of the key methodologies of evaluative bibliometrics, aimed at constructing indicators of research performance from a quantitative statistical analysis of scientific-scholarly documents.

Indicator

In management, the dimensions typically measured are quality, time, and cost. Here, we use bibliometric indicators to define three dimensions: quantity, quality, and relative influence (or efficiency).

(1) Quantity

(1) The number of papers published in the “four preeminent peer-reviewed journals” (N1 for short)

The four preeminent peer-reviewed journals are Nature, Science, Cell, and PNAS (Proceedings of the National Academy of Sciences of the United States of America) (Handley et al. 2015; Khan and Hanjra 2009). In general, the more papers a project publishes in these four journals, the higher the quality of its publication output.

(2) The number of papers published in journals ranked in the top 10% by impact factor in the subject (N2 for short)

In general, the quality of a paper is closely related to the impact factor of the journal in which it is published (Leeuwen and Moed 2005; Hu and Wu 2014, 2017).

We therefore take the number of papers published in journals ranked in the top 10% by impact factor in the corresponding subject as one of the key quantity indicators.

(3) The number of papers published in journals ranked in the top 50% by impact factor in the subject (N3 for short)

A paper published in a journal ranked in the top 50% by impact factor can also be regarded as being of above-average quality in its subject.
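A minimal sketch of the three quantity indicators. It assumes each paper record carries its journal title and the journal’s impact-factor rank within its subject expressed as a percentage from the top (a hypothetical field; for example, rank 5 of 50 journals gives 10%). Matching the four journals on upper-cased titles is also an assumption, and the journal names and ranks in the example are placeholders, not real data.

```python
from dataclasses import dataclass

# Journals counted for N1, per the text; matching on an upper-cased title is an assumption.
FOUR_JOURNALS = {"NATURE", "SCIENCE", "CELL", "PNAS"}


@dataclass
class Paper:
    journal: str        # journal title as recorded for the paper
    if_rank_pct: float  # hypothetical: journal's impact-factor rank in its subject, as % from the top


def quantity_indicators(papers: list[Paper]) -> tuple[int, int, int]:
    """Return (N1, N2, N3); N2 and N3 are cumulative, so a top-10% paper also counts in N3."""
    n1 = sum(p.journal.upper() in FOUR_JOURNALS for p in papers)
    n2 = sum(p.if_rank_pct <= 10 for p in papers)
    n3 = sum(p.if_rank_pct <= 50 for p in papers)
    return n1, n2, n3


# Placeholder journals and ranks, for illustration only.
papers = [
    Paper("Nature", 1.5),
    Paper("Journal A", 8.0),
    Paper("Journal B", 35.0),
    Paper("Journal C", 70.0),
]
n1, n2, n3 = quantity_indicators(papers)
```

Treating N2 and N3 as cumulative matches how the Results section reports them: all 103 program papers are counted in the top 50%, including the 63 in the top 10%.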

(2) Quality

(1) Local cited times (Q1 for short)

The number of cited times is the number of times a paper is cited by others. A citation can be regarded as “recognition” by peers, although there are other citation motivations (Feng and Yishan 2009; Bonzi and Snyder 1991). Therefore, the number of cited times can reflect a paper’s influence. In this paper, only citations from papers in the research field are counted, not the global times cited in the WoS, so we call this indicator the local cited times (Q1 for short).

(2) Average cited times per paper (Q2 for short)

The average cited times per paper is the mean number of citations received by the papers in the group and reflects the group’s average level. The formula can be expressed as:

\[ Q_{2} = \frac{TC(P)}{N(P)} \]

In this formula, P is the set of research publications representing the funded program, TC(P) is the sum of the cited times of all papers in P, and N(P) is the number of papers in P.
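A minimal sketch of Q1 and Q2. It assumes citation links are available as (citing ID, cited ID) pairs and that the field is given as a set of paper IDs; both data layouts and the toy IDs are illustrative assumptions. Q1 counts only citations coming from field papers, and Q2 = TC(P) / N(P) divides the program’s total by its number of papers, so uncited program papers still count in N(P).

```python
from collections import Counter


def local_cited_times(citations, field_ids):
    """Q1 per paper: count only the citations whose citing paper belongs to the field set."""
    return Counter(cited for citing, cited in citations if citing in field_ids)


def average_cited_times(program_ids, local_counts):
    """Q2 = TC(P) / N(P) for the program's paper set P."""
    if not program_ids:
        return 0.0
    total = sum(local_counts.get(pid, 0) for pid in program_ids)  # TC(P)
    return total / len(program_ids)                               # divided by N(P)


field_ids = {"f1", "f2", "f3"}
citations = [("f1", "p1"), ("f2", "p1"), ("x9", "p1"), ("f3", "p2")]  # (citing, cited)
q1 = local_cited_times(citations, field_ids)   # the citation from "x9" is outside the field
q2 = average_cited_times(["p1", "p2", "p3"], q1)
```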

(3) Number of Top X papers (Q3 for short)

Yang Wei, the former director of the National Natural Science Foundation of China, said in an interview with People’s Daily that a paper is outstanding if its cited times rank in the top one in a thousand in its subject (Siyao and Yongxin 2016).

Building on this idea, we define Top X papers as follows: after standardizing for factors such as publication year and document type, we rank the papers in the same field by cited times from high to low, and the papers in the top X% are Top X papers. This index reflects a paper’s influence and impact in the field. We define papers in the top 0.1% as remarkable papers, those in the 0.1–1% range as outstanding papers, those in the 1–10% range as excellent papers, and those in the 10–50% range as original papers.
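The Top X classification can be sketched as a percentile ranking within comparable groups. This is an illustration under assumptions rather than the authors’ procedure: the text states that papers are standardized by publication year and document type but does not specify tie handling, so here tied papers share the better rank (1 plus the number of papers in the same year and document type cited strictly more often). To classify program papers, they would be ranked together with the field papers of the same group.

```python
import bisect
from collections import defaultdict

# Upper bound of each tier as a share of the group, from most to least selective.
TIERS = [(0.001, "remarkable"), (0.01, "outstanding"), (0.10, "excellent"), (0.50, "original")]


def top_x_tiers(papers):
    """papers: iterable of (paper_id, year, doc_type, cited_times).

    Papers are ranked only against others with the same publication year and document type.
    Returns {paper_id: tier name, or None if outside the top 50%}.
    """
    groups = defaultdict(list)
    for pid, year, doc_type, cites in papers:
        groups[(year, doc_type)].append((pid, cites))
    tiers = {}
    for members in groups.values():
        ascending = sorted(c for _, c in members)
        n = len(members)
        for pid, cites in members:
            # Ties share the best rank: 1 + number of papers in the group cited strictly more often.
            rank = 1 + n - bisect.bisect_right(ascending, cites)
            share = rank / n
            tiers[pid] = next((name for cut, name in TIERS if share <= cut), None)
    return tiers


# Toy group: 1000 articles from one year with cited times 0..999.
demo = [(f"p{i}", 2015, "Article", i) for i in range(1000)]
result = top_x_tiers(demo)
```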

(3) Relative influence (RI for short)

Relative influence is a paper’s influence compared with the average level of the field, calculated as the ratio of the paper’s cited times to the average cited times of the group’s papers. The formula can be expressed as:

\[ \text{RI}\left(P_{1}\right) = \frac{CT\left(P_{1}\right)}{\frac{1}{N}\sum_{i=1}^{N} CT\left(P_{i}\right)} \]

\({\text{RI}}\left( {P_{1} } \right)\) is the relative influence of P1; \(CT\left( {P_{1} } \right)\) is the local cited times of P1; \(CT\left( {P_{i} } \right)\) is the local cited times of Pi; and N is the number of papers in the group.

The index reflects the relative research performance of a paper. A value greater than 1 indicates that the paper’s influence is above the average, and a value less than 1 indicates that it is below the average.

The relative influence here refers to the ratio of a paper’s cited times to the average in the field.
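A sketch of the RI computation, with the average taken over the field’s papers as the definition states. The citation counts are illustrative, and raising an error when the field mean is zero is our own choice. The share of papers with RI strictly above 1 is the quantity reported in the Results (4609 of 17,381 field papers and 53 of 103 program papers).

```python
from statistics import fmean


def relative_influence(cts, field_cts):
    """RI for each paper in cts: CT(P) / ((1/N) * sum_i CT(P_i)), the mean taken over the field."""
    mean = fmean(field_cts)  # (1/N) * sum of the field's local cited times
    if mean == 0:
        raise ValueError("field has no citations; RI is undefined")
    return [ct / mean for ct in cts]


def share_above_average(ri):
    """Fraction of papers whose RI is strictly greater than 1."""
    return sum(r > 1 for r in ri) / len(ri)


field_cts = [0, 2, 4, 10]  # illustrative local cited times of field papers (mean 4.0)
program_cts = [8, 2]       # illustrative local cited times of program papers
ri_field = relative_influence(field_cts, field_cts)
ri_program = relative_influence(program_cts, field_cts)
```

Computing the field mean once per call keeps the cost linear, which matters when the field contains tens of thousands of papers.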

All indicators in the three dimensions above are summarized in Table 1.

Data

Research fund output (RFO)

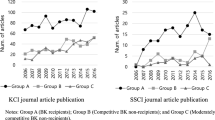

From the concluding report, we extracted 103 papers, all indexed by SCI; their distribution by year is as follows:

The project’s SCI papers were mainly published from 2011 to 2015 and show a steady growth trend, with an average of 20.6 SCI papers per year.

Field output (FO)

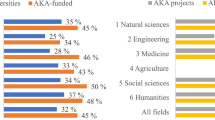

The project was funded by the Ministry of Science and Technology of China in the field of quantum physics; it started in 2011 and lasted five years. After eight iterations, the recall R reached 80.01% (Fig. 1). The final query, run in the WoS on January 18th, 2016, was as follows: Topic search with [(“linear optic*” AND quantum) OR “electromagnetically induced transparency” OR (“neutral atom*” AND quantum) OR (“key distribution*” AND quantum) OR “quantum memor*” OR “quantum nonlocal*” OR “photon entanglement*” OR “photon detect*” OR (“resonance fluorescence” AND quantum) OR “quantum local*”], with Document Types limited to (ARTICLE OR REVIEW) in the Science Citation Index Expanded (SCI) (Fig. 2).

The query retrieved 17,381 papers in the SCI published since 1905, with document types limited to article and review. Annual output before 2006 was fewer than 150 papers, whereas 16,156 papers, more than 92% of the total, were published from 2006 to 2015. The detailed annual distribution is shown in Fig. 3.

From 2006 to 2015, research output rose gradually, from 1212 papers in 2006 to 2000 in 2015, an overall increase of about 65% over the decade.
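The two endpoint counts are enough to check the size of this growth. A minimal sketch, assuming the 2006 and 2015 counts are exact and treating 2006–2015 as nine yearly intervals:

```python
def growth(start: float, end: float, years: int) -> tuple[float, float]:
    """Return (total relative increase, compound annual growth rate) between two counts."""
    total = end / start - 1
    cagr = (end / start) ** (1 / years) - 1
    return total, cagr


total, cagr = growth(1212, 2000, 2015 - 2006)
print(f"total increase: {total:.1%}, compound annual growth: {cagr:.1%}")
```

With these inputs the overall increase is about 65%, corresponding to a compound annual growth rate of roughly 5.7%.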

Research output in this field rose steadily over 2006–2015, indicating that the related research is attracting growing attention.

Results

Quantity comparison

Four papers in the research fund output were published in the “four preeminent peer-reviewed journals”, compared with 284 papers in the field. In addition, 63 fund papers (61.17%) were published in the top 10% journals, whereas 3021 field papers, 17.38% of the entire field, were published in such journals (Table 2).

Furthermore, all 103 SCI papers of the fund were published in the top 50% journals, which further indicates that the project is at the forefront of world research in this field.

Across the field, 284 papers were published in the “four preeminent peer-reviewed journals”, 3021 in the top 10% journals, 13161 in the top 50% journals, and 4220 in the bottom 50% journals.

Compared with the general level of development in the subject, the fund’s research is better than the field average. The fund also has higher proportions of papers in each journal tier: 3.9% versus 1.6% in the four preeminent journals, 61.2% versus 17.4% in the top 10% journals, and 100% versus 75.7% in the top 50% journals.
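The benchmarking comparison reduces to comparing the shares of two output sets. A minimal sketch that recomputes the reported proportions from the counts in this section (103 program papers, 17,381 field papers); the indicator labels and the program-to-field ratio column are illustrative additions, not part of the original indicator system.

```python
PROGRAM_TOTAL = 103
FIELD_TOTAL = 17381

# Counts per quantity indicator: (program papers, field papers), as reported in the text.
COUNTS = {
    "N1 four preeminent journals": (4, 284),
    "N2 top 10% IF journals": (63, 3021),
    "N3 top 50% IF journals": (103, 13161),
}


def benchmark(counts, program_total, field_total):
    """Return {indicator: (program share, field share, program/field ratio)}."""
    out = {}
    for name, (prog, field) in counts.items():
        p_share = prog / program_total
        f_share = field / field_total
        out[name] = (p_share, f_share, p_share / f_share)
    return out


for name, (p, f, ratio) in benchmark(COUNTS, PROGRAM_TOTAL, FIELD_TOTAL).items():
    print(f"{name}: program {p:.1%} vs field {f:.1%} (ratio {ratio:.2f})")
```

A ratio above 1 means the program is over-represented in that journal tier relative to the field.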

Quality comparison

Cited times and cited times per paper

The highly cited papers value was 182 for the research fund output and 1439 for the field output. Moreover, the average cited times per paper of the research fund output was 15.51, higher than the field’s 8.31 (Table 3).

Top X papers distribution

We defined four Top X levels, 0.1%, 1%, 10%, and 50%: papers in the top 0.1% represent remarkable research output, papers in the top 1% outstanding research, papers in the top 10% excellent research, and papers in the top 50% original research (Table 4).

No paper in the output publications was in the top 0.1%, so the program produced no remarkable research. Six papers, 5.83% of the output, were in the top 1%, which means part of the research can be recognized as outstanding. Furthermore, 19 papers were in the top 10% and 49 in the top 50%, so nearly half of the research reached the top 50%.

Compared with the whole field, the fund’s proportions of outstanding, excellent, and original research all exceed the corresponding field levels, well above what the field’s development would lead one to expect. Although its share of remarkable papers is lower than the field’s, the funded research has relatively high influence in the field and plays a positive role in promoting the field’s development.

Relative influence comparison

Of the 17,381 papers in the field, 4609 (26.52%) have a relative influence higher than 1, that is, above the field average. The highest relative influence of a single paper is 147.12329 (Table 5).

Of the 103 papers in the project output, 53 (51.46%) have a relative influence higher than 1. The most influential project paper, with a relative influence of 17.14286, was published in Nature in 2015.

From the perspective of relative influence, 51.46% of the papers in the project output are above the average level, compared with 26.52% in the field, indicating that the project’s overall research performance is better than the field’s.

Conclusion

Research fund evaluation, especially after the funding period ends, usually relies on peer review. Here, based on the output publications of funded programs, we proposed a scientometric method built mainly on cited times, which can reveal the impact of funding. Because cited times depend on publication year and subject, we standardized them and used relative influence so that the comparisons are appropriate.

We then designed an indicator system from three perspectives, quantity, quality, and relative influence, and took a program funded by the National Program on Key Basic Research Project of China (973 Program) as an example, comparing its research output with the field through scientometric benchmarking using this indicator system.

In terms of quantity, the proportions of program papers in the four preeminent journals, the top 10% journals, and the top 50% journals are all higher than those of the field.

In terms of quality, the program’s average cited times per paper exceed the field average, reflecting better research performance in the field. The program also outperforms the field in the output of outstanding, excellent, and original papers. However, it produced no remarkable papers, so the research program needs further improvement.

In terms of efficiency, the relative influence of the project is higher than the field average, and about 11.65% of the research achievements reach the world’s top 1/1000.

However, this benchmarking analysis covers only scientific papers, which are just one kind of project output. Research output includes not only publications but also talent, patents, products, and other achievements, so future research will need to explore a more comprehensive indicator system for research fund evaluation.

References

Bo, Y., & Zeng, J. (2011). Metrological analysis of national social sciences funding annual projects (2000–2009). Science and Technology Management Research, 31, 45–48.

Bonzi, S., & Snyder, H. W. (1991). Motivations for citation: A comparison of self citation and citation to others. Scientometrics, 21, 245–254.

Boyack, K. W., & Jordan, P. (2011). Metrics associated with NIH funding: A high-level view. Journal of the American Medical Informatics Association, 18, 423–431.

Cao, H., Chen, G., & Dong, E. (2013). Progress of basic research in Parkinson’s disease in China: Data mini-review from the National Natural Science Foundation. Translational Neurodegeneration, 2, 18.

Chen, H., Roco, M. C., Son, J., Jiang, S., Larson, C. A., & Gao, Q. (2013). Global nanotechnology development from 1991 to 2012: Patents, scientific publications, and effect of NSF funding. Journal of Nanoparticle Research, 15, 1–21.

Costas, R., & Leeuwen, T. N. (2012). Approaching the “reward triangle”: General analysis of the presence of funding acknowledgments and “peer interactive communication” in scientific publications. Journal of the American Society for Information Science and Technology, 63, 1647–1661.

Feng, M., & Yishan, W. (2009). A survey study on motivations for citation: A case study on periodicals research and library and information science community in China. Journal of Data and Information, 2, 28–43.

Garfield, E. (1972). Citation analysis as a tool in journal evaluation. Science, 178, 471–479.

Gök, A., Rigby, J., & Shapira, P. (2016). The impact of research funding on scientific outputs: Evidence from six smaller European countries. Journal of the Association for Information Science and Technology, 67, 715–730.

Grassano, N., Rotolo, D., Hutton, J., Lang, F., & Hopkins, M. M. (2017). Funding data from publication acknowledgments: Coverage, uses, and limitations. Journal of the Association for Information Science and Technology, 68, 999–1017.

Guo, H., Pan, Y., Ma, Z., Su, C., Yu, Z., & Xu, B. (2011). Quantitative analysis of papers sponsored by Natural Science Foundation of China. Science & Technology Review, 29, 61–66.

Handley, I. M., Brown, E. R., Moss-Racusin, C. A., & Smith, J. L. (2015). Quality of evidence revealing subtle gender biases in science is in the eye of the beholder. Proceedings of the National Academy of Sciences, 112, 13201.

Hu, Z., & Wu, Y. (2014). Regularity in the time-dependent distribution of the percentage of never-cited papers: An empirical pilot study based on the six journals. Journal of Informetrics, 8, 136–146.

Hu, Z., & Wu, Y. (2017). A probe into causes of non-citation based on survey data. Social Science Information, 56, 1–13.

Huang, Z., Chen, H., Li, X., & Roco, M. C. (2006). Connecting NSF funding to patent innovation in nanotechnology (2001–2004). Journal of Nanoparticle Research, 8, 859–879.

Huang, Y., Zhang, Y., Youtie, J., Porter, A. L., & Wang, X. (2016). How does national scientific funding support emerging interdisciplinary research: A comparison study of big data research in the US and China. PLoS ONE, 11, e0154509.

Khan, S., & Hanjra, M. A. (2009). Footprints of water and energy inputs in food production—global perspectives. Food Policy, 34, 130–140.

Leeuwen, T. N. V., & Moed, H. F. (2005). Characteristics of journal impact factors: The effects of uncitedness and citation distribution on the understanding of journal impact factors. Scientometrics, 63, 357–371.

Lewison, G. (1998). Gastroenterology research in the United Kingdom: funding sources and impact. Gut, 43, 288–293.

Li, G. (2004). Improving the efficiency of scientific research depends on the output impact. Invention and Innovation, 1–5.

Moed, H. F. (2009). New developments in the use of citation analysis in research evaluation. Archivum Immunologiae et Therapiae Experimentalis, 57, 13.

Rigby, J. (2013). Looking for the impact of peer review: Does count of funding acknowledgements really predict research impact? Scientometrics, 94, 57–73.

Siyao, L., & Yongxin, Z. (2016). Basic research is the supply side of innovation—an interview with Yang Wei, director of the National Natural Science Foundation of China. Beijing: People’s Daily.

Tang, L., Hu, G., & Liu, W. (2017). Funding acknowledgment analysis: Queries and caveats. Journal of the Association for Information Science and Technology, 68, 790–794.

Wang, X., Liu, D., Ding, K., & Wang, X. (2011). Science funding and research output: a study on 10 countries. Scientometrics, 91, 591–599.

Wang, J., & Shapira, P. (2011). Funding acknowledgement analysis: An enhanced tool to investigate research sponsorship impacts: The case of nanotechnology. Scientometrics, 87, 563–586.

Wang, J., & Shapira, P. (2015). Is there a relationship between research sponsorship and publication impact? An analysis of funding acknowledgments in nanotechnology papers. PLoS ONE, 10, 555–582.

Wikipedia (2018). Benchmarking. https://en.wikipedia.org/wiki/Benchmarking. Accessed 24 November 2018.

Xu, X., Tan, A. M., & Zhao, S. X. (2015). Funding ratios in social science: the perspective of countries/territories level and comparison with natural sciences. Scientometrics, 104, 673–684.

Yu, Z., Ma, Z., Guo, H., Wang, N., & Jia, J. (2013). A quantitative analysis of papers sponsored by National Science and Technology programs. Journal of Intelligence, 32, 1–5.

Zhang, A., Gao, P., & Liu, S. (2010). Analysis on the output of social science paper subsidized by science foundations of countries. Information Science, 28, 705–708.

Zhao, D. (2010). Characteristics and impact of grant-funded research: A case study of the library and information science field. Scientometrics, 84, 293–306.

Zou, G., & Tang, Y. (2011). Survey and analysis on input and output of major Projects of Natural Science Fund in China. Science and Technology Management Research, 31, 32–35.

Acknowledgements

This work is supported by National Social Science Foundation of China (No. 17CTQ029). Also we would like to thank anonymous reviewers for their helpful comments.


Cite this article

Gao, Jp., Su, C., Wang, Hy. et al. Research fund evaluation based on academic publication output analysis: the case of Chinese research fund evaluation. Scientometrics 119, 959–972 (2019). https://doi.org/10.1007/s11192-019-03073-4


DOI: https://doi.org/10.1007/s11192-019-03073-4