Abstract

This study explores patterns of scientific activity through co-author affiliations to obtain new insights into interdisciplinary research. To measure interdisciplinarity in research, we explored and compared two approaches: the diversity of disciplines reflected in the authors’ listed affiliations and the diversity of subject categories reflected in the reference list. To assess the diversity of departmental affiliations, we developed an exploratory methodology that retrieves feature words through a combination of manual work and the thesaurus function of the Thomson Data Analyzer text-mining tool. To assess the diversity of references, we followed the conventional approach applied in previous work. In both approaches, we used diversity as the measure of interdisciplinarity for 157,710 articles published in PloS One (2007–2016). A comparison of the results of the two approaches confirms that different methodologies and indicators can produce seriously inconsistent, and even contradictory, results. In addition, different indicators may capture different understandings of a concept as multi-faceted as interdisciplinarity. We summarize our results in a schematic representation of this twofold perspective, which serves as a method for indexing the different types of interdisciplinarity commonly found in research studies.

Introduction

The increasing complexity of the challenges involved in scientific progress demands ever more frequent application of capabilities and knowledge of different scientific fields (Ledford 2015). As science increasingly deals with boundary-spanning problems, important research ideas often transcend the scope of a single discipline or program. Thus, combining two or more disciplines into one’s research is valuable for pushing academic capability forward and for accelerating scientific discovery. In this context, various policy and funding initiatives have been developed to encourage interdisciplinary research (IDR) (Wang and Shapira 2015). As two of the leading scientific funding organizations in the world, the US National Science Foundation (NSF) and the National Natural Science Foundation of China (NSFC) have both given high priority to promoting IDR. Each supports IDR through a number of specific incentives to promote leading-edge discoveries in a wide range of scientific areas. Both also sponsor high-level strategic academic exchange platforms to foster IDR, innovation culture, and favorable collaboration environments (Huang et al. 2016).

Despite the growing attention IDR has received, the literature has not reached a consensus on the definition of “interdisciplinary” (Huutoniemi et al. 2010). The definition of a “discipline” and the distinctions among interdisciplinary, multidisciplinary, and transdisciplinary research have been the subject of much scholarly debate (NSF 2004). Stokols et al. (2003) provide distinct definitions of unidisciplinary, multidisciplinary, interdisciplinary, and transdisciplinary science. Unidisciplinary research relies solely on the methods, concepts, and theories associated with a single discipline. In interdisciplinary research, researchers work jointly to address a common problem, but each retains their own disciplinary perspective. In multidisciplinary research, scholars also address a common problem, but each works independently or sequentially from their own discipline-specific perspective. Transdisciplinarity describes a process in which researchers work jointly to develop and use a shared conceptual framework that draws discipline-specific theories, concepts, and methods together. Choi and Pak (2006) contrast the definitions of multi-, inter-, and transdisciplinary research and find that the three terms reflect a continuum of increasing involvement by multiple disciplines. Although these distinctions are valuable, the continuum observed in empirical studies often makes it difficult to tell one mode from another (Rafols and Meyer 2010). In this paper, we use the term interdisciplinary (interdisciplinarity) in a general sense that also encompasses trans- and cross-disciplinary research. We do, however, treat multidisciplinary as a separate term, used, for instance, for journals such as Nature and Science that publish articles from different fields.

One of the most broadly accepted definitions of IDR is set forth in a National Academies report: “Interdisciplinary research is a mode of research by teams or individuals that integrates information, data, techniques, tools, perspectives, concepts, and/or theories from two or more disciplines or bodies of specialized knowledge to advance fundamental understanding or to solve problems whose solutions are beyond the scope of a single discipline or area of research practice” (National Academies 2004). Similarly, Porter et al. (2007) state that IDR requires an integration of concepts, techniques, and/or data from different fields of established research. It follows from these definitions that knowledge integration is the essence of IDR, that is, a particular mode of merging theories and concepts, techniques and tools, or information and data from various fields of knowledge (Klein 2008). Knowledge integration can stem from combining the knowledge of different disciplines, i.e., interdisciplinarity in its purest sense, but more broadly, it may also bring together knowledge originating from different research traditions, teams, regions, or schools of thought. The more an article, or any other item under investigation, integrates different sources of knowledge, the more interdisciplinary it is (Rousseau et al. 2018).

As stated above, knowledge integration is the focal point of IDR, so collaboration might seem to be of secondary importance in IDR studies. Yet in most practical cases, IDR involves the collaboration of different persons or even different teams; in other words, collaboration is an important means of knowledge integration. In practice, research managers and policymakers increasingly rely on multi-institutional collaborations to develop strong, intellectually diverse teams that can answer complex research questions (Suresh 2012). One of the goals of many institutions and funding programs (Footnote 1) is to stimulate collaboration as a means of promoting interdisciplinarity (Rijnsoever and Hessels 2011). Rafols and Meyer (2010) give the example of a biophysics lab and a biochemistry lab collaborating in the interdisciplinary field of bionanoscience.

However sophisticated the process of knowledge integration might be, one ultimately has to measure such “knowledge integration” and face the challenges this presents. In this study, we compare approaches from two different perspectives: the disciplinary diversity reflected in departmental affiliations and the disciplinary diversity of a publication’s reference list. By combining these two approaches, we aim to provide a more informative framework for measuring knowledge integration in IDR.

The paper is organized as follows. After the introduction and a brief literature review, the “Data and methods” section presents a systematic approach to measuring the disciplinary diversity of departmental affiliations. The “Results” section reports the analysis and compares the departmental affiliations method with the more widespread approach based on the disciplinary diversity of the reference list; it also provides a schematic representation of this twofold perspective. The “Discussion and conclusion” section discusses the findings and draws conclusions.

Brief literature review

IDR is generally treated as a source of creativity and innovation (Dogan and Pahre 1990) and as a mode of research that has a positive influence on breakthroughs and on outcomes that promote economic growth or answer social needs (Rafols and Meyer 2010; Schmickl and Kieser 2008). Hence, measuring IDR is highly desirable for policymakers, funders, research managers, and sociologists of science (Wagner et al. 2011). Yet defining a unique and absolute measure of IDR is difficult because of the complexity of the concept. For this reason, several proxy indicators have been created, each delivering different insights into the interdisciplinary nature of the research under study.

Before going into detail, we note that this literature review does not cover the evaluation of IDR, which we consider a different issue. Instead, we focus on the quantitative measures used to assess interdisciplinarity in research articles, in other words, to identify interdisciplinary research outcomes. In a comprehensive review of studies on interdisciplinarity, Wagner et al. (2011) examined the different approaches to understanding and measuring IDR. Their study shows that bibliometric measures are the most frequently studied and used quantitative measures. Bibliometric measures include co-authorships, co-inventors, references, citations, and so on (Morillo et al. 2003; Rinia et al. 2002; Leydesdorff and Rafols 2011; Leydesdorff et al. 2018). Bibliometric methods can robustly demonstrate some form of knowledge integration. However, they seldom reveal either the mode of research (interdisciplinary, transdisciplinary, cross-disciplinary, etc.) or the level of intrinsic knowledge integration.

Among these methods, article reference analysis is probably the most widespread approach to measuring IDR (Porter et al. 2007; Wang et al. 2015; Zhang et al. 2016; Mugabushaka et al. 2016). Porter and Rafols (2009) introduced an “integration score” that describes the number of disciplines cited by a paper, along with the “concentration” and “distance” of those disciplines. Rafols and Meyer (2010) note that the percentage of references outside the discipline of the citing paper is a simple and frequently used indicator of IDR. This measure has also been suggested or applied in other works (e.g., Garfield et al. 1978; Larivière and Gingras 2010). However, any measure based on citations (or references) has to deal with the question of “why does one cite”, which involves authors’ preferences for certain fields, journals, and/or languages. Citation analysis is further complicated by the fact that disciplines are not sharply defined and vary greatly in size (Rousseau et al. 2018). Using the subject classifications of different bibliometric databases (for instance, Web of Science or Scopus) can also lead to inconsistent results.
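As a minimal sketch of the simple indicator mentioned above (the share of a paper’s references that lie outside its own discipline), the Python function below assumes that the citing paper and each reference have already been mapped to exactly one field label. The function name and the field labels are hypothetical, and references carrying several categories would need a fractional-counting rule that is not shown here.

```python
from typing import Iterable


def outside_reference_share(paper_field: str, reference_fields: Iterable[str]) -> float:
    """Fraction of a paper's references classified outside the paper's own field.

    Assumes single-label classification of both the paper and its references.
    """
    fields = list(reference_fields)
    if not fields:
        raise ValueError("the paper has no classified references")
    outside = sum(1 for field in fields if field != paper_field)
    return outside / len(fields)


# Hypothetical Biology paper with four classified references: two lie outside Biology.
print(outside_reference_share("Biology", ["Biology", "Chemistry", "Biology", "Medicine"]))  # 0.5
```

Because the indicator depends entirely on how references are labelled, the same paper can score differently under different classification schemes, which is one source of the inconsistencies noted above.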

Abramo et al. (2012) further note that a common methodological trait of studies based on reference analysis is the assumption that a paper inherits the subject category (or categories) of the journal in which it is published, as determined by international bibliometric databases. In that study, the authors analyzed the degree of collaboration among scientists from different disciplines, based on the Italian classification of academics in science, to identify the most frequent “combinations of knowledge” in research activities. Their approach shifts the problem of recognizing IDR from the semantic analysis of an article, or the scientific classification of the papers it cites, to identifying the specializations of its authors. The series of studies undertaken by Abramo et al. (2012, 2017) is very inspiring, yet difficult to generalize because, to the best of our knowledge, Italy’s field classification system for authors is not used in other countries. Further, because authors do not list their disciplines in the published literature, placing an individual within a specialty or discipline requires expert judgment or biographical information, such as the person’s educational background, research area, and position. Determining authors’ disciplinary classifications in either of these ways is impractical for large-scale datasets.

That said, analyzing IDR through the co-authorship of scholarly output is uncommon in the literature owing to a lack of available data sources. A potential solution is to conceive of IDR in terms of the diversity of disciplines reflected in the authors’ departmental affiliations (Zhang et al. 2018). Schummer (2004) uses the percentage of co-occurrences of departmental affiliations from different disciplines as an indicator of IDR. However, his dataset of 600 papers published in “nano” journals in 2002–2003 offers limited scope for generalizing this approach. Porter et al. (2008) analyzed 110 researchers and compared several bibliometric indicators of IDR in their research outputs. Their results agree with Schummer (2004) in that the main drawback of this method is that authors do not list their disciplines in the published literature. This gap in the literature is the first motivation for this study: the need for a systematic approach to measuring the disciplinary diversity of departmental affiliations that is applicable across all countries and regions and to large-scale datasets.

Taking advantage of Italy’s unique classification system for authors, Abramo et al. (2018) investigated the convergence of two bibliometric approaches to measuring IDR: one based on reference analysis, the other on the disciplinary diversity of a publication’s authors. They find that, in general, the diversity of the reference list grows with the number of fields reflected in a paper’s author list. However, this general tendency varies across disciplines, and noticeable exceptions are found at the individual paper level. Adams et al. (2016) note that the same project may be indexed as interdisciplinary by one parameter (e.g., departmental affiliations or universities) but not by another (e.g., diversity of references). The main objective of their study was to compare the consistency of indicators of interdisciplinarity and, if possible, to identify a preferred methodology. Their study reveals that the choice of data, methodology, and indicators can produce seriously inconsistent, and even contradictory, results, even when applied to a common set of disciplines or countries. For this reason, Adams et al. (2016) argue that it is essential to use an analytical framework that draws on multiple indicators, rather than expecting any simplistic index to produce an informative outcome on its own.

Following the experiments of Adams et al. (2016) and Abramo et al. (2018), we will further compare our departmental affiliations approach with the more conventional reference analysis approach. The objective of the comparison is not to identify a better or preferred methodology; instead, we aim to investigate how different indicators may capture different or complementary features of such a multi-faceted concept as interdisciplinarity. Further, we hope this research will contribute to a more informative “framework” for indexing multiple aspects of interdisciplinarity in research studies.

Data and methods

Data

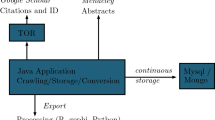

All articles published in the multidisciplinary journal PloS One during 2007–2016 were downloaded from Clarivate Analytics’ Web of Science (WoS). The full sample contained 162,501 records. The references of each paper were retrieved from WoS. The author affiliations (Footnote 2) were obtained by first identifying feature words in the affiliation names within the full author addresses. The feature words were Dept, Sch, Ctr, Coll, Inst, Lab, Assoc, Fac, Div, etc. The thesaurus function of the Thomson Data Analyzer (TDA) text-mining tool was then used to clean the data. Because our research question concerns affiliations at the departmental level, and some of these feature words also occur in university and institution names (e.g., the Harbin Institute of Technology), a thorough manual check was conducted to reduce noise. After discarding records that did not include affiliations or lacked relevant feature words, 351,822 affiliations were confirmed from the bylines of the remaining 157,710 articles.
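The feature-word filtering step can be illustrated with a short Python sketch. It assumes WoS-style address strings whose comma-separated segments hold organization, sub-organization, city, and country. The example addresses, the `INSTITUTION_NAMES` exclusion set (standing in for the manual check), and the function name are hypothetical, and the feature-word set is only the partial list given above.

```python
import re

# Partial feature-word list from the text (the original list ends with "etc.").
FEATURE_WORDS = {"Dept", "Sch", "Ctr", "Coll", "Inst", "Lab", "Assoc", "Fac", "Div"}

# Hypothetical stand-in for the manual check: names in which a feature word is part
# of a university or institution name rather than a department.
INSTITUTION_NAMES = {"Harbin Inst Technol"}


def department_segments(address):
    """Return the comma-separated segments of an address that look departmental."""
    result = []
    for segment in (s.strip() for s in address.split(",")):
        if segment in INSTITUTION_NAMES:
            continue  # feature word belongs to an institution name: treat as noise
        tokens = set(re.findall(r"[A-Za-z]+", segment))
        if tokens & FEATURE_WORDS:  # whole-token match on any feature word
            result.append(segment)
    return result


addr = "Harbin Inst Technol, Sch Comp Sci & Technol, Harbin 150001, Peoples R China"
print(department_segments(addr))  # ['Sch Comp Sci & Technol']
```

Matching whole tokens rather than substrings keeps words such as “Inst” from firing inside longer strings, but, as the example shows, institution-level names still need an explicit exclusion step.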

Methods

Setting the discipline classification systems

Several traditional “intellectual” classification schemes are used in bibliometrics, such as the 22 broad fields of the Essential Science Indicators database or the 250+ subject categories of the WoS and Journal Citation Reports databases. Glänzel and Schubert (2003) proposed and subsequently developed a hierarchical scheme called the ECOOM subject classification, which has 16 major disciplines and 68 sub-disciplines. For the reference-list analysis, the 250+ WoS categories corresponding to the references in the articles were retrieved directly from the WoS database. For the affiliations, we used the ECOOM classification, for two reasons. First, the subject-related feature words in affiliation names are usually not specific enough to be matched reliably to WoS’s 250+ categories, but they are specific enough for ECOOM’s 16 coarser major disciplines. Second, ECOOM’s hierarchical structure allows the WoS categories used to classify the references to be matched to higher-level ECOOM categories. More specifically, each of the 250+ WoS categories can be assigned to one of ECOOM’s 68 sub-disciplines, which are in turn aggregated into the 16 major disciplines of the ECOOM hierarchy. This hierarchy helps build up the vocabulary of disciplinary feature words retrieved from the departmental affiliations (see Fig. 1) and facilitates the most challenging task: matching each affiliation with the discipline(s) most relevant to its work.
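The two-level roll-up from WoS categories to ECOOM sub-disciplines and then to major disciplines amounts to chained lookups. In the Python sketch below, the concordance entries and sub-discipline labels are illustrative placeholders rather than the actual ECOOM tables, and the function names are hypothetical.

```python
from collections import Counter

# Illustrative excerpt of the two-level concordance (placeholder labels, not the
# actual ECOOM tables): WoS category -> ECOOM sub-discipline -> ECOOM major field.
WOS_TO_SUB = {
    "Biochemistry & Molecular Biology": "Biochemistry/biophysics/molecular biology",
    "Physics, Applied": "Applied physics",
    "Physics, Condensed Matter": "Solid state physics",
}
SUB_TO_MAJOR = {
    "Biochemistry/biophysics/molecular biology": "Biosciences",
    "Applied physics": "Physics",
    "Solid state physics": "Physics",
}


def major_field(wos_category):
    """Climb the hierarchy from a WoS category to its ECOOM major field."""
    return SUB_TO_MAJOR[WOS_TO_SUB[wos_category]]


def roll_up(wos_categories):
    """Count how many category labels fall into each ECOOM major field."""
    return Counter(major_field(c) for c in wos_categories)


print(roll_up(["Physics, Applied", "Physics, Condensed Matter",
               "Biochemistry & Molecular Biology"]))
```

Keeping the two levels as separate tables mirrors the hierarchy described above: the same sub-discipline table can serve both the reference analysis and the affiliation vocabulary.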

All subject-related feature words were retrieved from the 351,822 affiliation names following the framework outlined above. Through several rounds of machine-based and manual processing, each affiliation was matched to ECOOM’s major fields. Some adjustments to the ECOOM system were required to match all records, as shown in Table 1.

As a result, ECOOM’s 16 major disciplines were reduced to a total of 13 major disciplines: (1) Computer Science & Information Technology; (2) Agriculture & Environment; (3) Biology; (4) Medicine; (5) Chemistry; (6) Engineering; (7) Geosciences & Space Sciences; (8) Mathematics; (9) Psychology; (10) Physics; (11) Arts & Humanities; (12) Social Sciences (General); and (13) Social Sciences (Economics & Management).

Some brief notes about the adjustments made to ECOOM’s 16 major disciplines follow:

1. “Multidisciplinary Sciences” was excluded. Because our main research question concerns interdisciplinarity in research, each affiliation needed to be classified into one or more specific disciplines, so the field of “Multidisciplinary Sciences” is not applicable to this study.

2. The two medicine fields were merged into a single discipline, “Medicine”, and the two fields “Biology” and “Biosciences” were merged into a single discipline, “Biology”. These changes were made because many affiliations contain feature words that only broadly indicate their discipline, such as “Dept Med” and “Dept Bio”.

3. Some interdisciplinary fields in ECOOM were divided into specific disciplines: “Biomedical Research” was divided into “Biology” and “Medicine”, and “Neuroscience & Behavior” into “Medicine” and “Psychology”. Accordingly, an affiliation name containing a biomedicine-related word was assigned to both “Biology” and “Medicine” in our classification system; that is, multiple assignments were allowed.

4. “Psychology” was added as an independent discipline. Psychological research has developed considerably in recent years, and psychology-related terms are common in affiliation names all over the world.

5. “Computer Science & Information Technology” was added as a new major discipline. With the rapid development of computer science, many relevant research institutions have been established worldwide, and feature words related to computer science and information technology appeared frequently in the affiliation names in our sample. To maintain a relatively even distribution of data across disciplines, we separated “Computer Science & Information Technology” from “Engineering” and “Social Sciences I (General, Regional, & Community Issues)”.

6. Social Sciences I and II were recast as two specific disciplines: “Social Sciences (Economics & Management)” and “Social Sciences (General)”. Again, this adjustment was made to maintain a relatively even distribution of data across disciplines.
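The six adjustments can be expressed as a mapping from ECOOM major fields (and, for computer science, from a sub-discipline) to the 13 affiliation disciplines, where a split field maps to two targets. The Python sketch below is a hypothetical reconstruction: the ECOOM field labels are paraphrased, the sub-discipline key for computer science is an assumption, the assignment of Social Sciences I and II to the two new social-science disciplines is our reading, and the real correspondence in Table 1 operates on the full concordance.

```python
# Hypothetical reconstruction of the six adjustments. ECOOM field labels are
# paraphrased; the computer-science sub-discipline key below is an assumption.
ECOOM_TO_13 = {
    "Agriculture & Environment": ["Agriculture & Environment"],
    "Biology": ["Biology"],
    "Biosciences": ["Biology"],                              # adjustment 2: merged
    "Biomedical Research": ["Biology", "Medicine"],          # adjustment 3: split
    "Clinical & Experimental Medicine I": ["Medicine"],      # adjustment 2: merged
    "Clinical & Experimental Medicine II": ["Medicine"],
    "Neuroscience & Behavior": ["Medicine", "Psychology"],   # adjustments 3 and 4
    "Chemistry": ["Chemistry"],
    "Physics": ["Physics"],
    "Geosciences & Space Sciences": ["Geosciences & Space Sciences"],
    "Engineering": ["Engineering"],
    "Mathematics": ["Mathematics"],
    "Social Sciences I": ["Social Sciences (General)"],                 # adjustment 6
    "Social Sciences II": ["Social Sciences (Economics & Management)"],
    "Arts & Humanities": ["Arts & Humanities"],
    "Multidisciplinary Sciences": [],                        # adjustment 1: excluded
}

# Adjustment 5 applied at sub-discipline level (hypothetical key), overriding the
# major field whether it is Engineering or Social Sciences I.
SUB_OVERRIDES = {
    "Computer science/information technology": ["Computer Science & Information Technology"],
}


def to_affiliation_disciplines(major, sub=None):
    """Return the affiliation discipline(s) for an ECOOM field (empty if excluded)."""
    if sub in SUB_OVERRIDES:
        return list(SUB_OVERRIDES[sub])
    return list(ECOOM_TO_13[major])


ALL_13 = sorted({d for targets in [*ECOOM_TO_13.values(), *SUB_OVERRIDES.values()]
                 for d in targets})
print(len(ALL_13))  # 13
```

Returning a list rather than a single label is what allows the multiple assignments described in adjustment 3.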

As previously mentioned, every major discipline and sub-discipline in the ECOOM scheme has a clear match to the WoS categories. In its 2016 edition, WoS assigns 252 categories to 12,000+ journals. The six newest categories, “Audiology & Speech-language Pathology”, “Cell & Tissue Engineering”, “Ergonomics”, “Green & Sustainable Science & Technology”, “Logic”, and “Nanoscience & Nanotechnology”, were only added in 2016 and are not included in ECOOM’s classification scheme. These categories were removed, along with “Multidisciplinary Sciences” as mentioned above, to arrive at a final list of 246 WoS categories. These 246 WoS categories were mapped to the 16 major ECOOM fields to help classify the affiliations into our 13 disciplines, following the procedure explained in the next section.

Assigning affiliations to their corresponding discipline(s)

Discipline-specific lexicons for the 246 WoS categories were used to link the discipline feature words retrieved from affiliation names to fields of research. All feature words were carefully checked through a combination of manual and machine-based work to build a vocabulary of feature words for each major discipline. The resulting vocabulary was used to assign the affiliations to one or more of the 13 disciplines in our classification system, hereafter referred to as affiliation disciplines.

Throughout the process, we sought a balance between precision and recall; this trade-off was the primary criterion for deciding whether to include a given feature word in the vocabulary. After several rounds of manual and machine-based screening, 499 discipline feature words were collected from the affiliation names, and 351,822 affiliations were identified and classified into the 13 disciplines. Records without listed affiliations were excluded, leaving 157,710 articles for further analysis, i.e., 97.05% of the 162,501 records originally sampled.

The following basic rules were applied in the processing procedure:

1. Unknown words in affiliation names were classified manually using additional sources. Generally, the abbreviations of academic terms in affiliation names, such as Med, Bio, and Stat, made it easy to identify the discipline(s). However, some words, e.g., “Fis” and “Quim”, were harder to classify. In such cases, the author’s full affiliation in the original document was traced manually to the affiliation’s official website to confirm its field of research. In this example, “Fis” and “Quim” were identified as the Spanish abbreviations for “Física” and “Química”, i.e., Physics and Chemistry.

2. “Full matching” was applied where needed. For instance, “Dis” is an abbreviation for the medical feature word “Disease”, but the string “Dis” also occurs in “ADIS”, “Discovery”, etc. The same applies to other short words, such as “eye”, “soc”, “eth”, “art”, and “gene”. For these words, full matching was set to avoid noise.

3. Where an abbreviation has two different meanings, the full affiliation name was used. For example, when the feature word “Vet” appears alone in an affiliation name, it usually means Veterinary and is classified into “Biology”. However, within the phrases “Vet Affairs” or “Vet Admin”, it stands for “Veterans”, and the affiliation was generally found to be a veterans’ rehabilitation center, which was classified into “Medicine”. A manual check of the full affiliation name resolved each case.

4. For affiliations whose names consist only of abbreviations, specific information was obtained through a manual online search. For instance, “CIRAD” stands for the “Centre de Coopération Internationale en Recherche Agronomique pour le Développement”, in English the “French Agricultural Research Centre for International Development”. This organization falls within “Agriculture & Environment” in our classification scheme, so “CIRAD” was added to the thesaurus with full matching.

5. Affiliations with more than one discipline feature word in their names, such as “Dept Biochem” and “Div Biomed Stat & Informat”, were regarded as interdisciplinary affiliations and assigned to each corresponding discipline. For example, “Dept Biochem” was assigned to both “Biology” and “Chemistry”.
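Rules 2, 3, and 5 can be sketched as a small matcher. The vocabulary below is a hypothetical excerpt (the real one has 499 words and was curated partly by hand), and we assume case-insensitive matching in which most words match as substrings of a token, while words flagged for full matching must equal a whole token. Context phrases such as “Vet Affairs” are checked first and consumed so that “Vet” is not read a second time. The disciplines attached to “Stat” and “Informat” are illustrative choices, and the function name is hypothetical.

```python
import re

# Hypothetical vocabulary excerpt, lower-cased for case-insensitive matching.
SUBSTRING_WORDS = {  # may match anywhere inside a token
    "bio": {"Biology"},
    "chem": {"Chemistry"},
    "med": {"Medicine"},
    "informat": {"Computer Science & Information Technology"},
}
FULL_MATCH_WORDS = {  # rule 2: must equal a whole token
    "dis": {"Medicine"},
    "stat": {"Mathematics"},
    "vet": {"Biology"},
    "fis": {"Physics"},      # Spanish "Fisica"
    "quim": {"Chemistry"},   # Spanish "Quimica"
    "cirad": {"Agriculture & Environment"},
}
PHRASES = {  # rule 3: context-dependent readings, checked before single tokens
    "vet affairs": {"Medicine"},
    "vet admin": {"Medicine"},
}


def assign_disciplines(affiliation):
    """Return every discipline matched in an affiliation name (rule 5: multi-assignment)."""
    text = affiliation.lower()
    found = set()
    for phrase, disciplines in PHRASES.items():
        if phrase in text:
            found |= disciplines
            text = text.replace(phrase, " ")  # consume the phrase so "vet" is not re-read
    for token in re.findall(r"[a-z]+", text):
        if token in FULL_MATCH_WORDS:
            found |= FULL_MATCH_WORDS[token]
        for word, disciplines in SUBSTRING_WORDS.items():
            if word in token:
                found |= disciplines
    return found


print(sorted(assign_disciplines("Dept Biochem")))  # ['Biology', 'Chemistry']
```

Under these assumptions “Drug Discovery Unit” yields no discipline, because “dis” must match a whole token, whereas “Dept Infect Dis” is assigned to Medicine.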

Applying measurements for IDR

As noted in the literature review, the concept of IDR is both abstract and complex, which makes it difficult for a single indicator to fully represent or measure it. It is therefore not surprising that different indicators may deliver inconsistent and even contradictory results. When diversity is taken as a proxy for interdisciplinarity, however, lower diversity always indicates more specialized research, while greater diversity reflects more integrative research (Rousseau et al. 2018). Stirling (2007) incorporated disparity, as captured in bibliometric network structures, into a more precise concept of diversity, which led to the widely used Rao-Stirling diversity measure. Stirling (2007) and Leinster and Cobbold (2012) point out that diversity has three components, namely variety, balance, and disparity, and that neglecting any one of them may distort the final assessment of diversity.

Our operationalization of diversity is twofold: one component is based on author affiliations, the other on references. More precisely, each affiliation was assigned to one or more of the 13 disciplines (see Table 1), whereas each cited publication indexed in WoS was assigned to one or more WoS categories. In a second step, we assessed the diversity of the affiliations and the diversity of the cited literature for each individual paper.

As our specific diversity measure, we focus on the three components of diversity outlined in Stirling (2007): variety, balance, and disparity. Further, we applied the integrated diversity measure (ID) of Zhang et al. (2016). The ID measure, derived from ecology (Jost 2009; Leinster and Cobbold 2012), is a monotone transformation of the Rao-Stirling indicator (Rao 1982; Stirling 2007). Although the two indicators are highly correlated, ID has some advantages over Rao-Stirling. ID is scaled so that increases or decreases in diversity can be read as percentages: for example, an ID value 20% higher indicates 20% more diversity. Additionally, ID has more discriminative power than the Rao-Stirling indicator (Zhang et al. 2016). Concrete definitions follow.

Variety is defined as the number of non-empty categories assigned to system elements. In this study, the system elements were: (1) the affiliations in the bylines; and (2) the WoS-indexed references. Accordingly, the non-empty categories were: (1) the disciplines in the 13-discipline system (Table 1); and (2) the subject categories in WoS.

Balance is a function of the pattern of element assignments across categories, called “evenness” in ecology and “concentration” in economics. The Gini index is a well-known concentration measure: if G denotes the Gini concentration, then B = 1 − G is the corresponding measure of evenness or balance (Nijssen et al. 1998). In this study, we adopted B = 1 − G as the indicator of how evenly disciplines are distributed among the affiliations and among the cited references of a paper.
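Variety and balance can be computed from a paper’s list of category assignments as in the Python sketch below. We assume the common Gini formula over sorted values, \(G = \sum_i (2i - n - 1)x_i / (n \sum_i x_i)\), without a small-sample correction; the source does not state whether empty categories enter the index, so the hypothetical `n_categories` parameter makes that choice explicit.

```python
from collections import Counter


def variety(assignments):
    """Number of non-empty categories among a paper's assigned elements."""
    return len(set(assignments))


def gini(values):
    """Gini concentration of non-negative values (0 means perfectly even)."""
    xs = sorted(values)
    n, total = len(xs), sum(xs)
    if n == 0 or total == 0:
        raise ValueError("need at least one positive value")
    weighted = sum((2 * i - n - 1) * x for i, x in enumerate(xs, start=1))
    return weighted / (n * total)


def balance(assignments, n_categories=None):
    """B = 1 - G over category counts.

    By default only non-empty categories enter the Gini index, so a paper with a
    single category gets B = 1; passing n_categories pads empty categories with zeros.
    """
    counts = list(Counter(assignments).values())
    if n_categories is not None:
        if n_categories < len(counts):
            raise ValueError("n_categories is smaller than the observed variety")
        counts += [0] * (n_categories - len(counts))
    return 1 - gini(counts)


# Hypothetical paper: one Biology affiliation and three Medicine affiliations.
paper = ["Biology", "Medicine", "Medicine", "Medicine"]
print(variety(paper), balance(paper))  # 2 0.75
```

Padding with empty categories lowers balance sharply (to 0.375 for the same paper when four categories are counted), so the convention should be fixed before comparing papers.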

Disparity refers to the manner and degree to which things are distinguished; it is the antithesis of similarity:

\[ d_{ij} = 1 - s_{ij} \qquad (1) \]

where \(s_{ij}\) is the Salton similarity between subject categories i and j. In calculating the diversity of a reference list, \(s_{ij}\) was based on the cross-citation similarity matrix of the WoS subject categories for the period 1991–2015 (Footnote 3). In calculating the diversity of affiliations, \(s_{ij}\) was derived from a cross-citation similarity matrix of the 13 affiliation disciplines, aggregated from the WoS subject cross-citation matrix according to the hierarchical structure of the ECOOM classification and the correspondence shown in Table 1. By definition, \(d_{ii} = 0\) for all i. We then calculated, separately for each publication, the average disparity among its references and among its affiliations.
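Formula (1) and the per-paper average disparity can be sketched in Python as follows. Two points are assumptions not stated in the source: the Salton similarity is taken as the cosine between the rows (citing profiles) of a cross-citation count matrix, and the average is taken over all pairs of distinct categories present in a paper. The aggregation of the WoS matrix to the 13 affiliation disciplines is not shown, and all function names are hypothetical.

```python
import math
from itertools import combinations


def salton(u, v):
    """Salton (cosine) similarity of two citation-count vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0  # a category with no citations counts as dissimilar


def disparity_matrix(cross_citations):
    """Formula (1): d_ij = 1 - s_ij, with d_ii = 0.

    Rows are taken as the citing profiles of the categories (an assumption).
    """
    n = len(cross_citations)
    return [
        [0.0 if i == j else 1.0 - salton(cross_citations[i], cross_citations[j])
         for j in range(n)]
        for i in range(n)
    ]


def average_disparity(categories, d):
    """Mean d_ij over all pairs of distinct categories present in one paper."""
    present = sorted(set(categories))
    pairs = list(combinations(present, 2))
    if not pairs:
        return 0.0  # a single category has no pairwise disparity
    return sum(d[i][j] for i, j in pairs) / len(pairs)


# Toy 3-category matrix: categories 0 and 2 cite identically, category 1 differently.
toy = [[4, 0, 0], [0, 4, 0], [4, 0, 0]]
d = disparity_matrix(toy)
print(average_disparity([0, 1, 2], d))  # (1 + 0 + 1) / 3
```

Categories are indexed by integers here so that the same matrix can be reused across papers.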

The ID measure, which combines all three of the above components (Zhang et al. 2016), is defined as

\[ ID = \frac{1}{1 - \sum_{i,j} d_{ij}\, p_{i}\, p_{j}} \]

where \(p_{i} = x_{i} / X\), \(X = \sum_{i} x_{i}\), and \(x_{i}\) is the number of elements (affiliations or references) assigned to category i; \(d_{ij}\) is the disparity between subject categories i and j, obtained from formula (1). Figure 1 outlines each of the data processing methods used.
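The ID formula can be sketched in Python directly from the definitions above. The denominator term is the Rao-Stirling sum \(\sum_{i,j} d_{ij} p_i p_j\), so ID grows monotonically with Rao-Stirling. As a sanity check, when all categories are maximally dissimilar (\(d_{ij} = 1\) for \(i \neq j\)), ID reduces to the inverse Simpson index \(1/\sum_i p_i^2\), i.e., an effective number of categories. Categories are assumed to be integer indices into the disparity matrix, and the function names are hypothetical.

```python
from collections import Counter


def proportions(assignments):
    """p_i = x_i / X, where x_i counts the elements assigned to category i."""
    counts = Counter(assignments)
    total = sum(counts.values())
    return {category: x / total for category, x in counts.items()}


def rao_stirling(p, d):
    """Rao-Stirling diversity: sum over i, j of d_ij * p_i * p_j (d_ii = 0)."""
    return sum(d[i][j] * p[i] * p[j] for i in p for j in p)


def integrated_diversity(p, d):
    """ID = 1 / (1 - Rao-Stirling), a monotone transformation of Rao-Stirling."""
    return 1.0 / (1.0 - rao_stirling(p, d))


# Four equally represented, maximally dissimilar categories.
d_max = [[0 if i == j else 1 for j in range(4)] for i in range(4)]
print(integrated_diversity(proportions([0, 1, 2, 3]), d_max))  # 4.0
```

Reading ID as an effective number of categories is what makes the percentage interpretation mentioned earlier possible.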

Results

Descriptive statistics of diversity measures in affiliations

The descriptive statistics for each of the author affiliation diversity measures are shown in Table 2. The mean number of affiliation disciplines (variety) per paper was 2.39; that is, on average, the affiliations of a paper spanned more than two of the 13 disciplines. The share of papers with more than one author was 99.13%, and the share of papers whose authors came from different affiliations was as high as 73.07%. Notably, the share of papers spanning more than one discipline was even higher, at 75.49%; however, this was mostly due to the multiple discipline assignments of affiliations such as “Dept Biochem”.

It is also noteworthy that the largest affiliation variety was 12, meaning that the co-authors’ affiliations for that paper spanned 12 of the 13 disciplines. The paper in question, titled “A collaboratively-derived Science-Policy research agenda” (Sutherland et al. 2012), was published in 2012 by 52 authors from 31 institutions in five countries. It identified key open questions on the relationship between science and policy to improve mutual understanding and effectiveness among those working at the intersection of these areas. The 52 participants were selected to represent a wide range of experience, including people from government, non-governmental organizations, academia, and industry. The only discipline not covered was Computer Science & Information Technology. Surprisingly, the paper does not show a similarly high diversity under the reference analysis method: it ranks in the top 0.02% by affiliation diversity but only in the top 74% by reference diversity. This observation supports the point made in the “Methods” section that different indicators may deliver inconsistent and even contradictory results.

Figure 2 further shows the distribution of individual articles by number of authors (Fig. 2a), number of affiliations (Fig. 2b), and variety of affiliation disciplines (Fig. 2c). In PloS One, five-author articles were the most common, and articles with one or two affiliations accounted for 55.17% of the dataset. As for the variety of disciplines in author affiliations, papers spanning two disciplines were the most common. All three distributions are right-skewed, with long right tails.

The relationship between the disciplinary diversity in affiliations and disciplinary diversity in references

Table 3 displays the Spearman correlations between the affiliation-based and reference-based diversity indicators. The correlations between each pair of corresponding indicators (in bold) are weak, and in some cases the indicators appear essentially unrelated.
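Spearman’s rank correlation is the Pearson correlation computed on ranks, with tied values receiving the mean of the ranks they occupy. The pure-Python sketch below is illustrative only. In practice, a statistics library routine such as SciPy’s `spearmanr` would normally be used, and the indicator values in the example are made up.

```python
import math
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks in ascending order; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the block while the next value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1  # mean of positions start+1 .. end+1
        for k in range(start, end + 1):
            ranks[order[k]] = mean_rank
        start = end + 1
    return ranks


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)  # undefined (division by zero) if either input is constant


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    return pearson(average_ranks(x), average_ranks(y))


variety_a = [1, 2, 2, 3, 5]        # made-up affiliation varieties
id_r = [4.1, 2.0, 6.3, 3.2, 5.0]   # made-up reference ID values
print(round(spearman(variety_a, id_r), 3))
```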

We further divided the entire dataset of 157,710 publications into three subpopulations:

I. Author affiliations assigned to a single discipline (single-disciplined affiliations): 38,662 publications (25% of the dataset);

II. Author affiliations assigned to multiple disciplines, with one discipline accounting for more than 60% (one dominant discipline): 50,167 publications (32%; see the note on the 60% threshold);

III. Author affiliations assigned to multiple disciplines, with no discipline exceeding a proportion of 60% (no dominant discipline): 68,881 publications (43%).
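The assignment rule above can be expressed as a short Python function. We assume the discipline shares are computed from one label per affiliation–discipline assignment, so an affiliation mapped to two disciplines contributes two labels. The exact counting scheme is not specified here, and the function name is hypothetical. Following the definitions above, a share strictly above 60% marks a dominant discipline.

```python
from collections import Counter
from typing import Iterable


def subpopulation(disciplines: Iterable[str], threshold: float = 0.6) -> str:
    """Return "I", "II" or "III" for a paper, given the discipline labels of its affiliations."""
    counts = Counter(disciplines)
    if not counts:
        raise ValueError("paper has no classified affiliations")
    if len(counts) == 1:
        return "I"  # single-disciplined affiliations
    top_share = max(counts.values()) / sum(counts.values())
    # II: one discipline above the threshold; III: no discipline above it.
    return "II" if top_share > threshold else "III"


print(subpopulation(["Medicine", "Medicine"]))             # I
print(subpopulation(["Medicine"] * 3 + ["Biology"]))       # II (75%)
print(subpopulation(["Medicine"] * 3 + ["Biology"] * 2))   # III (exactly 60%)
```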

We then assessed the reference-based diversity of each subpopulation. Figure 3 illustrates the distribution of reference-based ID values across the three subpopulations. At the lower end of the ID scale (left side of Fig. 3), the curve for papers with single-disciplined affiliations lies clearly above the other two curves. At ID values above 7, however, the curve for papers with no dominant discipline is the highest. In general, papers whose affiliations reflect multiple, more evenly distributed disciplines account for a larger proportion of high-ID papers than papers with affiliations in only one discipline. This is consistent with Abramo et al. (2018), who distinguished between single-author and multi-author publications (the latter with single vs. multiple disciplines). Although reference diversity generally increases from “single-disciplined affiliations” to “one-discipline dominating in multi-affiliations” and, finally, to “no-discipline dominating in multi-affiliations”, each subpopulation exhibits a range of reference-based diversity values. Notable exceptions occur at the individual paper level; these are discussed further in the “Schematic representation of a twofold perspective” section.

Zooming into the largest subpopulation (multi-affiliations with no dominant discipline), we found that both reference-based variety and ID increase monotonically as the variety of affiliation disciplines increases (see Table 4 for the average diversity values of the reference lists in subpopulation III). Again, however, there are exceptions among individual papers: some papers whose author affiliations span more than five disciplines show very low reference diversity, and vice versa. This motivates the analysis in the next section.
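A Table 4 style summary shows how a monotonic trend in group means can coexist with individual exceptions. The Python sketch below groups papers by affiliation variety and averages their reference-based diversity. The (variety, ID) pairs are invented for illustration, and “monotonic” is checked here as non-decreasing means.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def mean_by_variety(papers: List[Tuple[int, float]]) -> Dict[int, float]:
    """Average reference-based diversity for each value of affiliation variety."""
    groups: Dict[int, List[float]] = defaultdict(list)
    for variety, ref_diversity in papers:
        groups[variety].append(ref_diversity)
    return {v: sum(vals) / len(vals) for v, vals in sorted(groups.items())}


def is_non_decreasing(means: Dict[int, float]) -> bool:
    values = [means[v] for v in sorted(means)]
    return all(a <= b for a, b in zip(values, values[1:]))


# Invented data: the paper with variety 6 and ID 1.0 is an individual exception.
sample = [(2, 4.0), (2, 6.0), (3, 5.5), (6, 1.0), (6, 13.0)]
means = mean_by_variety(sample)
print(means, is_non_decreasing(means))
```

The group means rise even though one paper with six affiliation disciplines has the lowest reference diversity in the sample.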

Schematic representation of a twofold perspective

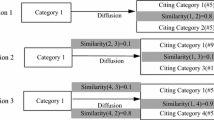

In this section, we explore knowledge integration from two perspectives: disciplinary diversity in affiliations and disciplinary diversity in references. Figure 4 provides a schematic representation of this twofold perspective, following Porter et al. (2007) and Rafols and Meyer (2010). Because this study examines individual publications and knowledge integration through the disciplines associated with author affiliations and references, the central node in each graph represents the focal publication. The affiliation discipline sets are on the left and the reference discipline sets on the right; each colored icon in the network represents a discipline. Four combinations are possible:

(i) Low affiliation disciplinary diversity–low reference disciplinary diversity (LDA–LDR) represents specialized disciplinary research, where all references are from the same or similar disciplines and the authors’ affiliations belong to the same or similar disciplines.

(ii) Low affiliation disciplinary diversity–high reference disciplinary diversity (LDA–HDR) indicates cases where the references span a variety of subjects but the author affiliations are within the same or similar disciplines.

(iii) High affiliation disciplinary diversity–low reference disciplinary diversity (HDA–LDR) represents specialized research, where all references are from the same or similar disciplines but the author affiliations fall within a variety of disciplines.

(iv) High affiliation disciplinary diversity–high reference disciplinary diversity (HDA–HDR) denotes cases where the references cover a variety of subjects and the authors’ affiliations span a variety of disciplines.

To populate this schematic model with our empirical data, we ranked all papers in descending order on each of four measures: two variety measures (variety_A and variety_R) and two integrated diversity measures (ID_A and ID_R). For each measure, the top half of all publications was labeled “high” and the bottom half “low”. By cross-matching the high and low groups of each affiliation measure with those of the corresponding reference measure, we identified representative papers for each combination, as shown in Fig. 4. The proportion of articles in each of the four combinations is shown in Table 5.
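The median split and cross-matching can be sketched in Python as follows. Two details are assumptions rather than documented choices: ties are broken by input order, and when the number of papers is odd, the middle paper is placed in the “low” group. The labels use ASCII hyphens in place of the en dashes used in the text.

```python
from collections import Counter
from typing import Dict, List, Sequence

COMBINATIONS = ("LDA-LDR", "LDA-HDR", "HDA-LDR", "HDA-HDR")


def high_low(values: Sequence[float]) -> List[str]:
    """Rank in descending order; label the top half "H" and the rest "L"."""
    n = len(values)
    # sorted() is stable even with reverse=True, so tied values keep input order.
    order = sorted(range(n), key=lambda i: values[i], reverse=True)
    labels = ["L"] * n
    for i in order[: n // 2]:
        labels[i] = "H"
    return labels


def combination_shares(aff: Sequence[float], ref: Sequence[float]) -> Dict[str, float]:
    """Share of papers in each affiliation/reference high-low combination."""
    pairs = zip(high_low(aff), high_low(ref))
    counts = Counter(f"{a}DA-{r}DR" for a, r in pairs)
    return {c: counts[c] / len(aff) for c in COMBINATIONS}


# Made-up values standing in for ID_A and ID_R of four papers.
id_a = [5.0, 1.0, 4.0, 2.0]
id_r = [9.0, 1.0, 2.0, 8.0]
print(combination_shares(id_a, id_r))
```

The same function applies to variety_A and variety_R. Because variety takes only a few integer values, many papers tie on it, so the tie-breaking rule may noticeably affect the variety-based shares.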

Table 5 shows that the “low–low” and “high–high” combinations occur much more frequently than the “low–high” or “high–low” combinations in terms of the variety of different disciplines reflected in the references and affiliations of each article. However, in terms of the ID measure, the distribution among the four combinations is relatively even. This observation deserves deeper exploration in the future.

The results in Table 5 indicate that authors from the same or similar affiliation disciplines can produce both specialized disciplinary research and interdisciplinary research, as measured by their reference lists. Similarly, authors from a variety of affiliation disciplines can produce either disciplinary or interdisciplinary research from the perspective of their cited references. In addition to the “HDA–LDR” example presented in the “Descriptive statistics of diversity measures in affiliations” section (Sutherland et al. 2012), the papers listed in Table 6 serve as representative examples of each of the four combinations in terms of ID values. Notably, the papers used to illustrate the LDA–LDR and LDA–HDR categories both have the lowest ID_A value in the entire dataset, yet they have distinctly different ID_R values. In the LDA–LDR example, the co-authors have several different affiliations, but all are related to medicine, and the paper focuses on medical issues, which is typical of the “LDA–LDR” category. In contrast, the LDA–HDR example examines the cross-disciplinary topic of “Stress and the Multiple-Role Woman” with authors from a single social science institution. It has high reference diversity, citing publications from a rich variety of subjects including psychology, sociology, neurosciences, psychiatry, gerontology, endocrinology and metabolism, behavioral sciences, biology, management, and economics. Again, this is typical of “LDA–HDR” papers. The HDA–HDR example explores the topic of “refining time-activity classification of human subjects using the global positioning system” and shows high diversity in both author affiliations and cited references. From a bibliometric perspective, “HDA–HDR” research may show stronger evidence of knowledge integration, so this category may deserve more attention from research managers and policymakers.

Discussion and conclusion

In recent years, increasing attention has been paid to the integration of different disciplines from the perspective of collaborators’ fields of study. In bibliometrics, the co-authors of an article carry a wealth of information: their research affiliations, disciplines, geographical distribution, and so on can all be used to investigate the patterns and regularities of interdisciplinary activity. This study explored the characteristics of scientific activity patterns from the perspective of co-author affiliations, with the aim of revealing new insights into interdisciplinary research.

We presented an explorative methodology for retrieving feature words to classify the disciplines reflected in affiliations, combining manual work with the thesaurus function of the Thomson Data Analyzer (TDA) text mining tool. Adapting the conceptual framework of Rafols and Meyer (2010), we investigated two aspects of interdisciplinarity: the disciplines of the authors’ listed affiliations and the subject categories appearing in their reference lists. In both cases, diversity was measured as integrated diversity.

Current policies often implicitly assume that IDR can readily be identified and traced, but this is far from true. Based on a comparison of the results of these two approaches to measuring interdisciplinarity, our study confirms the conclusion of Adams et al. (2016) that different methodologies and indicators can produce seriously inconsistent and even contradictory results. We wholeheartedly agree with those authors that a more sophisticated framework for indexing multiple aspects of interdisciplinarity in any unit of research, combined with expert review and interpretation, could be informative. However, whereas Adams et al. (2016) analyzed projects grouped into units, disciplines, and countries, we zoom in on individual papers and provide examples of the typical inconsistencies that arise from using different approaches. In our opinion, these findings indicate that there is no single preferred methodology for identifying interdisciplinarity, which is the question Adams et al. (2016) set out to explore.

The low correlation and inconsistent results between the two measurements observed in this study are not surprising. As Leydesdorff and Rafols (2011) state, different indicators may capture different understandings of such a multi-faceted concept as interdisciplinarity. A contradiction between two indicators does not mean that either is invalid. Rather than choosing between them, we summarize below some limitations of each of the two approaches.

Limitations of reference-based diversity measurement

Reference-based analysis is one of the most conventional analytical tools for IDR, but it is far from optimal. First, because there is no subject classification system at the level of individual papers, one has to assume that each cited paper inherits the subject category (or categories) of the journal in which it was published, as recorded in a bibliometric database (Abramo et al. 2012). Second, scholars often choose Clarivate’s Web of Science (WoS) as their bibliometric database, but WoS has some inherent limitations: its data are much richer and more fine-grained in the sciences than in the social sciences and humanities, many journals are assigned to multiple categories, and books, book chapters, and regional non-English journals are underrepresented. Relying on WoS data can therefore lead to biased results, especially in the social sciences and humanities. Third, authors sometimes “tune” their cited references for specific journals (Adams et al. 2016), which makes the reference list a less reliable data source for IDR analysis. Lastly, any reference-based analysis must take into account the question “why do authors cite?”. A large portion of references are merely “mentioned” in the background or introduction, which is not evidence of genuine knowledge integration at the core of the research. Without distinguishing the different uses of cited references, reference-based measurement appears unable to provide direct information about the interdisciplinary nature of the research itself.

Limitations of affiliation-based diversity measurement

The study of Abramo et al. (2012) had an exceptional advantage: each Italian academic must classify themselves in one, and only one, of 370 scientific fields, which are grouped into 14 disciplinary areas. Because this classification is specific to the Italian academic system, however, it is difficult to generalize the methodology applied in Abramo et al. (2012). For large-scale empirical studies, a more practical way of determining an author’s field is therefore through their departmental or institutional affiliations. Although our affiliation-based approach avoids the problem of assigning disciplines to researchers who no longer work in the field in which they took their degree, it has its own difficulties and challenges. For example, many abbreviations in affiliation names are ambiguous and could represent several different feature words; determining which feature word applies requires laborious searching for additional information. Further, some affiliations are intrinsically interdisciplinary, which may not be reflected in their names. Finally, determining interdisciplinary attributes solely from authors’ affiliations may lead to biased conclusions, since affiliations may reflect organizational units rather than purely disciplinary ones.

Perspectives on future research

In future research, we will seek to refine our methods to overcome the above limitations. In this regard, we will explore bottom-up approaches based on article clusters derived from co-citation, co-word analysis, bibliographic coupling, and network analysis to better capture the disciplinary features of both affiliations and individual authors. More complete information may allow a more precise disciplinary portrait of the co-authors. We also intend to extend these methods to larger bibliometric datasets for deeper analysis and verification.

We strongly agree with Wagner et al. (2011) that much development is needed before metrics can adequately reflect the actual phenomenon of interdisciplinarity. We further stress that bibliometric methods, whatever the measurement and from whatever perspective, can only identify “candidates” for further examination of IDR. To assess the actual level of interdisciplinarity in research or its novelty, a combination of expert peer review and deeper interpretation of content is vital.

Notes

For example, at the level of consolidated scientific research programmes, US science and technology funding agencies are increasingly supporting large-scale, centralized, grant-based research projects that span multiple disciplines and institutions (Corley et al. 2006). European programs are promoting collaboration and creating regional and international scientific networks of different generations of researchers to spread skills (European Commission 2012). Further, leading research institutes are demanding innovative solutions that combine knowledge from different scientific disciplines (National Academies 2004).

In the following part, affiliation(s) always refers to the departmental affiliation(s) listed by an author.

The cross-citation matrix of all SCs (1991–2015) was constructed by Lin Zhang based on an in-house database of the Centre for R&D Monitoring (ECOOM), Belgium.

We experimented with several thresholds before settling on 60%. Compared with other thresholds, for instance 70% or 80%, the 60% threshold yields a much more balanced distribution of publications among the three subsets.

References

Abramo, G., D’Angelo, C. A., & Di Costa, F. (2012). Identifying interdisciplinarity through the disciplinary classification of coauthors of scientific publications. Journal of the American Society for Information Science and Technology, 63(11), 2206–2222.

Abramo, G., D’Angelo, C. A., & Di Costa, F. (2017). Do interdisciplinary research teams deliver higher gains to science? Scientometrics, 111(1), 317–336.

Abramo, G., D’Angelo, C. A., & Zhang, L. (2018). A comparison of two approaches for measuring interdisciplinary research output: The disciplinary diversity of authors vs the disciplinary diversity of the reference list. Journal of Informetrics. (under review).

Adams, J., Loach, T., & Szomszor, M. (2016). Interdisciplinary research: Methodologies for identification and assessment. Digital Research Reports. London: Digital Science.

Choi, B. C., & Pak, A. W. (2006). Multidisciplinarity, interdisciplinarity and transdisciplinarity in health research, services, education and policy: 1. Definitions, objectives, and evidence of effectiveness. Clinical and Investigative Medicine. Medecine Clinique et Experimentale, 29(6), 351–364.

Corley, E. A., Boardman, P. C., & Bozeman, B. (2006). Design and the management of multi-institutional research collaborations: Theoretical implications from two case studies. Research Policy, 35(7), 975–993.

Dogan, M., & Pahre, R. (1990). Creative marginality: Innovation at the intersections of social sciences. Boulder: Westview Press.

European Commission. (2012). Enhancing and focusing EU international cooperation in research and innovation: A strategic approach. Brussels, COM (2012) 497 final. Retrieved April 24, 2018, from http://ec.europa.eu/research/iscp/pdf/policy/com_2012_497_communication_from_commission_to_inst_en.pdf#view=fit&pagemode=none.

Garfield, E., Malin, M., & Small, H. (1978). Citation data as science indicators. In Y. Elkana, J. Lederberg, R. K. Merton, A. Thackray, & H. Zuckerman (Eds.), Toward a metric of science: The advent of science indicators (pp. 179–208). New York: Wiley.

Glänzel, W., & Schubert, A. (2003). A new classification scheme of science fields and subfields designed for scientometric evaluation purposes. Scientometrics, 56(3), 357–367.

Hu, M., Li, W., Li, L., Houston, D., & Wu, J. (2016). Refining time-activity classification of human subjects using the global positioning system. PLoS ONE, 11(2), e0148875.

Huang, Y., Zhang, Y., Youtie, J., Porter, A. L., & Wang, X. (2016). How does national scientific funding support emerging interdisciplinary research: A comparison study of big data research in the US and China. PLoS ONE, 11(5), e0154509.

Huutoniemi, K., Klein, J. T., Bruun, H., & Hukkinen, J. (2010). Analyzing interdisciplinarity: Typology and indicators. Research Policy, 39(1), 79–88.

Jost, L. (2009). Mismeasuring biological diversity: Response to Hoffmann and Hoffmann (2008). Ecological Economics, 68(4), 925–928.

Klein, J. T. (2008). Evaluation of interdisciplinary and transdisciplinary research: A literature review. American Journal of Preventive Medicine, 35(2), 116–123.

Larivière, V., & Gingras, Y. (2010). On the relationship between interdisciplinarity and scientific impact. Journal of the American Society for Information Science and Technology, 61(1), 126–131.

Ledford, H. (2015). How to solve the world’s biggest problems. Nature, 525(7569), 308–311.

Leinster, T., & Cobbold, C. A. (2012). Measuring diversity: The importance of species similarity. Ecology, 93(3), 477–489.

Leydesdorff, L., & Rafols, I. (2011). Indicators of the interdisciplinarity of journals: Diversity, centrality, and citations. Journal of Informetrics, 5(1), 87–100.

Leydesdorff, L., Wagner, C. S., & Bornmann, L. (2018). Betweenness and diversity in journal citation networks as measures of interdisciplinarity—A tribute to Eugene Garfield. Scientometrics, 114(2), 567–592.

Maas, F., Spoorenberg, A., Brouwer, E., Bos, R., Efde, M., Chaudhry, R. N., et al. (2015). Spinal radiographic progression in patients with ankylosing spondylitis treated with TNF-alpha blocking therapy: A prospective longitudinal observational cohort study. PLoS ONE, 10(4), e0122693.

Morillo, F., Bordons, M., & Gómez, I. (2003). Interdisciplinarity in science: A tentative typology of disciplines and research areas. Journal of the American Society for Information Science and Technology, 54(13), 1237–1249.

Mugabushaka, A. M., Kyriakou, A., & Papazoglou, T. (2016). Bibliometric indicators of interdisciplinarity: the potential of the Leinster–Cobbold diversity indices to study disciplinary diversity. Scientometrics, 107(2), 593–607.

National Academies Committee on Facilitating Interdisciplinary Research, Committee on Science, Engineering and Public Policy. (2004). Facilitating interdisciplinary research. Washington, DC: National Academies Press.

National Science Foundation. (2004). Retrieved April 26, 2018, from https://www.nsf.gov/od/oia/additional_resources/interdisciplinary_research/definition.jsp.

Nijssen, D., Rousseau, R., & Hecke, P. V. (1998). The Lorenz curve: A graphical representation of evenness. Coenoses, 13(1), 33–38.

Porter, A. L., Cohen, A. S., Roessner, J. D., & Perreault, M. (2007). Measuring researcher interdisciplinarity. Scientometrics, 72(1), 117–147.

Porter, A. L., & Rafols, I. (2009). Is science becoming more interdisciplinary? Measuring and mapping six research fields over time. Scientometrics, 81(3), 719–745.

Porter, A. L., Roessner, D. J., & Heberger, A. E. (2008). How interdisciplinary is a given body of research? Research Evaluation, 17(4), 273–282.

Rafols, I., & Meyer, M. (2010). Diversity and network coherence as indicators of interdisciplinarity: Case studies in bionanoscience. Scientometrics, 82(2), 263–287.

Rao, C. R. (1982). Diversity and dissimilarity coefficients: A unified approach. Theoretical Population Biology, 21(1), 24–43.

Rijnsoever, F. J. V., & Hessels, L. K. (2011). Factors associated with disciplinary and interdisciplinary research collaboration. Research Policy, 40(3), 463–472.

Rinia, E. J., Leeuwen, T. N. V., & Raan, A. F. J. V. (2002). Impact measures of interdisciplinary research in physics. Scientometrics, 53(2), 241–248.

Rousseau, R., Zhang, L., & Hu, X. J. (2018). Knowledge Integration: Its meaning and measurement. In W. Glänzel, H. Moed, U. Schmoch, & M. Thelwall (Eds.), Springer handbook of science and technology indicators. Berlin: Springer.

Schmickl, C., & Kieser, A. (2008). How much do specialists have to learn from each other when they jointly develop radical product innovations? Research Policy, 37(3), 473–491.

Schummer, J. (2004). Multidisciplinarity, interdisciplinarity, and patterns of research collaboration in nanoscience and nanotechnology. Scientometrics, 59(3), 425–465.

Stirling, A. (2007). A general framework for analysing diversity in science, technology and society. Journal of the Royal Society, Interface, 4(15), 707.

Stokols, D., Fuqua, J., Gress, J., Harvey, R., Phillips, K., Baezconde-Garbanati, L., et al. (2003). Evaluating transdisciplinary science. Nicotine & Tobacco Research, 5(Suppl_1), S21–S39.

Sumra, M. K., & Schillaci, M. A. (2015). Stress and the multiple-role woman: Taking a closer look at the “Superwoman”. PLoS ONE, 10(3), e0120952.

Suresh, S. (2012). Global challenges need global solutions. Nature, 490, 337–338.

Sutherland, W. J., Bellingan, L., Bellingham, J. R., Blackstock, J. J., Bloomfield, R. M., Bravo, M., et al. (2012). A collaboratively-derived science-policy research agenda. PLoS ONE, 7(3), e31824.

Wagner, C. S., Roessner, J. D., Bobb, K., Klein, J. T., Boyack, K. W., Keyton, J., et al. (2011). Approaches to understanding and measuring interdisciplinary scientific research (IDR): A review of the literature. Journal of Informetrics, 5(1), 14–26.

Wang, J., & Shapira, P. (2015). Is there a relationship between research sponsorship and publication impact? An analysis of funding acknowledgments in nanotechnology papers. PLoS ONE, 10(2), 555–582.

Wang, J., Thijs, B., & Glänzel, W. (2015). Interdisciplinarity and impact: Distinct effects of variety, balance, and disparity. PLoS ONE, 10(5), e0127298.

Zhang, L., Rousseau, R., & Glänzel, W. (2016). Diversity of references as an indicator of the interdisciplinarity of journals: Taking similarity between subject fields into account. Journal of the Association for Information Science and Technology, 67(5), 1257–1265.

Zhang, L., Sun, B., & Huang, Y. (2018). Interdisciplinarity measurement based on interdisciplinary collaborations: A case study on highly cited researchers of ESI social sciences. Journal of the China Society for Scientific and Technical Information, 37(3), 231–242 (in Chinese).

Zhang, L., Sun, B., Huang, Y., & Chen, L. X. (2017). Interdisciplinarity and collaboration: On the relationship between disciplinary diversity in the references and in the departmental affiliations. In Proceedings of ISSI 2017—The 16th international conference on scientometrics and informetrics (pp. 1064–1075). Wuhan University, China.

Zhou, L., Liu, L., Liu, X., Chen, P., Liu, L., Zhang, Y., et al. (2014). Systematic review and meta-analysis of the clinical efficacy and adverse effects of Chinese herbal decoction for the treatment of gout. PLoS ONE, 9(1), e85008.

Acknowledgements

The present study is an extended version of an article presented at the 16th International Conference on Scientometrics and Informetrics, Wuhan (China), 16–20 October 2017 (Zhang et al. 2017). The authors would like to acknowledge support from the National Natural Science Foundation of China (Grant No. 71573085), the Innovation Talents of Science and Technology in HeNan Province (Grant Nos. 16HASTIT038, 2015GGJS-108), and the Excellent Scholarship in Social Science in HeNan Province (No. 2018-YXXZ-10). We thank Giovanni Abramo and Ronald Rousseau for inspiring discussions.


About this article

Cite this article

Zhang, L., Sun, B., Chinchilla-Rodríguez, Z. et al. Interdisciplinarity and collaboration: on the relationship between disciplinary diversity in departmental affiliations and reference lists. Scientometrics 117, 271–291 (2018). https://doi.org/10.1007/s11192-018-2853-0


DOI: https://doi.org/10.1007/s11192-018-2853-0