Abstract

Citation count is an important indicator for measuring research outputs. There have been numerous studies that have investigated factors affecting citation counts from the perspectives of cited papers and citing papers. In this paper, we focused specifically on citing papers and explored citations sourced from prestigious affiliations in the computer science discipline. The QS World University Rankings was employed to identify prestigious citations, named QS citations. We used the Microsoft Academic Graph, a massive scholarly dataset, and conducted different kinds of analysis between papers with QS citations and those without QS citations. We discovered that papers with QS citations are generally associated with higher total citation counts than those without QS citations. We extended the analysis to authors and journals, and the results indicated that when authors or journals have higher proportions of papers with QS citations, they are usually associated with higher values of the H-index or the Journal Impact Factor respectively. Additionally, papers with QS citations are also associated with a higher Altmetric Attention Score and a higher number of specific types of altmetrics such as tweet counts.

Introduction

The evaluation of research outputs is a highly investigated topic in the scholarly community, entailing many important aspects, ranging from the discovery of impactful scientific work, the determination of tenure promotion, to the allocation of research resources. With a dramatic boost in the number of research publications in the past years, an increasing number of impact indicators have been developed to facilitate the process of research evaluation. Citation counts, however, have become one of the most widely acknowledged metrics to assess research quality, in spite of controversial drawbacks (MacRoberts and MacRoberts 1989; Thelwall 2016; Leydesdorff and Shin 2011). Most other recognized indicators, such as the H-index for researchers and the Journal Impact Factor (JIF) for journals (Hirsch 2005; Garfield 2006), are also intrinsically based on citation counts. Thus, many studies have been conducted on citation analysis for understanding the factors that affect citation counts.

A recent literature review summarized 28 factors affecting the citation counts from 198 articles, and found that some factors such as the JIF and the number of authors, may play very important roles in citation performance (Tahamtan et al. 2016). Slyder et al. (2011) made similar observations by examining the influence of a variety of factors, in which the seniority of authors and the JIF showed stronger impacts on citation counts. Some studies analysed highly cited papers from a bibliometric perspective and identified the characteristics of these papers, for example highly cited papers typically involved more collaborative research (Aksnes 2003; Ivanovic and Ho 2016). Other studies mainly investigated specific factors, such as institutional interaction and international cooperation (Yan and Sugimoto 2011; Rousseau and Ding 2016; Persson 2010; Khor and Yu 2016). Apart from the studies on the impact of different factors, some studies used statistical methods or machine learning algorithms to predict the future citation counts of papers based on various factors such as the number of authors and the number of references (Brizan et al. 2016; Yu et al. 2014). These studies have covered a wide range of factors to different extents, mostly from the perspective of the cited papers.

According to Tahamtan et al. (2016), a paper’s citation count could be affected by its citing papers, as the citing papers could increase the cited paper’s visibility and subsequently induce further citations. There are related studies on leveraging the impact of citing papers. At the paper level, Yan and Ding (2010) proposed a technique of weighted citation, employing the JIF of the citing journals to assign weights, and evaluating the cited papers through the total values of the weighted citations. At the author level, Ding and Cronin (2011) investigated weighted citations to distinguish between an author’s popularity and his prestige. While the popularity of an author was measured by the number of times an author was cited, the prestige of an author was measured by the number of times an author was cited by highly cited papers. At the journal level, popular journal-level impact indicators such as the JIF, the Immediacy Index, the Cited Half-life, and the Scimago Journal Rank have been extensively analysed and compared with one another (Leydesdorff 2009). Alternatives to these recognized indicators have also been proposed, for example the Integrated Impact Indicator (I3) which is based on percentile ranks (Leydesdorff 2012), and the Highly Cited Papers Index (HCP Index) which provides a measure of the level of international excellence of institutions (Tijssen et al. 2002). Additionally, a method of weighted PageRank was examined as an indicator of a journal’s prestige (Bollen et al. 2006). The Eigenfactor score (Bergstrom 2007; West et al. 2010) rates scientific journals through weighted citations, considering that citations from highly ranked journals make larger contributions than citations from lower ranked journals. These previous studies have investigated citing papers from a variety of perspectives.

In this work, we also focused on citing papers, based on the assertion that the weights of citations should be differentiated to reflect the prestige of citing papers (Yan and Ding 2010; Ding and Cronin 2011). However, in contrast to previous studies, we leveraged the affiliations of the citing papers to determine the prestige of citing papers. Specifically, we investigated the citations sourced from distinguished academic affiliations, as identified by the QS World University Rankings.Footnote 1 We labelled these prestigious citations as QS citations. This particular type of citation, to the best of our knowledge, has not yet been investigated in previous works. In this paper, we studied QS citations in the computer science discipline as a case study, with the aim of extending our analysis to other disciplines in future work. The main objective of this study was to understand the association between the QS citation and the overall bibliometric and altmetric performances of a paper. We employed the Microsoft Academic Graph (MAG), a massive scholarly dataset provided by Microsoft Research (Sinha et al. 2015), and conducted a series of experiments investigating QS citations at the paper, author and journal levels. The bibliometric indicators used in this study were the total citation count, the H-index and the JIF for papers, authors and journals respectively. In addition, since altmetrics has attracted more and more attention as an alternative measure of impact (Piwowar 2013; Costas et al. 2015; Bornmann 2015; Erdt et al. 2016), we also analysed the association between QS citations and the Altmetric Attention Score provided by Altmetric.com,Footnote 2 as well as other specific types of altmetrics, such as tweet counts. Resultantly, this paper proposes and tests three research hypotheses:

- Hypothesis 1 (H1) Papers with QS citations are associated with higher total citation counts than papers without QS citations.

- Hypothesis 2 (H2) Authors and journals having higher proportions of papers with QS citations over all their papers, are associated with higher H-index and Journal Impact Factor values respectively.

- Hypothesis 3 (H3) Papers with QS citations are associated with higher social media attention in terms of the Altmetric Attention Scores and other specific types of altmetrics than papers without QS citations.

This paper is organized in the following manner. In the “Methods” section, we present our concept and definition of QS citations, as well as a description of our dataset and experiments conducted for testing the three hypotheses. Results of the experiments are presented in the “Results” section. Final comments and discussions are made in a “Conclusion” section at the end of the paper.

Methods

In this section, we first introduce and define the QS citation and the other indicators used in the experiments. Next, we describe the process of data retrieval. Finally, we present the experiment designs.

Definition of QS citation

As the university plays a principal role in the development of academic research, our assertion is that citations sourced from prestigious universities are more important than citations sourced from less prestigious universities. Prestigious universities can be identified through university rankings. We considered three well-known university ranking systems, namely the Academic Ranking of World Universities (ARWU)Footnote 3 (Freyer 2014), the CWTS Leiden Rankings (CWTS)Footnote 4 (Waltman et al. 2012), and the QS World University Rankings (Dobrota et al. 2016). All of the three ranking systems cover broad research fields and offer overall rankings for global universities. On the one hand, ARWU and CWTS mainly focus on academic and research performance, and their rankings are generally based on bibliometric data. The QS ranking, on the other hand, is based more on reputational surveys, and thus it is not as much affected by bibliometric data (Dobrota et al. 2016). The CWTS ranking 2015 considers neither conference proceedings nor books (CWTS Leiden Ranking 2015 Methodology), thus it is not suitable for our study, since conference proceedings are very important publishing sources in the computer science discipline. The QS World University Rankings by Subject 2015—Computer Science and Information Systems lists 400 universities, while the ARWU-Computer Science 2015 lists 200 universities, and 165 of these are also on the QS ranking. Therefore, with an overlap of 82.5% with the ARWU ranking, and covering even more universities, the QS ranking was adopted in our work to identify the prestigious affiliations for the computer science discipline. We do not, however, conclude that the QS ranking system is better than other ranking systems. The evaluation of different university ranking systems is not the main focus of our paper, although there seems to be some similarities between the rankings, despite the different methodologies applied (Aguillo et al. 2010).

In the present work, we refer to universities listed in the QS ranking as QS universities. Conversely, universities not listed in the QS ranking are referred to as non-QS universities. We thus define QS papers as papers which are affiliated with QS universities, and non-QS papers as papers which are affiliated with non-QS universities. A paper is said to be affiliated with a university, when the author of the paper is affiliated with this university. When there are multiple authors on a paper, the source affiliation of the paper is determined by the most prestigious affiliation of the first author. Note that in our work, the author’s affiliation as stated on the paper is considered rather than the current affiliation of the author. If a paper is cited by a QS paper, then the citation is defined as a QS citation, otherwise, the citation is a non-QS citation. A paper with a QS citation is defined as a QS-Ci paper, otherwise as a non-QS-Ci paper as it has no QS citation. A QS paper is not necessarily a QS-Ci paper and vice versa. These relationships are illustrated in Fig. 1. In addition, we define QS50 citations as citations from the top 50 universities in the QS ranking, and a paper is referred to as a QS50-Ci paper if it has at least one QS50 citation. QS50-Ci papers are actually a subset of QS-Ci papers. For clarity, all the terms defined above are presented in Table 1.
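The definitions above can be illustrated with a short sketch. It is a minimal illustration under stated assumptions, not the implementation used in this study: the function name `classify_papers`, the dictionary layout, and the university names are hypothetical, and each paper is assumed to have already been assigned a single source affiliation.

```python
from typing import Dict, Iterable, Set, Tuple


def classify_papers(
    paper_affiliation: Dict[str, str],
    citations: Iterable[Tuple[str, str]],
    qs_universities: Set[str],
) -> Dict[str, Dict[str, bool]]:
    """Label every paper as QS / non-QS and as QS-Ci / non-QS-Ci.

    paper_affiliation: paper id -> source affiliation as stated on the paper
    citations: (citing paper id, cited paper id) pairs
    qs_universities: names of the universities listed in the ranking
    """
    labels = {
        pid: {"is_qs_paper": aff in qs_universities, "is_qs_ci": False}
        for pid, aff in paper_affiliation.items()
    }
    for citing, cited in citations:
        # A QS citation is a citation whose citing paper is a QS paper.
        if cited in labels and paper_affiliation.get(citing) in qs_universities:
            labels[cited]["is_qs_ci"] = True
    return labels


# Hypothetical toy data: "Univ A" is ranked, "Univ B" is not.
affiliations = {"p1": "Univ A", "p2": "Univ B", "p3": "Univ A"}
links = [("p1", "p2"), ("p2", "p3")]
labels = classify_papers(affiliations, links, {"Univ A"})
```

In this toy example, `p2` is a non-QS paper that is a QS-Ci paper, while `p3` is a QS paper that is a non-QS-Ci paper, which illustrates that the two properties are independent of each other.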

Data retrieval

We used the Microsoft Academic Graph (MAG), a massive scholarly dataset provided by Microsoft Research (Sinha et al. 2015). The dataset version used in this paper was released on 5th February 2016. We extracted all computer science related papers along with their authors, affiliations and citations. The QS universities were identified to recognize the QS citations. In addition, the Altmetric Attention Scores and other altmetrics of a set of papers were collected from Altmetric.com. Corresponding details are provided in the following sub-sections.

Computer science paper retrieval

In our work, the subjects to which the papers belong were determined by the publishing venues. Journal papers and conference papers were treated in the same way, since these two kinds of venues are both important in the computer science discipline. If a journal or a conference was considered to be in the area of computer science, the papers published in this journal or conference proceeding were regarded as computer science related papers. Hence, we collected the venue entries indexed in DBLP,Footnote 5 a bibliographic database which covers publications from major computer science journals and conference proceedings. Next, we made a mapping on titles of DBLP venue entries to the venue entries in MAG. A match indicated that the venue was recorded in DBLP and thus related to the computer science discipline. Other venue entries which had different record names in both sources required manual checking for further confirmation. Once all of the computer science venues in MAG were identified, the corresponding papers were retrieved accordingly. Finally, we acquired a total of 3,595,365 computer science papers published across 1216 journals and 1279 conference proceedings.

QS university identification

The QS World University Rankings by Subject 2015—Computer Science and Information SystemsFootnote 6 was employed in this work. The ranking presents the best 400 universities in the list. A mapping on university names between university entries in MAG and the entries in the QS ranking was required. However, it was found in MAG, that a single university could be represented by several affiliation entries. For example, there are entries “Harvard University” and “Harvard Medical School” in MAG, both of which should be mapped to “Harvard University” in the QS ranking. For some QS universities, too many related affiliation entries were found in MAG, thus we sorted the affiliation entries in MAG by the number of associated papers, and considered the entries with the most associated papers which jointly covered at least 90% of the total number of papers. For example, if a certain QS university has five relevant affiliation entries in MAG and the respective paper counts are 1000, 500, 100, 50 and 10, then only the first two affiliation entries are considered. Using this method, we obtained 435 affiliation entries in MAG mapped to the 400 QS universities.
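The 90% coverage rule described above can be sketched as follows. This is only an illustration of the selection step; the function name `select_affiliation_entries` and the entry names are hypothetical, and the paper counts are those of the worked example in the text.

```python
def select_affiliation_entries(paper_counts, coverage=0.9):
    """Return the largest affiliation entries that jointly cover `coverage` of all papers.

    paper_counts: affiliation entry name -> number of associated papers
    """
    total = sum(paper_counts.values())
    selected, covered = [], 0
    # Visit entries from the most to the least associated papers.
    for name, count in sorted(paper_counts.items(), key=lambda kv: kv[1], reverse=True):
        if covered >= coverage * total:
            break
        selected.append(name)
        covered += count
    return selected


# Worked example from the text: five entries with 1000, 500, 100, 50 and 10 papers.
counts = {"entry_1": 1000, "entry_2": 500, "entry_3": 100, "entry_4": 50, "entry_5": 10}
kept = select_affiliation_entries(counts)
```

Here the total is 1660 papers, so the threshold is 1494; the first two entries cover 1500 papers and the remaining three are dropped.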

Retrieval of altmetric attention scores

Altmetric.com tracks and analyses the online activities related to the research papers it indexes from a number of social media platforms such as Twitter and blogs (Costas et al. 2015). Based on the altmetrics (e.g., tweet counts, blog mentions) that Altmetric.com collects for the papers, an Altmetric Attention Score is assigned to each indexed paper. The Altmetric Attention Score is intended to measure the online influence of the paper and represents an aggregate value of the paper’s altmetrics. It reflects the popularity of the paper on the Internet. A higher Altmetric Attention Score indicates that the paper has received more social media attention (Erdt et al. 2016). Since Altmetric.com was founded in 2011, when the collection of altmetrics started in earnest, we retrieved Altmetric Attention Scores for the extracted computer science papers that were published in 2011 onward and which had at least one citation. On 18 December 2016, we finally obtained 66,000 papers for which Altmetric Attention Scores were available. The collected values of the Altmetric Attention Score ranged from 0 to 1858.568. Detailed altmetrics, such as tweet counts, were also fetched via the API. It is to be noted that the Altmetric Attention Score shown on the website is represented as an integer, but it could be in decimal form when fetched via the API provided by Altmetric.com.

Experiments

We conducted a series of experiments at paper, author, and journal levels to test the proposed hypotheses. Comparative experiments were designed in a manner that objects such as papers were allocated to different groups for observing the differences between groups, and thereby exploring the association between QS citations and the overall bibliometric and altmetric performance of the objects. Furthermore, a Mann–Whitney test was run after each comparison to verify the statistical significance of the result.
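For readers unfamiliar with the test, the sketch below shows one common way to compute a two-sided Mann–Whitney test in plain Python. It is an assumption-laden illustration rather than the procedure used in this study: it relies on the normal approximation with a tie correction and no continuity correction, which is reasonable for large groups, and the function name `mann_whitney_u` is hypothetical.

```python
import math


def mann_whitney_u(x, y):
    """Two-sided Mann-Whitney U test (normal approximation, tie-corrected).

    Returns (U statistic of sample x, approximate two-sided p-value).
    """
    n1, n2 = len(x), len(y)
    n = n1 + n2
    # Pool both samples, remembering which sample each value came from.
    combined = sorted([(v, 0) for v in x] + [(v, 1) for v in y])
    rank_sum_x = 0.0
    tie_term = 0
    i = 0
    while i < n:
        j = i
        while j < n and combined[j][0] == combined[i][0]:
            j += 1
        # Tied values share the mean of ranks i+1 .. j.
        avg_rank = (i + 1 + j) / 2
        t = j - i
        tie_term += t ** 3 - t
        rank_sum_x += avg_rank * sum(1 for k in range(i, j) if combined[k][1] == 0)
        i = j
    u1 = rank_sum_x - n1 * (n1 + 1) / 2
    mean_u = n1 * n2 / 2
    var_u = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_u == 0:
        return u1, 1.0  # all values identical: no evidence of a difference
    z = (u1 - mean_u) / math.sqrt(var_u)
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return u1, p_value


# Hypothetical citation counts for two small groups of papers.
u_stat, p_val = mann_whitney_u([8, 9, 10, 12, 15], [1, 2, 2, 3, 4])
```

In practice a statistics library would normally be used; the point of the sketch is only to show that the test compares rank sums and therefore suits skewed citation distributions.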

Experiments for H1

In order to test H1, we compared the citation counts between QS-Ci papers and non-QS-Ci papers. At first, we considered the latest recorded statuses of papers in the dataset and designed four scenarios to facilitate overall comparisons. Next, the age of the paper was also taken into account, so as to ensure that we compared papers of the same age. Finally, we studied the association between early citation counts and long-term citation counts for QS50-Ci papers, QS-Ci papers, and non-QS-Ci papers.

The four comparative scenarios are illustrated in Fig. 2. In each scenario, papers were divided into two different groups and compared by citation counts. Papers without citations were excluded from the comparisons. In Scenario A, papers with citations were divided into QS-Ci papers and non-QS-Ci papers. Scenario B is the counterpart of Scenario A, in which papers with citations were divided into QS papers and non-QS papers. While Scenario A utilized the affiliations of citing papers to group papers, the affiliations of cited papers were used in Scenario B. In Scenarios C and D, QS papers and non-QS papers were respectively further subdivided into QS-Ci papers and non-QS-Ci papers.

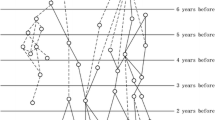

Comparisons in the previous four scenarios were static, but since papers were published in different years, we made another analysis based on the age of a paper. At the nth year after publication (n = 1 to 5), papers were classified into three groups: QS50-Ci papers, QS-Ci papers, and non-QS-Ci papers. For the group of non-QS-Ci papers, we also excluded papers without any citation. Citation performance, in terms of average citation counts and median of citation counts, was compared amongst the three groups of the same age.

In addition, since early citations lay a foundation for the citation performance of papers and can be used to predict future citation counts (Yu et al. 2014; Stegehuis et al. 2015), we investigated the association between early citation counts and long-term citation counts for the three groups of papers: QS50-Ci papers, QS-Ci papers, and non-QS-Ci papers. Citations received within 4 years after publication were considered as early citations. At the nth year after publication (n = 1 to 4), from the three groups of papers we selected the papers with the same range of citation counts. The ranges of early citations were 1 to 5 for the first year, 6 to 10 for the first 2 years, 11 to 15 for the first 3 years, and 16 to 20 for the first 4 years. The ranges were based on our assumption that papers obtaining this many citations at the particular age are potentially of high quality, and thus a comparison of long-term citation counts amongst the papers is reasonable. We thus compared 5-year citation counts and 10-year citation counts amongst the three groups of selected papers. Only papers published before 2005 were considered to ensure each paper had a 10-year citation window.
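The selection of papers with comparable early citation counts can be sketched as below. The sketch is illustrative only: the function names and the data layout are hypothetical, and it assumes that a citation made in calendar year y counts towards the first n years when y is at most n years after the publication year, which is one possible reading of the citation window.

```python
def citations_within(pub_year, citing_years, window):
    """Number of citations received no later than `window` years after publication."""
    return sum(1 for year in citing_years if 0 <= year - pub_year <= window)


def select_by_early_citations(papers, window, low, high):
    """Keep the papers whose early citation count lies in the range [low, high].

    papers: paper id -> (publication year, list of years of the citing papers)
    """
    return [
        pid
        for pid, (pub_year, citing_years) in papers.items()
        if low <= citations_within(pub_year, citing_years, window) <= high
    ]


# Hypothetical toy data for three papers published in 2000.
toy_papers = {
    "a": (2000, [2000, 2001, 2001, 2005]),
    "b": (2000, [2001] * 7),
    "c": (2000, [2003, 2004]),
}
# Papers with 1 to 5 citations in the first year, and their 10-year citation counts.
cohort = select_by_early_citations(toy_papers, window=1, low=1, high=5)
long_term = {pid: citations_within(*toy_papers[pid], window=10) for pid in cohort}
```

The same selection would be applied separately to each of the three groups of papers before their 5-year and 10-year citation counts are compared.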

Experiments for H2

To test H2, we extended our analysis to author and journal levels. However, we considered the proportion of QS-Ci papers rather than the absolute number of QS citations. For authors, since the H-index has been widely acknowledged as an author-level metric (Hirsch 2005; Malesios 2015), we explored its association to the proportion of QS-Ci papers in our experiments. For journals, the JIF could be regarded as the most frequently used metric (Garfield 2006), and thus the association between the JIF and the proportion of QS-Ci papers was investigated.

We considered the authors whom we had previously retrieved as having authored computer science papers, and ignored those who had published fewer than 5 papers or who had received zero citations. Thus, there were 206,271 authors considered in the experiments. We extracted their papers not only from the discipline of computer science, but from all disciplines. The H-index values for the authors were calculated first. Next, for each author, we calculated the proportion of QS-Ci papers over all papers of that author. For example, assuming Author X had published 10 papers which included 3 QS-Ci papers and 7 non-QS-Ci papers, then the proportion of QS-Ci papers for Author X is 30%. We refer to this proportion as the P-QS-Ci rate. The association between the H-index and the P-QS-Ci rate was explored by comparing the average P-QS-Ci rates amongst the authors grouped by different ranges of the H-index values. In addition, we also calculated the proportion of papers with at least one citation (including both QS and non-QS citations) for each author, and named this proportion the P-Ci rate. Average P-Ci rates were compared amongst the author groups. Pearson and Spearman correlations were calculated between the H-index values and both the P-QS-Ci rates and the P-Ci rates.
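The author-level quantities can be illustrated with the following sketch. The function names and the representation of a paper as a pair (total citations, QS citations) are assumptions made for illustration; the citation counts of Author X are invented so that the example reproduces the 30% rate from the text.

```python
def h_index(citation_counts):
    """Largest h such that h papers each have at least h citations."""
    h = 0
    for rank, count in enumerate(sorted(citation_counts, reverse=True), start=1):
        if count < rank:
            break
        h = rank
    return h


def p_qs_ci_rate(papers):
    """Share of an author's papers with at least one QS citation."""
    return sum(1 for _, qs_cites in papers if qs_cites > 0) / len(papers)


def p_ci_rate(papers):
    """Share of an author's papers with at least one citation of any kind."""
    return sum(1 for total, _ in papers if total > 0) / len(papers)


# Hypothetical Author X: 10 papers as (total citations, QS citations),
# of which 3 are QS-Ci papers and 7 have at least one citation.
author_x = [(10, 2), (8, 1), (5, 3), (4, 0), (3, 0),
            (1, 0), (1, 0), (0, 0), (0, 0), (0, 0)]
x_h = h_index([total for total, _ in author_x])
x_p_qs_ci = p_qs_ci_rate(author_x)
x_p_ci = p_ci_rate(author_x)
```

For this invented author the H-index is 4, the P-QS-Ci rate is 30%, and the P-Ci rate is 70%.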

Inspired by the H-index, the set of the most influential papers of an author in terms of citation counts can be identified as the Hirsch core, or H-core (Burrell 2007). Papers in this core set are referred to as H-core papers and conversely the remaining papers are referred to as non-H-core papers. We further investigated the H-core papers by looking into the proportion of QS-Ci papers over H-core papers, which was intended to reveal how many H-core papers had QS citations. For instance, if Author Y had 10 H-core papers, and 6 of these papers were QS-Ci papers, then the proportion of QS-Ci papers over H-core papers for Author Y is 60%. We compared the average proportions of QS-Ci papers between H-core papers and non-H-core papers.
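A minimal sketch of the H-core comparison is given below. It assumes that the H-core consists of the h most cited papers of an author, with ties at the boundary broken by sort order; the function names and the toy data are hypothetical.

```python
def split_h_core(papers):
    """Split an author's papers into (H-core papers, non-H-core papers).

    papers: list of (total citations, has_qs_citation) pairs
    """
    ranked = sorted(papers, key=lambda p: p[0], reverse=True)
    # With counts in descending order, the H-index is the number of
    # positions whose citation count is at least the rank.
    h = sum(1 for rank, (count, _) in enumerate(ranked, start=1) if count >= rank)
    return ranked[:h], ranked[h:]


def qs_ci_share(papers):
    """Proportion of QS-Ci papers in a set of papers, or None for an empty set."""
    if not papers:
        return None
    return sum(1 for _, has_qs in papers if has_qs) / len(papers)


# Hypothetical author with six papers and an H-index of 4.
author_papers = [(10, True), (8, True), (5, False), (4, True), (3, False), (1, False)]
core, non_core = split_h_core(author_papers)
core_share = qs_ci_share(core)
non_core_share = qs_ci_share(non_core)
```

Returning `None` for an empty set reflects the case of authors who have no non-H-core papers, who cannot contribute a proportion for that group.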

For the extracted computer science journals, we computed the 5-year JIF of 2010 by dividing the citation counts in 2010 by the total number of papers published in the five previous years, namely from 2005 to 2009.Footnote 7 Journals with fewer than 50 papers during the 5 years were excluded. As a result, a total of 872 journals were considered. For each journal, we acquired a set of papers that were published between 2005 and 2009. In this set, we calculated the proportion of papers that received QS citations in 2010, and named it the J-P-QS-Ci rate for that journal. For example, assume Journal X published 100 papers between 2005 and 2009. If 40 of these 100 papers received QS citations in 2010, then the J-P-QS-Ci rate for Journal X is 40%. Average J-P-QS-Ci rates were compared amongst the groups of journals in different ranges of JIF. Pearson and Spearman correlations were calculated between the 5-year JIF and the J-P-QS-Ci rates.
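The two journal-level quantities can be sketched as follows. The function names and the per-paper data layout are assumptions, and the citation counts for Journal X are invented; only the 100 papers and the 40 papers with QS citations come from the example in the text.

```python
def five_year_jif(papers):
    """Citations received in the census year divided by the number of papers.

    papers: list of (citations in census year, QS citations in census year),
    one pair per paper published in the five preceding years.
    """
    return sum(cites for cites, _ in papers) / len(papers)


def j_p_qs_ci_rate(papers):
    """Share of the journal's papers that received a QS citation in the census year."""
    return sum(1 for _, qs_cites in papers if qs_cites > 0) / len(papers)


# Hypothetical Journal X: 100 papers published between 2005 and 2009.
# In 2010, 40 papers receive 5 citations each (2 of them QS citations),
# and the other 60 papers receive 1 non-QS citation each.
journal_x = [(5, 2)] * 40 + [(1, 0)] * 60
x_jif = five_year_jif(journal_x)
x_rate = j_p_qs_ci_rate(journal_x)
```

With these invented counts, Journal X has a 5-year JIF of 2.6 and a J-P-QS-Ci rate of 40%. Note that the rate counts papers rather than citations, so a paper with many QS citations contributes no more than a paper with one.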

We list the terms and paper groups used in the experiments in Table 2.

Experiments for H3

For H3, the association between QS citations and altmetrics was investigated. The 66,000 papers, for which Altmetric Attention Scores and corresponding altmetrics such as tweet counts were collected, were classified as QS-Ci papers and non-QS-Ci papers for comparison. According to the fetched data, most of the papers had non-null records of CiteULike counts, Mendeley counts, and tweet counts, thus we also compared the two types of papers based on these three metrics. Furthermore, as papers with high Altmetric Attention Scores attracted more attention, we compared the counts of the two types of papers in high score ranges.

Results

In this section, the results of different experiments testing the three research hypotheses are presented. Mann–Whitney tests were conducted for all comparisons to verify their statistical significance.

Results for H1

The paper groups were first compared by citation counts in the four scenarios, shown in boxplots in Fig. 3. It is observed in Fig. 3a for Scenario A, that QS-Ci papers (paper group A1) have considerably higher total citation counts than non-QS-Ci papers (paper group A2). In this case, the median of the citation counts of QS-Ci papers is 8, four times as large as that of non-QS-Ci papers. This difference was found to be statistically significant (p < 0.01). In Fig. 3b for Scenario B, QS papers (paper group B1) have slightly higher total citation counts (with 5 as median) than non-QS papers (paper group B2) with a median of 4. While the difference between the two groups is relatively small, it was found to be statistically significant (p < 0.01). In the last two subfigures for Scenarios C and D (Fig. 3c, d), considerably higher total citation counts are consistently associated with QS-Ci papers, regardless of whether the papers were published by QS universities or not. The comparison results for both Scenarios C and D were found to be statistically significant (p < 0.01).

Fig. 3 Boxplot of citation counts of different groups of papers in the four scenarios A, B, C, and D. For Scenario A: A1 is the group of QS-Ci papers (N = 1,373,701), and A2 is the group of non-QS-Ci papers (N = 803,167); for Scenario B: B1 is the group of QS papers (N = 709,766), and B2 is the group of non-QS papers (N = 1,467,102); for Scenario C: C1 is the group of QS papers that are QS-Ci papers (N = 537,969), and C2 is the group of QS papers that are non-QS-Ci papers (N = 171,797); for Scenario D: D1 is the group of non-QS papers that are QS-Ci papers (N = 835,732), and D2 is the group of non-QS papers that are non-QS-Ci papers (N = 631,370)

Table 3 shows the results of comparisons of citation counts amongst the three groups of papers that are at the same age. It can be observed that QS50-Ci papers consistently have the highest average total citation counts, followed by QS-Ci papers, while non-QS-Ci papers have the lowest average total citation counts. The same pattern is found in the comparison of the median total citation counts. Mann–Whitney tests verified that statistical significance (p < 0.01) was achieved in the comparisons between QS50-Ci and QS-Ci papers, QS50-Ci and non-QS-Ci papers, and QS-Ci and non-QS-Ci papers for all age groups considered.

In Table 4, long-term citation counts were compared amongst the three groups of papers, given that at the same age, papers in the three groups have the same range of early citation counts. As seen in Table 4, although the papers have comparable early citation counts, QS50-Ci papers grow to become the most influential papers in terms of 10-year citation counts, followed by QS-Ci papers. Non-QS-Ci papers show the weakest performance, though their 10-year citation counts are in fact not so low. The difference in 10-year citation counts between QS50-Ci papers and QS-Ci papers is small, while the difference between QS-Ci papers and non-QS-Ci papers is relatively large. For all age groups considered, we ran Mann–Whitney tests over the comparisons on both 5-year and 10-year citation counts between QS50-Ci and QS-Ci papers, QS50-Ci and non-QS-Ci papers, and QS-Ci and non-QS-Ci papers. The test results indicated that the comparison of the 10-year citation counts between the QS50-Ci and QS-Ci papers at the age of 4 years after publication was significant at p < 0.05 (p = 0.03), while all other comparisons were statistically significant at p < 0.01.

According to the results presented above, in a variety of comparisons, papers with QS citations tend to be associated with higher total citation counts than papers without QS citations. We thereby accept hypothesis H1.

Results for H2

H2 is concerned with QS citations at author and journal levels. Regarding the association between the H-index and the P-QS-Ci rate, it is observed in Table 5 that the P-QS-Ci rate tends to increase alongside the H-index. This indicates that the P-QS-Ci rate shows a common growth trend with the H-index, and thus may reflect the level of the H-index which an author could achieve. This is supported by medium to high, statistically significant correlations (Pearson correlation r = 0.45, Spearman correlation rho = 0.6, p < 0.01) between the H-index and the P-QS-Ci rate. For comparison, the P-Ci rates are also presented in Table 5. The correlation coefficients between the H-index and the P-Ci rate are however lower (r = 0.39, rho = 0.53, p < 0.01). In addition, as seen in Table 5, for authors with H-index higher than 5, their P-Ci rates are quite close, while their P-QS-Ci rates are more distinguishable. While the comparisons between all other author groups are statistically significant at p < 0.01, the comparisons between authors with the highest H-index and authors with H-index values ranging from 26 to 30 (i.e., comparisons in the last two rows of Table 5) are statistically significant in terms of the average P-QS-Ci rate at p < 0.05 (p = 0.04), but not statistically significant for the average P-Ci rate (p = 0.05).

The associations amongst QS-Ci papers, H-core papers, and non-H-core papers are presented in Table 6, where the average proportions of QS-Ci papers in H-core papers are all higher than 80% for authors with H-index across different ranges, while the average proportions in non-H-core papers are much lower. This finding indicates that most H-core papers generally tend to be QS-Ci papers. It is to be noted that some authors did not have non-H-core papers, i.e., all of their papers had received enough citations to become H-core papers. Thus in Table 6, the number of authors with non-H-core papers is less than the number of authors with H-core papers for all ranges of the H-index.

For journals, the association between the JIF and the J-P-QS-Ci rate is presented in Table 7, where the ranges of the JIF were determined by quartiles. In Table 7, journals with a high JIF typically tend to have higher J-P-QS-Ci rates than journals with a low JIF. This comparison is found to be statistically significant according to Mann–Whitney tests. A higher JIF means more citations were received by the papers published in the journal, and a higher J-P-QS-Ci rate means more QS-Ci papers were published by the journal, so this is consistent with the results for H1 above, which demonstrate that QS-Ci papers are associated with higher citation counts. The correlation between the JIF and the J-P-QS-Ci rate is also strong, with a Pearson correlation coefficient of 0.84 (p < 0.01) and a Spearman correlation coefficient of 0.94 (p < 0.01).

Based on the experimental results at the author and journal levels presented above, we can conclude that authors and journals with high proportions of QS-Ci papers are generally associated with high values of the H-index and the JIF respectively, thereby accepting H2.

Results for H3

Even though the relationship between bibliometrics and altmetrics is still under discussion in the research community (Bornmann 2015; Erdt et al. 2016), we found QS-Ci papers to be associated with higher values of altmetrics. Table 8 shows the altmetric performance of QS-Ci papers and non-QS-Ci papers. In the last two rows, we exclude papers with zero Altmetric Attention Scores. In general, QS-Ci papers outperform non-QS-Ci papers in terms of the aggregate Altmetric Attention Scores and other specific types of altmetrics, especially for Mendeley counts, where the average value for QS-Ci papers (28.52) is roughly twice as high as that for non-QS-Ci papers (13.79). It is worth pointing out however, that when papers with zero Altmetric Attention Scores were included in the comparison, the differences in the average Altmetric Attention Score and average tweet count between QS-Ci papers and non-QS-Ci papers were not statistically significant (p > 0.05). When these papers were excluded, the differences in the average Altmetric Attention Score and average tweet count between QS-Ci papers and non-QS-Ci papers were statistically significant (p < 0.01). This could be due to the fact that 56.64% (37,383 papers) of all the 66,000 papers have zero Altmetric Attention Scores. In Table 9, we can see that there were considerably more QS-Ci papers than non-QS-Ci papers when looking at the subgroups with Altmetric Attention Scores between 1 and 5, 5 and 10, 10 and 20, and above 20. Therefore, we can accept H3, that papers with QS citations are associated with higher Altmetric Attention Scores than papers without QS citations.

Conclusion

In this paper, we studied citations sourced from top universities in the computer science discipline. Experimental results showed that QS-Ci papers were associated with higher total citation counts than non-QS-Ci papers, not only in the overall comparisons but also in the comparisons of papers of the same age. It was also found that papers with early QS citations (QS citations received within 4 years after publication) had considerably higher long-term citation counts (10-year citation counts) than papers without early QS citations, given that both types of papers had similar numbers of early citations. We also performed experiments which explored the association between QS citations and the H-index for authors, and between QS citations and the JIF for journals. The results showed that authors or journals with higher proportions of QS-Ci papers had higher values of the H-index or the JIF respectively, which is consistent with the comparative results at the paper level. In addition, the results revealed that QS-Ci papers gained more attention on social media than non-QS-Ci papers, as QS-Ci papers were found to be associated with higher values of the Altmetric Attention Score, as well as higher values of other specific altmetrics, such as tweet counts.

According to our experimental results, we can claim that prestigious citations could be effectively and efficiently identified through the source affiliations of the citing papers. Compared to other methods of measuring the prestige of citing papers proposed by previous studies, our approach has the following advantages. QS citations can be applied to both journal and conference papers, unlike the weighted citations (Yan and Ding 2010) which only consider journal papers. When the PageRank algorithm is employed (Bollen et al. 2006) to identify citations of more importance, it would require comparatively more computing resources to construct the paper network and calculate the PageRank scores. When important citations are identified based on whether the citing papers are highly cited papers (Ding and Cronin 2011), it takes time for citing papers to accumulate citations, but QS citations could be identified as soon as the citing papers are published.

There are limitations to this study. We consider a paper to be a QS-Ci paper even when it has only one QS citation. However, a paper with multiple QS citations could potentially be of better quality. In addition, once a non-QS-Ci paper receives its first QS citation, it turns into a QS-Ci paper. As a non-QS-Ci paper accumulates more citations, the likelihood that it becomes a QS-Ci paper increases as well. Consequently, the total citation count comparisons between QS-Ci papers and non-QS-Ci papers could be biased. Thus, a further detailed study on QS-Ci papers and the dynamic process of non-QS-Ci papers becoming QS-Ci papers is required in the future. However, other results, such as those on early and long-term citations, show that QS-Ci papers could potentially be more important. While the QS ranking was employed in this paper, it is reasonable to infer that similar experimental results would be obtained if prestigious citations were identified using other university rankings. Another limitation of the study is that university rankings do not cover research centres established by influential industrial companies. A further concern is the timestamp associated with the university rankings. If the rankings of different years are employed, there could be differences in the ranks of the universities, even though the universities listed remain relatively stable across the years. Furthermore, only the computer science discipline is considered in this study.
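
The possible bias noted above, where papers with more citations are more likely to be QS-Ci papers, can be illustrated with a simple model. The sketch below is a hypothetical simplification and not part of this study: it assumes that each citation is independently a QS citation with the same probability q, and the value q = 0.1 is chosen purely for illustration.

```python
def prob_at_least_one_qs_citation(n_citations, q):
    """Probability that a paper with n_citations has at least one QS citation.

    Assumes (hypothetically) that every citation is a QS citation
    independently with probability q, so the result is 1 - (1 - q)^n.
    """
    if n_citations < 0:
        raise ValueError("n_citations must be non-negative")
    if not 0 <= q <= 1:
        raise ValueError("q must lie in [0, 1]")
    return 1 - (1 - q) ** n_citations


# Hypothetical per-citation probability of a QS citation.
q = 0.1
for n in (1, 5, 20, 100):
    print(n, round(prob_at_least_one_qs_citation(n, q), 3))
```

Under this assumption the probability grows monotonically with the number of citations, so highly cited papers would almost always be classified as QS-Ci papers regardless of their quality. This is one reason why comparisons that control for the number of early citations may be more informative than overall comparisons.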

In future studies, we plan to extend the exploration of QS citations to other disciplines. We also plan an in-depth investigation into QS-Ci papers, in order to have a better understanding of the relationship between QS citations and total citation counts. Based on such a study, we could develop an effective model to predict the future citation performance of papers and authors. Additionally, we could address the issue of identifying prestigious affiliations by proposing a more general framework, which would consider a wider range of research affiliations and factor in the time effect.

Notes

http://www.topuniversities.com/university-rankings. Accessed 25 September 2017.

http://www.altmetric.com. Accessed 25 September 2017.

http://www.shanghairanking.com. Accessed 25 September 2017.

http://www.leidenranking.com. Accessed 25 September 2017.

http://dblp.uni-trier.de. Accessed 25 September 2017.

Calculation of 5-year impact factor: http://wokinfo.com/essays/impact-factor/. Accessed 25 September 2017.

References

Aguillo, I. F., Bar-Ilan, J., Levene, M., & Ortega, J. L. (2010). Comparing university rankings. Scientometrics, 85(1), 243–256.

Aksnes, D. W. (2003). Characteristics of highly cited papers. Research Evaluation, 12(3), 159–170.

Bergstrom, C. (2007). Eigenfactor: Measuring the value and prestige of scholarly journals. College & Research Libraries News, 68(5), 314–316.

Bollen, J., Rodriguez, M. A., & Van de Sompel, H. (2006). Journal status. Scientometrics, 69(3), 669–687.

Bornmann, L. (2015). Alternative metrics in scientometrics: A meta-analysis of research into three altmetrics. Scientometrics, 103(3), 1123–1144.

Brizan, D. G., Gallagher, K., Jahangir, A., & Brown, T. (2016). Predicting citation patterns: Defining and determining influence. Scientometrics, 108(1), 183–200.

Burrell, Q. L. (2007). On the H-index, the size of the Hirsch core and Jin’s A-index. Journal of Informetrics, 1(2), 170–177.

Costas, R., Zahedi, Z., & Wouters, P. (2015). Do “altmetrics” correlate with citations? Extensive comparison of altmetric indicators with citations from a multidisciplinary perspective. Journal of the Association for Information Science and Technology, 66(10), 2003–2019.

CWTS Leiden Ranking 2015 Methodology. (2015). Centre for Science and Technology Studies, Leiden University. Retrieved from http://www.leidenranking.com/Content/CWTS Leiden Ranking 2015.pdf.

Ding, Y., & Cronin, B. (2011). Popular and/or prestigious? Measures of scholarly esteem. Information Processing and Management, 47(1), 80–96.

Dobrota, M., Bulajic, M., Bornmann, L., et al. (2016). A new approach to the QS university ranking using the composite I-distance indicator: Uncertainty and sensitivity analyses. Journal of the Association for Information Science and Technology, 67(1), 200–211.

Erdt, M., Nagarajan, A., Sin, S. J., & Theng, Y. (2016). Altmetrics: An analysis of the state-of-the-art in measuring research impact on social media. Scientometrics. https://doi.org/10.1007/s11192-016-2077-0.

Freyer, L. (2014). Robust rankings review of multivariate assessments illustrated by the Shanghai rankings. Scientometrics. https://doi.org/10.1007/s11192-014-1313-8.

Garfield, E. (2006). The history and meaning of the journal impact factor. Journal of the American Medical Association, 295(1), 90–93.

Hirsch, J. E. (2005). An index to quantify an individual’s scientific research output. PNAS, 102(46), 16569–16572.

Ivanovic, D., & Ho, Y. (2016). Highly cited articles in the information science and library science category in Social Science Citation Index: A bibliometric analysis. Journal of Librarianship and Information Science, 48(1), 36–46.

Khor, K. A., & Yu, L. (2016). Influence of international co-authorship on the research citation impact of young universities. Scientometrics, 107(3), 1095–1110.

Leydesdorff, L. (2009). How are new citation-based journal indicators adding to the bibliometric toolbox? Journal of the American Society for Information Science and Technology, 60(7), 1327–1336.

Leydesdorff, L. (2012). Alternatives to the journal impact factor: I3 and the top-10% (or top-25%?) of the most-highly cited papers. Scientometrics, 92(2), 355–365.

Leydesdorff, L., & Shin, J. C. (2011). How to evaluate universities in terms of their relative citation impacts: Fractional counting of citations and the normalization of differences among disciplines. Journal of the American Society for Information Science and Technology, 62(6), 1146–1155.

MacRoberts, M. H., & MacRoberts, B. R. (1989). Problems of citation analysis: A critical review. Journal of the American Society for Information Science, 40(5), 342–349.

Malesios, C. (2015). Some variations on the standard theoretical models for the h-index: A comparative analysis. Journal of the Association for Information Science and Technology, 66(11), 2384–2388.

Persson, O. (2010). Are highly cited papers more international? Scientometrics. https://doi.org/10.1007/s11192-009-0007-0.

Piwowar, H. (2013). Value all research products. Nature. https://doi.org/10.1038/493159a.

Rousseau, R., & Ding, J. (2016). Does international collaboration yield a higher citation potential for US scientists publishing in highly visible interdisciplinary journals? Journal of the Association for Information Science and Technology, 67(4), 1009–1013.

Sinha, A., Shen, Z., Song, Y., et al. (2015). An overview of Microsoft Academic Service (MAS) and applications. In Proceedings of the 24th international conference on World Wide Web. https://doi.org/10.1145/2740908.2742839.

Slyder, J. B., Stein, B. R., Sams, B. S., et al. (2011). Citation pattern and lifespan: A comparison of discipline, institution, and individual. Scientometrics. https://doi.org/10.1007/s11192-011-0467-x.

Stegehuis, C., Litvak, N., & Waltman, L. (2015). Predicting the long-term citation impact of recent publications. Journal of Informetrics. https://doi.org/10.1016/j.joi.2015.06.005.

Tahamtan, I., Afshar, A. S., & Ahamdzadeh, K. (2016). Factors affecting number of citations: A comprehensive review of the literature. Scientometrics. https://doi.org/10.1007/s11192-016-1889-2.

Thelwall, M. (2016). Interpreting correlations between citation counts and other indicators. Scientometrics, 108(1), 337–347.

Tijssen, R., Visser, M., & van Leeuwen, T. (2002). Benchmarking international scientific excellence: Are highly cited research papers an appropriate frame of reference? Scientometrics, 54(3), 381–397.

Waltman, L., Calero-Medina, C., Kosten, J., et al. (2012). The Leiden ranking 2011/2012: Data collection, indicators, and interpretation. Journal of the American Society for Information Science and Technology, 63(12), 2419–2432.

West, J. D., Bergstrom, T. C., & Bergstrom, C. T. (2010). The Eigenfactor Metrics™: A network approach to assessing scholarly journals. College & Research Libraries, 71(3), 236–244.

Yan, E., & Ding, Y. (2010). Weighted citation: An indicator of an article’s prestige. Journal of the American Society for Information Science and Technology, 61(8), 1635–1643.

Yan, E., & Sugimoto, C. R. (2011). Institutional interactions: Exploring social, cognitive, and geographic relationships between institutions as demonstrated through citation networks. Journal of the American Society for Information Science and Technology, 62(8), 1498–1514.

Yu, T., Yu, G., Li, P., et al. (2014). Citation impact prediction for scientific papers using stepwise regression analysis. Scientometrics, 101(2), 1233–1252.

Acknowledgements

This research is supported by the National Research Foundation, Prime Minister’s Office, Singapore under its Science of Research, Innovation and Enterprise programme (SRIE Award No. NRF2014-NRF-SRIE001-019).

Cite this article

Luo, F., Sun, A., Erdt, M. et al. Exploring prestigious citations sourced from top universities in bibliometrics and altmetrics: a case study in the computer science discipline. Scientometrics 114, 1–17 (2018). https://doi.org/10.1007/s11192-017-2571-z

DOI: https://doi.org/10.1007/s11192-017-2571-z