Abstract

This paper investigates the pattern of teaching and research performance, the relationship between the two, and their convergence for Italian public higher education institutions (HEIs) in the period 2000–2010, by comparing different bootstrapped robust non-parametric frontier estimators. Overall we find an efficiency improvement, mainly driven by research, whereas teaching efficiency increases only in the very first years of the sample period. We also ascertain a slightly positive relationship between research and teaching performance. Furthermore, we find that Italian HEIs converge in the observed period, although research and teaching do so at different paces. Our empirical findings are robust to alternative estimators and bootstrapped bias correction.

Introduction

The analysis of the efficiency of public higher education institutions (HEIs) has received growing attention in the design of strategies to improve the performance of the public sector, in a context of substantial reduction of resources. Universities are nowadays forced to compete for alternative sources of funding, to increase their efficiency (Aghion et al. 2010; Bergantino et al. 2013), and to differentiate their range of outputs (Olivares and Wetzel 2011; Sánchez-Barrioluengo 2014). Moreover, public funds have been increasingly allocated on the basis of the performance achieved in research and teaching activities and through the use of new public management tools (Herbst 2007; Auranen and Nieminen 2010; Hicks 2012). At the same time, the increased frequency of research assessments generates substantial pressure for improvements (Rebora and Turri 2013).

The reform process of the HEI systems in the EU, the so-called Bologna process, whose implementation started at the end of the 1990s, further stresses the importance of improving research and teaching performance to make European HEIs more competitive with respect to non-EU universities (Huisman and Van der Wende 2004). The relatively low number of graduates and the excessively long duration of student careers have, in fact, become a pressing concern throughout Europe (Lambert and Butler 2006). In Italy, such problems are even more critical because of the persistent gap between North and South (Agasisti and Dal Bianco 2009) and the shortage of public resources assigned to HEIs relative to similar economies.

The evaluation of the reform process appears to be a multifaceted problem that raises various questions. Did the reform improve the efficiency of HEIs? Was it equally successful in both research and teaching? Can we identify any nexus between research and teaching? Was there convergence in research, or teaching, or both?

Our study sheds light on the above issues by analyzing the pattern of research and teaching efficiency of Italian public HEIs in the period 2000–2010, that is, since the implementation of the Bologna process. To that end, we employ a joint model of HEI activity and two separate models of research and teaching, using non-parametric techniques (DEA, bootstrapped DEA, FDH, bootstrapped FDH). After showing the frontier estimations, we investigate the relationship between research (teaching) performance and teaching (research) activity by applying a non-parametric approach (Daraio and Simar 2005). Thereafter, we turn our attention to the efficiency dynamics of the full model and of the two activities separately. We finally address the convergence issue using two measures: β-convergence and σ-convergence (Barro and Sala-i-Martin 1991, 1992).Footnote 1 We use data from two different sources: our main source is the Italian National Evaluation Committee (Comitato Nazionale per la Valutazione del Sistema Universitario, CNVSU) database, which includes data on each university institution, collected on a yearly basis by centers of evaluation (Nuclei di Valutazione); additional data on research activity are drawn from the Web of Science (WoS) database, which is part of the ISI Web of Knowledge.

This paper contributes to the literature because it adopts the most comprehensive sample of Italian HEIs to analyze efficiency in both teaching and research, and it is the first study assessing convergence in these activities in Italy. Our study relates to a growing literature briefly reviewed in the next section. In its aim, it is close to Bonaccorsi et al. (2006) and Guccio et al. (2015). With respect to the first paper, which also investigates the relationship between teaching and research for Italian HEIs, we use a far more comprehensive dataset, which includes observations from a larger number of HEIs and for a substantially longer time span. Moreover, we expand the analysis by using and comparing different estimators. Guccio et al. (2015), by contrast, use a sample of public and private HEIs for the same time span, but do not address research efficiency as we do in our multi-output production model. We also provide an additional robustness check by using several non-parametric estimators.

The analysis provides several insightful results. The Italian HEI system shows overall efficiency growth in the observed period. In particular, a persistent increase in research efficiency gave the largest contribution to the total efficiency improvement, whereas teaching efficiency increased in the very first years of the sample period but declined afterwards. We interpret the dynamics of teaching efficiency as the result of the reform of graduate courses implemented by the end of the 1990s (as in Guccio et al. 2015), whereas the improvement in research efficiency could have been affected by the funding scheme rewarding HEIs’ research performance. We find a slightly positive relationship between the research and teaching performance achieved by HEIs. We also find that Italian universities converged in terms of efficiency, although research and teaching converged at different paces. Teaching efficiency, which shows higher efficiency scores on average, did not improve noticeably but converged faster. By contrast, research activities showed a constant and remarkable increase in the level of efficiency at the expense of the speed of convergence. Results are robust to different assumptions on production technology, to alternative estimators, and to bias correction.

The paper is organized as follows. “Background” section provides an overview of efficiency studies on the HEI sector and on the Italian Higher Education System. “Methodology” section describes the methodological framework. “Data and HEI production models” section is devoted to data collection and analysis. “Results” section shows our findings. “Concluding remarks” section completes the study with a few remarks.

Background

Literature review

HEI efficiency has been broadly explored by using parametric and, above all, non-parametric techniques such as DEA. Several empirical strategies have been developed since the early studies (Tomkins and Green 1988; Beasley 1990, 1995; Dundar and Lewis 1995; Johnes and Johnes 1995; Madden et al. 1997). Recent dynamic assessments of efficiency apply techniques such as the Malmquist index used in combination with DEA frontier estimation. Flegg et al. (2004) study the efficiency of British HEIs for the period 1981–1993; Kempkes and Pohl (2010) focus on the German HEI system between 1998 and 2003.Footnote 2 Non-parametric frontier methods have been broadly preferred because they allow the study of the efficiency of multi-input and multi-output production processes and do not require an a priori specification of the functional form. This is in many cases a considerable advantage that prevails over some technical limitations (Johnes 2006).

The analysis of efficiency cannot overlook the fact that universities are complex multi-input multi-output entities that jointly ‘produce’ research and teaching using facilities, academic and non-academic staff, students and financial resources as inputs. The multi-output nature of the HEI activity brings out the issue of potential trade-off between teaching and research (Hattie and Marsh 1996, 2004). This is a well-known and highly controversial issue in the literature. From an empirical point of view, it has been mainly explored at researcher/professor or faculty levels. Feldman (1987) found a weakly positive correlation between research productivity and teaching effectiveness; Hattie and Marsh (1996) conducted a meta-analysis of 58 studies at professor level and found no correlation. Some other scholars, conversely, reported a common perception among academics that the two activities are positively related (Kremer 1990; Neumann 1992).

However, this issue has not been sufficiently explored in terms of efficiency. Among the few exceptions, St. Aubyn et al. (2009) compare teaching and research efficiency scores of 27 HEI systems and suggest that the trade-off strictly depends on the country’s context. Several HEI systems seem to specialize in one of the tasks, while few countries show comparable scores on both activities. Sellers-Rubio et al. (2010) find complementarity between teaching efficiency and research efficiency in their study on a single Spanish university. Bonaccorsi et al. (2006) study the efficiency of 45 Italian HEIs in the period 1995–1999 using a large set of variables, including financial and human resources collected by the Italian Conference of University Rectors (CRUI) as well as data on research outputs drawn from the Institute for Scientific Information (ISI). By applying a robust non-parametric approach, they study how research efficiency is affected by education efficiency without finding evidence of a trade-off. Actually, they find that increasing scientific quality improves educational efficiency. On the contrary, Wolszczak-Derlacz and Parteka (2010) find a strongly negative correlation between teaching workload (measured by the number of students per academic staff member) and research efficiency (measured by the number of ISI publications per academic staff member), for a sample consisting of several HEIs in seven western European countries including Italy. Finally, De Witte et al. (2013a) use an empirical strategy close to the one used in the present paper on a sample of 155 professors belonging to a single department of a Belgian HEI, and find that efficiency scores decrease when accounting for potential spillovers between the two activities.

Institutional background and efficiency assessment of Italian HEIs

The system of Italian HEIs is one of the largest in the EU and it is known to be fairly inefficient with respect to its European counterparts (Lambert and Butler 2006) and characterized by a structural geographical gap (Agasisti and Dal Bianco 2009; Agasisti and Pohl 2012; Guccio et al. 2015). Although considered a strongly centralized system, it has undergone a deep and unsystematic process of reform since the late 1980s. The main features of the reforms of Italian HEIs are: financial autonomy, established in 1993; the reform of funding schemes (2004), which now allows transfers from the national government to be partially distributed to universities on the basis of their teaching and research efficiency and effectiveness; and the structure of courses, which was reformed starting from the academic year 2001–2002 in order to meet the Bologna process targets. This last reform introduced a new 3 + 2 model and more flexibility in the definition of teaching programs, aiming to tackle the longstanding problems of the Italian HEI system: a low number of graduates, a high dropout rate, and a long average student career (Agasisti and Catalano 2006).

Several works investigate the effect of the reform on the Italian HEI system: Agasisti and Dal Bianco (2009), Guccio et al. (2015), and Laureti et al. (2014) analyze teaching efficiency based on the frontier estimation approach, while Di Pietro and Cutillo (2008) focus on drop-out rates, Cappellari and Lucifora (2009) on enrolment decisions, and Brunori et al. (2012) on the effects of the reform in terms of fairness.

Some relevant facts emerge: (1) the efficiency of Italian universities grew due to the reform of courses’ structure; (2) the Italian HEI system is characterized by a structural geographical gap; (3) the reform resulted in a reduction of drop-out rates, a substantial increase in the enrolments, and an improvement in the fairness of the access procedures.

Methodology

This study uses an economic production model of HEI to measure the relative efficiency of the Italian HEIs in the sample. This production model is defined using the concept of efficiency frontier based on the work of Farrell (1957), Debreu (1951), and Koopmans (1951). Two main analytical approaches are available to estimate efficiency frontiers empirically: parametric and non-parametric.Footnote 3 Non-parametric estimators can be used to measure the relative efficiency of organizations or parts of organizations that have similar attributes and share the same goals and objectives, such as HEIs that perform teaching and research activities.

In this study, the economic production model of HEIs is measured empirically using Data Envelopment Analysis (DEA), a mathematical optimization model introduced by Charnes et al. (1978) that generalized Farrell’s single input/output measure into a multiple-input/multiple-output technique. The DEA model estimates the production frontier as the convex hull of the observed data by linear programming, and inefficiency is measured as the distance from this convex envelope. For comparative purposes we also employ the Free Disposal Hull (FDH) model developed by Deprins et al. (1984), which relaxes the convexity assumption of the DEA model.Footnote 4
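For reference, the standard envelopment program behind the CRS DEA estimator can be sketched as follows, in the output-oriented form adopted later in this section. It is written for a unit with input vector \(x_0\) and output vector \(y_0\), given \(n\) observed units \((x_j, y_j)\). The symbols \(\phi\), \(\lambda_j\), \(x_j\) and \(y_j\) are notation introduced here for illustration and are not taken from the paper:

\[
\hat{\phi}_0 = \max_{\phi,\,\lambda_1,\dots,\lambda_n} \left\{ \phi \;:\; \sum_{j=1}^{n} \lambda_j x_j \le x_0,\quad \sum_{j=1}^{n} \lambda_j y_j \ge \phi\, y_0,\quad \lambda_j \ge 0 \right\}
\]

Adding the constraint \(\sum_{j} \lambda_j = 1\) gives the VRS version, and further restricting each \(\lambda_j\) to \(\{0, 1\}\) drops convexity and yields the FDH estimator.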

According to Simar and Wilson (2008), the FDH estimator can give results that differ from those of the DEA estimator; it can fit the data better and thus reveal important characteristics of the data. Furthermore, comparing the DEA and FDH estimators may be useful because the FDH estimator is shown to be consistent and has attractive asymptotic properties (Daraio and Simar 2007). Moreover, following Simar and Wilson (1998, 2000) and Badin and Simar (2009), we perform the bootstrap procedure for the DEA and FDH estimators in order to obtain bias-corrected results.Footnote 5 Finally, we test the assumption of Constant Returns to Scale (CRS) against Variable Returns to Scale (VRS), using the bootstrap test proposed by Simar and Wilson (2002), and test convexity by comparing the DEA and FDH estimators with a similar statistical test, as suggested by Daraio and Simar (2005).
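As a reminder of what bias correction means here, the generic bootstrap form can be sketched as below, assuming \(B\) bootstrap replicates \(\hat{\theta}^{*}_{b}\) of an estimated score \(\hat{\theta}\). The notation \(\tilde{\theta}\) for the corrected score is introduced here for illustration, and the exact resampling scheme used for each estimator is the one described in the cited references:

\[
\widehat{\mathrm{bias}}_B(\hat{\theta}) = \frac{1}{B}\sum_{b=1}^{B} \hat{\theta}^{*}_{b} - \hat{\theta},
\qquad
\tilde{\theta} = \hat{\theta} - \widehat{\mathrm{bias}}_B(\hat{\theta}) = 2\hat{\theta} - \frac{1}{B}\sum_{b=1}^{B} \hat{\theta}^{*}_{b}
\]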

In our analysis, we use an output-oriented model assuming that HEIs maximize their outputs for given inputs, as is common in the literature on higher education. We also use the Shephard (1970) output-oriented distance function, which is the reciprocal of the Farrell (1957) distance function. Consequently, efficiency scores assume values between zero and one.
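The output orientation and the reciprocal relation between the two distance functions can be illustrated with a minimal FDH sketch. The function name and the toy data are hypothetical, outputs are assumed strictly positive, and no bootstrap or bias correction is attempted:

```python
def fdh_output_efficiency(inputs, outputs):
    """Shephard output-oriented FDH scores in (0, 1], one per unit.

    inputs and outputs are lists of tuples, one tuple per unit.
    Outputs are assumed to be strictly positive.
    """
    scores = []
    for x0, y0 in zip(inputs, outputs):
        # Farrell measure: largest proportional output expansion achieved
        # by an observed unit that uses no more of any input than x0.
        farrell = 1.0  # the unit itself is always a valid reference
        for xi, yi in zip(inputs, outputs):
            if all(a <= b for a, b in zip(xi, x0)):
                expansion = min(a / b for a, b in zip(yi, y0))
                farrell = max(farrell, expansion)
        # Shephard distance is the reciprocal of the Farrell measure.
        scores.append(1.0 / farrell)
    return scores


# Hypothetical toy data: inputs (STUD, AS, STR), outputs (GRAD, RESEARCH).
inputs = [(100, 10, 50), (100, 10, 50), (200, 20, 80)]
outputs = [(20, 5), (40, 10), (30, 8)]
scores = fdh_output_efficiency(inputs, outputs)
```

In this toy sample the first unit is dominated by the second, which produces twice as much of both outputs with the same inputs, so its Farrell measure is 2 and its Shephard score is 0.5.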

We first assess the performance of public HEIs in our sample using a full model of HEI activity and then two separate models of research and teaching (see e.g. Avkiran 2001; Bonaccorsi et al. 2006, 2007; Archibald and Feldman 2008; Agasisti and Dal Bianco 2009) to assess the nexus between these activities. From a production point of view, a single HEI jointly produces teaching and research. However, as mentioned in the previous section, complementarity or trade-off may exist between the activities, and several approaches have been developed to address the issue (for a survey of the parametric approach, see Worthington 2001).

In nonparametric efficiency analysis, traditional methods to assess complementarity or trade-off between activities—or ‘scope’ economiesFootnote 6 (e.g. Fare et al. 1994)—are based on the comparison between the frontier of single product DMUs (or specialized DMUs) and the frontier of multiproduct DMUs constructed from the weighted sum of single product DMUs (or specialized DMUs). As observed by Daraio and Simar (2007), however, this approach introduces additional assumptions and potential biases.

In order to investigate HEI performance in research and teaching activities, we use the approach in Bonaccorsi et al. (2006, 2007), which applies the methodology proposed by Daraio and Simar (2005, 2007) based on the comparison between the conditional and the unconditional performance measures on the frontier. More specifically, Bonaccorsi et al. (2006, 2007) suggest studying the relationship between HEIs' teaching and research activities using the general methodology that Daraio and Simar (2005, 2007) designed to investigate the influence of the operational environment.Footnote 7 The influence of the conditioning variable can be deduced by comparing the conditional and unconditional efficiency estimates. Therefore, the ratio of conditional to unconditional estimates is non-parametrically regressed against the conditioning variable. In an output-oriented model, an upward-sloping curve indicates a positive effect of the conditioning variable, while a downward-sloping curve indicates a negative influence. A flat curve indicates the absence of any exogenous influence. We perform this analysis using both FDH and a robust order-m estimator that is less influenced by extreme points.Footnote 8
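The last step of this procedure, regressing the ratio on the conditioning variable, can be sketched as follows. The sketch assumes the conditional and unconditional scores have already been estimated; the numbers are invented, and the Gaussian-kernel Nadaraya-Watson smoother and its bandwidth are illustrative choices rather than the ones used in the paper:

```python
import math


def nadaraya_watson(z, q, grid, bandwidth):
    """Gaussian-kernel local average of q given z, evaluated on grid."""
    fitted = []
    for g in grid:
        weights = [math.exp(-0.5 * ((g - zi) / bandwidth) ** 2) for zi in z]
        total = sum(weights)
        fitted.append(sum(w * qi for w, qi in zip(weights, q)) / total)
    return fitted


# Hypothetical inputs: conditioning variable and pre-computed scores.
z = [1.0, 2.0, 3.0, 4.0, 5.0]
conditional = [0.80, 0.82, 0.85, 0.90, 0.95]
unconditional = [0.80, 0.80, 0.80, 0.80, 0.80]

# Ratio of conditional to unconditional estimates, unit by unit.
ratio = [c / u for c, u in zip(conditional, unconditional)]

# Smoothed curve; its slope is read with the rule stated in the text.
curve = nadaraya_watson(z, ratio, grid=[1.0, 3.0, 5.0], bandwidth=1.0)
```

With these invented numbers the smoothed curve rises along the grid, which under the reading rule above would be interpreted as a positive effect of the conditioning variable.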

Finally, we study the dynamics of efficiency in terms of convergence of the Italian public HEIs in the considered period. We apply unconditional β-convergence and σ-convergence, originally developed by Barro and Sala-i-Martin (1991, 1992), to technical efficiency, in line with several recent studies (Weill 2009; Casu and Girardone 2010; Zhang and Matthews 2012; Ayadi et al. 2013; Guccio et al. 2015). To be clear, we use the term β-convergence to indicate that the efficiency of a poorer performer grows faster than that of a better performer, and σ-convergence to indicate that the dispersion of efficiency scores diminishes over the observed period. Therefore, we estimate unconditional β-convergence:

where \(\hat{\theta }_{i,t}\) is the efficiency score estimated in the first stage for university \(i\) at time \(t\), \(\Delta\hat{\theta}_{i,t} = \ln \hat{\theta}_{i,t} - \ln \hat{\theta}_{i,t - 1}\), \(\epsilon_{i,t}\) is the error term, and \(\beta\) is the convergence parameter, which is negative in case of convergence and whose value represents the speed of convergence.
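A specification consistent with these definitions can be sketched as follows. This is a hedged reconstruction: the intercept \(\alpha\) and the use of \(\ln \hat{\theta}_{i,t-1}\) as regressor are assumptions based on the standard unconditional β-convergence form, not terms named in the text:

\[
\Delta\hat{\theta}_{i,t} = \alpha + \beta \ln \hat{\theta}_{i,t-1} + \epsilon_{i,t}
\]

Under this form, \(\beta < 0\) means that units with lower efficiency at \(t-1\) record larger subsequent growth in efficiency. A variant that includes the lagged dependent variable would add a term such as \(\rho\,\Delta\hat{\theta}_{i,t-1}\), where \(\rho\) is again hypothetical notation.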

The following autoregressive distributed lag model allows studying the dynamics of the dispersion of efficiency scores:

where \(E_{i,t} = \ln \hat{\theta }_{i,t} - \ln \bar{\hat{\theta }}_{t}\); \(E_{i,t - 1} = \ln \hat{\theta }_{i,t - 1} - \ln \bar{\hat{\theta }}_{t - 1}\); \(\Delta E_{i,t} = E_{i,t} - E_{i,t - 1}\); \(\bar{\hat{\theta }}_{t}\) is the mean of the efficiency scores \(\hat{\theta }\) of all universities at time \(t\); and \(\epsilon_{i,t}\) is the error term. The relevant parameter for the goal of the analysis is \(\sigma\). A negative value of \(\sigma\) denotes convergence, i.e. the dispersion of efficiency scores diminishes over time, and the coefficient represents the speed of convergence. In line with Casu and Girardone (2010) and Guccio et al. (2015), we use uncorrected efficiency scores to estimate Eqs. (1) and (2) by pooled OLS, and by pooled OLS including the lagged dependent variable.
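A form of the dispersion equation consistent with these definitions can be sketched as follows. As a hedged reconstruction, it assumes an intercept \(\alpha\) that the text does not name:

\[
\Delta E_{i,t} = \alpha + \sigma E_{i,t-1} + \epsilon_{i,t}
\]

Here \(\sigma < 0\) means that a unit above (below) the cross-sectional mean at \(t-1\) tends to move down (up) towards it, so dispersion shrinks. The variant with the lagged dependent variable would add a term such as \(\rho\,\Delta E_{i,t-1}\), with \(\rho\) being hypothetical notation.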

Data and HEI production models

The selection of inputs and outputs in a context such as ours is not straightforward and strictly depends on the availability of data. To make our results and implications more general, we select the most parsimonious set of inputs and outputs and use variables commonly adopted in the literature. In fact, studies on HEIs’ efficiency based on non-parametric frontier estimation techniques differ substantially in terms of methodological and empirical strategy and also in the choice of the appropriate set of inputs and outputs needed for the definition of the ‘production’ process.

The lack of appropriate measures of the quality of HEI activities is a major concern in the literature on university efficiency and, in this respect, the choice of the proper set of outputs is rather controversial. As far as teaching activity is concerned, the total number of graduates is a commonly used output (Johnes 2008; Agasisti and Pérez-Esparrells 2010; Kantabutra and Tang 2010; Wolszczak-Derlacz and Parteka 2011), although such a measure tends to be biased towards large-output-low-quality universities. Among more sophisticated output measures, we recall: student self-reported acquired competences (Joumady and Ris 2005); number of graduates weighted by their degree classification (Johnes 2006); number of graduates within the standard length of the courses (Agasisti and Dal Bianco 2009); graduation scores, graduation rates and graduates’ employment rates (Kuah and Wong 2011); and the ratio between the number of years scheduled for each degree course and the average number of years of delay (Bergantino et al. 2013). We employ the most used output measure for teaching, namely the number of graduates (GRAD). We are aware that our selection does not capture the quality dimension. However, Guccio et al. (2015) compare results from different models using alternatively the number of graduates or regular graduates (both weighted or not by their degree score), and find that these measures lead to equivalent results.

Output selection is even more problematic when considering research activities. Several studies use indirect measures, such as the amount of external resources attracted (Johnes 2008; Agasisti and Pérez-Esparrells 2010) and HEI prestige (Johnes and Yu 2008). However, consistent data on publications in journals with a positive impact factor are now available: Bonaccorsi et al. (2006) and Parteka and Wolszczak-Derlacz (2013) use uniform bibliometric data from Thomson Reuters’ ISI Web of Science database (a part of the ISI Web of Knowledge), which lists publications from quality journals (with a positive impact factor) in most scientific fields. The latter source of data for research is the most used in recent literature and we draw from it to build our research output variable, RESEARCH, i.e. the number of publications. We therefore count all publications (scientific articles, proceedings papers, meeting abstracts, reviews, letters, notes) in a given year, subject to the requirement that at least one author declared an institutional affiliation with a HEI.

Input selection has proved to be less controversial and generally does not include quality measures, again with few exceptions.Footnote 9 Actually, most works on HEI efficiency include administrative staff and number of students (Abbott and Doucouliagos 2003; Johnes 2006, 2008; Agasisti and Dal Bianco 2009; Kantabutra and Tang 2010; Wolszczak-Derlacz and Parteka 2011; Sav 2012; Guccio et al. 2015). Some studies also consider current expenditures (Johnes 2008; Johnes and Yu 2008) and available places in libraries, laboratories and teaching rooms as measures of the physical endowment of HEIs (Athanassopoulos and Shale 1997; Johnes and Yu 2008; Agasisti and Dal Bianco 2009; Guccio et al. 2015). Accordingly, in our joint model of production, we use the total number of students (STUD), the total number of academic staff (AS), and the total number of available seats in teaching rooms, libraries and laboratories (STR) as inputs of HEIs’ production process, and GRAD and RESEARCH as outputs.

Then, consistently with several studies (Avkiran 2001; Bonaccorsi et al. 2006, 2007; Archibald and Feldman 2008; Agasisti and Dal Bianco 2009), we employ two separate models: the “teaching production” model, with inputs STUD, AS, and STR, and output GRAD; and the “research production” model, with inputs AS and STR and output RESEARCH.

Data collected on inputs and outputs for public HEIs in Italy for the academic years 2000–2001 to 2010–2011 form the basis of the empirical analysis. The dataset we use is drawn from two main sources. The first one is the CNVSU database, which includes data on each university collected on a yearly basis by the Nuclei di Valutazione. The second source is the Web of Science (WoS) database, part of the ISI Web of Knowledge, which provides the data on the publications (articles, proceedings papers, editorial materials, book chapters, book reviews, etc.) of the affiliated staff of each university in the sample. Data have been checked for reporting errors, outliers and missing values. The resulting dataset consists of cross-sectional and time series observations for 54 Italian public HEIs over 11 years, for a total of 594 observations.Footnote 10

Table 1 summarizes the employed variables, while the relative descriptive statistics are listed in Table 2 showing also some productivity indexes based on those variables. In the upper part of Table 2, we see that Italian universities are quite large: on average, they enroll about 31,000 students, employ 1000 professors, and have 20,000 seats in their facilities, with an output of about 4000 graduates and 1000 publications. It is clear from the standard deviation and minimum and maximum values that data are spread out over a large range of values. In the lower part of Table 2 we also report different productivity indexes on teaching and research activity.

A first, easy way to evaluate and compare the performance of HEIs is to use performance indicators that are supposed to reflect the performance of different organizations. Here we propose four indexes based on teaching and research activity. On average, Italian HEIs ‘produce’ 4 graduates per professor and 0.2 graduates per seat in facilities on a yearly basis. In terms of research productivity, the Italian average is about 1 publication per professor and 0.05 publications per seat.
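As a back-of-the-envelope check, the four indexes can be recovered from the rounded sample means quoted above. This is only a sketch: the dictionary keys are labels chosen here, and Table 2 may report means of unit-level ratios, which in general differ from ratios of means:

```python
# Rounded sample means quoted in the text (per HEI, per year).
means = {
    "STUD": 31_000,     # enrolled students
    "AS": 1_000,        # academic staff
    "STR": 20_000,      # seats in teaching rooms, libraries, laboratories
    "GRAD": 4_000,      # graduates
    "RESEARCH": 1_000,  # publications
}

# Four partial productivity indexes: output per unit of a single input.
indexes = {
    "GRAD/AS": means["GRAD"] / means["AS"],
    "GRAD/STR": means["GRAD"] / means["STR"],
    "RESEARCH/AS": means["RESEARCH"] / means["AS"],
    "RESEARCH/STR": means["RESEARCH"] / means["STR"],
}

for name, value in indexes.items():
    print(f"{name}: {value:.2f}")
```

Such single-ratio indicators ignore the other inputs and outputs, which is the limitation that the frontier models address.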

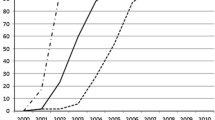

Since we have panel data covering 11 academic years, we are also interested in studying the dynamics of these productivity indicators in the observed period. Figure 1 reports the boxplot of productivity indicators with respect to teaching and research. A positive trend in teaching productivity occurs in the very first years and up to 2005/2006, while research productivity increases at a more constant rate. In addition, dispersion remains noticeable throughout the period.

Results

This section presents our results as follows. After presenting technical efficiency scores obtained through several estimators and under different assumptions, we show our findings on the nexus between teaching and research. Convergence estimates conclude the section.

Efficiency assessment

Following the methodology proposed by Simar and Wilson (2002), we test the returns-to-scale assumption (i.e. CRS vs VRS) for the full model. In our full model, with three inputs and two outputs, we obtain a p value of 0.27 > 0.05 (with B = 2000 bootstrap replications); hence, we cannot reject the null hypothesis of CRS. Furthermore, as suggested by Daraio and Simar (2005), we employ a similar statistical test for the convexity assumption, comparing the CRS and FDH estimates. We obtain a p value of 0.19 > 0.05 (with B = 2000 bootstrap replications); hence, we cannot reject the null hypothesis of convexity.

Overall, these two tests indicate that proper estimates for measuring the performance of public Italian HEIs in our sample are obtained by the CRS model. Nevertheless, for a comparative purpose, we show average efficiency results obtained using the different estimators in Table 3.

It is not surprising that results show, on average, a higher efficiency score for FDH estimates, due to the less restrictive assumptions of the estimator.

Results support the common perception of inefficiency as a relevant issue for Italian public HEIs. In the full model, as expected, average efficiency scores are lower for the bias-corrected CRS (0.62) and VRS (0.72) estimates than for the bias-corrected FDH estimates (0.85).

A comparison of results between models allows stating that, on average, Italian universities show higher efficiency in teaching than in research activity. Moreover, the standard deviations show a high variability in the performance in all models, and this is more acute in research than in teaching. The above results are consistent with respect to different estimators, assumptions on returns to scale, and bias correction. These results invite a further analysis on the nexus between the two activities.

The relationship between teaching and research

We first perform a preliminary correlation analysis and then follow the approach in Bonaccorsi et al. (2006, 2007), which applies the methodology proposed by Daraio and Simar (2005, 2007) based on the comparison of the conditional performance measures with the unconditional ones on the frontier. In our context, the relationship between teaching and research is relevant because of the potential trade-off between them. We then address several related aspects.

First of all, a potential trade-off may imply that the best performers in one activity are less efficient in the other one. In order to provide a preliminary test, we calculate the Spearman rank-correlation coefficient (Table 4).Footnote 11 Results show that teaching and research ranks are indeed positively, although weakly, correlated: if a university is a best performer in the first activity, this neither implies nor prevents its being a best performer in the second one.
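The rank-correlation check can be sketched in a few lines. The efficiency scores below are invented for illustration, and the helper names are hypothetical; the coefficient is computed as the Pearson correlation of average ranks, which is the usual definition when ties may occur:

```python
def average_ranks(values):
    """Ranks starting at 1, with tied values sharing their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        mean_rank = (pos + end) / 2 + 1
        for k in range(pos, end + 1):
            ranks[order[k]] = mean_rank
        pos = end + 1
    return ranks


def spearman(a, b):
    """Spearman coefficient: Pearson correlation of the two rank vectors."""
    ra, rb = average_ranks(a), average_ranks(b)
    n = len(ra)
    mean_a, mean_b = sum(ra) / n, sum(rb) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(ra, rb))
    var_a = sum((x - mean_a) ** 2 for x in ra)
    var_b = sum((y - mean_b) ** 2 for y in rb)
    return cov / (var_a * var_b) ** 0.5


# Hypothetical efficiency scores for five universities.
teaching = [0.90, 0.70, 0.80, 0.60, 0.95]
research = [0.50, 0.60, 0.40, 0.30, 0.70]
rho = spearman(teaching, research)
```

A coefficient near zero would indicate that the two rankings are unrelated, while a value near one would indicate that the same universities lead in both activities.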

We then analyze the effect of some variables connected with teaching (research) activity on the performance in research (teaching), exploring the effect of the total number of students (STUD*)Footnote 12 and the total number of graduates (GRAD*)Footnote 13 on HEIs’ research performance estimates, and of the total number of publications (RESEARCH*)Footnote 14 as a determinant of teaching performance. Correlations between these variables and the estimated efficiency scores are reported in Table 5 and confirm that teaching and research performances in our sample are largely independent. However, a positive relationship emerges between STUD* or GRAD* and research performance, and between RESEARCH* and teaching performance.

Overall, the preliminary results are generally consistent with those obtained in the previous literature, which show a limited scope between teaching and research activities and performances. However, these results are obtained from unconditional performance indicators and cannot fully capture the nexus between research and teaching. To provide a more robust check of our findings, we apply the methodology proposed by Daraio and Simar (2007) to assess complementarity, or trade-off, between activities (or scope economies), based on the comparison of the conditional performance measures with the unconditional ones on the frontier. In particular, we use the conditional robust efficiency scores, where research (teaching) activity is treated as an external factor to analyze its impact on teaching (research) performance. To this purpose we first use conditional and unconditional robust FDH. Furthermore, to take account of potential outliers in our sample, we employ an order-m estimator, which does not assume convexity of the production set and is less influenced by extreme points. Table 6 provides the descriptive statistics of the conditional and unconditional estimators.Footnote 15 Inspection of Table 6 confirms that both teaching and research performances benefit from the other activity, although the effect is relatively small.

In order to better establish the influence of the other activity on the efficiency scores, we show in Figs. 2 and 3 the ratio of conditional to unconditional performance estimates against our external factors, together with its smoothed non-parametric regression line. In both figures we display estimations based on robust FDH scores (left-hand side) and on order-m scores (right-hand side).

Since we employ an output oriented model, an upward sloping curve indicates a positive effect of the external factor, while a decreasing slope indicates a negative influence. A flat curve corresponds to the absence of the influence.

Figure 2 examines the influence of research activity on HEIs’ teaching performance. It shows a scatter plot of the ratios of conditional to unconditional performance estimates (both robust FDH and order-m) against RESEARCH*, together with the smoothed nonparametric regression line. In Fig. 3 we show the corresponding results for research performance estimates against STUD* and GRAD*, respectively. The effect is slightly positive. More specifically, the left-hand graph in Fig. 2 (FDH) shows an initial positive effect for low levels of research activity. However, this effect is not detected using the more robust order-m estimator (right-hand graph). Looking at the effects of teaching, Fig. 3 shows a quite similar pattern for research performance estimates. However, in this case the positive effect of teaching activities, treated as environmental factors, occurs in both FDH and order-m estimates. In summary, teaching and research are weakly positively related, and the existence of a trade-off is not supported by our analysis. [Footnote 16] This is consistent with previous findings in the literature (e.g. Bonaccorsi et al. 2006).

Teaching and research efficiency dynamics

Teaching and research are not strongly related, and this leads us to analyze whether their dynamics in the decade after the reform are equivalent or not. That is, we are interested in investigating the specific dynamics of the two activities and their contribution to the aggregate efficiency trend of Italian HEIs. Figure 4 shows the box-plot for the full model estimated by FDH, CRS-DEA and VRS-DEA. FDH scores are indeed higher on average. Although average efficiency scores differ across estimators, all the models show a comparable pattern. Overall efficiency increased after 2002–2003, with the sole exception of 2008–2009, and this is consistent with previous findings on the overall efficiency trend in Italy (Agasisti and Wolszczak-Derlacz 2014).

However, the dynamics are not constant and may hide different patterns with respect to teaching and research. Indeed, teaching and research efficiencies did not change in the same fashion in the observed period, as shown in Figs. 5 and 6, and more clearly in Fig. 7, which includes only averaged-by-year CRS corrected and uncorrected scores. Teaching efficiency increased mainly in the early years after the end of new first-cycle courses, and declined later, while still showing an overall increase in efficiency. This result is comparable to previous findings in the literature (Agasisti and Dal Bianco 2009; Guccio et al. 2015), and such dynamics can be explained as the result of the reform of graduate courses implemented at the end of the 1990s. Indeed, a large share of non-regular students in the old courses had the opportunity to shift to the new ones, which, moreover, were shorter. This led to a significant increase in teaching output in the early years after the implementation of the reform. Conversely, on average, research efficiency increased persistently over the period, although dispersion remained high. This result can be connected, in our opinion, to the reforms in the HEIs’ funding scheme, which became linked to the performance in this activity.

The results also suggest that research and teaching efficiency trends are basically independent, consistent with the results shown in the previous section.

Convergence assessment

Figure 4 (see supra) offers some preliminary insights on convergence. Dispersion of efficiency scores is persistent, although it slightly declines over time in the full model. Figures 5 and 6 suggest that the persistence in the dispersion of efficiency scores may be due to research activity, since teaching efficiency dispersion actually decreases, especially when considering CRS.

The same pattern emerges more clearly by plotting the kernel density of CRS bias-corrected scores of the first and last year for the full, research and teaching models. In Fig. 8, we report the univariate kernel smoothing distributions (Wand and Jones 1995), estimated through the reflection method, to determine densities for performance estimates. The criterion for bandwidth selection follows the plug-in method proposed by Sheather and Jones (1991). The comparison between the first year and the last one suggests that dispersion of efficiency scores persisted, as can be seen from the thickness of the tails of both distributions (2000 and 2010) in the upper left graph, while both teaching and research improved on average in the observed period, resulting in an overall improvement in the efficiency of Italian HEIs. However, by disentangling efficiency, the two components show different patterns: universities converged in teaching efficiency (lower left graph) and did not converge, at least noticeably, in terms of research efficiency (upper right graph).
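The reflection method mentioned above can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes scores bounded above by 1, uses a Gaussian kernel, and takes the bandwidth as a user-supplied argument rather than computing the Sheather and Jones (1991) plug-in value. The function name and the synthetic scores are hypothetical.

```python
import numpy as np

def reflected_kde(scores, grid, bandwidth, boundary=1.0):
    """Gaussian kernel density with reflection at an upper boundary.

    Each observation is mirrored around `boundary`; the kernel sums of the
    original and mirrored points are added and normalised by the original
    sample size, so that the estimate integrates to one on the support.
    """
    scores = np.asarray(scores, dtype=float)
    grid = np.asarray(grid, dtype=float)
    mirrored = 2.0 * boundary - scores

    def kernel_sum(points):
        u = (grid[:, None] - points[None, :]) / bandwidth
        return np.exp(-0.5 * u ** 2).sum(axis=1)

    norm = len(scores) * bandwidth * np.sqrt(2.0 * np.pi)
    density = (kernel_sum(scores) + kernel_sum(mirrored)) / norm
    return np.where(grid <= boundary, density, 0.0)  # no mass above the bound

# Hypothetical efficiency scores in (0, 1].
scores = np.linspace(0.5, 1.0, 40)
grid = np.linspace(0.0, 1.0, 2001)
density = reflected_kde(scores, grid, bandwidth=0.05)

# Trapezoidal check that the estimate integrates to roughly one on [0, 1].
mass = float(np.sum(0.5 * (density[1:] + density[:-1]) * np.diff(grid)))
```

Without the mirrored points, a standard kernel estimate would place part of its mass beyond the boundary and understate the density near fully efficient units.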

Although interesting, graphical inspection of the first and the last year does not allow us to analyze the pattern of convergence over the observed period as a whole. In order to study convergence in more depth, we estimate Eqs. 1 and 2 (Tables 7 and 8, respectively) using uncorrected CRS efficiency scores for the full, teaching, and research models. [Footnote 17] As mentioned in the “Methodology” section, a negative β parameter denotes convergence, meaning that, in the observed period, the poorest performers improved faster than the best performers.
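Eqs. 1 and 2 are defined in the Methodology section and are not reproduced here. As a hedged illustration only, the sketch below uses a specification that is common in the efficiency-convergence literature: for β-convergence, the change in log efficiency is regressed on its lagged level; for σ-convergence, the change in the deviation of log efficiency from the yearly cross-sectional mean is regressed on the lagged deviation. The pooled OLS estimator, the function names and the synthetic panel are all assumptions, and the paper's own equations may include further terms.

```python
import numpy as np

def ols_slope(x, y):
    """Slope of a pooled OLS regression of y on a constant and x."""
    design = np.column_stack([np.ones_like(x), x])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return float(coef[1])

def beta_sigma_convergence(eff):
    """Return (beta, sigma) for a panel of strictly positive scores.

    eff has shape (n_units, n_years). Negative coefficients indicate
    convergence under the assumed specification.
    """
    log_e = np.log(np.asarray(eff, dtype=float))
    # Beta: growth in log efficiency on lagged log efficiency.
    growth = log_e[:, 1:] - log_e[:, :-1]
    beta = ols_slope(log_e[:, :-1].ravel(), growth.ravel())
    # Sigma: change in deviation from the yearly mean on lagged deviation.
    dev = log_e - log_e.mean(axis=0, keepdims=True)
    d_dev = dev[:, 1:] - dev[:, :-1]
    sigma = ols_slope(dev[:, :-1].ravel(), d_dev.ravel())
    return beta, sigma

# Synthetic panel (hypothetical): each unit closes 20% of its log-efficiency
# gap to full efficiency every year, so both coefficients should be -0.2.
start = np.log(np.array([0.3, 0.5, 0.7, 0.9]))
years = np.arange(6)
panel = np.exp(start[:, None] * 0.8 ** years[None, :])
beta, sigma = beta_sigma_convergence(panel)
```

In this synthetic panel the least efficient unit improves fastest, which is what a negative β captures, while the shrinking spread around the yearly mean is what a negative σ captures.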

Table 7 shows that, overall, universities converged at a very slow pace as a result of a slow convergence of research efficiency. Teaching efficiency, in contrast, displayed a considerable rate of convergence, denoted by a larger and strongly significant coefficient.

The same configuration applies to σ-convergence, reported in Table 8. The dynamics of the dispersion of teaching efficiency scores give the strongest contribution to overall σ-convergence, as denoted by a coefficient that is larger than that for research scores and strongly significant. Thus, the estimation results confirm the graphical inspection of densities: although Italian HEIs increased their efficiency with respect to both main activities, convergence did not follow a similar pattern, as β and σ-convergence are stronger for teaching than for research efficiency. A more conspicuous improvement in research efficiency occurred at the expense of slower convergence between universities, and the reverse pattern applies to teaching efficiency.

To check the robustness of the results, we also re-estimate the models including HEI fixed effects to control for unobserved heterogeneity related to HEIs’ characteristics. The estimates confirm our previous findings. Moreover, we check whether the convergence results hold when controlling for the other activity, by re-running the β and σ-convergence models with the external factor included. Results reported in the Appendix (Tables 9, 10, 11, 12) show that β and σ-convergence estimates are robust to the inclusion of the variables connected to the complementary activity.

Concluding remarks

In this paper, we have analyzed teaching and research efficiency of Italian HEIs in the period 2000–2010. We have used non-parametric frontier techniques (DEA and FDH) to study the dynamics of overall efficiency, and also disentangled HEIs’ activities by investigating research and teaching efficiency separately. Results show that Italian HEIs increased their efficiency in the observed period and that research contributed primarily to such improvement by growing constantly. Conversely, teaching efficiency increased in the early years after the reform and declined afterwards. We have, moreover, analyzed convergence of technical efficiency by employing unconditional β-convergence and σ-convergence. Comparison between the full model and distinct models of production for research and teaching provides indications about the relative efficiency dynamics. In particular, Italian universities converged in terms of efficiency, but teaching and research converged at a different pace, as the latter showed a slower speed in both β and σ-convergence.

Finally, we have investigated the relationship between teaching and research on several dimensions, showing that being a best performer in one activity neither implies nor prevents a university from being a best performer in the other. In fact, a slightly positive relationship between teaching and research performances arises, consistent with previous results in the literature, which do not find any evidence of the supposed trade-off between the two activities. Results are robust with respect to different frontier estimators (namely, FDH, order-m and DEA) and different assumptions on the returns to scale. The findings about the relationship between research and teaching may offer useful empirical underpinnings for theoretical analysis, and for university management and policies aiming to improve the performance of HEIs.

It should be noted that this paper does not control for the quality of research and teaching or for geographical heterogeneity affecting efficiency. Further developments could involve the application of conditional β and σ-convergence, a richer set of inputs and outputs including quality measures, and the relaxation of the implicit assumption of fixed technology.

Notes

An increased interest in cross-country comparisons emerged in more recent times, due to the reform of HEI systems within EU (the so-called Bologna Process) that aimed at creating a common European HEI system. An example of such a strand of research is Parteka and Wolszczak-Derlacz (2013) that compares 266 HEIs in 7 European countries in the period 2001–2005.

The advantage of using a non-parametric technique is that it provides a “best performance” frontier that identifies efficient HEIs able to provide a benchmark for under-performing HEIs. An alternative method identifying a frontier is the Stochastic Frontier Analysis (SFA; Aigner et al. 1977; Meeusen and Van den Broeck 1977). In contrast to non-parametric frontier, this approach provides a measure of statistical noise in its estimation of efficiency. However, SFA requires the specification of the functional form of the production process and the distribution of data and error terms.

Notwithstanding their widespread use, traditional non-parametric models such as DEA and FDH estimators have received some criticism since they rely on extreme points and could be extremely sensitive to data selection, aggregation, model specification and data errors (Simar and Wilson 2008). As an alternative, estimators based on partial frontiers and the resulting partial efficiency scores have been proposed to provide robust measures of efficiency at extreme data points: Cazals et al. (2002) propose the nonparametric order-m estimator and Daouia and Simar (2007) propose order-α quantile-type frontiers.

An alternative way to analyze the marginal effect of one activity (assumed to be an external or environmental factor) on the other one’s performance is to employ a two-stage DEA analysis (e.g. Sellers-Rubio et al. 2010). A robust semiparametric approach proposed by Simar and Wilson (2007) requires a restrictive separability condition between the input–output space and the space of external or environmental factors. This is certainly unrealistic in our analysis. We thank an anonymous reviewer for this point.

Notice that the ratios are not bounded by 1 in the case of the order-m estimator.

The sample is consistent with the one used by Agasisti and Wolszczak-Derlacz (2014) and represents virtually all the general-purpose public HEIs in the Italian system. Some public HEIs are excluded due to the incompleteness of the data in the observed period (namely: Università di Macerata; Politecnico di Bari; Università di Napoli “L’Orientale”; IUAV di Venezia). Other HEIs are excluded from the sample because of the peculiarities of those institutions (Scuola Normale Superiore di Pisa; Scuola Superiore di Studi Universitari e Perfezionamento Sant'Anna di Pisa; Scuola Internazionale Superiore di Studi Avanzati di Trieste; Scuola IMT di Lucca; Istituto Universitario di Studi Superiori di Pavia; Università degli Studi di Roma "Foro Italico"; Università per Stranieri di Siena; Università per Stranieri di Perugia).

Results are robust with respect to bias correction and to the assumptions of variable returns to scale and free disposability of inputs, and results are available upon request.

STUD* stands for STUD · 10^4.

GRAD* stands for GRAD · 10^3.

RESEARCH* stands for RESEARCH · 10^3.

To save space we do not report all the results obtained using different estimators. The analytical results of the conditional and unconditional estimates are available upon request.

We also perform a number of other robustness checks to assess effects between other activities and performances including a two-stage robust semiparametric approach proposed by Simar and Wilson (2007) obtaining results comparable with those reported here. Results are available upon request.

However, our findings are robust with respect to variable returns to scale. Estimates are available upon request.

References

Abbott, M., & Doucouliagos, C. (2003). The efficiency of Australian universities: A data envelopment analysis. Economics of Education Review, 22, 89.

Agasisti, T., & Catalano, G. (2006). Governance models of university systems towards quasi-markets? European comparison. Journal of Higher Education Policy and Management, 28, 245–262.

Agasisti, T., & Dal Bianco, A. (2009). Reforming the university sector: Effects on teaching efficiency-evidence from Italy. Higher Education, 57, 477–498.

Agasisti, T., & Pérez-Esparrells, C. (2010). Comparing efficiency in a cross-country perspective: The case of Italian and Spanish state universities. Higher Education, 59, 85–103.

Agasisti, T., & Pohl, C. (2012). Comparing German and Italian public universities: Convergence or divergence in the higher education landscape? Managerial and Decision Economics, 33, 71–85.

Agasisti, T., & Wolszczak-Derlacz, J. (2014). Exploring universities’ efficiency differentials between countries in a multi-year perspective: An application of bootstrap DEA and Malmquist index to Italy and Poland, 2001–2011, IRLE working paper no. 113-14.

Aghion, P., Dewatripont, M., Hoxby, C., Mas-Colell, A., & Sapir, A. (2010). The governance and performance of universities: Evidence from Europe and the US. Economic Policy, 25, 7–59.

Aigner, D. J., Lovell, C. A. K., & Schmidt, P. (1977). Formulation and estimation of stochastic frontier production functions. Journal of Econometrics, 6, 21–37.

Archibald, R. B., & Feldman, D. H. (2008). Explaining increases in higher education costs. Journal of Higher Education, 79, 268–295.

Athanassopoulos, A., & Shale, E. (1997). Assessing the comparative efficiency of higher education institutions in the UK by means of data envelopment analysis. Education Economics, 5, 117–134.

Auranen, O., & Nieminen, M. (2010). University research funding and publication performance: An international comparison. Research Policy, 39, 822–834.

Avkiran, N. K. (2001). Investigating technical and scale efficiencies of Australian universities through data envelopment analysis. Socio-Economic Planning Sciences, 35, 57–80.

Ayadi, R., Boussemart, J., Leleu, H., & Saidane, D. (2013). Mergers and acquisitions in European banking: Higher productivity or better synergy among business lines? Journal of Productivity Analysis, 39, 165–175.

Badin, L., & Simar, L. (2009). A bias-corrected nonparametric envelopment estimator of frontiers. Econometric Theory, 25, 1289–1318.

Barro, R. J., & Sala-i-Martin, X. (1991). Convergence across states and regions. Brookings Papers on Economic Activity, 1, 107–182.

Barro, R. J., & Sala-i-Martin, X. (1992). Convergence. Journal of Political Economy, 100, 223–251.

Beasley, J. (1990). Comparing university departments. Omega, 18, 171–183.

Beasley, J. (1995). Determining teaching and research efficiencies. Journal of the Operational Research Society, 46, 441–452.

Bergantino, A. S., Capozza, C., & Porcelli, F. (2013). Measuring the efficiency of the Italian university system: The role of market structure. A two step DEA analysis at faculty level. Paper presented at the XXV conference of the Italian Association of Public Economics (SIEP).

Bonaccorsi, A., Daraio, C., & Simar, L. (2006). Advanced indicators of productivity of universities: An application of robust nonparametric methods to Italian data. Scientometrics, 66, 389–410.

Bonaccorsi, A., Daraio, C., & Simar, L. (2007). Productivity and efficiency of European universities. In A. Bonaccorsi & C. Daraio (Eds.), Universities and strategic knowledge creation: Specialization and performance in Europe. Cheltenham: Edward Elgar.

Brunori, P., Peragine, V., & Serlenga, L. (2012). Fairness in education: The Italian university before and after the reform. Economics of Education Review, 31, 764–777.

Cappellari, L., & Lucifora, C. (2009). The “Bologna Process” and college enrollment decisions. Labour Economics, 16, 638–647.

Casu, B., & Girardone, C. (2010). Integration and efficiency convergence in EU banking markets. Omega, 38, 260–267.

Cazals, C., Florens, J. P., & Simar, L. (2002). Nonparametric frontier estimation: A robust approach. Journal of Econometrics, 106, 1–25.

Charnes, A., Cooper, W. W., & Rhodes, E. (1978). Measuring the efficiency of decision making units. European Journal of Operational Research, 2, 429–444.

Daouia, A., & Simar, L. (2007). Non-parametric efficiency analysis: A multivariate conditional quantile approach. Journal of Econometrics, 140, 375–400.

Daraio, C., & Simar, L. (2005). Introducing environmental variables in nonparametric frontier models: A probabilistic approach. Journal of Productivity Analysis, 24, 93–121.

Daraio, C., & Simar, L. (2007). Advanced robust and nonparametric methods in efficiency analysis. Methodology and applications. New York: Springer.

De Witte, K., Rogge, N., Cherchye, L., & Van Puyenbroeck, T. (2013a). Accounting for economies of scope in performance evaluations of university professors. Journal of the Operational Research Society, 64, 1595–1606.

De Witte, K., Rogge, N., Cherchye, L., & Van Puyenbroeck, T. (2013b). Economies of scope in research and teaching: A non-parametric investigation. Omega, 41, 305–314.

Debreu, G. (1951). The coefficient of resource utilization. Econometrica, 19, 273–292.

Deprins, D., Simar, L., & Tulkens, H. (1984). Measuring labor efficiency in post offices. In M. Marchand, P. Pestieau, & H. Tulkens (Eds.), The performance of public enterprises: Concepts and measurements. Amsterdam: North Holland.

Di Pietro, G., & Cutillo, A. (2008). Degree flexibility and university drop-out: The Italian experience. Economics of Education Review, 27, 546–555.

Dundar, H., & Lewis, D. (1995). Departmental productivity in American universities: Economies of scale and scope. Economics of Education Review, 14, 119–144.

Fare, R., Grosskopf, S., & Lovell, C. (1994). Production frontiers. Cambridge: Cambridge University Press.

Farrell, M. (1957). The measurement of productive efficiency. Journal of the Royal Statistical Society Series A (General), 120, 253–290.

Feldman, K. (1987). Exploring the relationship between research productivity and college students’ ratings of teachers: Quantitative reviews and the logic of analysis. Paper presented at the Annual Meeting of the American Educational Research Association, Washington, DC.

Flegg, A. T., Allen, D. O., Field, K., & Thurlow, T. W. (2004). Measuring the efficiency of British universities: A multi-period data envelopment analysis. Education Economics, 12, 231–249.

Guccio, C., Martorana, M. F., & Monaco, L. (2015). Evaluating the impact of the Bologna Process on the efficiency convergence of Italian universities: A non-parametric frontier approach. Journal of Productivity Analysis. doi:10.1007/s11123-015-0459.

Hattie, J., & Marsh, H. W. (1996). The relationship between research and teaching: A meta-analysis. Review of Educational Research, 66, 507–542.

Hattie, J., & Marsh, H. W. (2004). One journey to unravel the relationship between research and teaching. Research and teaching: Closing the divide? An international colloquium, Winchester, March 18–19.

Herbst, M. (2007). Financing public universities: The case of performance funding. Dordrecht: Springer.

Hicks, D. (2012). Performance-based university research funding systems. Research Policy, 41, 251–261.

Huisman, J., & Van der Wende, M. (2004). The EU and Bologna: Are supra-and international initiatives threatening domestic agendas? European Journal of Education, 39, 349–357.

Johnes, J. (2006). Data envelopment analysis and its applications to the measurement of efficiency in higher education. Economics of Education Review, 25, 273–288.

Johnes, J. (2008). Efficiency and productivity change in the English higher education sector from 1996/97 to 2004/5. Manchester School, 76, 653–674.

Johnes, J., & Johnes, G. (1995). Research funding and performance in U.K. University departments of economics: A frontier analysis. Economics of Education Review, 14, 301–314.

Johnes, J., & Yu, L. (2008). Measuring the research performance of Chinese higher education institutions using data envelopment analysis. China Economic Review, 19, 679–696.

Joumady, O., & Ris, C. (2005). Performance in European higher education: A non-parametric production frontier approach. Education Economics, 13, 189–205.

Kantabutra, S., & Tang, J. (2010). Efficiency analysis of public universities in Thailand. Tertiary Education and Management, 16, 15–33.

Kempkes, G., & Pohl, C. (2010). The efficiency of German universities—Some evidence from nonparametric and parametric methods. Applied Economics, 42, 2063–2079.

Koopmans, T. C. (1951). An analysis of production as an efficient combination of activities. In T. C. Koopmans (Ed.), Activity analysis of production and allocation, Cowles Commission for Research in Economics, monograph 13 (pp. 33–37). New York: Wiley.

Kremer, J. (1990). Construct validity of multiple measures in teaching, research and services and reliability of peer ratings. Journal of Educational Psychology, 82, 213–218.

Kuah, C., & Wong, K. (2011). Efficiency assessment of universities through data envelopment analysis. Procedia Computer Science, 3, 499–506.

Lambert, R., & Butler, N. (2006). The future of European universities: Renaissance or decay? London: Centre for European Reform.

Laureti, T., Secondi, L., & Biggeri, L. (2014). Measuring the efficiency of teaching activities in Italian universities: An information theoretic approach. Economics of Education Review, 42, 147–164.

Madden, G., Savage, S., & Kemp, S. (1997). Measuring public sector efficiency: A study of economics departments at Australian universities. Education Economics, 5, 153–168.

Meeusen, W., & van den Broeck, J. (1977). Efficiency estimation from Cobb–Douglas production functions with composed error. International Economic Review, 18, 435–444.

Neumann, R. (1992). Perceptions of the teaching-research nexus: A framework for analysis. Higher Education, 23, 159–171.

Olivares, M., & Wetzel, H. (2011). Competing in the higher education market: Empirical evidence for economies of scale and scope in German higher education institutions, University of Lüneburg working paper series in economics, no. 223.

Parteka, A., & Wolszczak-Derlacz, J. (2013). Dynamics of productivity in higher education: Cross-European evidence based on bootstrapped Malmquist indices. Journal of Productivity Analysis, 40, 67–82.

Rebora, G., & Turri, M. (2013). The UK and Italian research assessment exercises face to face. Research Policy, 42, 1657–1666.

Sánchez-Barrioluengo, M. (2014). Articulating the ‘three-missions’ in Spanish universities. Research Policy, 43(10), 1760–1773.

Sav, G. T. (2012). Productivity, efficiency, and managerial performance regress and gains in United States universities: A data envelopment analysis. Advances in Management & Applied Economics, 2, 13–32.

Sellers-Rubio, R., Mas-Ruiz, F. J., & Casado-Díaz, A. B. (2010). University efficiency: Complementariness versus trade-off between teaching, research and administrative activities. Higher Education Quarterly, 64, 373–391.

Sheather, S. J., & Jones, M. C. (1991). A reliable data-based bandwidth selection method for Kernel density estimation. Journal of the Royal Statistical Society: Series B (Methodological), 53, 683–690.

Shephard, R. W. (1970). Theory of cost and production functions. Princeton: Princeton University Press.

Simar, L., & Wilson, P. (1998). Sensitivity analysis of efficiency scores: How to bootstrap in nonparametric frontier models. Management Science, 44, 49–61.

Simar, L., & Wilson, P. (2000). Statistical inference in nonparametric frontier models: The state of the art. Journal of Productivity Analysis, 13, 49–78.

Simar, L., & Wilson, P. (2002). Nonparametric tests of returns to scale. European Journal of Operational Research, 139, 115–132.

Simar, L., & Wilson, P. (2007). Estimation and inference in two-stage, semi-parametric models of production processes. Journal of Econometrics, 136, 31–64.

Simar, L., & Wilson, P. (2008). Statistical inference in nonparametric frontier models: Recent developments and perspectives. In H. O. Fried, C. A. Knox Lovell, & S. S. Schmidt (Eds.), The measurement of productive efficiency and productivity growth (pp. 421–521). New York: Oxford University Press.

St. Aubyn, M., Pina, A., Garcia, F., & Pais, J. (2009). Study on the efficiency and effectiveness of public spending on tertiary education. Directorate General Economic and Monetary Affairs (DG ECFIN), European Commission economic papers, no. 390.

Tomkins, C., & Green, R. (1988). An experiment in the use of data envelopment analysis for evaluating the efficiency of UK university departments of accounting. Financial Accountability and Management, 4, 147–164.

Wand, M. P., & Jones, M. C. (1995). Kernel smoothing. London: Chapman and Hall Press.

Weill, L. (2009). Convergence in banking efficiency across European countries. Journal of International Financial Markets, Institutions and Money, 19, 818–833.

Wolszczak-Derlacz, J., & Parteka, A. (2010). Scientific productivity of public higher education institutions in Poland—A comparative bibliometric analysis. EY—Better Governance, Warsaw.

Wolszczak-Derlacz, J., & Parteka, A. (2011). Efficiency of European public higher education institutions: A two-stage multicountry approach. Scientometrics, 89, 887–917.

Worthington, A. (2001). An empirical survey of frontier efficiency measurement techniques in education. Education Economics, 9, 245–268.

Zhang, T., & Matthews, K. (2012). Efficiency convergence properties of Indonesian banks 1992–2007. Applied Financial Economics, 22, 1465–1478.

Acknowledgments

Preliminary versions of this paper were presented at the XXVII annual congress of the Italian Society of Public Economics (Ferrara, 2015) and at seminars at Kyoto Sangyo University and the University of Munich. We thank the participants and discussants, as well as two anonymous reviewers, for several helpful comments. The usual caveat applies.

Cite this article

Guccio, C., Martorana, M.F. & Mazza, I. Efficiency assessment and convergence in teaching and research in Italian public universities. Scientometrics 107, 1063–1094 (2016). https://doi.org/10.1007/s11192-016-1903-8

DOI: https://doi.org/10.1007/s11192-016-1903-8