Abstract

This pioneering approach to the subject area of Information Literacy Assessment in Higher Education (ILAHE) aims to gain further knowledge about its scope from a terminological-spatial perspective, and to weight and categorize relevant terms on the basis of their levels of similarity. Through a retrospective and selective search, bibliographic references of the scientific literature on ILAHE were obtained from the most representative databases (LISA, ERIC and WOS), covering the period 2000–2011 and restricted to English-language items. Keywords in the titles, descriptors and abstracts of the selected items were labelled and extracted with Atlas.ti software. The main research topics in this field were determined through a co-word analysis and represented graphically with the software VOSviewer. The results showed two areas of different density and five clusters covering the following issues: evaluation-education, assessment, students-efficacy, learning-research, and library. This method facilitated the identification of the main research topics in ILAHE and of their degree of proximity and overlap.


Introduction and objectives

Information Literacy (IL) refers to a set of individual competencies that have an impact on education systems. In higher education (HE), IL has become a major concern. Increasingly, universities are promoting a culture of IL education, mostly among their students. Universities are well aware of the need to promote IL skills and to foster greater autonomy in dealing with information-related problems (Pinto and Sales 2008; Shenton and Fitzgibbons 2010). HE institutions therefore ought to provide appropriate assessment tools to reveal and analyze student achievement in IL skills and their level of acquisition of the related competencies. However, while there is a substantial bibliographic production on IL and HE, this is not the case for the more recent topic of “assessment” within the scope of IL and HE. Its growth in recent years nevertheless suggests that this subject has a promising future. Hence, there is increasing interest in addressing the issue of “Information Literacy Assessment in Higher Education” (ILAHE).

This work focuses on the bibliometric analysis of the scientific production on ILAHE from 2000 to 2011, drawing on a number of professional databases. The overall goal is to view and explore the most representative keywords and key research issues in this strategic subject field. Given the multidisciplinary nature of the research, it is important to know the likely degree of terminological proximity and overlap among the different trends that might be found.

Literature review

A number of bibliometric studies, such as Nazim and Ahmad (2007) and Aharony (2010), have shown an increase in IL publications over the years, mostly in the USA and the UK. Pinto et al. (2010) followed a qualitative and quantitative approach to examine the changes over three decades in relation to two interdependent clusters of concepts: IL and computer literacy. Pinto et al. (2013a) conducted a bibliometric study of the scientific literature on IL (1974–2011), and Pinto et al. (2013) carried out a co-word analysis of the research production in the areas of Social Sciences and Health Sciences. Meanwhile, studies of the evolution of IL publications underline the growing emphasis of the literature on the assessment of students’ learning outcomes (Rader 2002).

There are numerous literature reviews on both IL and HE. However, no reviews have been found addressing the triad “assessment”, “IL” and “HE”. Although the concepts of evaluation and assessment are closely related, the IL literature clearly differentiates them: “While evaluation involves rating the performance of services, programs, or individual instructors, etc., assessment concentrates on what students are learning” (Rabine and Cardwell 2000). The meanings and types of assessment, and the approaches to the study of its basic aspects, are so varied that the potential for research in this area seems almost unlimited. In any case, both evaluation and assessment are necessary components of IL programs (Chen and Lin 2011). Within the field of IL, assessment models and assessment tools, while different, complement each other. Most models of IL assessment are of two basic types: behaviourist or constructivist. While behaviourist learning theory is associated with traditional objective testing, the constructivist paradigm is characterized by shared theoretical principles of curricular design, psychology, and assessment. In the constructivist view, “a broader range of assessment tools is needed to capture important learning goals and processes and to connect assessment more directly to ongoing instruction” (Shepard 2000). In constructivist assessment models, assessment drives curricular design: teachers first determine the tasks that students will perform to demonstrate their command of a competence, and then develop a curriculum that will enable students to perform those tasks well (Mueller 2013). Indeed, “increased understanding of the user’s point of view will be a valuable aid to both research and practice in order to best promote and teach information literacy skills” (Gross and Lathan 2008). Classroom assessment is the kind of evaluation that can be used as part of instruction itself to support and enhance learning (Shepard 2000).

The constructivist approach considers assessment to be part of the learning process rather than a separate process (Fourie and van Niekerk 2001). Kirkpatrick’s constructivist model distinguishes four levels of evaluation: reaction, learning, behavior, and results (Salisbury and Ellis 2003). The Cross/Angelo model views classroom assessment as learner-centered, teacher-directed, mutually beneficial, formative, context-specific, continuing, and rooted in good teaching practice (Stewart 1999). The model of the Information Literate University identifies linkages between management, research, students and graduates, curriculum, staff development, and librarians (Webber and Johnson 2006).

Another example presents sustainable IL assessment within authentic assessment projects that collect and assess student work through course learning goals, pre-existing assignments, and students’ self-assessments based on ePortfolios and rubrics (Bussert et al. 2009). A conceptual framework for understanding student behavior related to reading, writing, and thinking as well as information seeking—the fundamental components of IL—is presented in a constructivist model (Nichols 2009). Another interesting work proposes a model for teaching and learning intervention which integrates ideas from the fields of IL, teaching and learning, e-learning, and information behavior (Walton and Hepworth 2011). In line with this research, there have been initiatives encouraging collaborative IL activities (Oakleaf et al. 2011) and formative assessment: “the use of formative assessment creates effective information literacy instruction by acknowledging variation in information literacy skills among students” (Dunaway and Orblych 2011).

Many librarians have developed their own tools to assess IL (Walsh 2009). The National Survey of Student Engagement gathers information about student participation in the programs and activities that institutions provide for their learning and personal development (Indiana University 2013). The Information Literacy Test is a computerized, multiple-choice test conceived as an instrument to assess the ACRL Information Literacy Competency Standards for Higher Education (Cameron et al. 2007). The SAILS (Standardized Assessment of Information Literacy Skills) test is an IL assessment tool designed to compare both groups of students and individuals (SAILS 2000). iSkills (TM), an interactive, problem- and scenario-based web assessment tool, was the result of a broad effort to establish standards for the performance and certification of ICT literacy proficiencies (Somerville et al. 2008). This tool reflects the collaboration of academic librarians across the US to provide a national perspective on information competence (Brasley et al. 2009). Project Information Literacy is a national research study on how college students conduct research and find information, considering their needs, strategies, and workarounds (Head and Eisenberg 2010). Another research project assesses learning along the dimensions of critical thinking, analytical reasoning, and written communication (Arum and Roksa 2010). The Information Skills Survey is an evidence-based test instrument designed to evaluate the IL skills of Law, Education, and Social Science students (Catts 2005). The self-reporting IL-HUMASS and INFOLITRANS surveys (Pinto 2010; Pinto and Sales 2008) may also be of interest.

There have, however, been few examples of assessments developed jointly by librarians and course faculty, and even fewer “authentic assessments” using measures that require real-world research (Brown and Kingsley-Wilson 2010). Among these authentic assessment tools, portfolios and rubrics stand out. While portfolios are meaningful collections of student work that include students’ reflection on and evaluation of their own performance, rubrics are scoring scales used to assess student performance against a task-specific set of criteria (Mueller 2013). “Future investigations could include evaluations of a wide variety of performance assessments, including student bibliographies, research journals, and portfolios. All these areas of additional research will help build a strong foundation for future uses of information literacy assessment rubrics” (Oakleaf 2009).

Materials and methods

The methodology is mixed and empirical, as it is based on qualitative and quantitative procedures and on real data. For the bibliometric study of the selected ILAHE terms, a co-word analysis based on the co-occurrence of keywords taken from the titles, abstracts and descriptors of the original documents was used. The resulting conceptual network illustrates ILAHE’s landscape (Jacobs 2002).

The methodological steps were: searching and selecting databases to build an ILAHE database; labeling, selecting and counting keywords (frequencies); finding and analyzing keyword co-occurrences (co-occurrence matrix); analyzing the VOSviewer output (similarity matrix) to map keywords; visualizing the results, including spatial (density) and thematic (cluster) views; and finally, synthesizing the outcomes from a critical interpretive perspective (Bawden 2012).

The ILAHE database

A selective search for English-language bibliographic references on ILAHE from 2000 to 2011 was performed. It was extensive but not exhaustive, and covered three databases: LISA (101 items), WOS (112 items), and ERIC (84 items). The following search terms were used: “information literacy” AND (“test” OR “evaluation” OR “assessment”) AND (“university” OR “higher education” OR “universities”). The 297 references retrieved were narrowed down to 268 after validation and removal of duplicates. Each reference contained the following elements: title, year of publication, publisher, abstract, descriptors, external link, and document type.
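The Boolean logic of this search and the de-duplication step can be illustrated with a short Python sketch. It is not the databases’ own query engine: it simply re-applies the three-part query to hypothetical records. The `records` list, its field names and the title-normalization rule used to detect duplicates are assumptions for illustration only.

```python
import re

def matches_ilahe_query(text: str) -> bool:
    """Re-apply the search logic: "information literacy" AND
    (test OR evaluation OR assessment) AND (university OR "higher education" OR universities)."""
    t = text.lower()
    has_il = "information literacy" in t
    # \b word boundaries so that e.g. "contest" does not count as "test"
    has_assessment = any(re.search(rf"\b{w}\b", t) for w in ("test", "evaluation", "assessment"))
    has_he = any(re.search(rf"\b{w}\b", t) for w in ("university", "higher education", "universities"))
    return has_il and has_assessment and has_he

def title_key(title: str) -> str:
    """Normalize a title (case, punctuation, spacing) to detect duplicates across databases."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()

def deduplicate(records):
    """Keep the first record seen for each normalized title."""
    seen, unique = set(), []
    for rec in records:
        key = title_key(rec["title"])
        if key not in seen:
            seen.add(key)
            unique.append(rec)
    return unique

# Hypothetical records exported from LISA, ERIC and WOS
records = [
    {"db": "LISA", "title": "Information Literacy Assessment at a University Library",
     "abstract": "We report an information literacy assessment of university students."},
    {"db": "ERIC", "title": "Information literacy assessment at a university library.",
     "abstract": "We report an information literacy assessment of university students."},
    {"db": "WOS", "title": "Teaching citation styles",
     "abstract": "A workshop on referencing for undergraduates."},
]

matched = [r for r in records if matches_ilahe_query(r["title"] + " " + r["abstract"])]
final = deduplicate(matched)
print(len(records), len(matched), len(final))  # 3 2 1
```

Matching on a normalized title is only one possible duplicate criterion; in practice validation also involved checking the records themselves.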

Keyword frequencies and co-occurrences

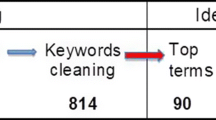

The database search results were used as a basis for labelling keywords within the selected content indicators (title, descriptors and abstract) using Atlas.ti 7.0, desktop software for qualitative text interpretation. Its goal was “to develop a tool that effectively supports the human interpreter, especially in the handling of complex informational structures” (Muhr 1991, p. 350). The coding function available in ATLAS/ti, “designed under the influence of the methodology of grounded theory”, was used here for keyword detection. The most frequent keywords were then selected, ruling out those whose frequency was less than 3 % of the total number of references, on the understanding that these are not relevant to the research. We then examine the structure of the resulting set of keywords from the most basic perspective, that of co-occurrence between pairs of words. For this purpose, an algorithm was used to build a matrix recording the levels of co-occurrence among pairs of keywords (co-occurrence matrix).
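To illustrate the frequency threshold and the co-occurrence matrix, the following Python sketch assumes two things: that “frequency” means the number of references in which a keyword appears, and that two keywords co-occur when they appear in the same reference. Both readings are assumptions, and the toy keyword lists are hypothetical. With 268 references, a 3 % threshold is 8.04, so under this reading a keyword must occur in at least nine references to be kept. The tiny example below raises the threshold so that it has a visible effect.

```python
from collections import Counter
from itertools import combinations

def select_keywords(docs, min_share=0.03):
    """Keep keywords whose document frequency is at least min_share of all documents."""
    doc_freq = Counter(kw for doc in docs for kw in set(doc))
    cutoff = min_share * len(docs)
    return {kw for kw, f in doc_freq.items() if f >= cutoff}

def cooccurrence(docs, vocab):
    """Count, for each unordered keyword pair, the documents in which both appear."""
    counts = Counter()
    for doc in docs:
        present = sorted(set(doc) & vocab)   # sorting gives each pair one canonical key
        for a, b in combinations(present, 2):
            counts[(a, b)] += 1
    return counts

# Hypothetical keyword lists (one per reference); min_share is raised for this tiny sample
docs = [
    ["assessment", "rubric", "students"],
    ["assessment", "students"],
    ["assessment", "library"],
    ["rubric", "students", "assessment"],
]
vocab = select_keywords(docs, min_share=0.5)   # "library" (1 of 4 docs) is dropped
pairs = cooccurrence(docs, vocab)
print(sorted(vocab))                      # ['assessment', 'rubric', 'students']
print(pairs[("assessment", "students")])  # 3
```

Storing only the upper triangle of the symmetric matrix (one key per unordered pair) keeps the structure compact. The full matrix can be rebuilt by mirroring it.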

VOSviewer analysis

From the co-occurrence matrix, the VOSviewer software computed the corresponding similarity matrix, from which it built the map that visually identifies the main research themes on ILAHE. This yields areas of differing keyword density and also allows keywords to be grouped into conceptual clusters (van Eck and Waltman 2010).
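As we understand van Eck and Waltman (2010), VOSviewer normalizes co-occurrence counts with the association strength measure, s_ij = c_ij / (w_i w_j), where c_ij is the number of co-occurrences of keywords i and j. The following minimal Python sketch is not VOSviewer’s source code. It assumes that w_i is the total number of co-occurrences of keyword i (the paper also allows total occurrences), and the counts are hypothetical.

```python
from collections import Counter

def association_strength(pairs):
    """Similarity s_ij = c_ij / (w_i * w_j), where w_i is keyword i's total co-occurrences."""
    totals = Counter()
    for (a, b), c in pairs.items():
        totals[a] += c
        totals[b] += c
    return {(a, b): c / (totals[a] * totals[b]) for (a, b), c in pairs.items()}

# Hypothetical co-occurrence counts for three keywords
pairs = {("assessment", "rubric"): 2, ("assessment", "students"): 3, ("rubric", "students"): 2}
sim = association_strength(pairs)
# totals: assessment = 5, rubric = 4, students = 5
print(sim[("assessment", "students")])  # 0.12  (3 / (5 * 5))
print(sim[("assessment", "rubric")])    # 0.1   (2 / (5 * 4))
```

Each raw count is divided by the product of the two keywords’ totals. As a result, pairs involving very frequent, generic keywords are not automatically ranked as the most similar.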

Results

The set of 268 retrieved references on ILAHE included journal papers (76.20 %), reports (11.50 %), books (7.70 %), and conference proceedings (4.60 %). The number of publications grows somewhat over the period under study (Fig. 1).

The most productive journals belong to the field of Library and Information Science (Table 1).

The 268 retrieved articles are authored by 562 scholars, giving a collaboration index of 2.10 authors per article (Table 2). Co-authorship occurs in 168 items, i.e. 62.7 % of the works are signed by two or more authors.
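The two collaboration figures are simple ratios of the counts reported above. A short Python check using only those counts:

```python
articles = 268        # retrieved references
authors = 562         # authors reported for these articles
multi_authored = 168  # items signed by two or more authors

collaboration_index = authors / articles           # mean authors per article
share_multi = 100 * multi_authored / articles      # percentage of co-authored items

print(f"{collaboration_index:.2f} authors per article")  # 2.10 authors per article
print(f"{share_multi:.1f} % co-authored")                # 62.7 % co-authored
```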

Authors’ scientific production is displayed in ascending order. A total of 432 authors have a single publication, and only one author has seven publications. Average productivity is 1.15 items per author (Table 3).

Regarding productivity, a non-parametric one-sample Kolmogorov–Smirnov test, carried out with SPSS 20 against normal distributions defined by the variables’ means and standard deviations, indicated that the variables are consistent with a normal distribution.
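A one-sample Kolmogorov–Smirnov test compares the empirical distribution of a variable with a reference normal distribution whose mean and standard deviation are taken from the sample. The following Python sketch computes the statistic D and an asymptotic p-value using only the standard library. It is an illustrative re-implementation, not the SPSS routine, and the sample values are hypothetical. When the mean and standard deviation are estimated from the same sample, this asymptotic p-value is conservative (the Lilliefors correction addresses this).

```python
import math
from statistics import NormalDist, mean, stdev

def ks_statistic(sample, mu=None, sigma=None):
    """One-sample Kolmogorov-Smirnov statistic D against a normal distribution.
    If mu/sigma are not given, they are estimated from the sample."""
    xs = sorted(sample)
    n = len(xs)
    ref = NormalDist(mean(xs) if mu is None else mu,
                     stdev(xs) if sigma is None else sigma)
    d = 0.0
    for i, x in enumerate(xs, start=1):
        f = ref.cdf(x)
        # largest gap between the empirical step function and the normal CDF
        d = max(d, i / n - f, f - (i - 1) / n)
    return d

def ks_pvalue(d, n, terms=100):
    """Asymptotic p-value from the Kolmogorov distribution, with a common
    (sqrt(n) + 0.12 + 0.11/sqrt(n)) small-sample adjustment."""
    lam = (math.sqrt(n) + 0.12 + 0.11 / math.sqrt(n)) * d
    if lam < 0.3:
        return 1.0  # the series converges slowly here and its value is ~1
    q = 2 * sum((-1) ** (k - 1) * math.exp(-2 * (k * lam) ** 2) for k in range(1, terms + 1))
    return min(1.0, max(0.0, q))

# Hypothetical sample values for 10 authors
scores = [3.1, 2.4, 2.9, 3.6, 2.2, 3.0, 2.7, 3.3, 2.5, 3.8]
d = ks_statistic(scores)
print(round(d, 3), round(ks_pvalue(d, len(scores)), 3))
```

A large p-value means normality is not rejected. It does not, on its own, prove that the data are normal.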

Details about country, professional profile, and number of publications from the most productive authors were also obtained (Table 4).

A list of authors with two publications on ILAHE is also displayed (Table 5).

The results presented above provide a descriptive overview of the quantitative scientific production on ILAHE for the period under study. However, the most relevant and detailed results of this research are those obtained from the analysis of keywords in three of the content indicators used in the searches: titles, descriptors, and abstracts.

VOSviewer’s density and cluster views

To identify the main research themes within the ILAHE field, a total of 575 different keywords were analyzed, a rate of 2.15 keywords per retrieved item. The analysis focused on the 140 terms with the highest levels of co-occurrence.
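One way to read “the 140 terms with the highest levels of co-occurrence” is to rank keywords by their total link strength, that is, the sum of their co-occurrence counts with all other keywords. That reading is an assumption, and the pair counts below are hypothetical. The sketch also reproduces the keywords-per-item ratio reported above.

```python
from collections import Counter

def top_by_link_strength(pairs, n):
    """Rank keywords by total link strength (sum of their pair counts); keep the top n."""
    strength = Counter()
    for (a, b), c in pairs.items():
        strength[a] += c
        strength[b] += c
    return [kw for kw, _ in strength.most_common(n)]

# Hypothetical pair counts; in the study n would be 140
pairs = {("assessment", "rubric"): 2, ("assessment", "students"): 3,
         ("rubric", "students"): 2, ("library", "students"): 1}
print(top_by_link_strength(pairs, 2))        # ['students', 'assessment']
print(f"{575 / 268:.2f} keywords per item")  # 2.15 keywords per item
```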

The map obtained with the VOSviewer software presented two significant views of the main keywords, one relating to their density and the other to their clustering. Density “depends both on the number of neighboring items and on the weights of these items” (van Eck and Waltman 2010). The distribution is rather scattered, with two areas. The first is a central area of high density (core), large and irregular in shape, which includes the most heavily weighted and closely related terms. The second is a low-density zone (periphery) made up of the remaining terms, which are more distant from each other, although no less significant (Fig. 2). As can be seen, there are no clearly dominant terms. Ultimately, the size and shape of the core reflect the ILAHE domain, which is sharply interdisciplinary and brings together different paradigms.
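The density view can be sketched from the item density formula as we understand it from van Eck and Waltman (2010). The density at a point x is the sum over items of w_i K(‖x − x_i‖ / (d̄ h)), where K(t) = exp(−t²) is a Gaussian kernel, d̄ is the average distance between items and h is a kernel-width parameter. The following Python sketch assumes h = 1 and uses hypothetical coordinates and weights. VOSviewer’s actual implementation may differ in detail.

```python
import math
from itertools import combinations

def item_density(point, items, h=1.0):
    """Density at `point`: sum of w_i * exp(-(d(point, x_i) / (dbar * h)) ** 2),
    where dbar is the mean distance between all pairs of items."""
    coords = [(x, y) for x, y, _ in items]
    pair_dists = [math.dist(p, q) for p, q in combinations(coords, 2)]
    dbar = sum(pair_dists) / len(pair_dists)
    return sum(w * math.exp(-(math.dist(point, (x, y)) / (dbar * h)) ** 2)
               for x, y, w in items)

# Hypothetical map: two close keywords (a "core") and one distant keyword
items = [(0.0, 0.0, 1.0), (1.0, 0.0, 1.0), (10.0, 0.0, 1.0)]
print(round(item_density((0.5, 0.0), items), 2))   # near the pair: higher density
print(round(item_density((10.0, 0.0), items), 2))  # isolated keyword: lower density
```

Points surrounded by many heavily weighted keywords accumulate high density. This is why a core with many close, weighted terms stands out from a sparse periphery.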

The second view shows how the keywords under study are organized into networks by modularity-based clustering. VOSviewer determines the number of clusters and uses colors to distinguish the words that belong to each one. The name of each cluster, however, must be assigned by the analyst according to the words it contains. The analysis revealed five clearly defined clusters reflecting the main tendencies in the ILAHE field (Fig. 3). The structure of the map highlights the considerable overlap between the different clusters.
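Modularity measures how much more link weight falls inside clusters than would be expected by chance. The following Python sketch scores a given partition with the standard weighted modularity formula, with an optional resolution parameter, on a hypothetical graph. It is a simplified illustration. It does not search for the best partition, and VOSviewer’s own clustering optimizes a weighted, parameterized variant of modularity.

```python
from collections import defaultdict

def modularity(edges, clusters, resolution=1.0):
    """Weighted modularity Q = sum_c [L_c / m - resolution * (d_c / 2m) ** 2].
    edges: {(a, b): weight}, each undirected link listed once, no self-loops;
    clusters: {node: cluster_id}."""
    degree = defaultdict(float)
    for (a, b), w in edges.items():
        degree[a] += w
        degree[b] += w
    two_m = sum(degree.values())          # twice the total link weight
    internal = sum(w for (a, b), w in edges.items() if clusters[a] == clusters[b])
    cluster_degree = defaultdict(float)
    for node, k in degree.items():
        cluster_degree[clusters[node]] += k
    return (2 * internal / two_m
            - resolution * sum((dc / two_m) ** 2 for dc in cluster_degree.values()))

# Hypothetical keyword graph: two triangles joined by a single link
edges = {("a", "b"): 1, ("b", "c"): 1, ("a", "c"): 1,
         ("d", "e"): 1, ("e", "f"): 1, ("d", "f"): 1, ("c", "d"): 1}
two_groups = {"a": 0, "b": 0, "c": 0, "d": 1, "e": 1, "f": 1}
one_group = {node: 0 for node in "abcdef"}
print(round(modularity(edges, two_groups), 4))  # 0.3571
print(round(modularity(edges, one_group), 4))   # 0.0
```

Splitting the two triangles scores higher than lumping everything together. A clustering algorithm looks for the partition that maximizes this kind of score.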

The density and cluster views together reveal the complexity and dynamism of the ILAHE field, in which five consolidated lines of research overlap. This explains the large size of the core, which contains keywords from all five clusters. The Evaluation-Education cluster, arguably the densest, overlaps the others because all of its keywords share space with at least one of the other clusters, i.e. the whole cluster also fits inside at least one other square in the figure. The squares in Fig. 3 have been outlined to mark the keywords of each cluster. The Assessment and Students-Efficacy clusters are also quite central, and they overlap completely. The Learning-Research and Library clusters are less central, since both have a significant number of terms that do not share space with any other cluster.

Research fronts

Each cluster, or research front, consists of a particular set of keywords. Each keyword has a normalized weight and a map location.
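The cluster comparisons that follow rest on a few per-cluster quantities: size (number of keywords), total and average normalized weight per keyword, and the split between core and periphery. The following minimal Python sketch assumes that each keyword is already tagged with its cluster, normalized weight and zone. The criterion for assigning core or periphery is not specified here, and the data are hypothetical.

```python
def summarize_clusters(keywords):
    """Per cluster: size, core/periphery counts, total and average normalized weight."""
    summary = {}
    for kw in keywords:
        s = summary.setdefault(kw["cluster"],
                               {"size": 0, "core": 0, "periphery": 0, "total_weight": 0.0})
        s["size"] += 1
        s[kw["zone"]] += 1          # zone is "core" or "periphery"
        s["total_weight"] += kw["weight"]
    for s in summary.values():
        s["avg_weight"] = s["total_weight"] / s["size"]
    return summary

# Hypothetical keywords with normalized weights and zones
keywords = [
    {"term": "assessment", "cluster": "Assessment", "weight": 1.0, "zone": "core"},
    {"term": "rubric", "cluster": "Assessment", "weight": 0.4, "zone": "periphery"},
    {"term": "library", "cluster": "Library", "weight": 0.9, "zone": "core"},
]
for name, s in summarize_clusters(keywords).items():
    print(name, s["size"], s["core"], s["periphery"], round(s["avg_weight"], 2))
```

In this toy example the smallest cluster has the highest average weight. This illustrates how a cluster can rank low on size and total weight yet high on average weight.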

Cluster 1: Evaluation-education

This is the leading research front in terms of overall weight, number of keywords, density, and degree of overlap. Of its thirty-nine concepts, thirty-two are located in the core and only seven in the periphery (Table 6). Many of them relate to training, assessment, education, and technology and, to a lesser extent, to information and library. However, its average weight per keyword is not outstanding.

Core keywords are mostly generic, whereas the peripheral ones are specific: “Websites”, “information technology”, “information retrieval”, “college students”, “computer assisted instruction”, “information seeking”, and “library skills”. Most of these terms are related, either directly or indirectly, to information and communication technologies (ICT).

Cluster 2: Assessment

This is the second research trend by overall weight and size, with thirty-eight items (Table 7). It is located on the left side of the map, within the Students-Efficacy thematic area (Fig. 3), and also shares some space with the Evaluation-Education and Learning-Research trends.

In terms of spatial distribution, nuclear terms (twenty-five) outnumber peripheral ones (thirteen). The peripheral terms, in particular, reflect emerging lines of research concerning assessment models, methods and techniques: “portfolio”, “authentic assessment”, “rubric”, “self-assessment”, “campus”, “collaboration”, “validity”, “reliability”, “administrators”, “information literacy assessment”, “educators”, “ACRL”, and “iSkills” (Table 7).

Cluster 3: Students-efficacy

The research topic Students-Efficacy is the third by overall weight and size, and consists of twenty-nine keywords (Table 8).

Students-Efficacy keywords are located on the left side of the map, surrounding the Assessment cluster (Fig. 3). This research trend stands out for its average weight per word, which is higher than the ILAHE average (Table 8). The distribution of words is well balanced, with sixteen in the core and thirteen in the periphery. The latter are: “efficacy”, “Internet”, “tables”, “information fluency”, “interviews”, “observation”, “research projects”, “information resources”, “essay”, “intervention”, “nursing students”, “diagnostic” and “IL-HUMASS”.

Cluster 4: Learning-research

The fourth cluster is named Learning-Research and includes twenty-one items (Table 9). This is a less dense cluster, with only eight nuclear keywords.

It is also the group with the lowest average weight per word. The thirteen peripheral words are: “USA”, “usability”, “users”, “engineering”, “e-learning”, “university libraries”, “distance learning”, “tutorial”, “online information literacy”, “user training”, “UK”, “accreditation” and “library research”.

Cluster 5: Library

The research front about Library is the smallest in terms of overall weight and number of keywords. However, its average weight per keyword is the highest (Table 10). This suggests that the words in this research topic are, on average, highly relevant to the ILAHE field.

This is a rather peripheral cluster, with a significant percentage of keywords that do not physically overlap any of the remaining clusters (Fig. 3). Peripheral words are: “portfolio assessment”, “library services”, “information science”, “library and information science”, “librarianship”, “guidelines”, “user education”, and “library use”.

Discussion

The comparative study of the five research trends helps establish their relative importance (Table 11). The table shows a balance among the five research topics in terms of size (number of keywords) and weight (normalized and average). Some research topics stand out for their size and weight values, such as cluster 1 (Evaluation-education), which has the largest size and weight. Others stand out for their mean weight, such as cluster 5 (Library), whose average weight is the largest of all. In summary, the results in Table 11 indicate that all trends are important for the future scientific development of the ILAHE field.

The Students-Efficacy research front surrounds the Assessment front on the left side of the map (Fig. 3). The right side of the map shows a similar pattern, with the other three trends (Evaluation-Education, Learning-Research and Library). The conceptual development of ILAHE thus takes place around these five clearly interrelated clusters, which suggest two complementary macro-topics for future research: Students-Efficacy-Assessment and Evaluation-Education-Learning-Research-Library (Table 12).

Compared on the three scales (number of keywords, mean weight and normalized weight), the two macro-trends are very similar. This indicates that, in the period studied, the issues covered by the ILAHE field form a balanced terminological landscape, at least from the spatial perspective of this work.

At the other end, binary trends represented by the following pairs of words are evident (Table 13).

Each of these pairs of terms suggests a relationship that needs further investigation.

Other fields of study have also emerged, such as “authentic assessment”, which, despite its small number of terms (“self-assessment”, “authentic assessment” and “rubric”), offers great possibilities for research (Sharma 2007; Oakleaf 2009; Bussert et al. 2009).

In relation to ICT, seventeen terms (12 %) are scattered among the five clusters. Six of them are nuclear and eleven are peripheral. The nuclear terms are widely dispersed: “technology”, “computer literacy”, “computers”, “databases”, “ICT” and “reference service”. In addition, the ICT-related words in the periphery are intermingled with the others on the map, which indicates the progressive penetration of ICT into the ILAHE field. However, the data do not support treating these terms as a separate technological field.

On the contrary, “international discourse has informed the growing understanding that information literacy and technology fluency converges within ICT literacy. This construct reflects a departure from the standard information literacy approaches found within college campuses, including libraries, which define and evaluate the information and technology skills of students separately” (Somerville et al. 2007). In sum, “today there are important types of analytical thinking, communication, quantitative reasoning, and information skills that cannot be used, or learned, without technology” (Ehrmann 2004).

Conclusions

The subject field of ILAHE, located at a disciplinary crossroads, is represented by five clusters that outline the following research trends: Evaluation-Education, Assessment, Students-Efficacy, Learning-Research and Library.

However, these lines overlap significantly because of their terminological “proximity”. Although there are a number of established terms, peripheral terms representing the most current research seem to be underrepresented. According to the results, the ILAHE subject field is characterized, for the period under study, by the overlap of the terminological groups found. The excessive number of clusters and, above all, the considerable overlap among them show that this is a subdomain that is still developing. A more consolidated field would usually have fewer clusters, relative to the number of keywords they include, and fewer overlapping areas. Future studies should check whether the micro-clusters found in this work might generate new research topics.

References

Aharony, N. (2010). Information literacy in the professional literature: An exploratory analysis. Aslib Proceedings, 62(3), 261–282.

Arum, R., & Roksa, J. (2010). Learning to Reason and Communicate in College: Initial Report of Findings from the CLA Longitudinal Study (p. 34).

Bawden, D. (2012). On the gaining of understanding: Syntheses, themes and information analysis. Library and Information Research, 36(112), 147–162.

Brasley, S., Beile, P., & Katz, I. (2009). Assessing information competence of students using iSkills: A commercially-available, standardized instrument. In S. Hiller (Ed.), Library Assessment Conference. Seattle, Washington (US).

Brown, C. P., & Kingsley-Wilson, B. (2010). Assessing organically: Turning an assignment into an assessment. Reference Services Review, 38(4), 536–556.

Bussert, L., Diller, K. R., & Phelps, S. F. (2009). Voices of authentic assessment: Stakeholder experiences implementing sustainable information literacy assessments. In Association of Research Libraries (Ed.), Proceedings of the 2008 Library Assessment Conference: Building effective, sustainable, practical assessment (pp. 165–176). Washington DC.

Cameron, L., Wise, S. L., & Lottridge, S. M. (2007). The development and validation of the information literacy test. College and Research Libraries, 68(3), 229–236.

Catts, R. (2005). Information skills survey, technical manual. Canberra: CAUL.

Chen, K., & Lin, P. (2011). Information literacy in university library user education. Aslib Proceedings, 63(4), 399–418.

Dunaway, M. K., & Orblych, M. T. (2011). Formative assessment: Transforming information literacy instruction. Reference Services Review, 39(1), 24–41.

Ehrmann, S. C. (2004). Beyond computer literacy: Implications of technology for the content of a college education. Liberal Education, 90(4), 6–13.

Fourie, I., & van Niekerk, D. (2001). Follow-up on the use of portfolio assessment for a module in research information skills: An analysis of its value. Education for Information, 19(2), 107–126.

Gross, M., & Lathan, D. (2008). Self-views of information-seeking skills: Undergraduates’ understanding of what it means to be information literate. OCLC Research.

Head, A. J., & Eisenberg, M. B. (2010). Project Information Literacy Progress Report, truth be told: How college students evaluate and use information in the digital age (pp. 1–72). Washington DC.

Indiana University. (2013). National survey of student engagement. Retrieved from http://nsse.iub.edu/.

Jacobs, N. (2002). Co-term network analysis as a means of describing the information landscapes of knowledge communities across sectors. Journal of Documentation, 58(5), 548–562.

Mueller, J. (2013). Authentic assessment toolbox. Retrieved from http://jfmueller.faculty.noctrl.edu/toolbox/.

Muhr, T. (1991). ATLAS/ti: A Prototype for the support of text interpretation. Qualitative Sociology, 14(4), 349–371.

Nazim, M., & Ahmad, M. (2007). Research trends in information literacy: A bibliometric study. SRELS Journal of Information Management, 44(1), 53–62.

Nichols, J. T. (2009). The 3 directions: Situated information literacy. College and Research Libraries, 70(6), 515–530.

Oakleaf, M. (2009). Using rubrics to assess information literacy: An examination of methodology and interrater reliability. Journal of the American Society for Information Science and Technology, 60(5), 969–983.

Oakleaf, M., Millet, M. S., & Kraus, L. (2011). All together now: Getting faculty, administrators, and staff engaged in information literacy assessment. Portal: Libraries and the Academy, 11(3), 831–852.

Pinto, M. (2010). Design of the IL-HUMASS survey on information literacy in higher education: A self-assessment approach. Journal of Information Science, 36(1), 86–103.

Pinto, M., Cordón, J. A., & Gómez, R. (2010). Thirty years of information literacy (1977–2007): A terminological, conceptual and statistical analysis. Journal of Librarianship and Information Science, 42, 3–19.

Pinto, M., Escalona, M. I., & Pulgarín, A. (2013a). Information literacy in social sciences and health sciences: A bibliometric study (1974–2011). Scientometrics, 95(3), 1071–1094.

Pinto, M., Pulgarin, A., & Escalona, I. (2013). Viewing information literacy concepts: A comparison of two branches of knowledge. Scientometrics.

Pinto, M., & Sales, D. (2008). INFOLITRANS: A model for the development of information competence for translators. Journal of Documentation, 64(3), 413–437.

Rabine, J., & Cardwell, C. (2000). Start making sense: Practical approaches to outcomes assessment for libraries. Research Strategies, 17, 319–335.

Rader, H. B. (2002). Information literacy 1973–2002: A selected literature review. Library Trends, 51(2), 242–259.

SAILS. (2000). Project SAILS (standardized assessment of information literacy skills). Kent State University.

Salisbury, F., & Ellis, J. (2003). Online and face-to-face: Evaluating methods for teaching information literacy skills to undergraduate arts students. Library Review, 52(5), 209–217.

Sharma, S. (2007). From chaos to clarity: Using the research portfolio to teach and assess information literacy skills. Journal of Academic Librarianship, 33(1), 127–135.

Shenton, A. K., & Fitzgibbons, M. (2010). Making information literacy relevant. Library Review, 59(3), 165–174.

Shepard, L. A. (2000). The role of assessment in a learning culture. Educational Researcher, 29(7), 4–14.

Somerville, M. M., Lampert, L. D., Dabbour, K. S., Harlan, S., & Schader, B. (2007). Toward large scale assessment of information and communication technology literacy: Implementation considerations for the ETS ICT literacy instrument. Reference Services Review, 35(1), 8–20.

Somerville, M. M., Smith, G. W., & Macklin, A. S. (2008). The ETS iSkills (TM) assessment: A digital age tool. Electronic Library, 26(2), 158–171.

Stewart, S. L. (1999). Assessment for library instruction: The Cross/Angelo model. Research Strategies, 16(3), 165–174.

Van Eck, N. J., & Waltman, L. (2010). Software survey: VOSviewer, a computer program for bibliometric mapping. Scientometrics, 84(2), 523–538.

Walsh, A. (2009). Information literacy assessment: Where do we start? Journal of Librarianship and Information Science, 41(1), 19–28.

Walton, G., & Hepworth, M. (2011). A longitudinal study of changes in learners’ cognitive states during and following an information literacy teaching intervention. Journal of Documentation, 67(3), 449–479.

Webber, S., & Johnson, B. (2006). Working towards the information literate university. In G. Walton & A. Pope (Eds.), Information literacy: Recognising the need (pp. 47–58). Oxford: Chandos.

Acknowledgments

This research has been funded by the Spanish Research Program I+D+I (Research, Development and Innovation), through the project “Information Competencies Assessment of Spanish University Students in the Field of Social Sciences” (EDU 2011-29290). I am grateful to Professor Joaquin Granell for his help in translating the manuscript.


Cite this article

Pinto, M. Viewing and exploring the subject area of information literacy assessment in higher education (2000–2011). Scientometrics 102, 227–245 (2015). https://doi.org/10.1007/s11192-014-1440-2
