Abstract

This paper focuses the attention on the ch-index, a recent bibliometric indicator similar to the Hirsch (h) index, to evaluate the published research output of a scientist (Ajiferuke and Wolfram, Proceedings of the 12th international conference of the international society for scientometrics and informetrics. Rio de Janeiro, pp. 798–808, 2009). Ch-index is defined as the number such that, for a general group of scientific publications, ch publications are cited by at least ch different citers while the other publications are cited by no more than ch different citers. The basic difference from the classical h is that, according to ch, the diffusion of one author’s publication is evaluated on the basis of the number of different citing authors (or citers), rather than the number of received citations. The goal of this work is to discuss the pros and cons of ch and identify its connection with h. A large sample of scientists in the Quality Engineering/Management field are analyzed so as to investigate the novel indicator’s characteristics. Then, the analysis is preliminarily extended to other scientific disciplines. The most important result is that ch is almost insensitive to self-citations and/or citations made by recurrent citers, and it can be profitably used for complementing h.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Evaluating the scientific production of a scientist is a very relevant problem; doing it in a synthetic way is even more complicated. Many bibliometric indicators have been proposed over the years, such as the total number of papers produced, the total number of citations, or other indicators associated to the journals where publications are submitted, like the ISI impact factor (ISI-IF), the immediacy index and the cited half-life (Westney 1998; Glänzel and Moed 2002; Garfield 2006). In 2005, Hirsch suggested a new indicator (h) defined as “the number such that, for a general group of papers, h papers received at least h citations while the other papers received no more than h citations” (Hirsch 2005; Hirsch 2007). This indicator has many merits: it is simple to calculate using the most popular search engines (Web of Science, Scopus, Google Scholar), it has an immediate intuitive meaning and it is able to synthesize two important features of a scholar’s scientific production into a single number—i.e. the quantity of papers (assessment of productivity) and the corresponding citation rate (assessment of diffusion/impact).

The attention of the scientific community to h is attested by the appearance of a large number of publications about reflections, analyses and criticism (Moed 2005; Egghe 2006; Glänzel 2006; Kelly and Jennions 2006; Rousseau 2006; Saad 2006; Bornmann and Daniel 2007; Costas and Bordons 2007; Orbay et al. 2007; Schreiber 2007; Van Raan 2006; Wendl 2007; Harzing and van der Wal 2008; Mingers 2009; Franceschini and Maisano 2010a). Another tangible sign of the popularity of h is the appearance of many proposals for new variants and improvements (Lehmann et al. 2005; Banks 2006; Batista et al. 2006; Braun et al. 2006; Lehmann et al. 2006; BiHui et al. 2007; Burrell 2007a; Burrell 2007b; Castillo et al. 2007; Katsaros et al. 2007; Sidiropoulos et al. 2007; Schreiber 2008; Antonakis and Lalive 2008; Woeginger 2008; Franceschini and Maisano 2009; Egghe 2010; Franceschini and Maisano 2010b, c). Nevertheless, although these methods are aimed at enhancing the synthetic information of the h-index, some of them undermine its characteristics of easy calculation and immediate intuitive meaning.

This paper focuses the attention on another h-based indicator, i.e. the citer h-index or ch-index, recently introduced by Ajiferuke and Wolfram (2009, 2010). This indicator is defined as the number such that, for a general group of papers, ch papers are cited by at least ch different citers while the other papers are cited by no more than ch different citers. It can be immediately noticed that this definition is similar to the one of h, with the only exception that, for each publication, received citations are replaced by different citers (or citing authors). For example, a ch index of 15 immediately conveys that an individual has 15 papers, each with at least 15 different citers. It should be pointed out that this characteristic of immediate meaning is not typical of many other h-based indicators (Anderson et al. 2008). The new paradigm of ch is to evaluate the diffusion/impact of a publication on the basis of the authors “contaminated” or “infected” by it—as said by Goffman and Newill (1964)—rather than the corresponding citation number. In other words, this indicator takes account of the portion of members of the scientific community that are interested in a publication. The idea of considering the number of citers rather than the number of citations was suggested many years ago by Chang (1975), Dieks and Chang (1976) and Braun et al. (1985). Also, it is mentioned—for example—in some recent guideline proposals for evaluating academic research performance (Collegio dei presidenti di corso di studi in Matematica 2008). However, apart from the exploratory study of Ajiferuke and Wolfram (2010), no relevant attempt to analyze the differences between the two approaches has appeared yet.

From a practical point of view, ch is more difficult to calculate than h. In fact, it is necessary to know not only the number of citations received by each publication, but also the name of each citing (co-)author. According to the definition of ch, if the same citing author cites a publication more than one time, then he has to be counted only once. Ch-index can be calculated similarly to h, that is to say by ranking the publications in decreasing order with respect to the number of different citers and identifying the break-even point between publications’ rank and number of citers (see the example in Fig. 1).

Example of calculation of h and ch using the same (fictitious) input data. The inset on the right side of the figure shows the count of the different citing (co-)authors for the 5th publication. Publications are ordered decreasingly with respect to the number of received citations. For simplicity, this ranking is assumed to coincide with the one obtained ordering the publications decreasingly with respect to the number of different citers

Here are some possible advantages of the new indicator, in comparison with h.

-

Ch-index is not influenced by self-citations or recurrent citers: authors citing the same paper many times are counted only once.

-

Citations have not the same importance: some papers may have a relatively small number of citations, but—at the same time—may interest a large number of scientists and vice versa.

-

Ch-index is less influenced by some database errors than h: in some (not infrequent) cases, citations received by one paper are duplicated, that is to say that the same citing paper is erroneously counted two or more times (Harzing and van der Wal 2008). This specific situation may distort the resulting h value, but not ch.

The goal of this paper is to (1) analyse ch by expanding the Ajiferuke and Wolfram’s (2010) exploratory study, (2) identify the indicator’s most interesting characteristics and (3) observe the possible relationship with h. To focalize the analysis, the study is circumscribed to a sample of researchers in the Quality Engineering/Management field, which is the area of interest of the authors of this paper. Then, the study is preliminarily extended to other scientific disciplines, so as to investigate possible differences.

The remaining of the paper is organised into three sections. Section “Methodology” illustrates the analysis methodology, the selection of the scientists to examine and the information to collect. Section “Analysis results” presents and discusses the analysis results, both in Quality Engineering/Management and in the other examined scientific areas. Section “Further considerations on ch” contains further comments and reflections on ch. Finally, the conclusions are given, summarising the original contribution of the paper.

Methodology

The analysis of ch is carried out on a representative sample of scientists in the Quality Engineering/Management area, including scientists of different seniority. Sample selection is performed through the following procedure:

-

Ten different Quality journals are chosen from the most representative in this discipline (see Table 1) (ASQ—American Society for Quality 2009; Harzing 2009). It can be noticed that these journals have different publishers and only a small portion of them are indexed by Thomson Scientific (Thomson Reuters 2010). Table 1 reports the journals’ abbreviations/acronyms used hereafter.

-

For each journal, the authors of (at least) one paper, published in the reference year (for example 2008), are identified. As shown in the last column of Table 1, the number of scientists associated to each journal may change from journal to journal, depending on the typical number of co-authors per article, the number of articles per issue and the number of issues per year.

-

Next, for each of the identified scientists, the following indicators are calculated: h, ch, C (total number of citations), and A (total number of different citers).

The analysis of ch is preliminarily extended to six other scientific disciplines, in order to have an idea of the possible differences as regards to the Quality Engineering/Management area. For each discipline, a relatively limited sample of authors is randomly selected from some of the most representative scientific journals indexed by Thomson Scientific (see Table 2) (Thomson Reuters 2010).

Citation statistics are collected using the Google Scholar (GS) search engine. It was decided to use this database (1) because of the greater coverage and (2) because it can be automatically queried through dedicated software applications, such as Publish or Perish or other ad hoc applications (Meho and Yang 2007; Harzing 2009; Harzing and Van der Wal 2008). While determining h and C is quite easy and fast, on the other hand, the calculation of ch and A is much more complicated. A structured procedure for calculating the indicators (for a single scientist) is illustrated in the flow-chart in Fig. 2.

This procedure is completely automated by an ad hoc software application, created in Visual Basic and able to query GS automatically. It is worth mentioning that in the Web of Science, the number of citers to a given scientist can be obtained by few clicks, by using the Analyze Results feature (Thomson Reuters 2010). Unfortunately, search is limited to citing papers published on journals/proceedings that are listed by Thomson Scientific. This is the reason why we did not use this tool for our research.

One of the problems encountered in our analysis is represented by homonym scientists/authors. In general, authors with common names or identified by full surname and first name(s)’ initial(s)—rather than full first name(s)—are subject to this kind of problem. The practical effect is that contributions of different homonym authors are erroneously added up, with the result of distorting and “inflating” bibliometric indicators. Luckily, these “suspected” authors can be quite easily detected and then excluded from the analysis.

All the scientists’ bibliometric indicators are calculated taking into account the publications/citations accumulated up to the moment of the analysis (November 2009). Also, it is worthwhile mentioning that the proposed ad hoc software application makes it possible to define two temporal filters to calculate the above mentioned indicators, considering (1) the papers published by one author and/or (2) the citations accumulated during a precise time span.

Analysis results

Results of the analysis in the Quality Engineering/Management field

The most relevant results are related to a set of scientists in the field of Quality Engineering/Management; precisely, 835 authors of publications in the journals listed in Table 1. Figure 3 represents the h and ch histograms and reveals that the distributions are both right skewed and seem quite similar. The relationship between ch and h is, with good approximation, linear. For the purpose of example, Fig. 4 illustrates the results related to three of the 10 journals. In general, it can be said that the correlation between the two indicators is strong (high R 2 values). This result represents a large-scale confirmation of the initial results obtained by Ajiferuke and Wolfram (2010).

Histograms related to the h and ch values for 835 scientists in the Quality Engineering/Management field. The graph in Fig. 5 aggregates the results related to the ten journal dataset. It can be also noticed that ch values are generally larger than the corresponding h values, as confirmed by the value of the angular coefficient of the tendency line—i.e. 1.37. The same graph shows that variability related to ch is not perfectly homogeneous over the h domain. Precisely, it slightly increases with an increase in h. Therefore, the proportionality between ch and h can be considered less strong for high h values (increase of seniority)

Figure 5 can be also used to show the benefit of combining the information provided by h and ch when evaluating the output of a scientist. For example, let consider two (prolific) scientists, I and II, and the corresponding circled points. According to h, scientist I can be considered slightly better than II—i.e. h(I) = 30 to h(I) = 29. On the contrary, according to ch, the diffusion of scientist II is much higher—i.e. ch(II) = 46 to ch(I) = 35. This apparent paradox is due to the fact that a significant part of the citations received by scientist I are referable to recurrent citers.

Ch versus h for 835 scientists in the Quality Engineering/Management field. Each of the scientists published (at least) one paper in one of the scientific journals indicated in the legend (on the right). It can be noticed that points—independently on the reference journal—are approximately distributed around the same line. Also, it is interesting to consider the position of scientist I and II: although scientist I’s h value is larger than scientist II’s, his ch value is much smaller

Figure 6 reports two graphs related to the scientists’ C and A values. Scientists—in the horizontal axes—are sorted in decreasing order with respect to their C values.

While the general correlation between the total number of papers and C is well-known in the literature (Lehmann et al. 2006), an original result is represented by the appreciable correlation (R 2 = 0.87) between A and C, as illustrated in the graph in Fig. 7. The proportionality between A and C looks specially strong for small C values. The angular coefficient of the tendency line (i.e. 1.25) roughly corresponds to the typical number of different citers for each received citation.

A versus C values for 835 scientists in the Quality Engineering/Management field. It is interesting to consider the position of scientist I and II: although scientist I’s C-value is larger than scientist II’s (i.e. C(I) = 2993 to C(II) = 2786 citations), his A-value is much smaller (i.e. A(II) = 5405 to A(I) = 1074 different citers)

The two circled points are related to the same two scientists examined in Fig. 5. The considerations about h and ch similarities and differences can be extended also to C and A.

Going back to Fig. 5, the linear proportionality between ch and h can be reasonably justified by the following considerations:

-

(a)

considering a scholar’s scientific production, Hirsch empirically showed that h 2 is approximately proportional to C (h 2 ∝ C), with a coefficient of proportionality that may change a little depending on the shape of the citation distribution function (Hirsch 2005; Anderson et al. 2008).

-

(b)

h synthesises the number of publications and the corresponding citations by means of the same criterion with which ch synthesises the number of publications and the corresponding citers. The only difference is that, while C is given by the sum of the citations related to the publications, A is given by the union of the different citers related to the publications. Thus, since approximately h2 ∝ C, it seems reasonable to assume that also ch2 ∝ A.

-

(c)

Since A ∝ C—as shown in Fig. 7—it can be said that ch2 ∝ A ∝ C. An empirical confirmation, especially for low C values, is given by the graph in Fig. 8. Being both h 2 and ch2 directly proportional to C, we conclude that ch ∝ h.

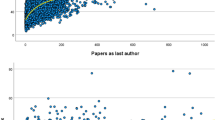

Preliminary results in other scientific fields

Figure 9 represents the relationship between ch and h in six other scientific fields. Consistently with what seen before for Quality Engineering/Management, graph patterns are approximately linear and the angular coefficients related to the different disciplines are quite similar (around 1.2–1.5). Mathematics is the discipline with the lowest (i.e. 1.21); this result can be probably explained by the fact that this field is the one with the lowest tendency to multi-authorship (Amin and Mabe 2000; Suárez-Balseiro et al. 2009).

ch versus h values for scientists related to six different scientific disciplines. The number of authors considered is reported in Table 2

It needs be reasserted that these results are based on samples consisting of a limited number of authors (about 250 for each discipline, as shown in Table 2). In the future, this analysis will be expanded to a more organic set of scientific disciplines, considering larger author samples.

Further considerations on ch

Analysing preliminary results, some further considerations can be proposed:

-

First of all, ch represents an original indicator for excluding the contribution of self-citations. If one author cites his publications over and over again, or if most of the citations are received by recurrent citers, the ch value is not inflated. In addition, it must be remembered that, the problem of recurrent citers has not been discussed as much effectively (Glänzel and Thijs 2004; Debackere et al. 2006; Frandsen 2007).

-

Differently from many h-based indicators, ch does not lose the h’s characteristic of immediate meaning (Egghe 2006; BiHui et al. 2007; Anderson et al. 2008; Schreiber 2008). In fact, although being useful and effective, most of the h-based indicators suggested in the literature result in numeric values without immediate meaning (Franceschini et al. 2007).

-

Similarly to h, ch can be used not only for evaluating the diffusion of the scientific production of individual scientists, but also for academic research groups, university departments or, more in general, organizations made up of scientists (Da Luz et al. 2008). The way of calculation is the same as that one shown in Fig. 1, with the only exception that the articles are related to a whole research group or scientific organization and not to an individual researcher.

-

ch, compared with most of the bibliometric indicators that are based on citations, is less prone to manipulations. Since assessments of the scientific production of a scientist or research group are more and more based on the number of received citations, with important effects on decisions concerning incentives, promotions, faculty positions, research grants etc., they might lead some research groups to create “self-interest partnerships”, aimed at systematically citing reciprocal works. This opportunistic behaviour is facilitated by the fact that, from one author’s viewpoint, expanding the number of paper references is simple and does not cost too much (Nature Cell Biology Editorial 2009). Being not sensitive to recurring citers, ch would suffer from this practice to a lesser degree. On the contrary, incrementing the list of co-authors reduces the weight associated to a single co-author’s contribution.

-

Citer-based indicators, such as ch and A, give credit to all the co-authors of a citing paper (for instance, not just the first one). In disciplines where hyperauthorship is an issue—e.g. biomedicine, where it can be found citing papers with literally hundreds of authors—this may seriously inflate these indicators (Cronin 2001). Identifying a fully satisfying solution to this problem is a question that remains open and merits investigation. Fortunately, in the chosen sample area we have not found any case of hyperauthorship and therefore our empirical analysis is uninfluenced by this effect.

-

ch and h, may complement each other, in particular when performing comparisons of scientists or research groups, on the basis of the diffusion of their publications. Precisely, the two indicators can be used together to define the axes of a “diffusion map”, which allows the bibliometric positioning and comparison (see Fig. 10). This map is particularly useful for comparing scientists with high h values (senior scientists).

Example of a simplified map (ch–h map) for evaluating and comparing scientists or research groups on the basis of the scientific production diffusion. The map associates ch values (vertical axis—indicator of diffusion in terms of different citers) with h values (horizontal axis—indicator of diffusion in terms of received citations). The dotted line represents the average (linear) relationship between ch and h

h-index provides a measure of diffusion in terms of citations, while ch in terms of citers. Figure 10, for example, represents three characteristic situations:

-

(a)

Scientist or research group with publications in which the number of received citations (measured by h) and different citers (measured by ch) are generally balanced (see Figs. 5, 9).

-

(b)

Scientist or research group with publications having a relatively large number of received citations with respect to the number of different citers. This situation could be due to a relatively high rate of self-citations and recurrent citers’ citations.

-

(c)

Scientist or research group with publications having a relatively large number of different citers with respect to the number of received citations. This could be the case of (1) publications with a low rate of self-citations and recurrent citers’ citations, (2) publications cited by citing papers with a high number of different co-authors.

-

This work contributes to developing a new paradigm, where the number of citers serves as the basis of research impact assessment, in addition to the number of citations. Table 3 summarizes the pros and the cons of these two different philosophies.

Conclusions

This paper enhances the initial study of Ajiferuke and Wolfram (2010) on the ch-index, a recent variant of h, which represents a different way for evaluating the impact/diffusion of one researcher’s published output in the scientific community. ch is based on the number of different citers related to a publication, rather than the simple number of received citations. The new paradigm assesses the impact of one scientist from the viewpoint of citers rather than citations. To emphasize this aspect, another citer-based indicator, A (total number of different citers), is used and compared with C (total number of citations). Based on a sample of 2345 scientists, the analysis showed that ch is generally directly proportional to h and the same goes for A and C. This behaviour was observed in Quality Engineering/Management and preliminarily confirmed in other six scientific disciplines, with slight differences in the value of the coefficient of proportionality, due to different multi-authorship aptitudes.

In spite of being generally correlated with h, ch constitutes an original approach to tackle the problem of self-citations or citations made by recurrent citers. It must be remembered that, although the current literature suggests many indicators to overcome the first problem, on the other hand, the problem of recurrent citers has not been discussed as much effectively. In this regard, ch is less prone to manipulations than many other bibliometric indicators. Furthermore, it was shown that ch and h represent two complementary ways to evaluate/compare the diffusion of the scientific production of different scientists or research groups. A possible practical tool for using them in a combined way is represented by the here introduced “diffusion map” (or ch–h map).

Similarly to h, and differently from many other h-based indicators, ch has an immediate meaning. Unfortunately, ch is significantly more complicate to calculate than h. An ad hoc software application was designed and implemented to automate the ch calculation procedure. This software is able to perform automatic queries to Google Scholar database, which is one of the most popular search engines for bibliometric analyses.

Further development of this research will be the extension of the analysis to a wider set of scientists and/or other scientific disciplines, so as to empirically confirm preliminary findings on the basis of more exhaustive data.

References

Ajiferuke, I., & Wolfram, D. (2009). Citer analysis as a measure of research impact: Library and information science as a case study. In B. Larsen & J. Leta (Eds.), Proceedings of the 12th international conference of the international society for scientometrics and informetrics (ISSI) (pp. 798–808). Rio de Janeiro.

Ajiferuke, I., & Wolfram, D. (2010). Citer analysis as a measure of research impact: Library and information science as a case study. Scientometrics (to appear). doi:10.1007/s11192-009-0127-6.

Amin, M., & Mabe, M. (2000). Impact factors: Use and abuse. In Perspectives in publishing (n. 1, October 2000) Oxford: Elsevier Science. http://www.elsevier.com.

Anderson, T. R., Hankin, R. K. S., & Killworth, P. D. (2008). Beyond the Durfee square: Enhancing the h-index to score total publication output. Scientometrics, 76(3), 577–588.

Antonakis, J., & Lalive, R. (2008). Quantifying scholarly impact: IQp versus the Hirsch h. Journal of the American Society for Information Science and Technology, 59(6), 956–969.

ASQ—American Society for Quality. (2009, September 10). www.asq.org.

Banks, M. G. (2006). An extension of the Hirsch index: Indexing scientific topics and compounds. Scientometrics, 69(1), 161–168.

Batista, P. D., Campiteli, M. G., Kinouchi, O., & Martinez, A. S. (2006). Is it possible to compare researchers with different scientific interests? Scientometrics, 68(1), 179–189.

BiHui, J., LiMing, L., Rousseau, R., & Egghe, L. (2007). The R- and AR-indices: Complementing the h-index. Chinese Science Bulletin, 52(6), 855–963.

Bornmann, L., & Daniel, H. D. (2007). What do we know about the h index? Journal of the American Society for Information Science and Technology, 58(9), 1381–1385.

Braun, T., Glänzel, W., & Schubert, A. (1985). Scientometric Indicators: A 32-country comparative evaluation of publishing performance and citation impact. Philadelphia: World Scientific.

Braun, T., Glänzel, W., Schubert, A. (2006). A Hirsch-type index for journals. The Scientist, 69(1), 169–173.

Burrell, Q. L. (2007a). On the h-index, the size of the Hirsch core and Jin’s A-index. Journal of Informetrics, 1(2), 170–177.

Burrell, Q. L. (2007b). Hirsch index or Hirsch rate? Some thoughts arising from Liang’s data. Scientometrics, 73(1), 19–28.

Castillo, C., Donato, D., & Gionis, A. (2007). Estimating number of citations using author reputation. In String processing and information retrieval (pp. 107–117). Berlin/Heidelberg: Springer.

Chang, K.H. (1975). Evaluation and survey of a subfield of physics: Magnetic resonance and relaxation studies in The Netherlands, FOM-Report n. 37175, Utrecht.

Collegio dei presidenti di corso di studi in Matematica (2008) Considerazioni e proposte relative agli indicatori di qualità di attività scientifica e di ricerca, e ai parametri per le valutazioni comparative. http://users.unimi.it/barbieri/indicatoriMAT_29nov08.pdf.

Costas, R., & Bordons, M. (2007). The h-index: Advantages, limitations and its relation with other bibliometric indicators at the micro level. Journal of Informetrics, 1(3), 193–203.

Cronin, B. (2001). Hyperauthorship: A postmodern perversion or evidence of a structural shift in scholarly communication practices? Journal of the American Society for Information Science and Technology, 52(7), 558–569.

Da Luz, M. P., Marques-Portella, C., Mendlowicz, M., Gleiser, S., Coutinho, E. S., & Figueira, I. (2008). Institutional h-index: The performance of a new metric in the evaluation of Brazilian psychiatric post-graduation programs. Scientometrics, 77(2), 361–368. doi:10.1007/s11192-007-1964-9.

Debackere, K., Thijs, B., & Schubert, A. (2006). A concise review on the role of author self-citations in information science, bibliometrics and science policy. Scientometrics, 67(2), 263–277.

Dieks, D., & Chang, K. H. (1976). Differences in impact of scientific publications: Some indices derived from a citation analysis. Social Studies of Science, 6(2), 247–267. doi:10.1177/030631277600600204.

Egghe, L. (2006). Theory and practise of the g-index. Scientometrics, 69(1), 131–152.

Egghe, L. (2010). The Hirsch-index and related impact measures. In B. Cronin (Ed.), Annual Review of Information Science and Technology (ARIST), Vol. 44.

Franceschini, F., Galetto, M., & Maisano, D. (2007). Management by measurement: Designing key indicators and performance measurement systems. Berlin: Springer Verlag.

Franceschini, F., & Maisano, D. (2009). The Hirsch index in manufacturing and quality engineering. Quality and Reliability Engineering International, 25, 987–995. doi:10.1002/qre.1016.

Franceschini, F., & Maisano, D. (2010a). Analysis of the Hirsch index’s operational properties. European Journal of Operational Research, 203(2), 494–504. doi:10.1016/j.ejor.2009.08.001.

Franceschini, F., & Maisano, D. (2010b). The Hirsch spectrum: A novel tool for analysing scientific Journals. Journal of Informetrics, 4(1), 64–73. doi:10.1016/j.joi.2009.08.003.

Franceschini, F., & Maisano, D. (2010c). A survey of quality engineering-management journals by bibliometric indicators. Quality and Reliability Engineering International (to appear). doi:10.1002/qre.1083.

Frandsen, T. F. (2007). Journal self-citations—analysing the JIF mechanism. Journal of Informetrics, 1(1), 47–58.

Garfield, E. (2006). The history and meaning of the journal impact factor. Journal of the American Medical Association, 295(1), 90–93.

Glänzel, W. (2006). On the opportunities and limitations of the h-index. Science Focus, 1(1), 10–11.

Glänzel, W., & Moed, H. F. (2002). Journal impact measures in bibliometric research. Scientometrics, 53(2), 171–193.

Glänzel, W., & Thijs, B. (2004). Does co-authorship inflate the share of self-citations? Scientometrics, 61(3), 395–404.

Goffman, W., & Newill, V. A. (1964). Generalization of epidemic theory: an application to the transmission of ideas. Nature, 204, 225–228.

Harzing A. W. (2009). Reflections on the h-index, www.harzing.com.

Harzing, A. W., & van der Wal, R. (2008). Google Scholar as a new source for citation analysis. Ethics in Science and Environmental Politics, 8(11), 61–73.

Hirsch, J. E. (2005). An index to quantify an individual’s scientific research output. Proceedings of the National Academy of Sciences of the United States of America, 102, 16569–16572.

Hirsch, J. E. (2007). Does the h index have predictive power? Proceedings of the National Academy of Sciences of the United States of America, 104(49), 19193–19198.

Katsaros D., Sidiropoulos A., & Manolopous Y. (2007, April 27). Age decaying H-index for social network of citations. In Proceedings of workshop on social aspects of the web poznan. Poland.

Kelly, C. D., & Jennions, M. D. (2006). The h index and career assessment by numbers. Trends in Ecology and Evolution, 21(4), 167–170.

Lehmann, S., Jackson, A.D., & Lautrup, B.E. (2005). Measures and mismeasures of scientific quality. http://arxiv.org/abs/physics/0512238.

Lehmann, S., Jackson, A. D., & Lautrup, B. E. (2006). Measures for measures. Nature, 444, 1003–1004.

Meho, L. I., Yang, K. (2007). Impact of data sources on citation counts and rankings of LIS faculty: Web of Science vs. Scopus and Google scholar. Journal of the American Society for Information Science and Technology, 58(13), 2105–2125.

Mingers, J. (2009). Measuring the research contribution of management academics using the Hirsch-index. Journal of the Operational Research Society, 60(9), 1143–1153. doi:10.1057/jors.2008.94.

Moed, H. F. (2005). Citation analysis in research evaluation. Dordrecht: Springer, ISBN 1402037139.

Nature Cell Biology Editorial. (2009). Credit where credit is due. Nature Cell Biology 11(1), 1. doi:10.1038/ncb0109-1.

Orbay, M., Karamustafaoglu, O., & Oner, F. (2007). What does Hirsch index evolution explain us? A case study: Turkish Journal of Chemistry. Biblios, 27(8), 1–5.

Rousseau, R. (2006). New developments related to the Hirsch index, E-prints in Library and Information Science (ELIS), eprints.rclis.org/6376/.

Saad, G. (2006). Exploring the h-index at the author and journal levels using bibliometric data of productive consumer scholars and business-related journals respectively. Scientometrics, 69(1), 117–120.

Schreiber, M. (2007). Self-citation corrections for the Hirsch index. EuroPhysics Letters 78. doi:10.1209/0295-5075/78/30002.

Schreiber, M. (2008). A modification of the h-index: the h m -index accounts for multi-authored manuscripts. http://arxiv.org/abs/0805.2000v1.

Sidiropoulos, A., Katsaros, D., & Manolopoulos, Y. (2007). Generalized Hirsch h-index for disclosing latent facts in citation networks. Scientometrics, 72(2), 253–280.

Suárez-Balseiro, C., García-Zorita, C., & Sanz-Casado, E. (2009). Multi-authorship and its impact on the visibility of research from Puerto Rico. Information Processing and Management, 45(4), 469–476.

Thomson Reuters. (2010). 2008 Journal citation reports—science edition. http://www.isiknowledge.com.

Van Raan, A. F. J. (2006). Comparison of the Hirsch-index with standard bibliometric indicators and with peer judgment for 147 chemistry research groups. Scientometrics, 67(3), 491–502.

Wendl, M. (2007). H-index: however ranked, citations need context. Nature, 449, 403.

Westney, L. C. H. (1998). Historical rankings of science and technology: A citationist perspective. The Journal of the Association for History and Computing, 1(1). http://journals2.iranscience.net:800/mcel.pacificu.edu/mcel.pacificu.edu/history/jahcI1/Westney/Westney.htm.

Woeginger, G. H. (2008). An axiomatic characterization for the Hirsch-index. Mathematical Social Sciences, 56, 224–232.

Acknowledgments

The authors would like to thank the anonymous reviewers for their valuable suggestions to improve the manuscript.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Franceschini, F., Maisano, D., Perotti, A. et al. Analysis of the ch-index: an indicator to evaluate the diffusion of scientific research output by citers. Scientometrics 85, 203–217 (2010). https://doi.org/10.1007/s11192-010-0165-0

Received:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11192-010-0165-0